This is a continuation of the article Flexible Data Generation With Datafaker Gen about DataFaker Gen. In this part, we will explore the new BigQuery Sink feature for Google Cloud Platform, demonstrating how to use different field types based on the DataFaker schema.

BigQuery is a fully managed and AI-ready data analytics platform available on Google Cloud Platform that gives anyone the capability to analyze terabytes of data.

Let’s consider a scenario where we aim to create a dummy dataset, aligned with our actual schema, to facilitate executing and testing queries in BigQuery. By using Datafaker Gen, this data can become meaningful and predictable, based on predefined providers, thus allowing for more realistic and reliable testing environments.

This solution leverages the BigQuery API Client libraries provided by Google. For more details, refer to the official documentation here: BigQuery API Client Libraries.

Quick Start With BigQuery Sink

This is a simple example of BigQuery Sink, just to show that it takes only a few simple actions to see the result. This provides clarity on the approach. The rest of this article covers the detailed configuration and the flexibility of this feature.

The following simple steps need to be done:

1. Download the project here, build it, and navigate to the folder with the BigQuery example:

    ./mvnw clean verify && cd ./datafaker-gen-examples/datafaker-gen-bigquery

2. Configure the schema in config.yaml:

    default_locale: en-US
    fields:
      - name: id
        generators: [ Number#randomNumber ]
      - name: lastname
        generators: [ Name#lastName ]
        nullRate: 0.1
      - name: firstname
        locale: ja-JP
        generators: [ Name#firstName ]

3. Configure BigQuery Sink in output.yaml with the path to the Service Account JSON (which should be obtained from GCP):

    sinks:
      bigquery:
        project_id: [gcp project name]
        dataset: datafaker
        table: users
        service_account: [path to service account json]

4. Run it:

    # Format json, number of lines 10000 and new BigQuery Sink
    bin/datafaker_gen -f json -n 10000 -sink bigquery

In-Depth Guide To Using BigQuery Sink

To prepare a generator for BigQuery, follow these two steps:

- Define the DataFaker Schema: The schema defined in config.yaml will be reused for the BigQuery Sink.

- Configure the BigQuery Sink: In output.yaml, specify the connection credentials, connection properties, and generation parameters.

Note: Currently, BigQuery Sink only supports the JSON format. If another format is used, the BigQuery Sink will throw an exception. At the same time, it might be a good opportunity to introduce other formats, such as protobuf.

1. Define the DataFaker Schema

One of the most important preparation tasks is defining the schema in the config.yaml file. The schema specifies the field definitions of the record based on the Datafaker provider. It also allows for the definition of embedded fields like array and struct.

Consider the structure of a schema definition in the config.yaml file.

The first step is to define the base locale that should be used for all fields. This should be done at the top of the file in the property default_locale. The locale for a specific field can be customized directly.

The schemas in this article set the default locale to ‘en-US’. All required fields should then be defined in the fields section.

Let’s fill in the details of the field definitions. Datafaker Gen supports three main field types: default, array, and struct.

Default Type

This is a simple type that allows you to define the field name and how to generate its value using the generators property. Additionally, there are some optional parameters that allow for customization of the locale and the null rate.

    default_locale: en-US
    fields:
      - name: id
        generators: [ Number#randomNumber ]
      - name: lastname
        generators: [ Name#lastName ]
        nullRate: 0.1
      - name: firstname
        locale: ja-JP
        generators: [ Name#firstName ]

- name: Defines the field name.

- generators: Defines the Faker provider methods that generate the value.

  For BigQuery, based on the format provided by the Faker provider generators, it will generate JSON, which will be reused for BigQuery field types. In our example, Number#randomNumber returns a long value from the DataFaker provider, which is then converted to an integer for the BigQuery schema. Similarly, the fields generated by Name#lastName and Name#firstName are Strings and convert to STRING in BigQuery.

- nullRate: Determines how often this field is missing or has a null value.

- locale: Defines a specific locale for the current field.
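To make the nullRate property more tangible, here is a minimal Python sketch of one way such a rate could be interpreted: each value is replaced with null with the given probability. This is an illustration only and not the Datafaker Gen implementation; the function generate_with_null_rate and the constant "Smith" generator are hypothetical stand-ins.

```python
import random


def generate_with_null_rate(generator, null_rate, rng):
    """Return None with probability null_rate, otherwise a generated value.

    Hypothetical helper: it only illustrates the idea behind nullRate.
    """
    if not 0.0 <= null_rate <= 1.0:
        raise ValueError("null_rate must be between 0 and 1")
    if rng.random() < null_rate:
        return None
    return generator()


rng = random.Random(42)  # fixed seed so the run is repeatable

# The lambda is a stand-in for a provider method such as Name#lastName.
values = [generate_with_null_rate(lambda: "Smith", 0.1, rng) for _ in range(10_000)]
null_share = values.count(None) / len(values)
print(f"share of null values: {null_share:.3f}")
```

With nullRate: 0.1, roughly one value in ten is expected to be null, so queries against the generated table should be written with NULL handling in mind.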

Array Type

This type allows the generation of a collection of values. It reuses the fields from the default type and extends them with two additional properties: minLength and maxLength.

In BigQuery, this type corresponds to a field with the REPEATED mode.

The following fields need to be configured in order to enable the array type:

- type: Specify the array type for this field.

- minLength: Specify the minimum length of the array.

- maxLength: Specify the maximum length of the array.

All these properties are mandatory for the array type.

    default_locale: en-US
    fields:
      - name: id
        generators: [ Number#randomNumber ]
      - name: lastname
        generators: [ Name#lastName ]
        nullRate: 0.1
      - name: firstname
        generators: [ Name#firstName ]
        locale: ja-JP
      - name: phone numbers
        type: array
        minLength: 2
        maxLength: 5
        generators: [ PhoneNumber#phoneNumber, PhoneNumber#cellPhone ]

It is also worth noting that the generators property can contain multiple sources of values, as it does for phone numbers.
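The rules for the array type can be sketched in a few lines of Python. The sketch checks that the three mandatory properties are present and then produces a list whose length lies between minLength and maxLength. Two points are assumptions made for illustration and are not confirmed by Datafaker Gen: that both bounds are inclusive, and that each element is taken from a randomly chosen generator when several are listed. The helper names and the placeholder generators are hypothetical.

```python
import random

REQUIRED_ARRAY_KEYS = ("type", "minLength", "maxLength")


def validate_array_field(field):
    """Raise ValueError if a mandatory array property is missing or inconsistent."""
    missing = [key for key in REQUIRED_ARRAY_KEYS if key not in field]
    if missing:
        raise ValueError(f"array field {field.get('name')!r} is missing {missing}")
    if field["type"] != "array":
        raise ValueError("type must be 'array'")
    if not 0 <= field["minLength"] <= field["maxLength"]:
        raise ValueError("expected 0 <= minLength <= maxLength")


def generate_array(field, generators, rng):
    """Build one array value (assumption: inclusive bounds, random generator per element)."""
    validate_array_field(field)
    length = rng.randint(field["minLength"], field["maxLength"])
    return [rng.choice(generators)() for _ in range(length)]


phone_field = {"name": "phone numbers", "type": "array", "minLength": 2, "maxLength": 5}
# Placeholders standing in for PhoneNumber#phoneNumber and PhoneNumber#cellPhone.
generators = [lambda: "phone", lambda: "cell"]
rng = random.Random(7)
numbers = generate_array(phone_field, generators, rng)
print(len(numbers), numbers)
```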

Struct Type

This type allows you to create a substructure that can contain many nested levels based on all existing types.

In BigQuery, this type corresponds to the RECORD type.

The struct type does not have a generators property but has a new property called fields, where a substructure based on the default, array, or struct type can be defined. There are two main fields that need to be added for the struct type:

- type: Specify the struct type for this field.

- fields: Defines a list of fields in a sub-structure.
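The correspondence between the three field types and BigQuery can be summarized with a small Python sketch that turns a schema, written here as plain dictionaries, into a description of BigQuery columns. It is a simplified illustration and not the code used by the sink. The GENERATOR_TYPES table is an assumption: only the INTEGER and STRING results for Number#randomNumber and the Name methods come from this article, while the STRING entries for the phone and address generators and the NULLABLE mode for non-array fields are guesses made for the example.

```python
# Assumed generator-to-type table (see the hedges in the paragraph above).
GENERATOR_TYPES = {
    "Number#randomNumber": "INTEGER",
    "Name#lastName": "STRING",
    "Name#firstName": "STRING",
    "PhoneNumber#phoneNumber": "STRING",
    "PhoneNumber#cellPhone": "STRING",
    "Address#country": "STRING",
    "Address#city": "STRING",
    "Address#streetAddress": "STRING",
}


def to_bigquery_field(field):
    """Describe one schema field as a BigQuery column (hypothetical helper)."""
    kind = field.get("type", "default")
    if kind == "struct":
        # struct -> RECORD with recursively converted sub-fields
        return {
            "name": field["name"],
            "type": "RECORD",
            "mode": "NULLABLE",
            "fields": [to_bigquery_field(sub) for sub in field["fields"]],
        }
    bq_type = GENERATOR_TYPES[field["generators"][0]]
    # array -> REPEATED mode; default fields are assumed to be NULLABLE
    mode = "REPEATED" if kind == "array" else "NULLABLE"
    return {"name": field["name"], "type": bq_type, "mode": mode}


fields = [
    {"name": "id", "generators": ["Number#randomNumber"]},
    {"name": "phone numbers", "type": "array", "minLength": 2, "maxLength": 5,
     "generators": ["PhoneNumber#phoneNumber", "PhoneNumber#cellPhone"]},
    {"name": "address", "type": "struct", "fields": [
        {"name": "country", "generators": ["Address#country"]},
        {"name": "city", "generators": ["Address#city"]},
        {"name": "street address", "generators": ["Address#streetAddress"]},
    ]},
]

schema = [to_bigquery_field(f) for f in fields]
for column in schema:
    print(column)
```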

    default_locale: en-US
    fields:
      - name: id
        generators: [ Number#randomNumber ]
      - name: lastname
        generators: [ Name#lastName ]
        nullRate: 0.1
      - name: firstname
        generators: [ Name#firstName ]
        locale: ja-JP
      - name: phone numbers
        type: array
        minLength: 2
        maxLength: 5
        generators: [ PhoneNumber#phoneNumber, PhoneNumber#cellPhone ]
      - name: address
        type: struct
        fields:
          - name: country
            generators: [ Address#country ]
          - name: city
            generators: [ Address#city ]
          - name: street address
            generators: [ Address#streetAddress ]

2. Configure BigQuery Sink

As previously mentioned, the configuration for sinks can be added in the output.yaml file. The BigQuery Sink configuration allows you to set up credentials, connection properties, and sink properties. Below is an example configuration for a BigQuery Sink:

    sinks:
      bigquery:
        batchsize: 100
        project_id: [gcp project name]
        dataset: datafaker
        table: users
        service_account: [path to service account json]
        create_table_if_not_exists: true
        max_outstanding_elements_count: 100
        max_outstanding_request_bytes: 10000
        keep_alive_time_in_seconds: 60
        keep_alive_timeout_in_seconds: 60

Let’s review the entire list of levers you can take advantage of:

- batchsize: Specifies the number of records to process in each batch. A smaller batch size can reduce memory usage but may increase the number of API calls.

- project_id: The Google Cloud Platform project ID where your BigQuery dataset resides.

- dataset: The name of the BigQuery dataset where the table is located.

- table: The name of the BigQuery table where the data will be inserted.

- Google Credentials should be configured with sufficient permissions to access and modify BigQuery datasets and tables. There are several ways to pass the service account content:

  - service_account: The path to the JSON file containing the service account credentials. This configuration should be defined in the output.yaml file.

  - SERVICE_ACCOUNT_SECRET: This environment variable should contain the JSON content of the service account.

  - The final option involves using the gcloud configuration from your environment (more details can be found here). This option is implicit and could potentially lead to unpredictable behavior.

- create_table_if_not_exists: If set to true, the table will be created if it does not already exist. A BigQuery Schema will be created based on the DataFaker Schema.

- max_outstanding_elements_count: The maximum number of elements (records) allowed in the buffer before they are sent to BigQuery.

- max_outstanding_request_bytes: The maximum size of the request in bytes allowed in the buffer before it is sent to BigQuery.

- keep_alive_time_in_seconds: The amount of time (in seconds) to keep the connection alive for additional requests.

- keep_alive_timeout_in_seconds: The amount of time (in seconds) to wait for additional requests before closing the connection due to inactivity.
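The trade-off behind batchsize can be illustrated with a short Python sketch that splits a stream of records into fixed-size batches and counts them. It is not the sink's code: the batched helper is hypothetical, and treating each batch as exactly one API call is an assumption made to keep the arithmetic simple.

```python
from itertools import islice


def batched(records, batch_size):
    """Yield lists of at most batch_size records from any iterable."""
    if batch_size <= 0:
        raise ValueError("batch_size must be positive")
    iterator = iter(records)
    while True:
        batch = list(islice(iterator, batch_size))
        if not batch:
            return
        yield batch


# 10000 generated records with batchsize 100, as in the configuration above.
records = ({"id": i} for i in range(10_000))
batch_sizes = [len(batch) for batch in batched(records, 100)]
print(f"batches: {len(batch_sizes)}, largest batch: {max(batch_sizes)}")
```

Only one batch is held in memory at a time, which is why a smaller batchsize lowers memory usage while raising the number of batches to send.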

How to Run

The BigQuery Sink example has been merged into the main upstream Datafaker Gen project, where it can be adapted for your use.

Running this generator is easy and lightweight. However, it requires several preparation steps:

1. Download the GitHub repository. The datafaker-gen-examples folder includes the example with BigQuery Sink that we will use.

2. Build the entire project with all modules. The current solution uses the 2.2.3-SNAPSHOT version of the DataFaker library.

3. Navigate to the ‘datafaker-gen-bigquery’ folder. This should serve as the working directory for your run.

    cd ./datafaker-gen-examples/datafaker-gen-bigquery

4. Define the schema for records in the config.yaml file and place this file in the appropriate location where the generator should be run. Additionally, define the sinks configuration in the output.yaml file, as demonstrated previously.

Datafaker Gen can be executed via two options:

1. Use the bash script from the bin folder in the parent project:

    # Format json, number of lines 10000 and new BigQuery Sink
    bin/datafaker_gen -f json -n 10000 -sink bigquery

2. Execute the JAR directly, like this:

java -cp [path_to_jar] internet.datafaker.datafaker_gen.DatafakerGen -f json -n 10000 -sink bigqueryQuestion Outcome and Consequence

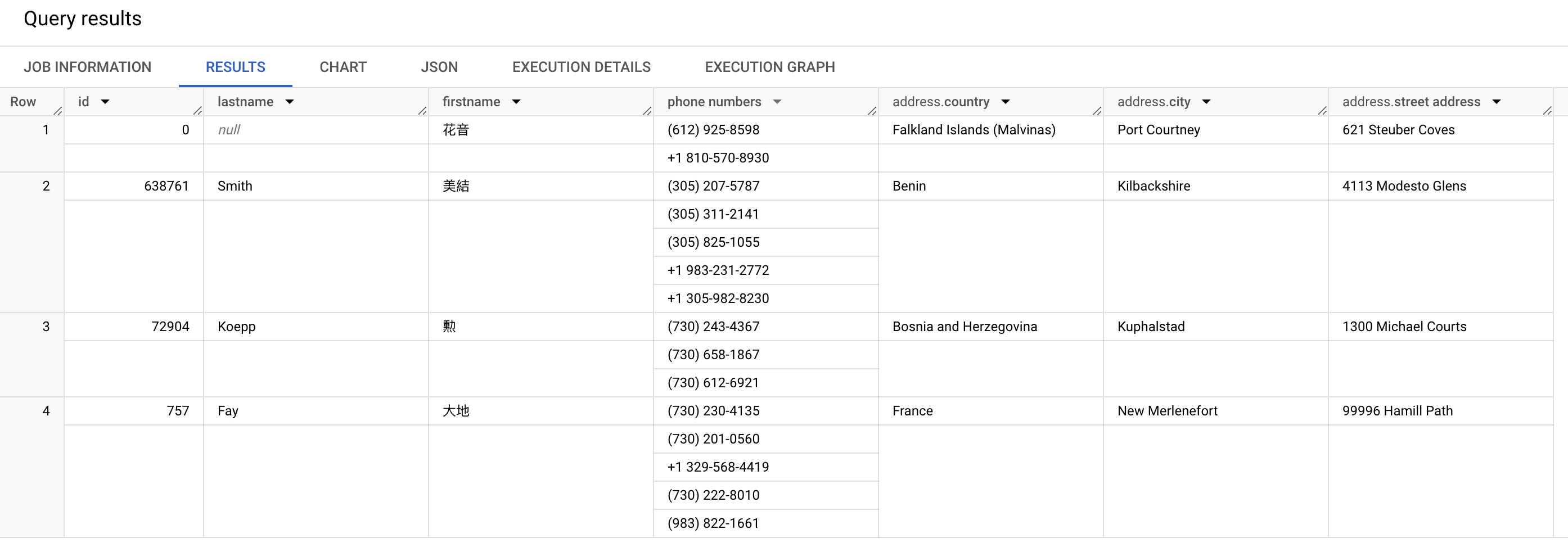

After making use of all the mandatory configurations and operating in my check atmosphere, it might be good to test the end result.

That is the SQL question to retrieve the generated end result:

SELECT

id,

lastname,

firstname,

`telephone numbers`,

tackle

FROM `datafaker.customers`;Right here is the results of all our work (the results of the question):

Solely the primary 4 data are proven right here with all of the fields outlined above. It additionally is sensible to notice that the telephone numbers array area comprises two or extra values relying on the entries. The tackle construction area has three nested fields.

Conclusion

This newly added BigQuery Sink feature enables you to publish records to Google Cloud Platform efficiently. With the ability to generate and publish large volumes of realistic data, developers and data analysts can more effectively simulate the behavior of their applications and immediately start testing in real-world conditions.

Your feedback allows us to evolve this project. Please feel free to leave a comment.

- The full source code is available here.

- I would like to thank Sergey Nuyanzin for reviewing this article.

Thank you for reading! Glad to be of help.