In their seminal 2017 paper, “Attention Is All You Need,” Vaswani et al. introduced the Transformer architecture, revolutionizing not only natural language processing but many other fields as well. This blog post explores the evolution of Transformers, tracing their development from the original design to the most advanced models and highlighting the key advances made along the way.

The Original Transformer

The original Transformer model introduced several groundbreaking concepts:

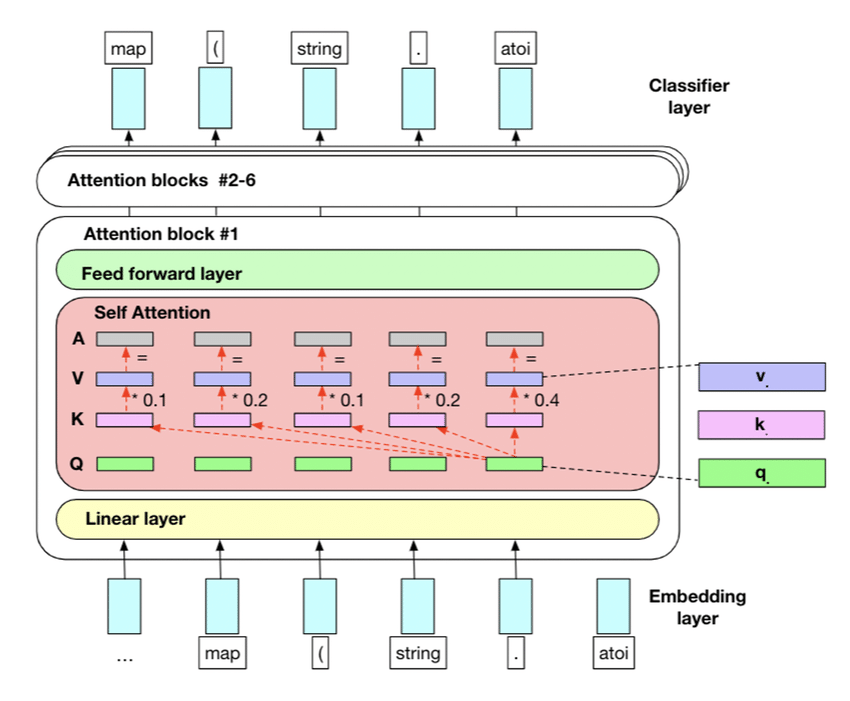

- Self-attention mechanism: Lets the model weigh how relevant every other token in the input sequence is when building the representation of each token.

- Positional encoding: Adds information about each token's position within the sequence. Self-attention by itself ignores order, so this is what lets the model capture word order.

- Multi-head attention: Lets the model attend to different parts of the input sequence in parallel, improving its ability to capture complex relationships.

- Encoder-decoder architecture: Separates the processing of input and output sequences, enabling more efficient and effective sequence-to-sequence learning.
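
To make self-attention and multi-head attention concrete, here is a minimal plain-Python sketch of scaled dot-product attention, softmax(QKᵀ / √d_k) V, as defined in the original paper, plus a multi-head wrapper that splits the projected features into heads. The function names are our own, the projection matrices `w_q`, `w_k`, `w_v`, and `w_o` are assumed to be given (in a real model they are learned), and masking, dropout, biases, and batching are left out.

```python
import math
from typing import List, Tuple

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Product of an (n x m) matrix and an (m x p) matrix, as nested lists."""
    cols = list(zip(*b))
    return [[sum(x * y for x, y in zip(row, col)) for col in cols] for row in a]


def transpose(m: Matrix) -> Matrix:
    return [list(col) for col in zip(*m)]


def softmax(row: List[float]) -> List[float]:
    """Numerically stable softmax: subtract the max before exponentiating."""
    top = max(row)
    exps = [math.exp(v - top) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(q: Matrix, k: Matrix, v: Matrix) -> Tuple[Matrix, Matrix]:
    """Return (softmax(Q K^T / sqrt(d_k)) V, attention weights)."""
    d_k = len(k[0])
    scores = matmul(q, transpose(k))  # scores[i][j]: how well query i matches key j
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    return matmul(weights, v), weights


def multi_head_self_attention(x: Matrix, w_q: Matrix, w_k: Matrix, w_v: Matrix,
                              w_o: Matrix, num_heads: int) -> Matrix:
    """Project x to Q, K, V, attend separately in each head, concatenate, apply W_O."""
    d_model = len(x[0])
    if d_model % num_heads != 0:
        raise ValueError("d_model must be divisible by num_heads")
    d_head = d_model // num_heads
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    heads = []
    for h in range(num_heads):
        lo, hi = h * d_head, (h + 1) * d_head
        # Each head works on its own slice of the projected features.
        out, _ = scaled_dot_product_attention(
            [r[lo:hi] for r in q], [r[lo:hi] for r in k], [r[lo:hi] for r in v])
        heads.append(out)
    # Concatenate the heads position by position, then mix them with W_O.
    concat = [[val for head in heads for val in head[i]] for i in range(len(x))]
    return matmul(concat, w_o)
```

With a single head and identity projections, the multi-head function reduces to plain scaled dot-product self-attention on `x`, which makes a handy sanity check.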

These components combine into a powerful and flexible architecture that outperformed earlier sequence-to-sequence (seq2seq) models, especially on machine translation tasks.
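
The positional encoding from the list above can be sketched with the sinusoidal formula from the original paper: PE(pos, 2i) = sin(pos / 10000^(2i/d_model)) and PE(pos, 2i+1) = cos(pos / 10000^(2i/d_model)). The helper names here are illustrative. Note that many later models, including BERT and GPT, learn their position embeddings instead of using this fixed formula.

```python
import math
from typing import List


def sinusoidal_positional_encoding(seq_len: int, d_model: int) -> List[List[float]]:
    """PE[pos][2i] = sin(pos / 10000^(2i/d_model)), PE[pos][2i+1] = cos(same angle)."""
    pe = []
    for pos in range(seq_len):
        row = []
        for j in range(d_model):
            i = j // 2  # dimensions 2i and 2i+1 share one frequency
            angle = pos / (10000 ** (2 * i / d_model))
            row.append(math.sin(angle) if j % 2 == 0 else math.cos(angle))
        pe.append(row)
    return pe


def add_positional_encoding(embeddings: List[List[float]]) -> List[List[float]]:
    """Add the encoding element-wise to token embeddings of shape (seq_len, d_model)."""
    pe = sinusoidal_positional_encoding(len(embeddings), len(embeddings[0]))
    return [[e + p for e, p in zip(erow, prow)] for erow, prow in zip(embeddings, pe)]
```

Because each dimension pair oscillates at a different frequency, every position gets a distinct pattern, and the encoding is simply added to the token embeddings before the first layer.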

Encoder-Decoder Transformers and Beyond

The original encoder-decoder structure has since been adapted and modified, leading to several notable developments:

- BART (Bidirectional and Auto-Regressive Transformers): Combines a bidirectional encoder with an autoregressive decoder, achieving notable success in text generation.

- T5 (Text-to-Text Transfer Transformer): Recasts every NLP task as a text-to-text problem, facilitating multi-task and transfer learning.

- mT5 (Multilingual T5): Extends T5 to 101 languages, showcasing its adaptability to multilingual settings.

- MASS (Masked Sequence to Sequence Pre-training): Introduces a pre-training objective for sequence-to-sequence learning in which the encoder sees a sentence with a contiguous fragment masked out and the decoder predicts that fragment, improving performance on generation tasks.

- UniLM (Unified Language Model): Integrates bidirectional, unidirectional, and sequence-to-sequence language modeling in a single shared Transformer by varying the self-attention mask, offering a unified approach to many NLP tasks.
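
T5's text-to-text idea is easiest to see in the data format. The sketch below casts labeled examples as (input text, target text) pairs using task prefixes in the style of the T5 paper; the prefix table and the `to_text_to_text` helper are hypothetical illustrations, not part of any library, and the classification label string is chosen for illustration only.

```python
from typing import Dict, Tuple

# Hypothetical prefix table, modeled on the prefixes described in the T5 paper.
TASK_PREFIXES: Dict[str, str] = {
    "translate_en_de": "translate English to German: ",
    "summarize": "summarize: ",
    "cola": "cola sentence: ",
}


def to_text_to_text(task: str, source: str, label: str) -> Tuple[str, str]:
    """Cast one labeled example as an (input text, target text) pair.

    Every task, classification included, becomes "read a string, write a string":
    the task is named by a prefix on the input, and the label is plain text.
    """
    if task not in TASK_PREFIXES:
        raise ValueError(f"unknown task: {task!r}")
    return TASK_PREFIXES[task] + source, label


examples = [
    ("translate_en_de", "That is good.", "Das ist gut."),
    ("cola", "The course is jumping well.", "not acceptable"),  # illustrative label
]
for task, src, tgt in examples:
    print(to_text_to_text(task, src, tgt))
```

Because inputs and outputs are always strings, a single model, loss function, and decoding procedure can serve translation, summarization, and classification alike.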

BERT and the Rise of Pre-Training

BERT (Bidirectional Encoder Representations from Transformers), an encoder-only model introduced by Google in 2018, marked a major milestone in natural language processing. BERT popularized the practice of pre-training on large text corpora and then fine-tuning on specific tasks, leading to a paradigm shift in how NLP tasks are approached. Let's take a closer look at BERT's innovations and their impact.

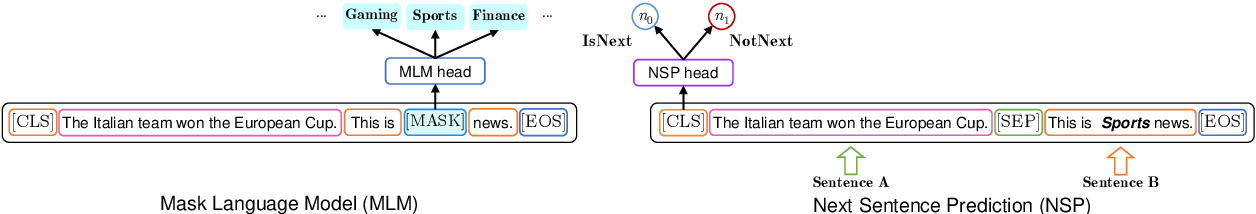

Masked Language Modeling (MLM)

- Process: Randomly selects 15% of the tokens in each sequence as prediction targets. The model then tries to predict these tokens from the surrounding context.

- Bidirectional context: Unlike earlier language models that processed text either left-to-right or right-to-left, MLM lets BERT use context from both directions at once.

- Deeper understanding: Because each hidden token must be recovered from its context, the model is pushed to learn syntax, semantics, and contextual relationships.

- Variant masking: The [MASK] token never appears during fine-tuning or inference, so to keep the model from relying on it, 80% of the selected tokens are replaced by [MASK], 10% by a random token, and 10% are left unchanged.
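
The masking rules above can be sketched in a few lines. This version selects each non-special token independently with probability 0.15 (so roughly, not exactly, 15% of tokens are chosen) and then applies the 80/10/10 split; the function name, the `SPECIAL` set, and the token-string representation are our own assumptions rather than BERT's reference code.

```python
import random
from typing import List, Optional, Sequence, Tuple

MASK = "[MASK]"
SPECIAL = {"[CLS]", "[SEP]"}  # never selected for prediction in this sketch


def mask_tokens(tokens: Sequence[str], vocab: Sequence[str], mask_prob: float = 0.15,
                rng: Optional[random.Random] = None) -> Tuple[List[str], List[Optional[str]]]:
    """BERT-style 80/10/10 masking.

    Returns (inputs, labels): labels[i] holds the original token at positions the
    model must predict and None elsewhere (those positions are ignored by the loss).
    """
    rng = rng or random.Random()
    inputs = list(tokens)
    labels: List[Optional[str]] = [None] * len(tokens)
    for i, tok in enumerate(tokens):
        if tok in SPECIAL or rng.random() >= mask_prob:
            continue  # position not selected for prediction
        labels[i] = tok
        r = rng.random()
        if r < 0.8:
            inputs[i] = MASK               # 80%: replace with [MASK]
        elif r < 0.9:
            inputs[i] = rng.choice(vocab)  # 10%: replace with a random token
        # remaining 10%: keep the original token unchanged
    return inputs, labels


tokens = ["[CLS]", "the", "cat", "sat", "on", "the", "mat", "[SEP]"]
inputs, labels = mask_tokens(tokens, vocab=["dog", "ran", "red"], rng=random.Random(0))
```

The loss is computed only where `labels` is not None, so the model is graded on the selected tokens, including the 10% it sees unchanged.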

Next Sentence Prediction (NSP)

- Process: The model receives pairs of sentences and must predict whether the second sentence follows the first in the original text.

- Implementation: 50% of the time, the second sentence is the actual next sentence, and 50% of the time, it is a random sentence from the corpus.

- Purpose: This task helps BERT learn relationships between sentences, which matters for tasks such as question answering and natural language inference.
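
Building NSP training pairs is straightforward. In this sketch the "random sentence" for a negative example is drawn from a different document, a simplification we assume so that negatives are never accidentally the true next sentence; the helper names are hypothetical, and `to_bert_input` shows the `[CLS] A [SEP] B [SEP]` packing BERT uses for sentence pairs.

```python
import random
from typing import List, Tuple


def make_nsp_examples(documents: List[List[str]], rng: random.Random) -> List[Tuple[str, str, bool]]:
    """Build (sentence_a, sentence_b, is_next) pairs for Next Sentence Prediction.

    With probability 0.5, sentence_b is the sentence that actually follows
    sentence_a (IsNext); otherwise it is drawn from a different document (NotNext).
    Requires at least two non-empty documents.
    """
    if len(documents) < 2:
        raise ValueError("need at least two documents to sample NotNext pairs")
    examples = []
    for d, doc in enumerate(documents):
        for i in range(len(doc) - 1):
            if rng.random() < 0.5:
                examples.append((doc[i], doc[i + 1], True))  # IsNext
            else:
                other = rng.choice([j for j in range(len(documents)) if j != d])
                examples.append((doc[i], rng.choice(documents[other]), False))  # NotNext
    return examples


def to_bert_input(a: str, b: str) -> str:
    """Pack a sentence pair as [CLS] A [SEP] B [SEP]."""
    return f"[CLS] {a} [SEP] {b} [SEP]"
```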

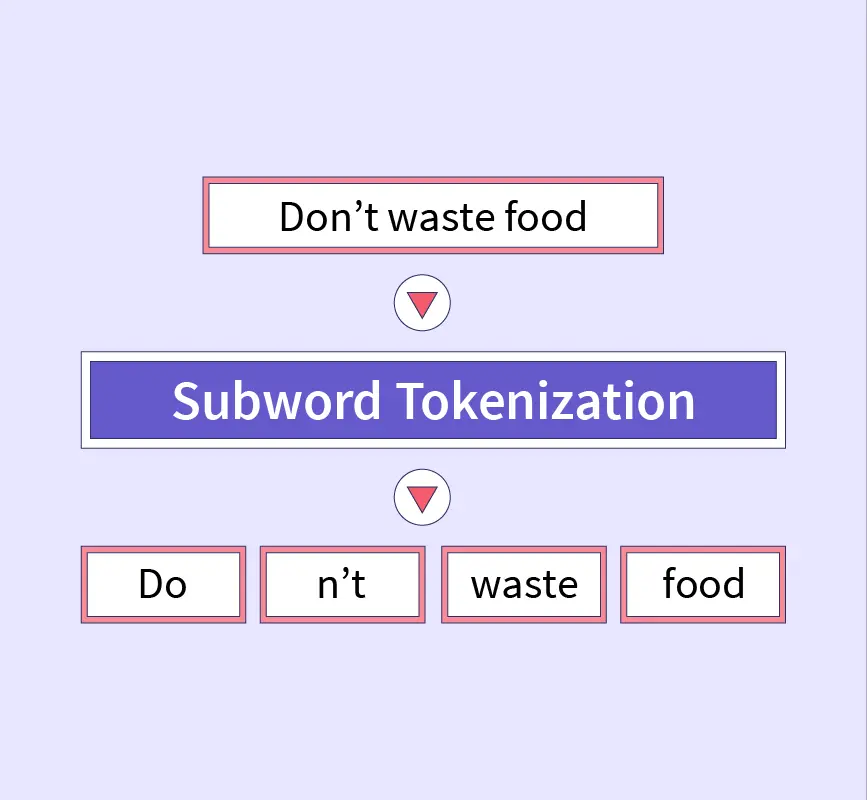

Subword Tokenization

- Process: Words are split into subword units (BERT uses WordPiece with a vocabulary of about 30,000 tokens), balancing vocabulary size against the ability to handle out-of-vocabulary words.

- Advantage: Rare or unseen words can still be represented as sequences of known pieces, which helps BERT cover a wide vocabulary and process morphologically rich languages more effectively.
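
WordPiece segments each word by greedy longest-match-first search: take the longest prefix found in the vocabulary, then repeat on the remainder, marking continuation pieces with `##`. Below is a minimal sketch of that procedure for a single, already lowercased and punctuation-split word; the tiny vocabulary is made up for the example, whereas a real one is learned from data.

```python
from typing import List, Set


def wordpiece_tokenize(word: str, vocab: Set[str], unk: str = "[UNK]") -> List[str]:
    """Split one word into WordPiece units by greedy longest-match-first search.

    Pieces after the first carry a "##" prefix, marking them as word continuations.
    If some remainder cannot be matched at all, the whole word maps to `unk`.
    """
    pieces: List[str] = []
    start = 0
    while start < len(word):
        end = len(word)
        match = None
        while start < end:  # try the longest remaining substring first
            candidate = word[start:end]
            if start > 0:
                candidate = "##" + candidate
            if candidate in vocab:
                match = candidate
                break
            end -= 1
        if match is None:
            return [unk]
        pieces.append(match)
        start = end
    return pieces


vocab = {"un", "##aff", "##able", "play", "##ing", "##ed"}
print(wordpiece_tokenize("unaffable", vocab))  # ['un', '##aff', '##able']
print(wordpiece_tokenize("played", vocab))     # ['play', '##ed']
```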

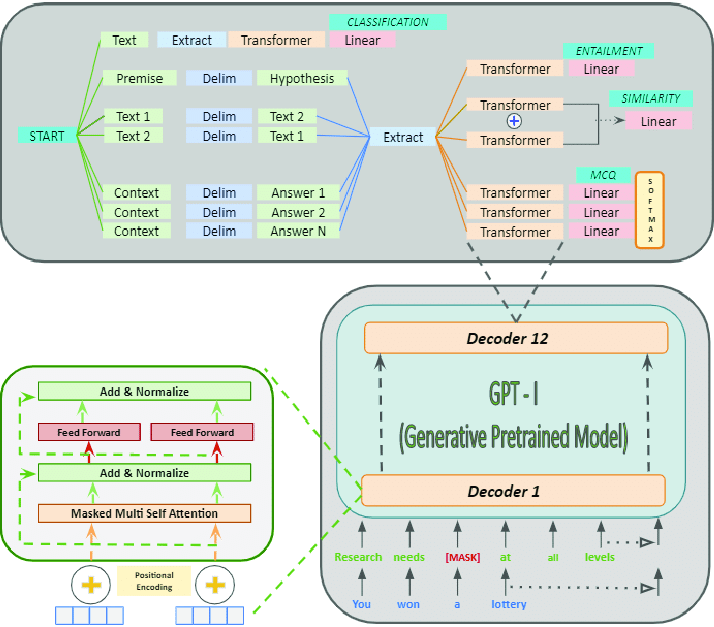

GPT: Generative Pre-Trained Transformers

OpenAI's Generative Pre-trained Transformer (GPT) series marks a major advance in language modeling, building on the Transformer decoder architecture for generation tasks. Each iteration of GPT has brought substantial gains in scale, capability, and impact on natural language processing.

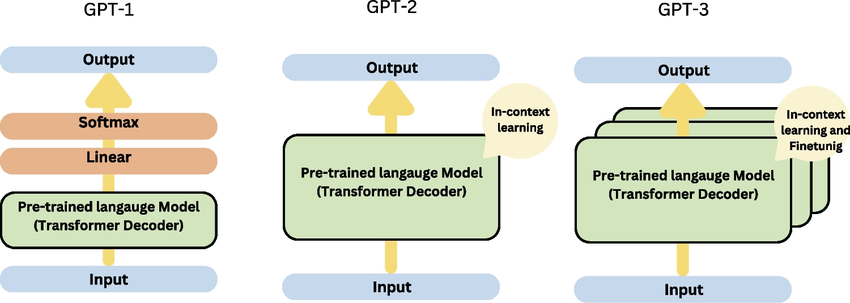

GPT-1 (2018)

The first GPT model introduced generative pre-training on unlabeled text, followed by supervised fine-tuning, as a recipe for language understanding:

- Architecture: A 12-layer Transformer decoder with about 117 million parameters.

- Pre-training: Trained on BooksCorpus, a collection of over 7,000 unpublished books whose long stretches of contiguous text suit language modeling.

- Task: Predicted the next token given all preceding tokens in the text (a left-to-right language modeling objective).

- Innovation: Demonstrated that a single pre-trained model could be fine-tuned for diverse downstream tasks and achieve strong performance without task-specific architectures.

- Implications: GPT-1 showcased the potential of transfer learning in NLP, where a model pre-trained on a large corpus can be fine-tuned for specific tasks with relatively little labeled data.
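
The next-token objective relies on two mechanics: a causal mask, so position i can only attend to positions up to i, and targets that are the input shifted left by one. The sketch below shows both, plus the average negative log-likelihood used as the training loss. The helper names are hypothetical, and the model's log-probabilities are assumed to come from elsewhere.

```python
import math
from typing import List, Sequence, Tuple


def causal_mask(n: int) -> List[List[bool]]:
    """mask[i][j] is True when position i may attend to position j, i.e. j <= i."""
    return [[j <= i for j in range(n)] for i in range(n)]


def masked_softmax(scores: List[List[float]], mask: List[List[bool]]) -> List[List[float]]:
    """Row-wise softmax that gives zero weight to disallowed (future) positions."""
    weights = []
    for row, allowed in zip(scores, mask):
        top = max(s for s, ok in zip(row, allowed) if ok)
        exps = [math.exp(s - top) if ok else 0.0 for s, ok in zip(row, allowed)]
        total = sum(exps)
        weights.append([e / total for e in exps])
    return weights


def shift_for_next_token(token_ids: Sequence[int]) -> Tuple[List[int], List[int]]:
    """Inputs are tokens[:-1]; targets are the same tokens shifted left by one."""
    return list(token_ids[:-1]), list(token_ids[1:])


def next_token_nll(log_probs: List[List[float]], targets: List[int]) -> float:
    """Average negative log-likelihood; log_probs[t][v] is log P(token v at step t)."""
    return -sum(lp[t] for lp, t in zip(log_probs, targets)) / len(targets)
```

Because of the mask, a single forward pass scores every prefix of the sequence at once, which is what makes this objective efficient to train.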

GPT-2 (2019)

GPT-2 substantially increased model size and exhibited impressive zero-shot capabilities:

- Architecture: The largest version had 1.5 billion parameters, more than 10 times as many as GPT-1.

- Training data: Used a much larger and more diverse dataset of web pages, WebText (about 8 million documents, roughly 40 GB of text).

- Capabilities: Demonstrated the ability to generate coherent, contextually relevant text across a variety of topics and styles.

- Zero-shot learning: Showed that it could perform tasks it was not explicitly trained for, such as summarization or translation, purely through how the task was framed in the input prompt.

- Impact: GPT-2 highlighted the scalability of language models and sparked discussions about the ethical implications of powerful text generation systems.
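
The "more than 10 times" figure can be sanity-checked with a common back-of-the-envelope formula: about 12·d_model² weights per layer (4·d² in the attention projections, 8·d² in a feed-forward block that is 4·d wide) plus the embeddings. The layer counts, widths, vocabulary sizes, and context lengths below are the publicly reported configurations as we understand them, not figures from this post, and the formula ignores biases and layer norms, so treat the output as an estimate.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DecoderConfig:
    name: str
    n_layers: int
    d_model: int
    vocab_size: int
    n_positions: int


def approx_params(cfg: DecoderConfig) -> int:
    """Rough parameter count for a GPT-style decoder-only Transformer.

    ~12 * d^2 weights per layer, plus token and learned position embeddings.
    Assumes the output layer shares weights with the token embedding.
    """
    blocks = 12 * cfg.n_layers * cfg.d_model ** 2
    embeddings = (cfg.vocab_size + cfg.n_positions) * cfg.d_model
    return blocks + embeddings


# Assumed hyperparameters (published model configurations, not stated in this post).
GPT1 = DecoderConfig("GPT-1", n_layers=12, d_model=768, vocab_size=40478, n_positions=512)
GPT2_XL = DecoderConfig("GPT-2 (largest)", n_layers=48, d_model=1600,
                        vocab_size=50257, n_positions=1024)

for cfg in (GPT1, GPT2_XL):
    print(f"{cfg.name}: ~{approx_params(cfg) / 1e6:,.0f}M parameters")
print(f"ratio: {approx_params(GPT2_XL) / approx_params(GPT1):.1f}x")
```

The estimate comes out at roughly 116 million for GPT-1 and 1.56 billion for GPT-2, a ratio of about 13, in line with the published 117 million and 1.5 billion figures.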

GPT-3 (2020)

GPT-3 represented a huge leap in scale and capability:

- Architecture: 175 billion parameters, over 100 times as many as GPT-2.

- Training data: Used a vast mix of text, including a filtered version of Common Crawl, WebText2, two book corpora, and English Wikipedia.

- Few-shot learning: Demonstrated a remarkable ability to perform new tasks given only a few examples in the prompt, without any fine-tuning or gradient updates.

- Versatility: Showed proficiency across a wide range of tasks, including translation, question answering, text summarization, and even basic coding.
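
Few-shot learning happens entirely in the prompt: the model is shown a task description, a handful of solved examples, and then an unsolved query, and it continues the text. The sketch below assembles such a prompt in the format of the English-to-French example from the GPT-3 paper; `build_prompt` is a hypothetical helper and no model API is called. Passing zero demonstrations yields the zero-shot variant.

```python
from typing import List, Sequence, Tuple


def build_prompt(instruction: str, demonstrations: Sequence[Tuple[str, str]], query: str,
                 sep: str = " =>") -> str:
    """Assemble an in-context prompt: instruction, k solved examples, then the query.

    k = 0 gives a zero-shot prompt; k > 0 gives a few-shot prompt. No weights are
    updated; the model is simply expected to continue after the final separator.
    """
    lines: List[str] = [instruction]
    lines += [f"{src}{sep} {tgt}" for src, tgt in demonstrations]
    lines.append(f"{query}{sep}")
    return "\n".join(lines)


demos = [("sea otter", "loutre de mer"), ("peppermint", "menthe poivrée")]
print(build_prompt("Translate English to French:", demos, "cheese"))
```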

GPT-4 (2023)

GPT-4 pushes the boundaries of what is possible with language models even further, building on the foundations laid by its predecessors.

- Architecture: OpenAI has not publicly disclosed architectural details or the number of parameters. GPT-4 is widely believed to be larger and more complex than GPT-3, but this has not been officially confirmed.

- Training data: OpenAI describes the training data only in general terms, as a mix of publicly available data (such as internet data) and data licensed from third-party providers.

- Improved few-shot and zero-shot learning: GPT-4 shows an even greater ability to perform new tasks from minimal examples, further reducing the need for task-specific fine-tuning.

- Enhanced contextual understanding: Better contextual awareness lets GPT-4 generate more accurate and appropriate responses, making it more effective in applications such as dialogue systems, content generation, and complex problem-solving.

- Multimodal capabilities: GPT-4 accepts images as well as text as input and produces text output, enabling more sophisticated applications that combine visual and textual information.

- Ethical considerations and safety: OpenAI has placed strong emphasis on the responsible deployment of GPT-4, using measures such as reinforcement learning from human feedback (RLHF) and adversarial testing to reduce harmful outputs and mitigate potential misuse.

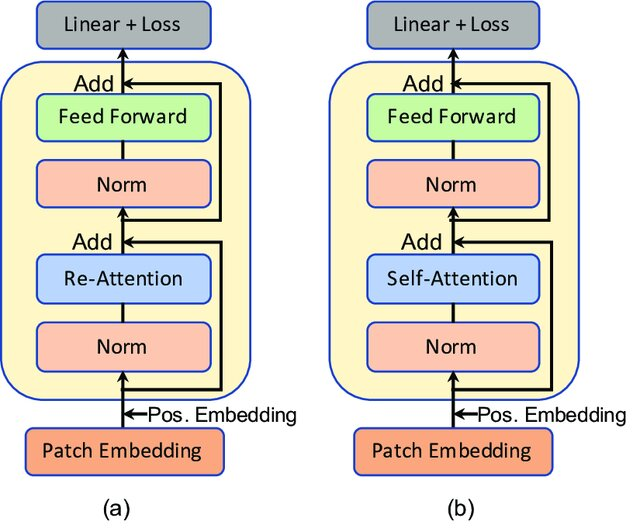

Innovations in Attention Mechanisms

Researchers have proposed various modifications to the attention mechanism, leading to significant advances:

- Sparse attention: Restricts each token to attending to a subset of positions instead of the whole sequence, making long sequences much cheaper to process than with full attention, whose cost grows quadratically with sequence length.

- Adaptive attention: Dynamically adjusts the attention span based on the input, improving the model's ability to handle diverse tasks.

- Cross-attention variants: Improve how decoders attend to encoder outputs, resulting in more accurate and contextually relevant generations.
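
One well-known form of sparse attention combines a local window with a strided pattern, in the spirit of the fixed patterns described for OpenAI's Sparse Transformer. The sketch below only builds boolean masks (True means query i may attend to key j) and measures how many query/key pairs remain; the function names are our own, and real speedups additionally require kernels that skip the masked-out pairs rather than computing and discarding them.

```python
from typing import List

Mask = List[List[bool]]


def full_causal_mask(n: int) -> Mask:
    return [[j <= i for j in range(n)] for i in range(n)]


def local_mask(n: int, window: int) -> Mask:
    """Causal sliding window: position i sees the previous `window` positions, itself included."""
    return [[0 <= i - j < window for j in range(n)] for i in range(n)]


def strided_mask(n: int, stride: int) -> Mask:
    """Causal strided pattern: position i sees every `stride`-th earlier position."""
    return [[j <= i and (i - j) % stride == 0 for j in range(n)] for i in range(n)]


def union(a: Mask, b: Mask) -> Mask:
    return [[x or y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def density(mask: Mask) -> float:
    """Fraction of (query, key) pairs that are actually attended to."""
    return sum(map(sum, mask)) / (len(mask) ** 2)


n, stride = 64, 8
sparse = union(local_mask(n, stride), strided_mask(n, stride))
print(f"full causal: {density(full_causal_mask(n)):.2f}, sparse: {density(sparse):.2f}")
```

Choosing the stride near √n means each query attends to roughly O(√n) keys instead of O(n), while information can still reach any earlier position within two hops (a strided step followed by a local one).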

Conclusion

The evolution of Transformer architectures has been remarkable. From their initial introduction to today's state-of-the-art models, Transformers have consistently pushed the boundaries of what is possible in artificial intelligence. The versatility of the encoder-decoder structure, combined with ongoing innovations in attention mechanisms and model architectures, continues to drive progress in NLP and beyond. As research continues, we can expect further innovations that expand the capabilities and applications of these powerful models across many domains.