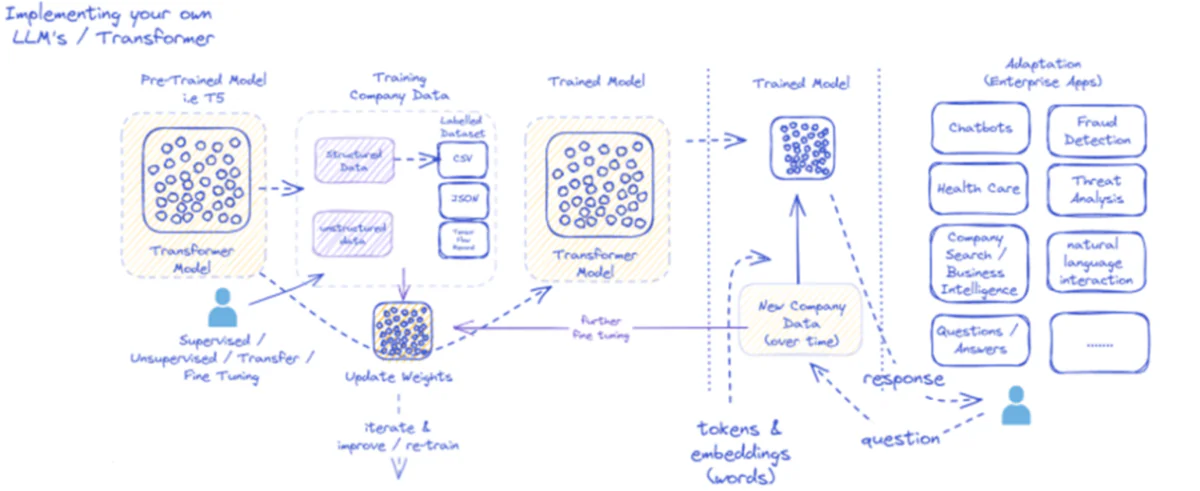

Fine-tuning large language models (LLMs) like Llama 3 involves adapting a pre-trained model to specific tasks using a domain-specific dataset. This process leverages the model’s pre-existing knowledge, making it efficient and cost-effective compared to training from scratch. In this guide, we’ll walk through the steps to fine-tune Llama 3 using QLoRA (Quantized LoRA), a parameter-efficient method that minimizes memory usage and computational costs.

Overview of Fine-Tuning

Fine-tuning involves several key steps:

- Selecting a Pre-trained Model: Choose a base model that aligns with your desired architecture.

- Gathering a Relevant Dataset: Collect and preprocess a dataset specific to your task.

- Fine-Tuning: Adapt the model using the dataset to improve its performance on specific tasks.

- Evaluation: Assess the fine-tuned model using both qualitative and quantitative metrics.

Concepts and Techniques

Fine-Tuning Large Language Models

Full Fine-Tuning

Full fine-tuning updates all the parameters of the model, making it specific to the new task. This method requires significant computational resources and is often impractical for very large models.

Parameter-Efficient Fine-Tuning (PEFT)

PEFT updates only a subset of the model’s parameters, reducing memory requirements and computational cost. Because most of the pre-trained weights stay frozen, this approach also helps limit catastrophic forgetting and preserve the model’s general knowledge.

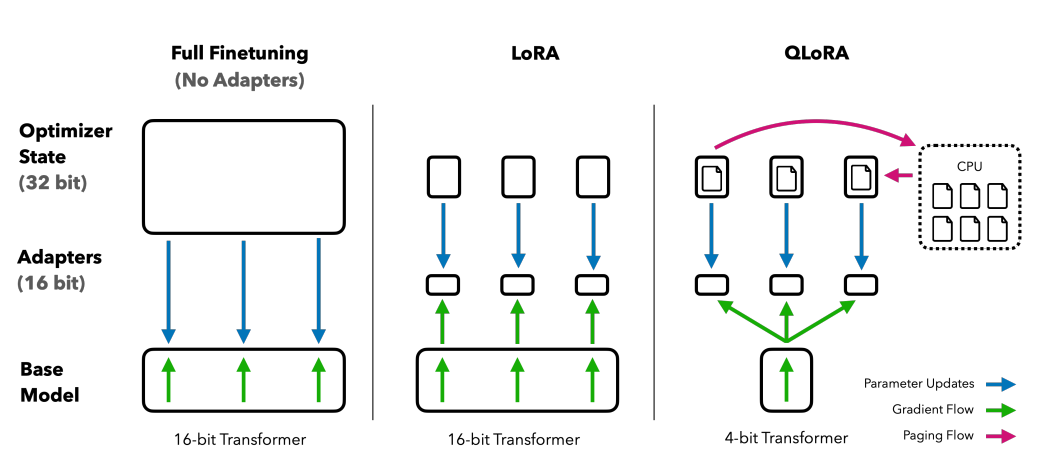

Low-Rank Adaptation (LoRA) and Quantized LoRA (QLoRA)

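LoRA fine-tunes only a few small low-rank matrices while the original weights stay frozen. QLoRA goes a step further by loading the frozen base model in 4-bit precision and training the LoRA matrices on top of it, which reduces the memory footprint further.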

Fine-Tuning Methods

- Full Fine-Tuning: This involves training all the parameters of the model on the task-specific dataset. While this method can be very effective, it is also computationally expensive and requires significant memory.

- Parameter-Efficient Fine-Tuning (PEFT): PEFT updates only a subset of the model’s parameters, making it more memory-efficient. Techniques like Low-Rank Adaptation (LoRA) and Quantized LoRA (QLoRA) fall into this category.

What is LoRA?

Comparing fine-tuning methods: QLoRA enhances LoRA with 4-bit precision quantization and paged optimizers for memory spike management

LoRA is an improved fine-tuning method where, instead of fine-tuning all the weights of the pre-trained model, two smaller matrices whose product approximates the update to a larger weight matrix are fine-tuned. These matrices constitute the LoRA adapter. The fine-tuned adapter is then loaded alongside the pre-trained model and used for inference.
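To make the idea concrete, here is a minimal pure-Python sketch of a LoRA-style forward pass. It assumes a frozen weight matrix W, two small trainable matrices A and B, and a scaling factor alpha / r. The function names, the tiny dimensions, and the numbers are illustrative assumptions and do not come from any library.

```python
# Toy LoRA forward pass: y = W x + (alpha / r) * B (A x).
# All names, sizes, and values here are illustrative assumptions.

def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * v for w, v in zip(row, vector)) for row in matrix]

def lora_forward(W, A, B, x, alpha, r):
    """Frozen base output plus the scaled low-rank update."""
    base = matvec(W, x)                # frozen pre-trained path
    update = matvec(B, matvec(A, x))   # trainable low-rank path
    scale = alpha / r
    return [b + scale * u for b, u in zip(base, update)]

# 3x3 frozen weight with a rank-1 adapter.
W = [[1.0, 0.0, 0.0],
     [0.0, 2.0, 0.0],
     [0.0, 0.0, 3.0]]
A = [[0.5, 0.5, 0.5]]            # shape (r, in_features)
B_zero = [[0.0], [0.0], [0.0]]   # shape (out_features, r), zero-initialised
x = [1.0, 1.0, 1.0]

# With B at zero the adapter is a no-op, so the output equals W x.
y_start = lora_forward(W, A, B_zero, x, alpha=2, r=1)

# Once B has been trained to non-zero values, the output shifts.
B_trained = [[0.1], [0.0], [-0.1]]
y_trained = lora_forward(W, A, B_trained, x, alpha=2, r=1)
```

Only A and B would receive gradient updates in this picture; W is never modified, which is why the same base model can be shared across adapters.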

Key Advantages of LoRA:

- Memory Efficiency: LoRA reduces the memory footprint by fine-tuning only small matrices instead of the entire model.

- Reusability: The original model remains unchanged, and multiple LoRA adapters can be used with it, which makes it easier to handle multiple tasks with lower memory requirements.
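The memory saving can be checked with simple arithmetic. The sketch below compares the trainable parameter count of one full weight matrix with that of a LoRA adapter for the same matrix. The 4096 x 4096 shape and the rank of 16 are assumed values chosen for illustration.

```python
# Trainable parameters for one weight matrix: full fine-tuning vs. LoRA.
# The matrix shape and rank are assumed values for illustration.

def full_params(d_out, d_in):
    """Every entry of the weight matrix is trainable."""
    return d_out * d_in

def lora_params(d_out, d_in, r):
    """B has shape (d_out, r) and A has shape (r, d_in)."""
    return r * (d_out + d_in)

d_out, d_in, r = 4096, 4096, 16
full = full_params(d_out, d_in)
lora = lora_params(d_out, d_in, r)
ratio = lora / full
print(f"full: {full:,}  lora: {lora:,}  fraction trainable: {ratio:.4%}")
```

Under these assumptions the adapter trains well under one percent of the parameters of the matrix it adapts.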

What is Quantized LoRA (QLoRA)?

QLoRA takes LoRA a step further by quantizing the frozen weights of the base model to lower precision (e.g., 4-bit instead of 16-bit), while the LoRA adapter weights are kept in higher precision and remain trainable. This further reduces memory usage while maintaining a comparable level of effectiveness.

Key Advantages of QLoRA:

- Even Greater Memory Efficiency: By quantizing the base model’s weights, QLoRA significantly reduces the memory required to load and fine-tune the model.

- Maintains Performance: Despite the reduced precision, QLoRA maintains performance levels close to those of full-precision models.
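A rough estimate shows why the precision of the stored weights matters so much. The sketch below computes weight memory only, and assumes a round figure of 8 billion parameters. It ignores activations, optimizer state, adapter weights, and quantization constants, so real usage will be higher.

```python
# Back-of-the-envelope weight memory at different precisions.
# Ignores activations, optimizer state, adapters, and quantization constants.

def weight_memory_gb(num_params, bits_per_param):
    """Memory needed to store the weights, in decimal gigabytes."""
    bytes_total = num_params * bits_per_param / 8
    return bytes_total / 1e9

num_params = 8_000_000_000  # assumed round figure for an 8B-parameter model
mem_16bit = weight_memory_gb(num_params, 16)
mem_4bit = weight_memory_gb(num_params, 4)
print(f"16-bit: {mem_16bit:.1f} GB, 4-bit: {mem_4bit:.1f} GB")
```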

Task-Specific Adaptation

During fine-tuning, the model’s parameters are adjusted based on the new dataset, helping it better understand and generate content relevant to the specific task. This process retains the general language knowledge gained during pre-training while tailoring the model to the nuances of the target domain.

Fine-Tuning in Practice

Full Fine-Tuning vs. PEFT

- Full Fine-Tuning: Involves training the entire model, which can be computationally expensive and requires significant memory.

- PEFT (LoRA and QLoRA): Fine-tunes only a subset of parameters, reducing memory requirements and the risk of catastrophic forgetting, making it a more efficient alternative.

Implementation Steps

- Set Up the Environment: Install the necessary libraries and set up the computing environment.

- Load and Preprocess Dataset: Load the dataset and preprocess it into a format suitable for the model.

- Load Pre-trained Model: Load the base model with quantization configurations if using QLoRA.

- Tokenization: Tokenize the dataset to prepare it for training.

- Training: Fine-tune the model using the prepared dataset.

- Evaluation: Evaluate the model’s performance on specific tasks using qualitative and quantitative metrics.

Step-by-Step Guide to Fine-Tuning an LLM

Setting Up the Environment

We’ll use a Jupyter notebook for this tutorial. Platforms like Kaggle, which offer free GPU usage, or Google Colab are ideal for running these experiments.

1. Install Required Libraries

First, ensure you have the required libraries installed:

!pip install -qqq -U bitsandbytes transformers peft accelerate datasets scipy einops evaluate trl rouge_score

2. Import Libraries and Set Up Environment

import os

import torch

from datasets import load_dataset

from transformers import (

AutoModelForCausalLM, AutoTokenizer, BitsAndBytesConfig, TrainingArguments,

pipeline, HfArgumentParser

)

from trl import ORPOConfig, ORPOTrainer, setup_chat_format, SFTTrainer

from tqdm import tqdm

import gc

import pandas as pd

import numpy as np

from huggingface_hub import interpreter_login

# Disable Weights and Biases logging

os.environ['WANDB_DISABLED'] = "true"

interpreter_login()

3. Load the Dataset

We’ll use the DialogSum dataset for this tutorial:

Preprocess the dataset according to the model’s requirements, including applying appropriate templates and ensuring the data format is suitable for fine-tuning (Hugging Face) (DataCamp).

dataset_name = "neil-code/dialogsum-test"

dataset = load_dataset(dataset_name)

Inspect the dataset structure:

print(dataset['test'][0])

4. Create BitsAndBytes Configuration

To load the model in 4-bit format:

compute_dtype = getattr(torch, "float16")

bnb_config = BitsAndBytesConfig(

load_in_4bit=True,

bnb_4bit_quant_type='nf4',

bnb_4bit_compute_dtype=compute_dtype,

bnb_4bit_use_double_quant=False,

)
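To give a feel for what storing weights in 4 bits means, here is a toy pure-Python sketch of blockwise absmax quantization. It is not the NF4 scheme selected in the configuration above, which uses non-uniform levels; this version uses evenly spaced integer codes purely for illustration, and the function names and sample weights are assumptions.

```python
# Toy blockwise absmax quantization to signed integer codes that fit in 4 bits.
# Real NF4 uses non-uniform levels; this only illustrates the general idea.

def quantize_block(values):
    """Map floats to integer codes in [-7, 7] plus one scale per block."""
    absmax = max(abs(v) for v in values) or 1.0  # avoid a zero scale
    scale = absmax / 7
    codes = [round(v / scale) for v in values]
    return codes, scale

def dequantize_block(codes, scale):
    """Recover approximate floats from the codes and the block scale."""
    return [c * scale for c in codes]

weights = [0.70, -0.30, 0.12, 0.00]  # made-up weights for one block
codes, scale = quantize_block(weights)
restored = dequantize_block(codes, scale)
max_error = max(abs(w - r) for w, r in zip(weights, restored))
```

Each weight is stored as a small integer plus one shared scale per block, and the rounding error per weight is at most half a quantization step.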

5. Load the Pre-trained Model

Using Microsoft’s Phi-2 model for this tutorial:

model_name = 'microsoft/phi-2'

device_map = {"": 0}

original_model = AutoModelForCausalLM.from_pretrained(

model_name,

device_map=device_map,

quantization_config=bnb_config,

trust_remote_code=True,

token=True  # replaces the deprecated use_auth_token argument

)

6. Tokenization

Configure the tokenizer:

tokenizer = AutoTokenizer.from_pretrained(

model_name,

trust_remote_code=True,

padding_side="left",

add_eos_token=True,

add_bos_token=True,

use_fast=False

)

tokenizer.pad_token = tokenizer.eos_token
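The padding_side="left" setting matters for decoder-only models because generation continues from the last position of each sequence, so the real tokens should sit at the right edge of the batch. The pure-Python sketch below illustrates left padding and the matching attention mask. The token ids and pad id are made-up values, and pad_left is a hypothetical helper, not a tokenizer method.

```python
# Toy illustration of left padding with an attention mask.
# Token ids and the pad id are made-up values.

def pad_left(sequences, pad_id):
    """Pad every sequence on the left to the length of the longest one."""
    width = max(len(seq) for seq in sequences)
    input_ids, attention_mask = [], []
    for seq in sequences:
        n_pad = width - len(seq)
        input_ids.append([pad_id] * n_pad + list(seq))
        attention_mask.append([0] * n_pad + [1] * len(seq))
    return input_ids, attention_mask

batch = [[11, 12, 13], [21]]
ids, mask = pad_left(batch, pad_id=0)
```

The zeros in the attention mask tell the model to ignore the padded positions.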

Fine-Tuning Llama 3 or Other Models

When fine-tuning models like Llama 3 or any other state-of-the-art open-source LLMs, there are specific considerations and adjustments required to ensure optimal performance. Here are the detailed steps and insights on how to approach this for different models, including Llama 3, GPT-3, and Mistral.

5.1 Using Llama 3

Model Selection:

- Ensure you have the correct model identifier from the Hugging Face model hub. For example, the Llama 3 model might be identified as meta-llama/Meta-Llama-3-8B on Hugging Face.

- Be sure to request access and log in to your Hugging Face account if required for models like Llama 3 (Hugging Face).

Tokenization:

- Use the appropriate tokenizer for Llama 3, ensuring it’s compatible with the model and supports required features like padding and special tokens.

Memory and Computation:

- Fine-tuning large models like Llama 3 requires significant computational resources. Ensure your environment, such as a powerful GPU setup, can handle the memory and processing requirements. The memory requirements can be mitigated by using techniques like QLoRA to reduce the memory footprint (Hugging Face Forums).

Example:

model_name = 'meta-llama/Meta-Llama-3-8B'

device_map = {"": 0}

bnb_config = BitsAndBytesConfig(

load_in_4bit=True,

bnb_4bit_quant_type="nf4",

bnb_4bit_compute_dtype=torch.float16,

bnb_4bit_use_double_quant=True,

)

original_model = AutoModelForCausalLM.from_pretrained(

model_name,

device_map=device_map,

quantization_config=bnb_config,

trust_remote_code=True,

token=True  # replaces the deprecated use_auth_token argument

)

Tokenization:

Depending on the specific use case and model requirements, ensure correct tokenizer configuration without redundant settings. For example, use_fast=True is recommended for better performance (Hugging Face) (Weights & Biases).

tokenizer = AutoTokenizer.from_pretrained(

model_name,

trust_remote_code=True,

padding_side="left",

add_eos_token=True,

add_bos_token=True,

use_fast=True

)

tokenizer.pad_token = tokenizer.eos_token

5.2 Using Other Popular Models (e.g., GPT-3, Mistral)

Model Selection:

- For models like GPT-3 and Mistral, make sure you use the correct model name and identifier from the Hugging Face model hub or other sources. Note that GPT-3’s weights have not been publicly released, so it cannot be downloaded from the Hub; an openly available GPT-style model has to be used instead.

Tokenization:

- As with Llama 3, make sure the tokenizer is correctly set up and compatible with the model.

Memory and Computation:

- Each model may have different memory requirements. Adjust your environment setup accordingly.

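Example for a GPT-style model (GPT-3 itself is not available on the Hub, so GPT-2 is used here as an openly available stand-in):

model_name = 'openai-community/gpt2'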

device_map = {"": 0}

bnb_config = BitsAndBytesConfig(

load_in_4bit=True,

bnb_4bit_quant_type="nf4",

bnb_4bit_compute_dtype=torch.float16,

bnb_4bit_use_double_quant=True,

)

original_model = AutoModelForCausalLM.from_pretrained(

model_name,

device_map=device_map,

quantization_config=bnb_config,

trust_remote_code=True,

token=True  # replaces the deprecated use_auth_token argument

)

Example for Mistral:

model_name = 'mistralai/Mistral-7B-v0.1'

device_map = {"": 0}

bnb_config = BitsAndBytesConfig(

load_in_4bit=True,

bnb_4bit_quant_type="nf4",

bnb_4bit_compute_dtype=torch.float16,

bnb_4bit_use_double_quant=True,

)

original_model = AutoModelForCausalLM.from_pretrained(

model_name,

device_map=device_map,

quantization_config=bnb_config,

trust_remote_code=True,

token=True  # replaces the deprecated use_auth_token argument

)

Tokenization Considerations: Each model may have unique tokenization requirements. Ensure the tokenizer matches the model and is configured correctly.

Llama 3 Tokenizer Example:

tokenizer = AutoTokenizer.from_pretrained(

model_name,

trust_remote_code=True,

padding_side="left",

add_eos_token=True,

add_bos_token=True,

use_fast=True

)

tokenizer.pad_token = tokenizer.eos_token

GPT-Style and Mistral Tokenizer Example:

tokenizer = AutoTokenizer.from_pretrained(

model_name,

use_fast=True

)

7. Test the Model with Zero-Shot Inferencing

Evaluate the base model with a sample input:

from transformers import set_seed

set_seed(42)

index = 10

prompt = dataset['test'][index]['dialogue']

formatted_prompt = f"Instruct: Summarize the following conversation.\n{prompt}\nOutput:\n"

# Generate up to max_new_tokens tokens beyond the prompt

def gen(model, prompt, max_new_tokens):

    inputs = tokenizer(prompt, return_tensors="pt").to(model.device)

    # max_new_tokens counts only generated tokens, so a long prompt does not use up the budget

    outputs = model.generate(**inputs, max_new_tokens=max_new_tokens)

    return tokenizer.batch_decode(outputs, skip_special_tokens=True)

res = gen(original_model, formatted_prompt, 100)

output = res[0].split('Output:\n')[1]

print(f'INPUT PROMPT:\n{formatted_prompt}')

print(f'MODEL GENERATION - ZERO SHOT:\n{output}')

8. Pre-process the Dataset

Convert dialog-summary pairs into prompts:

def create_prompt_formats(sample):

    blurb = "Below is an instruction that describes a task. Write a response that appropriately completes the request."

    instruction = "### Instruct: Summarize the below conversation."

    input_context = sample['dialogue']

    response = f"### Output:\n{sample['summary']}"

    end = "### End"

    parts = [blurb, instruction, input_context, response, end]

    # Join the sections with blank lines between them

    formatted_prompt = "\n\n".join(parts)

    sample["text"] = formatted_prompt

    return sample

dataset = dataset.map(create_prompt_formats)
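At inference time the prompt should mirror this training layout but stop right after the output marker, so that the model fills in the summary. The sketch below shows one way to build such a prompt and to trim the generated text at the end marker. The helper names and the sample dialogue are hypothetical, and the generated text is faked by hand rather than produced by a model.

```python
# Build an inference prompt that mirrors the training template, then trim
# the generated text at the end marker. The sample text is made up.

BLURB = ("Below is an instruction that describes a task. "
         "Write a response that appropriately completes the request.")
INSTRUCTION = "### Instruct: Summarize the below conversation."
OUTPUT_MARKER = "### Output:\n"
END_MARKER = "### End"

def build_inference_prompt(dialogue):
    """Same layout as training, but stop right after the output marker."""
    return "\n\n".join([BLURB, INSTRUCTION, dialogue, "### Output:"]) + "\n"

def extract_summary(generated_text):
    """Keep the text between the output marker and the end marker."""
    after_marker = generated_text.split(OUTPUT_MARKER, 1)[-1]
    return after_marker.split(END_MARKER, 1)[0].strip()

prompt = build_inference_prompt("#Person1#: Lunch at noon?\n#Person2#: Sure.")
fake_generation = prompt + "They agree to have lunch at noon.\n\n### End"
summary = extract_summary(fake_generation)
```

Keeping the markers in shared constants reduces the chance that the training and inference templates drift apart.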

Tokenize the formatted dataset:

def preprocess_batch(batch, tokenizer, max_length):

    return tokenizer(batch["text"], max_length=max_length, truncation=True)

max_length = 1024

train_dataset = dataset["train"].map(lambda batch: preprocess_batch(batch, tokenizer, max_length), batched=True)

eval_dataset = dataset["validation"].map(lambda batch: preprocess_batch(batch, tokenizer, max_length), batched=True)

9. Prepare the Model for QLoRA

Prepare the model for parameter-efficient fine-tuning:

from peft import prepare_model_for_kbit_training

original_model = prepare_model_for_kbit_training(original_model)
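Calling prepare_model_for_kbit_training on its own does not add any trainable adapter weights. A common next step, sketched here under assumptions, is to wrap the model with a LoRA configuration from the peft library. The rank, alpha, dropout, and target-module choice below are illustrative starting values rather than settings taken from this guide, and attach_lora_adapters is a hypothetical helper name.

```python
from peft import LoraConfig, get_peft_model

def attach_lora_adapters(model):
    """Wrap a k-bit-prepared model with trainable LoRA adapters."""
    lora_config = LoraConfig(
        r=16,                         # adapter rank (assumed value)
        lora_alpha=32,                # scaling factor (assumed value)
        lora_dropout=0.05,            # dropout on the adapter path (assumed value)
        target_modules="all-linear",  # adapt the linear layers, excluding the output head
        bias="none",
        task_type="CAUSAL_LM",
    )
    peft_model = get_peft_model(model, lora_config)
    peft_model.print_trainable_parameters()  # reports trainable vs. total parameters
    return peft_model
```

It could be applied as original_model = attach_lora_adapters(original_model) before the trainer is created.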

Hyperparameters and Their Impact

Hyperparameters play a crucial role in optimizing the performance of your model. Here are some key hyperparameters to consider:

- Learning Rate: Controls the size of each update to the model’s parameters. A high learning rate may lead to faster convergence but can overshoot the optimal solution. A low learning rate gives steadier convergence but may require more epochs.

- Batch Size: The number of samples processed before the model updates its parameters. Larger batch sizes can improve stability but require more memory. Smaller batch sizes introduce more noise into the training process.

- Gradient Accumulation Steps: Simulates larger batch sizes by accumulating gradients over several steps before performing a parameter update.

- Number of Epochs: The number of times the entire dataset is passed through the model. More epochs can improve performance but may lead to overfitting if not managed properly.

- Weight Decay: A regularization technique that prevents overfitting by penalizing large weights.

- Learning Rate Scheduler: Adjusts the learning rate during training to improve performance and convergence.

Customize the training configuration by adjusting hyperparameters like learning rate, batch size, and gradient accumulation steps based on the specific model and task requirements. For example, Llama 3 models may require different learning rates compared to smaller models (Weights & Biases) (GitHub).
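The interaction between batch size and gradient accumulation is easy to work out by hand. The sketch below computes the effective batch size and an approximate number of optimizer steps per epoch. The dataset size of 1,000 examples is a hypothetical figure, and the exact step count reported by a training library may differ slightly in how it rounds.

```python
import math

def effective_batch_size(per_device_batch, grad_accum_steps, num_devices=1):
    """Number of samples that contribute to each parameter update."""
    return per_device_batch * grad_accum_steps * num_devices

def optimizer_steps_per_epoch(num_examples, per_device_batch, grad_accum_steps, num_devices=1):
    """Approximate number of parameter updates in one pass over the data."""
    batch = effective_batch_size(per_device_batch, grad_accum_steps, num_devices)
    return math.ceil(num_examples / batch)

# Assumed values: batch size 2 per device, 4 accumulation steps, one GPU,
# and a hypothetical training set of 1,000 examples.
eff = effective_batch_size(2, 4)
steps = optimizer_steps_per_epoch(1000, 2, 4)
```

Doubling the accumulation steps doubles the effective batch size without increasing peak memory, at the cost of fewer updates per epoch.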

Example Training Configuration

orpo_args = ORPOConfig(

    learning_rate=8e-6, lr_scheduler_type="linear", warmup_steps=10,

    max_length=1024, max_prompt_length=512, beta=0.1,

    per_device_train_batch_size=2, per_device_eval_batch_size=2,

    gradient_accumulation_steps=4, optim="paged_adamw_8bit", num_train_epochs=1,

    eval_strategy="steps",  # named evaluation_strategy in older transformers releases

    eval_steps=0.2, logging_steps=1,

    report_to="none",  # Weights and Biases logging was disabled earlier via WANDB_DISABLED

    output_dir="./results/",

)
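To see what a linear scheduler with warmup does to the learning rate, here is a small pure-Python sketch. It uses the learning rate of 8e-6 and the 10 warmup steps from the configuration above, while the total of 110 steps is a hypothetical run length. The function is an illustration of the general shape, not the library's implementation.

```python
def linear_schedule_lr(step, base_lr, warmup_steps, total_steps):
    """Linear warmup from 0 to base_lr, then linear decay back to 0."""
    if step < warmup_steps:
        return base_lr * step / max(1, warmup_steps)
    remaining = max(0, total_steps - step)
    return base_lr * remaining / max(1, total_steps - warmup_steps)

base_lr = 8e-6
warmup_steps = 10
total_steps = 110  # hypothetical run length

lr_start = linear_schedule_lr(0, base_lr, warmup_steps, total_steps)
lr_peak = linear_schedule_lr(10, base_lr, warmup_steps, total_steps)
lr_mid = linear_schedule_lr(60, base_lr, warmup_steps, total_steps)
lr_end = linear_schedule_lr(110, base_lr, warmup_steps, total_steps)
```

The rate climbs during warmup, peaks at the configured value, and then falls steadily to zero by the final step.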

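10. Train the Model

Set up the trainer and start training. Note that ORPOTrainer is a preference-optimization trainer that expects a dataset with prompt, chosen, and rejected fields; for a plain dialogue-summary dataset such as DialogSum, the SFTTrainer imported earlier is the more usual fit.

trainer = ORPOTrainer(

    model=original_model,

    args=orpo_args,

    train_dataset=train_dataset,

    eval_dataset=eval_dataset,

    tokenizer=tokenizer,  # newer trl releases name this argument processing_class

)

trainer.train()

trainer.save_model("fine-tuned-llama-3")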

Evaluating the Fine-Tuned Model

After training, evaluate the model’s performance using both qualitative and quantitative methods.

1. Human Evaluation

Compare the generated summaries with human-written ones to assess the quality.

2. Quantitative Evaluation

Use metrics like ROUGE to assess performance:

from rouge_score import rouge_scorer

scorer = rouge_scorer.RougeScorer(['rouge1', 'rouge2', 'rougeL'], use_stemmer=True)

# reference_summary is the human-written summary; generated_summary is the model output

scores = scorer.score(reference_summary, generated_summary)

print(scores)
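For intuition about what ROUGE-1 measures, here is a simplified pure-Python sketch that computes a unigram-overlap F1 score. It lowercases and splits on whitespace only, with no stemming, so its numbers will not necessarily match those of the rouge_score package. The function name and example sentences are assumptions for illustration.

```python
from collections import Counter

def rouge1_f1(reference, candidate):
    """Unigram-overlap F1 between two whitespace-tokenised, lowercased strings."""
    ref_counts = Counter(reference.lower().split())
    cand_counts = Counter(candidate.lower().split())
    overlap = sum((ref_counts & cand_counts).values())  # clipped matches
    if overlap == 0:
        return 0.0
    precision = overlap / sum(cand_counts.values())
    recall = overlap / sum(ref_counts.values())
    return 2 * precision * recall / (precision + recall)

score = rouge1_f1("the cat sat on the mat", "the cat is on the mat")
```

Five of the six words in each sentence match here, so precision, recall, and F1 all come out to 5/6.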

Common Challenges and Solutions

1. Memory Limitations

Using QLoRA helps mitigate memory issues by quantizing model weights to 4-bit. Ensure you have enough GPU memory to handle your batch size and model size.

2. Overfitting

Monitor validation metrics to prevent overfitting. Use techniques like early stopping and weight decay.
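Early stopping can be expressed as a simple patience rule: stop once the validation loss has failed to improve for a set number of consecutive evaluations. The sketch below is a standalone illustration with made-up loss values and a hypothetical function name; training libraries typically provide their own callback for this.

```python
def early_stopping_step(eval_losses, patience):
    """Return the index of the evaluation at which training would stop,
    or None if the patience budget is never exhausted."""
    best = float("inf")
    bad_evals = 0
    for i, loss in enumerate(eval_losses):
        if loss < best:
            best = loss      # new best: reset the counter
            bad_evals = 0
        else:
            bad_evals += 1   # no improvement at this evaluation
            if bad_evals >= patience:
                return i
    return None

losses = [1.20, 1.05, 0.98, 1.01, 1.03, 1.10]  # made-up validation losses
stop_at = early_stopping_step(losses, patience=2)
```

With these values the best loss occurs at the third evaluation, and training stops two evaluations later.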

3. Slow Training

Improve training throughput by increasing the batch size as far as memory allows, tuning the learning rate, and using gradient accumulation when a larger effective batch does not fit in memory.

4. Data Quality

Ensure your dataset is clean and well-preprocessed. Poor data quality can significantly impact model performance.

Conclusion

Fine-tuning LLMs using QLoRA is an efficient way to adapt large pre-trained models to specific tasks with reduced computational costs. By following this guide, you can fine-tune Phi-2, Llama 3, or any other open-source model to achieve high performance on your specific tasks.