It seems every commercial device now features some implementation of, or an attempt at, speech recognition. From cross-platform voice assistants to transcription services and accessibility tools, and more recently as a differentiator for LLMs, dictation has become an everyday user interface. With the market size of voice-user interfaces (VUI) projected to grow at a CAGR of 23.39% from 2023 to 2028, we can expect many more tech-first companies to adopt it. But how well do you understand the technology?

Let’s start by dissecting and defining the most common technologies that go into making speech recognition possible.

The Mechanics of Speech Recognition: How Does It Work?

Feature Extraction

Before any “recognition” can occur, machines must convert the sound waves we produce into a format they can understand. This process is called pre-processing and feature extraction. The two most common feature extraction techniques are Mel-Frequency Cepstral Coefficients (MFCCs) and Perceptual Linear Predictive (PLP) coefficients.

Mel-Frequency Cepstral Coefficients (MFCCs)

MFCCs capture the power spectrum of audio signals, essentially identifying what makes each sound unique. The process starts by amplifying high frequencies to balance the signal and make it clearer. The signal is then divided into short frames, or snippets of sound, each lasting between 20 and 40 milliseconds. These frames are then analyzed to determine their frequency components. By applying a series of filters that mimic how the human ear perceives audio, MFCCs capture the key, identifiable features of the speech signal. The final step converts these features into a data format that an acoustic model can use.
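The first steps described above can be sketched in a few lines of plain Python. This is a minimal illustration, not a full MFCC implementation: it covers only pre-emphasis, framing, and the mel-scale mapping that the ear-like filters are spaced on. The pre-emphasis coefficient of 0.97, the 25 ms frame with a 10 ms hop, and the function names are assumptions chosen for illustration, and the synthetic tone stands in for recorded speech.

```python
import math

def pre_emphasize(signal, coeff=0.97):
    """Boost high frequencies: y[n] = x[n] - coeff * x[n-1] (coeff is an assumed value)."""
    if not signal:
        return []
    return [signal[0]] + [signal[n] - coeff * signal[n - 1]
                          for n in range(1, len(signal))]

def split_into_frames(signal, sample_rate, frame_ms=25, hop_ms=10):
    """Cut the signal into overlapping frames of frame_ms milliseconds."""
    frame_len = int(sample_rate * frame_ms / 1000)
    hop_len = int(sample_rate * hop_ms / 1000)
    return [signal[start:start + frame_len]
            for start in range(0, len(signal) - frame_len + 1, hop_len)]

def hz_to_mel(freq_hz):
    """Map a frequency in Hz onto the perceptual mel scale (one common formula)."""
    return 2595.0 * math.log10(1.0 + freq_hz / 700.0)

sample_rate = 16000
# One second of a synthetic 440 Hz tone standing in for recorded speech.
tone = [math.sin(2 * math.pi * 440 * n / sample_rate) for n in range(sample_rate)]
frames = split_into_frames(pre_emphasize(tone), sample_rate)
```

In a complete pipeline, each frame would then be windowed, transformed to a power spectrum, passed through mel-spaced filters, and decorrelated to give the final coefficients; in practice an audio library would typically handle all of these steps.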

Perceptual Linear Predictive (PLP) Coefficients

PLP coefficients aim to mimic the human auditory system’s response as closely as possible. Like MFCCs, PLP filters sound frequencies to simulate the human ear. After filtering, the dynamic range (the sample’s range of “loudness”) is compressed to reflect how our hearing responds differently to various volumes. In the final step, PLP estimates the “spectral envelope,” which is a way of capturing the most essential characteristics of the speech signal. This process increases the reliability of speech recognition systems, especially in noisy environments.
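To make the filtering and compression steps more concrete, here is a small sketch of two ingredients commonly associated with PLP: a Bark-scale frequency mapping and cube-root loudness compression. The function names and the sample band energies are hypothetical, and the sketch deliberately leaves out the equal-loudness weighting and the linear-prediction step that estimates the spectral envelope.

```python
import math

def hz_to_bark(freq_hz):
    """Warp a frequency in Hz onto the Bark scale, an ear-like frequency axis."""
    return 6.0 * math.asinh(freq_hz / 600.0)

def compress_loudness(band_energies):
    """Cube-root compression: large differences in energy become small ones."""
    return [energy ** (1.0 / 3.0) for energy in band_energies]

# Hypothetical filter-bank energies spanning a 1000:1 dynamic range.
band_energies = [1.0, 8.0, 1000.0]
compressed = compress_loudness(band_energies)
# After compression the same bands span roughly a 10:1 range.
```

The compression is what lets a quiet band still contribute to the features instead of being swamped by a loud one.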

Acoustic Modeling

Acoustic modeling is the heart of speech recognition systems. It forms the statistical relationship between the audio signals (sound) and the phonetic units of speech (the distinct sounds that make up a language). The most widely used techniques include Hidden Markov Models (HMM) and, more recently, Deep Neural Networks (DNN).

Hidden Markov Models (HMM)

HMMs have been a cornerstone of pattern recognition engineering since the late 1960s. They are particularly effective for speech processing because they break down spoken words into smaller, more manageable parts known as phonemes. Each extracted phoneme is associated with a state in the HMM, and the model computes the probability of transitioning from one state to another. This probabilistic approach allows the system to infer words from the acoustic signals, even in the presence of noise and variation between individual speakers.
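A toy example may help show how state and transition probabilities turn into a decoded phoneme sequence. The sketch below uses the Viterbi algorithm, a standard way to find the most likely state path in an HMM. Everything about the model is hypothetical: the three phoneme states for the word "cat", the coarse acoustic symbols, and all of the probabilities are made up for illustration, whereas a real system would score continuous feature vectors.

```python
def viterbi(observations, states, start_prob, trans_prob, emit_prob):
    """Return the most likely state sequence for the observations."""
    # best[t][s] = (probability of the best path ending in s at time t, previous state)
    best = [{s: (start_prob[s] * emit_prob[s][observations[0]], None) for s in states}]
    for obs in observations[1:]:
        column = {}
        for s in states:
            column[s] = max(
                (best[-1][p][0] * trans_prob[p][s] * emit_prob[s][obs], p)
                for p in states
            )
        best.append(column)
    # Trace back from the most probable final state.
    state = max(states, key=lambda s: best[-1][s][0])
    path = [state]
    for column in reversed(best[1:]):
        state = column[state][1]
        path.append(state)
    return list(reversed(path))

# Hypothetical left-to-right model for the phonemes of "cat".
states = ["k", "ae", "t"]
start_prob = {"k": 1.0, "ae": 0.0, "t": 0.0}
trans_prob = {
    "k": {"k": 0.5, "ae": 0.5, "t": 0.0},
    "ae": {"k": 0.0, "ae": 0.6, "t": 0.4},
    "t": {"k": 0.0, "ae": 0.0, "t": 1.0},
}
emit_prob = {
    "k": {"burst": 0.8, "voiced": 0.1, "silence": 0.1},
    "ae": {"burst": 0.1, "voiced": 0.8, "silence": 0.1},
    "t": {"burst": 0.3, "voiced": 0.1, "silence": 0.6},
}
observations = ["burst", "voiced", "voiced", "silence"]
path = viterbi(observations, states, start_prob, trans_prob, emit_prob)
# path is ["k", "ae", "ae", "t"]: the vowel state is held for two frames.
```

Because the model scores whole paths rather than individual frames, a single noisy frame is less likely to derail the result.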

Deep Neural Networks (DNN)

In recent years, closely paralleling the growth of and interest in AI and machine learning, DNNs have become the first choice for natural language processing (NLP). Unlike HMMs, which rely on predefined states and transitions, DNNs learn directly from the data. They consist of multiple layers of interconnected neurons, which progressively extract higher-level representations of the data. By focusing on context and the relationships between certain words and sounds, DNNs can capture much more complex patterns in speech. This allows them to outperform HMMs in accuracy and robustness and, with additional training, to adapt to accents, dialects, and speaking styles, a huge advantage in an increasingly multilingual world.
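The idea of stacked layers can be illustrated with a tiny feed-forward pass in plain Python. This is only a sketch of the structure: the layer sizes, the weights, and the three-value feature vector are hypothetical, and a real acoustic model would learn its weights from data and be far larger.

```python
import math

def relu(values):
    """Keep positive activations and zero out negative ones."""
    return [max(0.0, v) for v in values]

def softmax(values):
    """Turn raw scores into probabilities that sum to 1."""
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]

def dense(inputs, weights, biases):
    """One fully connected layer: a weighted sum of inputs plus a bias per neuron."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]

def forward(features, layers):
    """Run features through hidden layers (ReLU) and a softmax output layer."""
    activations = features
    for weights, biases in layers[:-1]:
        activations = relu(dense(activations, weights, biases))
    weights, biases = layers[-1]
    return softmax(dense(activations, weights, biases))

# Hypothetical network: 3 input features -> 2 hidden neurons -> 3 phoneme classes.
layers = [
    ([[0.5, -0.2, 0.1], [-0.3, 0.8, 0.4]], [0.0, 0.1]),
    ([[1.0, -1.0], [-0.5, 0.5], [0.2, 0.2]], [0.0, 0.0, 0.0]),
]
phoneme_probs = forward([1.0, 2.0, 3.0], layers)
```

Each output value can be read as the network's estimate that the current frame belongs to one phoneme class; training adjusts the weights so those estimates match labeled speech.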

Looking Ahead: Challenges and Innovation

Speech recognition technology has made great strides but, as any user will acknowledge, is still far from perfect. Background noise, multiple speakers, accents, and latency are as yet unsolved challenges. As engineers have come to recognize the potential of networked models, one promising innovation is the use of hybrid solutions that leverage the strengths of both HMMs and DNNs. An additional benefit of expanding AI research is the application of deep learning across domains, with Convolutional Neural Networks (CNN), traditionally used in image analysis, showing promising results for speech processing. Another exciting development is the use of transfer learning, where models trained on large datasets can be fine-tuned for specific tasks and languages with comparatively smaller companion datasets. This reduces the time and resources required to develop performant speech recognition for new applications, allowing for a greener approach to repeated model deployments.
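As one illustration of how an HMM and a DNN can be combined, a common hybrid arrangement lets the network estimate the probability of each state given a frame, then divides by how often each state occurs overall so the result can stand in for the HMM's emission score. The sketch below assumes that arrangement; the function name and all numbers are hypothetical.

```python
def scaled_likelihoods(posteriors, priors):
    """Divide DNN state posteriors p(state | frame) by state priors p(state)
    to get scores usable in place of HMM emission probabilities."""
    return {state: posteriors[state] / priors[state] for state in posteriors}

# Hypothetical DNN output for one frame, and hypothetical state frequencies.
posteriors = {"k": 0.7, "ae": 0.2, "t": 0.1}
priors = {"k": 0.2, "ae": 0.5, "t": 0.3}
scores = scaled_likelihoods(posteriors, priors)
```

The HMM still handles timing and sequence structure, while the network supplies the frame-level evidence.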

Bringing It All Together: Real-World Applications

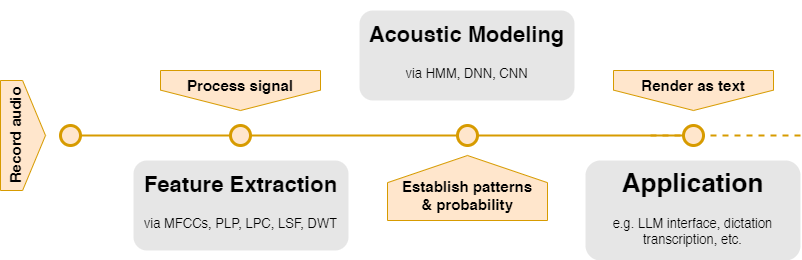

To recap, feature extraction and acoustic modeling work in tandem to form what is known as a speech recognition system. The process begins with the conversion of sound waves into manageable data using pre-processing and feature extraction. These data points, or features, are then fed into acoustic models, which interpret them and convert the inputs into text. From there, other applications can readily engage with the speech input.

From the noisiest, most time-sensitive environments, like automotive interfaces, to accessibility alternatives on personal devices, we are steadily trusting this technology with more critical functions. As someone deeply engaged in improving this technology, I believe understanding these mechanics is not just academic; it should inspire technologists to appreciate these tools and their potential to improve accessibility, usability, and efficiency in users’ experiences. As VUI becomes increasingly associated with large language models (LLM), engineers and designers should familiarize themselves with what may become the most common interface for real-world applications of generative AI.