In this article, we will build an advanced data model and use it for ingestion and various search options. For the notebook portion, we will run a hybrid multi-vector search, re-rank the results, and display the resulting text and images.

- Ingest data fields, enrich data with lookups, and format: Learn to ingest data, including JSON and images, and to format and transform it to optimize hybrid searches. This is done inside the streetcams.py application.

- Store data in Milvus: Learn to store data in Milvus, an efficient vector database designed for high-speed similarity searches and AI applications. In this step, we optimize the data model with scalar fields and multiple vector fields: one for text and one for the camera image. We do this in the streetcams.py application.

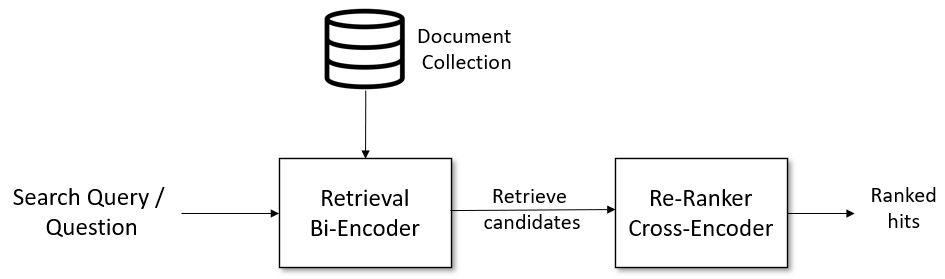

- Use open source models for data queries in a hybrid multi-modal, multi-vector search: Discover how to use scalars and multiple vectors to query data stored in Milvus and re-rank the final results in this notebook.

- Display the resulting text and images: Build a quick output for validation and checking in this notebook.

- Simple Retrieval-Augmented Generation (RAG) with LangChain: Build a simple Python RAG application (streetcamrag.py) that uses Milvus to ask about the current weather via Ollama. While outputting to the screen, we also send the results to Slack formatted as Markdown.

Summary

By the end of this application, you will have a comprehensive understanding of using Milvus, ingesting semi-structured and unstructured data, and using open source models to build a robust and efficient data retrieval system. For future enhancements, we can use these results to build LLM prompts and Slack bots, stream data to Apache Kafka, and power a street camera search engine.

Milvus: Open Source Vector Database Built for Scale

Milvus is a popular open-source vector database that powers applications with highly performant and scalable vector similarity searches. Milvus has a distributed architecture that separates compute and storage and distributes data and workloads across multiple nodes. This is one of the primary reasons Milvus is highly available and resilient. Milvus is optimized for various hardware and supports a wide range of indexes.

You can get more details in the Milvus Quickstart.

For other options for running Milvus, check out the deployment page.

New York City 511 Data

- REST feed of street camera information with latitude, longitude, roadway name, camera name, camera URL, disabled flag, and blocked flag:

{

"Latitude": 43.004452, "Longitude": -78.947479, "ID": "NYSDOT-badsfsfs3",

"Name": "I-190 at Interchange 18B", "DirectionOfTravel": "Unknown",

"RoadwayName": "I-190 Niagara Thruway",

"Url": "https://nyimageurl",

"VideoUrl": "https://camera:443/rtplive/dfdf/playlist.m3u8",

"Disabled":true, "Blocked":false

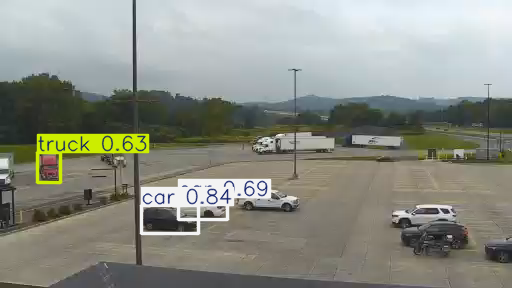

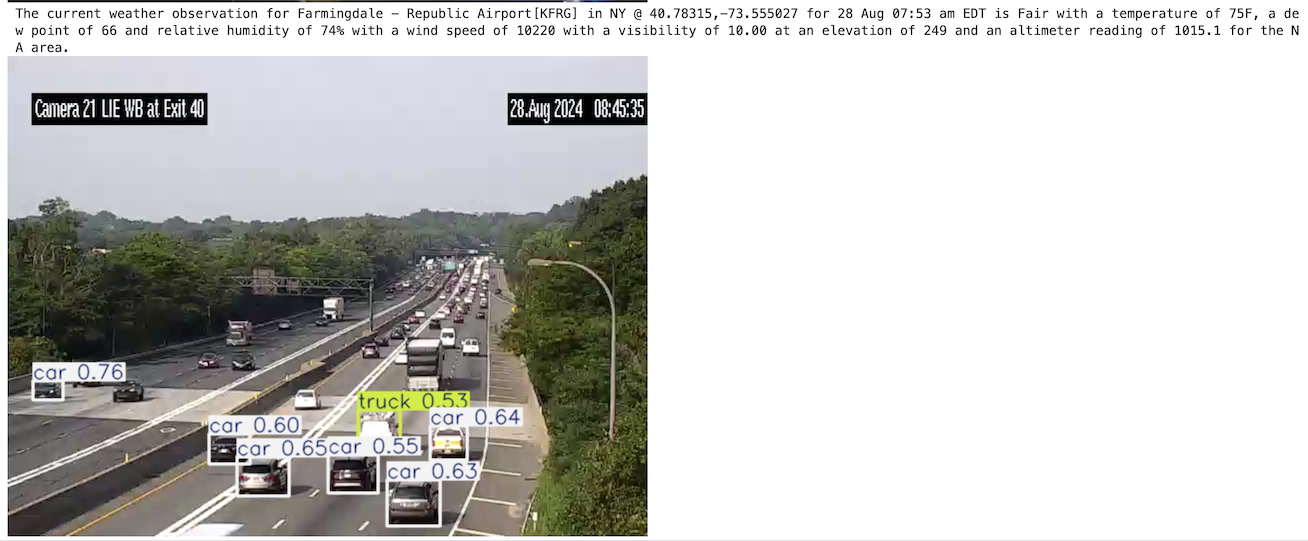

}- We then ingest the picture from the digicam URL endpoint for the digicam picture:

- After we run it by Ultralytics YOLO, we are going to get a marked-up model of that digicam picture.

NOAA Weather Current Conditions for Lat/Long

We also ingest a REST feed of weather conditions for the latitude and longitude passed in from the camera record. It includes elevation, observation date, wind speed, wind direction, visibility, relative humidity, and temperature.

"currentobservation":{

"id":"KLGA",

"name":"New York, La Guardia Airport",

"elev":"20",

"latitude":"40.78",

"longitude":"-73.88",

"Date":"27 Aug 16:51 pm EDT",

"Temp":"83",

"Dewp":"60",

"Relh":"46",

"Winds":"14",

"Windd":"150",

"Gust":"NA",

"Weather":"Partly Cloudy",

"Weatherimage":"sct.png",

"Visibility":"10.00",

"Altimeter":"1017.1",

"SLP":"30.04",

"timezone":"EDT",

"state":"NY",

"WindChill":"NA"

}

Ingest and Enrichment

- We ingest data from the NY REST feed in our Python loading script.

- Our streetcams.py Python script does the ingest, processing, and enrichment.

- We iterate through the JSON results from the REST call, enrich and update each record, and run YOLO predict; then we run a NOAA weather lookup on the latitude and longitude provided.
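The merge step can be sketched in plain Python. This is a minimal sketch, not the code in streetcams.py: the helper name build_record, the mapping of NOAA keys onto the Milvus field names used in this article, and the wording of the weatherdetails sentence are all assumptions. The HTTP calls, YOLO, and the fields that come from elsewhere (filepath, creationdate, areadescription, county, metar) are left out so the example runs offline.

```python
def build_record(camera: dict, obs: dict) -> dict:
    """Merge one 511 camera entry with one NOAA current observation.

    Hypothetical helper: keys on the left follow the Milvus schema in this
    article; keys on the right follow the sample JSON payloads above.
    """
    record = {
        "latitude": str(camera["Latitude"]),
        "longitude": str(camera["Longitude"]),
        "name": camera["Name"],
        "roadwayname": camera["RoadwayName"],
        "directionoftravel": camera["DirectionOfTravel"],
        "videourl": camera["VideoUrl"],
        "url": camera["Url"],
        "weatherid": obs["id"],
        "weathername": obs["name"],
        "elevation": obs["elev"],
        "observationdate": obs["Date"],
        # The schema declares temperature as FLOAT, but NOAA sends a string.
        "temperature": float(obs["Temp"]),
        "dewpoint": obs["Dewp"],
        "relativehumidity": obs["Relh"],
        "windspeed": obs["Winds"],
        "winddirection": obs["Windd"],
        "gust": obs["Gust"],
        "weather": obs["Weather"],
        "visibility": obs["Visibility"],
        "altimeter": obs["Altimeter"],
        "slp": obs["SLP"],
        "timezone": obs["timezone"],
        "state": obs["state"],
        "windchill": obs["WindChill"],
    }
    # A short sentence like this is the text that gets embedded into
    # weather_text_vector (the exact wording is an assumption).
    record["weatherdetails"] = (
        f"{obs['Weather']} at {obs['name']}, temperature {obs['Temp']}, "
        f"relative humidity {obs['Relh']}, wind speed {obs['Winds']} "
        f"from {obs['Windd']} degrees, visibility {obs['Visibility']}."
    )
    return record
```

Keeping the merge in a pure function like this makes it easy to check against saved sample payloads before any network, YOLO, or Milvus call is involved.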

Build a Milvus Data Schema

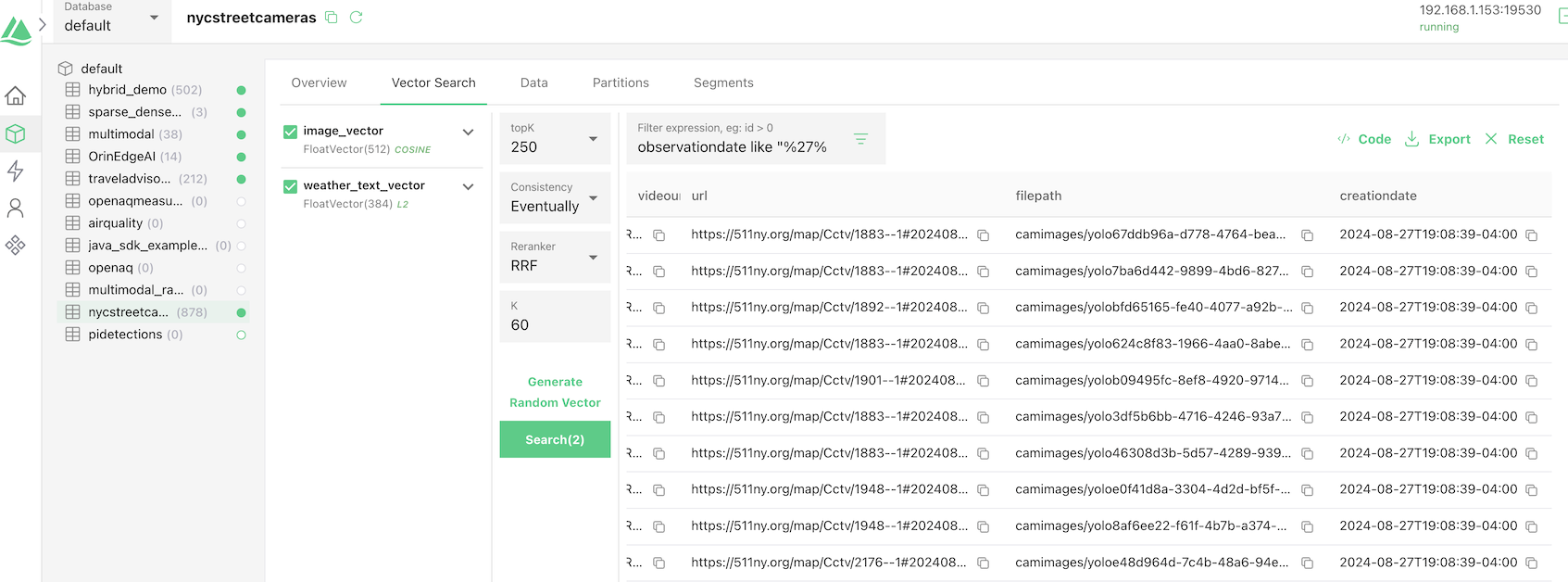

- We name our collection "nycstreetcameras".

- We add fields for metadata, a primary key, and vectors.

- We have many VARCHAR fields for things like roadwayname, county, and weathername.

from pymilvus import CollectionSchema, DataType, FieldSchema

fields = [

FieldSchema(name="id", dtype=DataType.INT64, is_primary=True, auto_id=True),

FieldSchema(name="latitude", dtype=DataType.VARCHAR, max_length=200),

FieldSchema(name="longitude", dtype=DataType.VARCHAR, max_length=200),

FieldSchema(name="name", dtype=DataType.VARCHAR, max_length=200),

FieldSchema(name="roadwayname", dtype=DataType.VARCHAR, max_length=200),

FieldSchema(name="directionoftravel", dtype=DataType.VARCHAR, max_length=200),

FieldSchema(name="videourl", dtype=DataType.VARCHAR, max_length=200),

FieldSchema(name="url", dtype=DataType.VARCHAR, max_length=200),

FieldSchema(name="filepath", dtype=DataType.VARCHAR, max_length=200),

FieldSchema(name="creationdate", dtype=DataType.VARCHAR, max_length=200),

FieldSchema(name="areadescription", dtype=DataType.VARCHAR, max_length=200),

FieldSchema(name="elevation", dtype=DataType.VARCHAR, max_length=200),

FieldSchema(name="county", dtype=DataType.VARCHAR, max_length=200),

FieldSchema(name="metar", dtype=DataType.VARCHAR, max_length=200),

FieldSchema(name="weatherid", dtype=DataType.VARCHAR, max_length=200),

FieldSchema(name="weathername", dtype=DataType.VARCHAR, max_length=200),

FieldSchema(name="observationdate", dtype=DataType.VARCHAR, max_length=200),

FieldSchema(name="temperature", dtype=DataType.FLOAT),

FieldSchema(name="dewpoint", dtype=DataType.VARCHAR, max_length=200),

FieldSchema(name="relativehumidity", dtype=DataType.VARCHAR, max_length=200),

FieldSchema(name="windspeed", dtype=DataType.VARCHAR, max_length=200),

FieldSchema(name="winddirection", dtype=DataType.VARCHAR, max_length=200),

FieldSchema(name="gust", dtype=DataType.VARCHAR, max_length=200),

FieldSchema(name="weather", dtype=DataType.VARCHAR, max_length=200),

FieldSchema(name="visibility", dtype=DataType.VARCHAR, max_length=200),

FieldSchema(name="altimeter", dtype=DataType.VARCHAR, max_length=200),

FieldSchema(name="slp", dtype=DataType.VARCHAR, max_length=200),

FieldSchema(name="timezone", dtype=DataType.VARCHAR, max_length=200),

FieldSchema(name="state", dtype=DataType.VARCHAR, max_length=200),

FieldSchema(name="windchill", dtype=DataType.VARCHAR, max_length=200),

FieldSchema(name="weatherdetails", dtype=DataType.VARCHAR, max_length=8000),

FieldSchema(name="image_vector", dtype=DataType.FLOAT_VECTOR, dim=512),

FieldSchema(name="weather_text_vector", dtype=DataType.FLOAT_VECTOR, dim=384),

]

schema = CollectionSchema(fields=fields)

The two vectors are image_vector and weather_text_vector, which hold an image vector and a text vector. We add an index for the primary key id and for each vector. There are many options for these indexes, and they can greatly improve performance.

Insert Data Into Milvus

We then do a simple insert into our collection, with our scalar fields matching the schema names and types. We have to run an embedding function on our image and weather text before inserting. Then we insert our record.
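Since most scalar fields are VARCHAR with max_length=200 (8000 for weatherdetails) and temperature is FLOAT, values from the REST feeds need coercing before the insert. A minimal sketch under that assumption; coerce_to_schema, fit_varchar, and the limit tables are hypothetical names that mirror the schema above, and the embedding vectors are passed through unchanged. It truncates by UTF-8 bytes, which stays within the limit whether Milvus counts the length in bytes or in characters.

```python
from typing import Any, Dict

# Hypothetical limits mirroring the schema above; unlisted VARCHAR fields use 200.
VARCHAR_LIMITS = {"weatherdetails": 8000}
DEFAULT_VARCHAR_LIMIT = 200
FLOAT_FIELDS = {"temperature"}
VECTOR_FIELDS = {"image_vector", "weather_text_vector"}


def fit_varchar(value: Any, limit: int) -> str:
    """Convert to str and cut to at most `limit` UTF-8 bytes."""
    raw = str(value).encode("utf-8")[:limit]
    # errors="ignore" drops a multi-byte character that the cut split in half.
    return raw.decode("utf-8", errors="ignore")


def coerce_to_schema(record: Dict[str, Any]) -> Dict[str, Any]:
    """Return a copy of `record` whose values match the declared field types."""
    row: Dict[str, Any] = {}
    for field, value in record.items():
        if field in VECTOR_FIELDS:
            row[field] = list(value)
        elif field in FLOAT_FIELDS:
            # Raises ValueError for non-numeric strings such as "NA".
            row[field] = float(value)
        else:
            limit = VARCHAR_LIMITS.get(field, DEFAULT_VARCHAR_LIMIT)
            row[field] = fit_varchar(value, limit)
    return row
```

The resulting row, with both embeddings filled in, is what would go into MilvusClient.insert(collection_name="nycstreetcameras", data=[row]). The id field is omitted because the schema sets auto_id=True.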

We can then check our data with Attu.

Building a Notebook for Reporting

We build a Jupyter notebook to query and report on our multi-vector dataset.

Prepare Hugging Face Sentence Transformers for Embedding Sentence Text

We use a model from Hugging Face, "all-MiniLM-L6-v2", a sentence transformer, to build dense embeddings for our short text strings. This text is a short description of the weather details for the location nearest to our street camera. The model produces 384-dimensional vectors, which matches dim=384 on weather_text_vector.

Prepare Embedding Model for Images

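We use a standard resnet34 PyTorch feature extractor that we often use for images. ResNet-34's pooled features, taken before the final classification layer, are 512-dimensional, which matches dim=512 on image_vector.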

Instantiate Milvus

As stated earlier, Milvus is a popular open-source vector database that powers AI applications with highly performant and scalable vector similarity search.

- For our example, we are connecting to Milvus running in Docker.

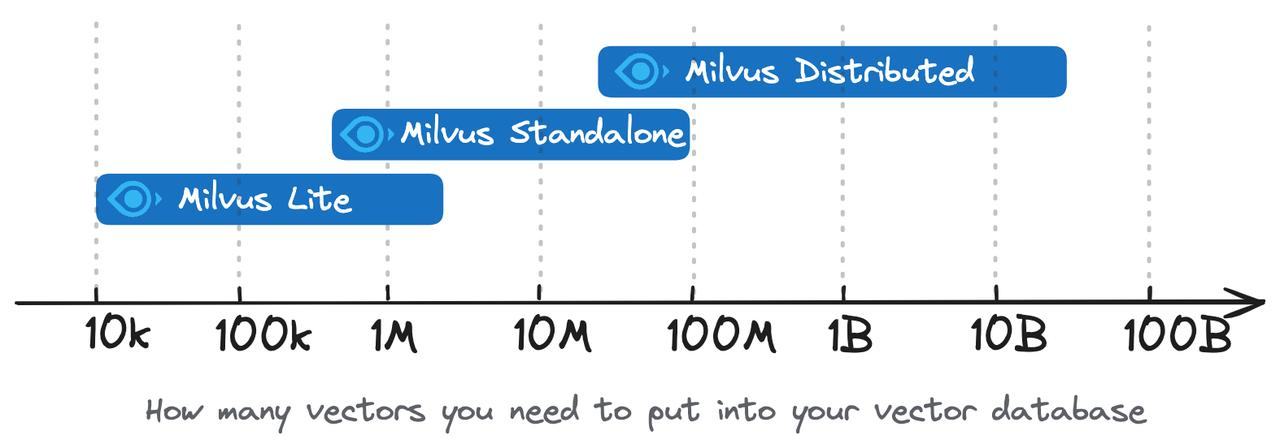

- Setting the URI as a local file, e.g., ./milvus.db, is the most convenient method, as it automatically uses Milvus Lite to store all data in this file.

- If you have a large amount of data, say more than a million vectors, you can set up a more performant Milvus server on Docker or Kubernetes. In this setup, use the server URI, e.g., http://localhost:19530, as your uri.

- If you want to use Zilliz Cloud, the fully managed cloud service for Milvus, adjust the uri and token, which correspond to the Public Endpoint and API key in Zilliz Cloud.
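The three options differ only in the connection arguments. A minimal sketch, assuming a hypothetical helper milvus_connection_args and hypothetical environment variables MILVUS_URI and ZILLIZ_TOKEN; it treats a path ending in .db as a Milvus Lite file, matching the ./milvus.db example above.

```python
import os
from typing import Optional


def milvus_connection_args(uri: Optional[str] = None, token: Optional[str] = None) -> dict:
    """Return uri/token arguments for Milvus Lite, a Milvus server, or Zilliz Cloud.

    MILVUS_URI and ZILLIZ_TOKEN are hypothetical environment variable names.
    """
    uri = uri or os.environ.get("MILVUS_URI", "./milvus.db")
    token = token or os.environ.get("ZILLIZ_TOKEN")
    if uri.startswith(("http://", "https://")):
        # Milvus server on Docker/Kubernetes, or a Zilliz Cloud public endpoint.
        args = {"uri": uri}
        if token:
            # Zilliz Cloud: the API key is passed as the token.
            args["token"] = token
        return args
    if uri.endswith(".db"):
        # Local file such as ./milvus.db, handled by Milvus Lite.
        return {"uri": uri}
    raise ValueError(f"expected an http(s) URL or a .db file path, got {uri!r}")
```

The returned dict can be unpacked into pymilvus's MilvusClient(**args) or passed as connection_args to the LangChain Milvus vector store used later in the RAG application.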

Prepare Our Search

We build two searches (AnnSearchRequest), one per vector field, and combine them in a hybrid search that includes a reranker.
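The article does not say which reranker it uses. pymilvus ships RRFRanker (reciprocal rank fusion, default k=60) and WeightedRanker; assuming RRF, the fusion step can be sketched in plain Python to show how the image_vector result list and the weather_text_vector result list are combined. Each hit scores 1/(k + rank) in every list it appears in, and the scores are summed. This is the textbook RRF formula, not a copy of Milvus internals.

```python
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple


def rrf_fuse(
    result_lists: Sequence[Sequence[int]], k: int = 60, limit: int = 5
) -> List[Tuple[int, float]]:
    """Reciprocal rank fusion over ranked lists of primary-key ids.

    Each input list is ordered best-first, e.g. the ids returned by the
    image search and by the weather-text search.
    """
    scores: Dict[int, float] = defaultdict(float)
    for ranked_ids in result_lists:
        for rank, pk in enumerate(ranked_ids, start=1):
            scores[pk] += 1.0 / (k + rank)
    # Highest fused score first; ties broken by id for a stable order.
    fused = sorted(scores.items(), key=lambda item: (-item[1], item[0]))
    return fused[:limit]
```

For example, with image hits [101, 102, 103] and text hits [103, 101, 104], id 101 ranks first because it sits near the top of both lists. In the notebook itself, Milvus does this server-side when the two AnnSearchRequest objects and a ranker are passed to hybrid_search.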

Display Our Results

We display the results of our re-ranked hybrid search over two vectors. We show some of the output scalar fields and an image we read from the stored path.

The results from our hybrid search can be iterated, and we can easily access all the output fields we chose. filepath contains the path to the locally stored image and can be accessed as key.entity.filepath. The key holds each result, while key.entity has all of the output fields selected in our hybrid search in the previous step.
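The iteration can be sketched as below. This is a minimal sketch that assumes each hit exposes its output fields as attributes of hit.entity, as described above; describe_hits is a hypothetical helper, and actually rendering the image in the notebook is left out.

```python
from typing import Iterable, List


def describe_hits(hits: Iterable) -> List[str]:
    """Build one display line per re-ranked hit from its output fields."""
    lines = []
    for rank, hit in enumerate(hits, start=1):
        entity = hit.entity
        # filepath points at the locally stored camera image to display.
        lines.append(f"{rank}. {entity.name} | {entity.filepath} | {entity.weatherdetails}")
    return lines
```

Because the helper only reads attributes, it can be exercised with stand-in objects (for example types.SimpleNamespace) before it is pointed at real search results.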

We iterate through our re-ranked results and display the image and our weather details.

RAG Application

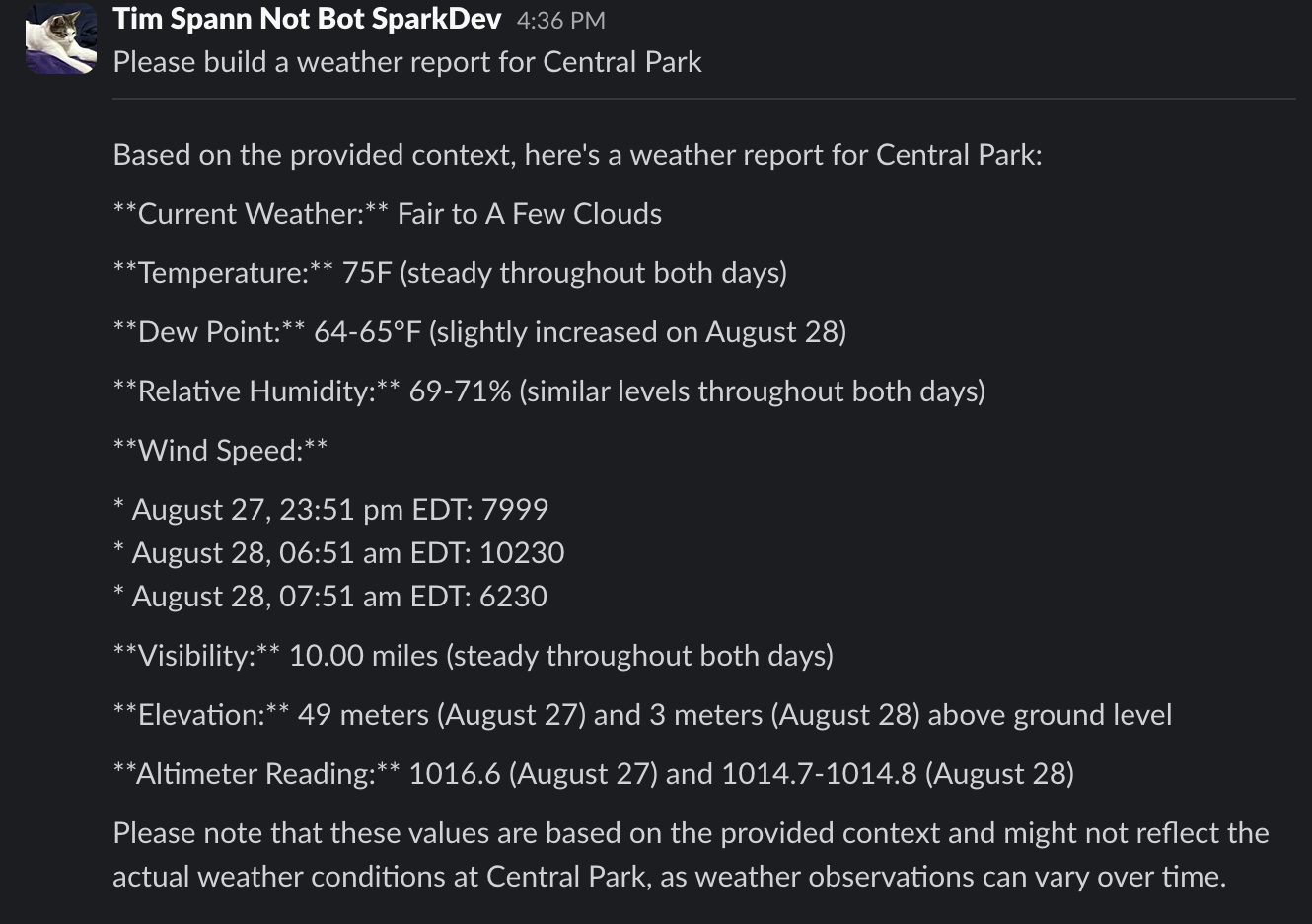

Since we have loaded a collection with weather data, we can use it as part of a RAG (Retrieval-Augmented Generation) application. We build a completely open-source RAG application using a local Ollama, LangChain, and Milvus.

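- We set up our vector_store as Milvus with our collection.

from langchain_community.embeddings import HuggingFaceEmbeddings

from langchain_community.vectorstores import Milvus

embeddings = HuggingFaceEmbeddings(model_name="sentence-transformers/all-MiniLM-L6-v2")  # assumption: same 384-dim model used at ingest

vector_store = Milvus(

embedding_function=embeddings,

collection_name="CollectionName",  # e.g. nycstreetcameras

primary_field="id",

vector_field="weather_text_vector",

text_field="weatherdetails",

connection_args={"uri": "http://localhost:19530"},

)

- We then connect to Ollama.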

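from langchain_community.llms import Ollama

from langchain_core.callbacks import CallbackManager, StreamingStdOutCallbackHandler

llm = Ollama(

model="llama3",

callback_manager=CallbackManager([StreamingStdOutCallbackHandler()]),

stop=["<|eot_id|>"],  # Llama 3 end-of-turn token

)

- We prompt for interactive questions.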

query = input("\nQuery: ")

- We set up a RetrievalQA connection between our LLM and our vector store. We pass in our query and get the result.

from langchain.chains import RetrievalQA

qa_chain = RetrievalQA.from_chain_type(

llm, retriever=vector_store.as_retriever())  # the collection is already set via collection_name

result = qa_chain.invoke({"query": query})

resultforslack = str(result["result"])

- We then publish the results to a Slack channel.

import os

from slack_sdk import WebClient

client = WebClient(token=os.environ["SLACK_BOT_TOKEN"])  # env var name is an assumption

response = client.chat_postMessage(channel="C06NE1FU6SE", text="",

blocks=[{"type": "section",

"text": {"type": "mrkdwn",

"text": str(query) +

"\n\n" }},

{"type": "divider"},

{"type": "section","text":

{"type": "mrkdwn","text":

str(resultforslack) +"\n" }}]

)

Below is the output from our chat to Slack.
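To test the message layout without a Slack workspace, the blocks list from the chat_postMessage call above can be built by a small function. A minimal sketch: build_slack_blocks is a hypothetical helper that produces the same question, divider, answer layout, and its result is what would be passed as blocks=. It trims each section's text to 3000 characters, the maximum Slack allows for a section block's text.

```python
from typing import Dict, List

SECTION_TEXT_LIMIT = 3000  # Slack's maximum length for a section block's text


def build_slack_blocks(query: str, answer: str) -> List[Dict]:
    """Question, divider, answer: the layout used in the chat_postMessage call."""

    def section(text: str) -> Dict:
        return {
            "type": "section",
            "text": {"type": "mrkdwn", "text": text[:SECTION_TEXT_LIMIT]},
        }

    return [
        section(f"{query}\n\n"),
        {"type": "divider"},
        section(f"{answer}\n"),
    ]
```

Long LLM answers are the likely case for hitting the limit; splitting them across several section blocks instead of truncating would be an alternative design.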

You can find all the source code for the notebook, the ingest script, and the interactive RAG application on GitHub below.

Conclusion

In this notebook, you have seen how to use Milvus to run a hybrid search over multiple vectors in the same collection and re-rank the results. You also saw how to build a complex data model that includes multiple vectors and many scalar fields representing a wide range of metadata related to our data.

You learned how to ingest JSON, images, and text into Milvus with Python.

And finally, we built a small chat application to check the weather for locations near traffic cameras.

To build your own applications, please check out the resources below.

Resources

In the following list, you will find resources for learning more about using pre-trained embedding models with Milvus, performing searches on text data, and a great example notebook for embedding functions.