How do you prevent hallucinations from large language models (LLMs) in GenAI applications? LLMs need real-time, contextualized, and trustworthy data to generate the most reliable outputs. This blog post explains how RAG and a data streaming platform with Apache Kafka and Flink make that possible. A lightboard video shows how to build a context-specific real-time RAG architecture. Also, learn how the travel agency Expedia leverages data streaming with Generative AI, using conversational chatbots to improve the customer experience and reduce the cost of service agents.

What Is Retrieval Augmented Generation (RAG) in GenAI?

Generative AI (GenAI) refers to artificial intelligence (AI) systems that can create new content, such as text, images, music, or code, often mimicking human creativity. These systems use advanced machine learning techniques, particularly deep learning models like neural networks, to generate data that resembles the training data they were fed. Popular examples include language models like GPT-3 for text generation and DALL-E for image creation.

Large Language Models like ChatGPT use lots of public data, are very expensive to train, and do not provide domain-specific context. Training their own models is not an option for most companies because of limitations in cost and expertise.

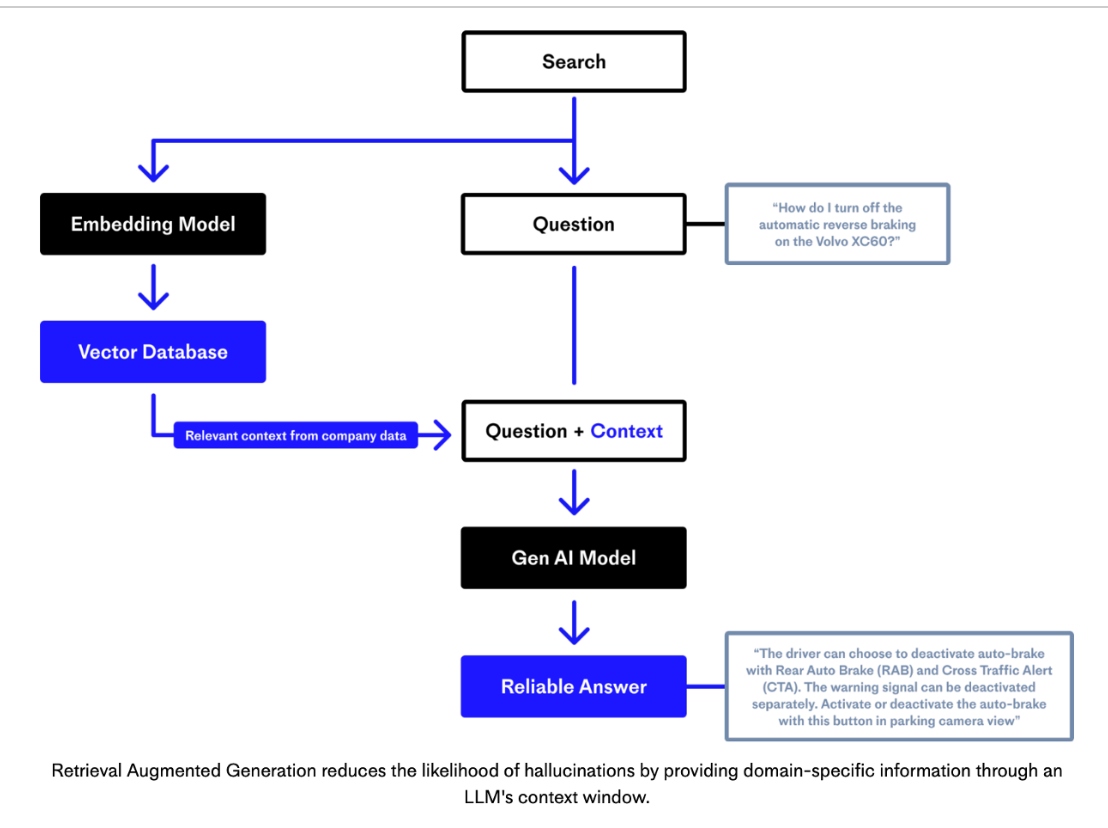

Retrieval Augmented Generation (RAG) is a technique in Generative AI to solve this problem. RAG enhances the performance of language models by integrating information retrieval mechanisms into the generation process. This approach aims to combine the strengths of information retrieval systems and generative models to provide more accurate and contextually relevant outputs.

Pinecone created an excellent diagram that explains RAG and shows its relation to an embedding model and a vector database:

Source: Pinecone
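To make the retrieve-then-generate flow concrete, here is a minimal Python sketch. It does not use Pinecone or any other vendor API: the hypothetical `embed` function stands in for a real embedding model (it only builds a normalized bag-of-words vector), a plain list plays the role of the vector database, and the actual LLM call is left out. What it shows is the core RAG step: retrieve the most similar passages and put them into the prompt, together with an instruction to answer only from that context.

```python
import math
import re
from collections import Counter


def embed(text: str) -> dict[str, float]:
    """Hypothetical stand-in for an embedding model: an L2-normalized bag of words."""
    counts = Counter(re.findall(r"[a-z0-9]+", text.lower()))
    norm = math.sqrt(sum(c * c for c in counts.values())) or 1.0
    return {token: c / norm for token, c in counts.items()}


def cosine(a: dict[str, float], b: dict[str, float]) -> float:
    # Both vectors are normalized, so their dot product is the cosine similarity.
    return sum(weight * b.get(token, 0.0) for token, weight in a.items())


def retrieve(query: str, docs: list[str], k: int = 2) -> list[str]:
    """Return the k documents most similar to the query (the 'vector database' lookup)."""
    q = embed(query)
    return sorted(docs, key=lambda d: cosine(q, embed(d)), reverse=True)[:k]


def build_prompt(query: str, context: list[str]) -> str:
    """Ground the question in the retrieved passages before it is sent to the LLM."""
    bullets = "\n".join(f"- {passage}" for passage in context)
    return (
        "Answer using only the context below. "
        "If the answer is not in the context, say that you don't know.\n"
        f"Context:\n{bullets}\n"
        f"Question: {query}"
    )


if __name__ == "__main__":
    docs = [
        "Flight LH123 to Berlin is delayed by two hours.",
        "Hotel check-in starts at 3 pm.",
        "Refunds for cancelled flights are processed within seven days.",
    ]
    question = "How long do refunds for cancelled flights take?"
    print(build_prompt(question, retrieve(question, docs, k=1)))
```

A production setup would likely replace `embed` with a real embedding model and `retrieve` with a query against a vector database; the shape of the prompt augmentation stays the same.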

Benefits of Retrieval Augmented Generation

RAG brings various benefits to the GenAI enterprise architecture:

- Access to external information: By retrieving relevant documents from a vast vector database, RAG allows the generative model to leverage up-to-date and domain-specific information that it may not have been trained on.

- Reduced hallucinations: Generative models can sometimes produce confident but incorrect answers (hallucinations). By grounding responses in retrieved documents, RAG reduces the likelihood of such errors.

- Domain-specific applications: RAG can be tailored to specific domains by curating the retrieval database with domain-specific documents, improving the model's performance in specialized areas such as medicine, law, finance, or travel.

However, one of the most significant problems remains: the lack of the right context and up-to-date information.

Data Streaming With Apache Kafka and Flink in the RAG Architecture

RAG is especially important in enterprises where data privacy, up-to-date context, and data integration with transactional and analytical systems, such as an order management system, a booking platform, or a payment fraud engine, must be consistent, scalable, and real-time.

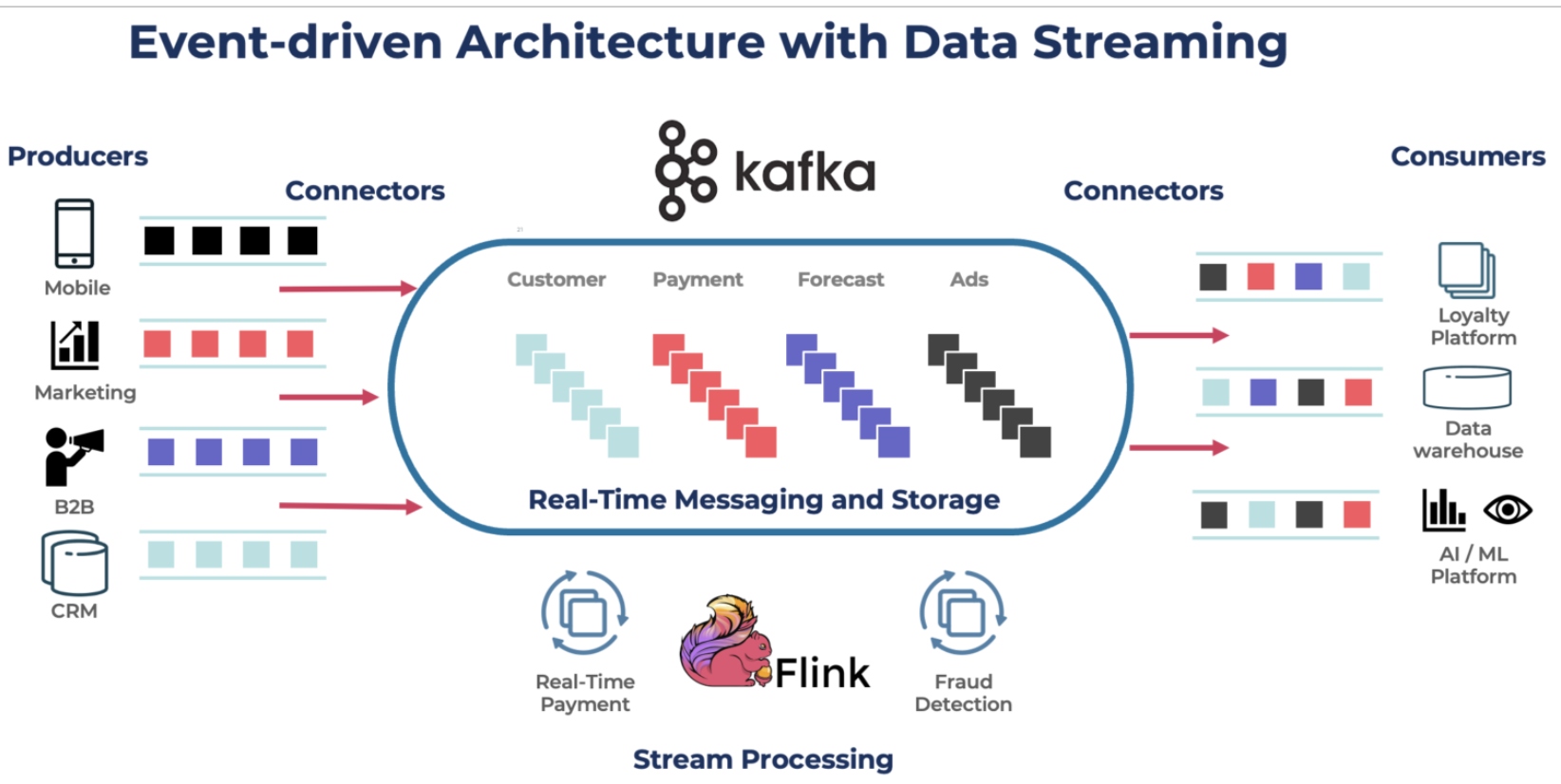

An event-driven architecture is the foundation of data streaming with Kafka and Flink:

Apache Kafka and Apache Flink play a crucial role in the Retrieval Augmented Generation (RAG) architecture by ensuring real-time data flow and processing, which enhances the system's ability to retrieve and generate up-to-date and contextually relevant information.

Here is how Kafka and Flink contribute to the RAG architecture:

1. Real-Time Data Ingestion and Processing

- Data ingestion: Kafka acts as a high-throughput, low-latency messaging system that ingests real-time data from various data sources, such as databases, APIs, sensors, or user interactions.

- Event streaming: Kafka streams the ingested data, ensuring that it is available in real time to downstream systems. This is critical for applications that require immediate access to the latest information.

- Stream processing: Flink processes the incoming data streams in real time. It can perform complex transformations, aggregations, and enrichments on the data as it flows through the system.

- Low latency: Flink's ability to handle stateful computations with low latency ensures that the processed data is quickly available for retrieval operations.
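The ingestion and processing steps above can be illustrated with a small in-memory simulation. This is not the Kafka or Flink API: the hypothetical `Topic` class mimics a partitioned, append-only Kafka topic, and `consume` mimics a stream processor that reads everything after its last committed offset, applies a stateless transformation, and updates a per-key count as state. Kafka's default partitioner uses its own hash function rather than CRC32, but the idea that one key always maps to one partition is the same.

```python
import zlib


class Topic:
    """Toy stand-in for a partitioned, append-only Kafka topic (not the Kafka client API)."""

    def __init__(self, num_partitions: int = 3) -> None:
        self.partitions: list[list[tuple[str, str]]] = [[] for _ in range(num_partitions)]

    def produce(self, key: str, value: str) -> tuple[int, int]:
        # Events with the same key always land in the same partition, so their order is kept.
        partition = zlib.crc32(key.encode()) % len(self.partitions)
        self.partitions[partition].append((key, value))
        return partition, len(self.partitions[partition]) - 1  # (partition, offset)


def consume(topic: Topic, offsets: dict[int, int], counts: dict[str, int]) -> list[str]:
    """Process every event after the committed offsets, like a stream processor polling Kafka."""
    processed = []
    for partition, log in enumerate(topic.partitions):
        for key, value in log[offsets.get(partition, 0):]:
            processed.append(value.strip().lower())   # stateless transformation
            counts[key] = counts.get(key, 0) + 1       # stateful aggregation per key
        offsets[partition] = len(log)                  # commit the new position
    return processed


if __name__ == "__main__":
    topic = Topic()
    topic.produce("booking-42", "  Flight CHANGED ")
    topic.produce("booking-42", "Refund Requested")
    offsets: dict[int, int] = {}
    counts: dict[str, int] = {}
    print(consume(topic, offsets, counts))  # ['flight changed', 'refund requested']
    print(consume(topic, offsets, counts))  # [] because nothing new arrived since the commit
    print(counts)                           # {'booking-42': 2}
```

Because the consumer only tracks offsets, downstream systems can read at their own pace and re-read from any committed position, which is what decouples producers from the RAG pipeline.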

2. Enhanced Data Retrieval

- Real-time updates: By using Kafka and Flink, the retrieval component of RAG can access the most current data. This is crucial for generating responses that are not only accurate but also timely.

- Dynamic indexing: As new data arrives, Flink can update the retrieval index in real time, ensuring that the latest information is always retrievable from the vector database.
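Dynamic indexing essentially means applying each change event to the index as soon as it arrives. The sketch below uses a hypothetical in-memory `LiveIndex`, not a real vector database client, and the same kind of toy bag-of-words `embed` function as a stand-in for an embedding model. An update re-embeds and overwrites the document, and a `None` value deletes it, similar in spirit to a tombstone record in a compacted Kafka topic, so the very next query sees the latest state.

```python
import math
import re
from collections import Counter
from typing import Optional


def embed(text: str) -> dict[str, float]:
    """Toy stand-in for an embedding model (hypothetical): L2-normalized bag of words."""
    counts = Counter(re.findall(r"[a-z0-9]+", text.lower()))
    norm = math.sqrt(sum(c * c for c in counts.values())) or 1.0
    return {token: c / norm for token, c in counts.items()}


class LiveIndex:
    """Hypothetical in-memory vector index kept current by a stream of change events."""

    def __init__(self) -> None:
        self.vectors: dict[str, dict[str, float]] = {}
        self.texts: dict[str, str] = {}

    def apply(self, doc_id: str, text: Optional[str]) -> None:
        """Apply one change event: None deletes the document, anything else upserts it."""
        if text is None:
            self.vectors.pop(doc_id, None)
            self.texts.pop(doc_id, None)
        else:
            self.vectors[doc_id] = embed(text)  # re-embed so the index reflects the new version
            self.texts[doc_id] = text

    def search(self, query: str, k: int = 1) -> list[str]:
        """Return the texts of the k most similar documents currently in the index."""
        q = embed(query)

        def score(doc_id: str) -> float:
            vec = self.vectors[doc_id]
            return sum(w * vec.get(t, 0.0) for t, w in q.items())

        ranked = sorted(self.vectors, key=score, reverse=True)
        return [self.texts[doc_id] for doc_id in ranked[:k]]


if __name__ == "__main__":
    index = LiveIndex()
    index.apply("flight-LH123", "Flight LH123 departs on time")
    index.apply("flight-LH123", "Flight LH123 is delayed by two hours")  # update event
    print(index.search("Is flight LH123 delayed?"))  # ['Flight LH123 is delayed by two hours']
    index.apply("flight-LH123", None)                # delete event
    print(index.search("Is flight LH123 delayed?"))  # []
```

Keying the index by document ID is the design choice that matters here: an update overwrites the stale vector instead of adding a second, contradictory one that the LLM might later retrieve.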

3. Scalability and Reliability

- Scalable architecture: Kafka's distributed architecture allows it to handle large volumes of data, making it suitable for applications with high throughput requirements. Flink's scalable stream processing capabilities ensure that it can process and analyze large data streams efficiently. Cloud-native implementations or cloud services take over operations and elastic scaling.

- Fault tolerance: Kafka provides built-in fault tolerance by replicating data across multiple nodes, ensuring data durability and availability even in the case of node failures. Flink offers state recovery and exactly-once processing semantics, ensuring reliable and consistent data processing.
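Flink's fault tolerance is based on checkpoints that snapshot the operator state together with the position in the input stream; after a failure, the job restores the last snapshot and replays the input from that position. The toy simulation below (plain Python, not the Flink API; a list of events stands in for a Kafka partition, and `run` is a hypothetical helper) shows why this yields exactly-once results for the state, and what goes wrong if the state is not rolled back together with the offset.

```python
import copy


def run(events, checkpoint_every=2, crash_at=None, restore_state=True):
    """Sum amounts per key with periodic checkpoints; optionally fail once and recover.

    A checkpoint stores the state together with the input offset. On recovery the offset
    is rewound to the checkpoint. restore_state=False mimics state that is NOT snapshotted
    together with the offset, so replayed events are counted twice.
    """
    state, offset = {}, 0
    checkpoint = (copy.deepcopy(state), offset)  # initial snapshot: (state, input position)
    crashed = False
    while offset < len(events):
        if offset == crash_at and not crashed:
            crashed = True
            if restore_state:
                state = copy.deepcopy(checkpoint[0])  # roll state back to the snapshot
            offset = checkpoint[1]                    # replay input from the snapshot offset
            continue
        key, amount = events[offset]
        state[key] = state.get(key, 0) + amount
        offset += 1
        if offset % checkpoint_every == 0:
            checkpoint = (copy.deepcopy(state), offset)  # consistent snapshot of state + offset
    return state


if __name__ == "__main__":
    events = [("a", 1), ("b", 2), ("a", 3), ("b", 4), ("a", 5)]
    print(run(events))                                    # {'a': 9, 'b': 6}
    print(run(events, crash_at=3))                        # {'a': 9, 'b': 6}
    print(run(events, crash_at=3, restore_state=False))   # {'a': 12, 'b': 6}
```

The event at offset 2 is processed twice in both failure runs, but only the consistent restore discards its first effect. End-to-end exactly-once delivery to external systems additionally requires transactional or idempotent sinks, which this sketch does not model.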

4. Contextual Enrichment

- Contextual data processing: Flink can enrich the raw data with additional context before the generative model uses it. For instance, Flink can join incoming data streams with historical data or external datasets to provide a richer context for retrieval operations.

- Feature extraction: Flink can extract features from the data streams that help improve the relevance of the retrieved documents or passages.
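A common way to implement such enrichment is a stream-table join: a table holds the latest known context per key, for example built from a changelog topic, and each incoming event is joined with it before it is embedded or used in a prompt. The sketch below is plain Python with hypothetical record fields (`customer_id`, `tier`, `home_airport`, `flight`); it is not Flink SQL. In Flink, this would more likely be expressed as a join in Flink SQL (for instance, a temporal join) or as keyed state in the DataStream API, both of which handle event-time semantics more carefully than this toy.

```python
from typing import Iterable, Iterator, Optional


def enrich(stream: Iterable[dict]) -> Iterator[dict]:
    """Join each booking event with the latest profile seen so far for the same customer."""
    profiles: dict[str, dict] = {}  # table state: customer_id -> latest profile fields
    for record in stream:
        if record["type"] == "profile":
            # Changelog update: the newest profile replaces the previous one.
            profiles[record["customer_id"]] = {
                k: v for k, v in record.items() if k not in ("type", "customer_id")
            }
        else:
            yield {**record, "profile": profiles.get(record["customer_id"])}


def to_context(event: dict) -> str:
    """Render an enriched event as a text snippet for the vector database or the prompt."""
    profile: Optional[dict] = event["profile"]
    who = (
        f"{profile['tier']} customer from {profile['home_airport']}"
        if profile
        else "customer without a known profile"
    )
    return f"{event['customer_id']} ({who}) booked flight {event['flight']}"


if __name__ == "__main__":
    # Hypothetical records, for illustration only.
    stream = [
        {"type": "profile", "customer_id": "c1", "tier": "gold", "home_airport": "FRA"},
        {"type": "booking", "customer_id": "c1", "flight": "LH123"},
        {"type": "profile", "customer_id": "c1", "tier": "platinum", "home_airport": "FRA"},
        {"type": "booking", "customer_id": "c1", "flight": "LH456"},
        {"type": "booking", "customer_id": "c2", "flight": "UA1"},
    ]
    for event in enrich(stream):
        print(to_context(event))
    # c1 (gold customer from FRA) booked flight LH123
    # c1 (platinum customer from FRA) booked flight LH456
    # c2 (customer without a known profile) booked flight UA1
```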

5. Integration and Flexibility

- Seamless integration: Kafka and Flink integrate well with model servers (e.g., for embedding models) and storage systems (e.g., vector databases for semantic search). This makes it easy to incorporate the right information and context into the RAG architecture.

- Modular design: Using Kafka and Flink allows for a modular design where the different components (data ingestion, processing, retrieval, generation) can be developed, scaled, and maintained independently.

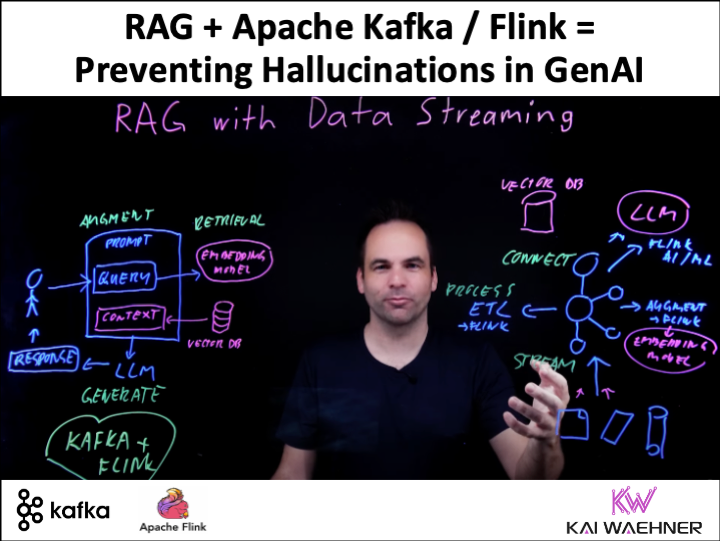

Lightboard Video: RAG with Data Streaming

The following ten-minute lightboard video is an excellent interactive explanation of how to build a RAG architecture with an embedding model, a vector database, Kafka, and Flink to ensure up-to-date and context-specific prompts for the LLM:

Expedia: Generative AI in the Travel Industry

Expedia is an online travel agency that provides booking services for flights, hotels, car rentals, vacation packages, and other travel-related services. Its IT architecture has been built around data streaming for many years, including the integration of transactional and analytical systems.

When Covid hit, Expedia had to innovate fast to handle all the support traffic spikes caused by flight rebookings, cancellations, and refunds. The project team trained a domain-specific conversational chatbot (long before ChatGPT and the term GenAI existed) and integrated it into the business process.

Here are some of the results:

- Quick time to market with innovative new technology to solve business problems

- 60%+ of travelers are self-servicing in chat after the rollout

- 40%+ saved in variable agent costs by enabling self-service

Kafka and Flink Provide Real-Time Context for RAG in a GenAI Architecture

By leveraging Apache Kafka and Apache Flink, the RAG architecture can handle real-time data ingestion, processing, and retrieval efficiently. This ensures that the generative model has access to the most current and contextually rich information, resulting in more accurate and relevant responses. The scalability, fault tolerance, and flexibility offered by Kafka and Flink make them ideal components for enhancing the capabilities of RAG systems.

If you want to learn more about data streaming with GenAI, read these articles:

How do you build a RAG architecture? Are you already leveraging Kafka and Flink for it? Or what technologies and architectures do you use? Let's connect on LinkedIn and discuss it!