In recent years, Apache Flink has established itself as the de facto standard for real-time stream processing. Stream processing is a paradigm for system building that treats event streams (sequences of events in time) as its most essential building block. A stream processor, such as Flink, consumes input streams produced by event sources and produces output streams that are consumed by sinks (the sinks store results and make them available for further processing).

Household names like Amazon, Netflix, and Uber rely on Flink to power data pipelines running at tremendous scale at the heart of their businesses, but Flink also plays a key role in many smaller companies with similar requirements for being able to react quickly to critical business events.

What is Flink being used for? Common use cases fall into these three categories:

| Streaming data pipelines | Real-time analytics | Event-driven applications |
|---|---|---|
| Continuously ingest, enrich, and transform data streams, loading them into destination systems for timely action (vs. batch processing). | Continuously produce and update results, which are displayed and delivered to users as real-time data streams are consumed. | Recognize patterns and react to incoming events by triggering computations, state updates, or external actions. |
| Some examples include: | Some examples include: | Some examples include: |

Flink includes:

- Robust support for data streaming workloads at the scale needed by global enterprises

- Strong guarantees of exactly-once correctness and failure recovery

- Support for Java, Python, and SQL, with unified support for both batch and stream processing

Flink is also a mature open-source project from the Apache Software Foundation and has a very active and supportive community.

Flink is sometimes described as being complex and difficult to learn. Yes, the implementation of Flink’s runtime is complex, but that shouldn’t be surprising, as it solves some difficult problems. Flink’s APIs can be somewhat challenging to learn, but this has more to do with the concepts and organizing principles being unfamiliar than with any inherent complexity.

Flink may be different from anything you’ve used before, but in many respects, it’s actually rather simple. At some point, as you become more familiar with the way that Flink is put together and the issues that its runtime must address, the details of Flink’s APIs should begin to strike you as the obvious consequences of a few key principles, rather than a collection of arcane details you have to memorize.

This article aims to make the Flink learning journey much easier by laying out the core principles underlying its design.

Flink Embodies a Few Big Ideas

Streams

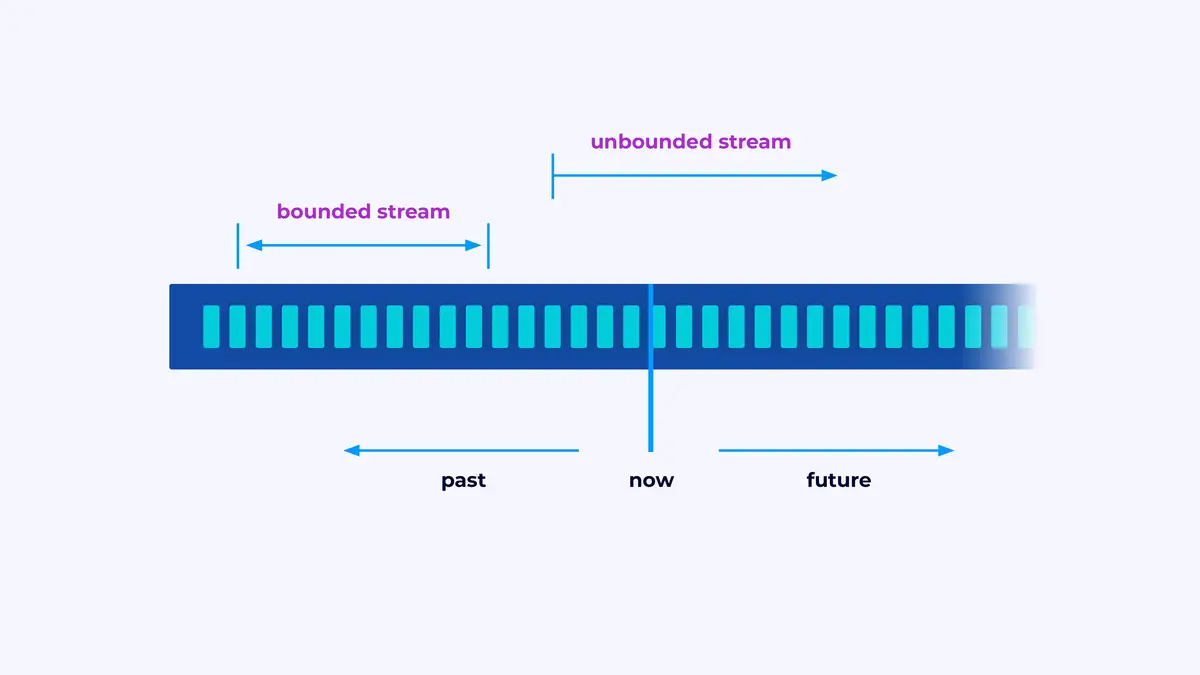

Flink is a framework for building applications that process event streams, where a stream is a bounded or unbounded sequence of events.

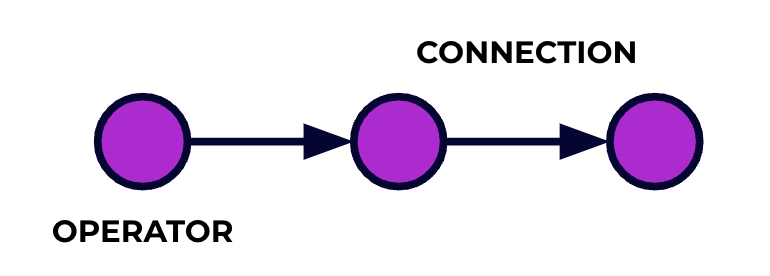

A Flink application is a data processing pipeline. Your events flow through this pipeline and are operated on at each stage by the code you write. We call this pipeline the job graph, and the nodes of this graph (or in other words, the stages of the processing pipeline) are called operators.

The code you write using one of Flink’s APIs describes the job graph, including the behavior of the operators and their connections.
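To make the idea of a job graph concrete, here is a minimal plain-Python sketch (not Flink code) in which each operator is a generator function and the pipeline is simply the operators chained together. The operator names `filter_large` and `add_fee`, the `run_pipeline` helper, and the event fields are all hypothetical, chosen only for illustration.

```python
def filter_large(events):
    """Operator: keep only events whose amount is at least 100."""
    for event in events:
        if event["amount"] >= 100:
            yield event


def add_fee(events):
    """Operator: enrich each event with a 1% fee (integer arithmetic)."""
    for event in events:
        yield {**event, "fee": event["amount"] // 100}


def run_pipeline(source, operators):
    """Connect the operators in order and drain the resulting stream."""
    stream = source
    for operator in operators:
        stream = operator(stream)
    return list(stream)


# A tiny bounded stream standing in for an event source.
source = [{"id": 1, "amount": 50}, {"id": 2, "amount": 300}]
results = run_pipeline(source, [filter_large, add_fee])
print(results)
```

In this toy version the graph is a straight line of two stages; a real job graph can also branch and merge, and each stage can run as many parallel instances.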

Parallel Processing

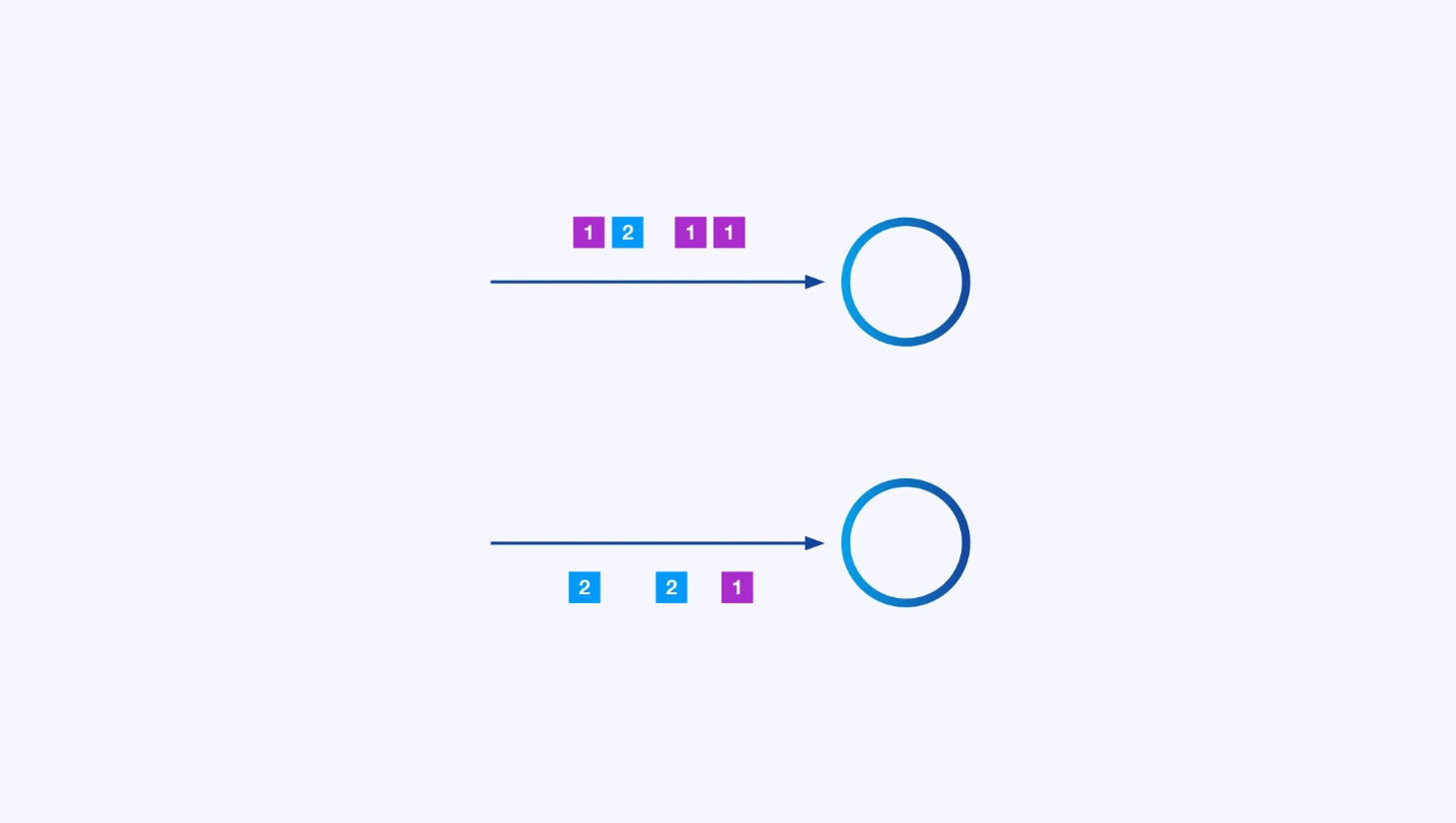

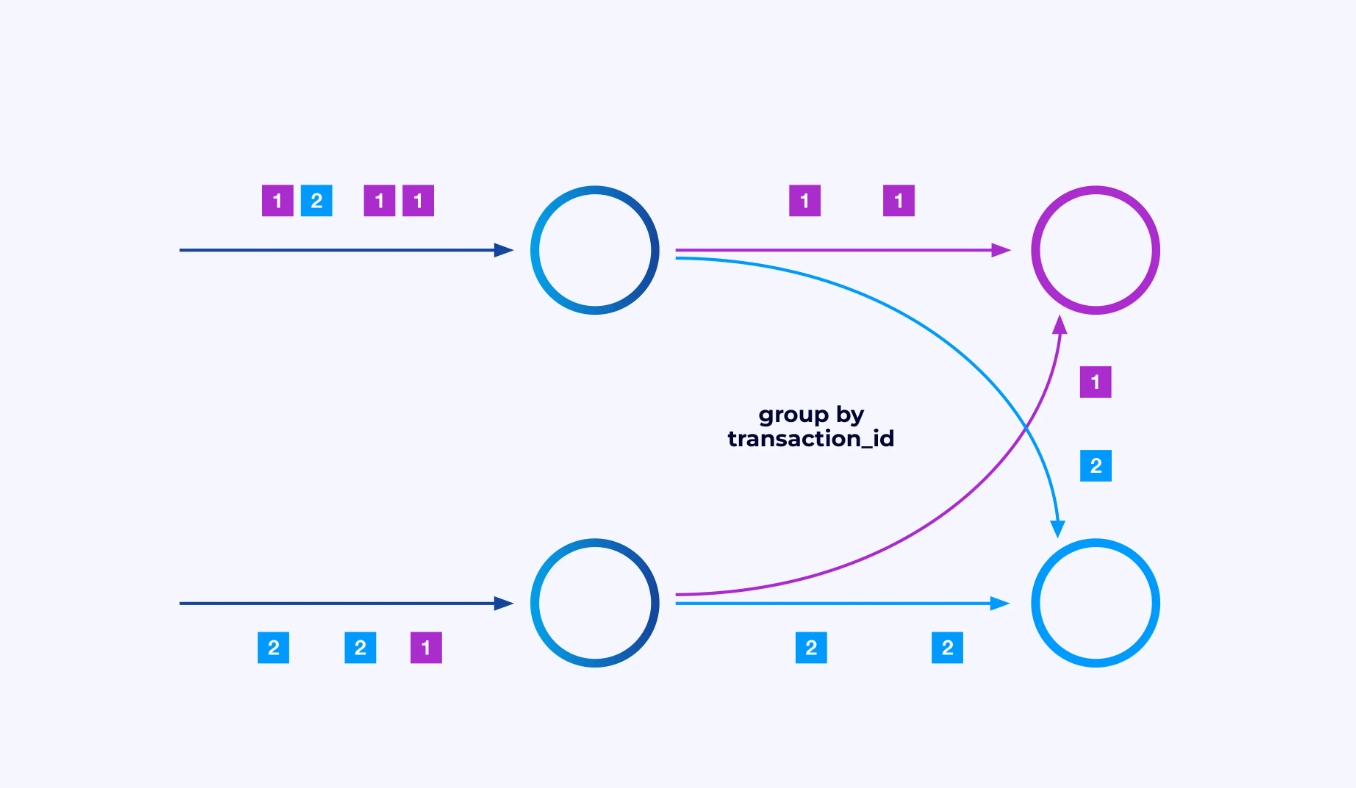

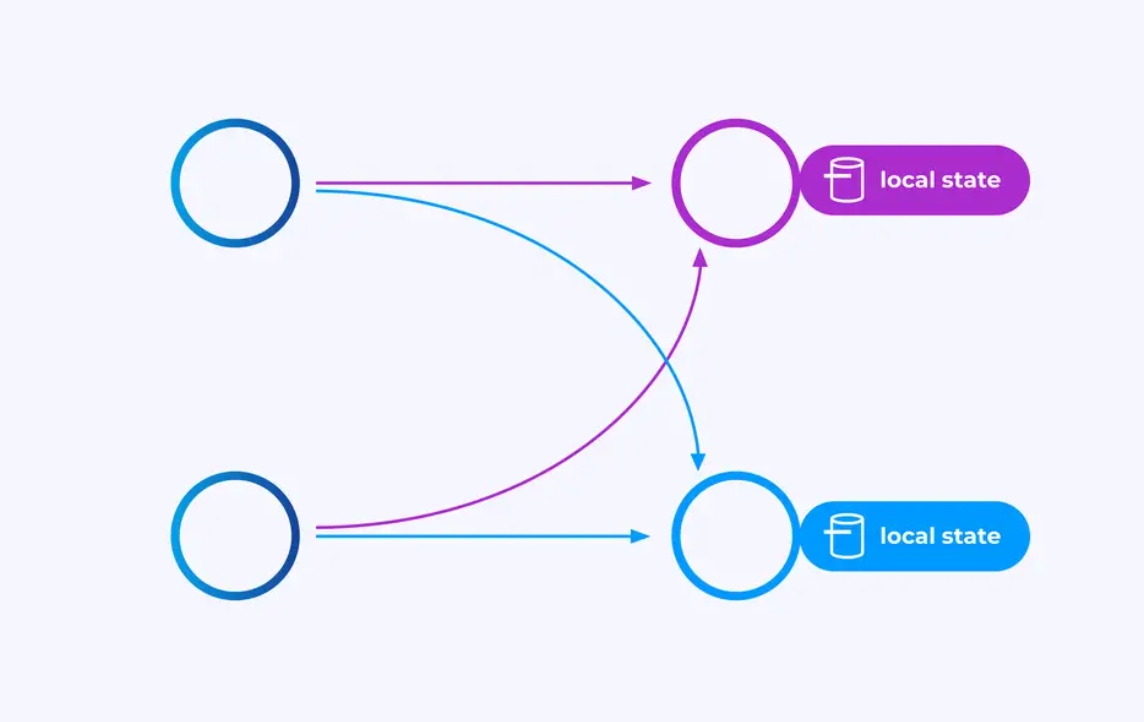

Each operator can have many parallel instances, each operating independently on some subset of the events.

Sometimes you will want to impose a specific partitioning scheme on these sub-streams so that the events are grouped together according to some application-specific logic. For example, if you’re processing financial transactions, you might need to arrange for every event for any given transaction to be processed by the same thread. This will allow you to connect together the various events that occur over time for each transaction.

In Flink SQL, you would do this with GROUP BY transaction_id, while in the DataStream API you would use keyBy(event -> event.transaction_id) to specify this grouping or partitioning. In either case, this will show up in the job graph as a fully connected network shuffle between two consecutive stages of the graph.
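As a rough illustration of key partitioning, the following plain-Python sketch (not Flink code) routes each event to one of several parallel instances by hashing its key. The `PARALLELISM` value, the `instance_for` helper, and the use of `zlib.crc32` are assumptions made for this example. Flink’s own key-to-instance assignment differs in detail, but the property shown is the same: every event with a given key lands on the same instance.

```python
import zlib
from collections import defaultdict

PARALLELISM = 4  # assumed number of parallel operator instances


def instance_for(key, parallelism=PARALLELISM):
    """Map a key to an instance index using a deterministic hash."""
    return zlib.crc32(key.encode("utf-8")) % parallelism


events = [
    {"transaction_id": "tx-1", "step": "authorized"},
    {"transaction_id": "tx-2", "step": "authorized"},
    {"transaction_id": "tx-1", "step": "captured"},
    {"transaction_id": "tx-2", "step": "refunded"},
    {"transaction_id": "tx-1", "step": "settled"},
]

# The "shuffle": every event is sent to the instance that owns its key.
routed = defaultdict(list)
for event in events:
    routed[instance_for(event["transaction_id"])].append(event)

for instance, assigned in sorted(routed.items()):
    print(instance, [(e["transaction_id"], e["step"]) for e in assigned])
```

A deterministic hash is used deliberately: Python’s built-in `hash()` for strings varies between interpreter runs, which would make the routing unstable across restarts.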

State

Operators working on key-partitioned streams can use Flink’s distributed key/value state store to durably persist whatever they want. The state for each key is local to a specific instance of an operator and cannot be accessed from anywhere else. The parallel sub-topologies share nothing; this is crucial for unrestrained scalability.

A Flink job might be left running indefinitely. If a Flink job is continuously creating new keys (e.g., transaction IDs) and storing something for each new key, then that job risks blowing up because it is using an unbounded amount of state. Each of Flink’s APIs is organized around providing ways to help you avoid runaway explosions of state.

Time

One way to avoid hanging onto state for too long is to retain it only until some specific point in time. For instance, if you want to count transactions in minute-long windows, once each minute is over, the result for that minute can be produced, and that counter can be freed.

Flink makes an important distinction between two different notions of time:

- Processing (or wall clock) time, which is derived from the actual time of day when an event is being processed

- Event time, which is based on timestamps recorded with each event

To illustrate the difference between them, consider what it means for a minute-long window to be complete:

- A processing time window is complete when the minute is over. This is perfectly straightforward.

- An event time window is complete when all events that occurred during that minute have been processed. This can be tricky since Flink can’t know anything about events it hasn’t processed yet. The best we can do is to make an assumption about how out-of-order a stream might be and apply that assumption heuristically.
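The out-of-orderness heuristic can be sketched in plain Python (this is a simplification, not Flink’s implementation). The sketch assumes events arrive at most `MAX_OUT_OF_ORDER_MS` late and tracks a progress marker, which Flink calls a watermark, equal to the largest timestamp seen minus that bound. A minute-long window is treated as complete once the watermark passes its end. The constant names and the `count_per_window` function are hypothetical.

```python
from collections import defaultdict

WINDOW_MS = 60_000            # minute-long windows
MAX_OUT_OF_ORDER_MS = 5_000   # assumed bound on how late events can be


def count_per_window(timestamps):
    """Count events per event-time window.

    Returns (emitted, late): `emitted` lists (window_start, count) pairs in
    the order the windows were judged complete; `late` lists timestamps that
    arrived after their window had already been closed.
    """
    counts = defaultdict(int)
    emitted, late = [], []
    watermark = float("-inf")
    for ts in timestamps:
        window_start = ts - ts % WINDOW_MS
        if window_start + WINDOW_MS <= watermark:
            late.append(ts)  # the assumption was violated for this event
            continue
        counts[window_start] += 1
        watermark = max(watermark, ts - MAX_OUT_OF_ORDER_MS)
        # Emit and free every window that the watermark has passed.
        for start in sorted(counts):
            if start + WINDOW_MS <= watermark:
                emitted.append((start, counts.pop(start)))
    return emitted, late


emitted, late = count_per_window([10_000, 50_000, 62_000, 58_000, 66_000, 30_000])
print(emitted)  # [(0, 3)]
print(late)     # [30000]
```

The event at 58,000 ms arrives after the one at 62,000 ms but is still counted, because it falls within the assumed bound. The event at 30,000 ms arrives after its window has closed and is reported as late. The second window is never emitted here because the input ends before the watermark passes its end.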

Checkpointing for Failure Recovery

Failures are inevitable. Despite failures, Flink is able to provide effectively exactly-once guarantees, meaning that each event will affect the state Flink is managing exactly once, just as if the failure never occurred. It does this by taking periodic, global, self-consistent snapshots of all the state. These snapshots, created and managed automatically by Flink, are called checkpoints.

Recovery involves rolling back to the state captured in the most recent checkpoint and performing a global restart of all of the operators from that checkpoint. During recovery, some events are reprocessed, but Flink is able to guarantee correctness by ensuring that each checkpoint is a global, self-consistent snapshot of the complete state of the system.
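The rollback-and-replay idea can be shown with a single-process toy in plain Python. It is only a sketch: real Flink checkpoints are coordinated across distributed operators, which this example does not attempt to model. The function name `run_with_failure` and its parameters are hypothetical. The key point is that the snapshot captures the source position and the state together, so replaying from the snapshot yields the same result as a run with no failure.

```python
import copy
from collections import Counter


def run_with_failure(events, checkpoint_every, fail_at):
    """Count events per key, crashing once just before index `fail_at`."""
    state = {}
    offset = 0
    checkpoint = {"offset": 0, "state": {}}
    failed = False
    while offset < len(events):
        if offset == fail_at and not failed:
            failed = True
            # Recovery: roll back BOTH the read position and the state.
            offset = checkpoint["offset"]
            state = copy.deepcopy(checkpoint["state"])
            continue
        key = events[offset]
        state[key] = state.get(key, 0) + 1
        offset += 1
        if offset % checkpoint_every == 0:
            # Snapshot the position and the state as one consistent unit.
            checkpoint = {"offset": offset, "state": copy.deepcopy(state)}
    return state


events = ["a", "b", "a", "c", "a", "b", "c"]
result = run_with_failure(events, checkpoint_every=3, fail_at=5)
print(result)
print(result == dict(Counter(events)))
```

The events at indexes 3 and 4 are processed twice, yet each affects the final counts exactly once, because the work done after the last checkpoint is discarded on recovery.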

System Architecture

Flink applications run in Flink clusters, so before you can put a Flink application into production, you’ll need a cluster to deploy it to. Fortunately, during development and testing it’s easy to get started by running Flink locally in an integrated development environment (IDE) like IntelliJ, or in Docker.

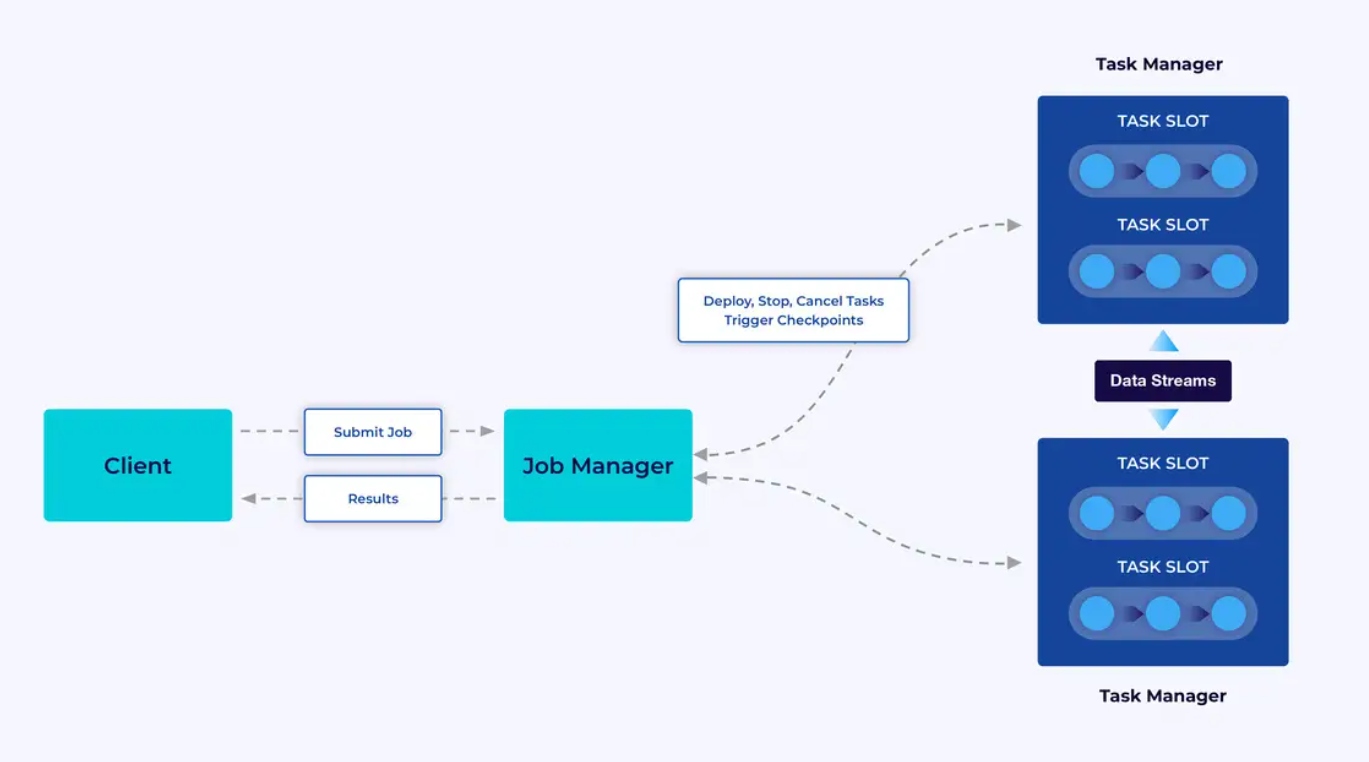

A Flink cluster has two kinds of components: a Job Manager and a set of Task Managers. The task managers run your application(s) (in parallel), while the job manager acts as a gateway between the task managers and the outside world. Applications are submitted to the job manager, which manages the resources provided by the task managers, coordinates checkpointing, and provides visibility into the cluster in the form of metrics.

The Developer Experience

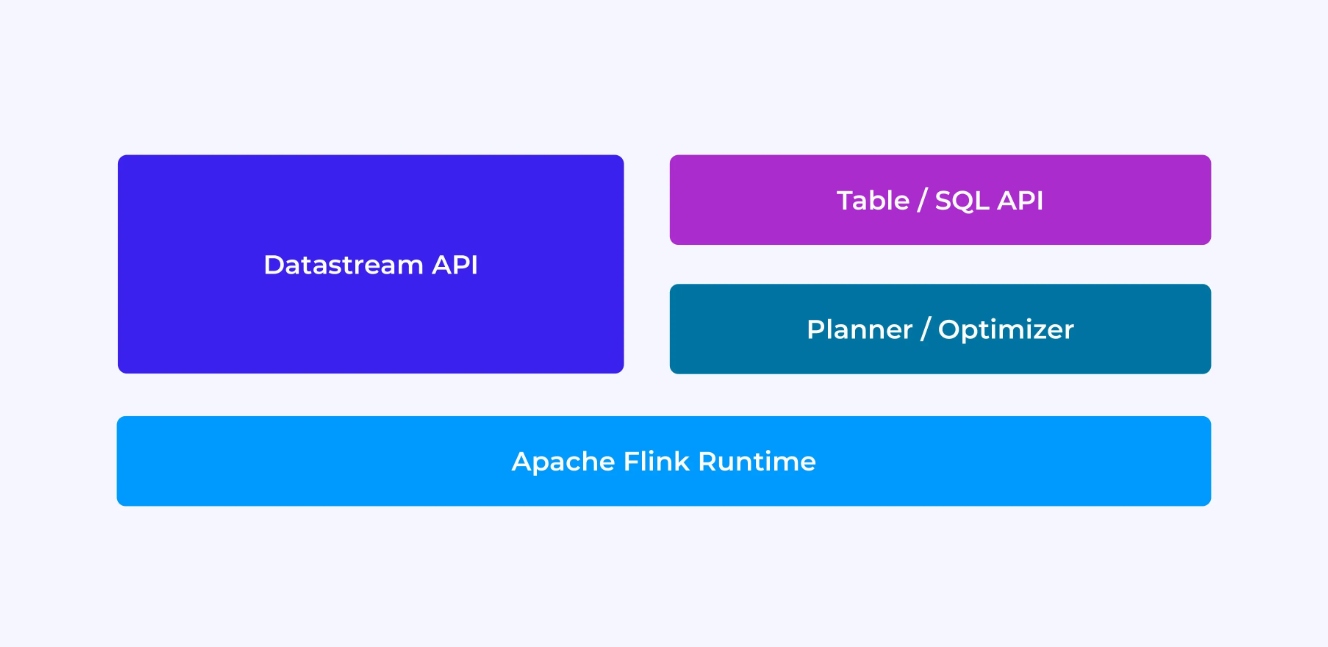

The experience you’ll have as a Flink developer depends, to a certain extent, on which of the APIs you choose: either the older, lower-level DataStream API or the newer, relational Table and SQL APIs.

When you are programming with Flink’s DataStream API, you are consciously thinking about what the Flink runtime will be doing as it runs your application. This means you are building up the job graph one operator at a time, describing the state you are using along with the types involved and their serialization, creating timers, implementing callback functions to be executed when those timers are triggered, and so on. The core abstraction in the DataStream API is the event, and the functions you write will be handling one event at a time, as they arrive.

On the other hand, when you use Flink’s Table/SQL API, these low-level concerns are taken care of for you, and you can focus more directly on your business logic. The core abstraction is the table, and you are thinking more in terms of joining tables for enrichment, grouping rows together to compute aggregated analytics, and so on. A built-in SQL query planner and optimizer take care of the details. The planner/optimizer does an excellent job of managing resources efficiently, often outperforming hand-written code.

A couple more thoughts before diving into the details. First, you don’t have to choose between the DataStream and the Table/SQL API; the two are interoperable, and you can combine them. That can be a good way to go if you need a bit of customization that isn’t possible in the Table/SQL API. Second, another good way to go beyond what the Table/SQL API offers out of the box is to add capabilities in the form of user-defined functions (UDFs). Here, Flink SQL offers a lot of options for extension.

Constructing the Job Graph

Regardless of which API you use, the ultimate purpose of the code you write is to construct the job graph that Flink’s runtime will execute on your behalf. This means that these APIs are organized around creating operators and specifying both their behavior and their connections to one another. With the DataStream API, you are directly constructing the job graph, while with the Table/SQL API, Flink’s SQL planner takes care of this.

Serializing Functions and Data

Ultimately, the code you supply to Flink will be executed in parallel by the workers (the task managers) in a Flink cluster. To make this happen, the function objects you create are serialized and sent to the task managers where they are executed. Similarly, the events themselves will sometimes need to be serialized and sent across the network from one task manager to another. Again, with the Table/SQL API you don’t have to think about this.

Managing State

The Flink runtime needs to be made aware of any state that you expect it to recover for you in the event of a failure. To make this work, Flink needs type information it can use to serialize and deserialize these objects (so they can be written into, and read from, checkpoints). You can optionally configure this managed state with time-to-live descriptors that Flink will then use to automatically expire the state once it has outlived its usefulness.
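The effect of a time-to-live setting can be approximated with a small plain-Python class. This is a sketch of the concept only, not Flink’s state API: the `TtlKeyedState` class, its methods, and the choice to expire entries lazily on read are all assumptions made for illustration.

```python
class TtlKeyedState:
    """Per-key values that expire `ttl_ms` after their last update."""

    def __init__(self, ttl_ms):
        self.ttl_ms = ttl_ms
        self._entries = {}  # key -> (value, last_update_ms)

    def put(self, key, value, now_ms):
        """Store a value and restart its time-to-live clock."""
        self._entries[key] = (value, now_ms)

    def get(self, key, now_ms):
        """Return the value, or None if it is absent or has expired."""
        entry = self._entries.get(key)
        if entry is None:
            return None
        value, last_update_ms = entry
        if now_ms - last_update_ms >= self.ttl_ms:
            del self._entries[key]  # free the expired state
            return None
        return value

    def size(self):
        return len(self._entries)


state = TtlKeyedState(ttl_ms=1_000)
state.put("tx-1", 3, now_ms=0)
print(state.get("tx-1", now_ms=500))    # 3
print(state.get("tx-1", now_ms=1_000))  # None
print(state.size())                     # 0
```

With expiry in place, a job that keeps seeing new keys no longer accumulates state without bound, as long as old keys eventually stop being updated.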

With the DataStream API, you generally end up directly managing the state your application needs (the built-in window operations are the one exception to this). On the other hand, with the Table/SQL API, this concern is abstracted away. For example, given a query like the one below, you know that somewhere in the Flink runtime some data structure has to maintain a counter for each URL, but the details are all taken care of for you.

SELECT url, COUNT(*)

FROM pageviews

GROUP BY url;

Setting and Triggering Timers

Timers have many uses in stream processing. For example, it is common for Flink applications to need to gather information from many different event sources before eventually producing results. Timers work well for cases where it makes sense to wait (but not indefinitely) for data that may (or may not) eventually arrive.
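The wait-but-not-indefinitely pattern might look like the following in plain Python. This is a conceptual sketch rather than Flink’s timer API: the `TimeoutJoiner` class is hypothetical, and it assumes that time advances only as events with non-decreasing timestamps arrive. The first event for a key registers a timer; if a second event for that key arrives before the timer fires, the pair is matched, otherwise the timer reports a timeout and frees the pending state.

```python
import heapq


class TimeoutJoiner:
    """Pairs two events per key, or gives up when a timer fires first."""

    def __init__(self, timeout_ms):
        self.timeout_ms = timeout_ms
        self.pending = {}  # key -> (payload, timer_fire_time)
        self.timers = []   # min-heap of (fire_time, key)
        self.output = []

    def advance_time(self, now_ms):
        """Fire every timer whose time has come."""
        while self.timers and self.timers[0][0] <= now_ms:
            fire_time, key = heapq.heappop(self.timers)
            entry = self.pending.get(key)
            # Skip stale timers whose event has already been matched.
            if entry is not None and entry[1] == fire_time:
                del self.pending[key]
                self.output.append(("timed_out", key, entry[0]))

    def on_event(self, key, payload, ts_ms):
        """Handle one event: match it, or store it and set a timer."""
        self.advance_time(ts_ms)
        if key in self.pending:
            first, _ = self.pending.pop(key)
            self.output.append(("matched", key, first, payload))
        else:
            fire_time = ts_ms + self.timeout_ms
            self.pending[key] = (payload, fire_time)
            heapq.heappush(self.timers, (fire_time, key))


joiner = TimeoutJoiner(timeout_ms=10_000)
joiner.on_event("order-1", "order placed", 0)
joiner.on_event("order-2", "order placed", 1_000)
joiner.on_event("order-1", "payment received", 4_000)
joiner.on_event("order-3", "order placed", 12_000)
print(joiner.output)
```

Here `order-1` is matched within the timeout, while `order-2` times out when the event at 12,000 ms moves time past its timer.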

Timers are also essential for implementing time-based windowing operations. Both the DataStream and Table/SQL APIs have built-in support for windows and create and manage timers on your behalf.

Use Cases

Circling back to the three broad categories of streaming use cases introduced at the beginning of this article, let’s see how they map to what you’ve just been learning about Flink.

Streaming Data Pipeline

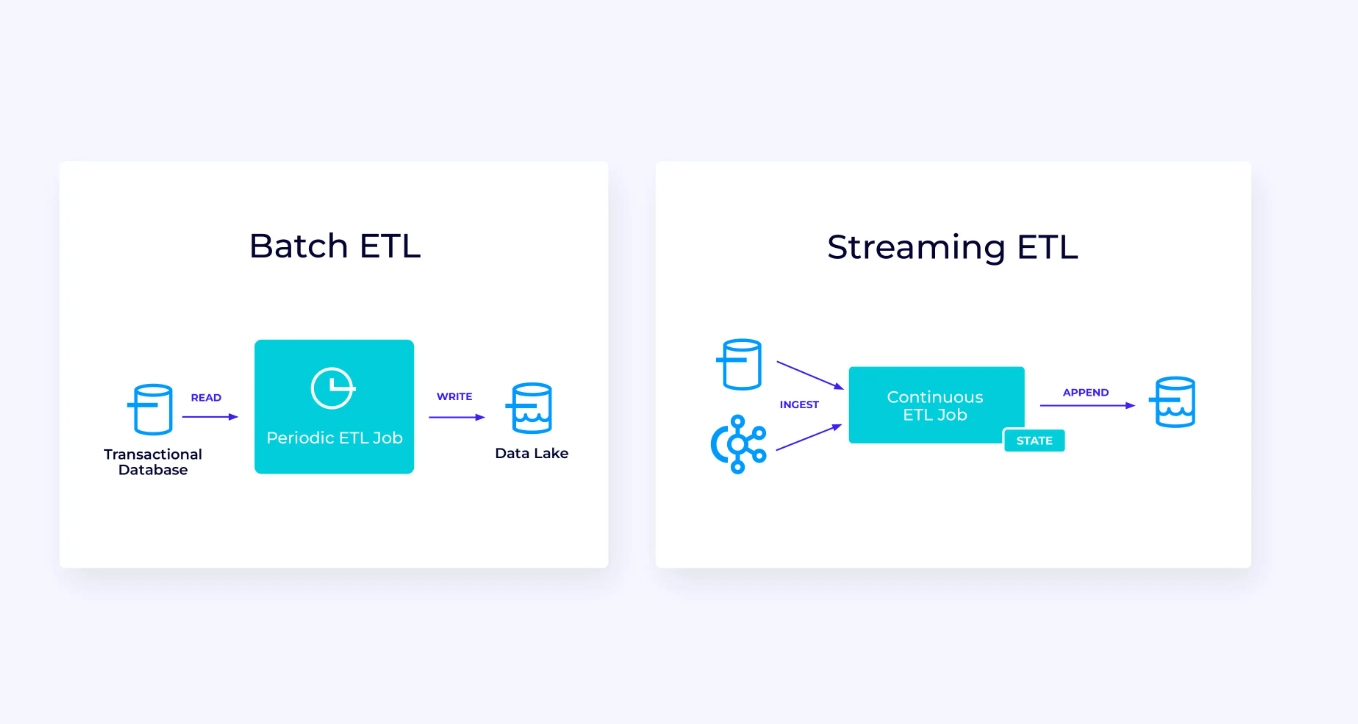

At left in the figure is an example of a traditional batch extract, transform, and load (ETL) job that periodically reads from a transactional database, transforms the data, and writes the results out to another data store, such as a database, file system, or data lake.

The corresponding streaming pipeline is superficially similar, but has some significant differences:

- The streaming pipeline is always running.

- The transactional data is delivered to the streaming pipeline in two parts: an initial bulk load from the database, together with a change data capture (CDC) stream carrying the database updates since that bulk load.

- The streaming version continuously produces new results as soon as they become available.

- The state is explicitly managed so that it can be robustly recovered in the event of a failure. Streaming ETL pipelines typically use very little state. The data sources keep track of exactly how much of the input has been ingested, typically in the form of offsets that count records since the beginning of the streams. The sinks use transactions to manage their writes to external systems, like databases or Kafka. During checkpointing, the sources record their offsets, and the sinks commit the transactions that carry the results of having read exactly up to, but not beyond, those source offsets.

For this use case, the Table/SQL API would be a good choice.
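The interplay between source offsets and sink transactions described in the list above can be sketched in plain Python. This is a single-process toy under simplifying assumptions, not Flink’s connector machinery: the `run_etl` function is hypothetical, the "transformation" is just upper-casing, and the external system is a Python list. Writes accumulate in an open transaction and only become visible when a checkpoint records the matching source offset.

```python
def run_etl(records, checkpoint_every, fail_at):
    """Transform records with one simulated crash just before `fail_at`."""
    committed = []            # what the external system can see
    pending = []              # writes in the currently open transaction
    checkpointed_offset = 0   # source position saved at the last checkpoint
    offset = 0
    failed = False
    while offset < len(records):
        if offset == fail_at and not failed:
            failed = True
            pending = []                  # abort the open transaction
            offset = checkpointed_offset  # rewind the source
            continue
        pending.append(records[offset].upper())
        offset += 1
        if offset % checkpoint_every == 0:
            # Checkpoint: record the offset and commit exactly the writes
            # produced by reading up to, but not beyond, that offset.
            checkpointed_offset = offset
            committed.extend(pending)
            pending = []
    committed.extend(pending)  # final commit when the bounded input ends
    return committed


output = run_etl(["a", "b", "c", "d", "e"], checkpoint_every=2, fail_at=3)
print(output)  # ['A', 'B', 'C', 'D', 'E']
```

Record `c` is read twice, but its first result is discarded with the aborted transaction, so the external system sees each result exactly once.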

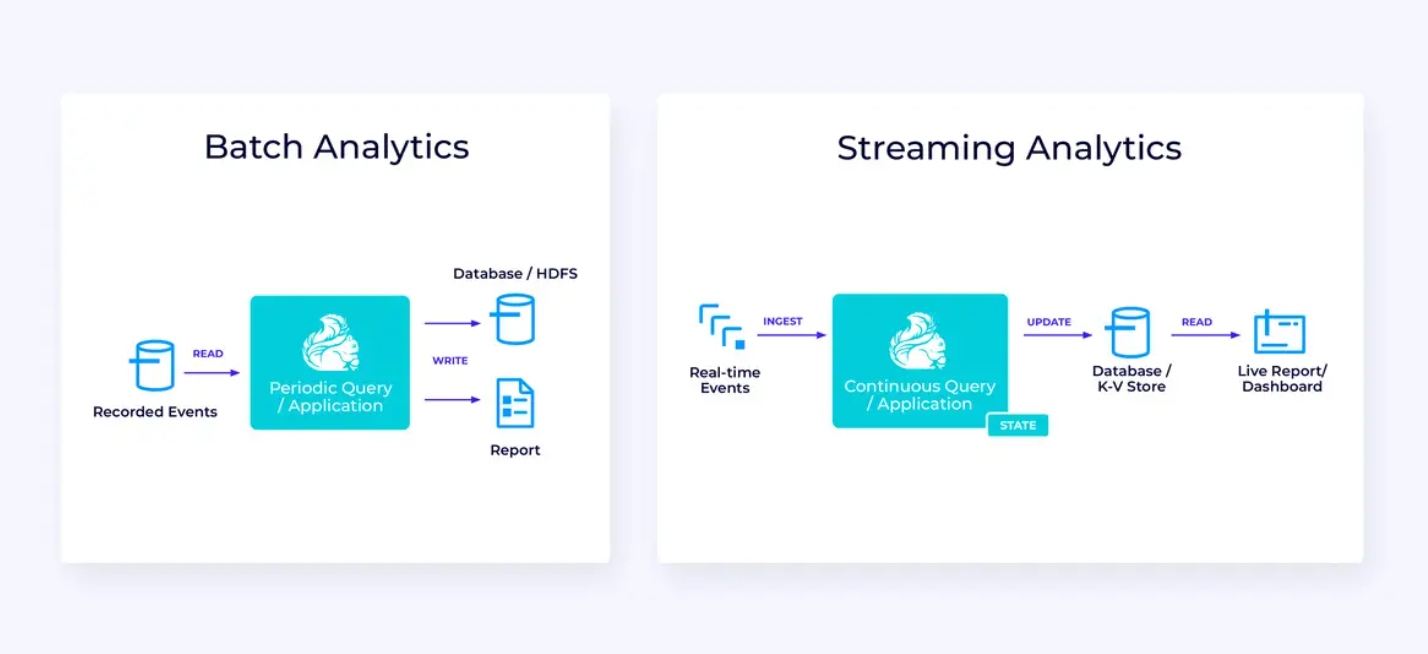

Real-Time Analytics

Compared to the streaming ETL application, this streaming analytics application has a couple of interesting differences:

- Once again, Flink is being used to run a continuous application, but for this application, Flink will probably need to manage substantially more state.

- For this use case, it makes sense for the stream being ingested to be stored in a stream-native storage system, such as Apache Kafka.

- Rather than periodically producing a static report, the streaming version can be used to drive a live dashboard.

Once again, the Table/SQL API is usually a good choice for this use case.

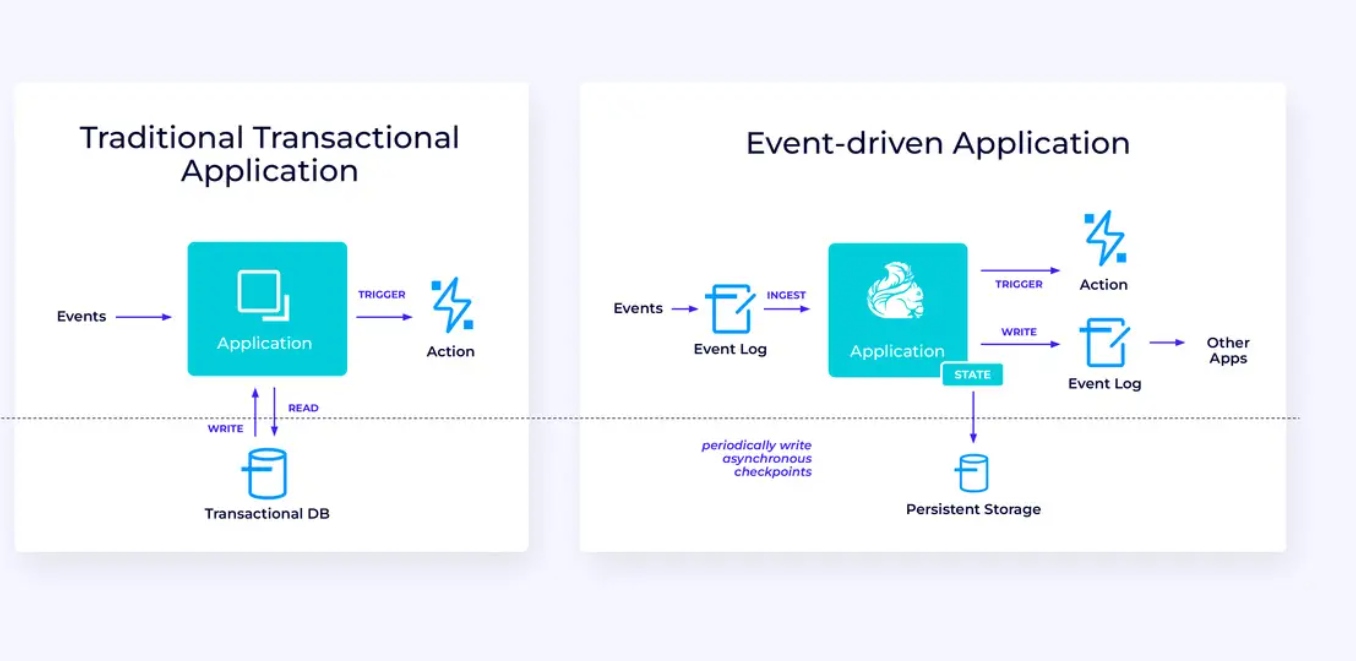

Event-Driven Applications

Our third and final family of use cases involves the implementation of event-driven applications or microservices. Much has been written elsewhere on this topic; this is an architectural design pattern that has a lot of benefits.

Flink can be a great fit for these applications, especially if you need the kind of performance Flink can deliver. In some cases, the Table/SQL API has everything you need, but in many cases, you’ll need the additional flexibility of the DataStream API for at least part of the job.

Getting Started With Flink

Flink provides a powerful framework for building applications that process event streams. As we’ve covered, some of the concepts may seem novel at first, but once you’re familiar with the way Flink is designed and operates, the software is intuitive to use, and the rewards of knowing Flink are significant.

As a next step, follow the instructions in the Flink documentation, which will guide you through the process of downloading, installing, and running the latest stable version of Flink. Think about the broad use cases we discussed (modern data pipelines, real-time analytics, and event-driven microservices) and how these can help to address a challenge or drive value for your organization.

Data streaming is one of the most exciting areas of enterprise technology today, and stream processing with Flink makes it even more powerful. Learning Flink will be beneficial not only for your organization but also for your career, because real-time data processing is becoming more valuable to businesses globally. So check out Flink today and see what this powerful technology can help you achieve.