This article describes how to configure your Pods to run on specific nodes based on affinity and anti-affinity rules. Affinity and anti-affinity let you tell the Kubernetes scheduler where your Pods should or should not be placed, which can help optimize performance, reliability, and compliance.

There are two types of affinity and anti-affinity, as per the Kubernetes documentation:

- requiredDuringSchedulingIgnoredDuringExecution: The scheduler can't schedule the Pod unless the rule is met. This functions like nodeSelector, but with a more expressive syntax.
- preferredDuringSchedulingIgnoredDuringExecution: The scheduler tries to find a node that meets the rule. If a matching node is not available, the scheduler still schedules the Pod.

Let's look at a couple of scenarios where you can use this configuration.

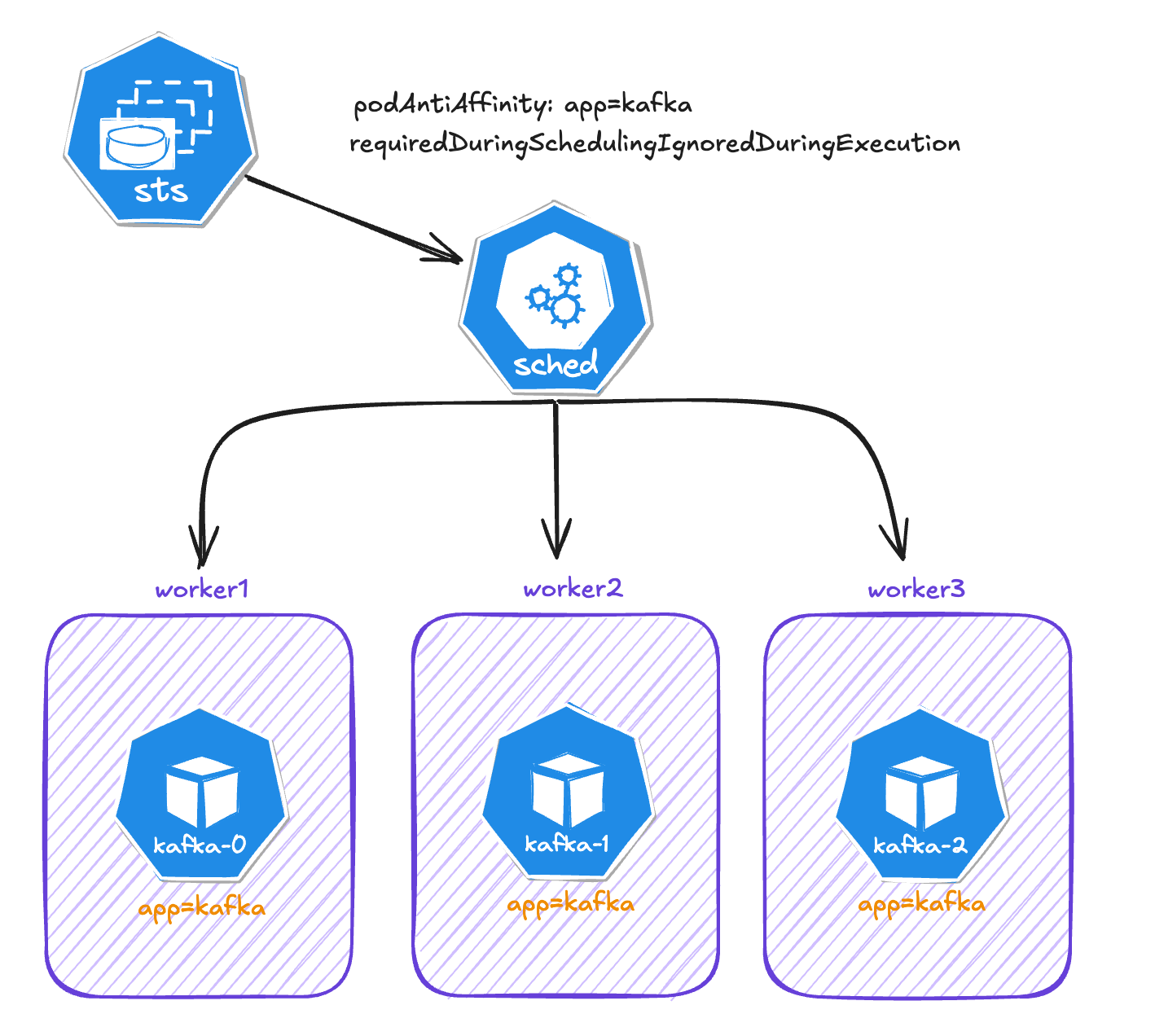

Scenario 1: Kafka Cluster With Each Pod in a Different K8s Worker Node

In this scenario, I'm running a Kafka cluster with 3 nodes (Pods). For resilience and high availability, I want each Kafka node to run on a different worker node.

In this case, Kafka is deployed as a StatefulSet, so the affinity is configured in the .spec.template.spec.affinity field (the snippet below shows the Pod template's spec):

    spec:
      affinity:
        podAntiAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
          - labelSelector:
              matchExpressions:
              - key: app            # match Pods labeled app=kafka
                operator: In
                values:
                - kafka
            topologyKey: kubernetes.io/hostname   # at most one matching Pod per node

In the configuration above, I'm using podAntiAffinity because it allows us to create rules based on the labels of Pods and not only on the labels of the node itself. In addition, I'm setting requiredDuringSchedulingIgnoredDuringExecution because I don't want two Kafka Pods running on the same worker node at any time. The labelSelector field looks for the label app=kafka on the Pod, and topologyKey names the node label that defines the topology domain; with kubernetes.io/hostname, each node is its own domain. Pod anti-affinity requires nodes to be consistently labeled. In other words, every node in the cluster must have an appropriate label matching topologyKey.
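To show where this block sits in a full manifest, here is a minimal sketch of a StatefulSet. The object name, serviceName, and image below are placeholders assumed for illustration and do not come from the original deployment. The part that matters is that the Pod template carries the label app: kafka, which is what the labelSelector matches.

```yaml
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: kafka                    # hypothetical name
spec:
  serviceName: kafka-headless    # hypothetical headless Service
  replicas: 3
  selector:
    matchLabels:
      app: kafka
  template:
    metadata:
      labels:
        app: kafka               # label the anti-affinity rule matches on
    spec:
      affinity:
        podAntiAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
          - labelSelector:
              matchExpressions:
              - key: app
                operator: In
                values:
                - kafka
            topologyKey: kubernetes.io/hostname
      containers:
      - name: kafka
        image: example.com/kafka:latest   # placeholder image
```

A real Kafka StatefulSet would also need ports, storage, and broker configuration, which are omitted here.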

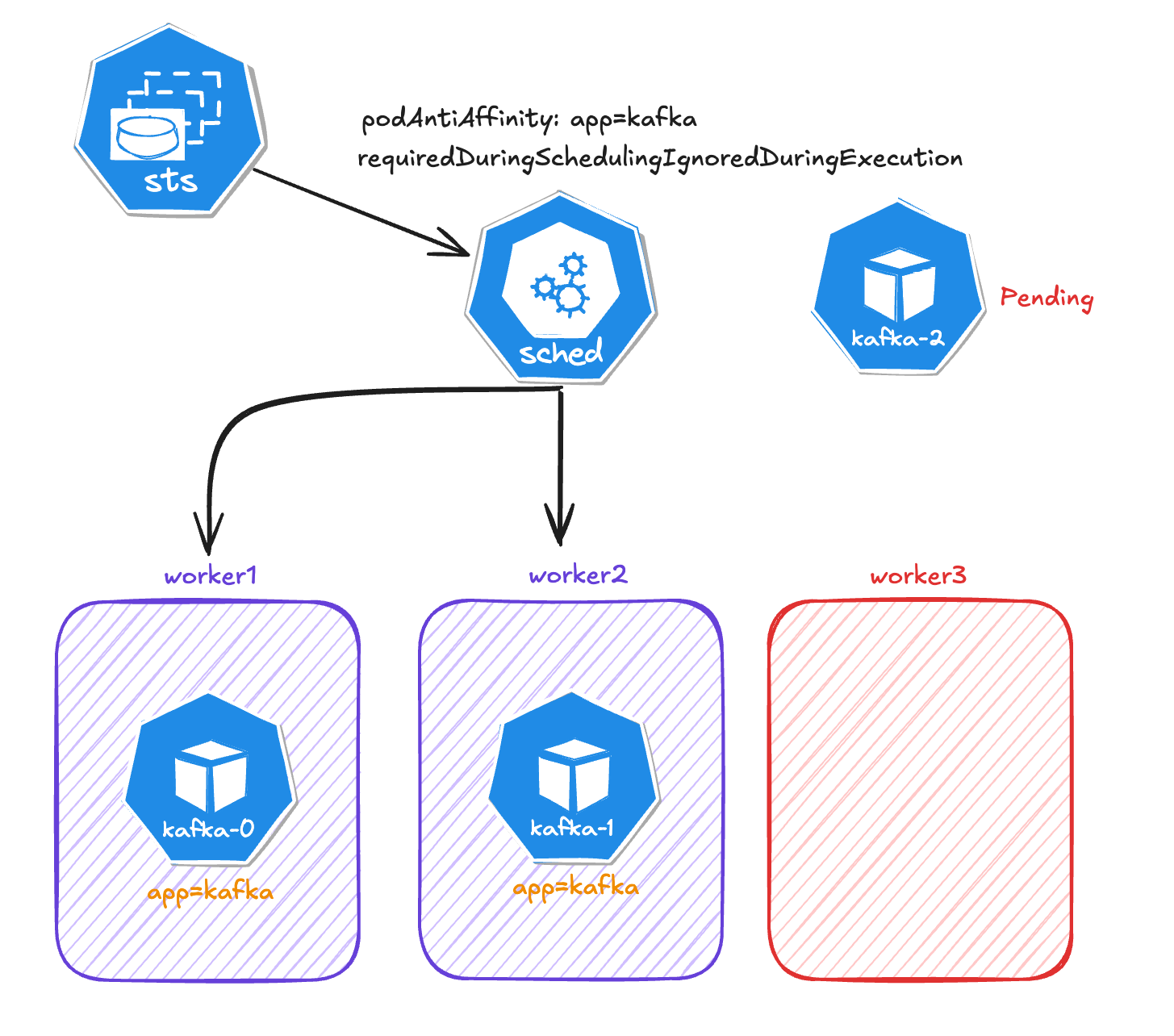

In the event of a failure in one of the workers (considering that we only have three workers), the affected Kafka Pod will stay in Pending status because the other two nodes already have a Kafka Pod running.

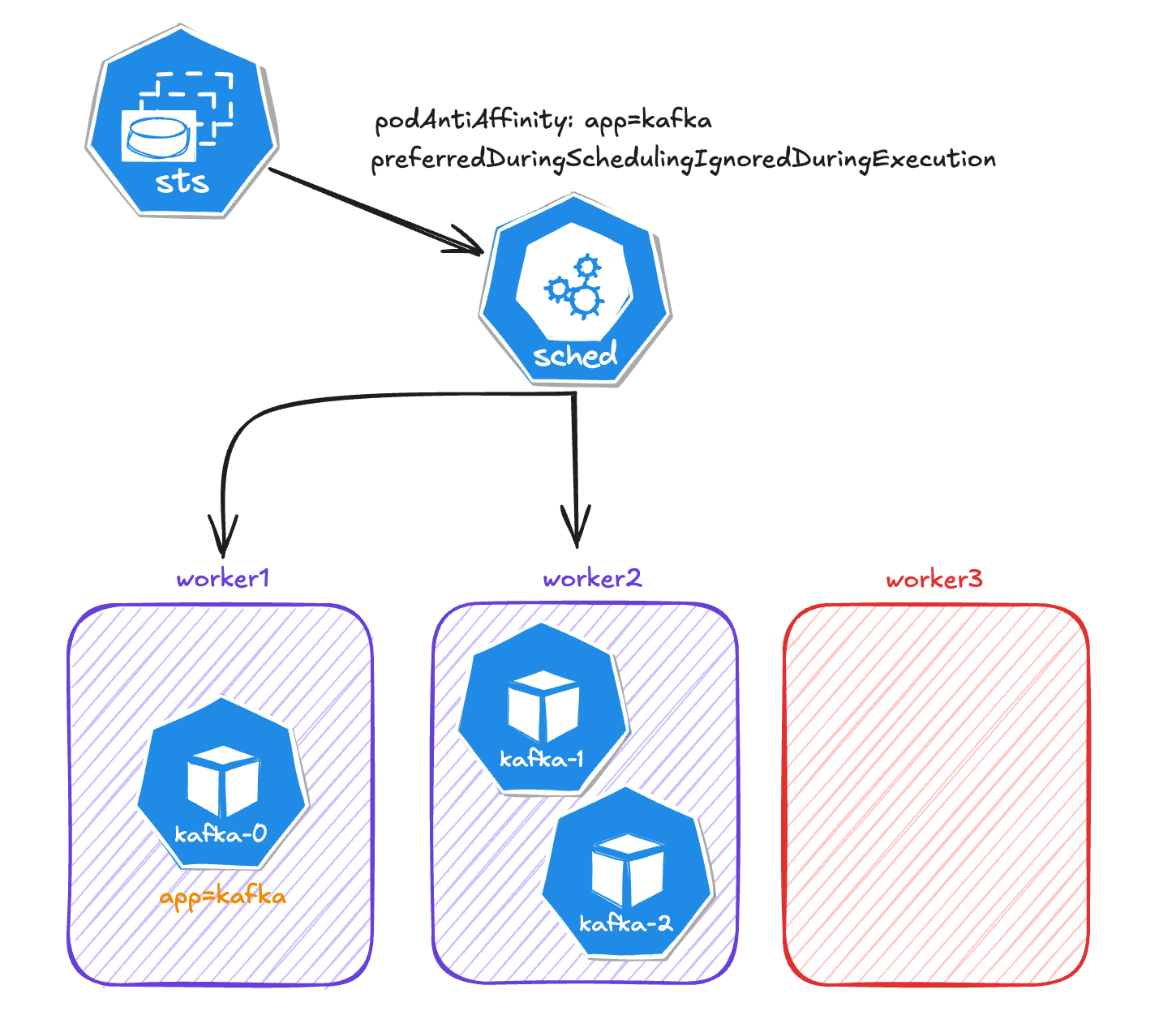

If we change the type from requiredDuringSchedulingIgnoredDuringExecution to preferredDuringSchedulingIgnoredDuringExecution, then kafka-2 would be assigned to another worker.

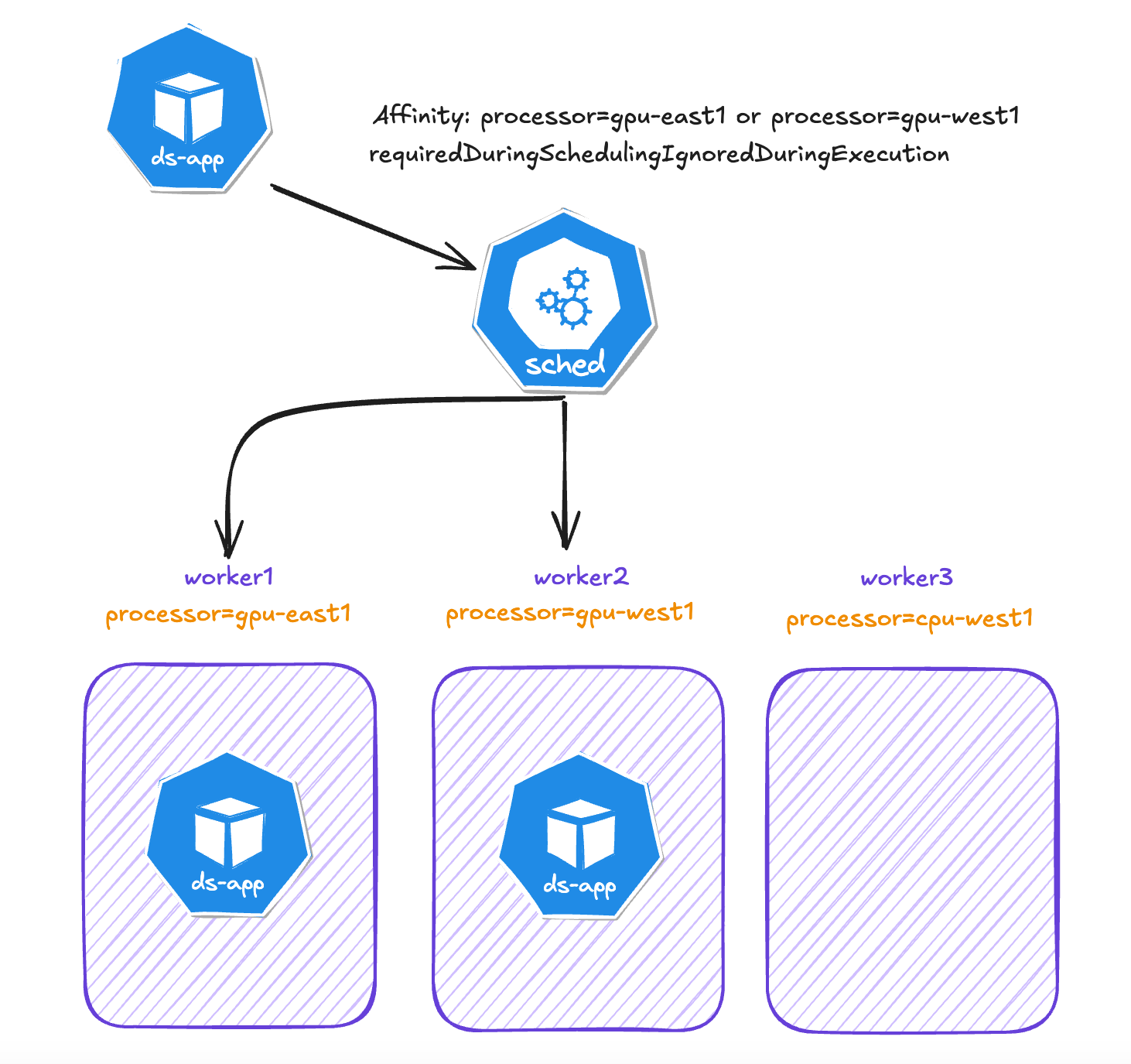
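Note that the preferred form is not a drop-in rename: each entry wraps the term in podAffinityTerm and carries a weight between 1 and 100. A sketch of the same Kafka rule as a soft preference might look like the following; the weight of 100 is an assumed value, not one taken from the original setup.

```yaml
spec:
  affinity:
    podAntiAffinity:
      preferredDuringSchedulingIgnoredDuringExecution:
      - weight: 100                # 1-100; assumed value for illustration
        podAffinityTerm:
          labelSelector:
            matchExpressions:
            - key: app
              operator: In
              values:
              - kafka
          topologyKey: kubernetes.io/hostname
```

With this variant the scheduler still tries to spread the Kafka Pods across workers, but it will co-locate two of them rather than leave one Pending.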

Scenario 2: Data Science Applications That Must Run on Specific Nodes

Conceptually, node affinity is similar to nodeSelector, where you define where a Pod will run. However, affinity gives us more flexibility. Let's say that in our cluster we have two worker nodes with GPU processors and some of our applications must run on one of these nodes.
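For comparison, here is a sketch of what plain nodeSelector can express, using the processor label key from this scenario. It only supports exact key-value matches, so it can pin a Pod to one label value but cannot say "either of these two values".

```yaml
spec:
  nodeSelector:
    processor: gpu-east1   # exact match on a single value only
```

Covering both GPU workers at once needs an operator such as In, which is available in node affinity but not in nodeSelector.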

In the Pod configuration, we configure the .spec.affinity field:

    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
            - matchExpressions:
              - key: processor
                operator: In
                values:
                - gpu-east1
                - gpu-west1

In this example, the following rule applies: a node is eligible only if it has a label with the key processor and a value of either gpu-east1 or gpu-west1.

In this scenario, the Data Science applications will be assigned to workers 1 and 2. Worker 3 will never host a Data Science application.
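The rule only works if the GPU workers actually carry the processor label. As an illustration, the relevant part of one worker's Node object might look like the sketch below. The node name worker-1 and the pairing of this worker with gpu-east1 are assumptions; the article does not state which worker has which value.

```yaml
apiVersion: v1
kind: Node
metadata:
  name: worker-1             # hypothetical node name
  labels:
    processor: gpu-east1     # assumed value; the second GPU worker would carry gpu-west1
```

In practice, node labels are usually applied with kubectl label rather than by editing the Node manifest directly.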

Summary

Affinity and anti-affinity rules give us flexibility and control over where our applications run in Kubernetes. They are an important feature for building a highly available and resilient platform. There are more features, like weight, that you can read about in the official Kubernetes documentation.