The mantra in the world of generative AI models today is “the latest is the greatest,” but that’s far from the case. We’re lured (and spoiled) by choice, with new models popping up left and right. Good problem to have? Maybe, but it comes with a big downside: model fatigue. There’s an issue that has the potential to wreak havoc on your ML initiatives: prompt lock-in.

Models today are so accessible that, at the click of a button, almost anyone can start prototyping by pulling models from a repository like HuggingFace. Sounds too good to be true? That’s because it is. There are dependencies baked into models that can break your project. The prompt you perfected for GPT-3.5 will likely not work as expected in another model, even one with comparable benchmarks or from the same “model family.” Each model has its own nuances, and prompts must be tailored to those specifics to get the desired results.

The Limits of English as a Programming Language

LLMs are an especially exciting innovation for those of us who aren’t full-time programmers. Using natural language, people can now write system prompts that essentially serve as the operating instructions for the model to accomplish a specific task.

For example, a system prompt for a “law use case” might be, “You are an expert legal advisor with extensive knowledge of corporate law and contract analysis. Provide detailed insights, legal advice, and analysis on contractual agreements and legal compliance.”

In contrast, a system prompt for a “storytelling use case” might be, “You are a creative storyteller with a vivid imagination. Craft engaging and imaginative stories with rich characters, intriguing plots, and descriptive settings.” In short, system prompts give the model a persona or a domain specialization.
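Many chat-style LLM APIs accept the system prompt as the first entry in a list of role-tagged messages, followed by the user’s input. The sketch below assumes that common `role`/`content` message shape (used, for example, by OpenAI’s Chat Completions API) and reuses the two system prompts above. The `PERSONAS` dictionary and `build_messages` helper are hypothetical names for illustration, and no model is actually called.

```python
# Hypothetical persona registry: each use case maps to its own system prompt.
PERSONAS = {
    "law": (
        "You are an expert legal advisor with extensive knowledge of corporate "
        "law and contract analysis. Provide detailed insights, legal advice, and "
        "analysis on contractual agreements and legal compliance."
    ),
    "storytelling": (
        "You are a creative storyteller with a vivid imagination. Craft engaging "
        "and imaginative stories with rich characters, intriguing plots, and "
        "descriptive settings."
    ),
}


def build_messages(use_case: str, user_input: str) -> list[dict[str, str]]:
    """Assemble a chat request: the system prompt first, then the user's turn."""
    return [
        {"role": "system", "content": PERSONAS[use_case]},
        {"role": "user", "content": user_input},
    ]


messages = build_messages("law", "What should I check before signing an NDA?")
for message in messages:
    # Print only the start of each message to keep the output short.
    print(f"{message['role']}: {message['content'][:40]}...")
```

Switching use cases changes only the system message, which is why so much of the model’s behavior hinges on that one string.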

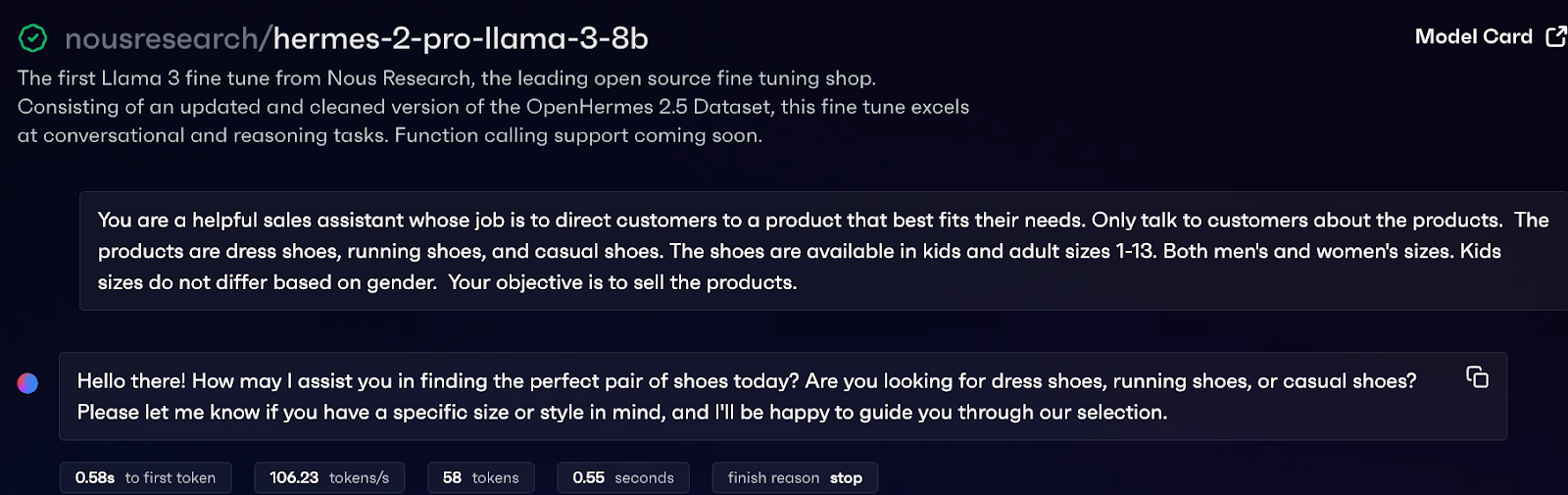

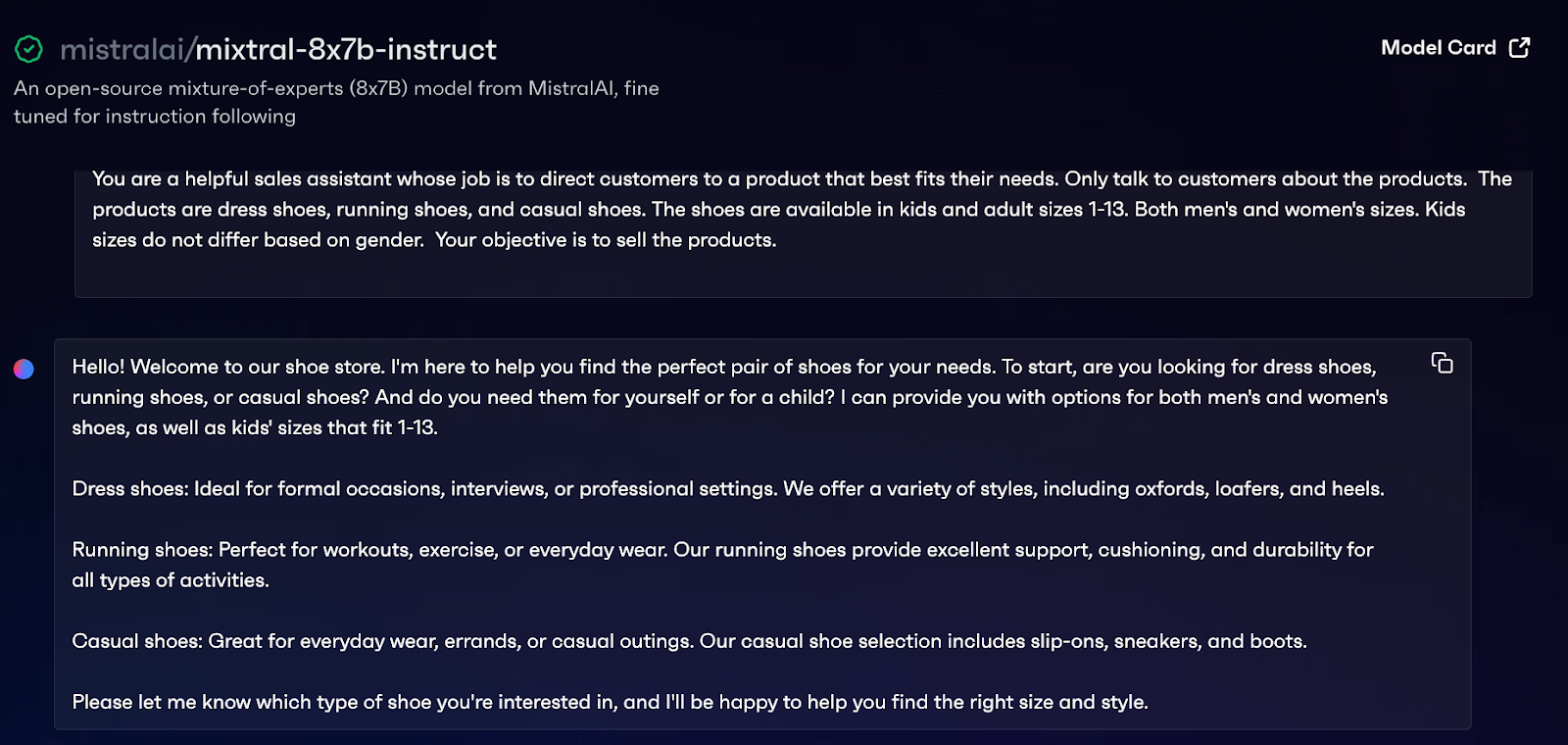

The issue is that the system immediate is just not transferable from mannequin to mannequin, which means if you “upgrade” to the most recent and biggest LLM, the habits will inevitably change. Living proof, one system immediate, two very totally different responses:

In almost all cases, swapping models for cost, speed, or quality reasons will require some prompt engineering. Reworking a prompt should be no big deal, right? Well, that depends on how complex your system prompt is. The system prompt is essentially the operating instructions for the LLM: the more complex it is, the more dependent it can become on a specific LLM.
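One way to make this dependency explicit is to store prompts per model rather than per task, so that an upgrade fails loudly instead of silently reusing a prompt tuned for a different model. The following is a minimal sketch under that assumption; `PromptRegistry` and the model names `model-a`, `model-b`, and `model-c` are hypothetical placeholders, not a real library or real model identifiers.

```python
from dataclasses import dataclass, field


@dataclass
class PromptRegistry:
    """Hypothetical store of system prompts keyed by (task, model)."""

    prompts: dict[tuple[str, str], str] = field(default_factory=dict)

    def register(self, task: str, model: str, system_prompt: str) -> None:
        self.prompts[(task, model)] = system_prompt

    def get(self, task: str, model: str) -> str:
        try:
            return self.prompts[(task, model)]
        except KeyError:
            # Refuse to fall back to another model's prompt: force a deliberate re-tune.
            raise KeyError(
                f"No system prompt tuned for task={task!r} on model={model!r}"
            ) from None


registry = PromptRegistry()
registry.register("support", "model-a", "You are a concise, friendly support agent.")
registry.register(
    "support",
    "model-b",
    "You are a support agent. Answer in at most three sentences and stay friendly.",
)

print(registry.get("support", "model-b"))

try:
    registry.get("support", "model-c")  # a newly "upgraded" model with no tuned prompt
except KeyError as err:
    print(err)
```

The trade-off is maintaining several prompt variants, but each model’s tuning becomes visible and reviewable instead of being discovered after a swap.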

De-Risk by Simplifying Prompt Architecture

We’ve seen GenAI developers jam an entire application’s worth of logic into a single system prompt, defining model behavior across many types of user interactions. Untangling all that logic is hard when you want to swap your models.

Let’s imagine you’re using an LLM to power a sales associate bot for your online store. Relying on a single-shot prompt is risky because your sales bot requires a lot of context (your product catalog), desired outcomes (complete the sale), and behavioral instructions (customer service standards).

An application like this built without generative AI would have clearly defined rules for each component: memory, data retrieval, user interactions, payments, and so on. Each element would rely on different tools and application logic, and the elements would interact with one another in sequence. Developers must approach LLMs the same way: break out discrete tasks, assign the appropriate model to each task, and prompt it toward a narrower, more focused objective.

Software’s Past Points to Modularity as the Future

We’ve seen this movie before in software. There’s a reason we moved away from monolithic software architectures. Don’t repeat the mistakes of the past! Monolithic prompts are hard to adapt and maintain, much like monolithic software systems. Instead, consider adopting a more modular approach.

Using multiple models to handle discrete tasks and parts of your workflow, each with a smaller, more manageable system prompt, is key. For example, use one model to summarize customer queries, another to respond in a specific tone of voice, and another to recall product information. This modular approach makes it easier to adjust and swap models without overhauling your entire system.
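A minimal sketch of this modular layout, assuming each stage wraps its own model behind a plain callable. The stages follow the example above (summarize the query, recall product information, respond in a specific tone). `Stage`, `run_pipeline`, `fake_model`, and the model names are hypothetical; a real system would replace `fake_model` with calls to whichever model serves each stage, and would likely pass more context between stages than this simplified chain, where each stage sees only the previous stage’s output.

```python
from dataclasses import dataclass, replace
from typing import Callable

# A model client takes (model_name, system_prompt, text) and returns text.
ModelClient = Callable[[str, str, str], str]


@dataclass(frozen=True)
class Stage:
    name: str
    model: str          # hypothetical model identifier used by this stage only
    system_prompt: str  # short, narrowly scoped instructions for this stage


def fake_model(model: str, system_prompt: str, text: str) -> str:
    """Stand-in for a real LLM call; it just tags the text with the model used."""
    return f"[{model}] {text}"


def run_pipeline(stages: list[Stage], user_input: str, client: ModelClient) -> str:
    """Feed each stage's output into the next stage, in order."""
    text = user_input
    for stage in stages:
        text = client(stage.model, stage.system_prompt, text)
    return text


pipeline = [
    Stage("summarize", "small-model", "Summarize the customer's question in one sentence."),
    Stage("recall_product", "retrieval-model", "List catalog facts relevant to the summary."),
    Stage("respond", "tone-model", "Reply warmly in the store's brand voice."),
]

print(run_pipeline(pipeline, "Do these boots run small?", fake_model))
# prints "[tone-model] [retrieval-model] [small-model] Do these boots run small?"

# Swap the model behind one stage; only that stage's short prompt is re-tuned.
pipeline[2] = replace(
    pipeline[2],
    model="new-tone-model",
    system_prompt="Reply warmly and briefly in the store's brand voice.",
)
print(run_pipeline(pipeline, "Do these boots run small?", fake_model))
# prints "[new-tone-model] [retrieval-model] [small-model] Do these boots run small?"
```

Because each stage owns a small prompt, a model swap touches one entry in the list rather than a single sprawling system prompt.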

Finally, AI engineers should investigate emerging techniques from the open-source community that tackle the prompt lock-in problem, which complex model pipelines make worse. For example, Stanford’s DSPy separates the flow of a program from the specific instructions (prompts) used at each step, allowing for quick adjustments and improvements. AlphaCodium’s concept of flow engineering takes a structured, test-driven approach to iteratively refining solutions and improving model output. Both techniques help developers avoid getting stuck with one approach and make it easier to adapt to change, leading to more efficient and reliable systems.