Are you ready to get started with cloud-native observability with telemetry pipelines?

This article is part of a series exploring a workshop guiding you through the open source project Fluent Bit: what it is, a basic installation, and setting up the first telemetry pipeline project. Learn how to manage your cloud-native data from source to destination using the telemetry pipeline phases covering collection, aggregation, transformation, and forwarding from any source to any destination.

In the previous article in this series, we explored what backpressure is, how it manifests in telemetry pipelines, and took the first steps to mitigate it with Fluent Bit. In this article, we look at how to enable Fluent Bit features that help avoid the telemetry data loss we saw in the previous article.

You can find more details in the accompanying workshop lab.

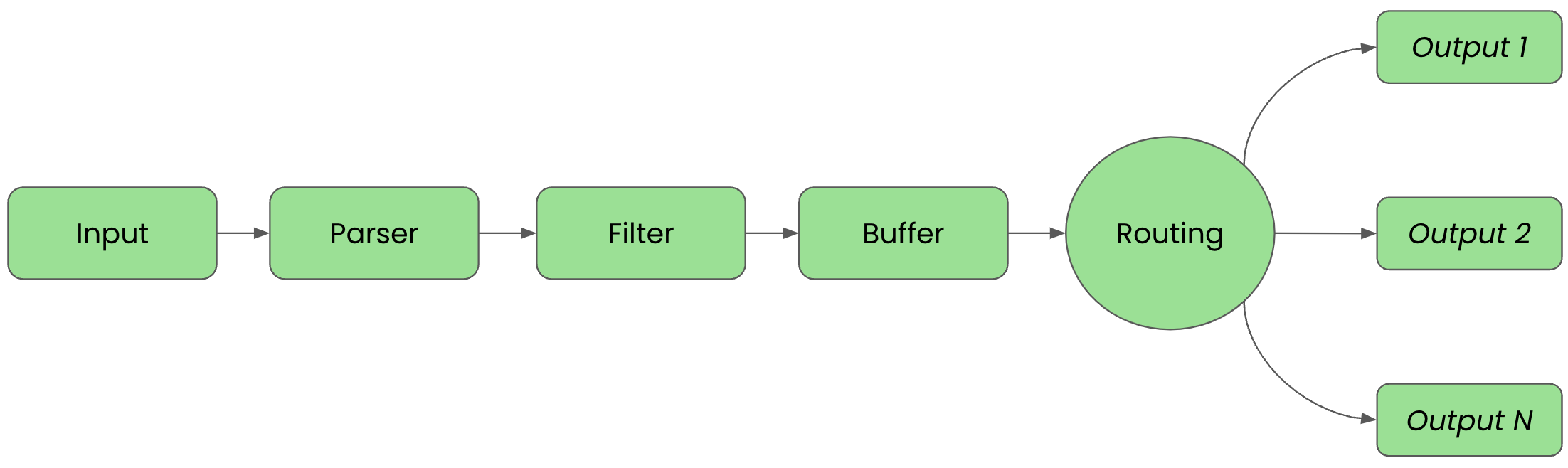

Before we get started, it’s important to review the phases of a telemetry pipeline. The diagram lays them out again. Each incoming event goes from input to parser to filter to buffer to routing before it is sent to its final output destination(s).

For clarity in this article, we’ll split up the configuration into files that are imported into a main Fluent Bit configuration file we’ll name workshop-fb.conf.

Tackling Data Loss

Previously, we explored how input plugins can hit their ingestion limits when our telemetry pipelines scale beyond memory limits while using the default in-memory buffering of our events. We also saw that we can limit the size of our input plugin buffers to prevent our pipeline from failing on out-of-memory errors, but that pausing ingestion can also lead to data loss if clearing the input buffers takes too long.

To rectify this problem, we’ll explore another buffering solution that Fluent Bit offers, ensuring data and memory safety at scale by configuring filesystem buffering.

To that end, let’s explore how the Fluent Bit engine processes data that input plugins emit. When an input plugin emits events, the engine groups them into a Chunk. The chunk size is around 2MB. By default, the engine places this Chunk only in memory.

We saw that limiting the in-memory buffer size did not solve the problem, so we are looking at modifying this default behavior of only placing chunks into memory. This is done by changing the property storage.type from the default memory to filesystem.

It’s important to understand that the memory and filesystem buffering mechanisms are not mutually exclusive. By enabling filesystem buffering for our input plugin, we automatically get both performance and data safety.

Filesystem Buffering Tips

When changing our buffering from memory to filesystem with the property storage.type filesystem, the settings for mem_buf_limit are ignored.

Instead, we need to use the property storage.max_chunks_up to control the size of our memory buffer. Surprisingly, with the default settings the property storage.pause_on_chunks_overlimit is set to off, so the input plugins do not pause. Instead, input plugins switch to buffering only in the filesystem. We can control the amount of disk space used with storage.total_limit_size.

If the property storage.pause_on_chunks_overlimit is set to on, then buffering to the filesystem behaves just like the mem_buf_limit scenario demonstrated previously.
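As a rough sketch of where these properties live in Fluent Bit's classic configuration format: storage.max_chunks_up is set in the SERVICE section, while storage.type and storage.pause_on_chunks_overlimit are set per input. The values and the storage path below are illustrative assumptions, not tuned recommendations.

```text
# Sketch only: values are illustrative assumptions, not recommendations.
[SERVICE]
    # Assumed directory for chunks buffered on disk.
    storage.path          /tmp/fluentbit-storage
    # Upper bound on the number of chunks kept in memory at once.
    storage.max_chunks_up 5

[INPUT]
    Name         dummy
    Tag          big.data
    storage.type filesystem
    # off (the default): keep ingesting, extra chunks live on disk only.
    # on: pause the input at the chunk limit, much like mem_buf_limit.
    storage.pause_on_chunks_overlimit off
```

Leaving storage.pause_on_chunks_overlimit at off favors continued ingestion at the cost of disk usage, which is the behavior this article relies on.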

Configuring a Stressed Telemetry Pipeline

In this example, we are going to use the same stressed Fluent Bit pipeline to simulate a need for enabling filesystem buffering. All examples are shown using containers (Podman), and it is assumed you are familiar with container tooling such as Podman or Docker.

We begin the configuration of our telemetry pipeline in the INPUT phase with a simple dummy plugin generating a large number of entries to flood our pipeline, as follows in our configuration file inputs.conf (note that the mem_buf_limit fix is commented out):

# This entry generates a large amount of success messages for the workshop.
[INPUT]
    Name   dummy
    Tag    big.data
    Copies 15000
    Dummy  {"message":"true 200 success", "big_data": "blah blah blah blah blah blah blah blah blah"}
    #Mem_Buf_Limit 2MB

Now ensure the output configuration file outputs.conf has the following configuration:

# This entry directs all tags (it matches any we encounter)
# to print to standard output, which is our console.
[OUTPUT]
    Name  stdout
    Match *

With our inputs and outputs configured, we can now bring them together in a single main configuration file. Using a file called workshop-fb.conf in our favorite editor, ensure the following configuration is created. For now, just import two files:

# Fluent Bit main configuration file.
#
# Imports section.
@INCLUDE inputs.conf
@INCLUDE outputs.conf

Let’s now try testing our configuration by running it using a container image. The first thing needed is to ensure a file called Buildfile is created. This is going to be used to build a new container image and insert our configuration files. Note that this file needs to be in the same directory as our configuration files; otherwise, adjust the file path names:

FROM cr.fluentbit.io/fluent/fluent-bit:3.0.4
COPY ./workshop-fb.conf /fluent-bit/etc/fluent-bit.conf
COPY ./inputs.conf /fluent-bit/etc/inputs.conf
COPY ./outputs.conf /fluent-bit/etc/outputs.conf

Now we’ll build a new container image, naming it with a version tag, as follows using the Buildfile and assuming you are in the same directory:

$ podman build -t workshop-fb:v8 -f Buildfile
STEP 1/4: FROM cr.fluentbit.io/fluent/fluent-bit:3.0.4
STEP 2/4: COPY ./workshop-fb.conf /fluent-bit/etc/fluent-bit.conf
--> a379e7611210
STEP 3/4: COPY ./inputs.conf /fluent-bit/etc/inputs.conf
--> f39b10d3d6d0
STEP 4/4: COPY ./outputs.conf /fluent-bit/etc/outputs.conf
COMMIT workshop-fb:v8
--> e74b2f228729
Successfully tagged localhost/workshop-fb:v8
e74b2f22872958a79c0e056efce66a811c93f43da641a2efaa30cacceb94a195

If we run our pipeline in a container configured with constricted memory (in our case, we need to give it around a 6.5MB limit), then we’ll see the pipeline run for a bit and then fail due to overloading (OOM):

$ podman run --memory 6.5MB --name fbv8 workshop-fb:v8

The console output shows that the pipeline ran for a bit; in our case, below, up to event number 862 before it hit the OOM limits of our container environment (6.5MB):

...
[860] big.data: [[1716551898.202389716, {}], {"message"=>"true 200 success", "big_data"=>"blah blah blah blah blah blah blah blah"}]
[861] big.data: [[1716551898.202389925, {}], {"message"=>"true 200 success", "big_data"=>"blah blah blah blah blah blah blah blah"}]
[862] big.data: [[1716551898.202390133, {}], {"message"=>"true 200 success", "big_data"=>"blah blah blah blah blah blah blah blah"}]
[863] big.data: [[1

We can confirm that the stressed telemetry pipeline actually failed on an OOM status by inspecting our container for an OOM failure, which validates that our backpressure scenario worked:

# Use the container name to inspect for the reason it failed
$ podman inspect fbv8 | grep OOM
"OOMKilled": true,

Having already tried in a previous lab to manage this with mem_buf_limit settings, we’ve seen that this is not the real fix either. To prevent data loss, we need to enable filesystem buffering so that when the memory buffer is overloaded, events are buffered in the filesystem until there is memory free to process them.

Using Filesystem Buffering

The configuration of our telemetry pipeline in the INPUT phase needs a slight adjustment: add storage.type, set to filesystem, as shown to enable filesystem buffering. Note that mem_buf_limit has been removed:

# This entry generates a large amount of success messages for the workshop.
[INPUT]
    Name   dummy
    Tag    big.data
    Copies 15000
    Dummy  {"message":"true 200 success", "big_data": "blah blah blah blah blah blah blah blah blah"}
    storage.type filesystem

We can now bring it all together in the main configuration file. Using the file called workshop-fb.conf in our favorite editor, update it so that a SERVICE section is added with settings for managing the filesystem buffering:

# Fluent Bit main configuration file.
[SERVICE]
    flush 1
    log_level info
    storage.path /tmp/fluentbit-storage
    storage.sync normal
    storage.checksum off
    storage.max_chunks_up 5

# Imports section
@INCLUDE inputs.conf
@INCLUDE outputs.conf

A few words on the SERVICE section properties might be needed to explain their function:

storage.path – Puts the filesystem buffering in the tmp filesystem
storage.sync – Uses normal synchronization, with checksum processing turned off through storage.checksum
storage.max_chunks_up – Set to 5 chunks, which at around 2MB per chunk allows roughly 10MB of memory for events

Now it’s time to test our configuration by running it using a container image. The first thing needed is to ensure a file called Buildfile is created. This is going to be used to build a new container image and insert our configuration files. Note that this file needs to be in the same directory as our configuration files; otherwise, adjust the file path names:

FROM cr.fluentbit.io/fluent/fluent-bit:3.0.4
COPY ./workshop-fb.conf /fluent-bit/etc/fluent-bit.conf
COPY ./inputs.conf /fluent-bit/etc/inputs.conf
COPY ./outputs.conf /fluent-bit/etc/outputs.conf

Now we’ll build a new container image, naming it with a version tag, as follows using the Buildfile and assuming you are in the same directory:

$ podman build -t workshop-fb:v9 -f Buildfile
STEP 1/4: FROM cr.fluentbit.io/fluent/fluent-bit:3.0.4
STEP 2/4: COPY ./workshop-fb.conf /fluent-bit/etc/fluent-bit.conf
--> a379e7611210
STEP 3/4: COPY ./inputs.conf /fluent-bit/etc/inputs.conf
--> f39b10d3d6d0
STEP 4/4: COPY ./outputs.conf /fluent-bit/etc/outputs.conf
COMMIT workshop-fb:v9
--> e74b2f228729
Successfully tagged localhost/workshop-fb:v9
e74b2f22872958a79c0e056efce66a811c93f43da641a2efaa30cacceb94a195

If we run our pipeline in a container configured with constricted memory (a slightly larger value due to the memory needed for mounting the filesystem), in our case around a 9MB limit, then we’ll see the pipeline running without failure:

$ podman run -v ./:/tmp --memory 9MB --name fbv9 workshop-fb:v9

The console output shows that the pipeline runs until we stop it with CTRL-C, with events rolling by as shown below.

...
[14991] big.data: [[1716559655.213181639, {}], {"message"=>"true 200 success", "big_data"=>"blah blah blah blah blah blah blah"}]
[14992] big.data: [[1716559655.213182181, {}], {"message"=>"true 200 success", "big_data"=>"blah blah blah blah blah blah blah"}]
[14993] big.data: [[1716559655.213182681, {}], {"message"=>"true 200 success", "big_data"=>"blah blah blah blah blah blah blah"}]
...

We can now validate the filesystem buffering by looking at the filesystem storage. Check the filesystem from the directory where you started your container. While the pipeline is running with memory restrictions, it will be using the filesystem to store events until memory is free to process them. If you view the contents of the file before stopping your pipeline, you’ll see a messy message format stored inside (cleaned up for you here):

$ ls -l ./fluentbit-storage/dummy.0/1-1716558042.211576161.flb
-rw------- 1 username groupname 1.4M May 24 15:40 1-1716558042.211576161.flb

$ cat fluentbit-storage/dummy.0/1-1716558042.211576161.flb
??wbig.data???fP??
?????message?true 200 success?big_data?'blah blah blah blah blah blah blah blah???fP??
?p???message?true 200 success?big_data?'blah blah blah blah blah blah blah blah???fP??
߲???message?true 200 success?big_data?'blah blah blah blah blah blah blah blah???fP??
?F???message?true 200 success?big_data?'blah blah blah blah blah blah blah blah???fP??
?d???message?true 200 success?big_data?'blah blah blah blah blah blah blah blah???fP??
...

Final Thoughts on Filesystem Buffering

This solution is the way to deal with backpressure and other issues that might flood your telemetry pipeline and cause it to crash. It’s worth noting that using a filesystem to buffer events also introduces the limits of the filesystem being used.

It’s important to understand that just as memory can run out, so too can filesystem storage reach its limits. It’s best to have a plan to address any possible filesystem challenges when using this solution, but that is outside the scope of this article.
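One possible starting point for such a plan, offered as a sketch and not a complete answer, is the storage.total_limit_size property mentioned earlier, which is set per output. The 500M value below is an arbitrary assumption for illustration; a suitable limit depends on your disk and event volume.

```text
# Sketch only: caps the disk space used by chunks queued for this output.
[OUTPUT]
    Name  stdout
    Match *
    # Hypothetical limit, chosen purely for illustration.
    storage.total_limit_size 500M
```

As we understand the Fluent Bit documentation, once this limit is reached the oldest chunks queued for that output are discarded to make room for new data, so the cap trades unbounded disk growth for possible loss of the oldest events.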

This completes our use cases for this article. Be sure to explore this hands-on experience with the accompanying workshop lab.

What’s Next?

This article walked us through how Fluent Bit filesystem buffering provides a data- and memory-safe solution to the problems of backpressure and data loss.

Stay tuned for more hands-on material to help you with your cloud-native observability journey.