An unchecked flood will sweep away even the strongest dam.

– Ancient Proverb

The quote above means that even the most robust, well-engineered dam cannot withstand the destructive force of an unchecked, uncontrolled flood. Similarly, in a distributed system, an unchecked caller can overwhelm the entire system and cause cascading failures. In a previous article, I wrote about how a retry storm can take down an entire service if proper guardrails are not in place. Here, I explore when a service should consider applying backpressure to its callers, how it can be applied, and what callers can do to deal with it.

Backpressure

As the name suggests, backpressure is a mechanism in distributed systems: a system's ability to throttle the rate at which data is produced or consumed, to prevent overloading itself or its downstream components. Backpressure is not always explicit, as with throttling or load shedding. It can also be implicit, as when a system slows itself down by adding latency to the requests it serves without telling the caller. Both implicit and explicit backpressure aim to slow the caller down, either because the caller is misbehaving or because the service itself is unhealthy and needs time to recover.
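
To make the distinction concrete, here is a minimal Python sketch of two hypothetical request handlers, one per style. The function names, the `load`/`capacity` inputs, the `"THROTTLED"` response, and the per-request delay are illustrative assumptions, not part of any real framework.

```python
import time


def handle_explicit(load: int, capacity: int) -> str:
    """Explicit backpressure: reject and tell the caller to slow down."""
    if load >= capacity:
        return "THROTTLED"  # the caller sees an unambiguous signal
    return "OK"


def handle_implicit(load: int, capacity: int, delay_per_excess: float = 0.01) -> str:
    """Implicit backpressure: accept the request, but add latency as load grows."""
    excess = max(0, load - capacity + 1)  # requests at or beyond capacity
    time.sleep(excess * delay_per_excess)  # the caller only notices slower responses
    return "OK"
```

The explicit handler gives callers something actionable (retry later). The implicit one is invisible to callers, so it slows them only if they happen to wait on each response.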

Need for Backpressure

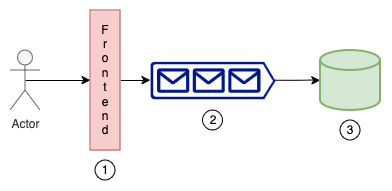

Let’s take an example to illustrate when a system would need to apply backpressure. In this example, we’re building a control plane service with three main components: a frontend where customer requests are received, an internal queue where customer requests are buffered, and a consumer app that reads messages from the queue and writes them to a database for persistence.

Figure 1: A sample control plane

Producer-Consumer Mismatch

Consider a scenario where customers are hitting the frontend at such a high rate that the internal queue fills up, either because requests arrive faster than it can hold them or because the worker writing to the database is busy. Requests can then no longer be enqueued, so instead of silently dropping them, it is better to tell customers upfront. This mismatch can happen for several reasons, such as a burst in incoming traffic, or a brief glitch in which the consumer was down for a while and now has to work extra hard to drain the backlog accumulated during its downtime.
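
A minimal sketch of the "tell customers upfront" behavior, assuming the frontend buffers requests in a bounded in-process queue. The `Frontend` class and its status strings are hypothetical. The key point is that `put_nowait` fails fast with `queue.Full` instead of blocking the customer or silently losing the request.

```python
import queue


class Frontend:
    """Hypothetical frontend that buffers customer requests in a bounded queue."""

    def __init__(self, max_size: int):
        self.buffer = queue.Queue(maxsize=max_size)

    def submit(self, request: str) -> str:
        try:
            # Never block the customer; fail fast when the buffer is full.
            self.buffer.put_nowait(request)
            return "ACCEPTED"
        except queue.Full:
            # Backpressure: tell the customer now instead of dropping the request later.
            return "REJECTED_RETRY_LATER"
```

Once the consumer drains an item from `buffer`, the frontend starts accepting again. Callers only need to handle a clear "retry later" response.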

Resource Constraints and Cascading Failures

Imagine a scenario where your queue normally sits at 50% of its capacity but is now approaching 100%. To keep up with the higher incoming rate, you scale up your consumer app and start writing to the database faster. However, the database can’t handle the increase (e.g., because of limits on writes per second) and breaks down. That breakdown takes the whole system down with it and increases the Mean Time To Recover (MTTR). In scenarios like this, applying backpressure at the appropriate places becomes critical.

Missed SLAs

Consider a scenario where data written to the database is processed every 5 minutes, and another application listens for those updates to keep itself up to date. If the system cannot meet that SLA for any reason, such as the queue being 90% full and potentially taking up to 10 minutes to clear, it is better to resort to backpressure. You can tell customers that you are going to miss the SLA and ask them to try again later. Alternatively, you can drop non-urgent requests from the queue so that the SLA is still met for critical events and requests.
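
The arithmetic behind this decision can be sketched in a few lines. Assume a queue capacity of 1,000 messages and a drain rate of 90 messages per minute; both numbers are invented for illustration. A 90% full queue then holds 900 messages and takes 900 / 90 = 10 minutes to clear, twice the 5-minute SLA. The hypothetical `enforce_sla` function below keeps every urgent request and only as many non-urgent ones as fit in the SLA window.

```python
from dataclasses import dataclass


@dataclass
class Request:
    request_id: int
    urgent: bool


def estimated_drain_minutes(queue_depth: int, drain_rate_per_min: float) -> float:
    """Time needed to clear the current backlog at the consumer's drain rate."""
    return queue_depth / drain_rate_per_min


def enforce_sla(pending: list[Request], drain_rate_per_min: float,
                sla_minutes: float) -> tuple[list[Request], list[Request]]:
    """Drop non-urgent requests if the backlog would otherwise miss the SLA.

    Returns (kept, dropped). Urgent requests are never dropped, and the
    original queue order is preserved in `kept`.
    """
    if estimated_drain_minutes(len(pending), drain_rate_per_min) <= sla_minutes:
        return list(pending), []
    budget = int(sla_minutes * drain_rate_per_min)  # requests we can finish in time
    room = max(0, budget - sum(r.urgent for r in pending))  # slots left for non-urgent work
    kept, dropped = [], []
    for r in pending:
        if r.urgent:
            kept.append(r)
        elif room > 0:
            kept.append(r)
            room -= 1
        else:
            dropped.append(r)
    return kept, dropped
```

The dropped requests are the ones whose callers should be told to retry later.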

Backpressure Challenges

Based on what’s described above, it seems we should always apply backpressure and there is nothing to debate. That is largely true. The real challenge is not whether to apply backpressure but how to identify the right points to apply it, and which mechanisms suit specific service and business needs.

Backpressure forces a trade-off between throughput and stability, made extra complicated by the problem of load prediction.

Identifying the Backpressure Points

Find Bottlenecks/Weak Links

Every system has bottlenecks. Some can withstand load and protect themselves; others can’t. Consider a system where a large data plane fleet (thousands of hosts) depends on a small control plane fleet (fewer than 5 hosts) to receive configs persisted in the database, as highlighted in the diagram above. The large fleet can easily overwhelm the small one, so the small fleet needs mechanisms to apply backpressure on its callers to protect itself. Another common weak link is a centralized component that makes decisions about the whole system, such as an anti-entropy scanner. If it fails, the system can never reach a stable state, and the failure can bring down the entire service.

Use System Dynamics: Monitors/Metrics

Another common way to find backpressure points in your system is to have appropriate monitors and metrics in place. Continuously monitor the system’s behavior, including queue depths, CPU and memory utilization, and network throughput. Use this real-time data to identify emerging bottlenecks and adjust the backpressure points accordingly. An aggregate view across system components, built from metrics or from observers such as performance canaries, is another way to tell that your system is under stress and should assert backpressure on its callers. Performance canaries can also be isolated to different aspects of the system to find the choke points. A real-time dashboard of internal resource utilization is another good way to use system dynamics to find points of interest and act proactively.
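
As a rough illustration of such an aggregate view, here is a Python sketch that scans per-component metric snapshots and reports which components breach a threshold, and on which metrics. The `Snapshot` fields, the component names, and the threshold values are all assumptions. In a real system, these numbers would come from your monitoring stack.

```python
from dataclasses import dataclass


@dataclass
class Snapshot:
    component: str
    queue_depth_ratio: float  # current depth / max depth, 0.0 to 1.0
    cpu_util: float           # 0.0 to 1.0
    mem_util: float           # 0.0 to 1.0


# Hypothetical alarm thresholds; in practice these are tuned per component.
THRESHOLDS = {"queue_depth_ratio": 0.8, "cpu_util": 0.85, "mem_util": 0.9}


def stressed_components(snapshots: list[Snapshot]) -> dict[str, list[str]]:
    """Map each component that breaches a threshold to the metrics that breached."""
    result: dict[str, list[str]] = {}
    for snap in snapshots:
        breached = [name for name, limit in THRESHOLDS.items()
                    if getattr(snap, name) >= limit]
        if breached:
            result[snap.component] = breached
    return result
```

Components that repeatedly show up in this report are candidates for backpressure points.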

Boundaries: The Principle of Least Astonishment

The parts of a service most visible to customers are the surface areas they interact with, typically the APIs they call to get their requests served. This is also where customers would be least surprised by backpressure, because it clearly signals that the system is under stress. The backpressure can take the form of throttling or load shedding. The same principle applies inside the service, at the interfaces through which its subcomponents interact with each other. These surfaces are the best places to exert backpressure, because doing so minimizes confusion and makes the system’s behavior more predictable.

How to Apply Backpressure in Distributed Systems

In the last section, we discussed how to find the right points at which to assert backpressure. Once we know these points, here are some ways to assert backpressure in practice:

Build Explicit Flow Control

The idea is to make the queue size visible to your callers and let them control their call rate based on it. Knowing the queue size (or the state of any other bottleneck resource), callers can increase or decrease their call rate to avoid overwhelming the system. This technique is particularly helpful when multiple internal components work together and need to behave well without impacting each other. The equation below can be used at any time to calculate the call rate. Note: the actual call rate will depend on various other factors, but the equation gives a good starting point.

$$\text{CallRate}_{\text{new}} = \text{CallRate}_{\text{normal}} \times \left(1 - \frac{Q_{\text{currentSize}}}{Q_{\text{maxSize}}}\right)$$
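
Here is a minimal sketch of how a caller might apply the equation above. It assumes the caller can read the queue's current and maximum size, for example from a metrics endpoint the service exposes (that endpoint is an assumption here). The function `new_call_rate` and its parameter names are hypothetical. The queue fill ratio is clamped to [0, 1] so that a momentarily over-full reading cannot produce a negative rate.

```python
def new_call_rate(normal_rate: float, q_current_size: int, q_max_size: int) -> float:
    """Scale the caller's normal rate down linearly as the queue fills up."""
    if q_max_size <= 0:
        raise ValueError("q_max_size must be positive")
    fill = min(max(q_current_size / q_max_size, 0.0), 1.0)  # clamp ratio to [0, 1]
    return normal_rate * (1 - fill)
```

For example, with a normal rate of 100 requests/second and a queue at 750 of 1,000 slots, the caller drops to 100 × (1 - 0.75) = 25 requests/second. The formula reaches zero when the queue is full, so a caller may want a small minimum rate or a periodic probe to notice when the queue drains again.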

Invert Responsibilities

In some systems, it is possible to invert the flow: instead of callers pushing requests to the service, the service pulls work itself when it is ready to serve. This gives the receiving service full control over how much it takes on, and it can adjust the request size dynamically based on its latest state. You can use a token bucket strategy, in which the receiving service fills the bucket with tokens, and the tokens tell the caller when and how much it can send to the server. Here is a sample algorithm the caller can use:

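```python
# Hypothetical values, chosen only for illustration
Token_Bucket_size = 100
Token_Refresh_Rate = 10
Work_request_size_max = 20
Tokens_available = Token_Bucket_size


def send_request_to_caller(size):
    # Placeholder: the service asks the caller for up to `size` units of work
    print(f"Service requests {size} units of work")


def send_work_to_service(size):
    # Placeholder: the caller sends `size` units of work to the service
    print(f"Caller sends {size} units of work")


# Service requests work if it has capacity
if Tokens_available > 0:
    # Request work, up to a maximum limit
    Work_request_size = min(Tokens_available, Work_request_size_max)
    send_request_to_caller(Work_request_size)

    # Caller sends work if it has enough tokens
    if Tokens_available >= Work_request_size:
        send_work_to_service(Work_request_size)
        Tokens_available = Tokens_available - Work_request_size

# Tokens are replenished at a certain rate, capped at the bucket size
Tokens_available = min(Tokens_available + Token_Refresh_Rate, Token_Bucket_size)
```

Proactive Changes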

Sometimes you know in advance that your system is about to be overwhelmed, so you take proactive measures, such as asking the caller to reduce its call volume and then increase it gradually. Consider a scenario where your downstream was down and rejected all your requests. During that period, you queued up all the work, and now you are ready to drain it to meet your SLA. If you drain it faster than the normal rate, you risk taking down the downstream services. To prevent this, you proactively lower the caller’s limits, or work with the caller to reduce its call volume, and then slowly open the floodgates.
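
One common way to "slowly open the floodgates" is additive-increase/multiplicative-decrease (AIMD), the idea behind TCP congestion control. The article does not prescribe a specific technique, so treat this as one option among several. In the sketch below, `RampController` and its `start_rate`, `max_rate`, and `increment` parameters are hypothetical. The drain rate grows by a fixed step after each healthy interval and is halved (but never below the starting rate) when the downstream shows signs of distress.

```python
class RampController:
    """Hypothetical AIMD controller for draining a backlog without overwhelming downstream."""

    def __init__(self, start_rate: float, max_rate: float, increment: float):
        self.start_rate = start_rate  # conservative rate to resume from
        self.max_rate = max_rate      # never drain faster than this
        self.increment = increment    # additive step per healthy interval
        self.rate = start_rate

    def on_interval(self, downstream_healthy: bool) -> float:
        """Update and return the drain rate for the next interval."""
        if downstream_healthy:
            # Additive increase: open the floodgates a little more.
            self.rate = min(self.rate + self.increment, self.max_rate)
        else:
            # Multiplicative decrease: back off quickly, but not below the start rate.
            self.rate = max(self.rate / 2, self.start_rate)
        return self.rate
```

The asymmetry is deliberate: the rate recovers slowly and backs off quickly, which keeps the downstream from being hit by the full backlog all at once.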

Throttling

Throttling restricts the number of requests a service will serve and rejects requests beyond that limit. It can be applied at the service level or the API level. A throttled response is a direct backpressure signal telling the caller to reduce its call volume. You can take this further with priority throttling or fairness throttling to minimize the impact on customers.
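
A minimal sketch of priority throttling, built on the token bucket idea from earlier but enforced by the service. The `PriorityThrottler` class and its parameters are hypothetical. Low-priority calls may not dip into a reserve of tokens kept for high-priority calls. The `clock` parameter exists only so the behavior can be tested deterministically.

```python
import time


class PriorityThrottler:
    """Hypothetical token-bucket throttler that reserves headroom for high-priority calls."""

    def __init__(self, rate_per_sec: float, burst: int, reserved_for_high: int,
                 clock=time.monotonic):
        self.rate = rate_per_sec           # tokens added per second
        self.capacity = burst              # maximum tokens in the bucket
        self.reserved = reserved_for_high  # tokens only high-priority calls may use
        self.tokens = float(burst)
        self.clock = clock
        self.last = clock()

    def _refill(self) -> None:
        now = self.clock()
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now

    def allow(self, high_priority: bool = False) -> bool:
        """Return True if the call may proceed, False if it should be throttled."""
        self._refill()
        floor = 0 if high_priority else self.reserved
        if self.tokens - 1 >= floor:
            self.tokens -= 1
            return True
        return False  # a real service might map this to an HTTP 429 response
```

Under pressure, low-priority traffic is throttled first, while critical calls still get through until the reserve is exhausted.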

Load Shedding

Throttling discards requests when predefined limits are breached. With load shedding, customer requests can be discarded even after they were accepted: a service under stress proactively drops requests it has already promised to serve. This is typically a service’s last resort to protect itself, and the service should let the caller know when it happens.
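
A minimal sketch of shedding already-accepted work, assuming each accepted request carries a priority (higher means more important; this convention and the `Accepted`/`shed_load` names are hypothetical). The function keeps the most important requests up to a target count and returns the dropped ones, so the service can notify each affected caller.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Accepted:
    request_id: str
    priority: int  # higher value = more important (hypothetical convention)


def shed_load(accepted: list[Accepted], keep: int) -> tuple[list[Accepted], list[Accepted]]:
    """Drop already-accepted work, least important first, until `keep` items remain.

    Returns (kept, dropped). Both lists preserve the original acceptance order.
    """
    keep = max(keep, 0)
    if keep >= len(accepted):
        return list(accepted), []
    # sorted() is stable even with reverse=True, so among equal priorities
    # the earliest-accepted request ranks higher and is kept.
    ranked = sorted(range(len(accepted)), key=lambda i: accepted[i].priority, reverse=True)
    keep_idx = set(ranked[:keep])
    kept = [a for i, a in enumerate(accepted) if i in keep_idx]
    dropped = [a for i, a in enumerate(accepted) if i not in keep_idx]
    return kept, dropped
```

Returning `dropped` rather than discarding it silently is what lets the service keep its side of the bargain: callers learn that their request was shed and can retry later.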

Conclusion

Backpressure is a critical concern in distributed systems and can significantly affect performance and stability. Understanding the causes and effects of backpressure, along with effective techniques for managing it, is crucial for building robust, high-performance distributed systems. Implemented correctly, backpressure improves a system’s stability, reliability, and scalability, and with them the user experience. Mishandled, it can erode customer trust and even contribute to system instability. Addressing backpressure proactively, through careful system design and monitoring, is key to keeping a system healthy. Implementing backpressure may involve trade-offs, such as reduced throughput, but the benefits in overall system resilience and user satisfaction are substantial.