Large Language Models (LLMs) are increasingly shaping the future of software development, offering new possibilities in code generation, debugging, and error resolution. Recent advances in these AI-driven tools have prompted a closer examination of their practical applications and potential impact on developer workflows.

This article explores the effectiveness of LLMs in software development, with a particular focus on error resolution. Drawing on industry-wide observations and insights gained through my work on AI Error Resolution at Raygun, I’ll analyze LLMs’ current capabilities and their implications for the future of development practices. The discussion weighs both the promising advances and the challenges that arise as these technologies become part of our daily work.

OpenAI Models for Software Development

OpenAI has successfully released newer, faster, and allegedly smarter models. Benchmark sites confirm these gains, but there is a growing body of anecdotal reports claiming that these models feel dumber. Most existing benchmarks focus purely on the logical reasoning side of these models, such as answering SAT questions. They do not assess the quality of the responses, especially in the software engineering domain. My goal here is to evaluate these models both quantitatively and qualitatively, using error resolution as the benchmark, since it is a frequent part of developers’ workflows.

Our evaluation will cover several models: GPT-3.5 Turbo, GPT-4, GPT-4 Turbo, GPT-4o, and GPT-4o mini. We’ll use real-life stack traces and the associated information sent to us to assess how these models handle error resolution. We will examine response speed, answer quality, and developer preferences in detail. This analysis will lead to suggestions for getting the best responses from these models, such as the effect that providing more context, like source maps and source code, has on their effectiveness.

Experimental Methodology

As mentioned, we will evaluate the following models: GPT-3.5 Turbo, GPT-4, GPT-4 Turbo, GPT-4o, and GPT-4o mini. The specific variants used are the default models provided by the OpenAI API as of July 30, 2024.

For our evaluation, we selected seven real-world errors from various programming languages, including Python, TypeScript, and .NET, each combined with different frameworks. We chose these by sampling existing errors within our accounts and personal projects to get a representative sample. Errors that were transient or didn’t point to a direct cause were excluded.

| Name | Language | Solution | Difficulty |
|---|---|---|---|
| Android missing file | .NET Core | The .dll file being read was not present | Easy |
| Division by zero | Python | Division by zero error caused by an empty array, with no error checking | Easy |
| Invalid stop id | TypeScript | Stop ID extracted from the Alexa request envelope was not valid; Alexa fuzz testing sent an invalid value of xyzxyz | Hard |
| IRaygunUserProvider not registered | .NET Core | IRaygunUserProvider was not registered in the DI container, causing a failure to create the MainPage in MAUI | Medium |
| JSON Serialization Error | .NET Core | Strongly typed object map did not match the JSON object provided, caused by non-compliant error payloads being sent by a Raygun client | Hard |
| Main page not registered ILogger | .NET Core | ILogger was added, but MainPage was not added as a singleton to the DI container, causing ILogger | Medium |
| Postgres missing table | .NET Core/Postgres | Postgres table missing when invoked by a C# program, causing a messy stack trace | Easy to Medium |

We then used a templated system prompt from Raygun’s AI Error Resolution, which included information from the crash reports sent to us. We ran this prompt directly against all the models through OpenAI’s API, which generated 35 LLM error-response pairs. These pairs were then randomly assigned to our engineers, who rated them on a scale of 1 to 5 based on accuracy, clarity, and usefulness. Our sample was 11 engineers, including Software and Data Engineers, with experience ranging from a couple of years to a few decades.
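The pairing and assignment step can be sketched in Python. This is an illustration under stated assumptions and not Raygun’s harness. The model strings are the public OpenAI API identifiers for the five models, and the error names come from the table. Dealing a seeded shuffle round-robin across raters is a hypothetical way to implement "randomly assigned".

```python
import itertools
import random

# Public OpenAI API identifiers for the five models compared.
MODELS = ["gpt-3.5-turbo", "gpt-4", "gpt-4-turbo", "gpt-4o", "gpt-4o-mini"]

# The seven test errors from the table.
ERRORS = [
    "Android missing file",
    "Division by zero",
    "Invalid stop id",
    "IRaygunUserProvider not registered",
    "JSON Serialization Error",
    "Main page not registered ILogger",
    "Postgres missing table",
]


def build_pairs(errors, models):
    """Every (error, model) combination: 7 errors x 5 models = 35 pairs."""
    return list(itertools.product(errors, models))


def assign_to_raters(pairs, raters, seed=0):
    """Shuffle the pairs, then deal them round-robin to the raters.

    Hypothetical scheme: the fixed seed makes the assignment reproducible.
    """
    rng = random.Random(seed)
    shuffled = pairs[:]
    rng.shuffle(shuffled)
    assignment = {rater: [] for rater in raters}
    for i, pair in enumerate(shuffled):
        assignment[raters[i % len(raters)]].append(pair)
    return assignment


pairs = build_pairs(ERRORS, MODELS)
raters = [f"engineer-{n}" for n in range(1, 12)]  # 11 engineers
assignment = assign_to_raters(pairs, raters)
print(len(pairs))  # 35
```

With 35 pairs and 11 raters, this scheme gives each engineer three or four pairs. The study does not say how many pairs each engineer actually rated.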

In addition to the preference ratings, we will also conduct an analytical assessment of the models’ performance. This analysis focuses on two key aspects, response time and response length, from which we derive several measures of these models’ effectiveness.
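Both measures can be captured per request with a small timing wrapper. This is a minimal sketch, and the original harness is not described.

- `send` is a hypothetical callable that returns the response text and its completion-token count.
- With the official `openai` Python package, `send` could wrap `client.chat.completions.create(model=..., messages=[...])` and read `response.usage.completion_tokens`.
- Injecting `send` keeps the sketch runnable without network access.

```python
import time
from dataclasses import dataclass
from typing import Callable, Tuple


@dataclass
class Measurement:
    model: str
    error: str
    seconds: float          # wall-clock time for the whole request
    completion_tokens: int  # response length in tokens
    characters: int         # response length in characters


def timed_completion(
    model: str,
    error: str,
    prompt: str,
    send: Callable[[str, str], Tuple[str, int]],
) -> Measurement:
    """Time one completion request and record how long the response was.

    `send(model, prompt)` is a hypothetical callable returning
    (response_text, completion_token_count).
    """
    start = time.perf_counter()
    text, tokens = send(model, prompt)
    elapsed = time.perf_counter() - start
    return Measurement(model, error, elapsed, tokens, len(text))


# Offline stand-in for a real API call, for illustration only.
def fake_send(model: str, prompt: str) -> Tuple[str, int]:
    return "Check that the array is non-empty before dividing.", 11


m = timed_completion("gpt-4o", "Division by zero", "stack trace here", fake_send)
```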

Developer Preferences: Qualitative Results

General Observations

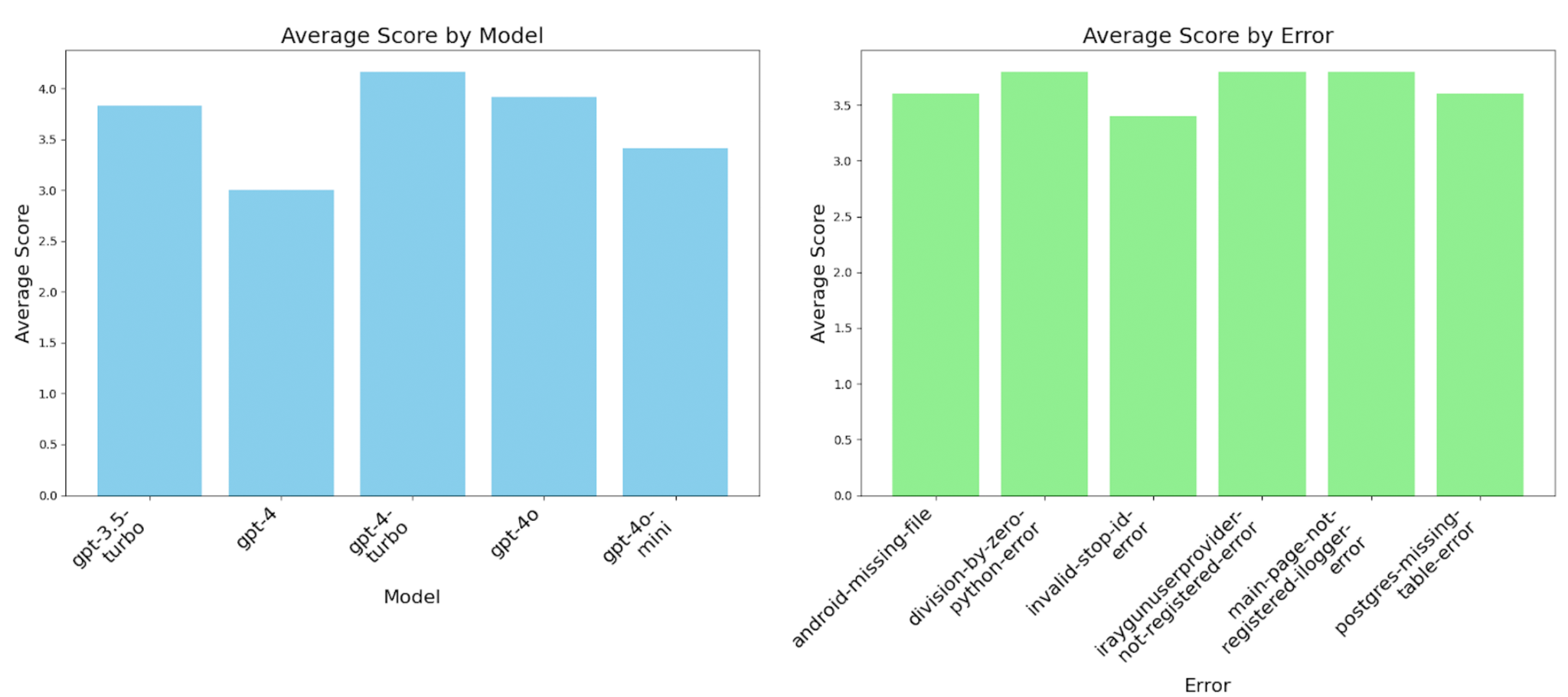

We produced the graph above from the engineers’ ratings. It shows a few distinct results that both support and contradict the anecdotal evidence. While this analysis focuses on error resolution, it is important to weigh these findings against the other motivating factors discussed in the introduction. For instance, the models’ effectiveness in error resolution might differ from their performance in tasks like code generation or debugging, which could influence overall perceptions. This broader perspective helps us understand the varied impacts of large language models on different aspects of a developer’s workflow.

Unexpected Findings

We assumed GPT-4 would be the best model, but our software engineers rated it the worst. We can offer possible explanations for this result using feedback from our software engineers and some of the analytic data, which we present in the next section. These hypotheses came out of my discussions with another engineer who closely followed this study. The later models, from GPT-4 Turbo onwards, include code snippets when suggesting changes, and engineers reported that this gives them a better understanding of the solutions. GPT-4 did not generate snippets and gave longer solutions than GPT-3.5 Turbo. This suggests that engineers dislike longer responses that lack supplementary resources.

Error Patterns

We also observed that the JSON serialization error consistently received very low ratings across all model variants, because the stack trace alone doesn’t provide enough information to solve it. This leads us to prompt engineering and the question of what information is helpful when asking an LLM for help.

Contextual Influences

For .NET Errors

.NET errors made up all of the test cases except the division by zero error and the invalid stop ID, as shown in the earlier table. For these errors, the only context the LLMs and engineers had was the stack trace, tags, breadcrumbs, and custom data. We see higher reported scores on these errors, likely because the engineers here at Raygun primarily work with .NET. However, in the cases where we tested other languages, we still observed good results.

Other Languages

Based on the engineers’ comments, the reason is that in both the Python and TypeScript cases, the stack trace came with the surrounding code context. In Python, the surrounding code was provided as part of the stack trace; in the TypeScript error, it came from source maps with the source code included. With this additional information, the LLMs could generate code snippets that directly addressed the errors, which also lifted the ratings of the later GPT-4 variants.
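Attaching surrounding code to a stack frame before it goes into a prompt can be sketched as follows. This is a minimal illustration that rests on several assumptions:

- Each frame provides a file path and a 1-based line number, and the source is available as a list of lines.
- The radius of lines on each side and the `>>` marker are arbitrary choices.
- The sample source is hypothetical and only echoes the "Division by zero" case from the table.

It does not describe Raygun’s actual prompt format.

```python
from typing import List


def code_context(source_lines: List[str], line_number: int, radius: int = 3) -> str:
    """Return the lines around a 1-based `line_number`, marking the failing line.

    The window is clamped to the start and end of the file.
    """
    start = max(1, line_number - radius)
    end = min(len(source_lines), line_number + radius)
    out = []
    for n in range(start, end + 1):
        marker = ">>" if n == line_number else "  "
        out.append(f"{marker} {n:4d} | {source_lines[n - 1]}")
    return "\n".join(out)


def frame_with_context(file: str, line_number: int, function: str,
                       source_lines: List[str], radius: int = 3) -> str:
    """Format one Python-style stack frame followed by its code context."""
    header = f'File "{file}", line {line_number}, in {function}'
    return header + "\n" + code_context(source_lines, line_number, radius)


# Hypothetical source echoing the "Division by zero" case in the table.
source = [
    "def average(values):",
    "    total = sum(values)",
    "    return total / len(values)",
    "",
    "print(average([]))",
]
print(frame_with_context("stats.py", 3, "average", source, radius=1))
```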

Performance Insights

Decline in Performance After GPT-4 Turbo

Looking at the scores from GPT-4 Turbo onwards, we see a drop in ratings, especially once we reach GPT-4o. These results are still better than GPT-4’s, and most are better than GPT-3.5 Turbo’s. If we remove the JSON serialization error as an outlier, we can still observe a decline in performance after GPT-4 Turbo. Within this sample, the results indicate that the GPT-4 series peaked with GPT-4 Turbo and declined afterward.
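The outlier check amounts to recomputing each model’s average rating with one error left out. This is a minimal sketch that assumes ratings are stored as hypothetical `(model, error, score)` tuples. It includes no study data.

```python
from collections import defaultdict
from statistics import mean
from typing import Dict, Iterable, Optional, Set, Tuple

Rating = Tuple[str, str, int]  # (model, error, score on the 1-5 scale)


def mean_rating_by_model(ratings: Iterable[Rating],
                         exclude_errors: Optional[Set[str]] = None) -> Dict[str, float]:
    """Average each model's 1-5 scores, optionally dropping outlier errors.

    A model whose ratings are all excluded is omitted from the result.
    """
    exclude_errors = exclude_errors or set()
    by_model = defaultdict(list)
    for model, error, score in ratings:
        if not 1 <= score <= 5:
            raise ValueError(f"score out of range: {score}")
        if error not in exclude_errors:
            by_model[model].append(score)
    return {model: mean(scores) for model, scores in by_model.items()}
```

To check whether a trend survives without the outlier, call the function once with `exclude_errors={"JSON Serialization Error"}` and once without, then compare the two orderings.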

The Importance of Context for Non-Descriptive Stack Traces

The poor performance on the JSON serialization error is likely due to the need for supporting information about the underlying issue. Looking at the stack trace alone, it is difficult to pinpoint the error, as there were multiple possible points of failure. Again, this comes back to including more context, such as source code and variable values, to hint at where the issue could be. One enhancement would be a retrieval-augmented generation (RAG) lookup over the source code, so that the stack trace can be associated with the corresponding code.
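The retrieval half of such a RAG lookup can be sketched with the standard library. A production RAG system would usually rank code chunks by embedding similarity. This sketch swaps in a simpler technique: it scores each chunk by the identifiers it shares with the stack trace. The chunk IDs and the three-character minimum are assumptions, and the best-scoring chunks would then go into the prompt beside the stack trace.

```python
import re
from collections import Counter
from typing import Dict, List, Tuple

# Identifier-like tokens: a letter or underscore followed by word characters.
IDENTIFIER = re.compile(r"[A-Za-z_][A-Za-z0-9_]*")


def identifiers(text: str) -> Counter:
    """Lower-cased identifier counts; very short tokens such as 'at' are skipped."""
    return Counter(
        tok.lower() for tok in IDENTIFIER.findall(text) if len(tok) >= 3
    )


def retrieve(stack_trace: str, chunks: Dict[str, str], k: int = 2) -> List[Tuple[str, int]]:
    """Rank source chunks by the identifiers they share with the stack trace.

    Returns up to k (chunk_id, score) pairs with a positive score, best first.
    """
    trace_ids = identifiers(stack_trace)
    scored = []
    for chunk_id, code in chunks.items():
        shared = trace_ids & identifiers(code)  # multiset intersection (min counts)
        score = sum(shared.values())
        if score > 0:
            scored.append((chunk_id, score))
    scored.sort(key=lambda item: (-item[1], item[0]))
    return scored[:k]
```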

Impact of Response Length on Performance

One theory for this worsening performance in the later models is the increase in response length. These models may fare better on heavier logic-based questions, but longer responses are undesirable in everyday conversation. I’ve encountered this when asking questions about Python libraries, where I wanted a direct answer. Each time, the model would repeat an entire introduction on setting up the library, along with information irrelevant to my question.

If this is the cause, we’d hope to see it corrected in newer models, such as GPT-5 and those from competitors. For now, though, the wordiness of these models is here to stay.

Analytical Assessment: Quantitative Results

Response Time and Content Generation

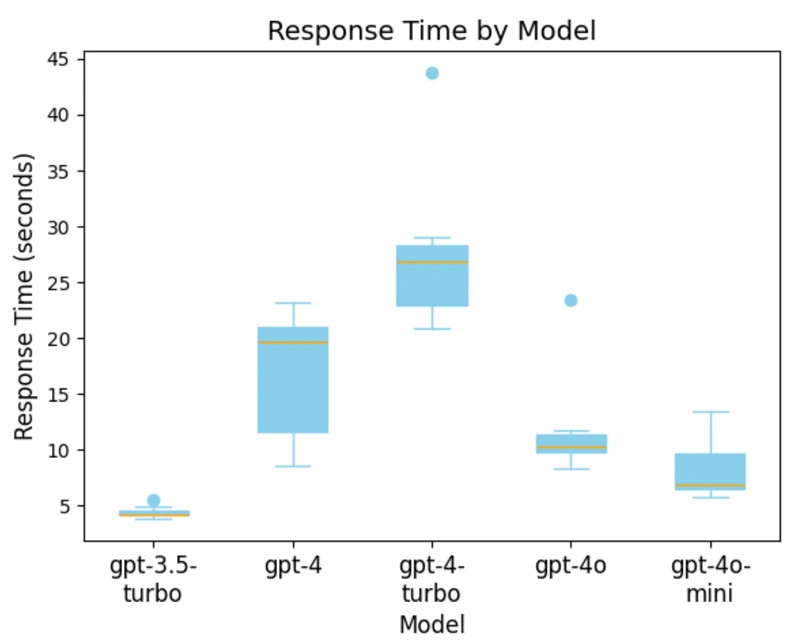

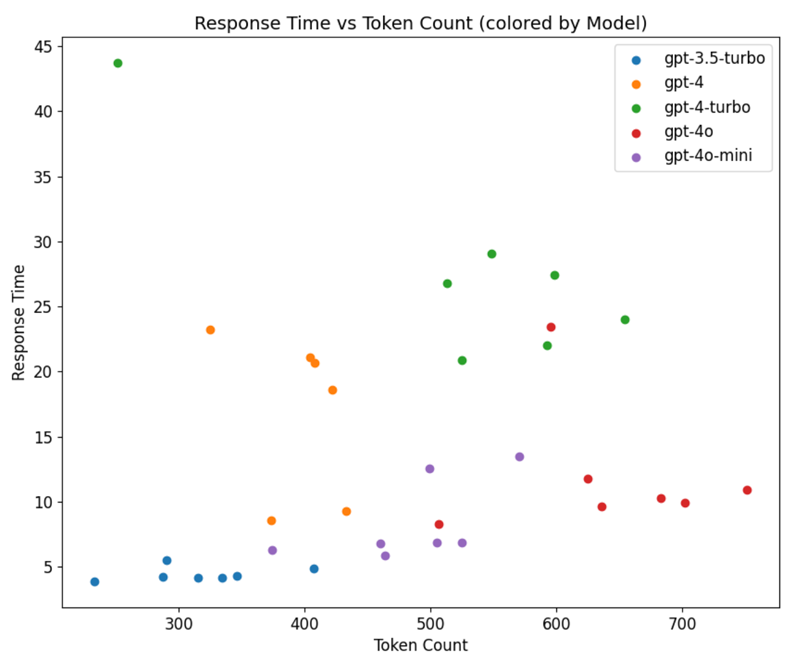

Qualitative evaluation of LLM responses is essential, but response time (generation speed) and the amount of content generated also significantly affect these tools’ usefulness. Below is a graph showing the average response time to create a chat completion for the error-response pairs.

Interestingly, GPT-4 Turbo is the slowest model in average response time to generate a chat completion. This is a surprising result, as the general understanding is that GPT-4 Turbo should be faster than GPT-4.

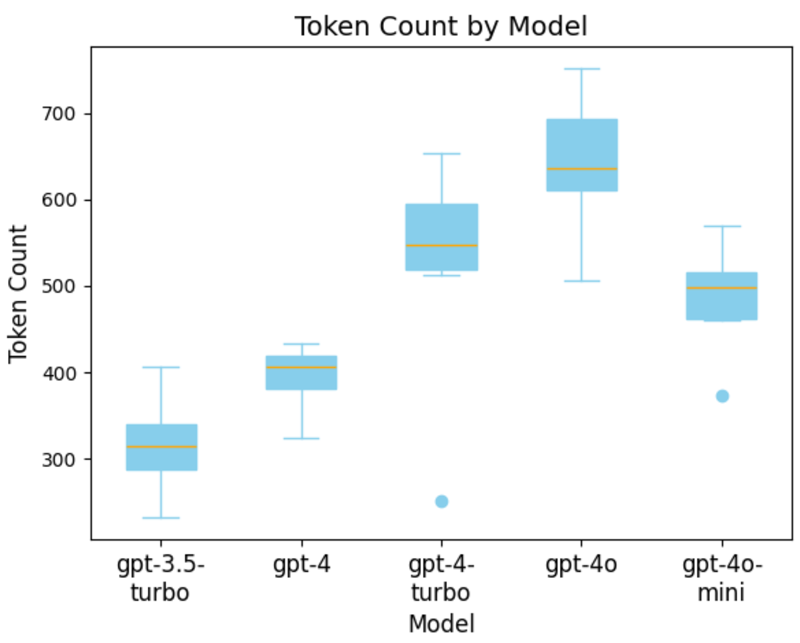

Token Generation and Model Performance

The next graph explains this surprising result by measuring the average number of tokens generated by each model. It shows that GPT-4 Turbo generates significantly more tokens on average than GPT-4. Interestingly, GPT-4o generates the most tokens, yet the previous graph shows it is still considerably faster than GPT-4 Turbo.

We also see that this trend toward more tokens doesn’t continue with OpenAI’s latest model, GPT-4o mini. Its average token count is lower than GPT-4 Turbo’s but remains well above GPT-4’s. The model generating the fewest tokens is GPT-3.5 Turbo. This aligns with the qualitative results, where engineers preferred shorter responses over lengthier ones with no supplemental explanation.

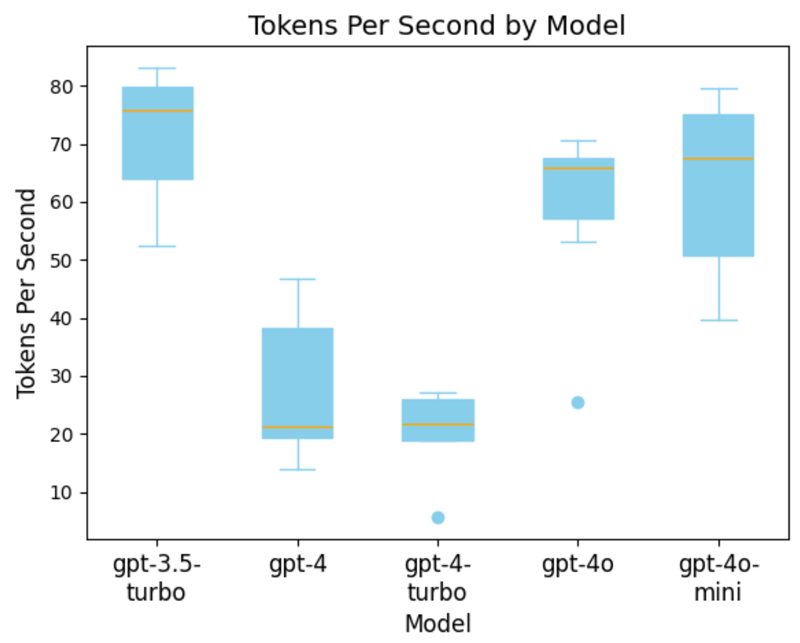

Per-Token Response Time

Having examined response time and average token count by model, we can determine each model’s speed relative to the number of tokens it generates.

Below is a graph showing the per-token response time by model. Here, we see that GPT-4 is faster than GPT-4 Turbo, but this is due to an outlier in the data. Given GPT-4 Turbo’s tendency to generate longer outputs, its overall response time is still longer than GPT-4’s. This may mean that GPT-4 Turbo is a less desirable model when it generates too much content.

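Note: GPT-4o and GPT-4o mini use a different tokenizer (o200k_base) from GPT-3.5 Turbo, GPT-4, and GPT-4 Turbo (cl100k_base), so token counts are not directly comparable across these two groups.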
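The per-token figure is total response time divided by completion tokens, averaged per model. This is a minimal sketch over hypothetical `(model, seconds, tokens)` samples. It reports the median alongside the mean because the median is less sensitive to a single outlier like the one noted above.

```python
from collections import defaultdict
from statistics import mean, median
from typing import Dict, Iterable, Tuple

# (model, total_response_seconds, completion_tokens) for one request
Sample = Tuple[str, float, int]


def per_token_times(samples: Iterable[Sample]) -> Dict[str, Dict[str, float]]:
    """Seconds per generated token for each model, summarized two ways.

    The ratio uses total response time, so fixed overheads such as network
    latency and time to first token are spread over the tokens and weigh
    most heavily on short responses.
    """
    ratios = defaultdict(list)
    for model, seconds, tokens in samples:
        if tokens <= 0:
            continue  # no tokens generated: the ratio is undefined
        ratios[model].append(seconds / tokens)
    return {
        model: {"mean": mean(values), "median": median(values)}
        for model, values in ratios.items()
    }
```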

Comparison of GPT-4o and GPT-4o Mini

Interestingly, the data shows that GPT-4o and GPT-4o mini have similar response speeds, which contrasts with other sources’ findings. This discrepancy suggests that a larger sample size may be needed to reveal a more pronounced difference in their performance. Another explanation is that we compute tokens per second from the total response time. Time To First Token (TTFT) and other network-related bottlenecks therefore skew the values slightly lower.
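The TTFT effect can be shown by computing throughput two ways for one streamed response. With streaming enabled (`stream=True` in the OpenAI API), TTFT is the time until the first content chunk arrives. This sketch assumes those timestamps were recorded. It treats generation speed as the tokens after the first, divided by the time after the first token, which is one common convention. The timings below are made up for illustration.

```python
from typing import Dict


def throughput(total_seconds: float, ttft_seconds: float, tokens: int) -> Dict[str, float]:
    """Two tokens-per-second figures for one streamed response.

    overall: all tokens divided by the total time, which includes the wait
             for the first token (queueing, network, prompt processing).
    decode:  tokens after the first divided by the time after the first
             token arrived, which isolates generation speed.
    """
    if not 0 <= ttft_seconds < total_seconds:
        raise ValueError("expected 0 <= ttft_seconds < total_seconds")
    if tokens < 2:
        raise ValueError("need at least two tokens to measure decode speed")
    return {
        "overall": tokens / total_seconds,
        "decode": (tokens - 1) / (total_seconds - ttft_seconds),
    }


# Made-up timings: 401 tokens in 5 s, with the first token after 1 s.
rates = throughput(total_seconds=5.0, ttft_seconds=1.0, tokens=401)
# rates["overall"] is about 80.2 tok/s; rates["decode"] is 100.0 tok/s
```

When two models have similar decode speeds, a difference in TTFT alone can hide or exaggerate the gap in the overall figure.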

Scaling Patterns

Plotting response time against token count, grouped by model, reveals distinct scaling patterns. For GPT-3.5 Turbo, GPT-4o, and GPT-4o mini, the scaling is mostly linear: an increase in token count leads to a corresponding increase in response time.

However, this pattern doesn’t hold for the larger, older models of the GPT-4 series, where the two variables have no consistent relationship. This inconsistency could be due to a smaller sample size or to fewer resources being dedicated to these requests, resulting in variable response times. The latter explanation seems more likely, given the linear relationship observed in the other models.
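One way to quantify "mostly linear" is an ordinary least-squares fit of response time against token count for each model, with R² as the linearity measure. This is a sketch of that approach, and the original analysis may have judged linearity visually. The slope approximates seconds per extra token, and the intercept approximates fixed overhead.

```python
from typing import Dict, List, Tuple


def fit_scaling(points: List[Tuple[int, float]]) -> Dict[str, float]:
    """Least-squares line of response seconds against completion tokens.

    slope     ~ seconds per additional token
    intercept ~ fixed per-request overhead
    r_squared ~ share of variance explained (1.0 = perfectly linear)
    """
    n = len(points)
    if n < 2:
        raise ValueError("need at least two points")
    xs = [float(t) for t, _ in points]
    ys = [float(s) for _, s in points]
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    syy = sum((y - my) ** 2 for y in ys)
    if sxx == 0:
        raise ValueError("token counts must vary to fit a line")
    slope = sxy / sxx
    intercept = my - slope * mx
    # If every response took the same time, the flat line fits exactly.
    r_squared = (sxy * sxy) / (sxx * syy) if syy > 0 else 1.0
    return {"slope": slope, "intercept": intercept, "r_squared": r_squared}
```

A high `r_squared` would correspond to the linear scaling described for GPT-3.5 Turbo, GPT-4o, and GPT-4o mini. A low value would correspond to the inconsistent relationship seen for the older GPT-4 models.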

GPT-4 Context Limitations

One final finding emerged from generating these error-response pairs. The GPT-4 model is capable, but its context length is significantly limited for tasks requiring long inputs, such as stack traces. As a result, one error-response pair could not be generated, because the combined input and output would exceed the model’s 8192-token context window.
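The arithmetic behind this limit is simple: the prompt tokens and the reserved output tokens share one window. This sketch assumes token counts are already known, for example from a tokenizer such as tiktoken, which is not shown here. The example numbers are illustrative and not from the study.

```python
# Context window of the original gpt-4 model, in tokens. The prompt and the
# completion share this budget.
GPT4_CONTEXT_WINDOW = 8192


def fits_context(prompt_tokens: int, max_output_tokens: int,
                 window: int = GPT4_CONTEXT_WINDOW) -> bool:
    """True if the prompt plus the reserved output budget fits the window."""
    return prompt_tokens + max_output_tokens <= window


def max_output_budget(prompt_tokens: int,
                      window: int = GPT4_CONTEXT_WINDOW) -> int:
    """Tokens left for the response once the prompt is in place (0 if none)."""
    return max(0, window - prompt_tokens)


# Illustrative numbers: a 7,000-token prompt with a 1,500-token reply budget
# needs 8,500 tokens, which does not fit.
print(fits_context(7000, 1500))   # False
print(max_output_budget(7000))    # 1192
```

Checking this before sending a request lets a tool trim the stack trace or pick a larger-context model, instead of failing the way the missing pair did.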

Joint Analysis

Based on the qualitative data, GPT-4 Turbo was the best model for this task in our study. However, the quantitative data adds response time and cost considerations. The new GPT-4o models are significantly faster and considerably cheaper than the other GPT-4 models, which presents a tradeoff. GPT-4 Turbo is the preferred choice if slightly better performance is required. However, if cost and speed are priorities, GPT-4o and GPT-4o mini are better alternatives.
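One way to make this tradeoff explicit is to discard any model that another model beats on rating, speed, and cost at once, keeping only the Pareto-optimal set. This is a sketch of that technique, not the method used in the study. The `ModelStats` fields are placeholders for your own measurements, and no study figures are included.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class ModelStats:
    name: str
    rating: float   # higher is better (e.g. mean 1-5 developer score)
    seconds: float  # lower is better (e.g. mean response time)
    cost: float     # lower is better (e.g. cost per request)


def dominates(a: ModelStats, b: ModelStats) -> bool:
    """a is no worse than b on every axis and strictly better on at least one."""
    no_worse = a.rating >= b.rating and a.seconds <= b.seconds and a.cost <= b.cost
    better = a.rating > b.rating or a.seconds < b.seconds or a.cost < b.cost
    return no_worse and better


def pareto_front(models: List[ModelStats]) -> List[ModelStats]:
    """Models that no other model beats on rating, speed, and cost together."""
    return [m for m in models if not any(dominates(o, m) for o in models if o is not m)]
```

The front only removes clearly inferior options. Choosing between the remaining models still depends on which axis matters most, which is the judgment the paragraph above makes.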

Conclusion

In conclusion, our study provides mixed evidence on the performance of later models. Some newer models, like GPT-4 Turbo and GPT-4o, showed improvements thanks to their ability to include concise code snippets. Others, like GPT-4, fell short because of verbose and less practical responses.

Key Takeaways

- Code snippets matter: Models that provide code snippets along with explanations are more effective and preferred by developers.

- Context is crucial: Adding surrounding code or source maps significantly improves the quality of responses.

- Balance response length: Shorter, more concise responses are generally more helpful than long, verbose ones.

- Evaluate regularly: Continuously assess model performance to make sure you use the most effective tool for your needs.

- Mind context limits: Be aware of context length limitations and plan accordingly.

By focusing on these factors, developers can make better use of LLMs for error resolution, ultimately improving their productivity and the accuracy of their fixes.

Future experiments that could complement this study include a deeper analysis of code generation, as mentioned in the introduction. One possible experiment could take the suggestions from the error resolution and provide additional context to the LLMs. Ideally, if this study were redone, it would help to include a wider variety of errors, including more challenging ones, and to gather ratings from a more diverse set of engineers.