Typically, the knowledge bases over which we build an LLM-based retrieval application contain a lot of data in various formats. To provide the LLM with the most relevant context for answering a question about a specific section of the knowledge base, we rely on chunking the text in the knowledge base and keeping it handy.

Chunking

Chunking is the process of slicing text into meaningful units to improve information retrieval. By ensuring each chunk represents a focused thought or idea, chunking helps maintain the contextual integrity of the content.

In this article, we will look at 3 aspects of chunking:

- How poor chunking leads to less relevant results

- How good chunking leads to better results

- How good chunking with metadata leads to well-contextualized results

To effectively showcase the importance of chunking, we will take the same piece of text, apply 3 different chunking strategies to it, and examine how information is retrieved based on the query.

Chunk and Store to Qdrant

Let us look at the following code, which shows three different ways to chunk the same text.

```python
import qdrant_client
from qdrant_client.models import PointStruct, Distance, VectorParams
import openai
import yaml

# Load configuration
with open('config.yaml', 'r') as file:
    config = yaml.safe_load(file)

# Initialize Qdrant client
client = qdrant_client.QdrantClient(config['qdrant']['url'], api_key=config['qdrant']['api_key'])

# Initialize OpenAI with the API key
openai.api_key = config['openai']['api_key']

def embed_text(text):
    print(f"Generating embedding for: '{text[:50]}'...")  # Show a snippet of the text being embedded
    response = openai.embeddings.create(
        input=[text],  # Input should be a list
        model=config['openai']['model_name']
    )
    embedding = response.data[0].embedding  # Access using the attribute, not as a dictionary
    print(f"Generated embedding of length {len(embedding)}.")  # Confirm embedding generation
    return embedding

# Function to create a collection if it doesn't exist
def create_collection_if_not_exists(collection_name, vector_size):
    collections = client.get_collections().collections
    if collection_name not in [collection.name for collection in collections]:
        client.create_collection(
            collection_name=collection_name,
            vectors_config=VectorParams(size=vector_size, distance=Distance.COSINE)
        )
        print(f"Created collection: {collection_name} with vector size: {vector_size}")  # Collection creation
    else:
        print(f"Collection {collection_name} already exists.")  # Collection existence check

# Text to be chunked, which is flagged for AI and plagiarism but is used only for illustration.
text = """
Artificial intelligence is transforming industries across the globe. One of the key areas where AI is making a significant impact is healthcare. AI is being used to develop new drugs, personalize treatment plans, and even predict patient outcomes. Despite these advancements, there are challenges that must be addressed. The ethical implications of AI in healthcare, data privacy concerns, and the need for proper regulation are all critical issues. As AI continues to evolve, it's crucial that these challenges are not overlooked. By addressing these issues head-on, we can ensure that AI is used in a way that benefits everyone.
"""

# Poor Chunking Strategy
def poor_chunking(text, chunk_size=40):
    chunks = [text[i:i + chunk_size] for i in range(0, len(text), chunk_size)]
    print(f"Poor Chunking produced {len(chunks)} chunks: {chunks}")  # Show chunks produced
    return chunks

# Good Chunking Strategy
def good_chunking(text):
    import re
    # Split after sentence-ending punctuation followed by whitespace
    sentences = re.split(r'(?<=[.!?])\s+', text.strip())
    return sentences
```

The above code does the following:

- The `embed_text` function takes in the text, generates an embedding using the OpenAI embedding model, and returns the generated embedding.

- Initializes a text string that is used for chunking and later content retrieval

- Poor chunking strategy: Splits text into chunks of 40 characters each

- Good chunking strategy: Splits text based on sentences to obtain more meaningful context

- Good chunking strategy with metadata: Adds appropriate metadata to sentence-level chunks

- Once embeddings are generated for the chunks, they are stored in corresponding collections in Qdrant Cloud.
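The metadata-chunking function and the upload loop are not reproduced in the listing above. As a minimal sketch of what the metadata step could look like, the function below splits on sentence boundaries and attaches `source` and `topic` fields in the payload shape that `retrieve_and_print` later reads (`text`, `source`, `topic`). The keyword rule is a hypothetical assumption chosen to match the labels visible in the retrieval output (sentences mentioning "healthcare" get "Healthcare Section" / "AI in Healthcare", everything else "General" / "AI Overview"); the author's actual assignment logic is not shown.

```python
import re

# Hypothetical keyword rule (an assumption, not the article's code): sentences that
# mention healthcare get the healthcare labels; everything else is tagged as general.
HEALTHCARE_META = {"source": "Healthcare Section", "topic": "AI in Healthcare"}
GENERAL_META = {"source": "General", "topic": "AI Overview"}


def good_chunking_with_metadata(text):
    """Split text into sentences and attach source/topic metadata to each one."""
    # Split after '.', '!' or '?' followed by whitespace (a sentence-boundary lookbehind).
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    chunks = []
    for sentence in sentences:
        meta = HEALTHCARE_META if "healthcare" in sentence.lower() else GENERAL_META
        # Payload shape matches what retrieve_and_print() reads: text, source, topic.
        chunks.append({"text": sentence, **meta})
    return chunks


sample = (
    "One of the key areas where AI is making a significant impact is healthcare. "
    "By addressing these issues head-on, we can ensure that AI is used in a way "
    "that benefits everyone."
)
for chunk in good_chunking_with_metadata(sample):
    print(chunk["source"], "|", chunk["topic"], "|", chunk["text"])
```

Each dictionary could then serve as a Qdrant point payload next to the sentence's embedding, for example `PointStruct(id=i, vector=embed_text(chunk["text"]), payload=chunk)` passed to `client.upsert(collection_name=..., points=[...])`.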

Consider the poor chunks are created solely to showcase how poor chunking impacts retrieval.

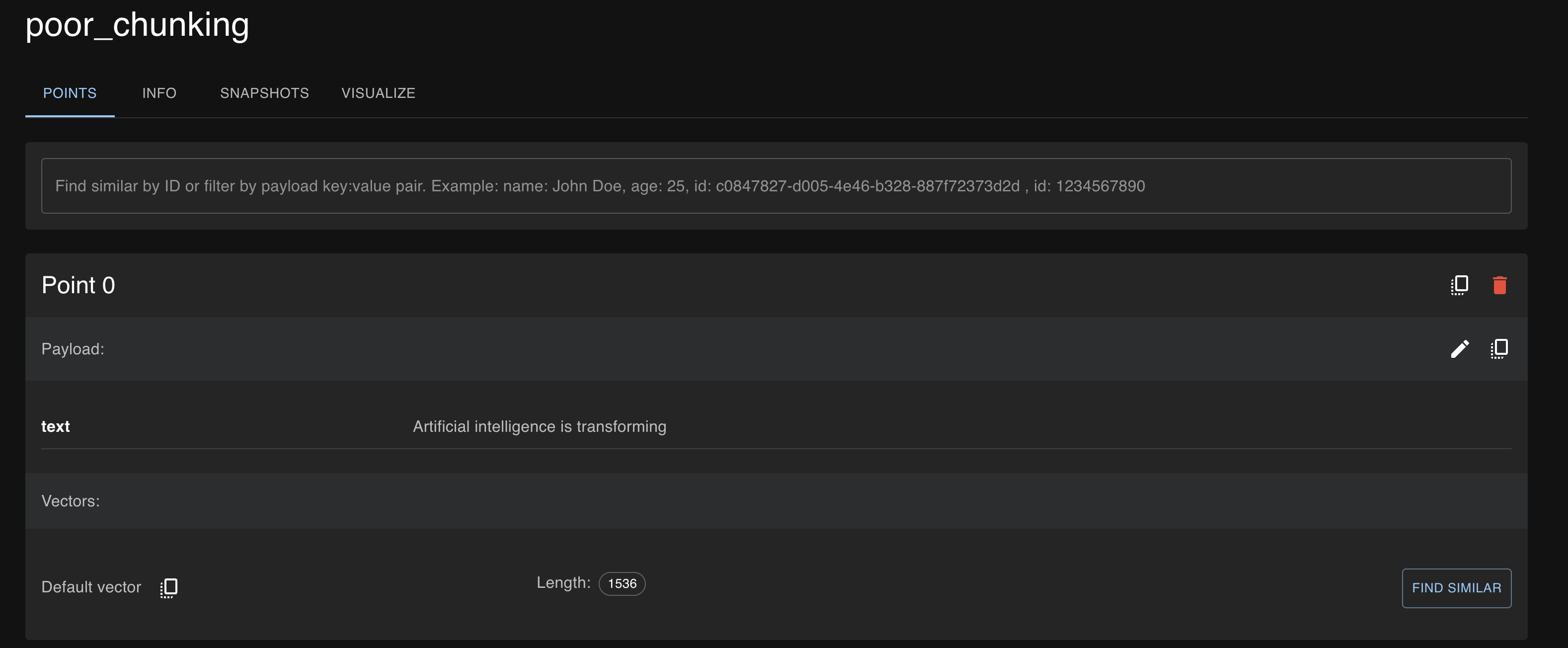

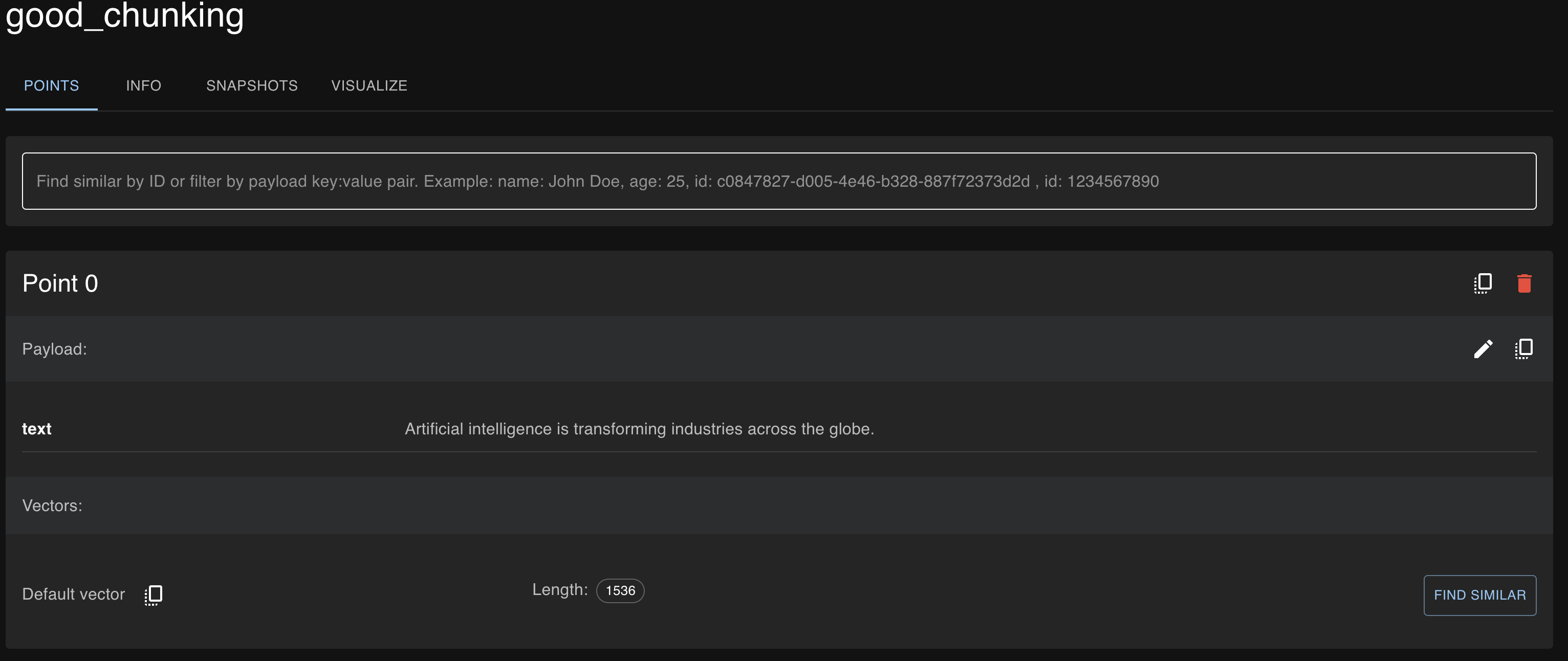

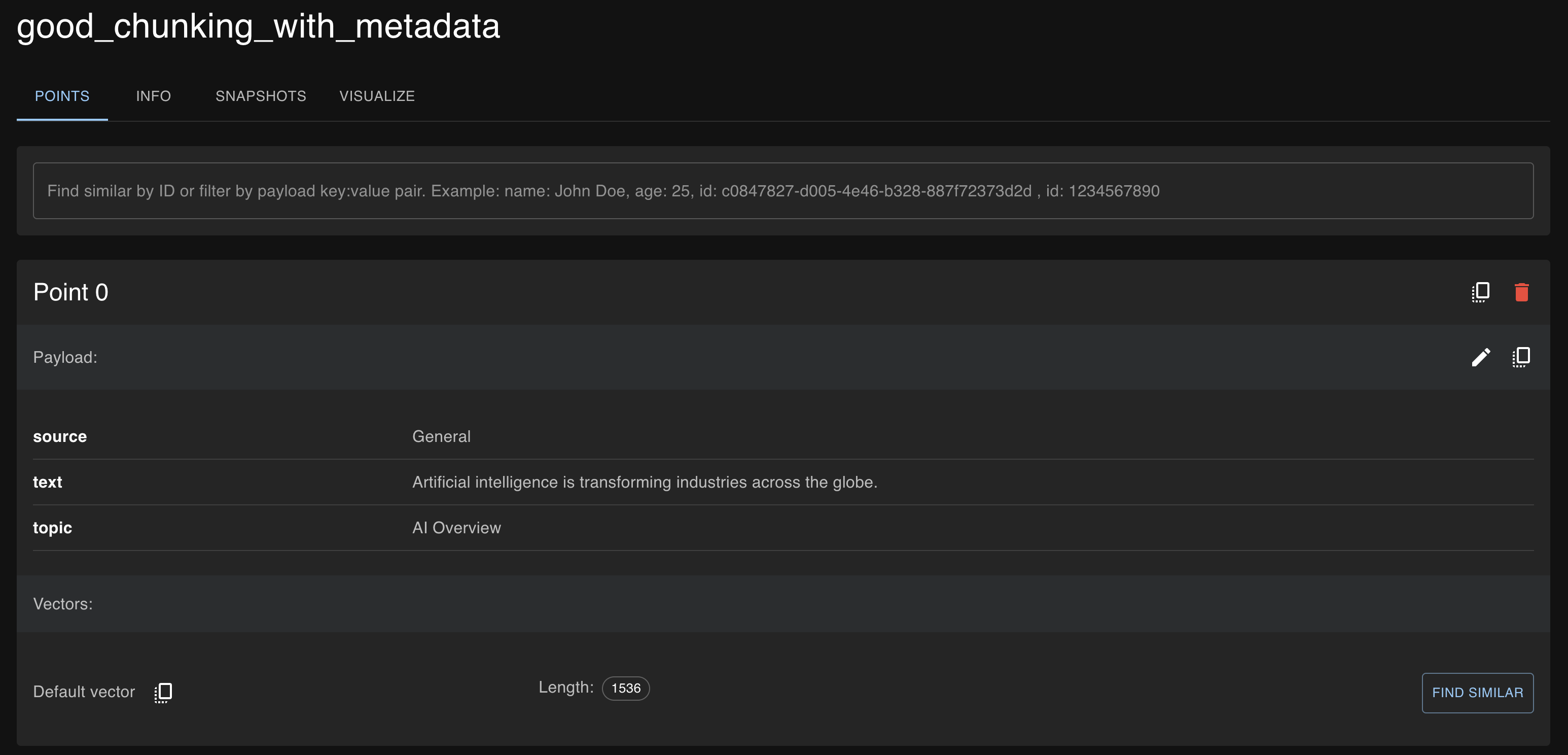

Beneath are the screenshots from Qdrant Cloud for the chunks, the place you possibly can see metadata was added to the sentence-level chunks to point the supply and matter.
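To make the difference concrete without any external services, the sketch below re-implements the 40-character slicing from `poor_chunking` next to a sentence split and applies both to the first three sentences of the sample text. The `sentence_chunking` name is hypothetical, and its regex is an assumption about how a sentence splitter could work. The fourth 40-character slice is the same fragment that comes back as the second result from the `poor_chunking` collection: it starts and ends mid-word.

```python
import re

# The first three sentences of the sample text, including the leading newline
# that the triple-quoted string in the listing starts with.
text = (
    "\nArtificial intelligence is transforming industries across the globe. "
    "One of the key areas where AI is making a significant impact is healthcare. "
    "AI is being used to develop new drugs, personalize treatment plans, "
    "and even predict patient outcomes."
)


def poor_chunking(text, chunk_size=40):
    # Fixed-size slicing: boundaries ignore words and sentences entirely.
    return [text[i:i + chunk_size] for i in range(0, len(text), chunk_size)]


def sentence_chunking(text):
    # Split after sentence-ending punctuation followed by whitespace.
    return re.split(r"(?<=[.!?])\s+", text.strip())


for chunk in poor_chunking(text)[:4]:
    print(repr(chunk))
print(sentence_chunking(text)[1])
```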

Retrieval Results Based on Chunking Strategy

Now let us write some code to retrieve the content from the Qdrant vector DB based on a query.

```python
import qdrant_client
import openai
import yaml

# Load configuration
with open('config.yaml', 'r') as file:
    config = yaml.safe_load(file)

# Initialize Qdrant client
client = qdrant_client.QdrantClient(config['qdrant']['url'], api_key=config['qdrant']['api_key'])

# Initialize OpenAI with the API key
openai.api_key = config['openai']['api_key']

def embed_text(text):
    response = openai.embeddings.create(
        input=[text],  # Ensure input is a list of strings
        model=config['openai']['model_name']
    )
    # Correctly access the embedding data
    embedding = response.data[0].embedding  # Access using the attribute, not as a dictionary
    return embedding

# Define the query
query = "ethical implications of AI in healthcare"
query_embedding = embed_text(query)

# Function to perform retrieval and print results
def retrieve_and_print(collection_name):
    result = client.search(
        collection_name=collection_name,
        query_vector=query_embedding,
        limit=3
    )
    print(f"\nResults from '{collection_name}' collection for the query: '{query}':")
    if not result:
        print("No results found.")
        return
    for idx, res in enumerate(result):
        if 'text' in res.payload and res.payload['text']:
            print(f"Result {idx + 1}:")
            print(f" Text: {res.payload['text']}")
            print(f" Source: {res.payload.get('source', 'N/A')}")
            print(f" Topic: {res.payload.get('topic', 'N/A')}")
        else:
            print(f"Result {idx + 1}:")
            print(" No relevant text found for this chunk. It may be too fragmented or out of context to match the query effectively.")

# Execute retrieval and provide appropriate explanations
retrieve_and_print("poor_chunking")
retrieve_and_print("good_chunking")
retrieve_and_print("good_chunking_with_metadata")
```

The above code does the following:

- Defines a query and generates the embedding for the query

- The search query is set to "ethical implications of AI in healthcare".

- The `retrieve_and_print` function searches the given Qdrant collection and retrieves the top 3 vectors closest to the query embedding.
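Conceptually, `client.search(..., limit=3)` against a collection created with `Distance.COSINE` returns the three stored vectors with the highest cosine similarity to the query vector. The brute-force sketch below shows that ranking on made-up toy vectors; the names `cosine_similarity` and `top_k` are hypothetical, and this is an illustration of the scoring only, not Qdrant's implementation, which uses an approximate HNSW index rather than scanning every point.

```python
import math


def cosine_similarity(a, b):
    # Cosine similarity: dot product divided by the product of the vector norms.
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def top_k(query_vector, points, k=3):
    """Exhaustively score every stored point and return the k most similar."""
    scored = [(cosine_similarity(query_vector, vec), payload) for vec, payload in points]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:k]


# Toy 3-dimensional "embeddings", made up for illustration; real OpenAI
# embeddings have far more dimensions.
points = [
    ([0.9, 0.1, 0.0], {"text": "ethics chunk"}),
    ([0.7, 0.7, 0.0], {"text": "healthcare chunk"}),
    ([0.0, 0.2, 0.9], {"text": "unrelated chunk"}),
    ([0.1, 0.9, 0.1], {"text": "overview chunk"}),
]
query = [1.0, 0.2, 0.0]

for score, payload in top_k(query, points, k=3):
    print(f"{score:.3f} {payload['text']}")
```

Because only the top `k` survive, a chunk that is a fragment of the right sentence can still outrank nothing better, which is why the quality of what gets stored matters as much as the search itself.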

Now let us look at the output:

```
python retrieval_test.py

Results from 'poor_chunking' collection for the query: 'ethical implications of AI in healthcare':
Result 1:
 Text: . The ethical implications of AI in heal
 Source: N/A
 Topic: N/A
Result 2:
 Text: ant impact is healthcare. AI is being us
 Source: N/A
 Topic: N/A
Result 3:
 Text: 
Artificial intelligence is transforming
 Source: N/A
 Topic: N/A

Results from 'good_chunking' collection for the query: 'ethical implications of AI in healthcare':
Result 1:
 Text: The ethical implications of AI in healthcare, data privacy concerns, and the need for proper regulation are all critical issues.
 Source: N/A
 Topic: N/A
Result 2:
 Text: One of the key areas where AI is making a significant impact is healthcare.
 Source: N/A
 Topic: N/A
Result 3:
 Text: By addressing these issues head-on, we can ensure that AI is used in a way that benefits everyone.
 Source: N/A
 Topic: N/A

Results from 'good_chunking_with_metadata' collection for the query: 'ethical implications of AI in healthcare':
Result 1:
 Text: The ethical implications of AI in healthcare, data privacy concerns, and the need for proper regulation are all critical issues.
 Source: Healthcare Section
 Topic: AI in Healthcare
Result 2:
 Text: One of the key areas where AI is making a significant impact is healthcare.
 Source: Healthcare Section
 Topic: AI in Healthcare
Result 3:
 Text: By addressing these issues head-on, we can ensure that AI is used in a way that benefits everyone.
 Source: General
 Topic: AI Overview
```

The output for the same search query varies depending on the chunking strategy used.

- Poor chunking strategy: As you can see, the results here are less relevant, because the text was split into small, arbitrary chunks that cut through words and sentences.

- Good chunking strategy: The results here are more relevant because the text was split into sentences, preserving the semantic meaning.

- Good chunking strategy with metadata: The same sentences are retrieved in the same order, but each one now carries source and topic metadata, so these results are the best contextualized of the three.

Inferences From the Experiment

- Chunking should be carefully strategized, and the chunk size should be neither too small nor too large.

- Poor chunking happens when chunks are too small, cutting off sentences in unnatural places, or too large, with multiple topics packed into the same chunk, which makes retrieval very confusing.

- The whole idea of chunking revolves around providing better context to the LLM.

- Metadata greatly enhances properly structured chunking by providing extra layers of context. For example, we have added source and topic as metadata elements to our chunks.

- The retrieval system benefits from this additional information. For example, if the metadata indicates that a chunk belongs to the “Healthcare Section,” the system can prioritize those chunks when a healthcare-related query is made.

- With better chunking, the results can be structured and categorized. If the query matches multiple contexts within the same text, we can identify which context or section the information belongs to by looking at the chunks' metadata.
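As a minimal sketch of the prioritization idea, assuming results arrive as `(score, payload)` pairs carrying the `source` field used in this article, a re-ranker could add a fixed bonus to chunks whose `source` matches a preferred section. The function name `prioritize_by_source` and the `boost` value are hypothetical heuristics, not a Qdrant feature. Qdrant can instead restrict a search to matching payloads with a filter (a `FieldCondition` on `source` passed as `query_filter`), which excludes non-matching chunks rather than merely demoting them.

```python
def prioritize_by_source(results, preferred_source, boost=0.1):
    """Re-rank (score, payload) pairs, nudging chunks from the preferred source upward.

    The additive boost is a hypothetical heuristic; original scores are left unchanged
    in the returned pairs, only their order changes.
    """
    def adjusted(pair):
        score, payload = pair
        bonus = boost if payload.get("source") == preferred_source else 0.0
        return score + bonus

    return sorted(results, key=adjusted, reverse=True)


# Made-up scores for illustration; the payloads mirror the metadata shown in the output above.
results = [
    (0.80, {"text": "By addressing these issues head-on...", "source": "General"}),
    (0.78, {"text": "One of the key areas where AI...", "source": "Healthcare Section"}),
]

for score, payload in prioritize_by_source(results, "Healthcare Section"):
    print(payload["source"], "|", payload["text"])
```

A soft boost like this keeps general chunks available as fallback context, whereas a hard filter is the better fit when the query's section is known with certainty.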

Keep these strategies in mind and chunk your way to success in LLM-based search applications.