An SQL database must handle many incoming connections concurrently to maintain optimal system performance. The expectation is that the database can accept and process numerous requests in parallel. This is straightforward when different requests target separate data, such as one request reading from Table 1 and another from Table 2. However, complications arise when multiple requests read from and write to the same table. How can we keep performance high and still avoid consistency issues? Read on to learn how things work in SQL databases and distributed systems.

Transactions and Issues

An SQL transaction is a set of queries (such as SELECT, INSERT, UPDATE, and DELETE) sent to the database to be executed as a single unit of work. This means that either all queries in the transaction must be executed, or none should be. However, executing a transaction is not instantaneous; it takes time. For example, a single UPDATE statement might modify multiple rows, and the database system needs time to update each row. During this update process, another transaction might start and attempt to read the modified rows. This raises the question: should the other transaction read the new values of the rows (even if not all rows are updated yet), the old values of the rows (even if some rows have already been updated), or should it wait? What happens if the first transaction must be canceled later for any reason? How should this affect the other transaction?

ACID

Transactions in SQL databases must adhere to ACID. They must be:

- Atomic: each transaction is either completed or reverted entirely. The transaction cannot modify only some records and fail for others.

- Consistent: modified entities must still meet all consistency requirements. Foreign keys must point to existing entities, values must meet the data type specifications, and other checks must still pass.

- Isolated: transactions must interact with each other according to the isolation levels they specify.

- Durable: once the transaction is confirmed to be committed, the changes must persist even in the face of software issues and hardware failures.
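Atomicity is easy to observe with Python's built-in `sqlite3` module, used here purely as a convenient stand-in for an SQL engine. In this minimal sketch, the People table, its CHECK constraint, and the `move_salary` helper are illustrative assumptions: the second UPDATE violates the constraint, so the first UPDATE is undone as well.

```python
import sqlite3


def build_db() -> sqlite3.Connection:
    """Create an in-memory People table (hypothetical schema with a CHECK constraint)."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE People (id INTEGER PRIMARY KEY, name TEXT, "
        "salary INTEGER CHECK (salary >= 0))"
    )
    conn.executemany(
        "INSERT INTO People VALUES (?, ?, ?)",
        [(1, "John", 150), (2, "Jack", 200)],
    )
    conn.commit()
    return conn


def move_salary(conn, src, dst, amount) -> bool:
    """Move `amount` from one row to another as a single unit of work."""
    try:
        # The connection context manager commits on success and rolls back on an exception.
        with conn:
            conn.execute("UPDATE People SET salary = salary + ? WHERE id = ?", (amount, dst))
            conn.execute("UPDATE People SET salary = salary - ? WHERE id = ?", (amount, src))
        return True
    except sqlite3.IntegrityError:
        return False


conn = build_db()
ok = move_salary(conn, src=2, dst=1, amount=300)  # Jack's salary would become negative
salaries = dict(conn.execute("SELECT id, salary FROM People"))
print(ok, salaries)  # False {1: 150, 2: 200}
```

Even though the first UPDATE succeeded on its own, the rollback restores John's salary, so no partial change is visible.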

Before explaining what isolation levels are, we need to understand what issues we may face in SQL databases (and distributed systems in general).

Read Phenomena

Depending on how we control concurrency in the database, different read phenomena may appear. The SQL-92 standard defines three read phenomena describing various issues that may occur when two transactions are executed concurrently with no transaction isolation in place.

We'll use the following People table for the examples:

| id | name | salary |
|----|------|--------|
| 1 | John | 150 |
| 2 | Jack | 200 |

Dirty Read

When two transactions access the same data and we allow reading values that are not yet committed, we may get a dirty read. Let's say that we have two transactions doing the following:

| Transaction 1 | Transaction 2 |
|---|---|
| | UPDATE People SET salary = 180 WHERE id = 1 |
| SELECT salary FROM People WHERE id = 1 | |
| | ROLLBACK |

Transaction 2 modifies the row with id = 1, then Transaction 1 reads the row and gets a value of 180, and then Transaction 2 rolls back. Effectively, Transaction 1 uses a value that never existed in the database. What we would expect here is that Transaction 1 uses values that were successfully committed to the database at some point in time.
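The difference between seeing in-flight values and seeing only committed ones can be captured in a toy model. This Python sketch is hypothetical: `ToyStore` keeps committed values plus the pending writes of a single open writer, and the `level` argument merely selects which of the two a reader may see.

```python
class ToyStore:
    """Hypothetical in-memory table model: committed values plus one writer's pending changes."""

    def __init__(self, rows):
        self.committed = dict(rows)
        self.pending = {}  # uncommitted writes of the (single) open writing transaction

    def write(self, key, value):
        self.pending[key] = value

    def commit(self):
        self.committed.update(self.pending)
        self.pending.clear()

    def rollback(self):
        self.pending.clear()

    def read(self, key, level):
        # READ UNCOMMITTED may see in-flight values; READ COMMITTED sees committed data only.
        if level == "READ UNCOMMITTED" and key in self.pending:
            return self.pending[key]
        return self.committed[key]


store = ToyStore({1: 150, 2: 200})
store.write(1, 180)                          # Transaction 2: UPDATE People SET salary = 180 WHERE id = 1
dirty = store.read(1, "READ UNCOMMITTED")    # Transaction 1 sees 180
clean = store.read(1, "READ COMMITTED")      # Transaction 1 sees 150
store.rollback()                             # Transaction 2: ROLLBACK
print(dirty, clean, store.read(1, "READ UNCOMMITTED"))  # 180 150 150
```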

Non-Repeatable Read

A non-repeatable read occurs when a transaction reads the same row twice and gets different results each time. Let's say the transactions do the following:

| Transaction 1 | Transaction 2 |
|---|---|
| SELECT salary FROM People WHERE id = 1 | |
| | UPDATE People SET salary = 180 WHERE id = 1 |
| | COMMIT |
| SELECT salary FROM People WHERE id = 1 | |

Transaction 1 reads a row and gets a value of 150. Transaction 2 modifies the same row and commits. Then Transaction 1 reads the row again and gets a different value (180 this time).

What we would expect here is to read the same value twice.

Phantom Read

A phantom read occurs when a transaction looks for rows the same way twice but gets different results. Consider the following:

| Transaction 1 | Transaction 2 |
|---|---|
| SELECT * FROM People WHERE salary | |
| | INSERT INTO People (id, name, salary) VALUES (3, 'Jacob', 120) |
| | COMMIT |
| SELECT * FROM People WHERE salary | |

Transaction 1 reads rows and finds two of them matching the condition. Transaction 2 adds another row that matches the condition used by Transaction 1. When Transaction 1 reads again, it gets a different set of rows. We would expect both SELECT statements of Transaction 1 to return the same rows.
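The phantom can be reproduced with plain Python data structures. This sketch assumes a hypothetical predicate `salary < 250`, which matches both original rows and the new one. It contrasts re-reading the latest committed rows with re-reading a copy taken before the insert, which is roughly how snapshot-based engines keep the second read stable.

```python
def select_ids(table, predicate):
    """Return the ids of rows whose salary satisfies `predicate`, like SELECT ... WHERE."""
    return sorted(row_id for row_id, (_, salary) in table.items() if predicate(salary))


def below_250(salary):
    return salary < 250  # hypothetical condition; matches John, Jack, and Jacob


people = {1: ("John", 150), 2: ("Jack", 200)}

first = select_ids(people, below_250)      # Transaction 1, first SELECT
snapshot = dict(people)                    # a copy taken when Transaction 1 started
people[3] = ("Jacob", 120)                 # Transaction 2: INSERT ... and COMMIT
latest = select_ids(people, below_250)     # re-read the latest committed rows
repeat = select_ids(snapshot, below_250)   # re-read from the snapshot

print(first, latest, repeat)  # [1, 2] [1, 2, 3] [1, 2]
```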

Consistency Issues

Apart from read phenomena, we may face other problems that are not defined in the SQL standard and are often neglected in the SQL world. However, they are very common once we scale out the database or start using read replicas. They are all related to consistency models, which we will cover later.

Dirty Writes

Two transactions may modify elements independently, which can lead to a final state that matches neither of the transactions.

| Transaction 1 | Transaction 2 |
|---|---|
| UPDATE People SET salary = 180 WHERE id = 1 | |
| | UPDATE People SET salary = 130 WHERE id = 1 |
| | UPDATE People SET salary = 230 WHERE id = 2 |
| | COMMIT |
| UPDATE People SET salary = 280 WHERE id = 2 | |
| COMMIT | |

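After executing the transactions, we end up with values 130 and 280. They are inconsistent: the row with id = 1 holds Transaction 2's value while the row with id = 2 holds Transaction 1's value, so the final state comes from neither transaction.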
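Replaying the interleaving in a toy model makes the problem visible. This Python sketch assumes no write locks at all (each write simply overwrites the row) and compares the result with both serial orders. The helper names are illustrative.

```python
def apply(schedule, initial):
    """Apply (transaction, row id, salary) writes in order with no write locks; return the table."""
    table = dict(initial)
    for _txn, row_id, salary in schedule:
        table[row_id] = salary
    return table


initial = {1: 150, 2: 200}
# The interleaving from the table above; both transactions end up committing.
interleaved = [("T1", 1, 180), ("T2", 1, 130), ("T2", 2, 230), ("T1", 2, 280)]


def only(txn):
    return [step for step in interleaved if step[0] == txn]


serial_outcomes = [
    apply(only("T1") + only("T2"), initial),  # T1 then T2 -> {1: 130, 2: 230}
    apply(only("T2") + only("T1"), initial),  # T2 then T1 -> {1: 180, 2: 280}
]
result = apply(interleaved, initial)
print(result, result in serial_outcomes)  # {1: 130, 2: 280} False
```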

Outdated Versions

A read transaction may return a value that was written in the past but is not the latest value.

| Transaction 1 | Transaction 2 | Transaction 3 |
|---|---|---|
| UPDATE People SET salary = 180 WHERE id = 1 | | |
| COMMIT | | |
| | SELECT * FROM People WHERE id = 1 | |
| | | SELECT * FROM People WHERE id = 1 |

In this example, Transaction 2 and Transaction 3 may be issued against secondary nodes of the system that do not have the latest changes yet. If that's the case, Transaction 2 may return either 150 or 180. The same goes for Transaction 3. It's perfectly valid for Transaction 2 to return 180 (which is expected) and then for Transaction 3 to read 150.

Delayed Writes

Imagine that we have the following transactions:

| Transaction | Statement |
|---|---|
| Client 1, Transaction 1 | UPDATE People SET salary = 180 WHERE id = 1 |
| Client 1, Transaction 1 | COMMIT |
| Client 1, Transaction 2 | SELECT * FROM People WHERE id = 1 |

We would expect Transaction 2 to return the value that we wrote in the previous transaction, so Transaction 2 should return 180. However, Transaction 2 may be executed against a read replica of the main database. If we don't have the Read Your Writes property, the transaction may return 150.

Non-Monotonic Reads

Another issue we may have concerns the freshness of the data. Let's take the following example:

| Transaction | Statement |
|---|---|
| Client 1, Transaction 1 | UPDATE People SET salary = 180 WHERE id = 1 |
| Client 1, Transaction 1 | COMMIT |
| Client 1, Transaction 2 | SELECT * FROM People WHERE id = 1 |
| Client 1, Transaction 3 | SELECT * FROM People WHERE id = 1 |

Transaction 2 may return the latest value (180 in this case), and then Transaction 3 may return an older value (150 in this case).
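A common way to provide Read Your Writes and Monotonic Reads on top of lagging replicas is for the client session to remember the newest log position it has written or read, and to accept reads only from replicas that have caught up to it. The sketch below is a toy model of that idea: the `Replica` and `Session` classes and their version numbers are hypothetical, not any particular database's API.

```python
from dataclasses import dataclass


@dataclass
class Replica:
    """Toy replica: the salary of row id = 1 and the log version it has applied."""
    name: str
    applied_version: int
    salary: int


class Session:
    """Hypothetical client session tracking the newest version it has written or read."""

    def __init__(self):
        self.min_version = 0

    def note_write(self, version):
        self.min_version = max(self.min_version, version)

    def read(self, replicas):
        # Skip replicas older than anything this session has already seen or written.
        for replica in replicas:
            if replica.applied_version >= self.min_version:
                self.min_version = replica.applied_version
                return replica.salary
        raise RuntimeError("no replica is fresh enough; read from the primary or wait")


fresh = Replica("replica-a", applied_version=1, salary=180)  # has applied the UPDATE
stale = Replica("replica-b", applied_version=0, salary=150)  # still lagging

# Without session tracking, reading fresh then stale goes "back in time": 180, then 150.
naive = [fresh.salary, stale.salary]

session = Session()
session.note_write(1)  # the client committed version 1 (salary = 180)
print(naive, session.read([stale, fresh]))  # [180, 150] 180
```

Refusing the stale replica trades some availability for the guarantee: if no replica has caught up, the session must go to the primary or wait.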

Transaction Isolation Levels

To combat read phenomena, the SQL-92 standard defines isolation levels that specify which read phenomena can occur.

There are four standard levels: READ UNCOMMITTED, READ COMMITTED, REPEATABLE READ, and SERIALIZABLE.

READ UNCOMMITTED allows a transaction to read data that is not yet committed to the database. This allows for the highest performance, but it also permits the most undesired read phenomena.

READ COMMITTED allows a transaction to read only data that is committed. This avoids the issue of reading data that "later disappears" but doesn't protect against the other read phenomena.

The REPEATABLE READ level additionally avoids the issue of reading the same data twice and getting different results.

Finally, SERIALIZABLE is meant to avoid all read phenomena.

The following table shows which phenomena are allowed at each level:

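| Level / Phenomenon | Dirty read | Non-repeatable read | Phantom read |
|---|---|---|---|
| READ UNCOMMITTED | Allowed | Allowed | Allowed |
| READ COMMITTED | Prevented | Allowed | Allowed |
| REPEATABLE READ | Prevented | Prevented | Allowed |
| SERIALIZABLE | Prevented | Prevented | Prevented |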

The isolation level is defined per transaction. For example, one transaction may run at the SERIALIZABLE level while another runs at READ UNCOMMITTED.

Interestingly, there is no requirement for the various isolation levels to be implemented separately in an SQL database. It's acceptable if all isolation levels are implemented as SERIALIZABLE, because a stricter level may always stand in for a weaker one.

The isolation levels protect us from some of the issues described earlier. Before moving on, we need to understand how they are implemented.

Locks, Latches, and Deadlock Detection

Locks are synchronization mechanisms used to protect shared memory from corruption when an update cannot be performed as a single atomic operation. To understand this in practice, consider how CPUs interact with memory, which is divided into cells of various sizes.

CPU Internals

A typical CPU accesses memory in fixed-size units, usually called machine words. The size of these units depends on the CPU architecture and the specific operation, with some CPUs handling as little as 8 bits and others up to 512 bits at once. Most modern CPUs, like those based on the widely used Intel 64 architecture, operate with a native data size of 64 bits, using 64-bit registers for most operations.

In practice, this means a CPU cannot write more than 64 bits in a single general-purpose operation. Writing 512 bits, for example, requires eight separate 64-bit writes. While some CPUs can perform these writes in parallel, they usually execute them sequentially. This limitation becomes significant when modifying data structures larger than 64 bits, as the CPU cannot update them in a single step. With multi-core CPUs, another core might start reading the structure before it is fully modified, risking data corruption. To prevent this, we need to mark the structure as "unavailable" to other CPU cores until the modification is complete. This is where locks come in.

In the context of SQL, memory structures like tables, rows, pages, and the database itself are usually much larger than 64 bits. Additionally, a single SQL operation may update multiple rows at once, which is far beyond what the CPU can do in a single operation. Hence, locks are necessary to ensure that data modifications happen without compromising data consistency.
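The idea of marking a multi-word structure as unavailable can be sketched with Python's `threading.Lock`. In this illustration, the `Pair` class is hypothetical: its two fields must always sum to 100, each update changes both fields in two separate steps, and readers take the same lock so they never observe the half-finished state in between.

```python
import threading


class Pair:
    """Two fields that must always sum to 100, like a structure wider than one machine word."""

    def __init__(self):
        self.left, self.right = 50, 50
        self.lock = threading.Lock()

    def move(self, amount):
        with self.lock:           # mark the structure "unavailable" to other threads
            self.left -= amount   # first write...
            self.right += amount  # ...second write; the invariant is broken in between

    def snapshot(self):
        with self.lock:           # readers take the same lock, so they never see a half-done update
            return self.left, self.right


pair = Pair()
violations = []


def writer():
    for i in range(10_000):
        pair.move(1 if i % 2 == 0 else -1)


def reader():
    for _ in range(10_000):
        left, right = pair.snapshot()
        if left + right != 100:
            violations.append((left, right))


threads = [threading.Thread(target=writer), threading.Thread(target=reader)]
for t in threads:
    t.start()
for t in threads:
    t.join()
print(len(violations), pair.snapshot())  # 0 (50, 50)
```

Removing the `with self.lock:` lines can, non-deterministically, let the reader observe a sum other than 100.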

Locks

The types of locks available depend on the SQL engine in use, but many databases adopt a similar approach.

Each lock can be acquired in a specific mode that dictates what other transactions are allowed to do with the lock. Some common lock modes are:

- Exclusive: only the transaction that acquires the lock can use it, and other transactions cannot acquire the lock. Used when modifying data (DELETE, INSERT, UPDATE). Typically acquired at the page or row level.

- Shared: a read lock that multiple transactions can hold at the same time while reading data. Often used as a preliminary step before converting to a lock that permits modifications.

- Update: indicates an upcoming update. Once the owner is ready to modify the data, the lock is converted to an Exclusive lock.

- Intent: indicates a lock on a subpart of the locked structure. For example, an intent lock on a table signals that a row in this table is also locked. It prevents others from taking exclusive locks on the whole structure. Suppose transaction A wants to modify a row in a table, so it takes an exclusive lock on the row. If transaction B then wants to modify multiple rows and takes an exclusive lock on the table, a conflict arises. If A first places an intent exclusive lock on the table, B's request for an exclusive table lock conflicts with it immediately, so the engine does not need to inspect every row to find A's lock. Types of intent locks include intent exclusive, intent shared, intent update, shared with intent exclusive, shared with intent update, and update with intent exclusive.

- Schema: locks for schema changes. The SQL engine needs time to prepare a schema change, so other transactions must be prevented from modifying the schema, although they can still read or change data.

- Bulk Update: a lock used for bulk import operations.

Locks can be applied to various objects in the hierarchy:

- Database: typically involves a shared lock.

- Table: usually takes an intent lock or an exclusive lock.

- Page: a fixed-size block holding a group of rows, typically taking an intent lock.

- Row: can take a shared, exclusive, or update lock.

Locks can also escalate from rows to pages to tables, or be converted to different types. For more detailed information, consult your SQL engine's documentation.
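Lock modes only make sense together with the rules for which modes can coexist. The sketch below encodes a compatibility matrix modeled on the one SQL Server documents for its IS (intent shared), S (shared), U (update), IX (intent exclusive), SIX (shared with intent exclusive), and X (exclusive) modes. Other engines use different modes and rules, and `can_grant` is an illustrative helper, not an engine API.

```python
# For each mode already granted to another transaction, the set of modes
# that may still be granted on the same resource (modeled on SQL Server's matrix).
COMPATIBLE = {
    "IS": {"IS", "S", "U", "IX", "SIX"},
    "S": {"IS", "S", "U"},
    "U": {"IS", "S"},
    "IX": {"IS", "IX"},
    "SIX": {"IS"},
    "X": set(),
}


def can_grant(requested, held_by_others):
    """True if `requested` is compatible with every mode other transactions hold."""
    return all(requested in COMPATIBLE[held] for held in held_by_others)


# Many readers can share a row or table.
print(can_grant("S", ["S", "IS"]))  # True
# Only one transaction at a time may announce an upcoming update.
print(can_grant("U", ["U"]))        # False
# Transaction A holds IX on the table (plus X on one row), so B cannot lock the whole table...
print(can_grant("X", ["IX"]))       # False
# ...but another transaction can still work on a different row under its own IX.
print(can_grant("IX", ["IX"]))      # True
```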

Latches

In addition to logical locks that protect the actual data stored in the database, the SQL engine must also safeguard its internal data structures. These protective mechanisms are usually called latches. The engine uses latches when reading and writing memory pages, as well as during I/O and buffer operations.

Similar to locks, latches come in various types and modes, including:

- Shared: used for reading a page.

- Keep: used to keep the page in the buffer, preventing it from being disposed of.

- Update: similar to an update lock, indicating a pending update.

- Exclusive: similar to exclusive locks, preventing other latches from being acquired.

- Destroy: acquired when a buffer is to be removed from the cache.

Latches are typically managed entirely by the SQL engine (sometimes called the SQL Operating System) and cannot be controlled by users. They are acquired and released automatically. However, by observing latches, users can identify where and why performance degrades.

Isolation Levels

Now, let's explore how isolation levels affect lock acquisition. Different isolation levels require different types of locks.

Consider the READ COMMITTED isolation level. According to the SQL-92 standard, this level disallows only dirty reads, meaning a transaction cannot read uncommitted data. Here's how a transaction modifies a row if the engine uses Strict Two-Phase Locking (as MS SQL does, for instance):

- The row is locked with an exclusive lock. No other transaction can now lock this row. This is Phase 1.

- The data is modified.

- Either the transaction is committed, or it is rolled back and the row is restored to its original version.

- The lock is released. This is Phase 2.
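The four steps above can be sketched in Python under stated assumptions: `LockManager` and `Transaction` are hypothetical classes, only exclusive row locks exist, and a blocked request raises an error instead of waiting in a queue as a real engine would. What the sketch does show is the strict part of Strict 2PL: locks are acquired as the transaction runs, and all of them are released together at commit or rollback.

```python
import threading


class LockManager:
    """Hypothetical exclusive-only lock table: row id -> owning transaction."""

    def __init__(self):
        self._owners = {}
        self._mutex = threading.Lock()  # protects the lock table itself

    def try_lock(self, txn, row_id):
        with self._mutex:
            return self._owners.setdefault(row_id, txn) == txn

    def release_all(self, txn):
        with self._mutex:
            for row_id in [r for r, owner in self._owners.items() if owner == txn]:
                del self._owners[row_id]

    def held(self):
        with self._mutex:
            return dict(self._owners)


class Transaction:
    def __init__(self, name, table, locks):
        self.name, self.table, self.locks = name, table, locks
        self.undo = {}  # original values, restored on rollback

    def update(self, row_id, value):
        # Phase 1: acquire the exclusive lock before touching the row.
        if not self.locks.try_lock(self.name, row_id):
            raise RuntimeError(f"{self.name} must wait for row {row_id}")
        self.undo.setdefault(row_id, self.table[row_id])
        self.table[row_id] = value

    def commit(self):
        self.undo.clear()
        self.locks.release_all(self.name)  # Phase 2: release every lock at once

    def rollback(self):
        self.table.update(self.undo)  # restore the original versions first
        self.undo.clear()
        self.locks.release_all(self.name)


table = {1: 150, 2: 200}
locks = LockManager()
t1, t2 = Transaction("T1", table, locks), Transaction("T2", table, locks)

t1.update(1, 180)
try:
    t2.update(1, 130)  # T1 still holds the exclusive lock on row 1
except RuntimeError as err:
    print(err)         # T2 must wait for row 1
t1.rollback()          # row 1 is back to 150 and its lock is free
t2.update(1, 130)
t2.commit()
print(table, locks.held())  # {1: 130, 2: 200} {}
```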

Here's how it works when scanning a table:

- The first row is locked with a read lock. No other transaction can now modify the row, as it cannot acquire an exclusive lock.

- The data is read and processed. Since the row is protected by a lock, we are sure the data was committed at some point.

- The lock is released.

- The second row is locked with a read lock.

- This process continues row by row.

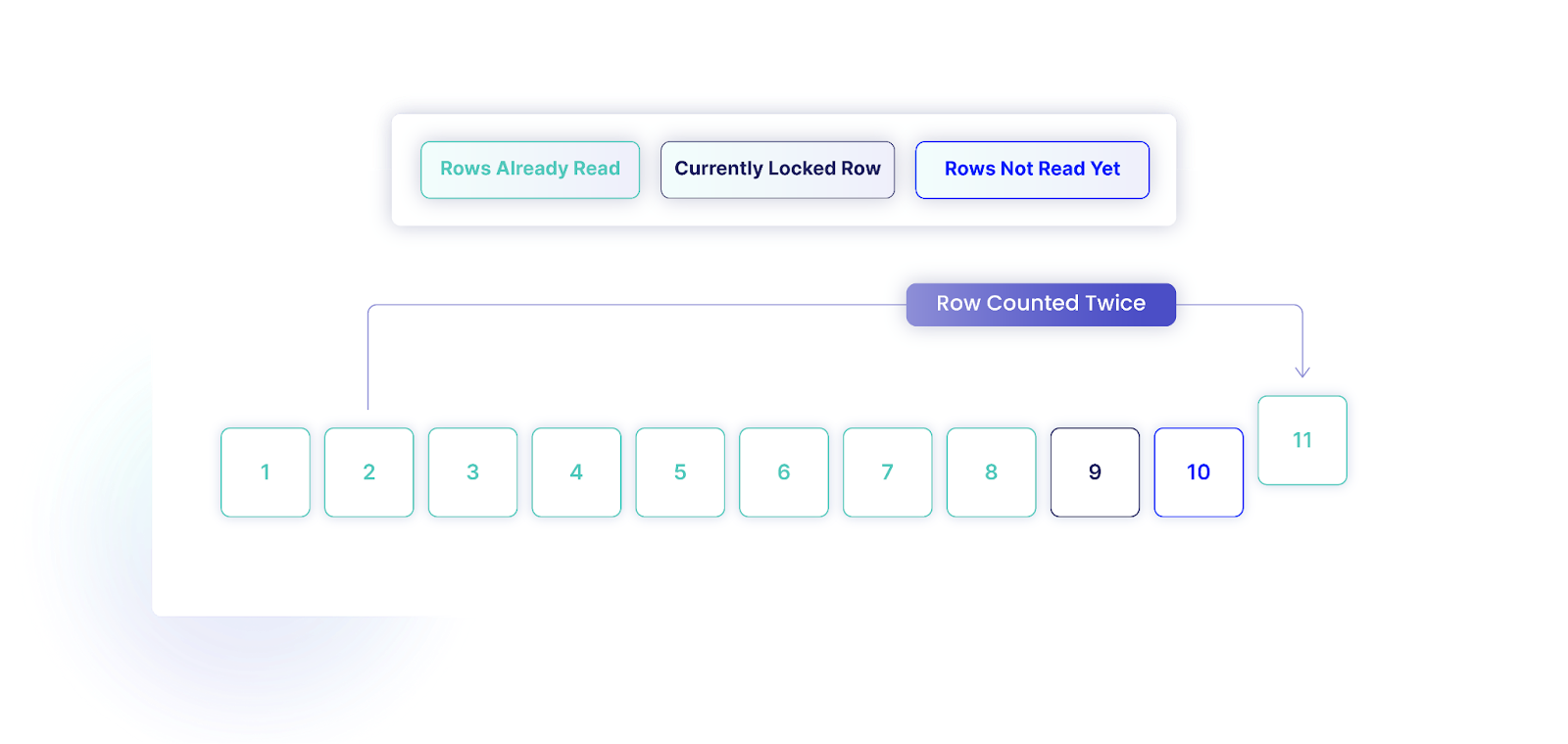

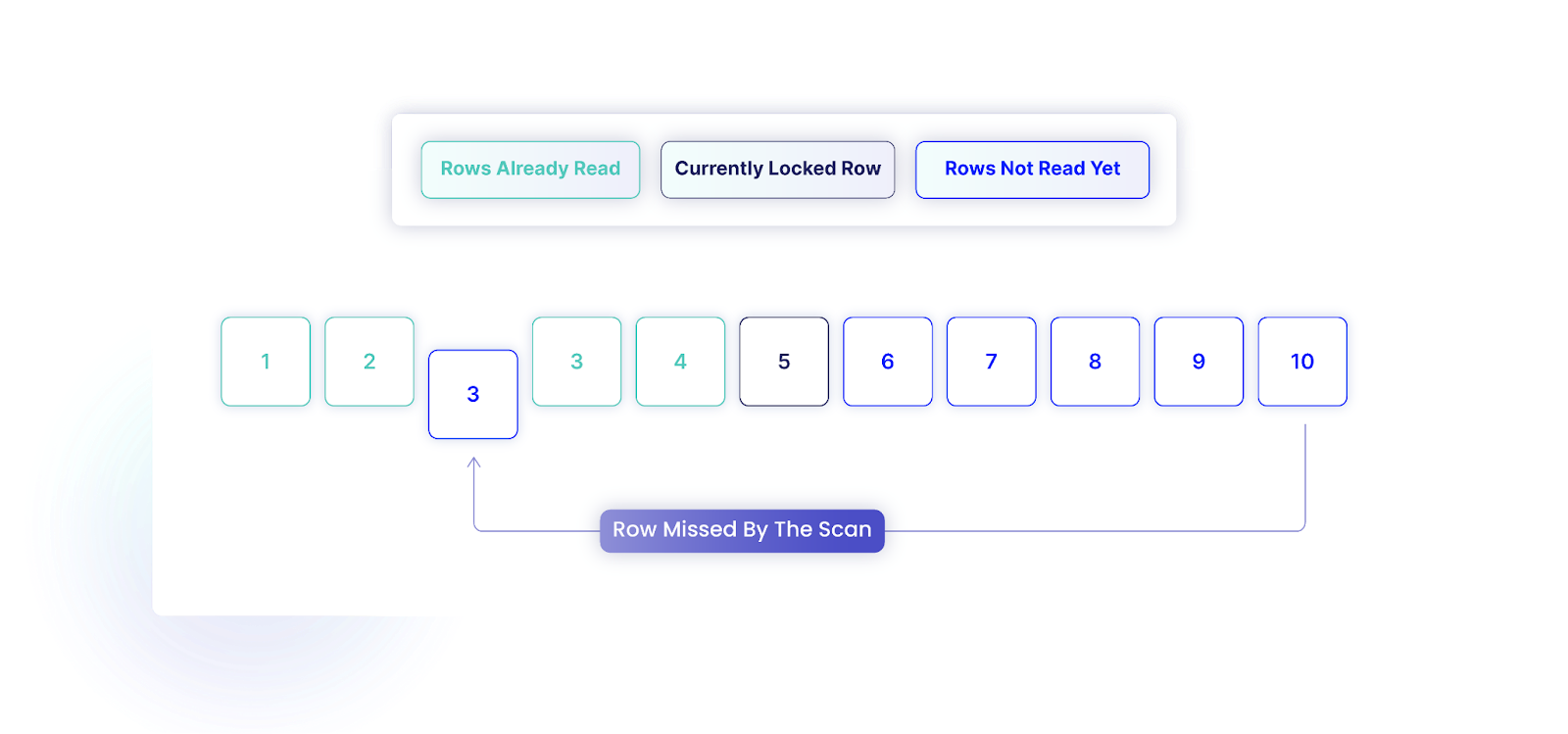

This process ensures that we avoid dirty reads. However, each row lock is released as soon as the row is processed. This means that if another transaction modifies and commits a row in between, the reading transaction will pick up the changes. This can cause duplicates or even missing rows when reading.

A typical database management system stores a table's rows in an ordered manner, usually by the table's primary key within a B-tree structure. The primary key usually imposes a clustered index, causing the data to be physically ordered on disk.

Consider a table with 10 rows, with IDs ranging from 1 to 10. Suppose our transaction has already read the first 8 rows (IDs 1 through 8), and another transaction changes the ID of the row with ID 2 to 11 and commits the change. As we continue scanning, we read rows 9, 10, and 11, so we count 11 rows in total even though the table has only 10: the same row was read once under its old ID 2, which no longer exists, and again under its new ID 11.

Similarly, we can miss a row because of this ordering. Imagine we have 10 rows, and we've read the rows with IDs 1 to 4. If another transaction changes the ID of the row with ID 10 to an unused ID that we have already passed, such as 0, our transaction will never find this row, because it now sits behind the scan position.

To solve this, a lock on the entire table is necessary to prevent other transactions from adding or moving rows during the scan. When using SERIALIZABLE, the locks are released only when the transaction ends and may be taken on larger scopes, up to the whole database, instead of on individual entities. However, this causes all other transactions to wait until the scan is complete, which can reduce performance.
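Both anomalies can be reproduced with a toy key-ordered scan that, like READ COMMITTED with row locks, looks at one row at a time and remembers only its position. The helpers below are hypothetical, and a hook simulates another transaction committing a primary-key change between two reads. For the missing-row case, the sketch moves row 10 to the unused ID 0, which lies behind the scan position.

```python
def scan(table, between_reads):
    """Read rows in primary-key order, one at a time, like a row-locking READ COMMITTED scan.

    `between_reads(last_id)` runs after each row, simulating concurrent committed changes.
    """
    seen, last_id = [], None
    while True:
        remaining = sorted(k for k in table if last_id is None or k > last_id)
        if not remaining:
            return seen
        last_id = remaining[0]
        seen.append((last_id, table[last_id]))
        between_reads(last_id)


def change_id(table, old, new, after):
    """Hypothetical concurrent transaction: once the scan passes `after`, move row `old` to `new`."""
    def hook(last_id):
        if last_id == after and old in table:
            table[new] = table.pop(old)
    return hook


rows = {i: f"person-{i}" for i in range(1, 11)}
duplicated = scan(rows, change_id(rows, old=2, new=11, after=8))
print(len(duplicated))  # 11: "person-2" is read as id 2 and again as id 11

rows = {i: f"person-{i}" for i in range(1, 11)}
missing = scan(rows, change_id(rows, old=10, new=0, after=4))
print(len(missing))     # 9: "person-10" moved to id 0, behind the scan position
```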

Deadlock Detection

Locks can lead to deadlocks, so we need a way to determine whether the system is deadlocked. There are two main approaches to deadlock detection.

The first is to monitor transactions and check whether they make any progress. If they wait too long without progressing, we may suspect a deadlock and stop the transactions that have been running for too long.

The other approach is to analyze the locks and look for cycles in the wait-for graph, in which an edge from transaction A to transaction B means that A waits for a lock held by B. Once we identify a cycle, the only way to resolve it is to terminate one of the transactions in the cycle.
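The wait-for-graph approach boils down to cycle detection. Here is a minimal depth-first search in Python; the graph representation (a dict mapping each transaction to the set of transactions it waits for) is an assumption for illustration. Real engines also have to pick a victim, for example by cost or priority, which this sketch leaves to the caller.

```python
def find_cycle(waits_for):
    """Return one cycle of a wait-for graph as a list of transactions, or None."""
    on_path, done, path = set(), set(), []

    def visit(txn):
        on_path.add(txn)
        path.append(txn)
        for other in sorted(waits_for.get(txn, ())):
            if other in on_path:  # back edge: `other` is on the current path, so it waits for itself
                return path[path.index(other):]
            if other not in done:
                cycle = visit(other)
                if cycle:
                    return cycle
        on_path.discard(txn)
        path.pop()
        done.add(txn)
        return None

    for txn in sorted(waits_for):
        if txn not in done:
            cycle = visit(txn)
            if cycle:
                return cycle
    return None


# T1 waits for T2, T2 for T3, T3 for T1; T4 merely waits behind T1.
graph = {"T1": {"T2"}, "T2": {"T3"}, "T3": {"T1"}, "T4": {"T1"}}
print(find_cycle(graph))  # ['T1', 'T2', 'T3']

# Terminating T3 (the chosen victim) breaks the cycle.
without_t3 = {t: deps - {"T3"} for t, deps in graph.items() if t != "T3"}
print(find_cycle(without_t3))  # None
```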

However, deadlocks happen only because we use locks. Let's look at another solution that doesn't use locks at all.

Multiversion Concurrency Control, Snapshots, and Deadlock Avoidance

We don't need to take locks to implement isolation. Instead, we can use snapshot isolation or Multiversion Concurrency Control (MVCC).

MVCC

Optimistic locking, closely related to snapshot isolation and Multiversion Concurrency Control (MVCC), is a method for avoiding locks while still providing concurrency. In this method, each row in a table has an associated version number. When a row needs to be modified, it is copied and the copy's version number is incremented so that other transactions can detect the change.

When a transaction starts, it records the current version numbers to establish the state of the rows. During reads, the transaction sees only those row versions that were committed before the transaction started. When the transaction modifies data and attempts to commit, the database checks the row versions. If any of the rows were modified by another transaction in the meantime, the update is rejected, and the transaction is rolled back and must start over.
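The versioning and commit-time check described above can be sketched as a toy multiversion store in Python. The class names, the single logical clock, and the first-committer-wins rule are assumptions for illustration; the model is single-threaded, only updates existing keys, and never garbage-collects old versions.

```python
class VersionedStore:
    """Toy MVCC store: each key keeps a list of (commit_ts, value) versions, oldest first."""

    def __init__(self, rows):
        self.versions = {key: [(0, value)] for key, value in rows.items()}
        self.clock = 0  # logical timestamp of the latest commit

    def begin(self):
        return Txn(self, start_ts=self.clock)


class Txn:
    def __init__(self, store, start_ts):
        self.store, self.start_ts, self.writes = store, start_ts, {}

    def read(self, key):
        if key in self.writes:  # a transaction sees its own pending writes
            return self.writes[key]
        # Otherwise, the newest version committed no later than our start.
        for commit_ts, value in reversed(self.store.versions[key]):
            if commit_ts <= self.start_ts:
                return value
        raise KeyError(key)

    def write(self, key, value):
        self.writes[key] = value  # buffered privately until commit

    def commit(self):
        # Validation: reject if anyone committed a newer version of a key we wrote.
        for key in self.writes:
            if self.store.versions[key][-1][0] > self.start_ts:
                raise RuntimeError(f"conflict on row {key}: roll back and retry")
        self.store.clock += 1
        for key, value in self.writes.items():
            self.store.versions[key].append((self.store.clock, value))


store = VersionedStore({1: 150, 2: 200})
t1, t2 = store.begin(), store.begin()
t1.write(1, 180)
t1.commit()
print(t2.read(1))  # 150: t2 keeps reading its snapshot
t2.write(1, 130)
try:
    t2.commit()
except RuntimeError as err:
    print(err)     # conflict on row 1: roll back and retry
print(store.begin().read(1))  # 180
```

Readers never block here; the price is paid at commit time, when the second writer of the same row has to start over.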

The idea here is to keep multiple versions of the entities (called snapshots) that can be accessed concurrently. We want transactions to never wait for other transactions to complete. However, the longer a transaction runs, the higher the chances that the database content will be modified by other transactions. Therefore, long-running transactions are more likely to run into conflicts, and more of them may end up rolled back.

This approach is effective when transactions affect different rows, allowing them to commit without issues. It improves scalability and performance because transactions don't need to acquire locks. However, if transactions frequently modify the same rows, rollbacks will occur often, leading to performance degradation.

Additionally, maintaining row versions increases the complexity of the database system. The database must keep many versions of entities, which increases disk space usage and reduces read and write performance. For instance, MS SQL requires an additional 14 bytes for every regular row (apart from storing the new data).

We also need to remove obsolete versions, which may require a stop-the-world process that traverses all the content and rewrites it. This decreases database performance.

Snapshots

Snapshot isolation levels are additional transaction isolation levels that databases provide to take advantage of MVCC. Depending on the database system, they may be named this way explicitly (like MS SQL's SNAPSHOT) or implemented this way under another name (like Oracle's SERIALIZABLE or CockroachDB's REPEATABLE READ). Let's see how MS SQL implements the MVCC mechanism.

At a glance, MS SQL provides two new isolation levels:

- READ COMMITTED SNAPSHOT ISOLATION (RCSI)

- SNAPSHOT

However, if you look at the documentation, you'll find that only SNAPSHOT is mentioned. This is because you need to reconfigure your database to enable snapshots, and doing so implicitly changes READ COMMITTED to RCSI.

Once you enable snapshots, the database uses snapshot scans instead of the regular locking scans. This unblocks the readers and stops locking the entities across all isolation levels! Just by enabling snapshots, you effectively modify all isolation levels at once. Let's see an example.

If you run these queries with snapshots disabled, you'll observe that the second transaction needs to wait:

| Transaction 1 | Transaction 2 |
|---|---|
| SET TRANSACTION ISOLATION LEVEL READ COMMITTED | |
| BEGIN TRANSACTION | |
| SELECT * FROM People | SET TRANSACTION ISOLATION LEVEL SERIALIZABLE |
| | SELECT * FROM People |
| | This needs to wait |
| COMMIT | |
| | This finally returns data |
| | COMMIT |

However, when you enable snapshots, the second transaction doesn't wait anymore!

| Transaction 1 | Transaction 2 |
|---|---|
| SET TRANSACTION ISOLATION LEVEL READ COMMITTED | |
| BEGIN TRANSACTION | |
| SELECT * FROM People | SET TRANSACTION ISOLATION LEVEL SERIALIZABLE |
| | SELECT * FROM People |
| | This doesn't wait anymore and returns data immediately |
| COMMIT | COMMIT |

Even though we don't use snapshot isolation levels explicitly, we still change how the transactions behave.

You can also use the SNAPSHOT isolation level explicitly, which is roughly equivalent to SERIALIZABLE. However, it is prone to an anomaly known as the white and black marbles problem.

White and Black Marbles Problem

Let's take the following Marbles table:

| id | color | row_version |
|---|---|---|
| 1 | black | 1 |
| 2 | white | 1 |

We start with one marble of each color. Now let's say that we want to run two transactions. The first tries to change all black marbles into white ones. The second does the opposite: it tries to change all white marbles into black ones. We have the following:

| Transaction 1 | Transaction 2 |
|---|---|
| UPDATE Marbles SET color = 'white' WHERE color = 'black' | UPDATE Marbles SET color = 'black' WHERE color = 'white' |

Now, if we implement SERIALIZABLE with pessimistic locking, a typical implementation will lock the entire table. After running both transactions, we end up with either two black marbles (if we execute Transaction 1 first and then Transaction 2) or two white marbles (if we execute Transaction 2 first and then Transaction 1).

However, if we use SNAPSHOT, we'll end up with the following:

| id | color | row_version |
|---|---|---|
| 1 | white | 2 |
| 2 | black | 2 |

Since the two transactions write to different sets of rows, they can run in parallel without a write conflict. This leads to an unexpected result: the colors are swapped, which no serial order of the two transactions could produce.

While it may be surprising, this is how Oracle implements its SERIALIZABLE isolation level. You can't get true serializability there; you always get snapshots, which are prone to the white and black marbles problem.
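The marbles anomaly can be reproduced with a small Python model. In this sketch, `run_snapshot` is a hypothetical simplification of snapshot isolation: every transaction evaluates its WHERE clause against the same starting snapshot, and a transaction is rejected only if its write set overlaps one that has already committed. Because the two write sets are disjoint, both commit, and the outcome matches neither serial order produced by `run_serial`.

```python
def run_serial(marbles, transactions):
    """Run (source color, target color) updates one after another on the live table."""
    state = dict(marbles)
    for source, target in transactions:
        state = {k: target if color == source else color for k, color in state.items()}
    return state


def run_snapshot(marbles, transactions):
    """Evaluate every update against the same starting snapshot, then commit them all.

    Only overlapping write sets conflict (first committer wins), so disjoint ones both succeed.
    """
    snapshot = dict(marbles)
    result = dict(marbles)
    written = set()
    for source, target in transactions:
        writes = {k for k, color in snapshot.items() if color == source}
        if writes & written:
            raise RuntimeError("write-write conflict: transaction rolled back")
        written |= writes
        for k in writes:
            result[k] = target
    return result


marbles = {1: "black", 2: "white"}
t1 = ("black", "white")  # UPDATE Marbles SET color = 'white' WHERE color = 'black'
t2 = ("white", "black")  # UPDATE Marbles SET color = 'black' WHERE color = 'white'

print(run_serial(marbles, [t1, t2]))    # {1: 'black', 2: 'black'}
print(run_serial(marbles, [t2, t1]))    # {1: 'white', 2: 'white'}
print(run_snapshot(marbles, [t1, t2]))  # {1: 'white', 2: 'black'}
```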

Deadlock Avoidance

Snapshots let us avoid deadlocks. Instead of checking whether a deadlock has occurred, we can avoid deadlocks entirely, provided transactions are short enough.

This approach works well when transactions are indeed short-lived. Once we have long-running transactions, system performance degrades rapidly, as these transactions must be retried whenever they are rolled back. This makes the problem even bigger: not only do we have a slow transaction, but we also need to run it many times before it succeeds.

Consistency Models

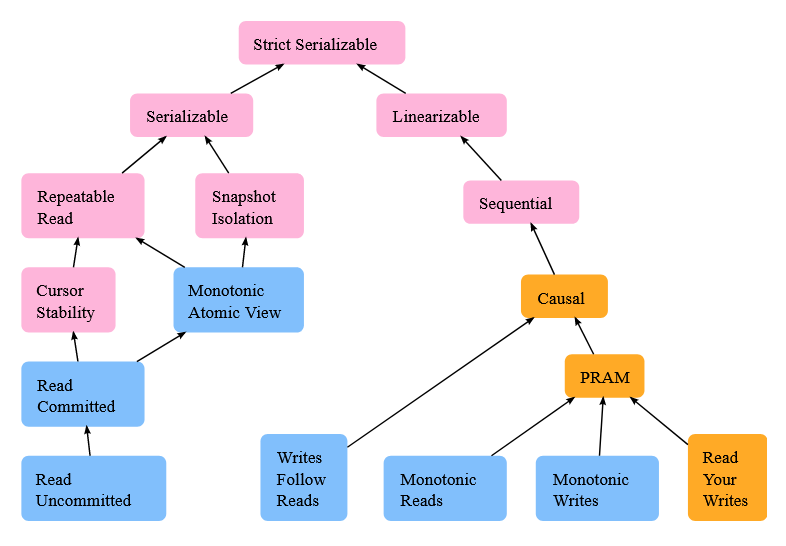

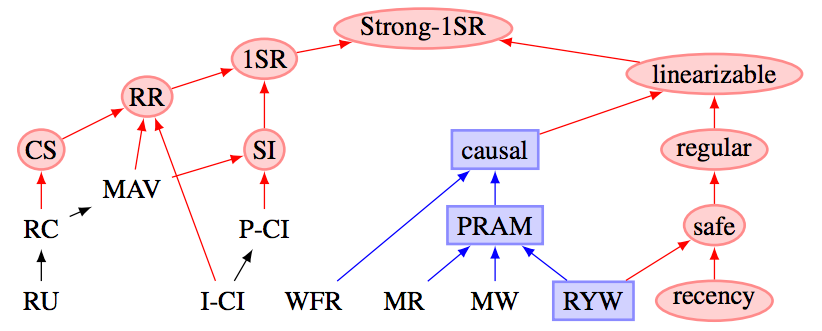

Isolation levels define how transactions should be executed from the perspective of a single SQL database. However, they disregard the propagation delay between replicas. Moreover, the SQL-92 definitions of isolation levels focus on avoiding read phenomena, but they don't guarantee that we'll even read the "correct" data. For instance, READ COMMITTED doesn't require that we read the latest value of the row. We're only guaranteed to read a value that was committed at some point.

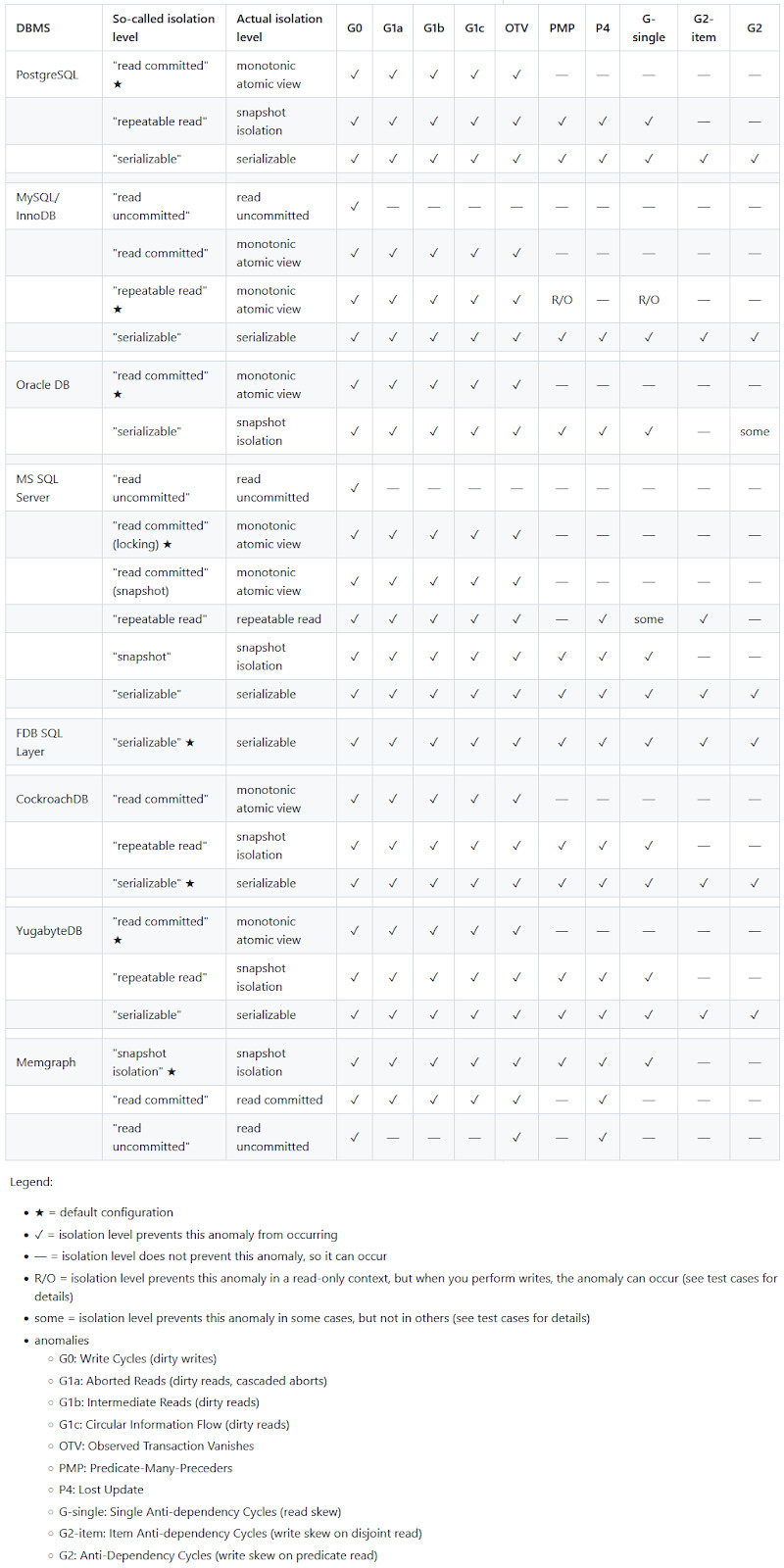

Once we consider all these aspects, we end up with a much more complex view of consistency in distributed systems and databases. Jepsen analyzes the following models.

Unfortunately, the terminology is not consistent, and other authors define other guarantees, for instance, these by Doug Terry:

- Read Your Writes

- Monotonic Reads

- Bounded Staleness

- Consistent Prefix

- Eventual Consistency

- Strong Consistency

Martin Kleppmann presents the following, focusing on databases:

It's very convenient to consider only the few read phenomena that we discussed above. Unfortunately, the world is much more complex. The more comprehensive classification includes:

- Dirty Writes

- Dirty Reads

- Aborted Reads (cascaded aborts)

- Intermediate Reads (dirty reads)

- Circular Information Flow

- Lost Update

- Single Anti-dependency Cycles (read skew)

- Item Anti-dependency Cycles (write skew on disjoint read)

- Observed Transaction Vanishes

- Predicate-Many-Preceders

- Write Skew variants in distributed databases

The Hermitage project tests isolation levels in various databases. These are the anomalies that you may expect when using various databases:

A few things worth noting from the Hermitage results:

- Databases use different default isolation levels.

- SERIALIZABLE is very rarely used as a default.

- Levels with the same name differ between databases.

- There are many more anomalies than just the classic read phenomena.

Add bugs on top of that, and we end up with a pretty unreliable landscape.

Let's look at some of the properties that we would like to preserve in distributed systems and databases.

Eventual Consistency

This is the weakest form of guarantee, in which we can only be sure that we read a value that was written in the past. In other words:

- We may not read the latest data

- If we read twice, we may get the "newer" and "older" values in that order

- Reads may not be repeatable

- We may not see some values at all

- We don't know when we will get the latest value

Monotonic Reads

This guarantees that when reading the same object many times, we'll always see values with non-decreasing write times. In other words, once we read an object's value written at time T, all subsequent reads will return either the same value or a value that was written after T.

This property concerns a single object only.

Consistent Prefix

This guarantees that we observe an ordered sequence of database writes. This is very similar to Monotonic Reads but applies to all writes (not to a single object only). Once we read an object and get a value written at T, all subsequent reads of all objects will return values that were written at T or later.

Bounded Staleness

This property guarantees that we read values that are not "too old". In practice, we can bound the "staleness" of an object's value with a period T: any read we perform will return a value that is no older than T.
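One way to honor a staleness bound is to route each read to a replica known to be caught up to within T of the present, and to fall back to the primary otherwise. The sketch below assumes each replica reports a hypothetical `synced_until` timestamp (meaning it reflects every write committed up to that moment); the names and the routing rule are illustrative.

```python
from dataclasses import dataclass


@dataclass
class ReplicaState:
    name: str
    synced_until: float  # the replica reflects every write committed up to this time (seconds)


def pick_replica(replicas, now, max_staleness):
    """Return the first replica whose data is at most `max_staleness` seconds old, or None."""
    return next((r for r in replicas if now - r.synced_until <= max_staleness), None)


# Listed in order of preference, e.g. nearest first.
replicas = [ReplicaState("eu-west", synced_until=95.0), ReplicaState("us-east", synced_until=99.5)]
print(pick_replica(replicas, now=100.0, max_staleness=10.0).name)  # eu-west (5 s behind)
print(pick_replica(replicas, now=100.0, max_staleness=2.0).name)   # us-east (0.5 s behind)
print(pick_replica(replicas, now=100.0, max_staleness=0.1))        # None: read from the primary
```

A tighter bound sends more reads to the primary, which is why bounded staleness scores worse on availability than weaker models.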

Read Your Writes

This property guarantees that if we first write a value and then try reading it, we'll get the value that we wrote or some newer value. In other words, once we write something at time T, we'll never read a value that was written before T. This should include our writes that are not completed (committed) yet.

Strong Consistency

This property guarantees that we always read the latest completed write. This is what we would expect from the SERIALIZABLE isolation level (which is not always the case).

Practical Considerations

Based on the discussion above, we can learn the following.

Use Many Levels

We should use different isolation levels depending on our needs. Not every reader needs to see the latest data, and sometimes it's acceptable to read values that are hours old. Depending on which part of the system we implement, we should always consider what we need and which guarantees are required.

Less strict isolation levels can greatly improve performance. They lower the requirements for the database system and directly affect how we implement durability, replication, and transaction processing. The fewer requirements we have, the easier it is to implement the system and the faster the system is.

For example, read this whitepaper explaining how to implement a baseball scorekeeping system. Depending on the part of the system, we may require the following properties:

| Participant | Properties |
|---|---|
| Official scorekeeper | Read My Writes |
| Umpire | Strong Consistency |
| Radio reporter | Consistent Prefix & Monotonic Reads |
| Sportswriter | Bounded Staleness |
| Statistician | Strong Consistency, Read My Writes |
| Stat watcher | Eventual Consistency |

Using different isolation levels may lead to different system characteristics:

| Property | Consistency | Performance | Availability |
|---|---|---|---|
| Strong Consistency | Excellent | Poor | Poor |
| Eventual Consistency | Poor | Excellent | Excellent |
| Consistent Prefix | Okay | Good | Excellent |
| Bounded Staleness | Good | Okay | Poor |
| Monotonic Reads | Okay | Good | Good |
| Read My Writes | Okay | Okay | Okay |

Isolation Levels Are Not Mandatory

Databases don't need to implement all isolation levels or implement them in the same way. Often, databases skip some of the isolation levels rather than bother with less popular ones. Similarly, they sometimes implement different levels using the same approach, so those levels provide the same guarantees.

Defaults Matter

We rarely change the default transaction isolation level. When using different databases, we may face different default isolation levels and get different guarantees. For instance, the default isolation level in MySQL is REPEATABLE READ, while PostgreSQL uses READ COMMITTED. When moving from MySQL to PostgreSQL, your application may stop working correctly.

Database Hints

We can control locking with database hints for every single query we execute. This helps the database engine optimize for use cases like delayed updates or long-running transactions. Not all databases support that, though.

Avoiding Transactions

The best way to improve performance is to avoid locks and minimize the guarantees that we require. Avoiding transactions and moving to sagas can be helpful.
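A saga replaces one long transaction with a sequence of local steps, each paired with a compensating action that semantically undoes it. The following Python sketch is a hypothetical minimal orchestrator, not any framework's API, and the order-processing steps are made up for illustration. Note that it gives up isolation: other readers may observe the intermediate states between steps.

```python
def run_saga(steps):
    """Run (action, compensation) pairs in order; on failure, compensate completed steps in reverse."""
    completed = []
    for action, compensate in steps:
        try:
            action()
        except Exception:
            for undo in reversed(completed):
                undo()
            return False
        completed.append(compensate)
    return True


log = []


def charge_payment():
    raise RuntimeError("payment declined")  # simulated failure of the third step


ok = run_saga([
    (lambda: log.append("reserve stock"), lambda: log.append("release stock")),
    (lambda: log.append("create invoice"), lambda: log.append("cancel invoice")),
    (charge_payment, lambda: log.append("refund payment")),
])
print(ok, log)
# False ['reserve stock', 'create invoice', 'cancel invoice', 'release stock']
```

No locks are held across steps, which is where the performance gain comes from; the compensations only restore a semantically equivalent state, not the exact original one.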

Summary

Speed and performance are important parts of every database and distributed system. Locks can significantly degrade performance but are often necessary for maintaining ACID and ensuring data correctness. Understanding how things work behind the scenes helps us achieve higher performance and better database reliability.