In the last article, I established the basic architecture for a basic RAG app. If you missed it, I recommend reading that article first. It sets the base from which we can improve our RAG system. In that article, I also listed some common pitfalls that RAG applications tend to fail on. We will tackle some of them with advanced techniques in this article.

To recap, a basic RAG app uses a separate knowledge base that helps the LLM answer the user’s questions by providing it with more context. This is also called a retrieve-then-read approach.

The Problem

To answer the user’s question, our RAG app will retrieve appropriate context based on the query itself. It will find chunks of text in the vector DB with content similar to whatever the user is asking. Other knowledge bases (search engines, etc.) also apply. The problem is that the chunk of information where the answer lies might not be similar to what the user is asking. The question can be badly written, or expressed differently from what we expect. And if our RAG app can’t find the information needed to answer the question, it won’t answer correctly.

There are many ways to solve this problem, but for this article, we will look at query rewriting.

What Is Query Rewriting?

Simply put, query rewriting means we rewrite the user query in our own words so that our RAG app knows best how to answer it. Instead of just doing retrieve-then-read, our app will take a rewrite-retrieve-read approach.
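
To make the three stages concrete, here is a minimal sketch of a rewrite-retrieve-read flow. Everything in it is an assumption for illustration: `rewrite_retrieve_read`, the toy helpers, and the two-sentence `DOCS` list are hypothetical stand-ins for the LLM rewriter, the vector DB, and the answering LLM. Passing the original question (rather than the rewritten one) to the final step is a design choice of this sketch, not something the pipeline requires.

```python
from typing import Callable, List


def rewrite_retrieve_read(
    question: str,
    rewrite: Callable[[str], str],
    retrieve: Callable[[str], List[str]],
    read: Callable[[str, List[str]], str],
) -> str:
    """Run the three stages in order: rewrite, retrieve, read."""
    search_query = rewrite(question)   # 1. rewrite the user's question
    context = retrieve(search_query)   # 2. retrieve using the rewritten query
    return read(question, context)     # 3. answer using the retrieved context


# Toy stand-ins so the sketch runs without any external service.
DOCS = [
    "Paris is the capital of France.",
    "Berlin is the capital of Germany.",
]


def _words(text: str) -> set:
    return {w.strip(",.?!").lower() for w in text.split()}


def toy_rewrite(question: str) -> str:
    # Stand-in for an LLM call: drop filler words.
    filler = {"hey", "um", "please"}
    kept = [w for w in question.split() if w.strip(",.?!").lower() not in filler]
    return " ".join(kept)


def toy_retrieve(query: str) -> List[str]:
    # Stand-in for a vector search: pick the doc sharing the most words.
    return [max(DOCS, key=lambda doc: len(_words(query) & _words(doc)))]


def toy_read(question: str, context: List[str]) -> str:
    # Stand-in for the answering LLM: echo the question and its context.
    return f"Q: {question} | Context: {context[0]}"
```

In a real app, each of the three callables would wrap a model or database call, and the surrounding function would stay the same.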

We use a generative AI model to rewrite the question. This model can be a large model, like (or the same as) the one we use to answer the question in the final step. It can also be a smaller model, specially trained to perform this task.

Additionally, query rewriting can take many different forms depending on the needs of the app. Most of the time, basic query rewriting will be enough. But, depending on the complexity of the questions we need to answer, we might need more advanced techniques like HyDE, multi-querying, or step-back questions. More information on these follows below.

Why Does It Work?

Query rewriting usually gives better performance in any RAG app that is knowledge-intensive. This is because RAG applications are sensitive to the phrasing and specific keywords of the query. Paraphrasing the query helps in the following scenarios:

- It restructures oddly written questions so they can be better understood by our system.

- It erases context given by the user that is irrelevant to the query.

- It can introduce common keywords, which will give the query a better chance of matching up with the correct context.

- It can split complex questions into different sub-questions, which can be more easily answered separately, each with its corresponding context.

- It can answer questions that require multiple levels of thinking by generating a step-back question, which is a higher-level concept question than the one from the user. It then uses both the original and the step-back questions to retrieve context.

- It can use more advanced query rewriting techniques like HyDE to generate hypothetical documents that answer the question. These hypothetical documents will better capture the intent of the question and match up with the embeddings that contain the answer in the vector DB.

How To Implement Query Rewriting

We have established that there are different strategies for query rewriting depending on the complexity of the questions. We will briefly discuss how to implement each of them. Afterward, we will see a real example comparing the result of a basic RAG app with that of a RAG app with query rewriting. You can also follow all the examples in the article’s Google Colab notebook.

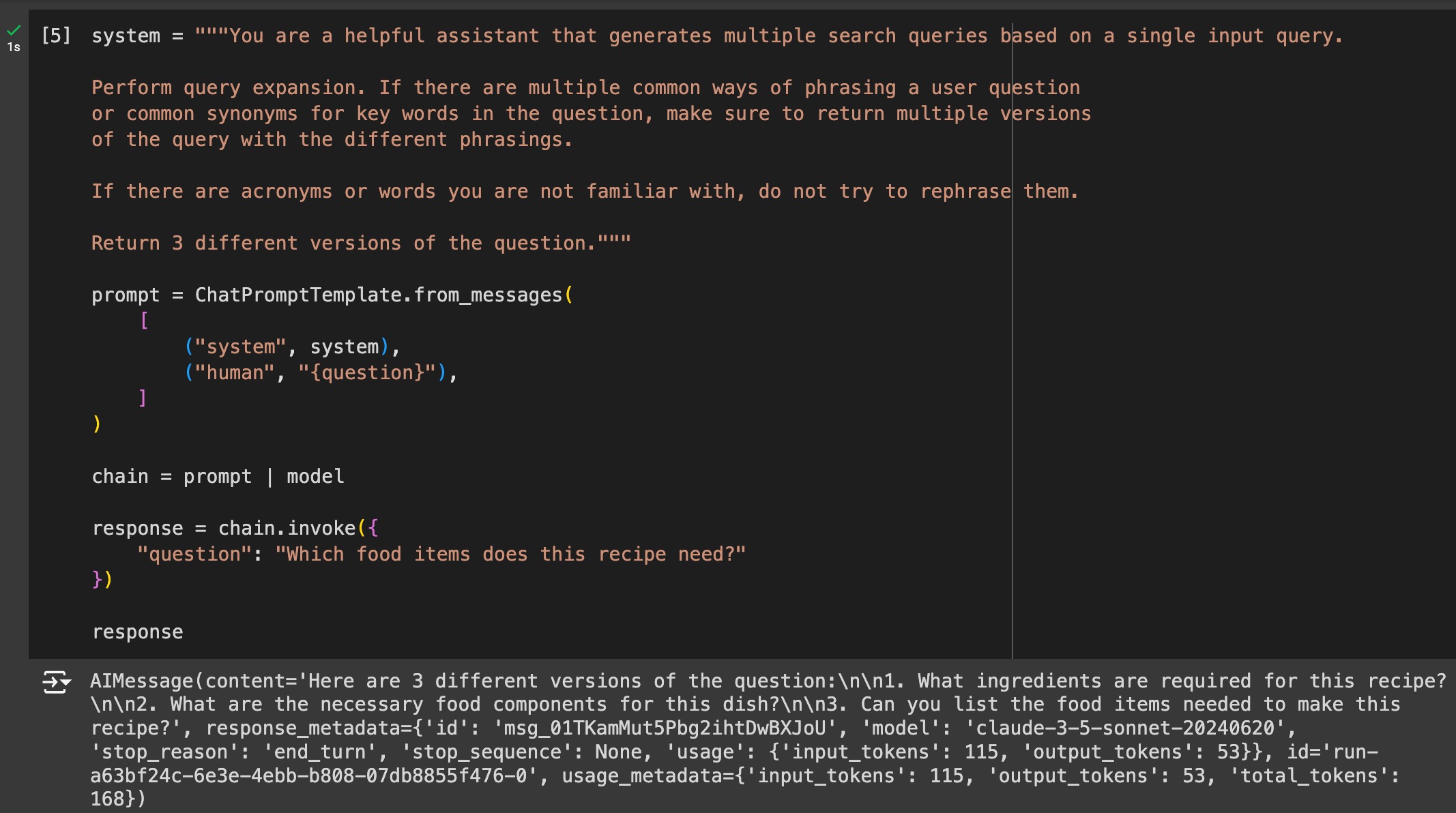

Zero-Shot Query Rewriting

This is simple query rewriting. The name comes from the prompt engineering technique of giving the LLM examples ("shots") of the task; in this case, we give none.

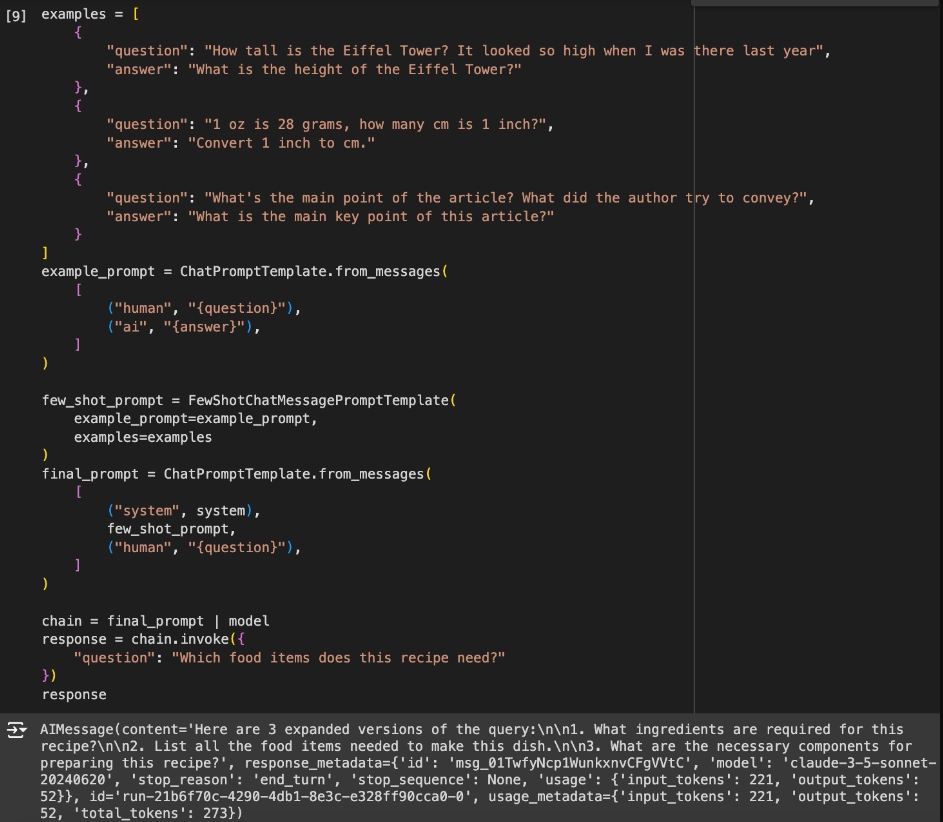
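
As a rough text version of the idea, a zero-shot rewriter can be as small as one prompt template and one model call. The template wording, the `zero_shot_rewrite` name, and the `llm` callable (any function that takes a prompt string and returns the model's text) are assumptions made for this sketch, not the exact prompt used in the notebook.

```python
from typing import Callable

# Hypothetical prompt: an instruction only, with no examples of the task.
ZERO_SHOT_TEMPLATE = (
    "Rewrite the following question so it works well as a search query "
    "for a document retrieval system. Return only the rewritten query.\n\n"
    "Question: {question}\n"
    "Rewritten query:"
)


def zero_shot_rewrite(question: str, llm: Callable[[str], str]) -> str:
    """Ask the model for a rewritten query, giving it no examples."""
    prompt = ZERO_SHOT_TEMPLATE.format(question=question)
    return llm(prompt).strip()


def fake_llm(prompt: str) -> str:
    # Stand-in for a real model call so the sketch runs offline.
    return "  tools for evaluating RAG pipelines \n"
```

Swapping `fake_llm` for a real client call is the only change needed to try it against an actual model.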

Few-Shot Query Rewriting

For a slightly better result at the cost of using a few more tokens per rewrite, we can give some examples of how we want the rewrite to be done.
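
A few-shot prompt can be assembled by placing example pairs before the real question. The sketch below assumes a simple "Question / Rewritten query" layout; the `EXAMPLES` pairs and the `build_few_shot_prompt` helper are made up for illustration and should be replaced with rewrites that suit your own data.

```python
from typing import List, Tuple

# Hypothetical (original question, desired rewrite) pairs.
EXAMPLES: List[Tuple[str, str]] = [
    ("hey so my laptop wont turn on, what do i do??",
     "laptop does not power on troubleshooting"),
    ("what's the deal with python lists vs tuples",
     "difference between Python lists and tuples"),
]


def build_few_shot_prompt(question: str, examples: List[Tuple[str, str]]) -> str:
    """Build a prompt that shows the examples first, then the new question."""
    lines = ["Rewrite each question as a concise search query.", ""]
    for original, rewritten in examples:
        lines += [f"Question: {original}", f"Rewritten query: {rewritten}", ""]
    # Leave the last rewrite empty so the model completes it.
    lines += [f"Question: {question}", "Rewritten query:"]
    return "\n".join(lines)
```

Each extra example adds tokens to every rewrite call, which is the cost mentioned above.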

Trainable Rewriter

We can fine-tune a pre-trained model to perform the query rewriting task. Instead of relying on examples, we can teach it how query rewriting should be done to achieve the best results in context retrieval. We can also train it further using reinforcement learning so it can learn to recognize problematic queries and avoid toxic and harmful phrases. Alternatively, we can use an open-source model that has already been trained by somebody else on the task of query rewriting.

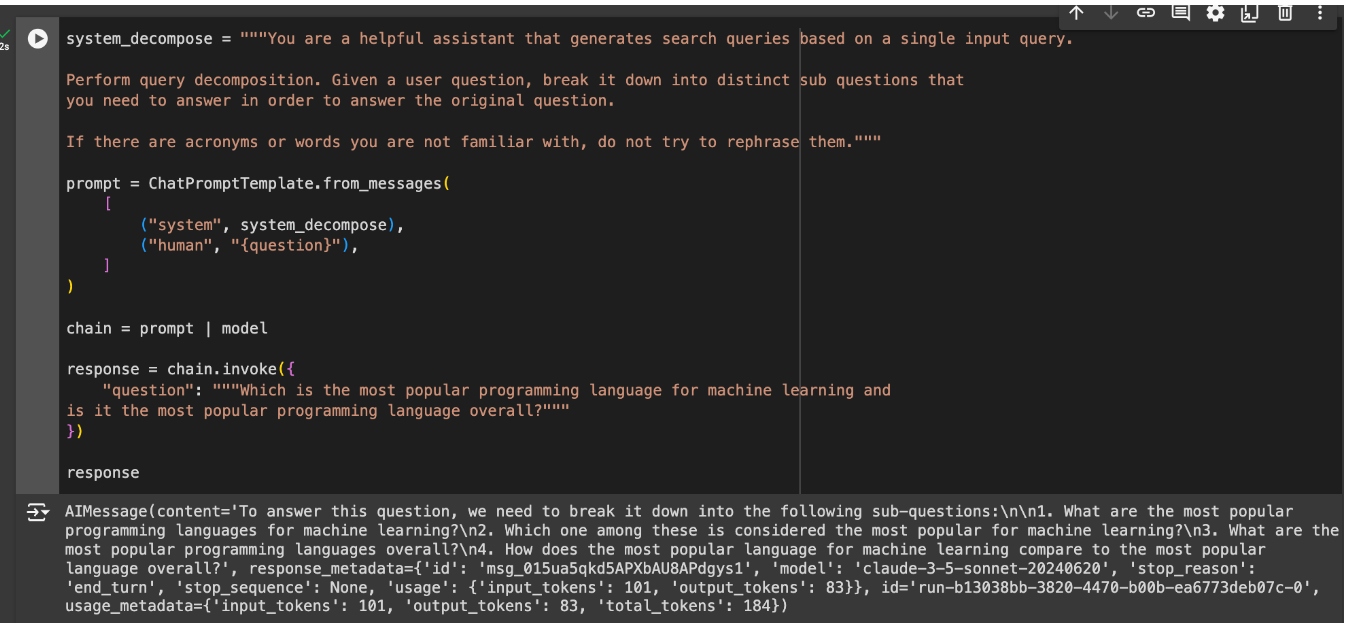

Sub-Queries

If the user query contains multiple questions, this can make context retrieval tricky. Each question probably needs different information, and we are not going to get all of it using all the questions as the basis for information retrieval. To solve this problem, we can decompose the input into multiple sub-queries, and perform retrieval for each of the sub-queries.

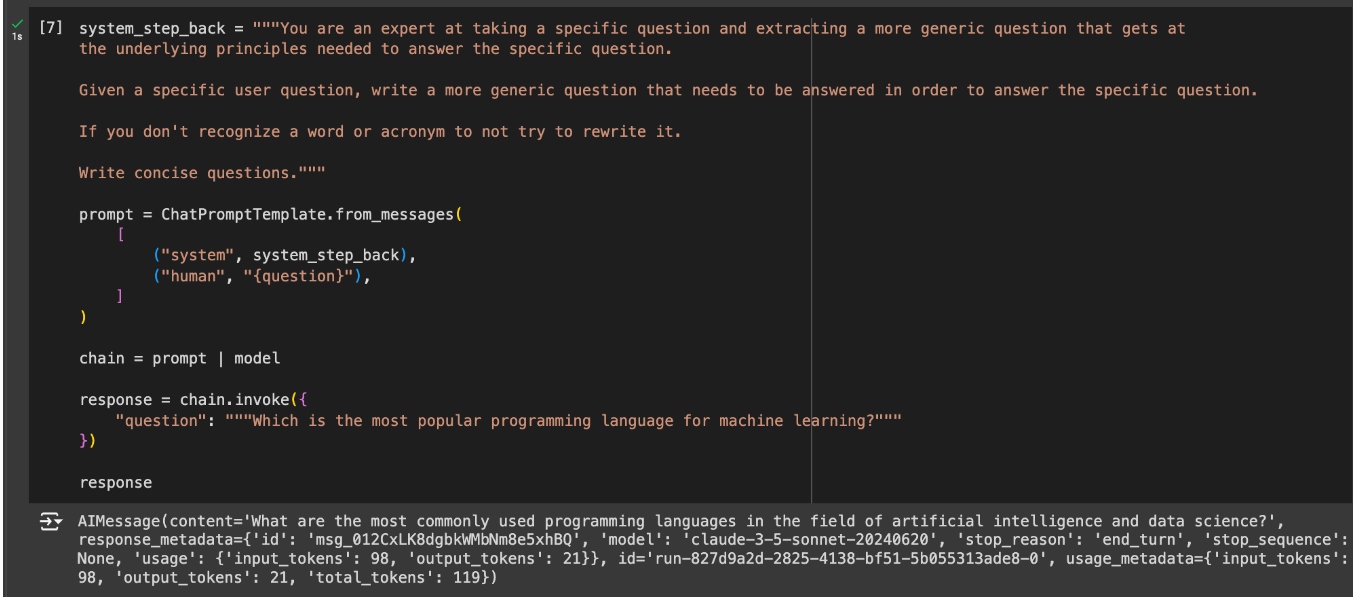
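
The retrieval side of this can be sketched as follows, assuming the LLM returns one sub-query per line (possibly numbered or bulleted). Both helper names and the output format are assumptions of this sketch; `retrieve` stands for whatever function queries your knowledge base.

```python
import re
from typing import Callable, List


def parse_sub_queries(llm_output: str) -> List[str]:
    """Split the model output into sub-queries, one per non-empty line."""
    sub_queries = []
    for line in llm_output.splitlines():
        # Drop a leading bullet ("-", "*") or numbering ("1.", "2)").
        cleaned = re.sub(r"^\s*(?:[-*]|\d+[.)])\s*", "", line).strip()
        if cleaned:
            sub_queries.append(cleaned)
    return sub_queries


def retrieve_for_sub_queries(
    sub_queries: List[str],
    retrieve: Callable[[str], List[str]],
) -> List[str]:
    """Retrieve for each sub-query and merge the results without duplicates."""
    seen = set()
    merged = []
    for sub_query in sub_queries:
        for chunk in retrieve(sub_query):
            if chunk not in seen:  # the same chunk may match several sub-queries
                seen.add(chunk)
                merged.append(chunk)
    return merged
```

Deduplicating keeps the final context short when two sub-queries land on the same chunk.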

Step-Back Prompt

Many questions can be a bit too complex for the RAG pipeline’s retrieval to grasp the multiple levels of information needed to answer them. For these cases, it can be helpful to generate multiple additional queries to use for retrieval. These queries will be more generic than the original query. This will enable the RAG pipeline to retrieve relevant information on multiple levels.

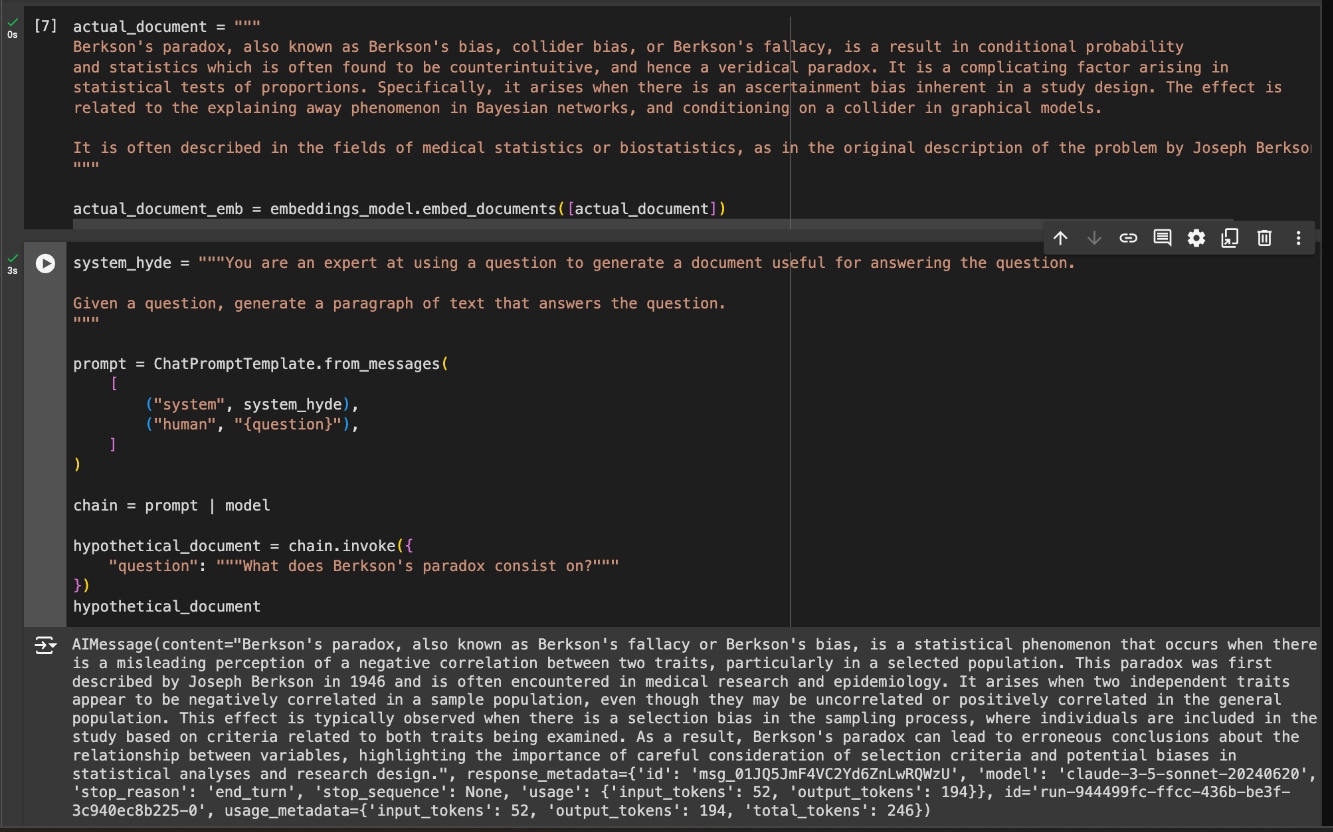

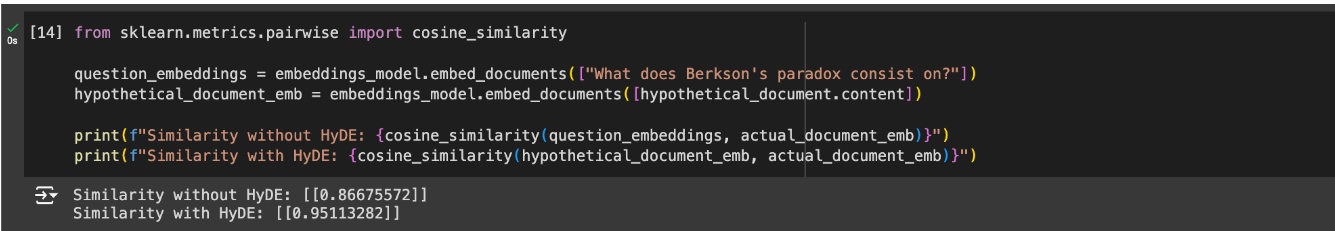
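
In text form, the step-back flow might look like the sketch below: generate one more generic question, then retrieve with both the original and the generic one. The prompt wording, the `step_back_retrieve` name, and the `llm` and `retrieve` callables are assumptions for illustration. The sketch generates a single step-back question, though nothing prevents generating several.

```python
from typing import Callable, List

# Hypothetical prompt asking for a higher-level version of the question.
STEP_BACK_TEMPLATE = (
    "Given a specific question, write one more general question about the "
    "underlying concept that would help answer it. Return only the question.\n\n"
    "Specific question: {question}\n"
    "General question:"
)


def step_back_retrieve(
    question: str,
    llm: Callable[[str], str],
    retrieve: Callable[[str], List[str]],
) -> List[str]:
    """Retrieve context for the original question and its step-back question."""
    step_back_question = llm(STEP_BACK_TEMPLATE.format(question=question)).strip()
    chunks: List[str] = []
    # Original question first, so its chunks keep priority in the context.
    for query in (question, step_back_question):
        for chunk in retrieve(query):
            if chunk not in chunks:
                chunks.append(chunk)
    return chunks
```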

HyDE

Another method to improve how queries are matched with context chunks is Hypothetical Document Embeddings, or HyDE. Sometimes, questions and answers are not that semantically similar, which can cause the RAG pipeline to miss critical context chunks in the retrieval stage. However, even if the query is semantically different, a response to the query should be semantically similar to another response to the same query. The HyDE method consists of creating hypothetical context chunks that answer the query and using them to match the real context that will help the LLM answer.

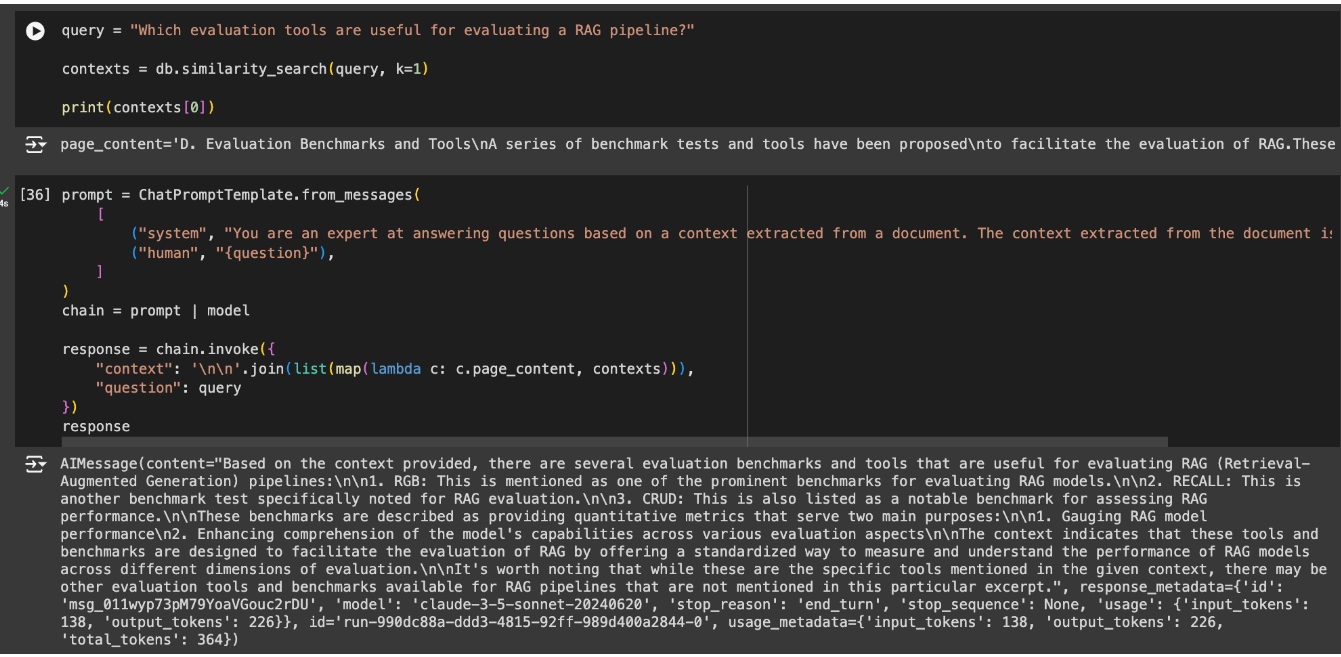
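
Here is a toy sketch of the HyDE idea that runs without any model. The bag-of-words `embed` function is a deliberately crude stand-in for a real embedding model, and `hyde_retrieve`, the prompt text, and the `llm` callable are all assumptions of this sketch. Because the toy embedding only counts shared words, it exaggerates the effect, but it shows the mechanism: we embed a hypothetical answer instead of the question.

```python
import math
import re
from collections import Counter
from typing import Callable, List


def embed(text: str) -> Counter:
    """Toy bag-of-words 'embedding'; a real app would call an embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[token] * b[token] for token in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def hyde_retrieve(
    question: str,
    llm: Callable[[str], str],
    chunks: List[str],
    top_k: int = 1,
) -> List[str]:
    """Rank chunks by similarity to a hypothetical answer, not to the question."""
    hypothetical_doc = llm(
        f"Write a short passage that answers the question.\n"
        f"Question: {question}\nPassage:"
    )
    query_vector = embed(hypothetical_doc)  # embed the made-up answer
    ranked = sorted(chunks, key=lambda c: cosine(query_vector, embed(c)), reverse=True)
    return ranked[:top_k]
```

The hypothetical passage does not need to be factually right; it only needs to look like the kind of text that holds the answer.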

Example: RAG With vs Without Query Rewriting

Taking the RAG pipeline from the last article, “How To Build a Basic RAG App,” we will introduce query rewriting into it. We will ask it a question a bit more advanced than last time and observe whether the response improves with query rewriting compared to without it. First, let’s build the same RAG pipeline. Only this time, I’ll use just the top document returned from the vector database, to be less forgiving of missed documents.

The response is good and based on the context, but it got caught up in me asking about evaluation and missed that I was specifically asking for tools. Therefore, the context used does have information on some benchmarks, but it misses the next chunk of information that talks about tools.

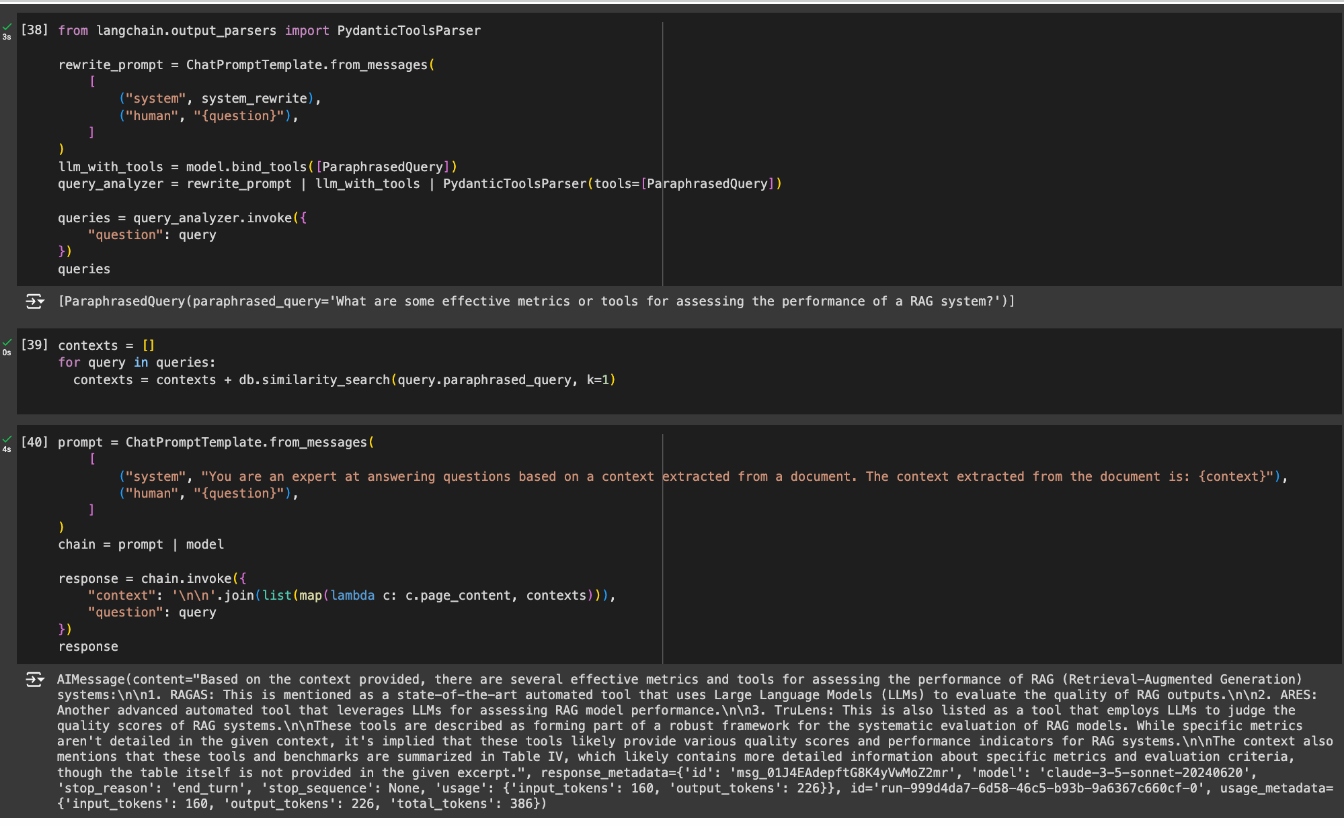

Now, let’s implement the same RAG pipeline, but this time with query rewriting. In addition to the query rewriting prompts we have already seen in the previous examples, I’ll be using a Pydantic parser to extract and iterate over the generated alternative queries.

The new query now matches the chunk of information I wanted to get my answer from, giving the LLM a better chance of giving a much better response to my question.
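
To show what "extract and iterate" means in practice, here is a standard-library stand-in for that parsing step. It is not the Pydantic parser itself: the `RewrittenQueries` dataclass, the `parse_queries` helper, and the assumed JSON shape (an object with a `queries` list) are hypothetical and only illustrate validating the model output before looping over it.

```python
import json
from dataclasses import dataclass
from typing import List


@dataclass
class RewrittenQueries:
    """Structured form of the rewriter's output (assumed shape)."""
    queries: List[str]


def parse_queries(llm_output: str) -> RewrittenQueries:
    """Validate the model's JSON output and return the alternative queries."""
    data = json.loads(llm_output)  # raises ValueError on malformed JSON
    queries = data.get("queries") if isinstance(data, dict) else None
    if not isinstance(queries, list) or not all(isinstance(q, str) for q in queries):
        raise ValueError("expected a JSON object with a 'queries' list of strings")
    # Drop empty entries so we never run a blank search.
    return RewrittenQueries(queries=[q.strip() for q in queries if q.strip()])
```

Once parsed, iterating is a plain loop over `parse_queries(output).queries`, running one retrieval per alternative query.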

Conclusion

We have taken our first step out of basic RAG pipelines and into Advanced RAG. Query rewriting is a very simple Advanced RAG technique, but a powerful one for improving the results of a RAG pipeline. We have gone over different ways to implement it depending on what kind of questions we need to improve. In future articles, we will go over other Advanced RAG techniques that can tackle different RAG issues than those seen in this article.