In previous articles, we built a basic RAG application. We also learned how to introduce more advanced techniques to improve it. Today, we’ll explore how to tie those advanced techniques together. These techniques may do different, sometimes opposite, things. Still, sometimes we need to use all of them to cover all possibilities. So let’s see how we can link different techniques together. In this article, we’ll take a look at a technique called Query Routing.

The Problem With Advanced RAG Applications

When our Generative AI application receives a query, we have to decide what to do with it. For simple Generative AI applications, we send the query directly to the LLM. For simple RAG applications, we use the query to retrieve context from a single data source and then query the LLM. But if our case is more complex, we may have multiple data sources, or different queries that need different types of context. So do we build a one-size-fits-all solution, or do we make the application adapt and take different actions depending on the query?

What Is Query Routing?

Query routing is about giving our RAG app the power of decision-making. It is a technique that takes the query from the user and uses it to choose the next action to take from a list of predefined choices.

Query routing is a module in our Advanced RAG architecture. It is usually found after any query rewriting or guardrails. It analyzes the input query and decides the best tool to use from a list of predefined actions. The actions are usually retrieving context from one or many data sources. It could also decide to use a different index for a data source (like parent-child retrieval). Or it could even decide to search for context on the Internet.

What Are the Choices for the Query Router?

We have to define the choices that the query router can take beforehand. We must first implement each of the different strategies and accompany each one with a good description. It is very important that the description explains in detail what each strategy does, since this description is what our router will base its decision on.
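
As a minimal sketch of what predefining the choices could look like, the snippet below pairs each strategy with a name and a description. The `Route` class, the route names, and the placeholder handlers are all hypothetical and only serve to illustrate the idea; real handlers would query a vector store, a database, or an API.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Route:
    """One predefined choice the router can pick (hypothetical structure)."""
    name: str
    description: str               # the text the router bases its decision on
    handler: Callable[[str], str]  # the strategy implemented for this choice


def describe_routes(routes: List[Route]) -> str:
    """Render the numbered list of choices that a router would be shown."""
    return "\n".join(
        f"({i}) {route.name}: {route.description}"
        for i, route in enumerate(routes, start=1)
    )


# Placeholder strategies that only echo which source they would use.
routes = [
    Route(
        "product_docs",
        "Answers questions about product features and specifications.",
        lambda query: f"[product_docs] context for: {query}",
    ),
    Route(
        "return_policy",
        "Answers questions about returns, refunds, and exchanges.",
        lambda query: f"[return_policy] context for: {query}",
    ),
]

print(describe_routes(routes))
```

Keeping the description next to the handler makes it harder for the two to drift apart when a strategy changes.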

The choices a query router takes can be the following:

Retrieval From Different Data Sources

We can catalog multiple data sources that contain information on different topics. We might have one data source that contains information about a product that the user has questions about, another with information about our return policies, and so on. Instead of looking for the answers to the user’s questions in all data sources, the query router can decide which data source to use based on the user query and the data source description.

Data sources can be text stored in vector databases, regular databases, graph databases, etc.

Retrieval From Different Indexes

Query routers can also choose to use a different index for the same data source.

For example, we could have an index for keyword-based search and another for semantic search using vector embeddings. The query router can decide which of the two is best for getting the relevant context for answering the question, or maybe use both of them at the same time and combine the contexts from both.
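
The article does not say how the two result lists should be combined. One common option is reciprocal rank fusion, sketched below under the assumption that each index returns an ordered list of document IDs. The document IDs and the constant `k = 60` are illustrative choices, not something taken from the example application.

```python
from collections import defaultdict
from typing import Dict, List


def reciprocal_rank_fusion(rankings: List[List[str]], k: int = 60) -> List[str]:
    """Merge several ranked lists; documents ranked high in many lists win."""
    scores: Dict[str, float] = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    # Sort by descending score, breaking ties alphabetically for determinism.
    return sorted(scores, key=lambda doc_id: (-scores[doc_id], doc_id))


# Hypothetical results from a keyword index and a semantic index.
keyword_hits = ["doc_returns", "doc_shipping", "doc_warranty"]
semantic_hits = ["doc_refunds", "doc_returns", "doc_warranty"]

fused = reciprocal_rank_fusion([keyword_hits, semantic_hits])
print(fused)
```

Documents that appear in both lists rise to the top, which is usually what we want when both indexes agree.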

We could also have different indexes for different retrieval strategies. For example, we could have a retrieval strategy based on summaries, a sentence window retrieval strategy, or a parent-child retrieval strategy. The query router can analyze the specificity of the question and decide which strategy is best to use to get the best context.

Other Data Sources

The decision that the query router takes is not limited to databases and indexes. It can also decide to use a tool to look for the information elsewhere. For example, it can decide to use a tool to look for the answer online using a search engine. It can also use an API from a specific service (for example, weather forecasting) to get the data it needs as relevant context.

Types of Query Routers

An important part of our query router is how it makes the decision to choose one path or another. The decision mechanism differs between the types of query routers. The following are a few of the most used query router types:

LLM Selector Router

This solution gives a prompt to an LLM. The LLM completes the prompt with the solution, which is the selection of the right choice. The prompt includes all the different choices, each with its description, as well as the input query to base its decision on. The response to this query is then used to programmatically decide which path to take.
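
A minimal sketch of this flow, assuming the LLM is any callable that takes a prompt and returns text. The prompt wording, the route names, and the `fake_llm` stand-in are made up for illustration; a real application would call an actual model here.

```python
import re
from typing import Callable, Dict


def build_selector_prompt(choices: Dict[str, str], query: str) -> str:
    """List every choice with its description, then ask for one number."""
    lines = ["Some choices are given below, as a numbered list."]
    for i, (name, description) in enumerate(choices.items(), start=1):
        lines.append(f"({i}) {name}: {description}")
    lines.append(f"Question: {query}")
    lines.append("Reply with only the number of the most relevant choice.")
    return "\n".join(lines)


def select_route(llm: Callable[[str], str], choices: Dict[str, str], query: str) -> str:
    """Ask the LLM to pick a choice and turn its reply into a route name."""
    reply = llm(build_selector_prompt(choices, query))
    match = re.search(r"\d+", reply)
    if match is None:
        raise ValueError(f"Could not parse a choice from: {reply!r}")
    index = int(match.group()) - 1
    names = list(choices)
    if not 0 <= index < len(names):
        raise ValueError(f"Choice {index + 1} is out of range")
    return names[index]


def fake_llm(prompt: str) -> str:
    """Stand-in for a real model: picks choice 2 if the question mentions a recipe."""
    question = prompt.split("Question:", 1)[1]
    return "2" if "recipe" in question.lower() else "1"


choices = {
    "rag_paper": "Useful for questions about RAG techniques.",
    "gyros_recipe": "Useful for questions about cooking chicken gyros.",
}
print(select_route(fake_llm, choices, "Which ingredients does the recipe need?"))
```

Parsing the reply defensively matters in practice, since a model may answer with extra text around the number.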

LLM Function Calling Router

This solution leverages the function-calling capabilities (or tool-using capabilities) of LLMs. Some LLMs have been trained to decide to use tools to get to an answer if the tools are provided to them in the prompt. Using this capability, each of the different choices is phrased as a tool in the prompt, prompting the LLM to choose which of the provided tools is best for retrieving the right context for answering the query.
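
The sketch below shows the two halves of this idea: describing each choice as a tool, and dispatching the tool call the model returns. The shape of the tool specification is illustrative (a JSON Schema style description of one `query` parameter), not the format of any particular provider, and the model's reply is hard-coded as an assumption.

```python
import json
from typing import Any, Callable, Dict


def make_tool_spec(name: str, description: str) -> Dict[str, Any]:
    """Describe one routing choice as a tool with a single 'query' argument."""
    return {
        "name": name,
        "description": description,
        "parameters": {
            "type": "object",
            "properties": {"query": {"type": "string"}},
            "required": ["query"],
        },
    }


def dispatch(tool_call: Dict[str, str], handlers: Dict[str, Callable[[str], str]]) -> str:
    """Run the handler matching the tool the model asked for."""
    name = tool_call["name"]
    if name not in handlers:
        raise KeyError(f"Model asked for an unknown tool: {name}")
    arguments = json.loads(tool_call["arguments"])
    return handlers[name](arguments["query"])


# Hypothetical handlers; real ones would retrieve context from each source.
handlers = {
    "search_rag_paper": lambda query: f"paper chunks for: {query}",
    "search_web": lambda query: f"web results for: {query}",
}
tools = [
    make_tool_spec("search_rag_paper", "Search a paper about RAG techniques."),
    make_tool_spec("search_web", "Search the web for up-to-date information."),
]

# In a real application this dictionary would come from the model's response.
tool_call = {"name": "search_web", "arguments": json.dumps({"query": "weather in Athens"})}
print(dispatch(tool_call, handlers))
```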

Semantic Router

This solution uses a similarity search on the vector embedding representation of the user query. For each choice, we have to write a few examples of queries that would be routed to this path. When a user query arrives, an embeddings model converts it to a vector representation, which is compared to the example queries for each router choice. The choice whose example has the closest vector representation to the user query is selected as the path the router must route to.
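
To make the mechanics concrete, here is a small sketch that routes by cosine similarity. The `embed` function is a toy bag-of-words stand-in so the example runs without any model; a real semantic router would use an embeddings model instead. The route names and example queries are assumptions.

```python
import math
import re
from collections import Counter
from typing import Dict, List, Optional


def embed(text: str) -> Counter:
    """Toy embedding: word counts. A real router would call an embeddings model."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[token] * b[token] for token in a.keys() & b.keys())
    norm_a = math.sqrt(sum(value * value for value in a.values()))
    norm_b = math.sqrt(sum(value * value for value in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def semantic_route(query: str, examples: Dict[str, List[str]]) -> Optional[str]:
    """Return the route whose example query is most similar to the user query."""
    query_vector = embed(query)
    best_route, best_score = None, -1.0
    for route, utterances in examples.items():
        for utterance in utterances:
            score = cosine(query_vector, embed(utterance))
            if score > best_score:
                best_route, best_score = route, score
    return best_route


examples = {
    "rag_paper": [
        "what is retrieval augmented generation",
        "how does a rag pipeline retrieve context",
    ],
    "gyros_recipe": [
        "which ingredients do I need for chicken gyros",
        "how long should I cook the chicken",
    ],
}
print(semantic_route("what ingredients are in the chicken gyros", examples))
```

In practice the example vectors would be computed once and stored, so that only the user query has to be embedded at request time.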

Zero-Shot Classification Router

For this type of router, a small LLM is selected to act as a router. This LLM is fine-tuned using a dataset of examples of user queries and the correct routing for each of them. The fine-tuned LLM’s sole purpose is to classify user queries. Small LLMs are cheaper and more than good enough for a simple classification task.

Language Classification Router

In some cases, the purpose of the query router is to redirect the query to a specific database or model depending on the language the user wrote the query in. Language can be detected in many ways, such as using an ML classification model or a Generative AI LLM with a specific prompt.
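
As a rough illustration only, the sketch below swaps the ML model for a much simpler stop-word count, which is enough to show the routing step itself. The stop-word lists, the language codes, and the index names are all assumptions; a production system would use a proper language detection model.

```python
import re
from typing import Dict, Set

# Tiny, hand-picked stop-word lists; far from complete.
STOPWORDS: Dict[str, Set[str]] = {
    "en": {"the", "is", "what", "how", "and", "of", "to"},
    "es": {"el", "la", "qué", "cómo", "es", "de", "los", "y"},
    "fr": {"le", "la", "est", "que", "comment", "de", "les", "et"},
}

# Hypothetical per-language indexes.
INDEX_BY_LANGUAGE: Dict[str, str] = {"en": "docs_en", "es": "docs_es", "fr": "docs_fr"}


def detect_language(text: str, default: str = "en") -> str:
    """Guess the language by counting stop words; fall back to the default."""
    words = re.findall(r"\w+", text.lower())
    counts = {
        language: sum(word in stopwords for word in words)
        for language, stopwords in STOPWORDS.items()
    }
    best = max(counts, key=counts.get)
    return best if counts[best] > 0 else default


def route_by_language(query: str) -> str:
    """Pick the index that holds documents in the query's language."""
    return INDEX_BY_LANGUAGE[detect_language(query)]


print(route_by_language("¿Cómo es la política de devoluciones?"))
```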

Keyword Router

Sometimes the use case is very simple. In this case, the solution can be to route one way or another depending on whether some keywords are present in the user query. For example, if the query contains the word “return”, we could use a data source with information about how to return a product. For this solution, a simple code implementation is enough, and therefore no expensive model is needed.
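
A keyword router can be as small as the following sketch. The keyword lists, route names, and default route are hypothetical.

```python
import re
from typing import Dict, List

# Hypothetical keyword lists; the first route with a matching keyword wins.
KEYWORD_ROUTES: Dict[str, List[str]] = {
    "return_policy": ["return", "refund", "exchange"],
    "shipping_info": ["shipping", "delivery", "tracking"],
}
DEFAULT_ROUTE = "product_docs"


def keyword_route(query: str) -> str:
    """Route on whole-word keyword matches, falling back to a default source."""
    words = set(re.findall(r"[a-z]+", query.lower()))
    for route, keywords in KEYWORD_ROUTES.items():
        if words & set(keywords):
            return route
    return DEFAULT_ROUTE


print(keyword_route("How do I return my order?"))
```

Note that this matches whole words only, so “returns” would not match “return” unless it is added to the list or the words are stemmed first.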

Single Choice Routing vs Multiple Choice Routing

Depending on the use case, it may make sense for the router to choose just one path and run it. However, in some cases it can also make sense to use more than one choice for answering the same query. To answer a question that spans many topics, the application needs to retrieve information from many data sources. Or the response might be different based on each data source. Then, we can use all of them to answer the question and consolidate the results into a single final answer.

We have to design the router taking these possibilities into account.
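
One way to support both modes is sketched below, assuming the router produces a relevance score per route. The scores, the threshold, the route names, and the trivial `synthesize` function are illustrative assumptions; in a real system an LLM would usually consolidate the contexts into the final answer.

```python
from typing import Callable, Dict, List


def route_multi(scores: Dict[str, float], threshold: float = 0.5, max_routes: int = 2) -> List[str]:
    """Keep every route scoring above the threshold, or the single best one."""
    ranked = sorted(scores, key=lambda name: (-scores[name], name))
    selected = [name for name in ranked if scores[name] >= threshold][:max_routes]
    return selected or ranked[:1]


def answer_with_routes(
    query: str,
    selected: List[str],
    retrievers: Dict[str, Callable[[str], str]],
    synthesize: Callable[[str, List[str]], str],
) -> str:
    """Retrieve context from each selected route and consolidate the results."""
    contexts = [retrievers[name](query) for name in selected]
    return synthesize(query, contexts)


# Hypothetical retrievers returning canned context.
retrievers = {
    "product_docs": lambda query: "Product: battery lasts 10 hours.",
    "return_policy": lambda query: "Returns: accepted within 30 days.",
    "web_search": lambda query: "Web: no relevant results.",
}
scores = {"product_docs": 0.8, "return_policy": 0.7, "web_search": 0.1}

selected = route_multi(scores)
answer = answer_with_routes(
    "How long does the battery last, and can I return it?",
    selected,
    retrievers,
    lambda query, contexts: " ".join(contexts),
)
print(selected, answer)
```

Setting `max_routes=1` turns the same function into a single choice router.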

Example Implementation of a Query Router

Let’s get into the implementation of a query router within a RAG application. You can follow the implementation step by step and run it yourself in the Google Colab notebook.

For this example, we’ll showcase a RAG application with a query router. The application can decide to answer questions based on two documents. The first document is a paper about RAG and the second is a recipe for chicken gyros. The application can also decide to answer based on a Google search. We’ll implement a single-source query router using an LLM function calling router.

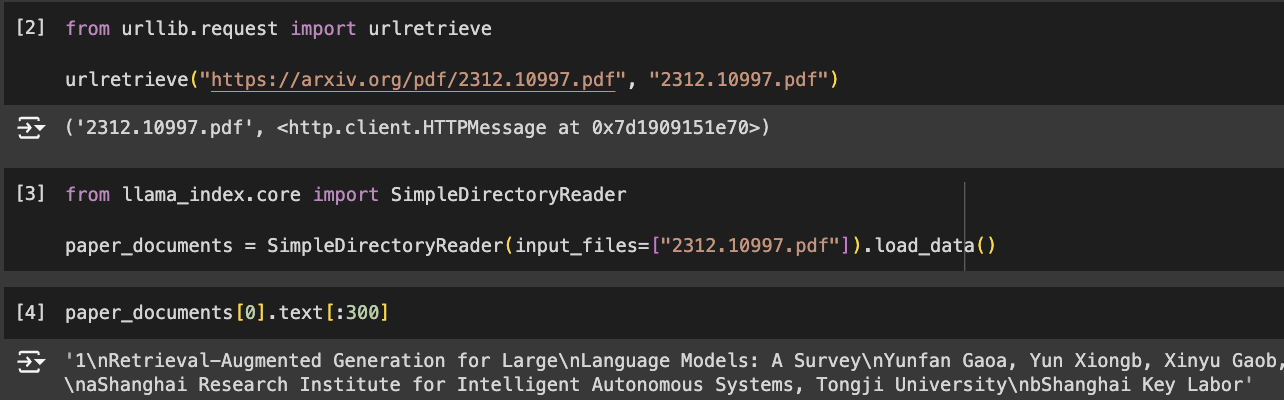

Load the Paper

First, we’ll prepare the two documents for retrieval. Let’s first load the paper about RAG:

Load the Recipe

We will also load the recipe for chicken gyros. This recipe from Mike Price is hosted on tasty.co. We’ll use a simple web page reader to read the page and store it as text.

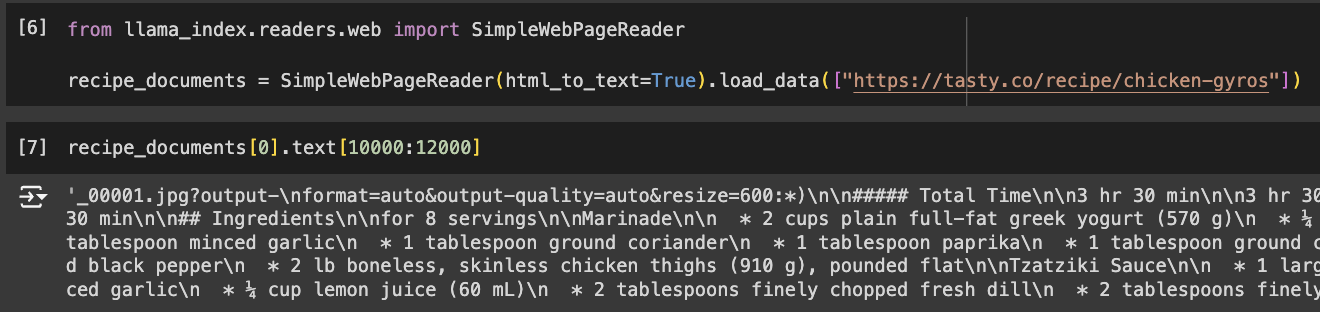

Save the Documents in a Vector Store

Once we have the two documents we’ll use for our RAG application, we’ll split them into chunks and convert them to embeddings using BGE small, an open-source embeddings model. We’ll store these embeddings in two vector stores, ready to be queried.
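
To give an idea of what the chunking step does, here is a simplified character-based splitter with overlap. This is not the splitter used by the library in the notebook, and the chunk size and overlap values are arbitrary assumptions; real splitters usually work on sentences or tokens.

```python
from typing import List


def split_into_chunks(text: str, chunk_size: int = 200, overlap: int = 40) -> List[str]:
    """Split text into fixed-size chunks that share `overlap` characters."""
    if chunk_size <= 0 or not 0 <= overlap < chunk_size:
        raise ValueError("chunk_size must be positive and overlap smaller than it")
    step = chunk_size - overlap
    chunks: List[str] = []
    start = 0
    while start < len(text):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # the current chunk already reaches the end of the text
        start += step
    return chunks


print(split_into_chunks("abcdefghij", chunk_size=4, overlap=1))
```

The overlap keeps a sentence that straddles a boundary at least partly visible in both neighboring chunks.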

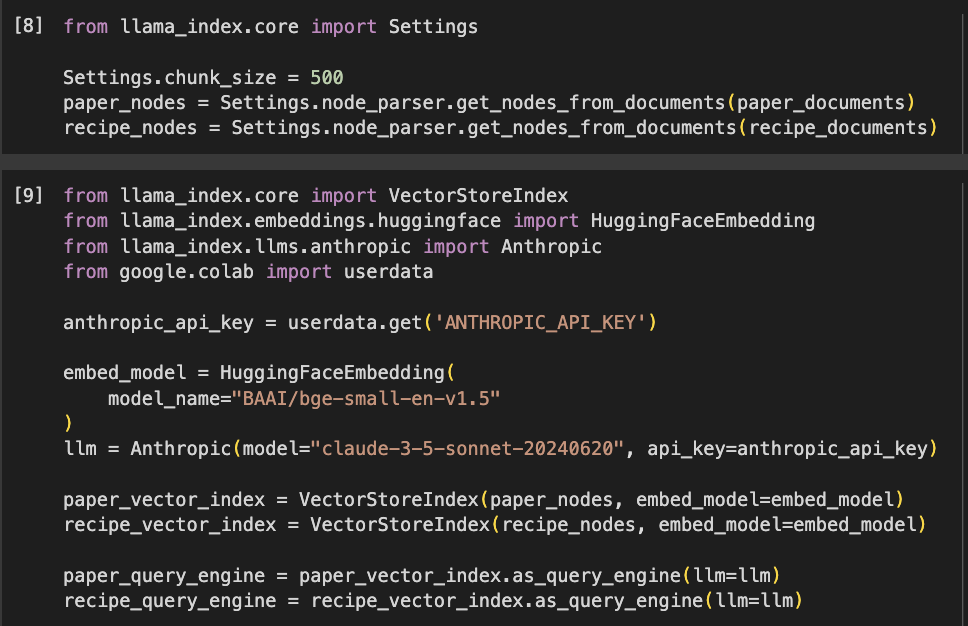

Search Engine Tool

Besides the two documents, the third option for our router is to search for information using Google Search. For this example, I have created my own Google Search API keys. If you want this part to work, you should use your own API keys.

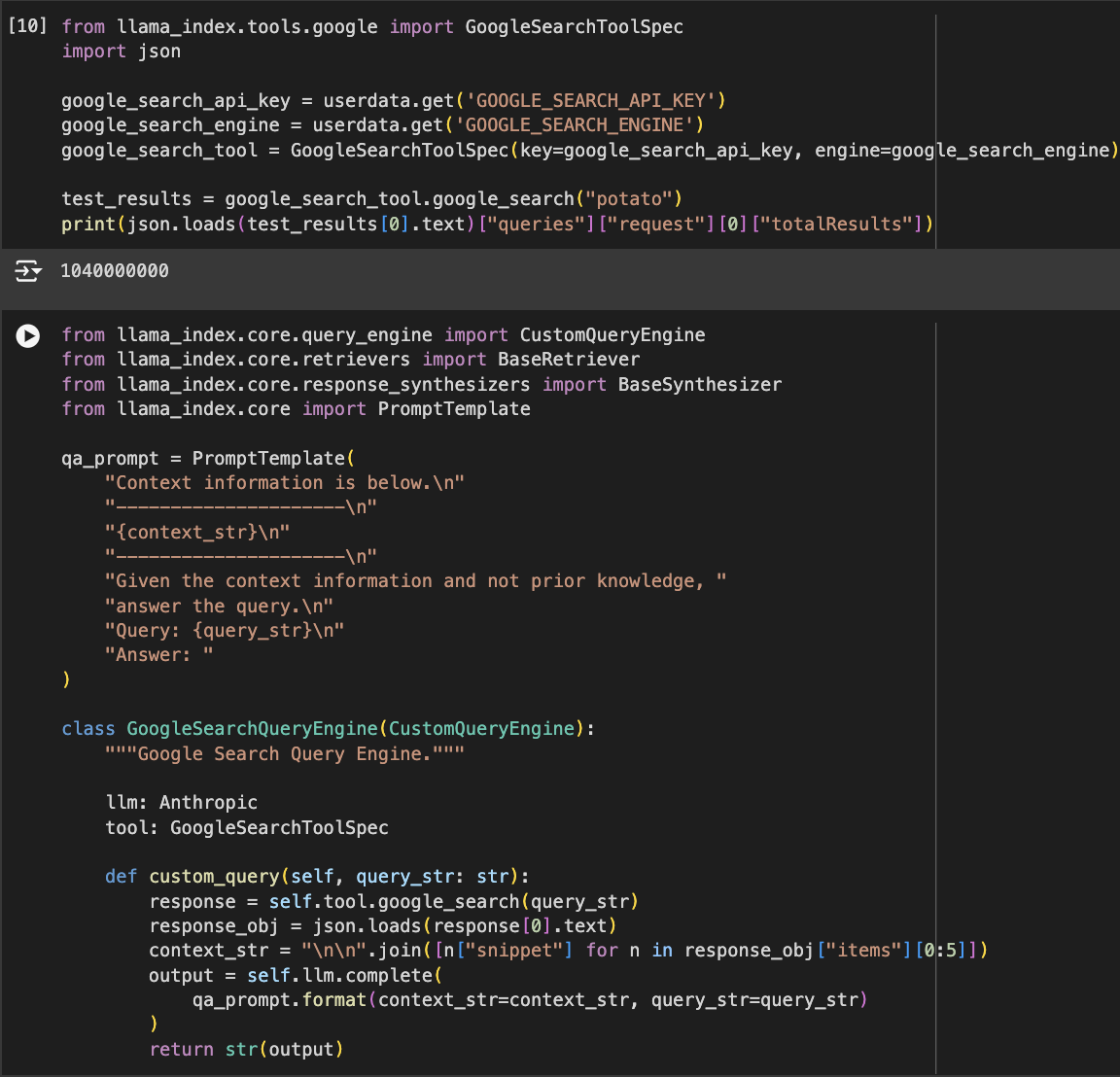

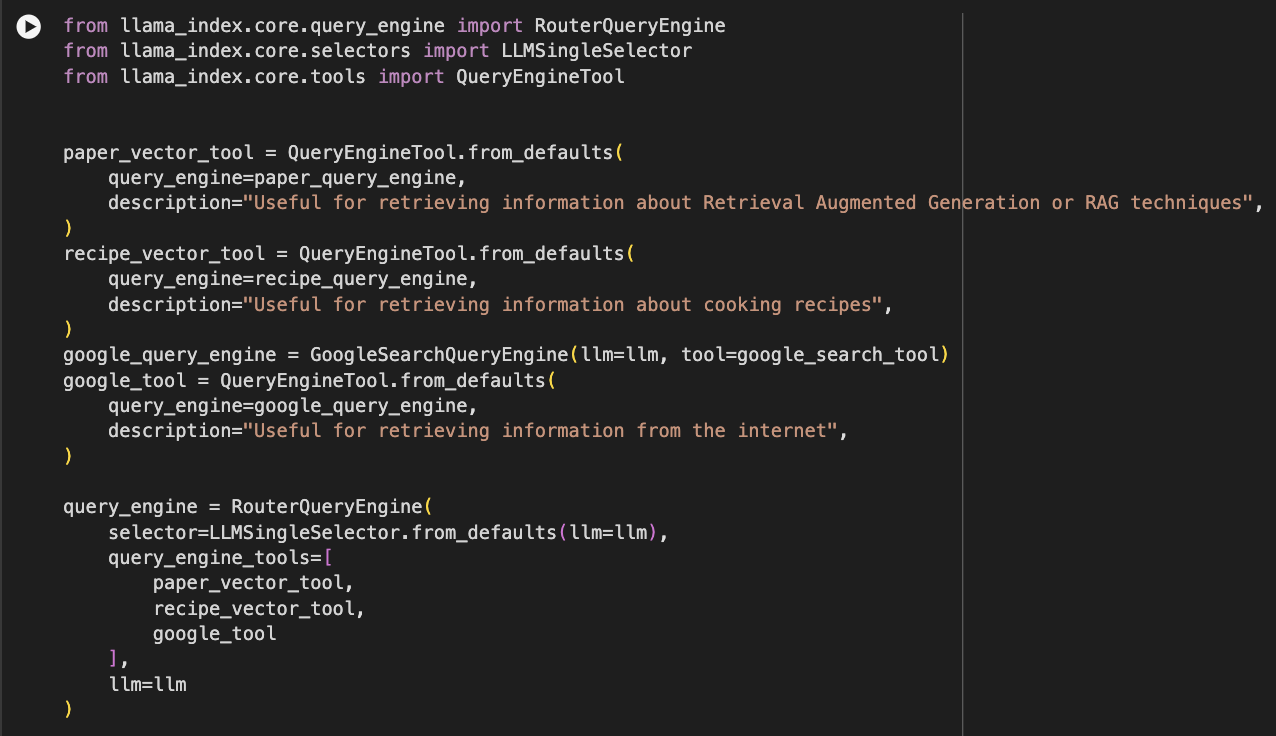

Create the Query Router

Next, using the LlamaIndex library, we create a Query Engine Tool for each of the three options that the router will choose between. We provide a description for each of the tools, explaining what it is useful for. This description is very important, since it is the basis on which the query router decides which path to choose.

Finally, we create a Router Query Engine, also with LlamaIndex. We give the three query engine tools to this router. We also define the selector, which is the component that chooses which tool to use. For this example, we are using an LLM Selector. It is also a single selector, meaning it will choose only one tool, never more than one, to answer the query.

Run Our RAG Application!

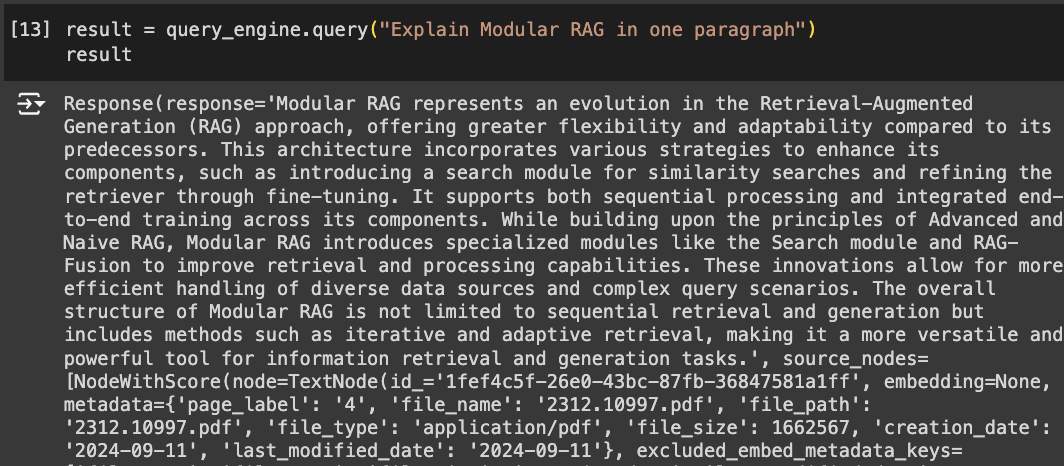

Our query router is now ready. Let’s test it with a question about RAG. We provided a vector store loaded with information from a paper on RAG techniques. The query router should choose to retrieve context from that vector store in order to answer the question. Let’s see what happens:

Our RAG application answers correctly. Along with the answer, we can see that it provides the sources from which it got the information. As we expected, it used the vector store with the RAG paper.

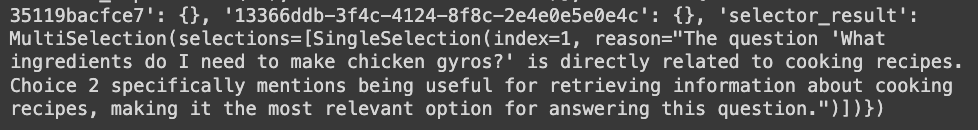

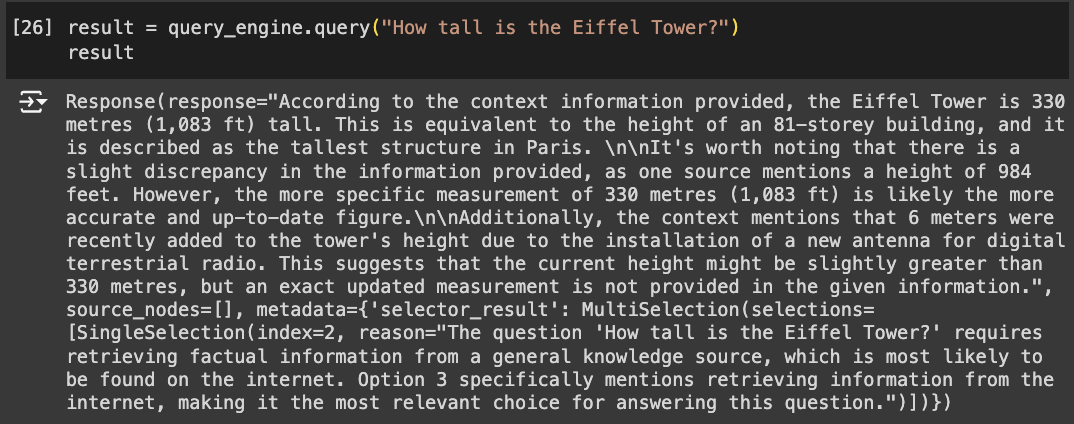

We can also see an attribute “selector_result” in the result. In this attribute, we can inspect which one of the tools the query router chose, as well as the reason that the LLM gave for choosing that option.

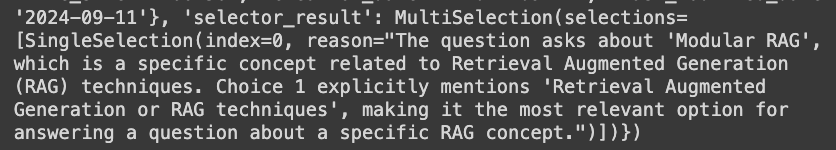

Now let’s ask a culinary question. The recipe used to create the second vector store is for chicken gyros. Our application should be able to answer which ingredients are needed for that recipe based on that source.

As we can see, the chicken gyros recipe vector store was correctly chosen to answer that question.

Lastly, let’s ask it a query that may be answered with a Google Search.

Conclusion

In conclusion, query routing is a great step towards a more advanced RAG application. It allows us to set up a base for a more complex system, where our app can better plan how to best answer questions. Query routing can also be the glue that ties together other advanced techniques in your RAG application and makes them work together as a whole system.

However, the complexity of better RAG systems doesn’t end with query routing. Query routing is just the first stepping stone for orchestration within RAG applications. The next stepping stone for making our RAG applications better reason, decide, and take actions based on the needs of the users is Agents. In later articles, we will dive deeper into how Agents work within RAG and Generative AI applications in general.