Moving data from one place to another is conceptually simple. You just read from one data source and write to another. However, doing that consistently and safely is another story. There are a variety of mistakes you can make if you overlook important details.

We recently discussed the top reasons so many organizations are currently looking for DynamoDB alternatives. Beyond costs (the most frequently mentioned factor), issues such as throttling, hard limits, and vendor lock-in are frequently cited as motivation for a change.

But what does a migration from DynamoDB to another database look like? Should you dual-write? Are there any available tools to assist you with that? What are the typical do's and don'ts? In other words, how do you move out of DynamoDB?

In this post, let's start with an overview of how database migrations work, cover specific and important characteristics of DynamoDB migrations, and then discuss some of the strategies used to integrate with and migrate data seamlessly to other databases.

How Database Migrations Work

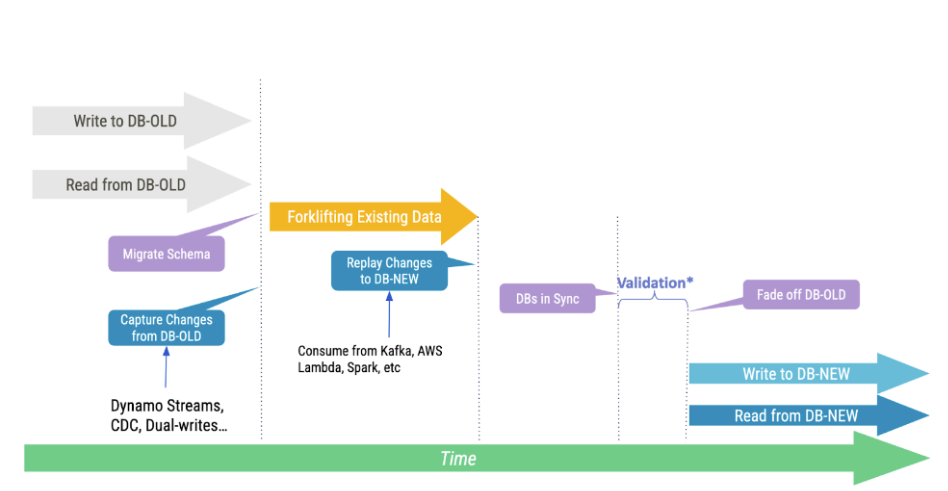

Most database migrations follow a strict set of steps to get the job done.

First, you start capturing all changes made to the source database. This ensures that any data modifications (or deltas) can be replayed later.

Second, you copy the data over: you read from the source database and write to the destination one. A variation is to export a backup of the source database and side-load it into the destination database.

After the initial data load, the target database will contain most of the records from the source database, except those that changed while you were completing the previous step. Naturally, the next step is to replay all deltas generated by your source database against the destination one. Once that completes, both databases will be fully in sync, and that is when you can switch your application over.
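The three steps above can be sketched with plain Python dictionaries standing in for both databases. This is a toy model under simplifying assumptions, not a real migration tool: the `migrate` function, the `(operation, key, value)` change format, and the idea that all concurrent writes happen "during the copy" are illustrative inventions. It shows why the replay step is needed: the snapshot copy misses writes made after it was taken, and replaying the captured deltas in order closes that gap.

```python
from typing import Dict, List, Tuple

# A captured change: (operation, key, value); value is None for deletes.
Change = Tuple[str, str, object]


def migrate(source: Dict[str, object], writes_during_copy: List[Change]) -> Dict[str, object]:
    """Toy model of capture -> copy -> replay using in-memory dicts."""
    # Step 1: change capture is switched on before the copy starts.
    captured: List[Change] = []

    # Step 2: copy a snapshot of the source into the target.
    target = dict(source)

    # Meanwhile, the application keeps writing to the source; every write
    # is applied to the source and also recorded by the change capture.
    for op, key, value in writes_during_copy:
        if op == "put":
            source[key] = value
        else:
            source.pop(key, None)
        captured.append((op, key, value))

    # Step 3: replay the captured deltas, in order, against the target.
    for op, key, value in captured:
        if op == "put":
            target[key] = value
        else:
            target.pop(key, None)
    return target
```

For example, `migrate({"a": 1, "b": 2}, [("put", "a", 10), ("delete", "b", None)])` yields a target equal to the updated source, even though the snapshot itself still held the old values.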

To Dual-Write or Not?


If you are familiar with Cassandra migrations, then you have probably been introduced to the recommendation of simply "dual-writing" to get the job done. That is, you proxy every writer mutation sent to your source database so that the same records are also applied to your target database.

Unfortunately, not every database lets a writer retrieve or manipulate a record's timestamp the way the CQL protocol does (for example, through CQL's USING TIMESTAMP clause). Without that, an older back-filled record that arrives after a newer dual-write simply overwrites it. This prevents you from implementing dual-writes in the application while back-filling the target database with historical data. If you attempt to do that, you will likely end up with an inconsistent migration, where some target Items may not reflect their latest state in your source database.
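To make the failure mode concrete, here is a minimal sketch contrasting a writer that cannot supply timestamps (the last write to *arrive* wins) with timestamp-based last-write-wins resolution, similar in spirit to what CQL's USING TIMESTAMP enables. The function names and the scenario are hypothetical; the only point is the ordering problem between a dual-write and a late back-fill.

```python
from typing import Dict, Optional, Tuple

Cell = Tuple[str, int]  # (value, write timestamp)


def plain_put(store: Dict[str, str], key: str, value: str) -> None:
    """Writer without timestamps: whichever write arrives last wins."""
    store[key] = value


def timestamped_put(store: Dict[str, Cell], key: str, value: str, ts: int) -> None:
    """Last-write-wins on the write timestamp (ties go to the later arrival here)."""
    current: Optional[Cell] = store.get(key)
    if current is None or ts >= current[1]:
        store[key] = (value, ts)


# Scenario: the back-fill read "v1" (written at ts=1) from the source, but before
# it lands, the application dual-writes the newer "v2" (ts=2) to the target.
plain: Dict[str, str] = {}
plain_put(plain, "user#1", "v2")  # dual-write of the newest value
plain_put(plain, "user#1", "v1")  # late back-fill clobbers it: target is now stale

stamped: Dict[str, Cell] = {}
timestamped_put(stamped, "user#1", "v2", ts=2)
timestamped_put(stamped, "user#1", "v1", ts=1)  # ignored: older timestamp
```

With plain writes the target ends up holding the stale `"v1"`; with timestamped writes it keeps `"v2"`, which is the guarantee a database without writer-controlled timestamps cannot give you.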

Wait… does that mean dual-writing in a migration from DynamoDB is just wrong? Of course not! Suppose your DynamoDB table expires records (TTL) every 24 hours. In that case, it doesn't make sense to back-fill your database: simply dual-write and, once the TTL period has passed, switch your readers over. If your TTL is longer (say, a year), then waiting for it to expire won't be the most efficient way to move your data over.
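The TTL-based dual-write strategy can be sketched as a small wrapper. Everything here is hypothetical: `DualWriter`, the dict stand-ins for both databases, and the injectable clock. The sketch assumes that every write sets the item's TTL to "write time + TTL period", so once a full period has elapsed since dual-writing began, every item still alive in the source has also been written to the target. Note that DynamoDB deletes expired items in the background, so expired-but-not-yet-deleted items may still appear in the source for a while.

```python
import time
from typing import Callable, Dict


class DualWriter:
    """Hypothetical wrapper: every application write goes to both databases."""

    def __init__(self, source: Dict[str, str], target: Dict[str, str],
                 ttl_seconds: float, clock: Callable[[], float] = time.time) -> None:
        self.source = source
        self.target = target
        self.ttl_seconds = ttl_seconds
        self.clock = clock
        self.started_at = clock()  # dual-writing begins now

    def put(self, key: str, value: str) -> None:
        # Write to the current source of truth first, then mirror to the target.
        self.source[key] = value
        self.target[key] = value

    def readers_can_switch(self) -> bool:
        # Assuming each write refreshes the item's TTL, after one full TTL period
        # every live source item was written while dual-writing was active.
        return self.clock() - self.started_at >= self.ttl_seconds
```

Injecting the clock keeps the cut-over condition easy to test; in production you would simply use the default `time.time`.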

Back-Filling Historical Data

Whether or not you need to back-fill historical data primarily depends on your use case. That said, it is a mandatory step in most migrations.

There are 3 main ways to back-fill historical data from DynamoDB:

ETL

ETL (extract-transform-load) is essentially what a tool like Apache Spark does. It starts with a Table Scan and reads a single page's worth of results. Those results are then used to infer your source table's schema. Next, it spawns readers to consume from your DynamoDB table, as well as writer workers that ingest the retrieved data into the destination database.
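The reader/writer structure described above can be sketched with DynamoDB's parallel scan. This is not how Spark implements it. It is a minimal sketch assuming `client` is a boto3 DynamoDB client (for example `boto3.client("dynamodb")`), whose `scan` call accepts `TableName`, `Segment`, `TotalSegments`, and `ExclusiveStartKey` and returns `Items` plus an optional `LastEvaluatedKey`. The `transform` and `write_item` callables are hypothetical placeholders for your own transformation and target-database writer, and `write_item` must be safe to call from several threads.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Any, Callable, Dict, Iterator

Item = Dict[str, Any]


def scan_segment(client: Any, table: str, segment: int, total_segments: int) -> Iterator[Item]:
    """Extract: read one parallel-scan segment, following pagination."""
    kwargs: Dict[str, Any] = {"TableName": table, "Segment": segment,
                              "TotalSegments": total_segments}
    while True:
        page = client.scan(**kwargs)
        yield from page.get("Items", [])
        last_key = page.get("LastEvaluatedKey")
        if not last_key:
            return  # this segment has no more pages
        kwargs["ExclusiveStartKey"] = last_key


def etl(client: Any, table: str, total_segments: int,
        transform: Callable[[Item], Item], write_item: Callable[[Item], None]) -> int:
    """One reader thread per segment; items are transformed and loaded as they arrive."""
    def worker(segment: int) -> int:
        count = 0
        for item in scan_segment(client, table, segment, total_segments):
            write_item(transform(item))  # Transform, then Load
            count += 1
        return count

    with ThreadPoolExecutor(max_workers=total_segments) as pool:
        return sum(pool.map(worker, range(total_segments)))
```

Every page read this way still consumes RCUs on the source table, which is exactly the cost concern listed below.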

This approach is great for carrying out simple migrations and also lets you transform (the T in ETL) your data as you go. However, it is prone to some problems. For example:

- Schema inference: DynamoDB tables are schemaless, so it is difficult to infer a schema. Not every table attribute (other than your hash and sort keys) is guaranteed to appear on the first page of the initial scan. Plus, a given Item might not contain all the attributes present in another Item.

- Cost: Since extracting data requires a full DynamoDB table scan, it will inevitably consume RCUs. This ultimately drives up migration costs, and it can also impact your application upstream if DynamoDB runs out of capacity.

- Time: The time it takes to migrate the data is proportional to your data set size. This means that if your migration takes longer than 24 hours, you may be unable to replay directly from DynamoDB Streams afterwards, given that 24 hours is the period for which AWS guarantees the availability of its events.
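The first two pitfalls can be illustrated with a short sketch. `infer_attributes` shows how a schema inferred from the first page alone can miss attributes that only appear later, and `estimate_scan_rcus` gives a rough lower bound on what a full scan costs, based on DynamoDB's rule that a read consumes 1 RCU per 4 KB when strongly consistent and half that when eventually consistent (the Scan default). Both functions are hypothetical helpers for back-of-the-envelope reasoning, not part of any AWS SDK; real consumption is rounded per page and can be somewhat higher.

```python
import math
from typing import Any, Dict, Iterable, List, Set

Item = Dict[str, Any]


def infer_attributes(pages: Iterable[List[Item]]) -> Set[str]:
    """Union of attribute names seen across the given pages of scan results."""
    names: Set[str] = set()
    for page in pages:
        for item in page:
            names.update(item.keys())
    return names


def estimate_scan_rcus(table_bytes: int, strongly_consistent: bool = False) -> float:
    """Rough lower bound: 1 RCU per 4 KB scanned, 0.5 if eventually consistent."""
    units = math.ceil(table_bytes / 4096)
    return units * (1.0 if strongly_consistent else 0.5)


pages = [
    [{"pk": {"S": "1"}, "name": {"S": "Ada"}}],
    [{"pk": {"S": "2"}, "email": {"S": "bob@example.com"}}],  # new attribute
]
first_page_only = infer_attributes(pages[:1])  # misses "email"
all_pages = infer_attributes(pages)
```

For a 10 GiB table, `estimate_scan_rcus(10 * 1024**3)` comes out to 1,310,720 RCUs for a single eventually consistent pass, before any retries.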

Table Scan

A table scan, as the name implies, involves retrieving all records from your source DynamoDB table and only then loading them into your destination database. Unlike the previous ETL approach, where the "Extract" and "Load" pieces are coupled and data gets written as you go, here each step is carried out in separate phases.

The good news is that this method is very easy to wrap your head around. You run a single command, and once it completes, you've got all your data! For example:

```shell
$ aws dynamodb scan --table-name source > output.json
```

You'll then end up with a single JSON file containing all existing Items in your source table, which you can iterate through and write to your destination. Unless you plan to transform your data, you shouldn't need to worry about the schema (since you know beforehand that all Key Attributes are present).
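The scan output stores each attribute in DynamoDB's typed JSON form, such as `{"S": "abc"}` or `{"N": "42"}`, under a top-level `"Items"` array. A minimal sketch for turning `output.json` into plain Python values follows; it is a hand-rolled converter for illustration (boto3 ships a comparable `boto3.dynamodb.types.TypeDeserializer`), and writing each item to your destination is left to your own, hypothetical, writer.

```python
import json
from decimal import Decimal
from typing import Any, Dict, List


def from_dynamodb_json(value: Dict[str, Any]) -> Any:
    """Convert one typed attribute value, e.g. {"S": "abc"}, to a plain Python value."""
    type_tag, raw = next(iter(value.items()))
    if type_tag in ("S", "B", "BOOL"):
        return raw  # binary ("B") stays base64-encoded in this sketch
    if type_tag == "N":
        return Decimal(raw)  # Decimal avoids float rounding on DynamoDB numbers
    if type_tag == "NULL":
        return None
    if type_tag == "M":
        return {k: from_dynamodb_json(v) for k, v in raw.items()}
    if type_tag == "L":
        return [from_dynamodb_json(v) for v in raw]
    if type_tag in ("SS", "BS"):
        return set(raw)
    if type_tag == "NS":
        return {Decimal(n) for n in raw}
    raise ValueError(f"unsupported type tag: {type_tag}")


def load_scan_output(path: str) -> List[Dict[str, Any]]:
    """Read the file produced by `aws dynamodb scan` and return plain items."""
    with open(path, encoding="utf-8") as f:
        dump = json.load(f)
    return [{name: from_dynamodb_json(attr) for name, attr in item.items()}
            for item in dump["Items"]]
```

Usage would be along the lines of `for item in load_scan_output("output.json"): ...`, handing each item to your target database's client.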

This method works very well for small to medium-sized tables but, as with the previous ETL method, it may take considerable time to scan larger tables. And that doesn't account for the time it will take you to parse the output and later load it into the destination.

S3 Data Export

If you have a large dataset or are concerned about RCU consumption and the impact on live traffic, you can rely on exporting DynamoDB data to Amazon S3. This lets you dump your tables' entire contents without impacting your DynamoDB table's performance. In addition, you can request incremental exports later, in case the back-filling process takes longer than 24 hours.

To request a full DynamoDB export to S3, run:

```shell
$ aws dynamodb export-table-to-point-in-time --table-arn arn:aws:dynamodb:REGION:ACCOUNT:table/TABLE_NAME --s3-bucket BUCKET_NAME --s3-prefix PREFIX_NAME --export-format DYNAMODB_JSON
```

The export will then run in the background (assuming the specified S3 bucket exists and point-in-time recovery is enabled on the table, which exports require). To check for its completion, run:

```shell
$ aws dynamodb list-exports --table-arn arn:aws:dynamodb:REGION:ACCOUNT:table/TABLE_NAME
{
    "ExportSummaries": [
        {
            "ExportArn": "arn:aws:dynamodb:REGION:ACCOUNT:table/TABLE_NAME/export/01706834224965-34599c2a",
            "ExportStatus": "COMPLETED",
            "ExportType": "FULL_EXPORT"
        }
    ]
}
```

Once the process is complete, your source table's data will be available in the S3 bucket/prefix specified earlier. Inside it, you will find a directory named AWSDynamoDB, with a structure that resembles something like this:

```shell
$ tree AWSDynamoDB/
AWSDynamoDB/
└── 01706834981181-a5d17203
    ├── _started
    ├── data
    │   ├── 325ukhrlsi7a3lva2hsjsl2bky.json.gz
    │   ├── 4i4ri4vq2u2vzcwnvdks4ze6ti.json.gz
    │   ├── aeqr5obfpay27eyb2fnwjayjr4.json.gz
    │   ├── d7bjx4nl4mywjdldiiqanmh3va.json.gz
    │   ├── dlxgixwzwi6qdmogrxvztxzfiy.json.gz
    │   ├── fuukigkeyi6argd27j25mieigm.json.gz
    │   ├── ja6tteiw3qy7vew4xa2mi6goqa.json.gz
    │   ├── jirrxupyje47nldxw7da52gnva.json.gz
    │   ├── jpsxsqb5tyynlehyo6bvqvpfki.json.gz
    │   ├── mvc3siwzxa7b3jmkxzrif6ohwu.json.gz
    │   ├── mzpb4kukfa5xfjvl2lselzf4e4.json.gz
    │   ├── qs4ria6s5m5x3mhv7xraecfydy.json.gz
    │   ├── u4uno3q3ly3mpmszbnwtzbpaqu.json.gz
    │   ├── uv5hh5bl4465lbqii2rvygwnq4.json.gz
    │   ├── vocd5hpbvmzmhhxz446dqsgvja.json.gz
    │   └── ysowqicdbyzr5mzys7myma3eu4.json.gz
    ├── manifest-files.json
    ├── manifest-files.md5
    ├── manifest-summary.json
    └── manifest-summary.md5

2 directories, 21 files
```

So how do you restore from these files? Well… you need to use the DynamoDB low-level API. Thankfully, you don't have to dig through its details, since AWS provides the LoadS3toDynamoDB sample code as a way to get started. Simply replace its DynamoDB write calls with the writer logic of your target database, and off you go!
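If you would rather not start from the Java sample, reading the export yourself is not much work. The sketch below assumes you have copied the export directory locally (for example with `aws s3 cp --recursive`), and that each file under `data/` is a gzip-compressed file holding one JSON object per line, shaped like `{"Item": {...}}`, which is how DYNAMODB_JSON exports are laid out. Items come back in typed DynamoDB JSON; converting them and writing them to your target database is up to your own, hypothetical, writer.

```python
import gzip
import json
from pathlib import Path
from typing import Any, Dict, Iterator

Item = Dict[str, Any]


def iter_export_items(export_dir: str) -> Iterator[Item]:
    """Yield every Item from a locally downloaded DYNAMODB_JSON export.

    Assumes the layout shown above: <export_dir>/data/*.json.gz, where each
    gzip file contains newline-delimited objects shaped like {"Item": {...}}.
    """
    for path in sorted(Path(export_dir, "data").glob("*.json.gz")):
        with gzip.open(path, "rt", encoding="utf-8") as f:
            for line in f:
                line = line.strip()
                if line:  # skip any trailing blank lines
                    yield json.loads(line)["Item"]
```

Because it is a generator, this streams one item at a time, so even large exports don't need to fit in memory; pointing it at the `01706834981181-a5d17203` directory from the example above would walk all 16 data files.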

Streaming DynamoDB Changes

Whether or not you need to back-fill data, chances are you will want to capture events from DynamoDB to ensure both databases stay in sync with each other.

DynamoDB Streams can be used to capture changes made to your source DynamoDB table. But how do you consume its events?

DynamoDB Streams Kinesis Adapter

AWS provides the DynamoDB Streams Kinesis Adapter to let you process events from DynamoDB Streams through the Amazon Kinesis Client Library (or through consumers built on it, such as the kinesis-asl module in Apache Spark). After the historical data migration, simply stream events from DynamoDB to your target database. From then on, both datastores should be in sync.
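Whatever consumer you use, the per-record logic is the same: each stream record carries an `eventName` of `INSERT`, `MODIFY`, or `REMOVE`, plus a `dynamodb` section with the item's `Keys` and, depending on the stream view type, its `NewImage`. The sketch below applies such records to an in-memory dict standing in for the target database. It is not the Kinesis Adapter's API; it assumes the stream view type includes new images (`NEW_IMAGE` or `NEW_AND_OLD_IMAGES`), and the `key_of` encoding is a hypothetical choice. Records for the same item arrive in order within a shard, so applying them sequentially preserves the latest state.

```python
import json
from typing import Any, Dict

Item = Dict[str, Any]


def key_of(keys: Item) -> str:
    """Hypothetical stable string form of a record's key attributes."""
    return json.dumps(keys, sort_keys=True)


def apply_stream_record(record: Dict[str, Any], target: Dict[str, Item]) -> None:
    """Apply one DynamoDB Streams record to an in-memory stand-in for the target."""
    event = record["eventName"]
    change = record["dynamodb"]
    key = key_of(change["Keys"])
    if event in ("INSERT", "MODIFY"):
        # Upsert the full new image; requires a view type that includes NewImage.
        target[key] = change["NewImage"]
    elif event == "REMOVE":
        target.pop(key, None)
    else:
        raise ValueError(f"unexpected eventName: {event}")
```

Writing full images as upserts also makes the apply step idempotent, which helps when a consumer retries records it has already processed.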

Although this approach may come with a steep learning curve, it is by far the most flexible one. It even lets you consume events from outside the AWS ecosystem (which may be particularly important if you're switching to a different provider).

For more details on this approach, AWS provides a walkthrough on how to consume events from a source DynamoDB table and write them to a destination one.

AWS Lambda

Lambda functions are simple to get started with, handle all checkpointing logic on their own, and integrate seamlessly with the AWS ecosystem. With this approach, you encapsulate your application logic inside a Lambda function. That lets you write events to your destination database without having to deal with Kinesis API logic, such as checkpointing or the number of shards in a stream.
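A Lambda function subscribed to a DynamoDB stream receives a batch of records under `event["Records"]`, each shaped like the stream records described earlier. The following is a minimal sketch, not an official AWS sample: `TARGET`, `upsert`, and `delete` are hypothetical stand-ins for your target database client, and the key encoding is an arbitrary choice. It returns a `batchItemFailures` list, which Lambda only honors when `ReportBatchItemFailures` is enabled on the event source mapping; for stream sources, the `itemIdentifier` is the record's `SequenceNumber`.

```python
import json
from typing import Any, Dict, List

# Hypothetical stand-ins for your target database; replace with real client calls.
TARGET: Dict[str, Dict[str, Any]] = {}


def upsert(key: str, item: Dict[str, Any]) -> None:
    TARGET[key] = item


def delete(key: str) -> None:
    TARGET.pop(key, None)


def lambda_handler(event: Dict[str, Any], context: Any) -> Dict[str, List[Dict[str, str]]]:
    failures: List[Dict[str, str]] = []
    for record in event.get("Records", []):
        change = record["dynamodb"]
        key = json.dumps(change["Keys"], sort_keys=True)  # hypothetical key encoding
        try:
            if record["eventName"] == "REMOVE":
                delete(key)
            else:  # INSERT or MODIFY; assumes the stream includes new images
                upsert(key, change["NewImage"])
        except Exception:
            # Lambda retries the batch from this record, so stop here to keep
            # later records from being applied ahead of the failed one.
            failures.append({"itemIdentifier": change["SequenceNumber"]})
            break
    return {"batchItemFailures": failures}
```

Stopping at the first failure is a deliberately conservative choice: because the retry resumes from that record, everything after it will be delivered again anyway.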

When taking this route, you can load the captured events directly into your target database. Or, if the 24-hour retention limit is a concern, you can stream and retain these records in another service, such as Amazon SQS, and replay them later. The latter approach is well beyond the scope of this article.

For examples of how to get started with Lambda functions, see the AWS documentation.

Final Remarks

Migrating from one database to another requires careful planning and a thorough understanding of every step involved in the process. Further complicating matters, there are many different ways to carry out a migration, and each variation brings its own set of trade-offs and benefits.

This article provided an in-depth look at how a migration from DynamoDB works and how it differs from migrations between other databases. We also discussed different ways to back-fill historical data and stream changes to another database. Finally, we ran through an end-to-end migration, leveraging AWS tools you probably already know. At this point, you should have all the tools and tactics required to carry out a migration on your own.