Efficient data processing is essential for businesses and organizations that rely on big data analytics to make informed decisions. One key factor that significantly affects data processing performance is the format in which the data is stored. This article explores how different storage formats, specifically Parquet, Avro, and ORC, affect query performance and costs in big data environments on Google Cloud Platform (GCP). It provides benchmarks, discusses cost implications, and offers recommendations on selecting the appropriate format for specific use cases.

Introduction to Storage Formats in Big Data

Data storage formats are the backbone of any big data processing environment. They define how data is stored, read, and written, directly affecting storage efficiency, query performance, and data retrieval speed. In the big data ecosystem, columnar formats such as Parquet and ORC and row-based formats such as Avro are widely used because each is optimized for particular kinds of queries and processing tasks.

- Parquet: Parquet is a columnar storage format optimized for read-heavy operations and analytics. It is highly efficient in terms of compression and encoding, making it ideal for scenarios where read performance and storage efficiency are priorities.

- Avro: Avro is a row-based storage format designed for data serialization. It is known for its schema evolution capabilities and is often used for write-heavy operations where data needs to be serialized and deserialized quickly.

- ORC (Optimized Row Columnar): ORC is a columnar storage format similar to Parquet but optimized for both read and write operations. ORC is highly efficient in terms of compression, which reduces storage costs and speeds up data retrieval.
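To see why columnar layouts tend to compress well, the toy model below serializes the same hypothetical records twice, once row by row and once column by column, and compresses both with zlib. It is an illustrative assumption, not how Parquet, ORC, or Avro actually encode data: real columnar formats add dictionary, run-length, and bit-packing encodings before a codec such as Snappy or ZSTD is applied.

```python
import json
import zlib

# Hypothetical sample data: an increasing "id", a low-cardinality "country",
# and an "amount" that cycles through a small range of values.
records = [
    {"id": i, "country": ("US", "DE", "IN")[i % 3], "amount": (i * 7) % 100}
    for i in range(10_000)
]


def encode_rows(rows):
    """Row-oriented: all fields of a record are stored together (conceptually like Avro)."""
    return b"\n".join(json.dumps(r, separators=(",", ":")).encode() for r in rows)


def encode_columns(rows):
    """Column-oriented: all values of a column are stored together (conceptually like Parquet/ORC)."""
    return {name: json.dumps([r[name] for r in rows]).encode() for name in rows[0]}


row_blob = encode_rows(records)
column_blobs = encode_columns(records)

# Compress both layouts with the same general-purpose codec (zlib stands in for
# the codecs real files use; format-specific encodings are not modeled here).
row_compressed = len(zlib.compress(row_blob))
column_compressed = {name: len(zlib.compress(blob)) for name, blob in column_blobs.items()}

print("row layout, compressed bytes:", row_compressed)
print("columnar layout, compressed bytes per column:", column_compressed)
print("columnar layout, total compressed bytes:", sum(column_compressed.values()))
```

On data like this, the low-cardinality `country` column shrinks to a tiny fraction of its raw size because identical values sit next to each other; compare the printed totals to see the effect for the layout as a whole.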

Research Objective

The primary objective of this research is to assess how different storage formats (Parquet, Avro, ORC) affect query performance and costs in big data environments. The article aims to provide benchmarks across various query types and data volumes to help data engineers and architects choose the most suitable format for their specific use cases.

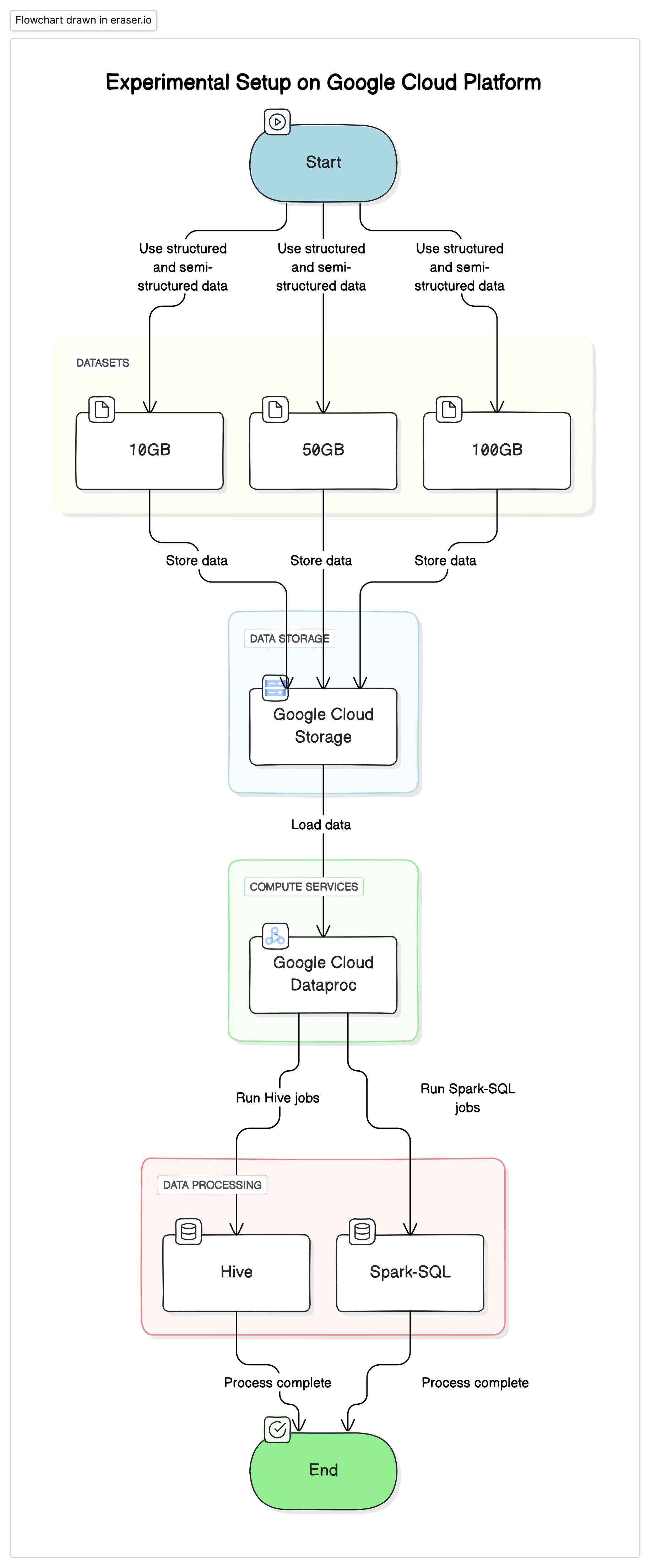

Experimental Setup

To conduct this research, we used a standardized setup on Google Cloud Platform (GCP), with Google Cloud Storage as the data repository and Google Cloud Dataproc for running Hive and Spark SQL jobs. The data used in the experiments was a mix of structured and semi-structured datasets to mimic real-world scenarios.

Key Components

- Google Cloud Storage: Used to store the datasets in each format (Parquet, Avro, ORC).

- Google Cloud Dataproc: A managed Apache Hadoop and Apache Spark service used to run Hive and Spark SQL jobs.

- Datasets: Three datasets of different sizes (10 GB, 50 GB, 100 GB) with mixed data types.

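```python
# Initialize PySpark and set up Google Cloud Storage as a file system
from pyspark.sql import SparkSession

# Hadoop properties are passed through the builder with the "spark.hadoop." prefix so they
# are in place before any gs:// path is opened. On Dataproc, the Cloud Storage connector
# and credentials are preconfigured, so these settings are mainly needed elsewhere.
spark = (
    SparkSession.builder
    .appName("BigDataQueryPerformance")
    .config("spark.jars.packages", "com.google.cloud.bigdataoss:gcs-connector:hadoop3-2.2.5")
    .config("spark.hadoop.fs.gs.impl", "com.google.cloud.hadoop.fs.gcs.GoogleHadoopFileSystem")
    .config("spark.hadoop.fs.gs.auth.service.account.enable", "true")
    .config("spark.hadoop.google.cloud.auth.service.account.json.keyfile",
            "/path/to/your-service-account-file.json")
    .getOrCreate()
)

# Load the same dataset in each format (paths are placeholders; Avro needs the spark-avro module)
parquet_df = spark.read.parquet("gs://your-bucket/dataset.parquet")
avro_df = spark.read.format("avro").load("gs://your-bucket/dataset.avro")
orc_df = spark.read.orc("gs://your-bucket/dataset.orc")
```

Test Queries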

- Simple SELECT queries: Basic retrieval of all columns from a table

- Filter queries: SELECT queries with WHERE clauses to filter specific rows

- Aggregation queries: Queries involving GROUP BY and aggregate functions such as SUM, AVG, etc.

- Join queries: Queries joining two or more tables on a common key
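The article does not show how query times were collected. A minimal timing harness, assuming median wall-clock time over a few repetitions is the metric (a hypothetical choice, not necessarily the one used for the results below), could look like this:

```python
import statistics
import time
from typing import Callable, Dict


def benchmark(queries: Dict[str, Callable[[], object]], repeats: int = 3) -> Dict[str, float]:
    """Run each query `repeats` times and return its median wall-clock time in seconds."""
    results: Dict[str, float] = {}
    for name, run_query in queries.items():
        timings = []
        for _ in range(repeats):
            start = time.perf_counter()
            run_query()  # must force full execution; with Spark, call an action
            timings.append(time.perf_counter() - start)
        results[name] = statistics.median(timings)
    return results


# Stand-in workloads so the sketch runs anywhere. With Spark you would pass, for example,
# {"filter_parquet": lambda: parquet_df.filter(parquet_df.column1 == "some_value").count()}
timings = benchmark({
    "small": lambda: sum(range(10_000)),
    "large": lambda: sum(range(1_000_000)),
})
for name, seconds in timings.items():
    print(f"{name}: {seconds:.4f} s")
```

Spark evaluates lazily, so each callable must trigger an action. `show()` only computes enough rows to print the first 20, which makes it a poor timing target; `count()` or, in Spark 3.x, writing to the `noop` sink (`df.write.format("noop").mode("overwrite").save()`) runs the query over all rows. The median keeps one slow, cold-cache run from dominating the result.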

Results and Analysis

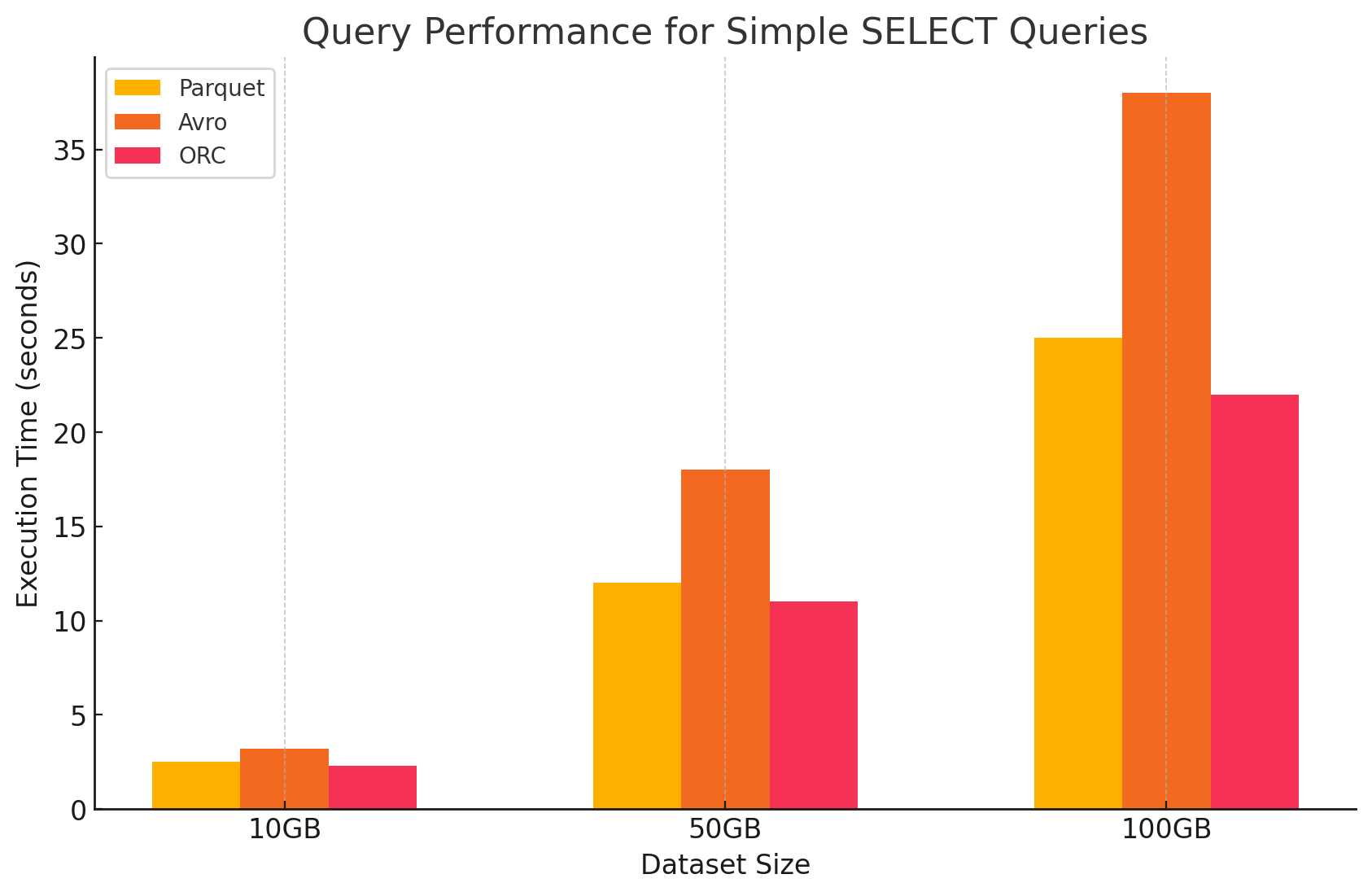

1. Simple SELECT Queries

- Parquet: Parquet performed exceptionally well because of its columnar storage format, which allowed fast scanning of specific columns. Parquet files are highly compressed, reducing the amount of data read from disk, which resulted in faster query execution times.

```python
# Simple SELECT query on the Parquet data
parquet_df.select("column1", "column2").show()
```

- Avro: Avro performed moderately well. Being a row-based format, Avro required reading entire rows even when only specific columns were needed. This increased I/O operations, leading to slower query performance compared to Parquet and ORC.

```sql
-- Simple SELECT query on the Avro data in Hive
-- LOCATION must be a directory containing the Avro files; the schema URL is a placeholder
CREATE EXTERNAL TABLE avro_table
STORED AS AVRO
LOCATION 'gs://your-bucket/dataset.avro'
TBLPROPERTIES ('avro.schema.url'='gs://your-bucket/schemas/dataset.avsc');

SELECT column1, column2 FROM avro_table;
```

- ORC: ORC showed performance similar to Parquet, with slightly better compression and optimized storage techniques that improved read speeds. ORC files are also columnar, making them well suited to SELECT queries that retrieve only specific columns.

```python
# Simple SELECT query on the ORC data
orc_df.select("column1", "column2").show()
```
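The I/O difference behind these results can be sketched with a simple model, assuming a hypothetical table of ten equally sized columns and ignoring compression: a row-based reader scans every column of every row, while a columnar reader touches only the column chunks the query references.

```python
from typing import Dict, Iterable

GIB = 1 << 30


def bytes_scanned(column_sizes: Dict[str, int], selected: Iterable[str], columnar: bool) -> int:
    """Bytes a reader must scan to return `selected` columns from a table (ignores compression)."""
    if columnar:
        # Columnar layout (Parquet, ORC): fetch only the chunks of the referenced columns
        return sum(column_sizes[name] for name in set(selected))
    # Row layout (Avro): every row is read in full, regardless of which columns are selected
    return sum(column_sizes.values())


# Hypothetical table: 10 columns holding 1 GiB of data each
sizes = {f"column{i}": GIB for i in range(1, 11)}
query = ["column1", "column2"]  # mirrors select("column1", "column2") above

print("row-based:", bytes_scanned(sizes, query, columnar=False) // GIB, "GiB")  # row-based: 10 GiB
print("columnar: ", bytes_scanned(sizes, query, columnar=True) // GIB, "GiB")   # columnar:  2 GiB
```

The gap grows with table width: selecting 2 of 100 equally sized columns would scan about 2% of the data in the columnar case.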

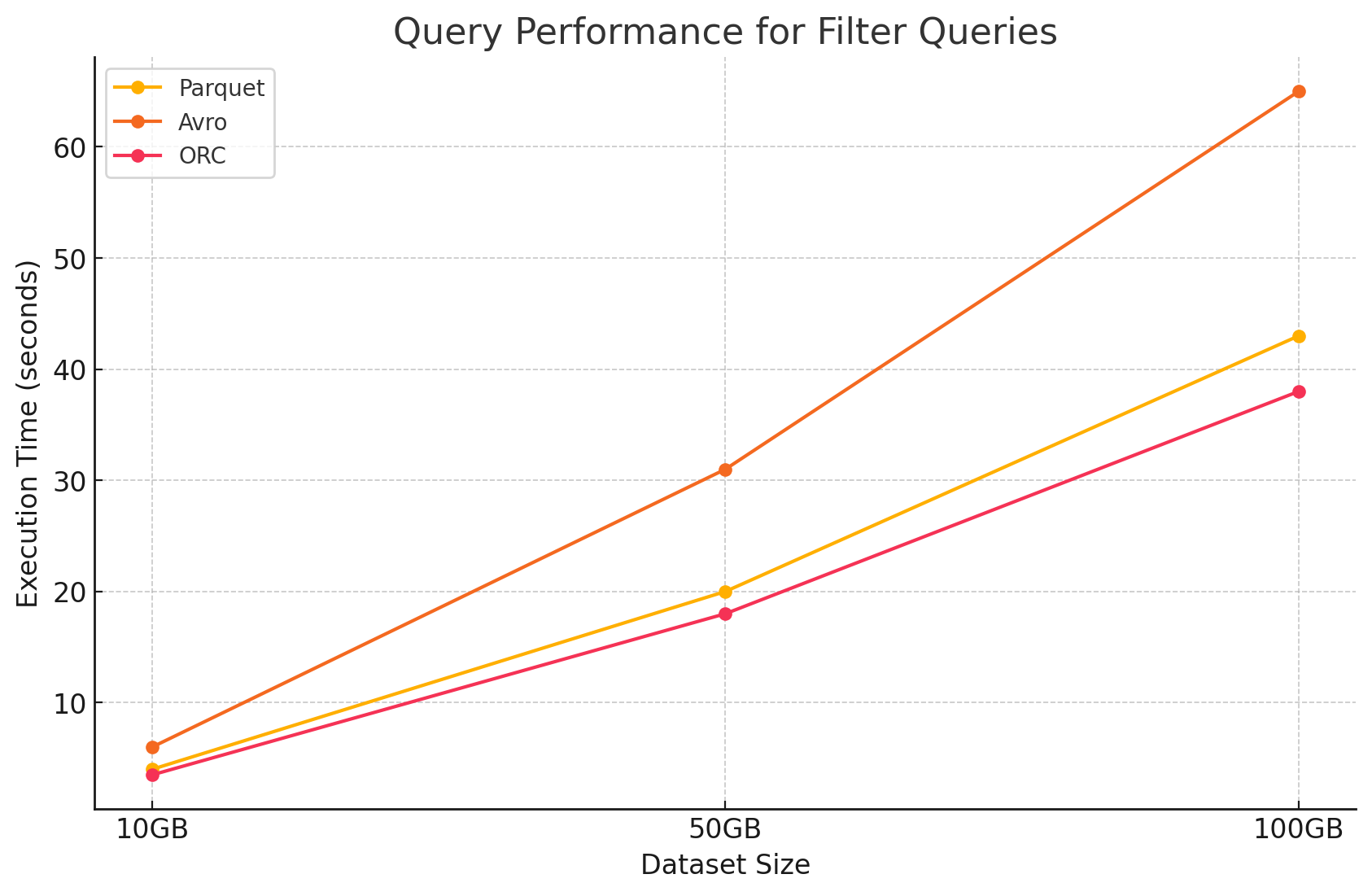

2. Filter Queries

- Parquet: Parquet maintained its performance advantage thanks to its columnar layout and its ability to skip irrelevant columns quickly. However, performance was slightly affected by the need to scan more rows to apply the filters.

```python
# Filter query on the Parquet data
filtered_parquet_df = parquet_df.filter(parquet_df.column1 == "some_value")
filtered_parquet_df.show()
```

- Avro: Performance decreased further because entire rows had to be read and deserialized before the filter could be applied, increasing processing time.

```sql
-- Filter query on the Avro data in Hive
SELECT * FROM avro_table WHERE column1 = 'some_value';
```

- ORC: ORC slightly outperformed Parquet in filter queries thanks to its predicate pushdown, which uses the statistics in ORC's lightweight indexes to skip data that cannot match the filter at the storage level, before it is loaded into memory.

```python
# Filter query on the ORC data
filtered_orc_df = orc_df.filter(orc_df.column1 == "some_value")
filtered_orc_df.show()
```
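Predicate pushdown relies on statistics stored alongside the data. ORC records min/max values per stripe and per row group (every 10,000 rows by default), and Parquet keeps similar statistics per row group. The sketch below is a simplified model of that idea with hypothetical names: a group is skipped whenever its min/max range rules out the filter value.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class RowGroup:
    values: List[int]
    min_value: int  # statistics stored with the data, checked before the values are read
    max_value: int


def build_row_groups(column: List[int], group_size: int) -> List[RowGroup]:
    """Split a column into fixed-size groups and record min/max for each."""
    groups = []
    for start in range(0, len(column), group_size):
        chunk = column[start:start + group_size]
        groups.append(RowGroup(chunk, min(chunk), max(chunk)))
    return groups


def filter_equals(groups: List[RowGroup], target: int) -> Tuple[List[int], int]:
    """Evaluate `column == target`, returning the matches and the number of groups actually read."""
    matches: List[int] = []
    groups_read = 0
    for group in groups:
        if not (group.min_value <= target <= group.max_value):
            continue  # pushdown: the statistics prove no row in this group can match
        groups_read += 1
        matches.extend(v for v in group.values if v == target)
    return matches, groups_read


# Hypothetical sorted column of 100,000 values, grouped in strides of 10,000 rows
column = list(range(100_000))
groups = build_row_groups(column, group_size=10_000)
matches, groups_read = filter_equals(groups, target=42_123)
print(matches, groups_read, "of", len(groups))  # [42123] 1 of 10
```

Skipping only pays off when the data is sorted or clustered on the filtered column. If every group contains the full range of values, each one must still be read.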

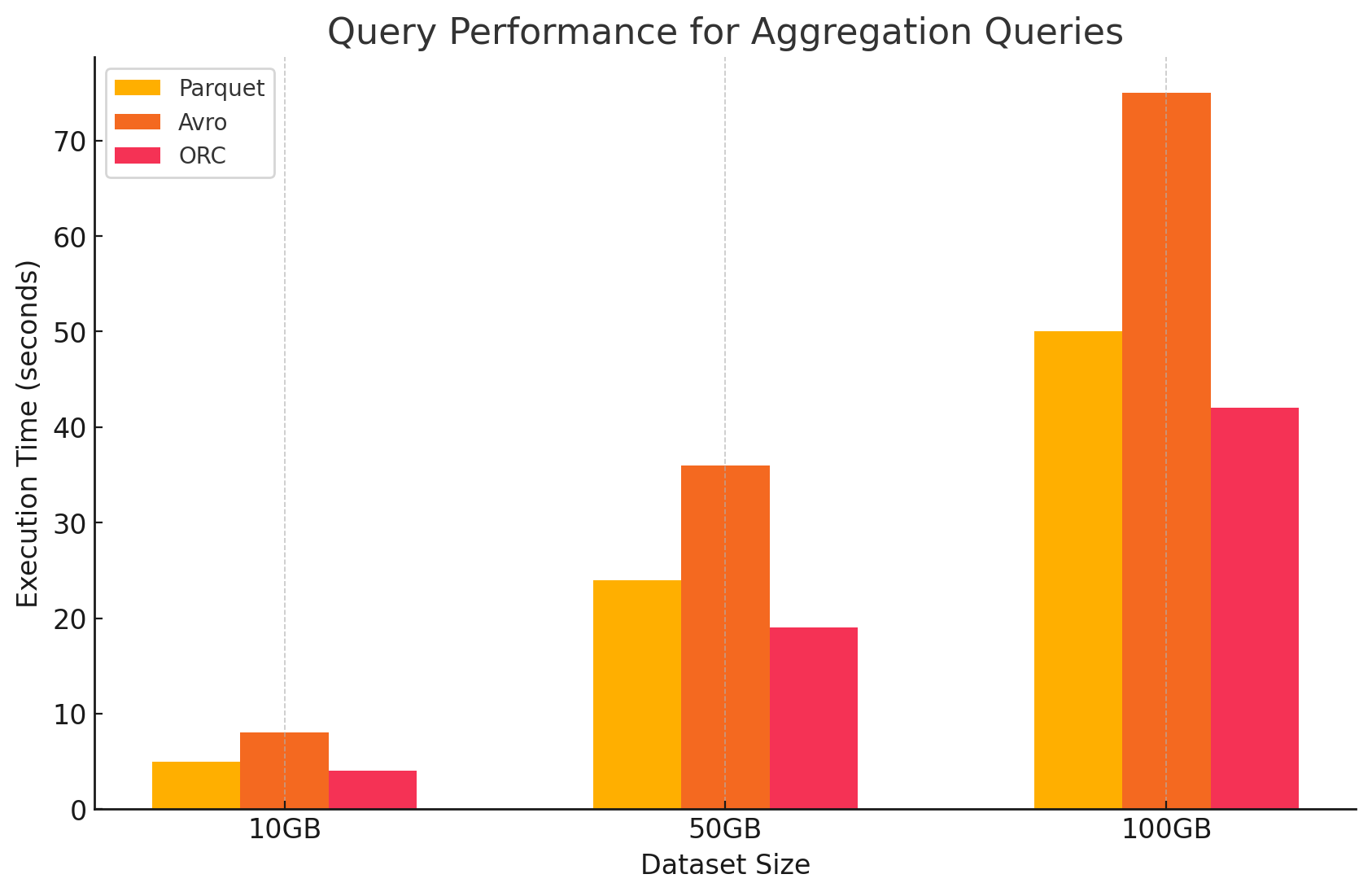

3. Aggregation Queries

- Parquet: Parquet performed well, but slightly less efficiently than ORC. The columnar format benefits aggregation by giving quick access to the required columns, but Parquet lacks some of the built-in optimizations that ORC offers.

```python
# Aggregation query on the Parquet data
agg_parquet_df = parquet_df.groupBy("column1").agg({"column2": "sum", "column3": "avg"})
agg_parquet_df.show()
```

- Avro: Avro lagged behind because of its row-based storage, which required scanning and processing all columns of every row, increasing computational overhead.

```sql
-- Aggregation query on the Avro data in Hive
SELECT column1, SUM(column2), AVG(column3) FROM avro_table GROUP BY column1;
```

- ORC: ORC outperformed both Parquet and Avro in aggregation queries. ORC's indexing and built-in compression enabled faster data access and reduced I/O, making it well suited to aggregation tasks.

```python
# Aggregation query on the ORC data
agg_orc_df = orc_df.groupBy("column1").agg({"column2": "sum", "column3": "avg"})
agg_orc_df.show()
```
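Conceptually, Spark evaluates a `groupBy(...).agg(...)` like the one above in two phases: a partial aggregation over each chunk of data, then a final merge after the shuffle, with `AVG` carried as a running sum and count so partial results can be combined. The plain-Python sketch below models that on hypothetical column chunks; it is an illustration, not Spark's implementation. Only the grouping column and the two aggregated columns are needed, which is exactly what a columnar reader can supply without touching the rest of each row.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple

# Per-key aggregation buffer: (running SUM of column2, running SUM of column3, row count)
Buffer = Tuple[float, float, int]


def partial_aggregate(keys: List[str], col2: List[float], col3: List[float]) -> Dict[str, Buffer]:
    """Aggregate one chunk of data; only the three referenced columns are needed."""
    acc: Dict[str, Buffer] = defaultdict(lambda: (0.0, 0.0, 0))
    for key, v2, v3 in zip(keys, col2, col3):
        s2, s3, n = acc[key]
        acc[key] = (s2 + v2, s3 + v3, n + 1)
    return dict(acc)


def final_aggregate(partials: Iterable[Dict[str, Buffer]]) -> Dict[str, Tuple[float, float]]:
    """Merge partial buffers, then compute SUM(column2) and AVG(column3) per key."""
    total: Dict[str, Buffer] = defaultdict(lambda: (0.0, 0.0, 0))
    for partial in partials:
        for key, (s2, s3, n) in partial.items():
            t2, t3, tn = total[key]
            total[key] = (t2 + s2, t3 + s3, tn + n)
    return {key: (s2, s3 / n) for key, (s2, s3, n) in total.items()}


# Two hypothetical chunks (for example, two files) of column1, column2, column3
p1 = partial_aggregate(["a", "b", "a"], [1.0, 2.0, 3.0], [10.0, 20.0, 30.0])
p2 = partial_aggregate(["b", "a"], [4.0, 5.0], [40.0, 50.0])
print(final_aggregate([p1, p2]))  # {'a': (9.0, 30.0), 'b': (6.0, 30.0)}
```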

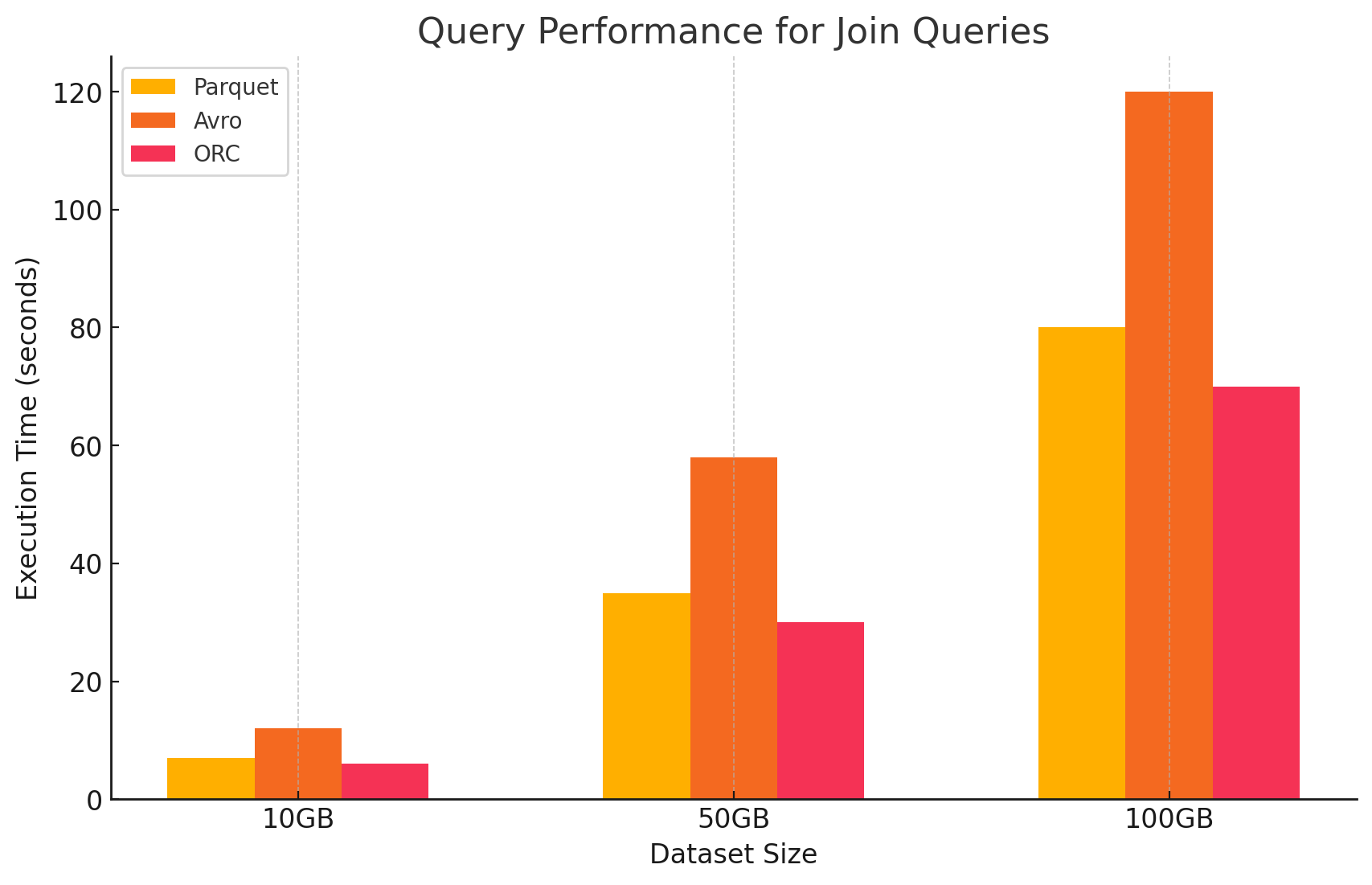

4. Join Queries

- Parquet: Parquet performed well, but not as efficiently as ORC in join operations, owing to less optimized data reading in join scenarios.

```python
# Join query between the Parquet and ORC data
joined_df = parquet_df.join(orc_df, parquet_df.key == orc_df.key)
joined_df.show()
```

- ORC: ORC excelled in join queries, benefiting from its indexing and predicate pushdown capabilities, which minimized the data scanned and processed during join operations.

```python
# Join query between two ORC datasets
# other_orc_df is a second ORC-backed DataFrame; the path is a placeholder
other_orc_df = spark.read.orc("gs://your-bucket/other_dataset.orc")
joined_orc_df = orc_df.join(other_orc_df, orc_df.key == other_orc_df.key)
joined_orc_df.show()
```

- Avro: Avro struggled significantly with join operations, mainly because of the high overhead of reading full rows and the lack of columnar optimizations for join keys.

```sql
-- Join query between Parquet and Avro data in Hive
-- (parquet_table is assumed to be defined like avro_table, but STORED AS PARQUET)
SELECT a.column1, b.column2
FROM parquet_table a
JOIN avro_table b
ON a.key = b.key;
```
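Spark can execute an equi-join as a broadcast hash join, shuffled hash join, or sort-merge join depending on table sizes and configuration. The sketch below shows only the basic hash-join idea on hypothetical in-memory rows: build a hash index on one side, then probe it with the other. Because the rows carry just the join key and the projected columns, it also hints at why a columnar source helps: less data has to be read and held in the index.

```python
from collections import defaultdict
from typing import Any, Dict, List, Tuple

Row = Dict[str, Any]


def hash_join(build_side: List[Row], probe_side: List[Row], key: str) -> List[Tuple[Row, Row]]:
    """Inner equi-join: index the (ideally smaller) build side by key, then probe it row by row."""
    index: Dict[Any, List[Row]] = defaultdict(list)
    for row in build_side:
        index[row[key]].append(row)
    # .get() avoids inserting empty entries for probe keys that have no match
    return [(match, row) for row in probe_side for match in index.get(row[key], [])]


# Hypothetical rows carrying only the join key and the projected columns
a = [{"key": 1, "column1": "x"}, {"key": 2, "column1": "y"}]
b = [{"key": 2, "column2": 20}, {"key": 3, "column2": 30}, {"key": 2, "column2": 21}]
pairs = hash_join(a, b, "key")
print([(left["column1"], right["column2"]) for left, right in pairs])  # [('y', 20), ('y', 21)]
```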

Impact of Storage Format on Costs

1. Storage Efficiency and Cost

- Parquet and ORC (columnar formats)

  - Compression and storage cost: Both Parquet and ORC are columnar storage formats that offer high compression ratios, especially for datasets with many repetitive or similar values within columns. This high compression reduces the overall data size, which in turn lowers storage costs, particularly in cloud environments where storage is billed per GB.

  - Optimal for analytics workloads: Because of their columnar nature, these formats are ideal for analytical workloads where only specific columns are queried frequently. Less data is read from storage, reducing both I/O operations and the associated costs.

- Avro (row-based format)

  - Compression and storage cost: Avro usually provides lower compression ratios than columnar formats such as Parquet and ORC because it stores data row by row, so similar values from the same column are not stored next to each other. This can lead to higher storage costs, especially for large datasets with many columns. The row layout also means entire rows must be read even when only a few columns are needed, which raises processing costs, as discussed below.

  - Better for write-heavy workloads: Although Avro may result in higher storage costs due to lower compression, it is better suited to write-heavy workloads where data is continuously written or appended. The storage cost may be offset by efficiency gains in data serialization and deserialization.

2. Data Processing Performance and Cost

- Parquet and ORC (columnar formats)

  - Reduced processing costs: These formats are optimized for read-heavy operations, which makes them highly efficient for querying large datasets. Because they allow reading only the columns a query needs, they reduce the amount of data processed. This leads to lower CPU usage and faster query execution, which can significantly reduce compute costs in a cloud environment where compute resources are billed by usage.

  - Advanced features for cost optimization: ORC, in particular, includes features such as predicate pushdown and built-in statistics, which allow the query engine to skip reading unnecessary data. This further reduces I/O and speeds up queries, optimizing costs.

- Avro (row-based format)

  - Higher processing costs: Because Avro is a row-based format, it typically requires more I/O to read entire rows even when only a few columns are needed. This can increase computational costs through higher CPU usage and longer query execution times, especially in read-heavy environments.

  - Efficient for streaming and serialization: Despite higher processing costs for queries, Avro is well suited to streaming and serialization tasks where fast write speeds and schema evolution matter more.
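Schema evolution in Avro works because readers resolve the writer's schema against their own. The sketch below is a simplified model of the Avro specification's record-resolution rules, using plain dicts instead of binary data and ignoring type promotion, aliases, and nested types: fields present in both schemas are copied, fields added in the reader's schema take their default, and fields only the writer knew about are dropped. The record and schemas are hypothetical.

```python
from typing import Any, Dict, List


def resolve(record: Dict[str, Any], reader_fields: List[Dict[str, Any]]) -> Dict[str, Any]:
    """Read a record written with an older schema using a newer reader schema (simplified)."""
    resolved: Dict[str, Any] = {}
    for field in reader_fields:
        name = field["name"]
        if name in record:
            resolved[name] = record[name]  # field exists in both schemas
        elif "default" in field:
            resolved[name] = field["default"]  # field added later: use the reader's default
        else:
            raise ValueError(f"field {name!r} is missing and has no default")
    return resolved  # fields only the writer knew about are dropped


# Hypothetical record written with schema v1, read with schema v2
old_record = {"id": 7, "name": "sensor-a", "legacy_flag": True}
reader_v2 = [
    {"name": "id", "type": "long"},
    {"name": "name", "type": "string"},
    {"name": "region", "type": "string", "default": "unknown"},
]
print(resolve(old_record, reader_v2))  # {'id': 7, 'name': 'sensor-a', 'region': 'unknown'}
```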

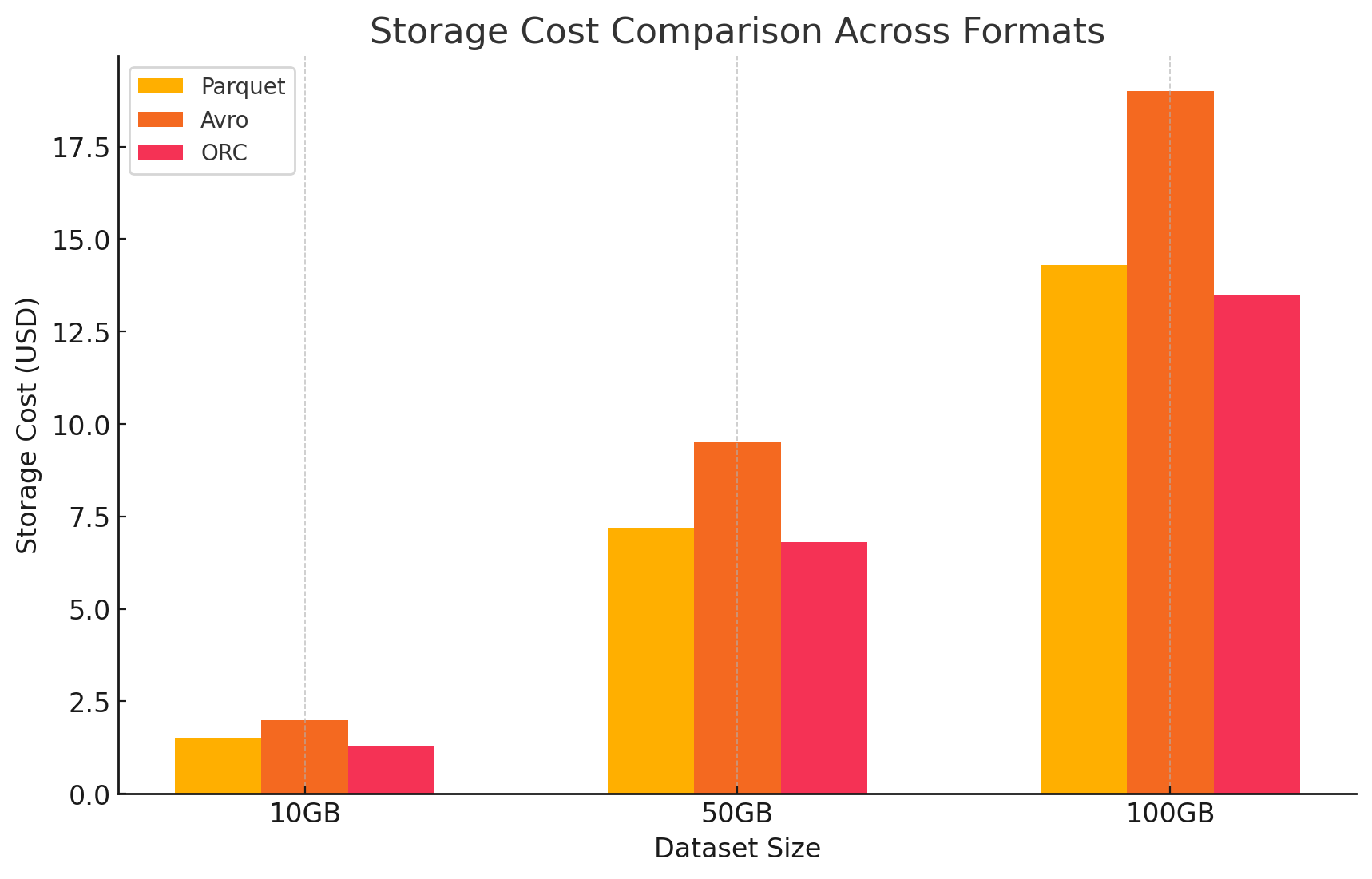

3. Cost Analysis With Pricing Details

- To quantify the cost impact of each storage format, we conducted an experiment on GCP. We calculated the costs of both storage and data processing for each format based on GCP's pricing models.

- Google Cloud Storage costs

  - Storage cost: This is calculated from the amount of data stored in each format. GCP charges per GB per month for data stored in Google Cloud Storage, so the compression ratio each format achieves directly affects these costs. Columnar formats such as Parquet and ORC generally achieve better compression ratios than row-based formats such as Avro, resulting in lower storage costs.

  - Here is a summary of how storage costs compared:

    - Parquet: High compression reduced data size, lowering storage costs.

    - ORC: Like Parquet, ORC's efficient compression also reduced storage costs effectively.

    - Avro: Lower compression efficiency led to higher storage costs compared to Parquet and ORC.
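The storage-cost calculation described above reduces to compressed size times the per-GB monthly price. A minimal sketch, where the price and compression ratios are placeholders rather than the measured results or GCP's current list price:

```python
def monthly_storage_cost(raw_size_gb: float, compression_ratio: float, price_per_gb_month: float) -> float:
    """Monthly storage bill: (raw size / compression ratio) GB x price per GB-month."""
    if compression_ratio <= 0:
        raise ValueError("compression_ratio must be positive")
    return raw_size_gb / compression_ratio * price_per_gb_month


# Placeholder inputs, not measured results: use the current Cloud Storage price for
# your region and storage class, and the compression ratio you observe per format.
PRICE_PER_GB_MONTH = 0.02
for ratio in (2.0, 4.0):
    cost = monthly_storage_cost(100, ratio, PRICE_PER_GB_MONTH)
    print(f"100 GB raw at {ratio}:1 compression -> ${cost:.2f}/month")
```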

```python
# Example of how to save data back to Google Cloud Storage in different formats
# ("gs://your-bucket/..." paths are placeholders)

# Save DataFrame as Parquet
parquet_df.write.parquet("gs://your-bucket/output_parquet")

# Save DataFrame as Avro (requires the external spark-avro module)
avro_df.write.format("avro").save("gs://your-bucket/output_avro")

# Save DataFrame as ORC
orc_df.write.orc("gs://your-bucket/output_orc")
```

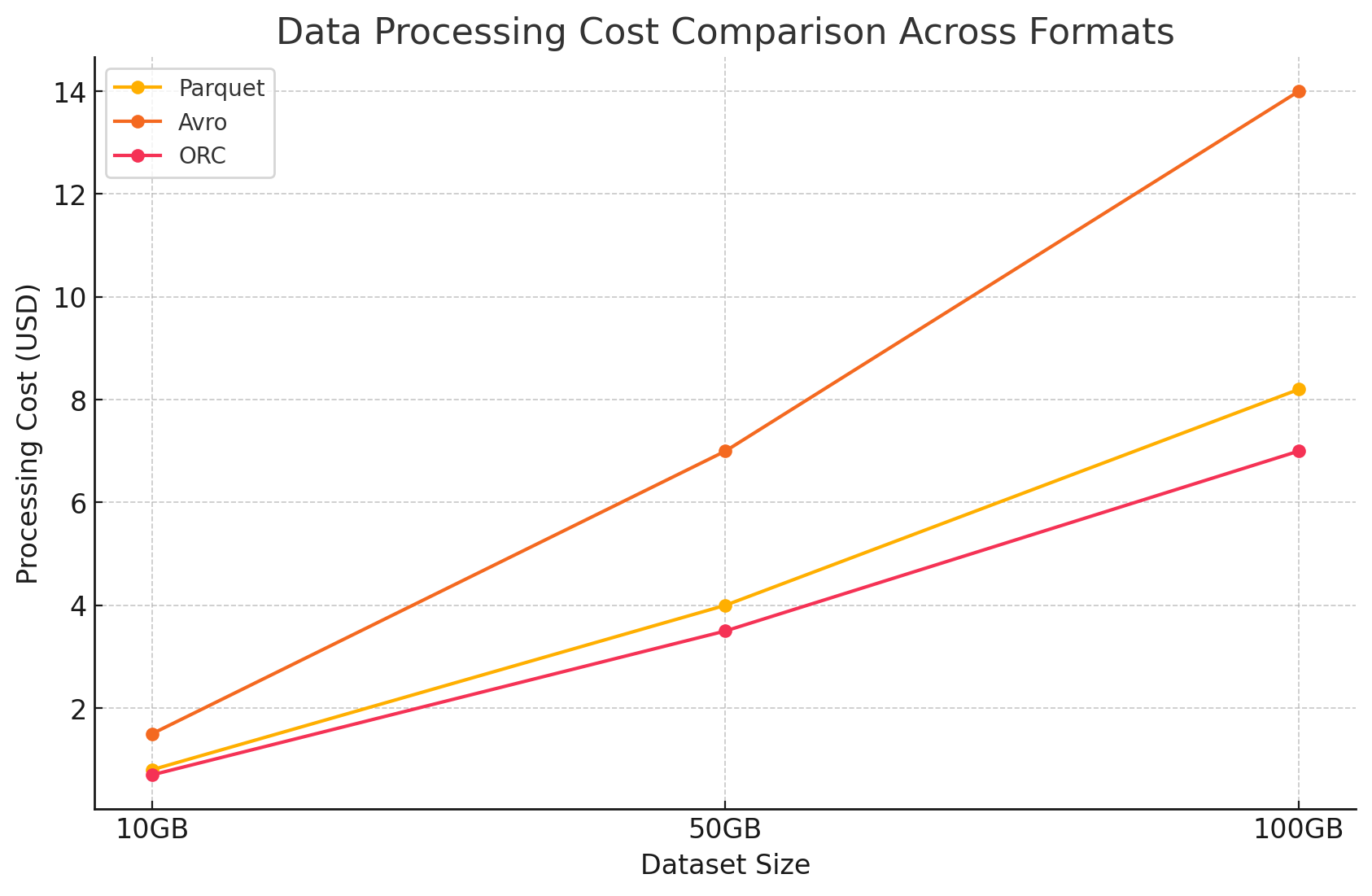

- Data processing costs

  - Data processing costs were calculated from the compute resources required to run the various queries on Dataproc. GCP charges for Dataproc based on the number of vCPUs in the cluster and how long they run, in addition to the cost of the underlying Compute Engine instances.

  - Compute costs:

    - Parquet and ORC: Because of their efficient columnar storage, these formats reduced the amount of data read and processed, leading to lower compute costs. Faster query execution also contributed to cost savings, especially for complex queries involving large datasets.

    - Avro: Avro required more compute resources because of its row-based format, which increased the amount of data read and processed. This led to higher costs, particularly for read-heavy operations.
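The compute side can be estimated the same way. This sketch assumes a simple model in which the cluster is billed a Dataproc fee per vCPU-hour plus a Compute Engine price per node-hour for the time the workload runs; all prices and runtimes are placeholders, and disks, networking, and billing minimums are ignored.

```python
def dataproc_query_cost(num_nodes: int, vcpus_per_node: int, runtime_hours: float,
                        dataproc_fee_per_vcpu_hour: float, vm_price_per_node_hour: float) -> float:
    """Rough cluster cost for the time a workload runs (ignores disks, networking, minimums)."""
    vcpu_hours = num_nodes * vcpus_per_node * runtime_hours
    node_hours = num_nodes * runtime_hours
    return vcpu_hours * dataproc_fee_per_vcpu_hour + node_hours * vm_price_per_node_hour


# Placeholder prices and runtimes for illustration only; check current Dataproc
# and Compute Engine pricing before using numbers like these.
for runtime_hours in (0.5, 1.0):
    cost = dataproc_query_cost(4, 8, runtime_hours,
                               dataproc_fee_per_vcpu_hour=0.01, vm_price_per_node_hour=0.40)
    print(f"{runtime_hours} h on 4 x 8-vCPU nodes -> ${cost:.2f}")
```

Because the estimate is linear in runtime, a format that halves query time halves this part of the bill, which is why faster columnar scans translate directly into lower compute costs.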

Conclusion

The choice of storage format in big data environments significantly affects both query performance and cost. The research and experiments above demonstrate the following key points:

- Parquet and ORC: These columnar formats provide excellent compression, which reduces storage costs. Their ability to read only the necessary columns greatly improves query performance and reduces data processing costs. ORC slightly outperforms Parquet in certain query types thanks to its indexing and optimization features, making it an excellent choice for mixed workloads that require both high read and write performance.

- Avro: While Avro is not as efficient as Parquet and ORC in terms of compression and query performance, it excels in use cases requiring fast write operations and schema evolution. This format is ideal for scenarios involving data serialization and streaming, where write performance and flexibility are prioritized over read efficiency.

- Cost efficiency: In a cloud environment like GCP, where costs are closely tied to storage and compute usage, choosing the right format can lead to significant cost savings. For analytics workloads that are predominantly read-heavy, Parquet and ORC are the most cost-effective options. For applications that require rapid data ingestion and flexible schema management, Avro is a suitable choice despite its higher storage and compute costs.

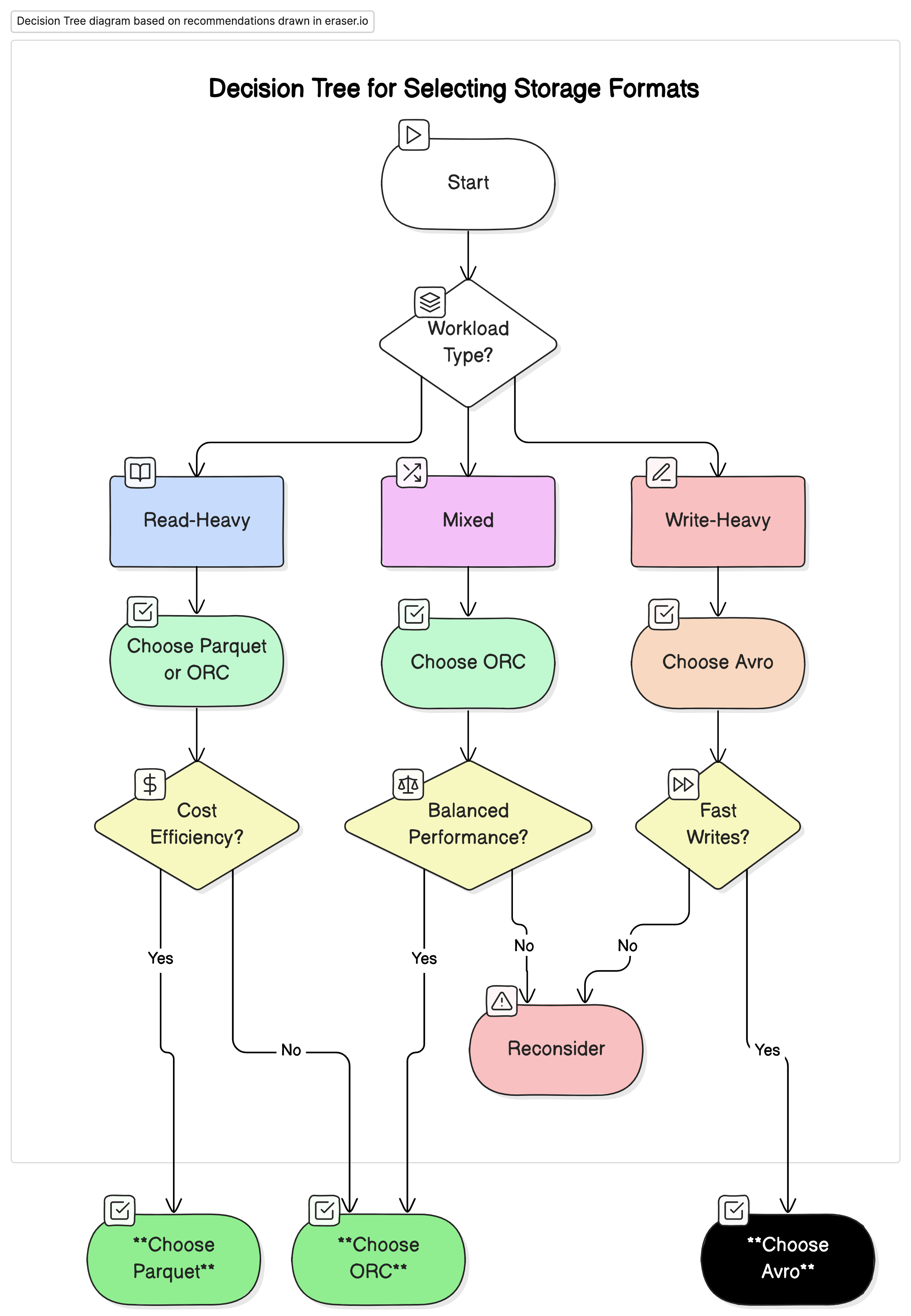

Recommendations

Based on our analysis, we recommend the following:

- For read-heavy analytical workloads: Use Parquet or ORC. These formats provide superior performance and cost efficiency thanks to their high compression and optimized query performance.

- For write-heavy workloads and serialization: Use Avro. It is better suited to scenarios where fast writes and schema evolution are essential, such as data streaming and messaging systems.

- For mixed workloads: ORC offers balanced performance for both read and write operations, making it an ideal choice for environments where data workloads vary.

Final Thoughts

Selecting the right storage format for big data environments is crucial for optimizing both performance and cost. Understanding the strengths and weaknesses of each format allows data engineers to tailor their data architecture to specific use cases, maximizing efficiency and minimizing expenses. As data volumes continue to grow, making informed decisions about storage formats will become increasingly important for maintaining scalable and cost-effective data solutions.

By carefully evaluating the performance benchmarks and cost implications presented in this article, organizations can choose the storage format that best aligns with their operational needs and financial goals.