Why Snowflake?

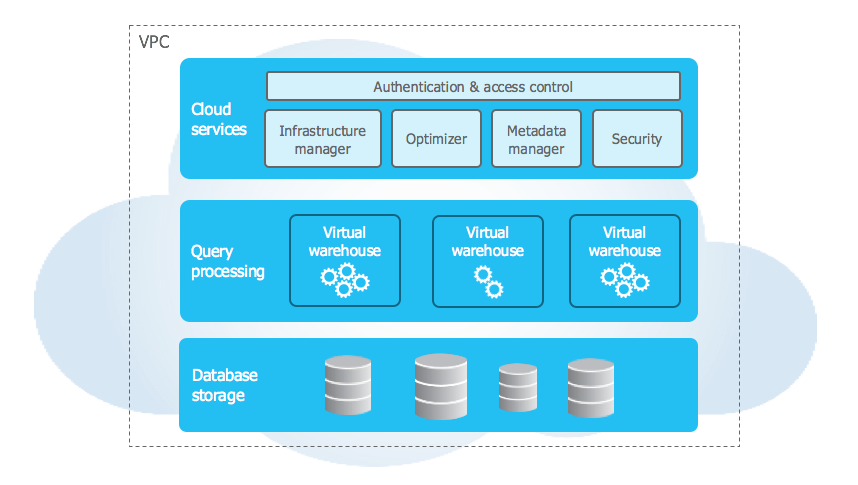

Snowflake is a cloud-based information platform that gives a completely managed service for dealing with data-driven engagements. It’s scalable and is enabled on a number of cloud tenants of AWS, Azure, and GCP.

Snowflake has a singular structure that separates the storage, compute, and repair layers which allows scalable and elastic information processing. This structure allows us to make use of sources of storage, compute, and providers independently and pay as per the utilization.

Snowflake helps MPP structure which permits excessive concurrency with the potential of dealing with a number of workloads and accessing information concurrently. It additionally offers safe information sharing throughout completely different organizations with out creating replicas of the dataset. It presents question efficiency options of Auto question optimization, information indexing, and caching

It offers sturdy security measures of knowledge encryption for information at relaxation and in transit. Position-based entry management (RBAC) with auditing capabilities to make sure that it’s compliant.

Snowflake helps structured (RDBMS), Semi-structured information (JSON, XML), and unstructured information and is nicely built-in with numerous enterprise intelligence, information integration, and analytical workflows.

What Is Streaming?

Streaming refers back to the steady transmission and supply of knowledge akin to movies, audio, and information over a community from supply to vacation spot in a real-time method.

Applied sciences that assist streaming embody Apache Kafka, Apache Flink, Apache Spark Streaming, and Snow Pipe of Snowflake.

What Is Snow Pipe?

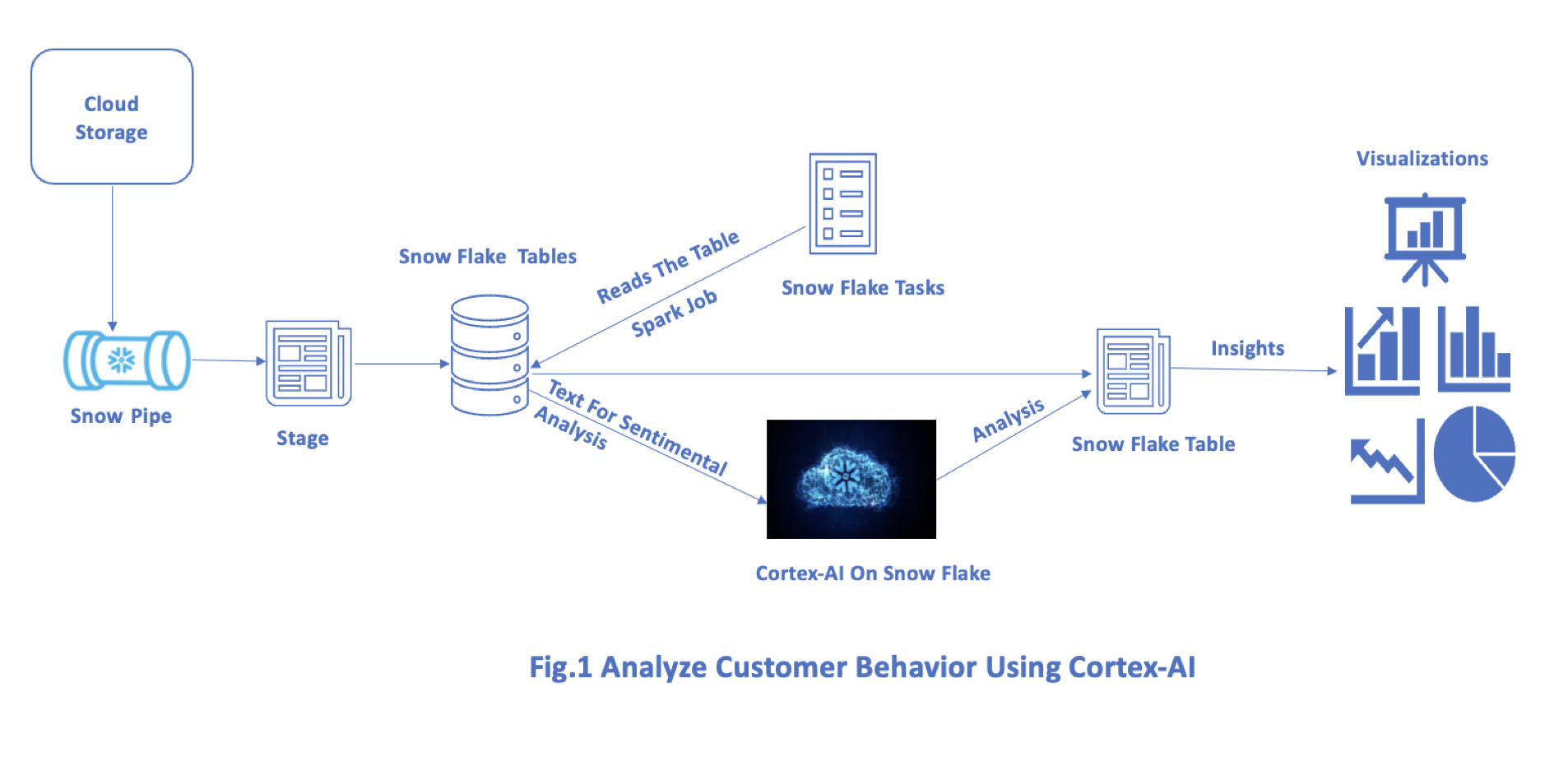

Snow Pipe is a Snowflake service that mechanically ingests information into the Snowflake warehouse from cloud storage akin to Amazon S3, Azure Blob Storage, and Google Cloud Storage with out requiring any handbook intervention.

It seamlessly integrates information from cloud platforms of various varieties and different sizes with an event-driven mechanism up on the file detection within the storage containers with configured SQS queues helps combine the dataset with Snowflake warehouse on a real-time foundation with an auto-scaling mechanism that handles all kinds of payloads with minimal changes thereby decreasing the fee related to the load operations and cut back overheads.

What Is Cortex AI?

It’s an AI platform that gives capabilities of pure language processing (NLP), predictive analytics, Segmenting, and a suggestion system that may be built-in with Snowflake AI through Snow Park to generate real-time insights utilizing Snowflake native capabilities of scheduling & execution, which additional reduces prices related to information motion and integration by processing information and operating AI fashions throughout the built-in platform.

What Is Snowpark?

Snowpark is an SDK(Software program Improvement Equipment) enabled on the Snowflake platform that enables builders to put in writing customized code of their most well-liked languages of Scala, Python, and Java to carry out information processing and transformation actions by leveraging Snowflake’s compute capabilities.

It offers libraries and APIs to work together programmatically with the Snowflake platform and offers efficient insights by integrating with AI functions.

Steps Concerned in Creating Snow-Pipe

1. Put together Your AWS Setup

- Amazon S3 Bucket: Just remember to have an Amazon S3 bucket arrange the place your information information will likely be positioned.

- AWS IAM Position: Create an AWS IAM position that Snowflake can assume to entry your S3 bucket. This position ought to have permission to learn from the S3 bucket.

2. Arrange Snowflake

- Integration: Arrange an integration in Snowflake that defines your AWS S3 particulars (bucket identify, AWS IAM position ARN, and so forth.).

CREATE STORAGE INTEGRATION my_storage_integration

TYPE = EXTERNAL_STAGE

STORAGE_PROVIDER = S3

ENABLED = TRUE

S3_BUCKET = 'my_bucket'

S3_PREFIX = 'snowpipe/kafka/';3. Create a Stage

- Exterior Stage: Create an exterior stage in Snowflake utilizing the mixing created within the earlier step.

CREATE OR REPLACE STAGE kafka_stage

URL = 's3://my_bucket/snowpipe/kafka/'

STORAGE_INTEGRATION = my_storage_integration;4. Create a Snowflake Desk

- Goal Desk: Create a desk in Snowflake the place your information from S3 will likely be loaded.

CREATE OR REPLACE TABLE my_snowflake_table (

column1 STRING,

column2 STRING,

column3 TIMESTAMP

);5. Create a Kafka Integration

Snowflake makes use of Kafka integrations to connect with Kafka subjects and eat messages. Right here’s an instance of tips on how to create a Kafka integration:

CREATE INTEGRATION kafka_integration

TYPE = EXTERNAL_KAFKA

ENABLED = TRUE

KAFKA_BROKER_HOST = 'your.kafka.dealer.com'

KAFKA_BROKER_PORT = 9092

KAFKA_TOPIC_LIST = 'topic1,topic2'

KAFKA_SECURITY_PROTOCOL = 'PLAINTEXT'

KAFKA_AUTO_OFFSET_RESET = 'earliest'

KAFKA_FETCH_MIN_BYTES = 1

KAFKA_POLL_TIMEOUT_MS = 200;

6. Create a Snowpipe

CREATE PIPE my_kafka_pipe

AUTO_INGEST = TRUE

INTEGRATION = kafka_integration

AS

COPY INTO my_snowflake_table

FROM (

SELECT $1::STRING, $2::STRING, $3::TIMESTAMP -- Alter primarily based in your Kafka message construction

FROM @kafka_stage (FILE_FORMAT => 'json_format')

);7. Grant Needed Permissions

- Snowflake Objects: Grant obligatory permissions to the Snowflake objects (integration, stage, desk, and pipe) to the suitable Snowflake roles or customers.

GRANT USAGE ON INTEGRATION my_storage_integration TO ROLE my_role;

GRANT USAGE ON STAGE kafka_stage TO ROLE my_role;

GRANT SELECT, INSERT ON TABLE my_snowflake_table TO ROLE my_role;

GRANT EXECUTE TASK ON PIPE my_kafka_pipe TO ROLE my_role;8. Monitor and Handle Snowpipe

- Monitoring: Monitor the efficiency and standing of your Snowpipe utilizing Snowflake’s UI or by querying the related metadata tables (

PIPE_HISTORY,PIPE_EXECUTION). - Handle: Modify or disable the Snowpipe as wanted utilizing

ALTER PIPEinstructions.

Creating and Integrating Snow Pipe Utilizing SQL

Snowflake SQL To Create a Snowpipe for Ingesting Kafka Knowledge

CREATE PIPE snowpipe_kafka_pipe

AUTO_INGEST = TRUE

AWS_SNS_TOPIC = 'arn:aws:sns:us-west 2:123456789012:snowpipe_notifications'

AS COPY INTO my_kafka_table

FROM @my_external_stage

FILE_FORMAT = (TYPE = 'JSON');Instance Snowflake SQL for Operating Sentiment Evaluation Utilizing Cortex AI

CREATE OR REPLACE PROCEDURE sentiment_analysis_proc()

RETURNS VARIANT

LANGUAGE JAVASCRIPT

EXECUTE AS CALLER

AS

$$

var end result = [];

var stmt = snowflake.createStatement({

sqlText: "SELECT review_text FROM MY_KAFKA_TABLE"

});

var rs = stmt.execute();

whereas (rs.subsequent()) {

var review_text = rs.getColumnValue(1);

// Carry out sentiment evaluation utilizing Cortex AI

var sentiment = cortexAI.predictSentiment(review_text);

end result.push({

review_text: review_text,

sentiment: sentiment

});

}

return end result;

$$;

CALL sentiment_analysis_proc();Code for Sentimental Evaluation and Integrating Kafka Streams Utilizing PySpark

from pyspark.sql import SparkSession

from pyspark.sql.features import col, udf

from cortex_ai_client import CortexAIClientInitialize Spark Session

spark = SparkSession.builder

.appName("KafkaSnowflakeCortexAIIntegration")

.getOrCreate()

Kafka Connection Particulars

kafka_brokers = "kafka_host:port"

Exchange With Your Kafka Dealer Particulars

kafka_topic = "customer_interactions"

- Exchange along with your Kafka Subject

Cortex AI Consumer Initialization

cortex_client = CortexAIClient(api_key='your_api_key')

- Initialize Cortex AI shopper along with your API key

Perform To Carry out Sentiment Evaluation Utilizing Cortex AI

def analyze_sentiment(review_text):

sentiment = cortex_client.predict_sentiment(review_text)

return sentiment

Register UDF for Sentiment Evaluation

analyze_sentiment_udf = udf(analyze_sentiment)

Learn From Kafka Stream

kafka_stream_df = spark

.readStream

.format("kafka")

.choice("kafka.bootstrap.servers", kafka_brokers)

.choice("subscribe", kafka_topic)

.load()Convert Kafka Messages to Strings

kafka_stream_df = kafka_stream_df.selectExpr("CAST(value AS STRING)")

Apply Sentiment Evaluation Utilizing Cortex AI

sentiment_analyzed_df = kafka_stream_df.withColumn("sentiment_score", analyze_sentiment_udf(col("value")))

Outline Snowflake Connection Choices

sfOptions = {

"sfURL": "your_account.snowflakecomputing.com",

"sfAccount": "your_account",

"sfUser": "your_username",

"sfPassword": "your_password",

"sfDatabase": "your_database",

"sfSchema": "your_schema",

"sfWarehouse": "your_warehouse",

"dbtable": "analyzed_customer_interactions", Snowflake Desk To Write Outcomes

"streamName": "kafka_stream_results"

Snowflake Stream Title for Streaming Inserts

}

Write Analyzed Knowledge to Snowflake

question = sentiment_analyzed_df

.writeStream

.format("snowflake")

.choices(**sfOptions)

.choice("checkpointLocation", "/tmp/checkpoint_location")

.begin()

Await Termination (Or Run Indefinitely if Wanted)

question.awaitTermination()

Cease Spark Session

spark.cease()

Schedule Python or PySpark Jobs in Snowflake

- Add your script to Snowflake inner stage: Add your Python or PySpark script to a Snowflake inner stage utilizing the PUT command:

PUT file:///native/path/to/my_python_script.py @~/snowflake_scripts/my_python_script.py;

- Create a Snowflake activity: Create a Snowflake activity that may execute your Python or PySpark script. Duties in Snowflake can execute SQL statements, so you possibly can name a saved process that invokes an exterior script runner (like

PYTHON&PYSPARK SCRIPTS):

CREATE TASK my_python_task

WAREHOUSE = my_warehouse

SCHEDULE = 'USING CRON 0 * * * * UTC'

TIMESTAMP_INPUT_FORMAT = 'YYYY-MM-DD HH24:MI:SS'

AS

CALL execute_external_script('PYTHON_SCRIPT', '@~/snowflake_scripts/my_python_script.py');- Allow and handle your activity: As soon as the duty is created, use the ALTER TASK command to allow it:

ALTER TASK my_python_task RESUME;

You can even use ALTER TASK to disable, modify the schedule, or replace the script executed by the duty.

Conclusion

Leveraging Cortex AI with the Snowflake platform enhances sturdy synergies of superior AI and energy platform capabilities and helps organizations obtain transformative insights from their information with out the complexities of conventional information motion and integration challenges.