In modern software development, effective testing plays a key role in ensuring the reliability and stability of applications.

This article offers practical recommendations for writing integration tests. It shows how to focus on the specifications of interactions with external services, which makes the tests more readable and easier to maintain. The approach not only improves the efficiency of testing but also promotes a better understanding of the integration processes within the application. Through specific examples, it explores several strategies and tools, such as DSL wrappers, JsonAssert, and Pact, giving the reader a practical guide to improving the quality and visibility of integration tests.

The article presents examples of integration tests written with the Spock Framework in Groovy for testing HTTP interactions in Spring applications. The main techniques and approaches it suggests can also be applied to many kinds of interactions beyond HTTP.

Problem Description

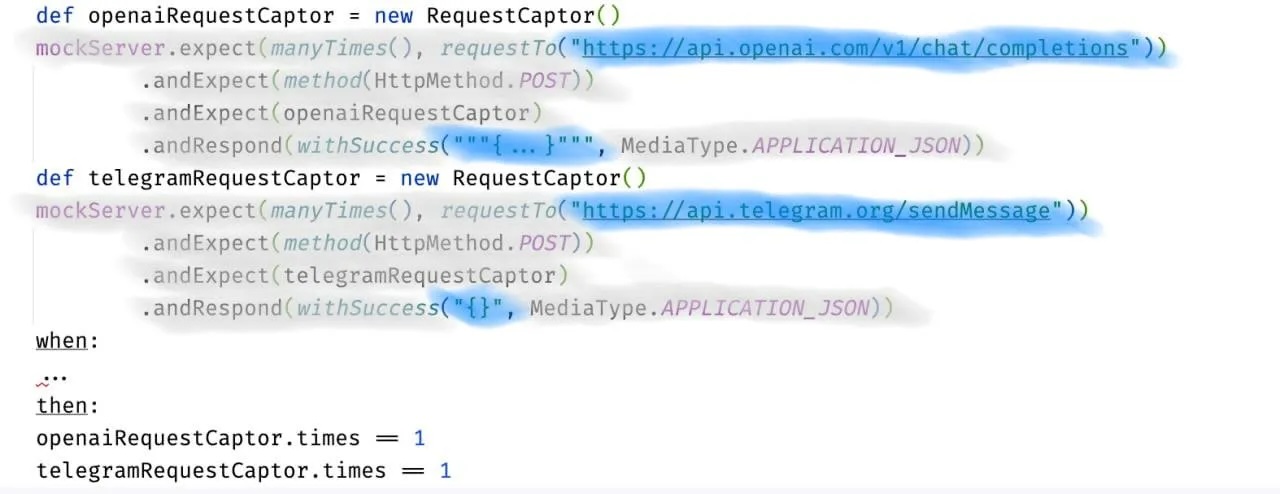

The article Ordering Chaos: Arranging HTTP Request Testing in Spring describes an approach to writing tests with a clear separation into distinct stages, each with its own role. Let's write a test example following those recommendations, but this time mocking two requests instead of one. The Act stage (Execution) is omitted for brevity; a full test example can be found in the project repository.

The code shown is notionally divided into two parts: “Supporting Code” (colored gray) and “Specification of External Interactions” (colored blue).

- The Supporting Code contains the testing mechanisms and utilities, such as intercepting requests and emulating responses. It lays the foundation for testing.
- The Specification of External Interactions describes the concrete data about the external services the system should interact with during the test, including expected requests and responses. It relates directly to the business logic and core functions of the system under test.

The Specification takes up only a small part of the code, yet it carries most of the value for understanding the test. The Supporting Code takes up the larger part, adds less value, and is repeated for every mock declaration. This code is written for MockRestServiceServer. The WireMock version of the example shows the same pattern: the specification is almost identical, and only the Supporting Code differs.

The aim of this article is to offer practical recommendations for writing tests so that the focus is on the specification and the Supporting Code takes a back seat.

Demonstration Scenario

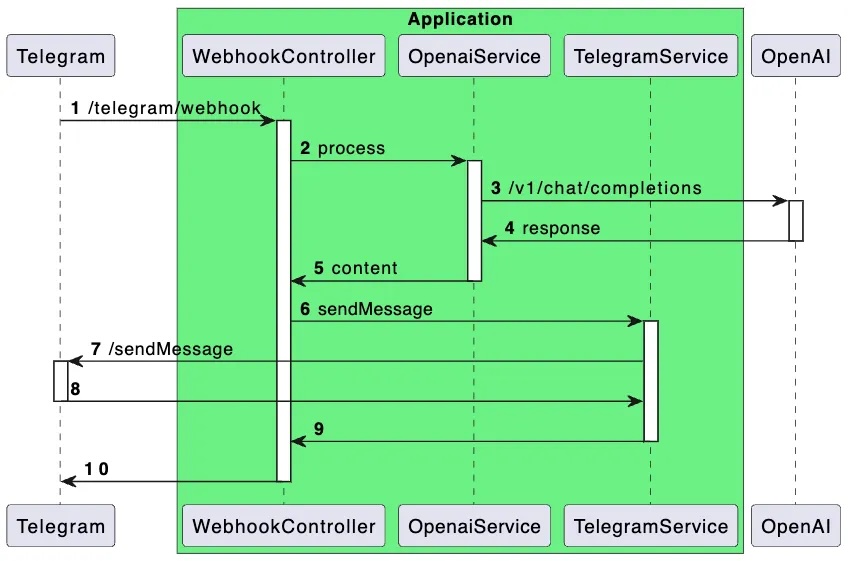

For our test scenario, I propose a hypothetical Telegram bot that forwards requests to the OpenAI API and sends the responses back to users.

The contracts for interacting with the services are simplified to highlight the main logic of the operation. The sequence diagram above shows the application architecture. I understand that the design might raise questions from a systems architecture perspective. Please bear with it: the main goal here is to demonstrate an approach to improving visibility in tests.

Proposal

This article discusses the following practical recommendations for writing tests:

- Using DSL wrappers for working with mocks
- Using JsonAssert for result verification
- Storing the specifications of external interactions in JSON files
- Using Pact files

Using DSL Wrappers for Mocking

A DSL wrapper hides the boilerplate mock code and provides a simple interface for working with the specification. The specific DSL proposed here is not the point; the general approach it implements is. A revised version of the test that uses the DSL is shown below (full test code):

```groovy
setup:
def openaiRequestCaptor = restExpectation.openai.completions(withSuccess("{...}"))
def telegramRequestCaptor = restExpectation.telegram.sendMessage(withSuccess("{}"))

when:
...

then:
openaiRequestCaptor.times == 1
telegramRequestCaptor.times == 1
```

Here, the method restExpectation.openai.completions, for example, is declared as follows:

```java
public interface OpenaiMock {

    /**
     * This method configures the mock request to the following URL: {@code https://api.openai.com/v1/chat/completions}
     */
    RequestCaptor completions(DefaultResponseCreator responseCreator);
}
```

Because the method has a Javadoc comment, hovering over its name in the code editor shows help, including the URL that will be mocked.
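To show what such a wrapper hides, here is a minimal, stdlib-only sketch. It is not the project's implementation, which builds on MockRestServiceServer. Every type here is hypothetical: FakeServer stands in for the mock server, and StubResponseCreator stands in for Spring's ResponseCreator. RequestCaptor, OpenaiMock and RestExpectation only mirror the names used in the tests above.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical stand-in for Spring's ResponseCreator: builds a response body for a request body.
interface StubResponseCreator {
    String create(String requestBody);
}

// Records how often an endpoint was called and the last request body it received.
class RequestCaptor {
    private int times;
    private String bodyString;

    void capture(String body) {
        times++;
        bodyString = body;
    }

    public int getTimes() { return times; }

    public String getBodyString() { return bodyString; }
}

// Hypothetical in-memory server: this is the "Supporting Code" that the DSL hides.
class FakeServer {
    private final Map<String, StubResponseCreator> responses = new HashMap<>();
    private final Map<String, RequestCaptor> captors = new HashMap<>();

    RequestCaptor expect(String url, StubResponseCreator creator) {
        RequestCaptor captor = new RequestCaptor();
        responses.put(url, creator);
        captors.put(url, captor);
        return captor;
    }

    // Called by the code under test instead of a real HTTP client.
    String handle(String url, String body) {
        RequestCaptor captor = captors.get(url);
        if (captor == null) {
            throw new AssertionError("Unexpected request to " + url);
        }
        captor.capture(body);
        return responses.get(url).create(body);
    }
}

// The DSL: one method per external endpoint, with the URL kept in a single place.
class OpenaiMock {
    private final FakeServer server;

    OpenaiMock(FakeServer server) { this.server = server; }

    /** Configures the mock for {@code https://api.openai.com/v1/chat/completions}. */
    RequestCaptor completions(StubResponseCreator responseCreator) {
        return server.expect("https://api.openai.com/v1/chat/completions", responseCreator);
    }
}

// Entry point mirroring restExpectation.openai.completions(...) from the tests.
class RestExpectation {
    final OpenaiMock openai;

    RestExpectation(FakeServer server) { this.openai = new OpenaiMock(server); }
}
```

The test only ever touches restExpectation.openai.completions(...) and the returned captor. In Groovy, captor.times resolves to getTimes(), which matches the syntax used in the Spock examples.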

In the proposed implementation, the mock's response is declared with ResponseCreator instances, so custom creators can be used as well, for example:

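```java
import org.springframework.test.web.client.ResponseCreator;
import org.springframework.web.client.ResourceAccessException;

// Simulates a network-level failure instead of returning an HTTP response
public static ResponseCreator withResourceAccessException() {
    return (request) -> {
        throw new ResourceAccessException("Error");
    };
}
```

An example test for failure scenarios, parameterized with a set of responses, is shown below: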

```groovy
import static org.springframework.http.HttpStatus.FORBIDDEN

setup:
def openaiRequestCaptor = restExpectation.openai.completions(openaiResponse)
def telegramRequestCaptor = restExpectation.telegram.sendMessage(withSuccess("{}"))

when:
...

then:
openaiRequestCaptor.times == 1
telegramRequestCaptor.times == 0

where:
openaiResponse                | _
withResourceAccessException() | _
withStatus(FORBIDDEN)         | _
```

For WireMock, everything is the same except that the response is built slightly differently (test code, response factory class code).

Using the @Language("JSON") Annotation for Better IDE Integration

When implementing a DSL, you can annotate method parameters with @Language("JSON") to enable language support for those code snippets in IntelliJ IDEA. With JSON, for example, the editor treats the string parameter as JSON code. This enables syntax highlighting, auto-completion, error checking, navigation, and structural search. Here's an example of the annotation in use:

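```java
import static org.springframework.http.MediaType.APPLICATION_JSON;

import org.intellij.lang.annotations.Language;
import org.springframework.test.web.client.response.DefaultResponseCreator;
import org.springframework.test.web.client.response.MockRestResponseCreators;

// IntelliJ IDEA treats string literals passed as "body" as JSON
public static DefaultResponseCreator withSuccess(@Language("JSON") String body) {
    return MockRestResponseCreators.withSuccess(body, APPLICATION_JSON);
}
```

Here's how it looks in the editor: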

Using JsonAssert for Result Verification

The JSONAssert library is designed to simplify testing of JSON structures. It lets developers compare expected and actual JSON strings with a high degree of flexibility and supports several comparison modes.

This makes it possible to move from a verification like this:

```groovy
openaiRequestCaptor.body.model == "gpt-3.5-turbo"
openaiRequestCaptor.body.messages.size() == 1
openaiRequestCaptor.body.messages[0].role == "user"
openaiRequestCaptor.body.messages[0].content == "Hello!"
```

to something like this:

```groovy
import static org.skyscreamer.jsonassert.JSONAssert.assertEquals

assertEquals("""{
    "model": "gpt-3.5-turbo",
    "messages": [{
        "role": "user",
        "content": "Hello!"
    }]
}""", openaiRequestCaptor.bodyString, false)
```

The third argument, false, turns off strict mode. JSONAssert then compares leniently, so extra fields in the actual body or a different order of array elements do not fail the assertion.

In my opinion, the main advantage of the second approach is that it keeps the data representation consistent across contexts: documentation, logs, and tests. This significantly simplifies testing, offering flexible comparison and precise error diagnostics. As a result, we not only save time writing and maintaining tests but also make them more readable and informative.

When working with Spring Boot (at least from version 2 onward), no additional dependencies are needed to use the library, because org.springframework.boot:spring-boot-starter-test already depends on org.skyscreamer:jsonassert.
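To make the effect of the false argument in the assertion above concrete, here is a minimal sketch of lenient comparison semantics. It assumes the JSON has already been parsed into Map (objects), List (arrays) and scalar values. LenientJson is a hypothetical helper, not part of JSONAssert, which parses the strings itself and produces detailed failure messages.

```java
import java.util.List;
import java.util.Map;
import java.util.Objects;

// Hypothetical sketch of lenient JSON comparison on already-parsed values:
// objects as Map, arrays as List, scalars as String/Number/Boolean/null.
final class LenientJson {
    private LenientJson() {}

    static boolean matches(Object expected, Object actual) {
        if (expected instanceof Map && actual instanceof Map) {
            Map<?, ?> exp = (Map<?, ?>) expected;
            Map<?, ?> act = (Map<?, ?>) actual;
            // Extensible: every expected field must match; extra actual fields are ignored.
            for (Map.Entry<?, ?> e : exp.entrySet()) {
                if (!act.containsKey(e.getKey()) || !matches(e.getValue(), act.get(e.getKey()))) {
                    return false;
                }
            }
            return true;
        }
        if (expected instanceof List && actual instanceof List) {
            List<?> exp = (List<?>) expected;
            List<?> act = (List<?>) actual;
            // Arrays must have the same length, but element order does not matter.
            return exp.size() == act.size() && matchUnordered(exp, act, 0, new boolean[act.size()]);
        }
        return Objects.equals(expected, actual);
    }

    // Backtracking search for a one-to-one pairing of expected and actual elements.
    private static boolean matchUnordered(List<?> exp, List<?> act, int i, boolean[] used) {
        if (i == exp.size()) {
            return true;
        }
        for (int j = 0; j < act.size(); j++) {
            if (!used[j] && matches(exp.get(i), act.get(j))) {
                used[j] = true;
                if (matchUnordered(exp, act, i + 1, used)) {
                    return true;
                }
                used[j] = false;
            }
        }
        return false;
    }
}
```

Backtracking is used instead of a greedy match because, with extensible objects, one expected element can match several actual ones. Numbers are compared with equals, so the parser must produce consistent numeric types (for example, 1 and 1L would not match).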

Storing the Specification of External Interactions in JSON Files

One thing you will notice is that JSON strings take up a significant portion of the test. Should they be hidden? Yes and no; it depends on which brings more benefit. Hiding them makes tests more compact and easier to grasp at first glance. On the other hand, during a thorough review, some of the important information about the external interaction specification is then hidden, which forces extra jumps between files. The decision comes down to convenience: do what is more comfortable for you.

If you choose to store JSON strings in files, one simple option is to keep responses and requests in separate JSON files. Below is test code (full version) demonstrating one implementation option:

```groovy
setup:
def openaiRequestCaptor = restExpectation.openai.completions(withSuccess(fromFile("json/openai/response.json")))
def telegramRequestCaptor = restExpectation.telegram.sendMessage(withSuccess("{}"))

when:
...

then:
openaiRequestCaptor.times == 1
telegramRequestCaptor.times == 1
```

The fromFile method simply reads a string from a file in the src/test/resources directory. It contains no revolutionary ideas, but it is available in the project repository for reference.

For the variable parts of the string, I suggest substitution with org.apache.commons.text.StringSubstitutor, passing a set of values when describing the mock. For example:

```groovy
setup:
def openaiRequestCaptor = restExpectation.openai.completions(withSuccess(fromFile("json/openai/response.json",
        [content: "Hello! How can I assist you today?"])))
```

The part of the JSON file that uses substitution looks like this:

```json
...
"message": {
    "role": "assistant",
    "content": "${content:-Hello there, how may I assist you today?}"
},
...
```

The main challenge for developers adopting the file storage approach is designing a sensible layout and naming scheme for the files in the test resources. It is easy to make a mistake here that makes working with these files more painful. One solution is to use an existing specification format, such as Pact's, which is discussed later.

When using this approach in tests written in Groovy, you may run into an inconvenience: IntelliJ IDEA does not support navigating from the code to the file, although support for this is expected in the future. In tests written in Java, this works well.
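The fromFile helper and the ${name:-default} placeholders described above can be sketched with the standard library alone. This is a hypothetical reimplementation, not the repository's code or StringSubstitutor itself. It reads files from src/test/resources through the file system (the real helper may load from the classpath instead) and supports only the ${name} and ${name:-default} forms. Those forms mirror StringSubstitutor's default syntax.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.Collections;
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical fixture loader with StringSubstitutor-like placeholders.
final class JsonFixtures {
    // Matches ${name} or ${name:-default}.
    private static final Pattern PLACEHOLDER = Pattern.compile("\\$\\{([^}:]+)(?::-([^}]*))?\\}");
    private static final Path ROOT = Paths.get("src", "test", "resources");

    private JsonFixtures() {}

    static String fromFile(String relativePath) {
        return fromFile(relativePath, Collections.<String, String>emptyMap());
    }

    static String fromFile(String relativePath, Map<String, String> values) {
        try {
            byte[] bytes = Files.readAllBytes(ROOT.resolve(relativePath));
            return substitute(new String(bytes, StandardCharsets.UTF_8), values);
        } catch (IOException e) {
            throw new UncheckedIOException("Cannot read fixture " + relativePath, e);
        }
    }

    static String substitute(String template, Map<String, String> values) {
        Matcher m = PLACEHOLDER.matcher(template);
        StringBuffer out = new StringBuffer();
        while (m.find()) {
            String name = m.group(1);
            // Use the passed value if present, otherwise the default after ":-".
            String value = values.containsKey(name) ? values.get(name) : m.group(2);
            if (value == null) {
                // Unknown variable without a default: leave the placeholder untouched.
                value = m.group();
            }
            // quoteReplacement keeps "$" and "\" in values literal.
            m.appendReplacement(out, Matcher.quoteReplacement(value));
        }
        m.appendTail(out);
        return out.toString();
    }
}
```

Note that values are inserted verbatim, without JSON escaping. A substituted value that contains quotes or backslashes would therefore need escaping before it is passed in.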

Using Pact Contract Files

Let’s start with the terminology.

Contract testing is a method of testing integration points in which each application is tested in isolation. The goal is to confirm that the messages it sends or receives conform to a shared understanding documented in a “contract.” This ensures that interactions between different parts of the system meet expectations.

A contract, in the context of contract testing, is a document or specification that records an agreement on the format and structure of the messages (requests and responses) exchanged between applications. It serves as the basis for verifying that each application can correctly process the data sent and received by the others in the integration.

The contract is established between a consumer (for example, a client that wants to retrieve some data) and a provider (for example, a server API that provides the data the client needs).

Consumer-driven testing is an approach to contract testing in which consumers generate contracts during their automated test runs. These contracts are passed to the provider, which then runs its own set of automated tests. Each request in the contract file is sent to the provider, and the response received is compared with the expected response specified in the contract file. If the responses match, the consumer and the provider are compatible.
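The provider-side verification step just described can be sketched as a simple replay loop. This is a deliberately simplified, hypothetical model and not Pact's API. Real Pact verification also handles provider states and compares bodies using matching rules (for example, by type or regular expression) rather than exact strings. ProviderVerification and its nested types are invented for illustration.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Objects;
import java.util.function.Function;

// Hypothetical, simplified model of provider verification in consumer-driven contract testing.
final class ProviderVerification {
    static final class Request {
        final String method;
        final String path;
        final String body;

        Request(String method, String path, String body) {
            this.method = method;
            this.path = path;
            this.body = body;
        }
    }

    static final class Response {
        final int status;
        final String body;

        Response(int status, String body) {
            this.status = status;
            this.body = body;
        }
    }

    // One contract entry: the consumer's request and the response it expects back.
    static final class Interaction {
        final String description;
        final Request request;
        final Response expected;

        Interaction(String description, Request request, Response expected) {
            this.description = description;
            this.request = request;
            this.expected = expected;
        }
    }

    private ProviderVerification() {}

    // Replays every contract request against the provider and collects mismatches;
    // an empty result means the provider satisfies this contract.
    static List<String> verify(List<Interaction> contract, Function<Request, Response> provider) {
        List<String> failures = new ArrayList<>();
        for (Interaction interaction : contract) {
            Response actual = provider.apply(interaction.request);
            if (actual.status != interaction.expected.status
                    || !Objects.equals(actual.body, interaction.expected.body)) {
                failures.add(interaction.description + ": expected " + interaction.expected.status
                        + " " + interaction.expected.body + " but got " + actual.status + " " + actual.body);
            }
        }
        return failures;
    }
}
```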

Finally, Pact is a tool that implements consumer-driven contract testing. It supports testing both HTTP integrations and message-based integrations and focuses on code-first test development.

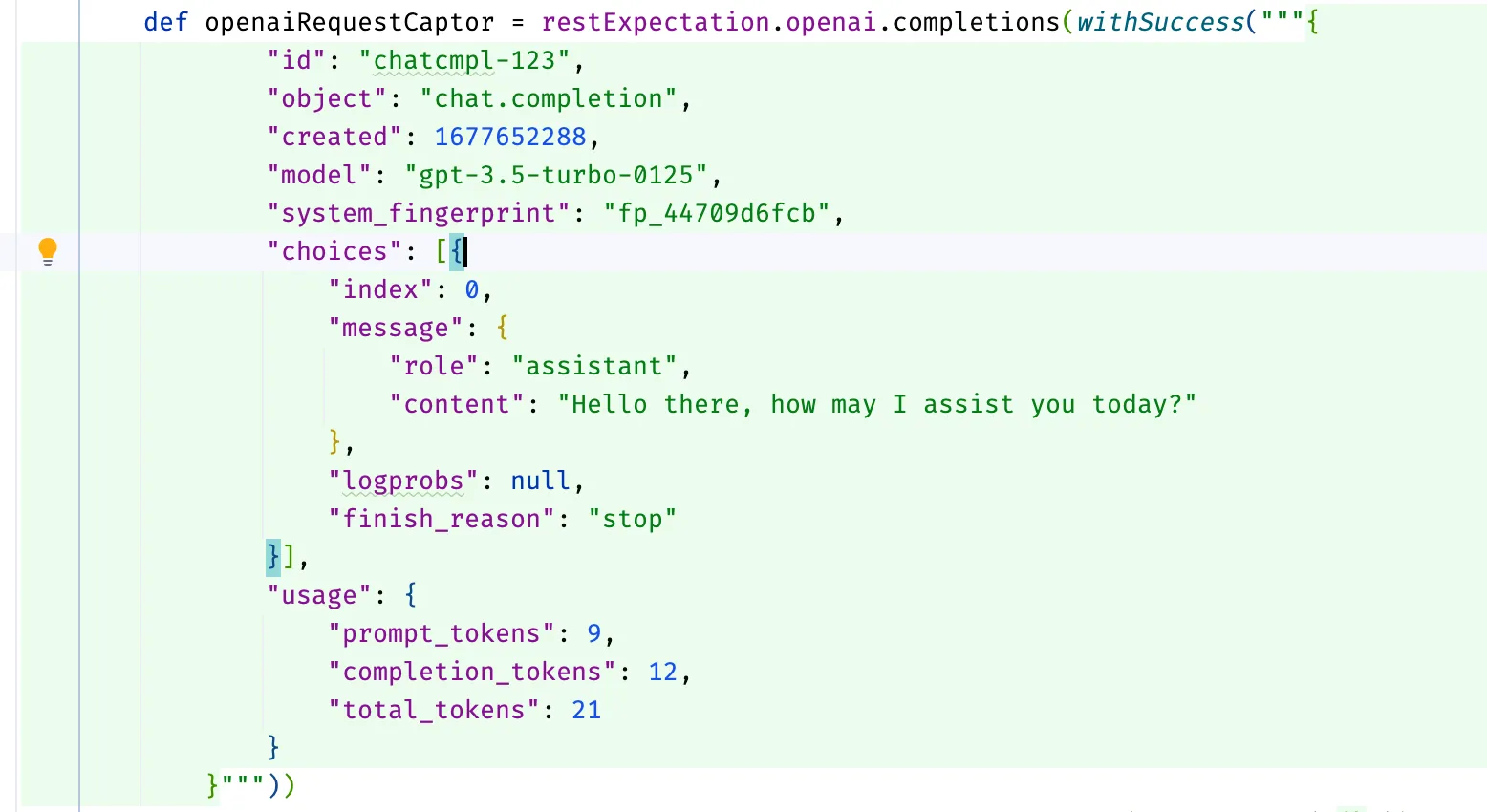

As mentioned earlier, we can use Pact's contract specifications and tooling for our task. The implementation might look like this (full test code):

```groovy
setup:
def openaiRequestCaptor = restExpectation.openai.completions(fromContract("openai/SuccessfulCompletion-Hello.json"))
def telegramRequestCaptor = restExpectation.telegram.sendMessage(withSuccess("{}"))

when:
...

then:
openaiRequestCaptor.times == 1
telegramRequestCaptor.times == 1
```

The contract file is available for review.

The advantage of contract files is that they contain more than the request and response bodies. They also hold the other parts of the external interaction specification: the request path, headers, and HTTP response status. This makes it possible to describe a mock completely from the contract alone.
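A mock driven entirely by a contract can be sketched as follows. This is a hypothetical, stdlib-only model of what fromContract might produce, not the project's code. ContractInteraction mirrors the request (method, path, headers) and response (status, headers, body) parts of one Pact interaction. Reading the Pact JSON file and applying Pact's matching rules are both omitted.

```java
import java.util.Collections;
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical in-memory form of one Pact interaction; reading the JSON file is omitted.
final class ContractInteraction {
    final String method;
    final String path;
    final Map<String, String> requestHeaders;
    final int status;
    final Map<String, String> responseHeaders;
    final String responseBody;

    ContractInteraction(String method, String path, Map<String, String> requestHeaders,
                        int status, Map<String, String> responseHeaders, String responseBody) {
        this.method = method;
        this.path = path;
        this.requestHeaders = new LinkedHashMap<>(requestHeaders);
        this.status = status;
        this.responseHeaders = new LinkedHashMap<>(responseHeaders);
        this.responseBody = responseBody;
    }
}

// What the stub returns to the code under test.
final class StubResponse {
    final int status;
    final Map<String, String> headers;
    final String body;

    StubResponse(int status, Map<String, String> headers, String body) {
        this.status = status;
        this.headers = Collections.unmodifiableMap(headers);
        this.body = body;
    }
}

// Mock built from the contract: checks the request side, replays the response side.
final class ContractStub {
    private final ContractInteraction contract;
    private int times;

    ContractStub(ContractInteraction contract) {
        this.contract = contract;
    }

    StubResponse handle(String method, String path, Map<String, String> headers) {
        if (!contract.method.equalsIgnoreCase(method) || !contract.path.equals(path)) {
            throw new AssertionError("Expected " + contract.method + " " + contract.path
                    + " but got " + method + " " + path);
        }
        for (Map.Entry<String, String> expected : contract.requestHeaders.entrySet()) {
            String actual = headerValue(headers, expected.getKey());
            if (!expected.getValue().equals(actual)) {
                throw new AssertionError("Header " + expected.getKey() + ": expected "
                        + expected.getValue() + " but got " + actual);
            }
        }
        times++;
        return new StubResponse(contract.status, contract.responseHeaders, contract.responseBody);
    }

    int getTimes() {
        return times;
    }

    // HTTP header names are case-insensitive, so look them up ignoring case.
    private static String headerValue(Map<String, String> headers, String name) {
        for (Map.Entry<String, String> h : headers.entrySet()) {
            if (h.getKey().equalsIgnoreCase(name)) {
                return h.getValue();
            }
        }
        return null;
    }
}
```

Because the path, headers and status all come from the contract, the test itself only names the contract file. That is the effect the fromContract call above aims for.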

Note that here we limit ourselves to using contract files and do not go as far as consumer-driven testing. Readers who are interested may want to explore Pact further.

Conclusion

This article reviewed practical recommendations for improving the visibility and efficiency of integration tests when developing with the Spring Framework. My goal was to emphasize clearly defined specifications of external interactions and minimal boilerplate code. To that end, I suggested using DSL wrappers and JsonAssert, storing specifications in JSON files, and working with contracts through Pact. These approaches aim to simplify writing and maintaining tests and to improve their readability. Most importantly, they aim to raise the quality of testing itself by accurately reflecting the interactions between system components.

- Link to the project repository demonstrating the tests: sandbox/bot

Thank you for reading, and good luck writing effective and visible tests!