In previous articles, we’ve covered the basics of stream processing and how to choose a stream processing system.

In this article, we’ll describe what a streaming database is, as it’s the core component of a stream processing system. We’ll also present some commercially available solutions so that you can make an informed choice if you need to pick one.

Table of Contents

- Fundamentals of streaming databases

- Challenges in implementing streaming databases

- The architecture of streaming databases

- Examples of streaming databases

Fundamentals of Streaming Databases

Because stream processing aims to manage data as a stream, engineers can’t rely on traditional databases to store it, which is why we’re talking about streaming databases in this article.

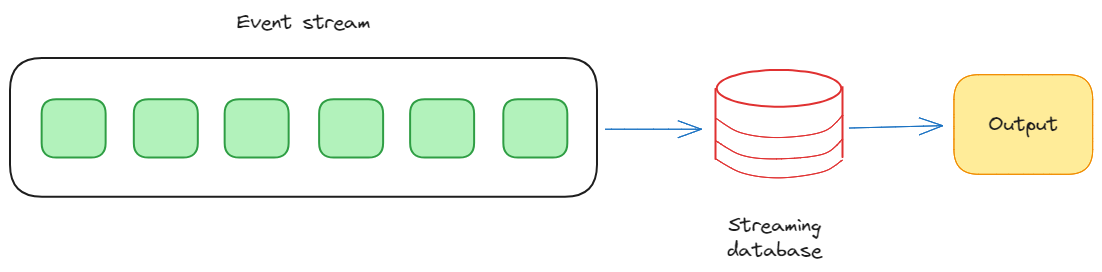

We can define a streaming database as a real-time data repository for storing, processing, and enriching streams of data. The fundamental characteristic of streaming databases is their ability to manage data in motion, where events are captured and processed as they occur.

Streaming databases (Image by Federico Trotta)

So, unlike traditional databases that store static datasets and require periodic updates to process them, streaming databases embrace an event-driven model, reacting to data as it’s generated. This allows organizations to extract actionable insights from live data, enabling timely decision-making and responsiveness to changing trends.

One of the key distinctions between streaming and traditional databases lies in their treatment of time. In streaming databases, time is a critical dimension because data aren’t just static records but are associated with temporal attributes.

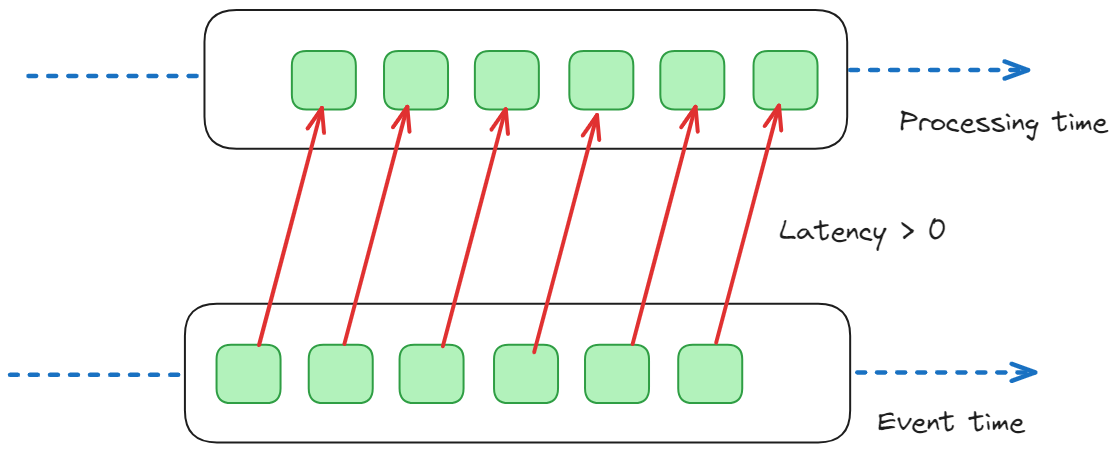

Latency in streaming databases (Image by Federico Trotta)

In particular, we can define the following:

- Event time: This refers to the time when an event or data point actually occurred in the real world. For example, if you are processing a stream of sensor data from devices measuring temperature, the event time would be the actual time when each temperature measurement was recorded by the sensors.

- Processing time: This refers to the time at which the event is processed within the stream processing system. For example, if there’s a delay or latency in processing the temperature measurements after they’re received, the processing time will be later than the event time.
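To make the two timestamps concrete, here is a minimal Python sketch. The `TemperatureReading` class and its field names are hypothetical and not tied to any specific streaming database; the point is only that each event carries both times, and that their difference is the latency.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass(frozen=True)
class TemperatureReading:
    """Hypothetical event type used only for illustration."""
    sensor_id: str
    celsius: float
    event_time: datetime       # when the sensor recorded the measurement
    processing_time: datetime  # when the stream processor handled it

    @property
    def latency(self) -> timedelta:
        """Delay between the real-world event and its processing."""
        return self.processing_time - self.event_time


recorded_at = datetime(2024, 1, 1, 12, 0, 0, tzinfo=timezone.utc)
reading = TemperatureReading(
    sensor_id="sensor-1",
    celsius=21.5,
    event_time=recorded_at,
    # Assume the measurement reached the processor 350 ms after it was taken.
    processing_time=recorded_at + timedelta(milliseconds=350),
)
print(reading.latency.total_seconds())  # 0.35
```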

This temporal awareness makes it possible to create time-based aggregations, which give businesses an accurate understanding of trends and patterns over time intervals, and to handle out-of-order events.
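As an illustration of a time-based aggregation, the sketch below groups readings into fixed, non-overlapping (tumbling) windows keyed by event time and averages each window. The function name, the tuple layout, and the 60-second window are assumptions made for this example.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple

Reading = Tuple[int, float]  # (event_time in seconds, temperature in Celsius)


def tumbling_window_avg(readings: Iterable[Reading], window_size: int) -> Dict[int, float]:
    """Average temperature per window, keyed by the window's start time."""
    buckets: Dict[int, List[float]] = defaultdict(list)
    for event_time, value in readings:
        # The window is chosen from the event time, not from the arrival order.
        window_start = (event_time // window_size) * window_size
        buckets[window_start].append(value)
    return {start: sum(vals) / len(vals) for start, vals in sorted(buckets.items())}


# The reading with event time 59 arrives last but still lands in the first window.
readings = [(0, 20.0), (30, 22.0), (65, 30.0), (59, 24.0)]
averages = tumbling_window_avg(readings, window_size=60)
print(averages)  # {0: 22.0, 60: 30.0}
```

This batch-style function only shows the grouping logic; a real streaming system would emit window results continuously rather than after reading the whole input.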

In fact, events may not always arrive at the processing system in the order in which they occurred in the real world, for several reasons such as network delays, varying processing speeds, or other factors.

So, by incorporating event time into the analysis, streaming databases can reorder events based on their actual occurrence in the real world. This means that the timestamps associated with each event can be used to align events in the correct temporal sequence, even if they arrive out of order. This ensures that analytical computations and aggregations reflect the temporal reality of the events, providing accurate insights into trends and patterns.
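One common way to do this reordering is to buffer events briefly and release them once a watermark (the highest event time seen so far, minus an allowed delay) has passed them. The following is a simplified sketch under that assumption, not the mechanism of any particular product; `reorder_by_event_time` and `max_delay` are names chosen for illustration.

```python
import heapq
from typing import Iterable, Iterator, List, Optional, Tuple

Event = Tuple[int, str]  # (event_time in seconds, payload)


def reorder_by_event_time(events: Iterable[Event], max_delay: int) -> Iterator[Event]:
    """Emit events in event-time order, tolerating up to `max_delay` seconds of lateness."""
    buffer: List[Event] = []  # min-heap ordered by event time
    max_seen: Optional[int] = None
    for event in events:
        heapq.heappush(buffer, event)
        max_seen = event[0] if max_seen is None else max(max_seen, event[0])
        watermark = max_seen - max_delay
        # Everything at or before the watermark is assumed complete and can be released.
        while buffer and buffer[0][0] <= watermark:
            yield heapq.heappop(buffer)
    # End of input: flush whatever is still buffered.
    while buffer:
        yield heapq.heappop(buffer)


arrivals = [(1, "a"), (3, "c"), (2, "b"), (5, "e"), (4, "d")]
ordered = list(reorder_by_event_time(arrivals, max_delay=2))
print(ordered)  # [(1, 'a'), (2, 'b'), (3, 'c'), (4, 'd'), (5, 'e')]
```

The trade-off sits in `max_delay`: a larger value tolerates later events but increases latency. Events that arrive later than the allowed delay would still be emitted out of order here; real systems typically drop them or route them to separate late-data handling.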

Challenges in Implementing Streaming Databases

While streaming databases offer a revolutionary approach to real-time data processing, their implementation can be challenging.

Among others, we can list the following challenges:

Sheer Volume and Velocity of Streaming Data

Real-time data streams, especially high-frequency ones common in applications like IoT and financial markets, generate a high volume of new data at a high velocity. So, streaming databases need to handle a continuous data stream efficiently, without sacrificing performance.

Ensuring Data Consistency in Real Time

In traditional batch processing, consistency is achieved through periodic updates. In streaming databases, ensuring consistency across distributed systems in real time introduces complexities. Techniques such as event time processing, watermarking, and idempotent operations are employed to address these challenges but require careful implementation.
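To show what an idempotent operation looks like in practice, here is a small sketch of a counter that can safely receive the same event more than once, for example when a message is redelivered after a failure. It assumes every event carries a unique ID; the class and method names are hypothetical.

```python
from typing import Dict, Set


class IdempotentCounter:
    """Per-key running total that ignores events it has already applied."""

    def __init__(self) -> None:
        self.totals: Dict[str, int] = {}
        self._seen_ids: Set[str] = set()

    def apply(self, event_id: str, key: str, amount: int) -> bool:
        """Apply the event once; return False if it was a duplicate."""
        if event_id in self._seen_ids:
            return False  # redelivered event: applying it again would double-count
        self._seen_ids.add(event_id)
        self.totals[key] = self.totals.get(key, 0) + amount
        return True


counter = IdempotentCounter()
counter.apply("evt-1", "sensor-1", 5)
counter.apply("evt-1", "sensor-1", 5)  # duplicate delivery, ignored
counter.apply("evt-2", "sensor-1", 3)
print(counter.totals)  # {'sensor-1': 8}
```

In this sketch the set of seen IDs grows without bound. A production system would need to expire old IDs, for instance once a watermark guarantees that no older duplicates can still arrive.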

Security and Privacy Concerns

Streaming data often contains sensitive information, and processing it in real time demands robust security measures. Encryption, authentication, and authorization mechanisms must be integrated into the streaming database architecture to safeguard data from unauthorized access and potential breaches. Moreover, compliance with data protection regulations adds an additional layer of complexity.

Tooling and Integrations

The diverse nature of streaming data sources and the variety of tools available demand thoughtful integration strategies. Compatibility with existing systems, ease of integration, and the ability to support different data formats and protocols become critical considerations.

Need for Skilled Personnel

As streaming databases are inherently tied to real-time analytics, the need for skilled personnel to develop, manage, and optimize these systems must be taken into account. The scarcity of expertise in the field can delay the widespread adoption of streaming databases, and organizations must invest in training and development to bridge this gap.

Architecture of Streaming Databases

The architecture of streaming databases is designed to handle the intricacies of processing real-time data streams efficiently.

At its core, this architecture embodies the principles of distributed computing, enabling scalability and responsiveness to the dynamic nature of streaming data.

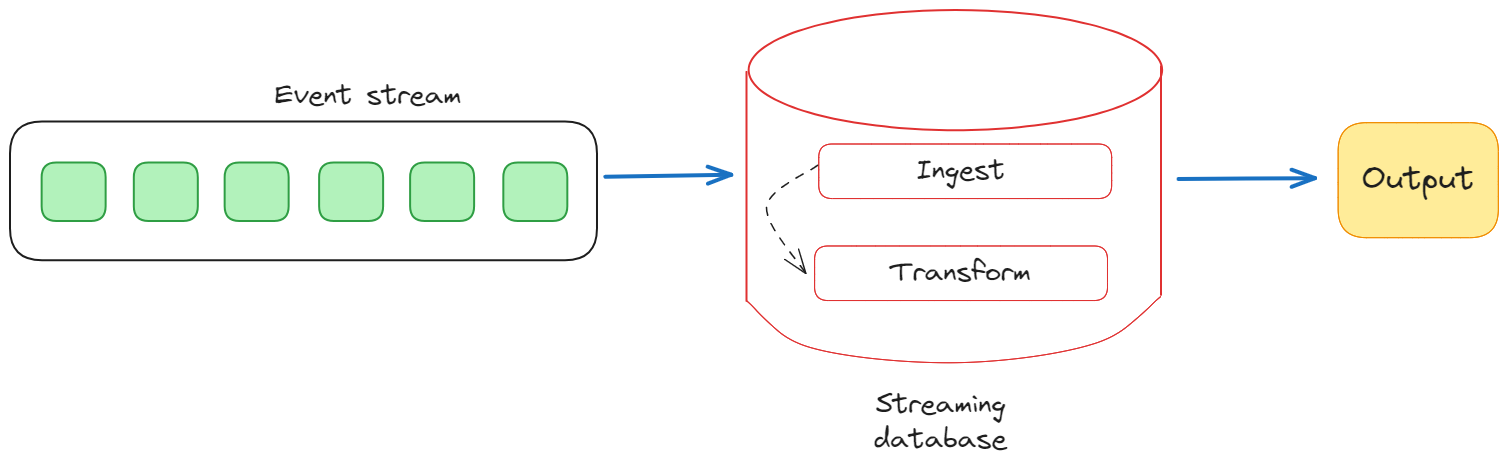

A fundamental aspect of streaming databases’ architecture is the ability to accommodate continuous, high-velocity data streams. This is achieved through a combination of data ingestion, processing, and storage components.

Data ingestion in streaming databases (Image by Federico Trotta)

The data ingestion layer is responsible for collecting and accepting data from various sources in real time. This may involve connectors to external systems, message queues, or direct API integrations.

Once ingested, data is processed in the streaming layer, where it’s analyzed, transformed, and enriched in near real time. This layer often employs stream processing engines or frameworks that enable the execution of complex computations on the streaming data, allowing for the derivation of meaningful insights.
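The ingestion, processing, and storage components can be pictured as a chain of stages that each event flows through one at a time. The sketch below models that chain with Python generators. It runs in a single process and uses an in-memory list as "storage", so it is only a mental model; all function names and the temperature fields are assumptions.

```python
from typing import Any, Dict, Iterable, Iterator, List

Event = Dict[str, Any]


def ingest(source: Iterable[Event]) -> Iterator[Event]:
    """Ingestion layer: accept events from a source, skipping malformed ones."""
    for event in source:
        if "sensor_id" in event and "celsius" in event:
            yield event


def process(events: Iterable[Event]) -> Iterator[Event]:
    """Processing layer: transform and enrich each event as it flows through."""
    for event in events:
        celsius = float(event["celsius"])
        yield {**event, "fahrenheit": celsius * 9 / 5 + 32, "is_hot": celsius > 30.0}


def store(events: Iterable[Event], table: List[Event]) -> None:
    """Storage layer: persist processed events (here, an in-memory list)."""
    for event in events:
        table.append(event)


source = [
    {"sensor_id": "s1", "celsius": 20.0},
    {"malformed": True},  # dropped at ingestion
    {"sensor_id": "s2", "celsius": 35.0},
]
table: List[Event] = []
store(process(ingest(source)), table)
print(table)
```

Because generators are lazy, each event passes through all three stages before the next one is read, which mirrors the event-at-a-time flow described above.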

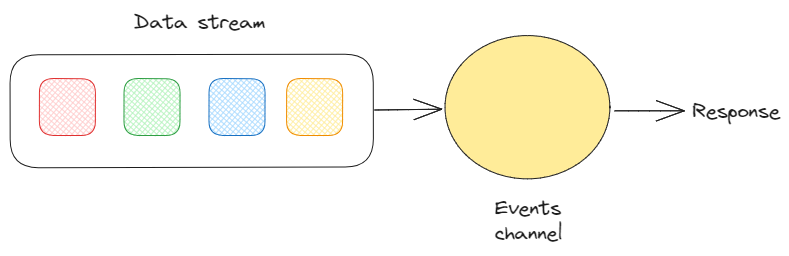

Events in streaming databases (Image by Federico Trotta)

Since they deal with real-time data, a hallmark of streaming database architecture is the event-driven paradigm. In fact, each data point is treated as an event, and the system reacts to these events in real time. This temporal awareness is fundamental for time-based aggregations and for handling out-of-order events, facilitating a granular understanding of the temporal dynamics of the data.

The schema design in streaming databases is also dynamic and flexible, allowing data structures to evolve over time. Unlike traditional databases with rigid schemas, streaming databases accommodate the fluid nature of streaming data, where the schema may change as new fields or attributes are introduced. This flexibility makes it possible to handle diverse data formats and adapt to the evolving requirements of streaming applications.
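As a rough illustration of schema evolution, the sketch below keeps a table whose column list grows whenever an incoming event introduces a new field, while older rows report the missing field as `None`. `FlexibleTable` is a hypothetical toy structure, not the storage model of any real streaming database.

```python
from typing import Any, Dict, List


class FlexibleTable:
    """Stores events whose set of fields may grow over time."""

    def __init__(self) -> None:
        self.columns: List[str] = []  # known fields, in order of first appearance
        self.rows: List[Dict[str, Any]] = []

    def insert(self, event: Dict[str, Any]) -> None:
        for field in event:
            if field not in self.columns:
                self.columns.append(field)  # the schema evolves when a new field shows up
        self.rows.append(dict(event))

    def select_all(self) -> List[Dict[str, Any]]:
        # Rows written before a field existed report it as None.
        return [{col: row.get(col) for col in self.columns} for row in self.rows]


readings = FlexibleTable()
readings.insert({"sensor_id": "s1", "celsius": 20.0})
readings.insert({"sensor_id": "s1", "celsius": 21.0, "humidity": 0.4})  # new field appears
print(readings.columns)       # ['sensor_id', 'celsius', 'humidity']
print(readings.select_all())
```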

Examples of Streaming Databases

Now, let’s introduce a couple of examples of commercially available streaming databases, to highlight their features and fields of application.

RisingWave

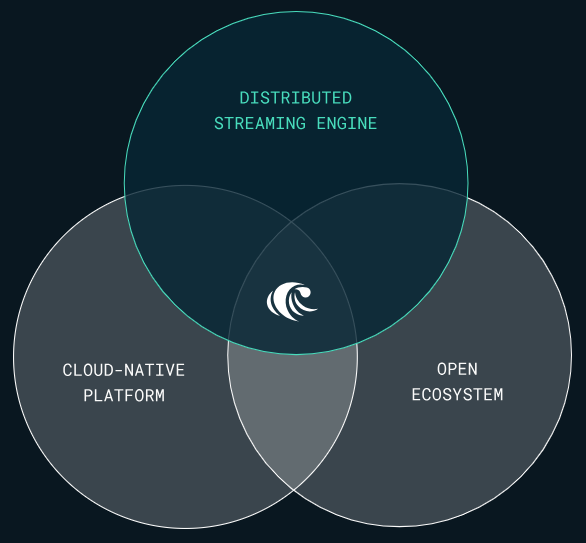

Image from the RisingWave website

RisingWave is a distributed SQL streaming database that enables simple, efficient, and reliable streaming data processing. It consumes streaming data, performs incremental computations when new data comes in, and updates results dynamically.

Since it is a distributed database, RisingWave embraces parallelization to meet the demands of scalability. By distributing the processing tasks across multiple nodes or clusters, it can effectively handle a high volume of incoming data streams concurrently. This distributed nature also provides fault tolerance and resilience, as the system can continue to operate even in the presence of node failures.

RisingWave is also open source and designed for the cloud. In particular, it was built from scratch as a distributed streaming database, not as a bolt-on implementation based on another system.

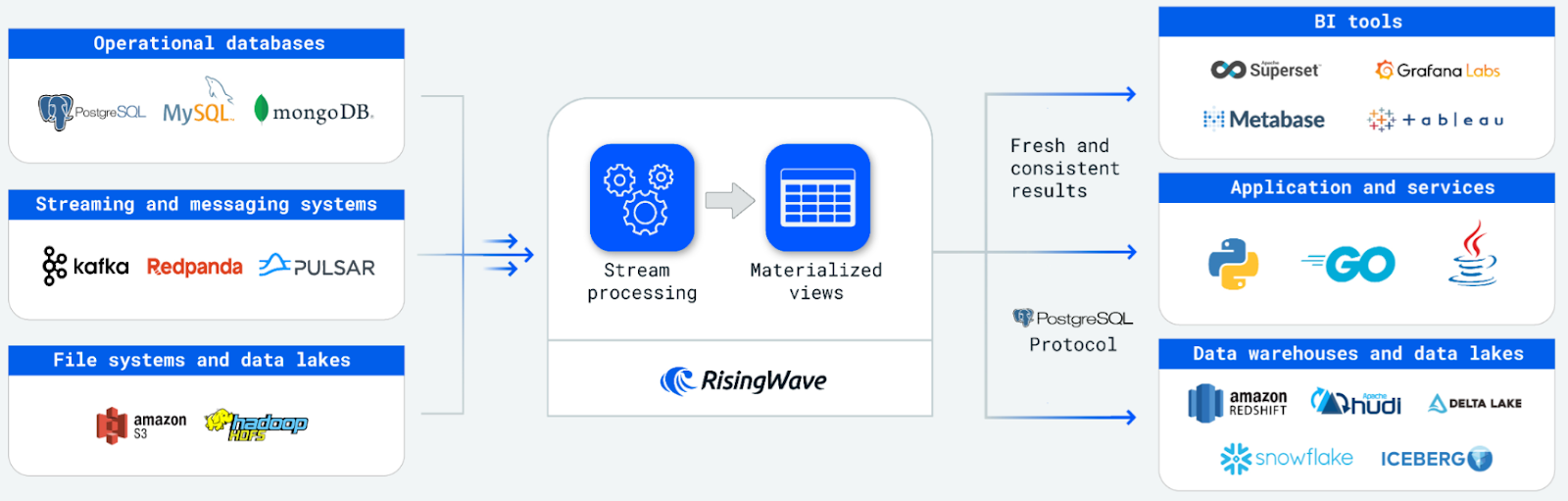

Image from the RisingWave website

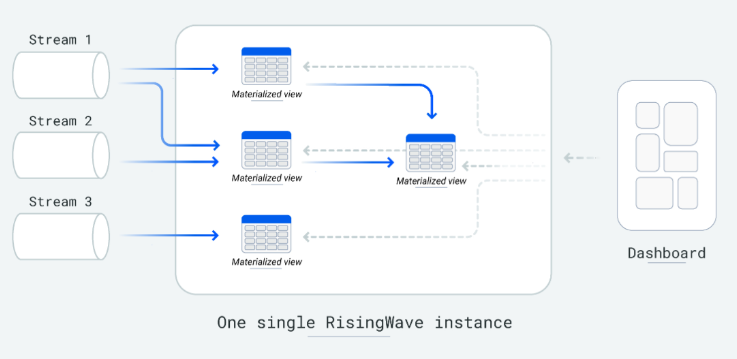

It also reduces the complexity of building stream-processing applications by allowing developers to express intricate stream-processing logic through cascaded materialized views. Furthermore, it allows users to persist data directly within the system, eliminating the need to send results to external databases for storage and query serving.

Image from the RisingWave website
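To give an intuition for cascaded materialized views, here is a conceptual Python sketch of two stacked views that are updated incrementally as each event arrives. In RisingWave itself such views are declared in SQL with `CREATE MATERIALIZED VIEW`; the classes below are hypothetical and say nothing about how RisingWave is implemented internally.

```python
from collections import defaultdict
from typing import Dict, Tuple


class AvgTempPerSensor:
    """First view: running average temperature per sensor, updated per event."""

    def __init__(self) -> None:
        self._sums: Dict[str, float] = defaultdict(float)
        self._counts: Dict[str, int] = defaultdict(int)
        self.rows: Dict[str, float] = {}

    def on_event(self, sensor_id: str, celsius: float) -> Tuple[str, float]:
        # Only the affected sensor's row is recomputed, not the whole view.
        self._sums[sensor_id] += celsius
        self._counts[sensor_id] += 1
        self.rows[sensor_id] = self._sums[sensor_id] / self._counts[sensor_id]
        return sensor_id, self.rows[sensor_id]


class HotSensors:
    """Second view, stacked on the first: sensors whose average exceeds a threshold."""

    def __init__(self, threshold: float) -> None:
        self.threshold = threshold
        self.rows: Dict[str, float] = {}

    def on_upstream_change(self, sensor_id: str, avg: float) -> None:
        if avg > self.threshold:
            self.rows[sensor_id] = avg
        else:
            self.rows.pop(sensor_id, None)  # the sensor no longer qualifies


avg_view = AvgTempPerSensor()
hot_view = HotSensors(threshold=30.0)
for sensor_id, celsius in [("s1", 28.0), ("s2", 35.0), ("s1", 36.0), ("s2", 21.0)]:
    # Each change to the first view is propagated to the view stacked on top of it.
    hot_view.on_upstream_change(*avg_view.on_event(sensor_id, celsius))

print(avg_view.rows)  # {'s1': 32.0, 's2': 28.0}
print(hot_view.rows)  # {'s1': 32.0}
```

Splitting the logic into small stacked views keeps each step easy to reason about, which is the main appeal of the cascaded approach.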

The advantages of RisingWave can be described as follows:

- Simple to learn: It uses PostgreSQL-style SQL, enabling users to dive into stream processing as they would with a PostgreSQL database.

- Simple to develop: As it operates as a relational database, developers can decompose stream processing logic into smaller, manageable, stacked materialized views, rather than dealing with extensive computational programs.

- Simple to integrate: With integrations to a diverse range of cloud systems and the PostgreSQL ecosystem, RisingWave has a rich and expansive ecosystem, making it straightforward to incorporate into existing infrastructures.

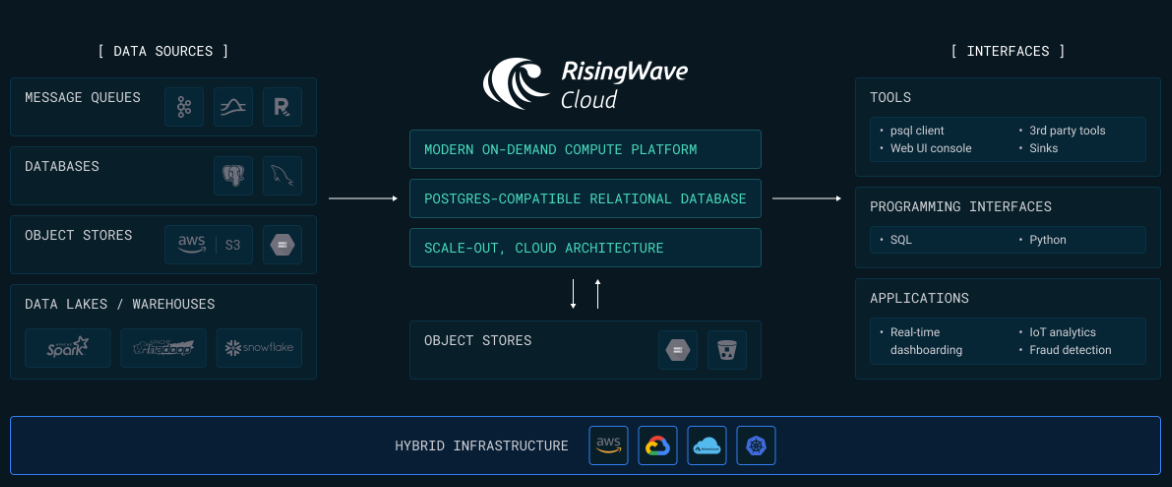

Finally, RisingWave offers RisingWave Cloud: a hosted service that lets you create a new cloud-hosted RisingWave cluster and get started with stream processing in minutes.

Image from the RisingWave website

Materialize

Image taken from the Materialize website

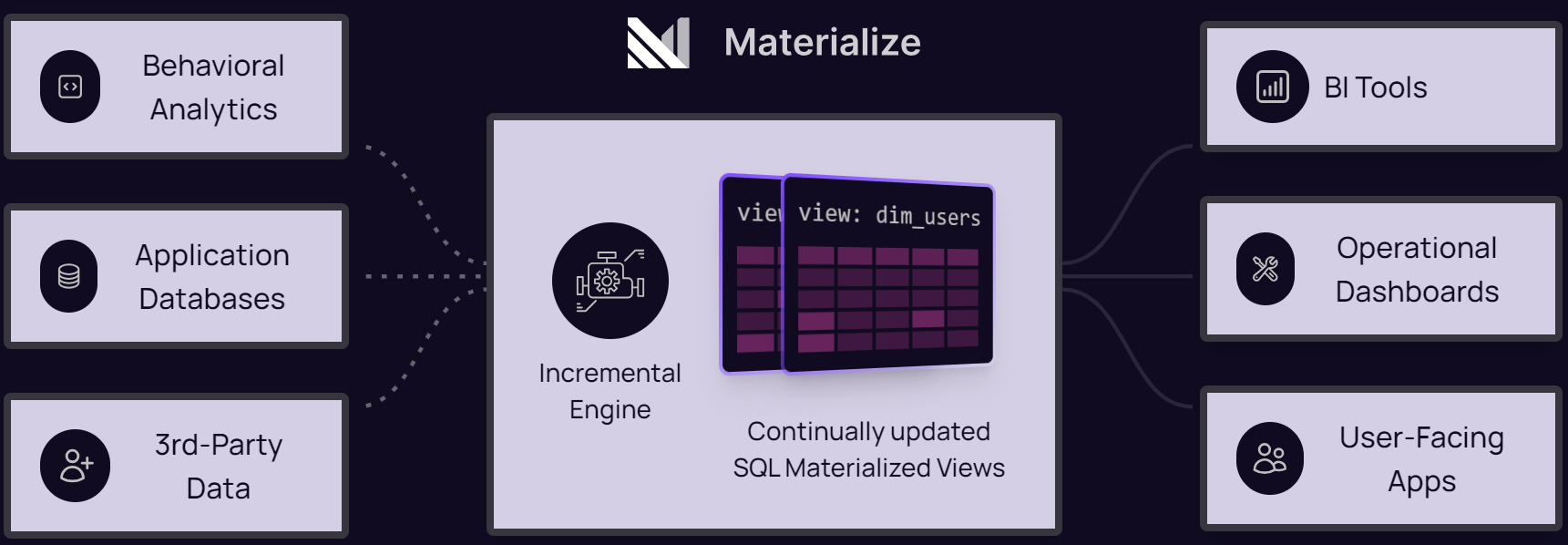

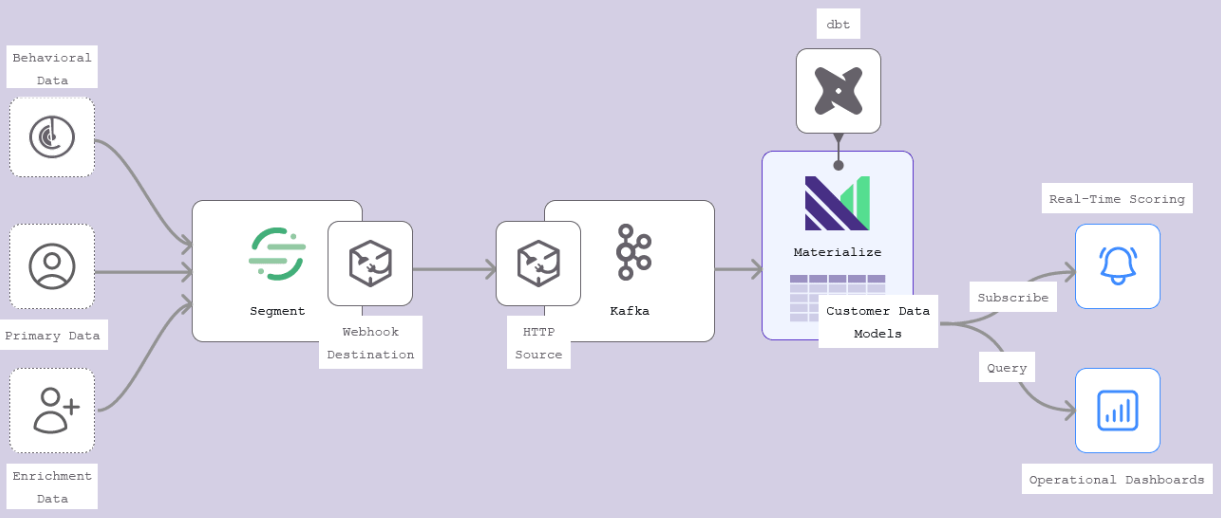

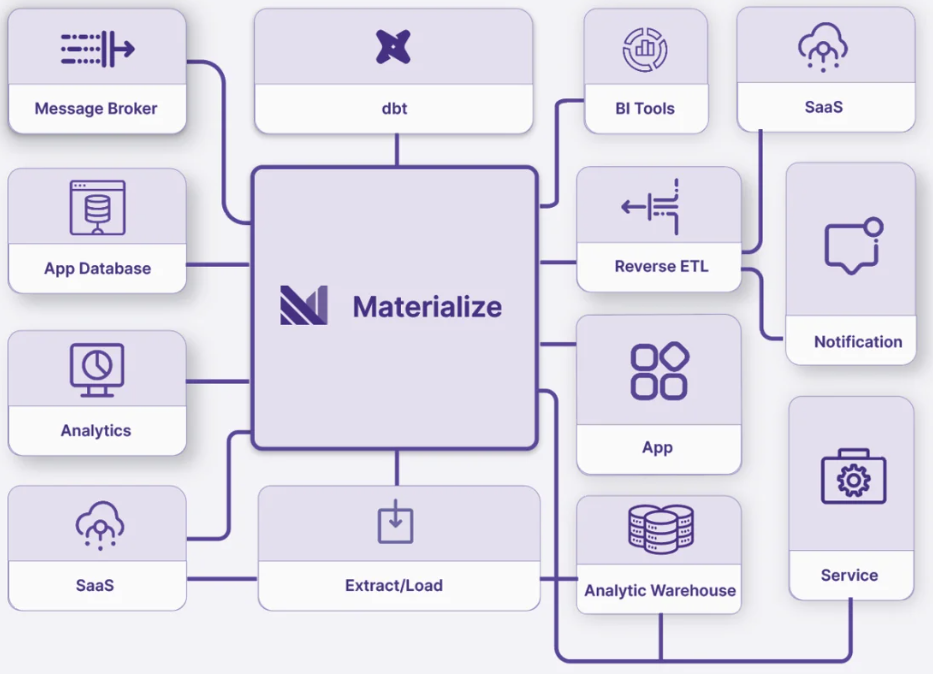

Materialize is a high-performance, SQL-based streaming data warehouse designed to provide real-time, incremental data processing with a strong emphasis on simplicity, efficiency, and reliability. Its architecture enables users to build complex, incremental data transformations and queries on top of streaming data with minimal latency.

Since it is built for real-time data processing, Materialize leverages efficient incremental computation to ensure low-latency updates and queries. By processing only the changes in data rather than reprocessing entire data sets, it can handle high-throughput data streams with optimal performance.
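The idea of processing only the changes can be sketched as follows: instead of recounting every row, a maintained result is adjusted by a small stream of inserts (+1) and deletes (-1). This is a deliberately simplified illustration with hypothetical names, not a description of Materialize’s actual engine.

```python
from collections import Counter
from typing import Iterable, Tuple

Change = Tuple[str, int]  # (key, diff): +1 for an inserted row, -1 for a deleted row


def apply_changes(counts: Counter, changes: Iterable[Change]) -> None:
    """Update a maintained COUNT(*) GROUP BY key result using only the changes."""
    for key, diff in changes:
        counts[key] += diff
        if counts[key] == 0:
            del counts[key]  # a group with no rows left disappears from the result


orders_per_region: Counter = Counter()
apply_changes(orders_per_region, [("eu", 1), ("us", 1), ("eu", 1)])
print(dict(orders_per_region))  # {'eu': 2, 'us': 1}

# A single deletion touches one entry; nothing else is recomputed.
apply_changes(orders_per_region, [("us", -1)])
print(dict(orders_per_region))  # {'eu': 2}
```

The cost of each update depends on the size of the change, not on the size of the data already processed, which is what keeps latency low as the dataset grows.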

Materialize is designed to scale horizontally, distributing processing tasks across multiple nodes or clusters to manage a high volume of data streams concurrently. This distributed nature also enhances fault tolerance and resilience, allowing the system to keep operating even in the face of node failures.

Materialize’s source code is publicly available (under a Business Source License rather than a classic open-source license), which offers transparency and flexibility. It was built from the ground up to support real-time, incremental data processing, not as an add-on to an existing system.

It significantly simplifies the development of stream-processing applications by allowing developers to express complex stream-processing logic through standard SQL queries. Developers can also persist data directly within the system, eliminating the need to move results to external databases for storage and query serving.

Image taken from the Materialize website

The advantages of Materialize can be described as follows:

- Simple to learn: It uses PostgreSQL-compatible SQL, enabling developers to leverage their existing SQL skills for real-time stream processing without a steep learning curve.

- Simple to develop: Materialize allows users to write complex streaming queries using familiar SQL syntax. The system’s ability to automatically maintain materialized views and handle the underlying complexities of stream processing means that developers can focus on business logic rather than the intricacies of data stream management, which simplifies the development phase.

- Simple to integrate: With support for a variety of data sources and sinks, including Kafka and PostgreSQL, Materialize integrates into different ecosystems, making it easy to incorporate into existing infrastructure.

Image taken from the Materialize website

Finally, Materialize provides strong consistency and correctness guarantees, ensuring accurate and reliable query results even with concurrent data updates. This makes it a good fit for applications requiring timely insights and real-time analytics.

Materialize’s ability to deliver real-time, incremental processing of streaming data, combined with its ease of use and robust performance, positions it as a powerful tool for modern data-driven applications.

Conclusions

In this article, we’ve analyzed the need for streaming databases when processing streams of data, comparing them with traditional databases.

We’ve also seen that implementing a streaming database in existing software environments presents challenges that must be addressed, but available commercial solutions like RisingWave and Materialize can help overcome them.

Note: This article has been co-authored by Federico Trotta and Karin Wolok.