This tutorial introduces the practical implementation of DolphinDB’s replay function. Based on DolphinDB’s streaming replay, distributed database architecture, and APIs, you can create a powerful tool for historical insight and model back-testing, which lets you review situations of interest and improve future performance. By following the instructions in this tutorial, you will learn:

- The workflow of building a market replay solution

- The optimal data storage plan for data sources

- The methods to process and analyze replayed data via APIs

- The performance of the replay functionality

Note: For detailed instructions on how to replay market data, refer to Tutorial: Market Data Replay.

1. Construct a Market Data Replay Solution

This chapter guides you through the process of building a complete market data replay solution. Our implementation focuses on three types of market data: quote tick data, trade tick data, and snapshots of Level-2 data. The service offers the following capabilities:

- Multi-client support: Allows replay requests to be submitted from various clients (including the C++ and Python APIs)

- Concurrent user requests: Supports simultaneous replay requests from multiple users

- Multi-source synchronization: Enables orderly replay of multiple data sources at the same time

- Advanced ordering options: Provides time-based ordering, plus further ordering of records that share a timestamp (e.g., by the record numbers of tick data)

- Completion signal: Signals the end of a replay

- Flexible data consumption: Offers subscription-based access for processing replayed results

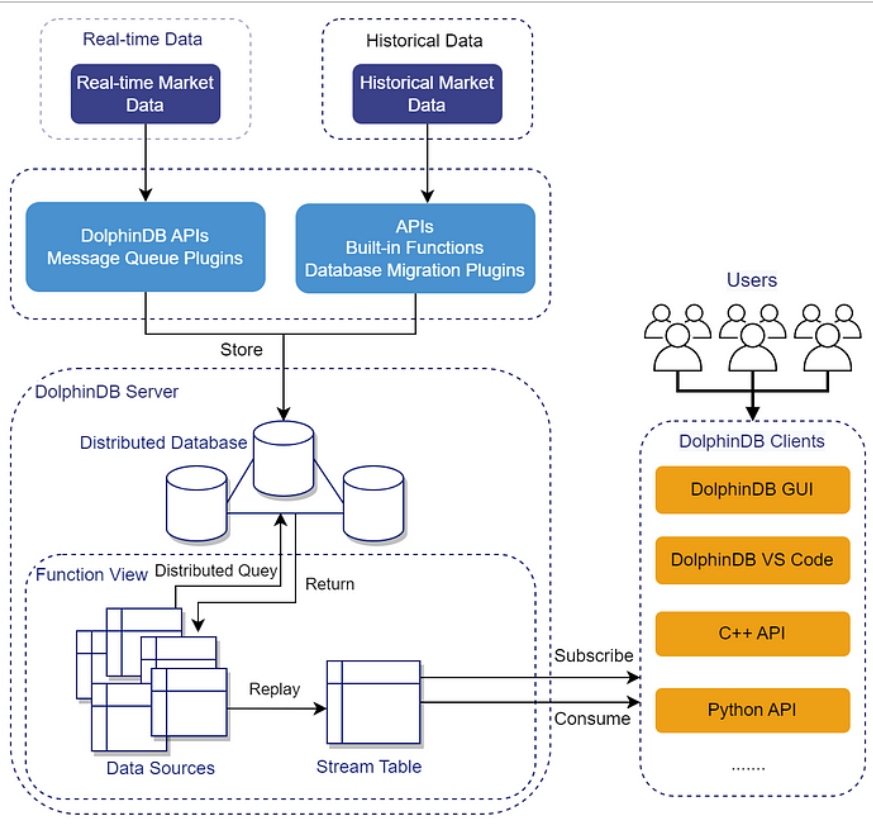

1.1 Architecture

The solution we build has the following architecture:

- Market data ingestion: Both real-time and historical tick data can be imported into the DolphinDB distributed database via the DolphinDB API or a plugin.

- Function encapsulation: Functions used during the replay process can be encapsulated as function views. Users can still specify key parameters such as the stock list, replay date, replay rate, and data source.

- User request: Users can replay market data by calling the function views through DolphinDB clients (such as DolphinDB GUI, VS Code Extension, and APIs). Users can also subscribe to and consume real-time replay results on the client side. Multi-user concurrent replay is supported as well.

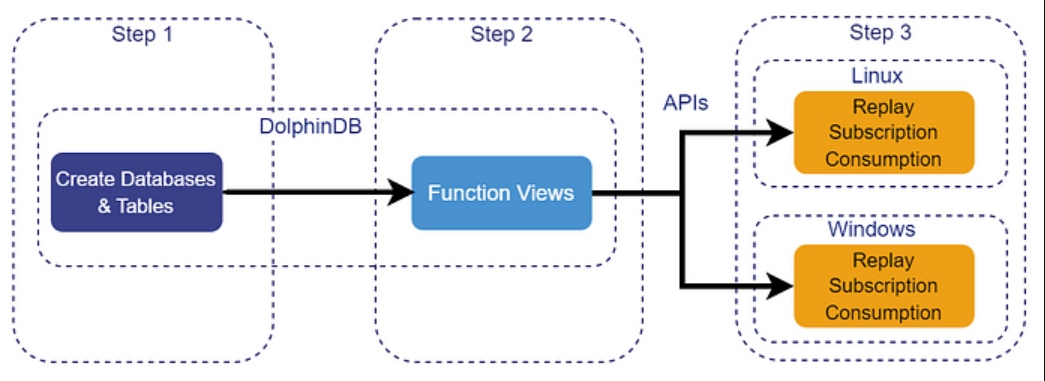

1.2 Steps

This section introduces the steps for building a market data replay solution, as shown in the following figure:

- Step 1 (Chapter 2): Design and implement a suitable distributed database structure in DolphinDB. Create the required tables and import historical market data to serve as the replay data source.

- Step 2 (Chapter 3): Develop DolphinDB function views that encapsulate the entire replay process. This abstraction allows users to initiate replay requests by simply specifying key parameters, without needing to understand the details of DolphinDB’s replay functionality.

- Step 3 (Chapters 4 and 5): Use the DolphinDB API to call the previously created function views from external applications, so that replay operations can be executed outside the DolphinDB environment. Users can also set up subscriptions to consume real-time replay results on the client side.

2. Storage Plan for Market Knowledge

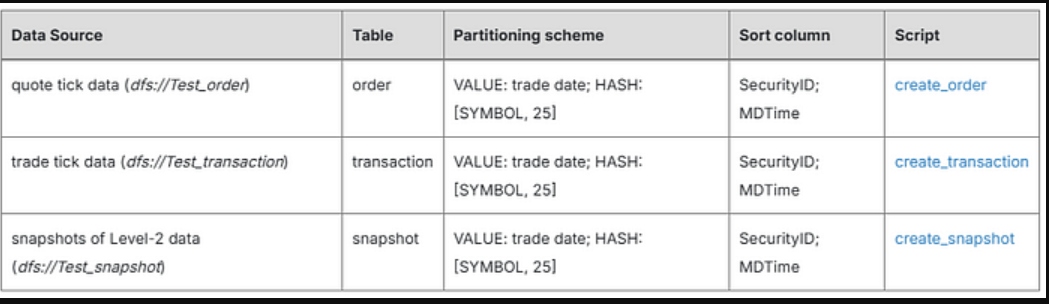

The instance on this tutorial replays three varieties of market knowledge: quote tick knowledge, commerce tick knowledge, and snapshots of Degree-2 knowledge.

The next chart shows the information (which is saved in TSDB databases) we use within the instance:

The replay mechanism operates on a elementary precept: it retrieves the required tick knowledge from the database, kinds it chronologically, after which writes it to the stream desk. This course of is closely influenced by the effectivity of database studying and sorting operations, which straight impacts the general replay velocity.

To optimize efficiency, a well-designed partitioning scheme is essential. The partitioning scheme of tables outlined above is tailor-made to widespread replay requests, that are sometimes submitted primarily based on particular dates and shares.

As well as, right here, we make the most of the DolphinDB cluster for storing historic knowledge. The cluster consists of three knowledge nodes and maintains two chunk replicas. The distributed nature of the cluster permits for parallel knowledge entry throughout a number of nodes, considerably boosting learn speeds. By sustaining a number of copies of the information, the system achieves larger resilience towards potential node failures.

3. Consumer-Outlined Capabilities for Replay

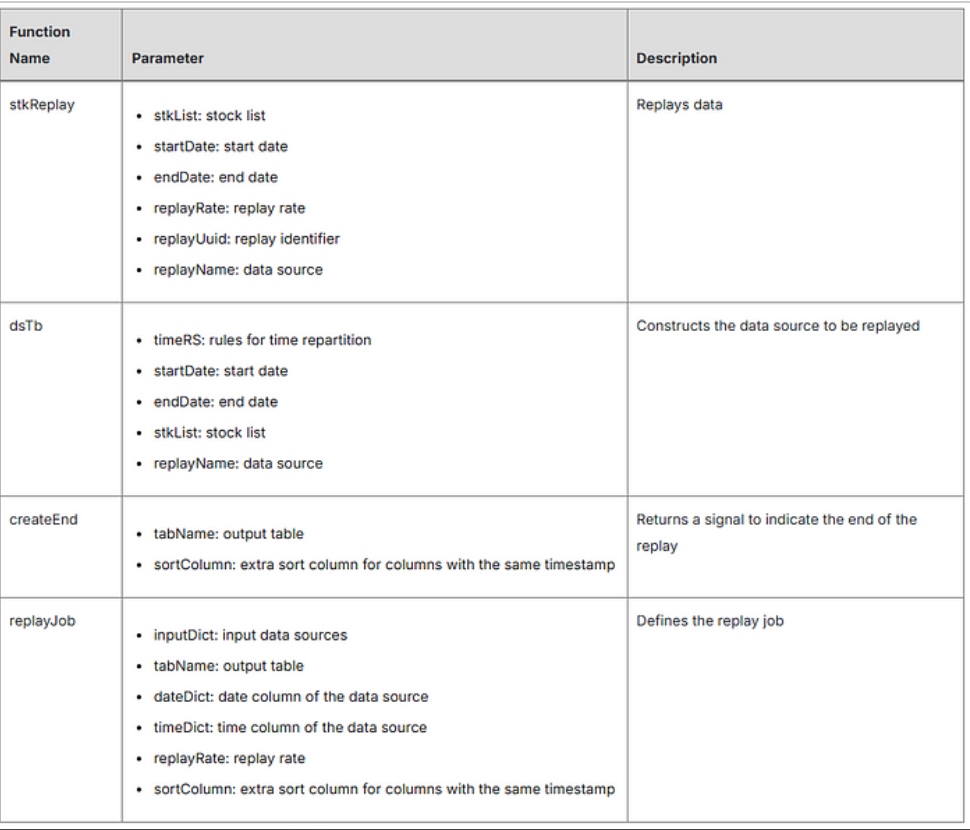

This chapter delves into the core functionalities of the replay course of and their implementation particulars. These capabilities are then encapsulated into operate views, which will be referred to as by APIs.

The chart under outlines the capabilities within the instance.

3.1 stkReplay: Replay

The stkReplay operate is used to carry out the replay operation.

The operate definition is as follows:

```
def stkReplay(stkList, mutable startDate, mutable endDate, replayRate, replayUuid, replayName)
{
    maxCnt = 50
    returnBody = dict(STRING, STRING)
    startDate = datetimeParse(startDate, "yyyyMMdd")
    endDate = datetimeParse(endDate, "yyyyMMdd") + 1
    sortColumn = "ApplSeqNum"
    if(stkList.size() > maxCnt)
    {
        returnBody["errorCode"] = "0"
        returnBody["errorMsg"] = "Exceeds the limits for a single replay. The maximum is: " + string(maxCnt)
        return returnBody
    }
    if(size(replayName) != 0)
    {
        for(name in replayName)
        {
            if(not name in ["snapshot", "order", "transaction"])
            {
                returnBody["errorCode"] = "0"
                returnBody["errorMsg"] = "Input the correct name of the data source. Cannot recognize: " + name
                return returnBody
            }
        }
    }
    else
    {
        returnBody["errorCode"] = "0"
        returnBody["errorMsg"] = "Missing data source. Input the correct name of the data source."
        return returnBody
    }
    try
    {
        if(size(replayName) == 1 && replayName[0] == "snapshot")
        {
            colName = ["timestamp", "biz_type", "biz_data"]
            colType = [TIMESTAMP, SYMBOL, BLOB]
            sortColumn = "NULL"
        }
        else
        {
            colName = ["timestamp", "biz_type", "biz_data", sortColumn]
            colType = [TIMESTAMP, SYMBOL, BLOB, LONG]
        }
        msgTmp = streamTable(10000000:0, colName, colType)
        tabName = "replay_" + replayUuid
        enableTableShareAndPersistence(table=msgTmp, tableName=tabName, asynWrite=true, compress=true, cacheSize=10000000, retentionMinutes=60, flushMode=0, preCache=1000000)
        timeRS = cutPoints(09:30:00.000..15:00:00.000, 23)
        inputDict = dict(replayName, each(dsTb{timeRS, startDate, endDate, stkList}, replayName))
        dateDict = dict(replayName, take(`MDDate, replayName.size()))
        timeDict = dict(replayName, take(`MDTime, replayName.size()))
        jobId = "replay_" + replayUuid
        jobDesc = "replay stock data"
        submitJob(jobId, jobDesc, replayJob{inputDict, tabName, dateDict, timeDict, replayRate, sortColumn})
        returnBody["errorCode"] = "1"
        returnBody["errorMsg"] = "Replay successfully"
        return returnBody
    }
    catch(ex)
    {
        returnBody["errorCode"] = "0"
        returnBody["errorMsg"] = "Exception occurred when replaying: " + ex
        return returnBody
    }
}
```

Note: The function definition is flexible and can be customized to suit specific requirements.

The parameters passed in are first validated and formatted:

- The stkList parameter is limited to a maximum of 50 stocks (defined by maxCnt) for a single replay operation.

- The startDate and endDate parameters are parsed from “yyyyMMdd” strings with the datetimeParse function. One day is added to endDate so that the half-open range [startDate, endDate) includes the last requested day.

- replayName is the list of data sources to be replayed. In this example, each element must be “snapshot”, “order”, or “transaction”.

If any parameter fails validation, the function reports an error (as defined in returnBody). Once all parameters pass validation, the function initializes the output table.

The output table “msgTmp” is defined as a heterogeneous stream table in which the BLOB column stores the serialized result of each replayed record. To optimize memory usage, it is shared and persisted with the enableTableShareAndPersistence function.

When the data sources include “order” or “transaction”, an extra sort column can be specified for records with the same timestamp. In this example, records in “transaction” and “order” with the same timestamp are sorted by the record number “ApplSeqNum” (see section 3.3 replayJob for details). In this case, the output table must contain the sort column. If the only data source is “snapshot”, the sort column is not required.

Finally, the replay job is submitted with the submitJob function. The returned returnBody tells you whether the job was submitted successfully or an exception occurred. Because submitJob runs the job in the background, a successful return means the job was submitted, not that the replay has finished.
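The validation rules above can be mirrored in a few lines of plain Python, which may help when adding client-side checks before calling the function view. This is a sketch only: validate_replay_request and choose_sort_column are hypothetical helpers, the messages are copied from stkReplay, and unlike stkReplay the sketch never submits a job, so its “Replay successfully” result only means the parameters passed validation.

```python
# Plain-Python model of stkReplay's parameter checks (illustration only).
# validate_replay_request and choose_sort_column are hypothetical helper names.

MAX_CNT = 50  # mirrors maxCnt in stkReplay
VALID_SOURCES = ("snapshot", "order", "transaction")


def validate_replay_request(stk_list, replay_name):
    """Return a dict shaped like returnBody: errorCode "0" on failure, "1" on success."""
    if len(stk_list) > MAX_CNT:
        return {"errorCode": "0",
                "errorMsg": "Exceeds the limits for a single replay. The maximum is: " + str(MAX_CNT)}
    if len(replay_name) == 0:
        return {"errorCode": "0",
                "errorMsg": "Missing data source. Input the correct name of the data source."}
    for name in replay_name:
        if name not in VALID_SOURCES:
            return {"errorCode": "0",
                    "errorMsg": "Input the correct name of the data source. Cannot recognize: " + name}
    return {"errorCode": "1", "errorMsg": "Replay successfully"}


def choose_sort_column(replay_name):
    """Only a snapshot-only replay omits the ApplSeqNum sort column."""
    return "NULL" if list(replay_name) == ["snapshot"] else "ApplSeqNum"


if __name__ == "__main__":
    print(validate_replay_request(["000616.SZ"], ["order", "transaction"]))
    print(choose_sort_column(["snapshot"]))
```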

3.2 dsTb: Construct Data Sources

The dsTb function prepares the data sources to be replayed.

The function definition is as follows:

```
def dsTb(timeRS, startDate, endDate, stkList, replayName)
{
    if(replayName == "snapshot"){
        tab = loadTable("dfs://Test_snapshot", "snapshot")
    }
    else if(replayName == "order") {
        tab = loadTable("dfs://Test_order", "order")
    }
    else if(replayName == "transaction") {
        tab = loadTable("dfs://Test_transaction", "transaction")
    }
    else {
        return NULL
    }
    ds = replayDS(sqlObj=
```

The dsTb function serves as a higher-level wrapper around the built-in replayDS function:

- It first evaluates the provided replayName to determine which dataset to use.

- Based on replayName, it loads the corresponding table object from the database.

- Using replayDS, it splits the data of the stocks in stkList within the range [startDate, endDate) into multiple data sources based on timeRS, and returns a list of data sources.

The timeRS parameter, which corresponds to the timeRepartitionSchema parameter of the replayDS function, is a vector of temporal values used to create finer-grained data sources. This keeps each query on the DFS table efficient and limits the memory used by each data source.

In this example, timeRS is defined as a variable in the stkReplay function, timeRS = cutPoints(09:30:00.000..15:00:00.000, 23), which divides the trading hours (09:30:00.000 to 15:00:00.000) into 23 equal parts.

To better understand how dsTb works, execute the following script. For the “order” data source, the records with Security ID “000616.SZ” during the trading hours of 2021.12.01 are divided into 3 parts.

```
timeRS = cutPoints(09:30:00.000..15:00:00.000, 3)
startDate = 2021.12.01
endDate = 2021.12.02
stkList = ['000616.SZ']
replayName = ["order"]
ds = dsTb(timeRS, startDate, endDate, stkList, replayName)
```

This returns a list of metacode objects, each containing an SQL statement for one data segment. Five data sources are returned: three covering the trading hours, plus one each for records before 09:30:00.000 and after 15:00:00.000. The first field of each entry is the start time of its segment:

```
DataSource= 00:00:00.000000000,date(MDDate) == 2021.12.01,MDDate >= 2021.12.01 and MDDate
DataSource= 09:30:00.000,date(MDDate) == 2021.12.01,MDDate >= 2021.12.01 and MDDate
DataSource= 11:20:00.001,date(MDDate) == 2021.12.01,MDDate >= 2021.12.01 and MDDate
DataSource= 13:10:00.001,date(MDDate) == 2021.12.01,MDDate >= 2021.12.01 and MDDate
DataSource= 15:00:00.001,date(MDDate) == 2021.12.01,MDDate >= 2021.12.01 and MDDate
```
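The five segments listed above can be reproduced approximately in plain Python. This sketch assumes equal-width cut points (which matches the 3-part boundaries shown) and infers the boundary rules from the listing itself: the first trading-hours segment includes 09:30:00.000, each later segment starts 1 ms after the previous boundary, and records outside trading hours fall into their own segments. equal_cut_points and segment_index are hypothetical names, not DolphinDB functions, and the exact internals of cutPoints and replayDS may differ.

```python
from bisect import bisect_left
from datetime import datetime


def equal_cut_points(start, end, parts):
    """Return parts + 1 evenly spaced boundaries from start to end, both inclusive."""
    step = (end - start) / parts  # timedelta / int -> timedelta
    return [start + step * i for i in range(parts + 1)]


def segment_index(t, bounds):
    """Map a record time to a data-source index, following the listing above:
    0 -> before bounds[0]; 1 -> [bounds[0], bounds[1]];
    k -> (bounds[k-1], bounds[k]] for k >= 2; len(bounds) -> after bounds[-1]."""
    if t < bounds[0]:
        return 0
    return max(1, bisect_left(bounds, t))


if __name__ == "__main__":
    day = datetime(2021, 12, 1)
    bounds = equal_cut_points(day.replace(hour=9, minute=30), day.replace(hour=15), 3)
    print([b.strftime("%H:%M:%S.%f")[:-3] for b in bounds])
    # ['09:30:00.000', '11:20:00.000', '13:10:00.000', '15:00:00.000']
```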

3.3 replayJob: Define the Replay Job

The replayJob function defines the replay job.

The function definition is as follows:

```
def replayJob(inputDict, tabName, dateDict, timeDict, replayRate, sortColumn)
{
    if(sortColumn == "NULL")
    {
        replay(inputTables=inputDict, outputTables=objByName(tabName), dateColumn=dateDict, timeColumn=timeDict, replayRate=int(replayRate), absoluteRate=false, parallelLevel=23)
    }
    else
    {
        replay(inputTables=inputDict, outputTables=objByName(tabName), dateColumn=dateDict, timeColumn=timeDict, replayRate=int(replayRate), absoluteRate=false, parallelLevel=23, sortColumns=sortColumn)
    }
    createEnd(tabName, sortColumn)
}
```

The replayJob function wraps the built-in replay function to perform an N-to-1 heterogeneous replay. Once all required data has been replayed, the createEnd function is called to construct an end signal and write it into the replay result, marking the conclusion of the replay process with a special record.

The inputDict, dateDict, and timeDict dictionaries are defined as variables in the stkReplay function:

- inputDict is a dictionary of the data sources to be replayed. The higher-order function each is used to build the data sources for every element of replayName.

- dateDict and timeDict are dictionaries specifying the date and time columns of each data source, which are used to sort the data.

The replayJob function includes a sortColumn parameter for additional ordering. If the data source consists only of “snapshot” (where the order of records sharing a timestamp is not a concern), sortColumn is set to the string “NULL”, and the built-in replay function is called without its sortColumns parameter. For the other data sources (“order” and “transaction”), sortColumn specifies an additional column for sorting records with the same timestamp.
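The ordering described above (several sources merged into one output table, by timestamp first and then by the sort column for ties) can be modeled as a k-way merge. This sketch uses Python’s heapq.merge over per-source lists that are each already sorted; it illustrates only the observable ordering and is not how the built-in replay function is implemented. merge_sources is a hypothetical name, and the payload strings stand in for the serialized BLOB values.

```python
import heapq


def merge_sources(sources, use_sort_column=True):
    """Merge per-source record lists into one stream.

    sources: dict mapping a source name (e.g. "order") to a list of
             (timestamp, seq, payload) tuples, each list already sorted by
             (timestamp, seq).
    Yields (timestamp, source_name, payload, seq), mirroring the output
    table columns timestamp, biz_type, biz_data, ApplSeqNum.
    """
    def tagged(name, records):
        for ts, seq, payload in records:
            yield ts, name, payload, seq

    # With a sort column, ties on timestamp are broken by seq; without one,
    # no particular order is promised for records sharing a timestamp.
    key = (lambda r: (r[0], r[3])) if use_sort_column else (lambda r: r[0])
    streams = [tagged(name, recs) for name, recs in sources.items()]
    yield from heapq.merge(*streams, key=key)


if __name__ == "__main__":
    order = [(100, 2, "o2"), (100, 5, "o5"), (200, 7, "o7")]
    transaction = [(100, 3, "t3"), (150, 6, "t6")]
    for row in merge_sources({"order": order, "transaction": transaction}):
        print(row)
```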

3.4 createEnd: Signal the End

The createEnd function defines the end signal. It is optional.

The function definition is as follows:

```
def createEnd(tabName, sortColumn)
{
    dbName = "dfs://End"
    tbName = "endline"
    if(not existsDatabase(dbName))
    {
        db = database(directory=dbName, partitionType=VALUE, partitionScheme=2023.04.03..2023.04.04)
        endTb = table(2200.01.01T23:59:59.000 as DateTime, `END as level, long(0) as ApplSeqNum)
        endLine = db.createPartitionedTable(table=endTb, tableName=tbName, partitionColumns=`DateTime)
        endLine.append!(endTb)
    }
    ds = replayDS(sqlObj=
```

The createEnd operate indicators the top of the replay course of, writing a document labeled “end” to the output table (specified by tabName). inputEnd, dateEnd, and timeEnd are dictionaries with the string “finish” as the important thing, which corresponds to the second column (biz_type) within the output desk.

To streamline the parsing and consumption of the output desk, a separate database is created particularly for the top sign. Inside this database, a partitioned desk is established. This desk should embody a time column, with different fields being elective. A simulated document is written to this desk. This document is just not strictly outlined and will be custom-made as wanted. For directions on tips on how to create a database and desk, check with DolphinDB Tutorial: Distributed Database.

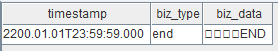

The createEnd operate additionally features a sortColumn parameter to find out whether or not to name the built-in replay operate with or with out its sortColumns parameter. The next determine demonstrates an finish sign within the output desk when sortColumn is ready to NULL.
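Because submitJob returns before the replay finishes, a consumer has no other way to tell that no more rows are coming; the “end” record fills that gap. Below is a minimal pure-Python model of such a consumer: it reads rows shaped like the output table (timestamp, biz_type, biz_data) and stops at the first “end” record. consume_until_end is a hypothetical name; in a real client the rows arrive through an API subscription (Chapter 5) and biz_data is a serialized BLOB.

```python
from collections import Counter


def consume_until_end(rows):
    """Count rows per biz_type until the first "end" record.

    rows: iterable of (timestamp, biz_type, biz_data) tuples.
    Returns (counts, finished); finished is False if the input ran out
    before an end signal was seen.
    """
    counts = Counter()
    for _ts, biz_type, _data in rows:
        if biz_type == "end":
            return dict(counts), True
        counts[biz_type] += 1
    return dict(counts), False
```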

3.5 Encapsulate Functions

The functions described above are encapsulated into function views with the addFunctionView function, as shown in the following script:

```
addFunctionView(dsTb)
addFunctionView(createEnd)
addFunctionView(replayJob)
addFunctionView(stkReplay)
```

4. Replay Data With APIs

Creating function views simplifies the interface for API clients: to start a replay, an API client only needs to invoke the stkReplay function. All replay processes in this chapter are built on these function views.

4.1 With C++ API

This example runs on Linux with a DolphinDB C++ API environment set up. Once the executable is compiled, you can run it from the command line. The full script is available in the Appendices: C++ Code.

The C++ application interacts with the DolphinDB server through a DBConnection object (conn). Through DBConnection, you can execute scripts and functions on the DolphinDB server and transfer data in both directions.

The application invokes the stkReplay function on the DolphinDB server with the DBConnection::run method, where args holds all the required arguments for stkReplay. The function’s result is captured in a dictionary named result. The application then reads the “errorCode” key of the result with the get method. If errorCode is not “1”, an error occurred; in this case, the application prints the error message and terminates the program.

The following code demonstrates how to call the stkReplay function:

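```cpp
DictionarySP result = conn.run("stkReplay", args);
string errorCode = result->get(Util::createString("errorCode"))->getString();
if (errorCode != "1")
{
    // Print the returned dictionary (including errorMsg) and stop
    std::cout << result->getString() << std::endl;
    return -1;
}
```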
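For comparison, the same success check can be written for the Python API used in section 4.2. This sketch assumes the dictionary returned by stkReplay arrives as a plain Python dict with “errorCode” and “errorMsg” keys; ReplayError and check_replay_result are hypothetical names.

```python
class ReplayError(RuntimeError):
    """Raised when stkReplay reports a failure (errorCode other than "1")."""


def check_replay_result(result):
    """Validate the returnBody dictionary returned by stkReplay.

    Assumes result is a Python dict with string keys "errorCode" and "errorMsg".
    Returns the success message, or raises ReplayError with the server's message.
    """
    if result.get("errorCode") != "1":
        raise ReplayError(result.get("errorMsg", "unknown error"))
    return result.get("errorMsg", "")
```

A possible usage is `check_replay_result(s.run("stkReplay(stk_list, start_date, end_date, replay_rate, replay_uuid, replay_name)"))`. Raising an exception, rather than exiting, lets the caller decide whether to retry or abort.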

When multiple users initiate replay operations, each replayed stream table needs a unique name to prevent conflicts caused by duplicate table names. The following code generates a random identifier for each user, such as “Eq8Jk8Dd0Tw5Ej8D”.

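```cpp
string uuid(int len)
{
    char* str = (char*)malloc(len + 1);
    srand(getpid());
    for (int i = 0; i < len; ++i)  // pattern: upper-case letter, lower-case letter, digit, repeating
        str[i] = (i % 3 == 0) ? 'A' + rand() % 26 : (i % 3 == 1) ? 'a' + rand() % 26 : '0' + rand() % 10;
    str[len] = '\0';
    string result(str);
    free(str);
    return result;
}
```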

4.2 With Python API

This example runs on Windows with a DolphinDB Python API environment set up. The full script is available in the Appendices: Python Code.

The Python application interacts with the DolphinDB server through a session object (s). Through the session, you can execute scripts and functions on the DolphinDB server and transfer data in both directions.

Once the required variables (stk_list, start_date, end_date, replay_rate, replay_uuid, and replay_name) are defined, the upload method uploads these objects to the DolphinDB server, and the run method executes the stkReplay function.

The following code demonstrates how to call the stkReplay function:

```python
stk_list = ['000616.SZ', '000681.SZ']
start_date = "20211201"
end_date = "20211201"
replay_rate = -1            # -1 replays at maximum speed
replay_name = ['snapshot']
# Upload the arguments to the server session, then call the function view
s.upload({'stk_list': stk_list, 'start_date': start_date, 'end_date': end_date,
          'replay_rate': replay_rate, 'replay_uuid': uuidStr, 'replay_name': replay_name})
s.run("stkReplay(stk_list, start_date, end_date, replay_rate, replay_uuid, replay_name)")
```

When multiple users initiate replay operations, the following code generates an identifier for each user to prevent conflicts caused by duplicate table names. The identifier is a string such as “Eq8Jk8Dd0Tw5Ej8D”.

```python
import os
import random


def uuid(length):
    result = ""
    for i in range(length):
        if i % 3 == 0:    # upper-case letter
            result += chr(ord('A') + random.randint(0, os.getpid() + 1) % 26)
        elif i % 3 == 1:  # lower-case letter
            result += chr(ord('a') + random.randint(0, os.getpid() + 1) % 26)
        else:             # digit
            result += chr(ord('0') + random.randint(0, os.getpid() + 1) % 10)
    return result


uuidStr = uuid(16)
```
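An alternative sketch produces the same upper/lower/digit pattern by drawing each character with Python’s secrets module, which uses the operating system’s cryptographically secure random source. This is not the tutorial’s code, and replay_uuid is a hypothetical name. Random identifiers make name collisions unlikely but do not rule them out.

```python
import secrets
import string


def replay_uuid(length=16):
    """Return an identifier like 'Eq8Jk8Dd0Tw5Ej8D': upper, lower, digit, repeating."""
    pools = (string.ascii_uppercase, string.ascii_lowercase, string.digits)
    # secrets.choice draws from the OS's cryptographically secure random source
    return "".join(secrets.choice(pools[i % 3]) for i in range(length))


if __name__ == "__main__":
    print("replay_" + replay_uuid())
```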

5. Consume Replayed Data With APIs

This chapter introduces how to consume replayed data with the C++ API and Python API.

5.1 With C++ API

5.1.1 Construct a Deserializer

To deserialize the replayed data (which is stored in a heterogeneous table), a deserializer must be constructed first. Use the following code to construct one:

```cpp
// Fetch the schema of each source table and of the end-signal table
DictionarySP snap_full_schema = conn.run("loadTable(\"dfs://Test_snapshot\", \"snapshot\").schema()");
DictionarySP order_full_schema = conn.run("loadTable(\"dfs://Test_order\", \"order\").schema()");
DictionarySP transac_full_schema = conn.run("loadTable(\"dfs://Test_transaction\", \"transaction\").schema()");
DictionarySP end_full_schema = conn.run("loadTable(\"dfs://End\", \"endline\").schema()");

// Map each biz_type symbol to the schema used to decode its rows
unordered_map<string, DictionarySP> sym2schema;
sym2schema["snapshot"] = snap_full_schema;
sym2schema["order"] = order_full_schema;
sym2schema["transaction"] = transac_full_schema;
sym2schema["end"] = end_full_schema;
StreamDeserializerSP sdsp = new StreamDeserializer(sym2schema);
```

The first four statements get the table schemata of “snapshot”, “order”, “transaction”, and the end signal. During replay, only the schemata of the replayed sources are needed, but the end signal schema is always required.

To handle heterogeneous stream tables, a StreamDeserializer object is created from the sym2schema map, which maps symbols (the keys identifying source tables) to their respective table schemata. Both the data sources and the end signal are then deserialized based on these schemata.

For detailed instructions, see C++ API Reference Guide: Constructing a Deserializer.

5.1.2 Subscribe to Replayed Data

Execute the following code to subscribe to the output table:

```cpp
int listenport = 10260;
ThreadedClient threadedClient(listenport);
string tableNameUuid = "replay_" + uuidStr;
auto thread = threadedClient.subscribe(hostName, port, myHandler, tableNameUuid, "stkReplay", 0, true, nullptr, true, 500000, 0.001, false, "admin", "123456", sdsp);
thread->join();  // block until the subscription thread exits
```

Where:

- listenport is the subscription port of the single-threaded client.

- tableNameUuid is the name of the stream table to be consumed.

We subscribe to the stream table with threadedClient.subscribe. The returned thread is the one that continuously invokes myHandler; it stops calling myHandler when unsubscribe is called on the same topic.

For detailed instructions, see the C++ API Reference Guide: Subscription.

5.1.3 Define the Handler for Processing Data

The threadedClient.subscribe method is used to subscribe to the heterogeneous stream table. During this process, the StreamDeserializerSP instance deserializes incoming data and routes it to a user-defined handler function. Users can customize the processing logic by implementing their own myHandler function.

In this example, the handler simply prints the data. When a message is flagged as “end”, the subscription is terminated with threadedClient.unsubscribe. The implementation of myHandler is as follows:

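```cpp
long sumcount = 0;
long long starttime = Util::getNanoEpochTime();
auto myHandler = [&](vector<Message> msgs)
{
    for (auto& msg : msgs)
    {
        // Print the source table (symbol) and the deserialized row
        std::cout << msg.getSymbol() << " : " << msg->getString() << std::endl;
        if (msg.getSymbol() == "end")
        {
            // The end signal has arrived: stop the subscription
            threadedClient.unsubscribe(hostName, port, tableNameUuid, "stkReplay");
        }
        sumcount += msg->get(0)->size();
    }
    long long speed = (Util::getNanoEpochTime() - starttime) / sumcount;
    std::cout << "callback speed: " << speed << "ns" << std::endl;
};
```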

5.1.4 Run the Application

After compiling the main file containing the code above in the DolphinDB C++ API environment, you can start the replay, subscription, and consumption process with the following commands.

- To replay data of a single stock from one table (“order”) for one day at maximum speed:

```shell
$ ./main 000616.SZ 20211201 20211201 -1 order
```

- To replay data of two (or more) stocks from three tables (“snapshot”, “order”, “transaction”) for one day at maximum speed:

```shell
$ ./main 000616.SZ,000681.SZ 20211201 20211201 -1 snapshot,order,transaction
```

5.1.5 Output the Result

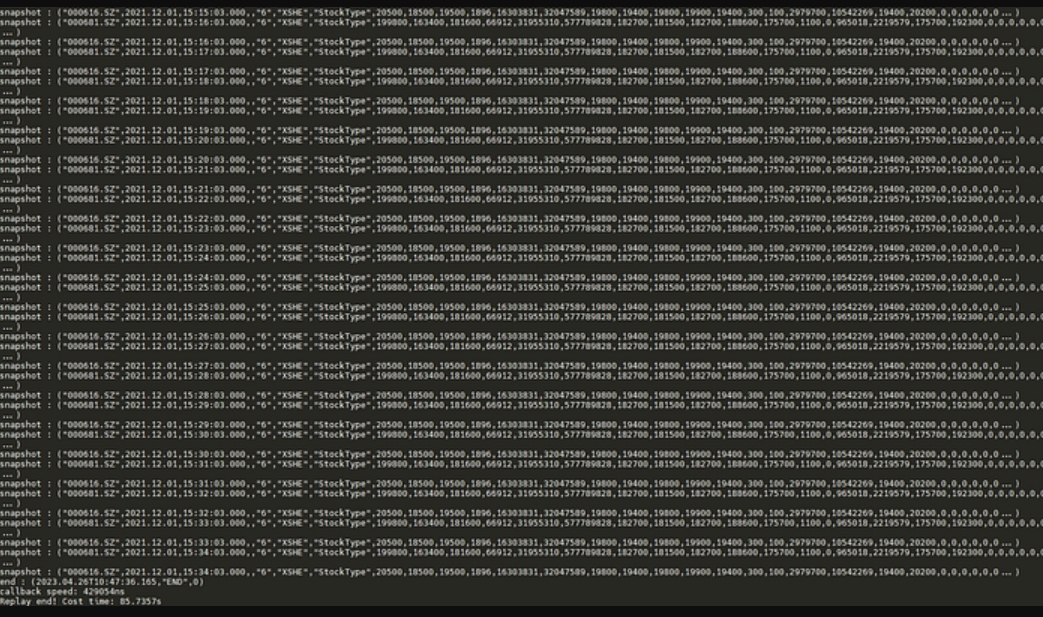

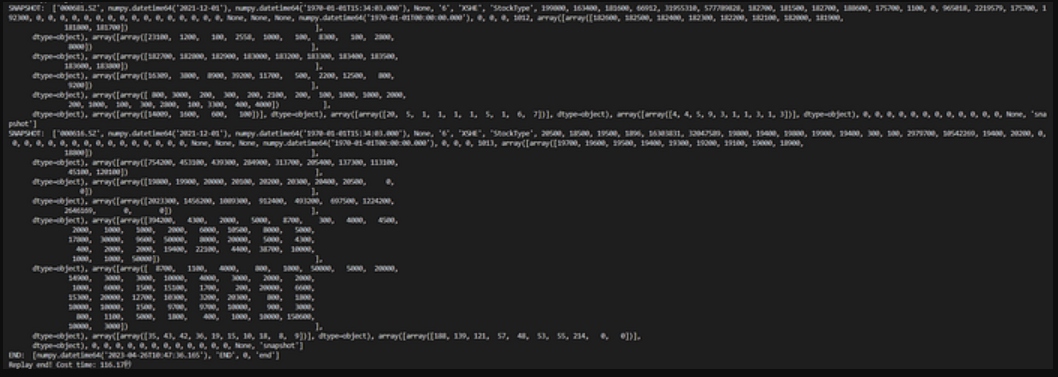

The figures above show the output, including the result of replaying two stocks (“000616.SZ” and “000681.SZ”) from three tables at maximum speed.

5.2 With Python API

5.2.1 Construct a Deserializer

To deserialize the replayed data (which is stored in a heterogeneous table), a deserializer must be constructed first. Use the following code to construct one:

```python
# Map each biz_type symbol to [database path, table name] of its schema source
sd = ddb.streamDeserializer({
    'snapshot': ["dfs://Test_snapshot", "snapshot"],
    'order': ["dfs://Test_order", "order"],
    'transaction': ["dfs://Test_transaction", "transaction"],
    'end': ["dfs://End", "endline"],
}, s)
```

To handle heterogeneous stream tables, a streamDeserializer object is created. Its sym2table parameter is a dictionary whose keys are the source names (“snapshot”, “order”, “transaction”, and the end signal “end”) and whose values identify each table’s schema (here, as a [database path, table name] pair). Note that during replay, only the schemata of the replayed sources are needed, but the end signal schema is always required.

For detailed instructions, see Python API Reference Guide: streamDeserializer.

5.2.2 Subscribe to Replayed Data

Execute the following code to subscribe to the output table:

```python
s.enableStreaming(0)
s.subscribe(host=hostname, port=portname, handler=myHandler, tableName="replay_" + uuidStr,
            actionName="replay_stock_data", offset=0, resub=False, msgAsTable=False,
            streamDeserializer=sd, userName="admin", password="123456")
event.wait()  # block until myHandler receives the end signal
```

The enableStreaming method enables streaming data subscription. s.subscribe then creates the subscription, and event.wait() blocks the current thread while data is received in the background.

For detailed instructions, see the Python API Reference Guide: Subscription.

5.2.3 Define the Handler for Processing Data

The s.subscribe method is used to subscribe to the heterogeneous stream table. During this process, the streamDeserializer instance deserializes incoming data and routes it to a user-defined handler function. Users can customize the processing logic by implementing their own myHandler function.

In this example, the handler prints each record according to its source, which is identified by the last element of the message. When the end signal arrives, event.set() releases the main thread waiting in event.wait(). The implementation of myHandler is as follows:

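```python
def myHandler(lst):
    # The deserializer appends the source symbol as the last element
    if lst[-1] == "snapshot":
        print("SNAPSHOT: ", lst)
    elif lst[-1] == 'order':
        print("ORDER: ", lst)
    elif lst[-1] == 'transaction':
        print("TRANSACTION: ", lst)
    else:
        print("END: ", lst)
        event.set()  # end signal received: release event.wait()
```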

5.2.4 Output the Result

The following figure shows the result of replaying two stocks (“000616.SZ” and “000681.SZ”) from three tables at maximum speed:

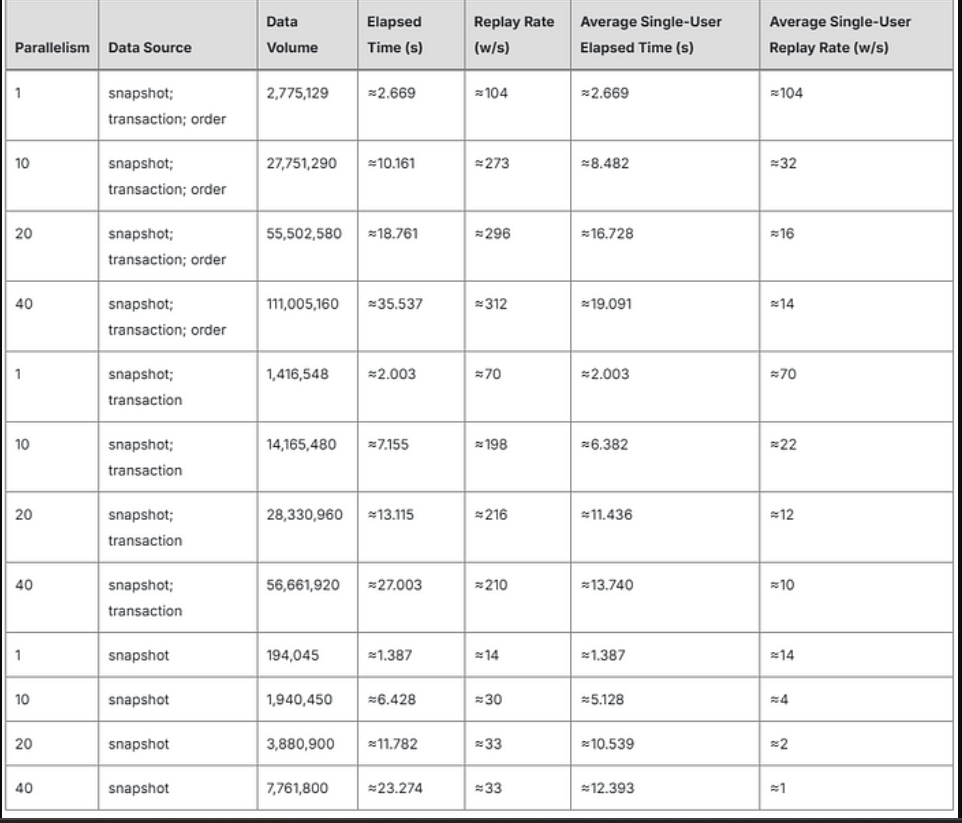

6. Performance Testing

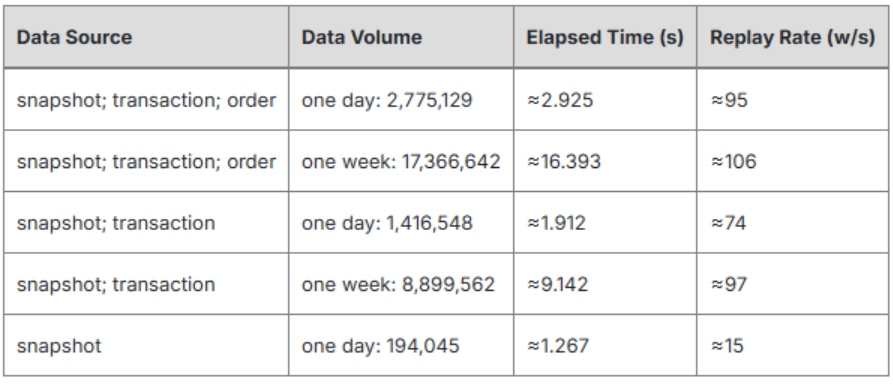

We selected 50 stocks over the period from 2021.12.01 to 2021.12.09 for performance testing. The test scripts can be found in the Appendices: C++ Test Code.

6.1 Test Environment

- Processor family: Intel(R) Xeon(R) Silver 4216 CPU @ 2.10GHz

- CPU(s): 64

- Memory: 503 GB

- Disk: SSD

- OS: CentOS Linux release 7.9.2009 (Core)

- DolphinDB Server: version 2.00.9.3 (released on 2023.03.29)

6.2 Concurrent Replays of 50 Stocks on One Trading Day

We selected 50 stocks traded on 2021.12.01. Multiple instances of the C++ API replay program (described in Chapter 4) were started simultaneously in the Linux background to submit concurrent replay tasks. The metrics are defined as follows:

- Elapsed time: The latest finish time minus the earliest receive time across all replay tasks

- Replay rate: The total volume of data replayed by all users / the total elapsed time

- Average single-user elapsed time: (The sum of end times minus the sum of start times) / the number of concurrent tasks

- Average single-user replay rate: The volume of data replayed by a single user / the average single-user elapsed time
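The metric definitions above can be made concrete with a short sketch. TaskRun and concurrency_metrics are hypothetical names. Each task is assumed to record its start time, the time its first replayed record was received (used as the “receive time”), its finish time, and its row count, all in one consistent time unit. The average single-user replay rate assumes every user replays a comparable volume, so it uses the mean row count per task.

```python
from dataclasses import dataclass


@dataclass
class TaskRun:
    start: float          # when the replay task was started
    first_receive: float  # when its first replayed record was received
    finish: float         # when the task finished
    rows: int             # number of records replayed for this task


def concurrency_metrics(tasks):
    """Compute the four metrics of section 6.2 for a list of TaskRun records."""
    n = len(tasks)
    total_rows = sum(t.rows for t in tasks)
    # Elapsed time: latest finish time minus earliest receive time
    elapsed = max(t.finish for t in tasks) - min(t.first_receive for t in tasks)
    # Average single-user elapsed time: (sum of end times - sum of start times) / n
    avg_user_elapsed = (sum(t.finish for t in tasks) - sum(t.start for t in tasks)) / n
    # Assumption: every user replays a comparable volume, so use the mean row count
    avg_user_rows = total_rows / n
    return {
        "elapsed": elapsed,
        "replay_rate": total_rows / elapsed,
        "avg_user_elapsed": avg_user_elapsed,
        "avg_user_rate": avg_user_rows / avg_user_elapsed,
    }
```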

6.3 Replays of 50 Stocks Across Multiple Trading Days

We selected 50 stocks over the period from 2021.12.01 to 2021.12.09. One instance of the C++ API replay program (described in Chapter 4) was started in the Linux background to submit a task that replays data across multiple trading days.

- Elapsed time = The latest finish time minus the earliest receive time

- Replay rate = Data volume / the total elapsed time

7. Development Environment

7.1 DolphinDB Server

- Server Version: 2.00.9.3 (released on 2023.03.29)

- Deployment: standalone mode (see standalone deployment)

- Configuration: cluster.cfg

```
maxMemSize=128
maxConnections=5000
workerNum=24
webWorkerNum=2
chunkCacheEngineMemSize=16
newValuePartitionPolicy=add
logLevel=INFO
maxLogSize=512
node1.volumes=/ssd/ssd3/pocTSDB/volumes/node1,/ssd/ssd4/pocTSDB/volumes/node1
node2.volumes=/ssd/ssd5/pocTSDB/volumes/node2,/ssd/ssd6/pocTSDB/volumes/node2
node3.volumes=/ssd/ssd7/pocTSDB/volumes/node3,/ssd/ssd8/pocTSDB/volumes/node3
diskIOConcurrencyLevel=0
node1.redoLogDir=/ssd/ssd3/pocTSDB/redoLog/node1
node2.redoLogDir=/ssd/ssd4/pocTSDB/redoLog/node2
node3.redoLogDir=/ssd/ssd5/pocTSDB/redoLog/node3
node1.chunkMetaDir=/ssd/ssd3/pocTSDB/metaDir/chunkMeta/node1
node2.chunkMetaDir=/ssd/ssd4/pocTSDB/metaDir/chunkMeta/node2
node3.chunkMetaDir=/ssd/ssd5/pocTSDB/metaDir/chunkMeta/node3
node1.persistenceDir=/ssd/ssd6/pocTSDB/persistenceDir/node1
node2.persistenceDir=/ssd/ssd7/pocTSDB/persistenceDir/node2
node3.persistenceDir=/ssd/ssd8/pocTSDB/persistenceDir/node3
maxPubConnections=128
subExecutors=24
subThrottle=1
persistenceWorkerNum=1
node1.subPort=8825
node2.subPort=8826
node3.subPort=8827
maxPartitionNumPerQuery=200000
streamingHAMode=raft
streamingRaftGroups=2:node1:node2:node3
node1.streamingHADir=/ssd/ssd6/pocTSDB/streamingHADir/node1
node2.streamingHADir=/ssd/ssd7/pocTSDB/streamingHADir/node2
node3.streamingHADir=/ssd/ssd8/pocTSDB/streamingHADir/node3
TSDBCacheEngineSize=16
TSDBCacheEngineCompression=false
node1.TSDBRedoLogDir=/ssd/ssd3/pocTSDB/TSDBRedoLogDir/node1
node2.TSDBRedoLogDir=/ssd/ssd4/pocTSDB/TSDBRedoLogDir/node2
node3.TSDBRedoLogDir=/ssd/ssd5/pocTSDB/TSDBRedoLogDir/node3
TSDBLevelFileIndexCacheSize=20
TSDBLevelFileIndexCacheInvalidPercent=0.6
lanCluster=0
enableChunkGranularityConfig=true
```

Note: Modify configuration parameters such as persistenceDir to use your own paths.

7.2 DolphinDB Client

- Processor family: Intel(R) Core(TM) i5-11500 @ 2.70GHz 2.71 GHz

- CPU(s): 12

- Memory: 16 GB

- OS: Windows 11 Pro

- DolphinDB GUI Version: 1.30.20.1

See GUI for instructions on installing DolphinDB GUI.

7.3 DolphinDB C++ API

- C++ API Model: release200.9

Note: It is recommended to install the C++ API version that corresponds to the DolphinDB server. For example, install release 200 of the API for DolphinDB server V2.00.9.

7.4 DolphinDB Python API

8. Appendices

- Script for creating databases and tables: