Producing well-structured JSON output can be a complex task, particularly when working with large language models (LLMs). This article explores generating JSON output from LLMs, using a Node.js-powered web application as an example.

Large Language Models (LLMs)

LLMs are sophisticated AI systems designed to understand and produce human-like text, capable of handling tasks such as translation, summarization, and content creation. Models like GPT (by OpenAI), BERT, and Claude have been instrumental in advancing natural language processing, making them valuable tools for chatbots and other AI-driven applications.

JavaScript Object Notation (JSON)

JSON is a lightweight data format that is easy to read and write, both for people and computers. It organizes data into key/value pairs (objects) and ordered lists (arrays) using a text format that, while based on JavaScript, is compatible with many programming languages. JSON is commonly used for data exchange between web servers and applications and is also popular for configuration files and storing structured data.
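As a small illustration of the objects and arrays described above, the following sketch round-trips a JavaScript object through JSON text with the built-in JSON.stringify and JSON.parse. The place record and its fields are invented for illustration only.

```javascript
// Hypothetical record used only for illustration.
const place = {
  name: "Golden Gate Bridge",
  type: "landmark",
  tags: ["bridge", "viewpoint"]
};

// Serialize the object to JSON text (2-space indentation for readability).
const jsonText = JSON.stringify(place, null, 2);

// Parse the text back into a plain JavaScript object.
const parsed = JSON.parse(jsonText);

console.log(jsonText);
console.log(parsed.tags[1]); // prints "viewpoint"
```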

OpenAI API

The OpenAI API enables developers to integrate advanced AI functionality into their applications, products, and services by providing access to OpenAI’s state-of-the-art language models and other AI technologies.

The API follows a RESTful design, with requests and responses formatted in JSON. It supports numerous programming languages, aided by both official and community-built libraries. Pricing is usage-based, calculated in tokens (about 4 characters per token), with different prices for different models. Regular updates add new models and capabilities. To get started, developers obtain an API key by signing up or logging in on OpenAI’s API platform.
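To make the token-based pricing concrete, here is a rough sketch that applies the "about 4 characters per token" rule of thumb. It is only an approximation, since real token counts depend on the model's tokenizer. The function names and the pricePerMillionTokens parameter are hypothetical, and actual prices must be taken from OpenAI's pricing page.

```javascript
// Rule of thumb from the text above: about 4 characters per token.
const CHARS_PER_TOKEN = 4;

// Approximate the number of tokens in a piece of text.
function estimateTokens(text) {
  return Math.ceil(text.length / CHARS_PER_TOKEN);
}

// Approximate the cost of sending the text.
// pricePerMillionTokens is a hypothetical, caller-supplied price.
function estimateCost(text, pricePerMillionTokens) {
  return (estimateTokens(text) / 1e6) * pricePerMillionTokens;
}

const prompt = "List three places to visit in Paris.";
console.log(`Estimated tokens: ${estimateTokens(prompt)}`);
```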

Here is an example web application powered by Node.js that uses the OpenAI API. A Node.js server script runs the web application, taking input from a user and calling the OpenAI API to get results from the LLM.

require('dotenv').config();

const specific = require('specific');

const axios = require('axios');

const app = specific();

const PORT = 3000;

app.use(specific.static('public'));

app.use(specific.json());

app.publish('/api/fetch', async (req, res) => {

strive {

const response = await axios.publish('https://api.openai.com/v1/chat/completions', {

mannequin: "gpt-4-turbo",

messages: [{ role: "system", content: "You are a helpful assistant." }, { role: "user", content: req.body.prompt }]

}, {

headers: {

'Authorization': `Bearer ${course of.env.OPENAI_API_KEY}`,

'Content material-Sort': 'utility/json'

}

});

const messageContent = response.knowledge.decisions[0].message.content material;

res.json({ message: messageContent });

} catch (error) {

res.standing(500).json({ error: 'Failure to get response from OpenAI', particulars: error.message });

}

});

app.hear(PORT, () => {

console.log(`Server working on http://localhost:${PORT}`);

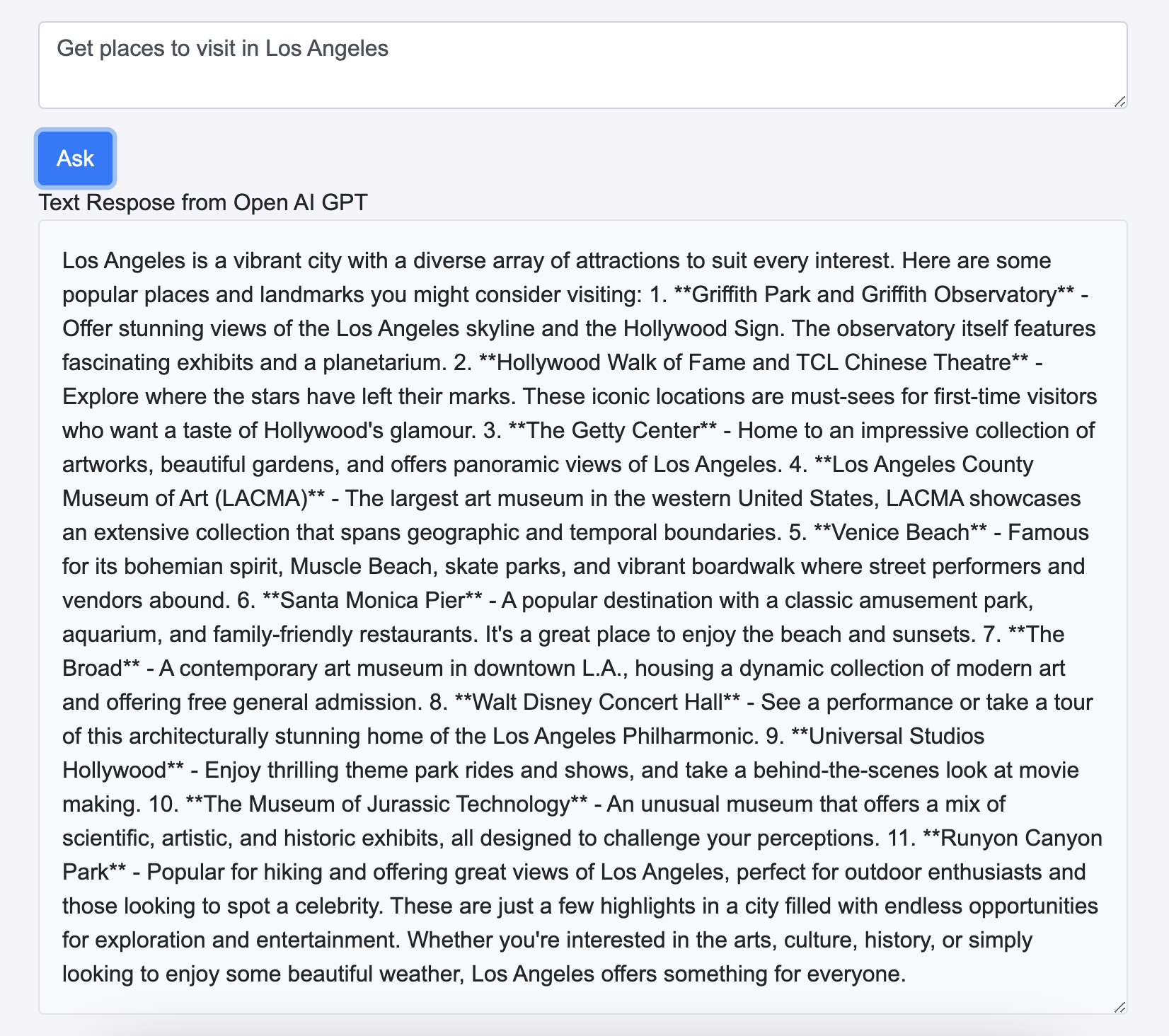

});The above code runs the online app server, however at any time when the consumer enters the question, the response is returned in textual content format. Right here is the way it appears to be like when built-in with the Person Interface that asks the consumer for textual content.

Textual content Response from Open AI API utilizing gpt-4-turbo

It would have been more helpful if the OpenAI API had responded in JSON with location details. That would allow the user interface to be more intuitive and actionable, and would make integrations with other applications seamless for the user.

OpenAI API JSON Response Format

Open AI API helps the response_format parameter in API, the place the sort may be outlined.

response_format: { kind: "json_object" } const response = await axios.publish('https://api.openai.com/v1/chat/completions', {

mannequin: "gpt-4-turbo",

response_format: { kind: "json_object" },

messages: [{ role: "system", content: "You are a helpful assistant. return results in json format" }, { role: "user", content: req.body.prompt }]

}, {

headers: {

'Authorization': `Bearer ${course of.env.OPENAI_API_KEY}`,

'Content material-Sort': 'utility/json'

}

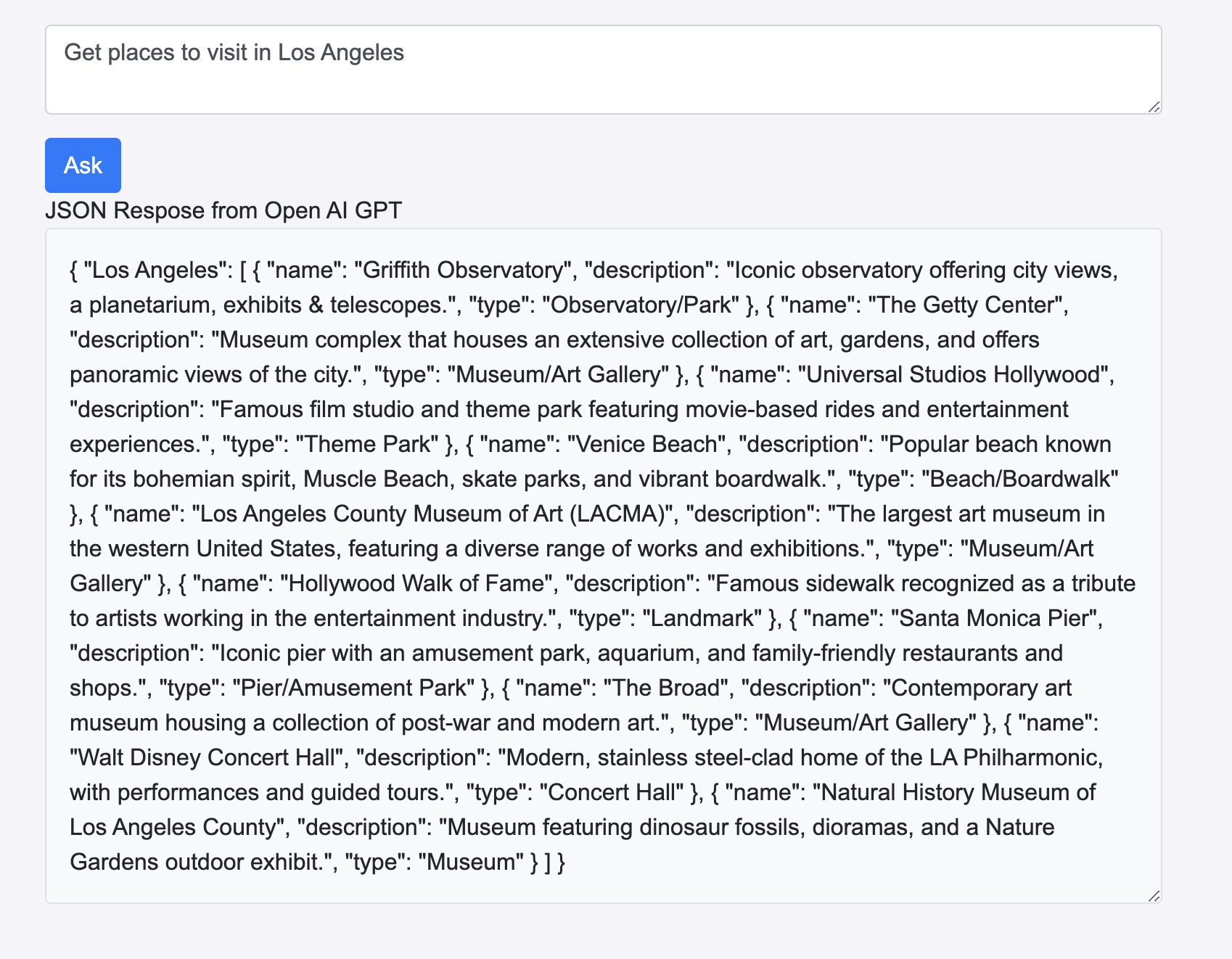

});Bettering the earlier code with response format to be a JSON object will look as follows. Nonetheless, simply modifying the response format is not going to return leads to JSON. The messages handed in Open AI API ought to convey the JSON format to be returned.

Right here is the modified code which is able to return leads to JSON format:

// Load OPENAI_API_KEY from a local .env file
require('dotenv').config();
const express = require('express');
const axios = require('axios');

const app = express();
const PORT = 3000;

app.use(express.static('public'));
app.use(express.json());

app.post('/api/fetch', async (req, res) => {
  try {
    const response = await axios.post('https://api.openai.com/v1/chat/completions', {
      model: "gpt-4-turbo",
      // Ask for a JSON object; the system message must also mention JSON
      response_format: { type: "json_object" },
      messages: [{ role: "system", content: "You are a helpful assistant. return results in json format" }, { role: "user", content: req.body.prompt }]
    }, {
      headers: {
        'Authorization': `Bearer ${process.env.OPENAI_API_KEY}`,
        'Content-Type': 'application/json'
      }
    });
    // message.content is a JSON string that the client can parse
    const messageContent = response.data.choices[0].message.content;
    res.json({ message: messageContent });
  } catch (error) {
    res.status(500).json({ error: 'Failure to get response from OpenAI', details: error.message });
  }
});

app.listen(PORT, () => {
  // Do not log the API key itself; secrets should stay out of console output
  console.log(`Web app server running on http://localhost:${PORT}`);
});

The above code runs the web app server, and whenever the user enters a query, the response is returned in JSON format as shown below. This allows the user interface to parse the JSON response and then integrate with third-party widgets to provide actionable user interfaces.

Text Response from OpenAI API using gpt-4-turbo
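On the client side, the message field returned by the server above is still a string that has to be parsed. The following is a minimal sketch of defensive parsing. The helper name parseModelJson, the sample reply, and the places key are assumptions for illustration: JSON mode asks for valid JSON but does not fix which keys the model chooses.

```javascript
// Parse the JSON string produced by the model; return null if it is not valid JSON.
function parseModelJson(content) {
  try {
    return JSON.parse(content);
  } catch (err) {
    return null;
  }
}

// Hypothetical reply in the shape the server sends: { message: "<JSON string>" }.
const serverReply = {
  message: '{"places":[{"name":"Louvre","type":"museum"}]}'
};

const result = parseModelJson(serverReply.message);

// Check the shape before using it, since the key names are not guaranteed.
if (result && Array.isArray(result.places)) {
  result.places.forEach((p) => console.log(`${p.name} (${p.type})`));
} else {
  console.log("Model did not return the expected JSON shape");
}
```

Checking the parsed shape before rendering keeps the user interface from failing when the model picks different key names than expected.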

OpenAI API JSON Response With Function Calling

Function calling is a powerful technique for producing structured JSON responses. It lets developers define specific functions with preset parameters and return types. This helps the language model understand the function’s purpose and produce responses that match the required structure. By narrowing down the output to match the expected format, this approach improves both accuracy and consistency in API interactions.

Here is the modified version of the previous code using function calling:

require('dotenv').config();

const specific = require('specific');

const axios = require('axios');

const app = specific();

const PORT = 3000;

app.use(specific.static('public'));

app.use(specific.json());

app.publish('/api/fetch', async (req, res) => {

strive {

const instruments = [

{

"type": "function",

"function": {

"name": "get_places_in_city",

"description": "Get the places to visit in city",

"parameters": {

"type": "object",

"properties": {

"name": {

"type": "string",

"description": "Name of the place",

},

"description": {

"type": "string",

"description": "description of the place",

},

"type":{

"type": "string",

"description": "type of the place",

}

},

"required": ["name","description","type" ],

additionalProperties: false

},

}

}

];

//const func = {"role": "function", "name": "get_places_in_city", "content": "{"name": "", "description": "", "type": ""}"};

const response = await axios.publish('https://api.openai.com/v1/chat/completions', {

mannequin: "gpt-4o",

messages: [{ role: "system", content: "You are a helpful assistant. return results in json format" }, { role: "user", content: req.body.prompt }],

instruments: instruments

}, {

headers: {

'Authorization': `Bearer ${course of.env.OPENAI_API_KEY}`,

'Content material-Sort': 'utility/json'

}

});

console.error('Error in response of OpenAI API:', response.knowledge.decisions[0].message? JSON.stringify(response.knowledge.decisions[0].message, null, 2) : error.message);

const toolCalls = response.knowledge.decisions[0].message.tool_calls;

let messageContent="";

if(toolCalls){

toolCalls.forEach((functionCall)=>{

messageContent += functionCall.perform.arguments;

});

}

res.json({ message: messageContent });

} catch (error) {

res.standing(500).json({ error: 'Failure to get response from OpenAI', particulars: error.message });

}

});

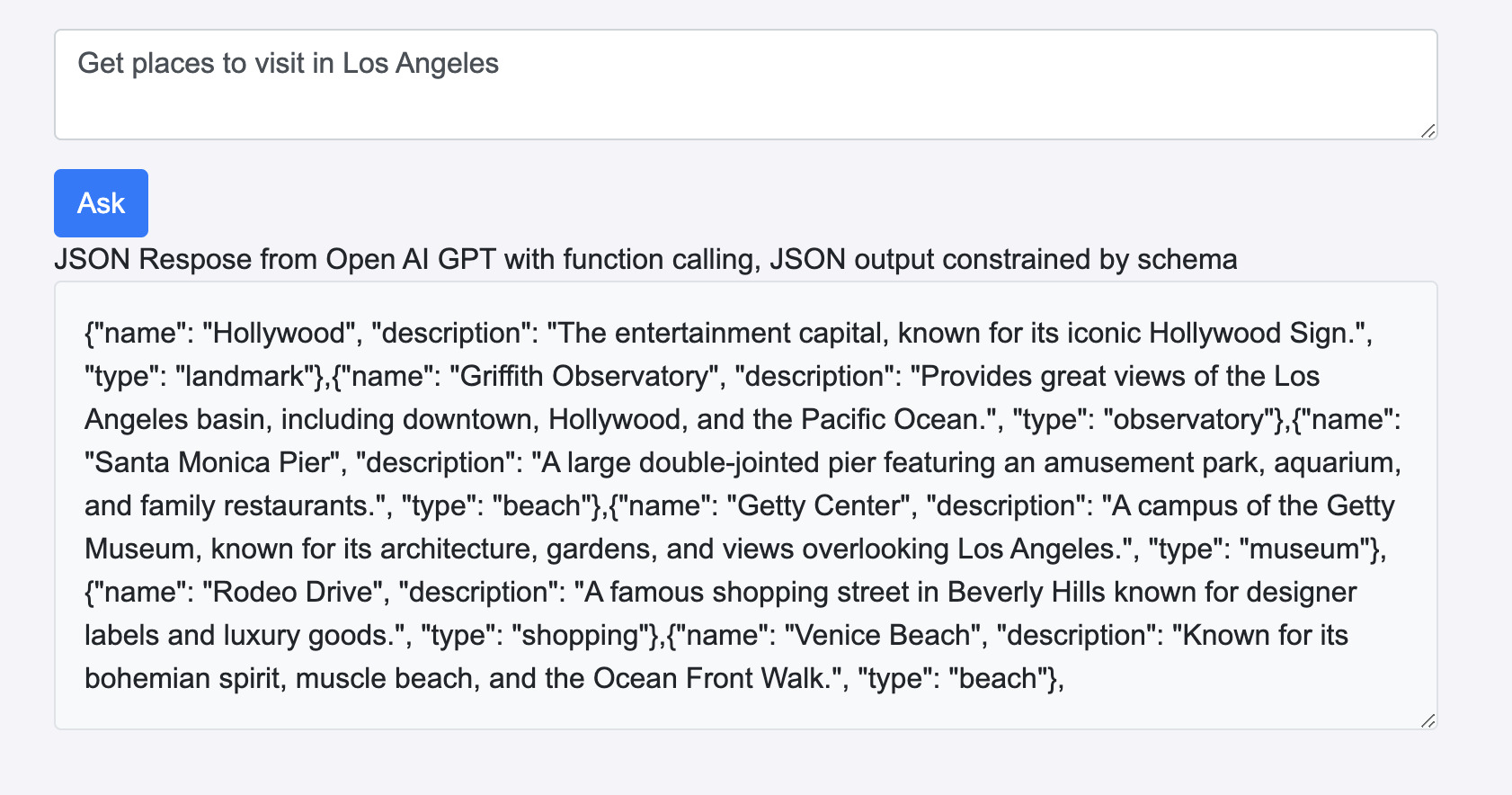

app.hear(PORT, () => {});This code launches an online app server, and at any time when the consumer submits a question, the server responds with knowledge in JSON format. This response can then be parsed inside the consumer interface, making it potential to combine third-party widgets for creating actionable, interactive components.

JSON response from OpenAIGPT with perform calling
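Because each tool call carries its arguments as a separate JSON string, a client or server may prefer to parse them individually instead of concatenating them. The sketch below shows one way to do that under stated assumptions: extractPlaces and the sample tool calls are hypothetical, and the required fields mirror the schema defined in the code above.

```javascript
// Fields the tool schema above marks as required.
const REQUIRED_FIELDS = ["name", "description", "type"];

// Convert message.tool_calls into parsed objects, skipping calls whose
// arguments are not valid JSON or are missing a required string field.
function extractPlaces(toolCalls) {
  const places = [];
  for (const call of toolCalls || []) {
    let args;
    try {
      args = JSON.parse(call.function.arguments);
    } catch (err) {
      continue; // arguments were not valid JSON
    }
    const complete =
      args !== null &&
      typeof args === "object" &&
      REQUIRED_FIELDS.every((field) => typeof args[field] === "string");
    if (complete) {
      places.push(args);
    }
  }
  return places;
}

// Hypothetical sample mirroring the shape of message.tool_calls.
const sampleToolCalls = [
  {
    type: "function",
    function: {
      name: "get_places_in_city",
      arguments: '{"name":"Eiffel Tower","description":"Iron lattice tower","type":"landmark"}'
    }
  },
  {
    type: "function",
    function: { name: "get_places_in_city", arguments: '{"name":"Louvre"}' }
  }
];

// Only the first call has all required fields.
console.log(extractPlaces(sampleToolCalls));
```

Returning an array of parsed objects gives the user interface a single valid JSON value to work with, however many tool calls the model makes.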

Conclusion

This article demonstrates, with examples, how to modify API calls to OpenAI to request JSON-formatted responses. This approach significantly enhances the usability of LLM outputs, enabling more intuitive and actionable user interfaces. By specifying the response_format parameter or by using function calling, and by crafting appropriate system messages, developers can have LLM responses returned in a structured JSON format.

This method of generating JSON output from LLMs facilitates seamless integration with other applications and allows for more sophisticated parsing and manipulation of AI-generated content. As AI continues to evolve, the ability to work with structured data formats like JSON will become increasingly valuable, enabling developers to create more powerful and user-friendly AI-driven applications.