Kong Gateway is an open-source API gateway that ensures only the right requests get in while managing security, rate limiting, logging, and more. OPA (Open Policy Agent) is an open-source policy engine that takes control of your security and access decisions. Think of it as the brain that decouples policy enforcement from your app, so your services don't have to worry about enforcing rules. Instead, OPA does the thinking with its Rego language, evaluating policies across APIs, microservices, and even Kubernetes. It's flexible and secure, and it makes updating policies a breeze.

OPA works by evaluating three key things: input (real-time data such as requests), data (external information such as user roles), and policy (the logic in Rego that decides whether to "allow" or "deny"). Together, these components let OPA keep your security strong while keeping things simple and consistent.
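To make the input/policy split concrete, here is a minimal Python sketch of how a client such as a gateway plugin might query OPA over its REST Data API. The per-request input is wrapped in an `{"input": ...}` envelope and POSTed to `/v1/data/<package path>`, and the decision comes back under `"result"`. The package path `authopa/allow` and the input field names are assumptions for illustration, not the demo's actual policy, and putting the user's roles in the input is an assumption too. The sketch only builds the request and parses a response body, so it runs without an OPA server.

```python
import json
import urllib.request

OPA_BASE_URL = "http://localhost:8181"  # OPA's address in this demo
DECISION_PATH = "authopa/allow"         # hypothetical package/rule path


def build_decision_request(request_input: dict) -> urllib.request.Request:
    """Wrap per-request data in the {"input": ...} envelope OPA's Data API expects."""
    body = json.dumps({"input": request_input}).encode("utf-8")
    return urllib.request.Request(
        f"{OPA_BASE_URL}/v1/data/{DECISION_PATH}",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def parse_decision(response_body: bytes) -> bool:
    """Anything other than an explicit true result (including an undefined rule) means deny."""
    document = json.loads(response_body)
    return document.get("result") is True


if __name__ == "__main__":
    request = build_decision_request({"path": "/demo", "method": "GET", "roles": ["Moderator"]})
    print(request.get_method(), request.full_url)
    print(parse_decision(b'{"result": true}'))
    print(parse_decision(b"{}"))
    # Against a running OPA, urllib.request.urlopen(request).read() would return the body.
```

Treating a missing `result` as a denial matters: OPA returns a response without `result` when the queried rule is undefined, and a gateway should fail closed in that case.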

What Are We Looking to Achieve or Solve?


Often, the data in OPA is like a good old friend: static or slowly changing. It's used alongside the ever-changing input data to make smart decisions. But imagine a system with a sprawling web of microservices, lots of users, and a huge database like PostgreSQL. This system handles a high volume of transactions every second and needs to keep up its speed and throughput without breaking a sweat.

Fine-grained access control in such a system is tricky, but with OPA, you can offload the heavy lifting from your microservices and handle it at the gateway level. By pairing Kong API Gateway with OPA, you get both top-notch throughput and precise access control.

How do you maintain accurate user data without slowing things down? Constantly hitting that PostgreSQL database to fetch millions of records is both expensive and slow. Achieving both accuracy and speed usually requires compromising between the two. Let's aim to strike a practical balance by developing a custom plugin (at the gateway level) that frequently loads and locally caches data for OPA to use when evaluating its policies.
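The trade-off above comes down to how long a cached copy may be served before it is refreshed. Here is a minimal Python sketch, assuming an in-process cache with a fixed time-to-live (TTL): an entry is served until it is `ttl_seconds` old, after which the next lookup reloads it from the source of truth. `TTLCache` and its parameters are hypothetical names; the demo itself caches in Redis, not in a Python dictionary.

```python
import time
from typing import Callable, Dict, Generic, Tuple, TypeVar

V = TypeVar("V")


class TTLCache(Generic[V]):
    """Cache-aside store whose entries expire after a fixed time-to-live."""

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic):
        self.ttl_seconds = ttl_seconds
        self.clock = clock  # injectable so expiry can be tested deterministically
        self._entries: Dict[str, Tuple[float, V]] = {}
        self.loads = 0  # how many times the slow source was hit

    def get_or_load(self, key: str, loader: Callable[[str], V]) -> V:
        now = self.clock()
        entry = self._entries.get(key)
        if entry is not None and now - entry[0] < self.ttl_seconds:
            return entry[1]  # still fresh: skip the database
        value = loader(key)  # miss or expired: reload from the source of truth
        self.loads += 1
        self._entries[key] = (now, value)
        return value


if __name__ == "__main__":
    fake_now = [0.0]
    cache = TTLCache(ttl_seconds=30, clock=lambda: fake_now[0])

    def load_roles(email: str):
        return ["Moderator"]  # stand-in for a PostgreSQL query

    cache.get_or_load("alice@example.com", load_roles)  # miss: loads
    fake_now[0] = 10
    cache.get_or_load("alice@example.com", load_roles)  # hit
    fake_now[0] = 45
    cache.get_or_load("alice@example.com", load_roles)  # expired: reloads
    print(cache.loads)  # 2
```

The TTL is the knob that trades accuracy for speed: shortening it bounds how stale a role can be, at the cost of more database queries. Redis can enforce the same kind of expiry server-side, for example with `SET key value EX seconds`.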

Demo

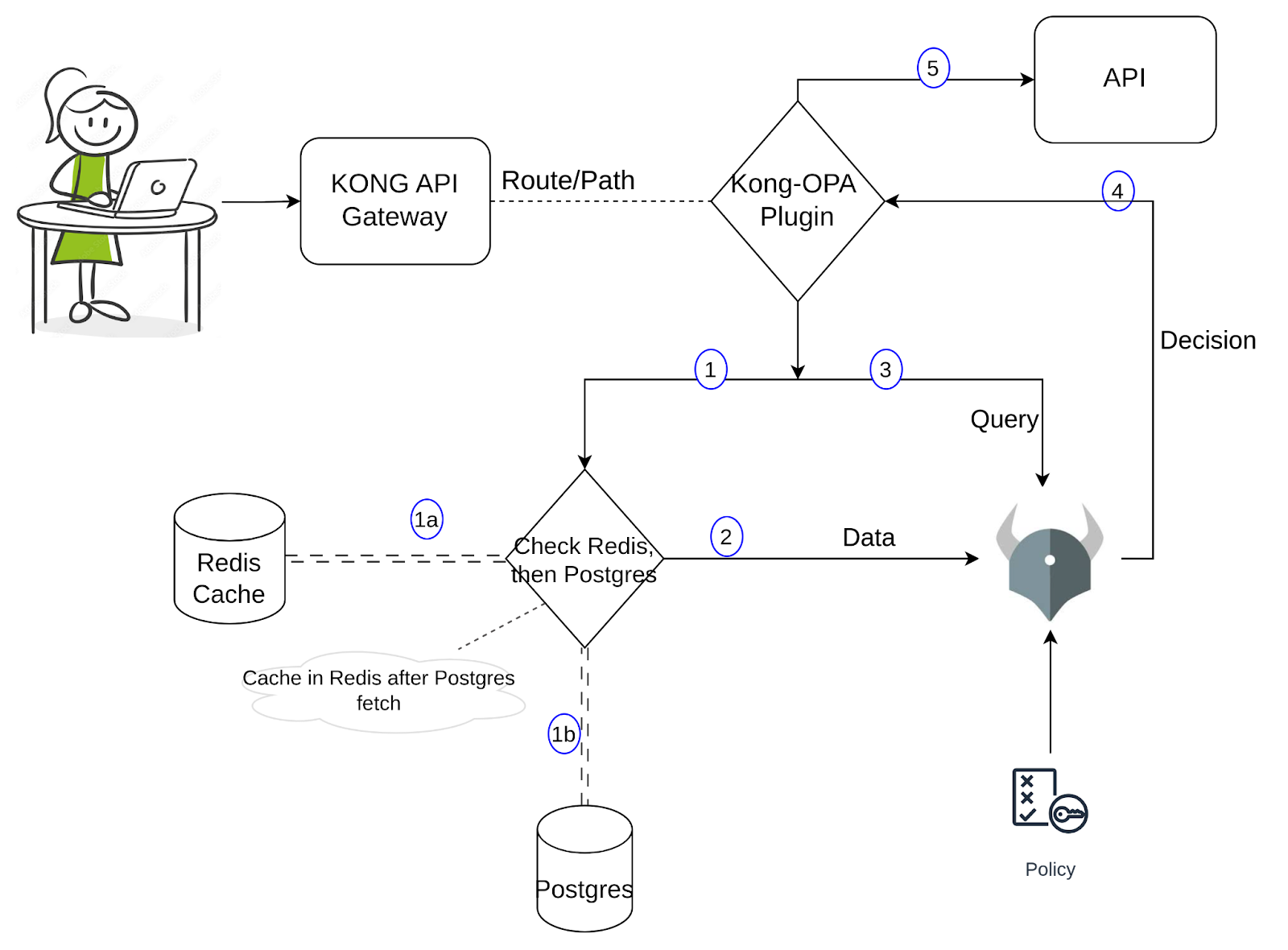

For the demo, I've set up sample data in PostgreSQL containing user information such as name, email, and role. When a user tries to access a service via a specific URL, OPA evaluates whether the request is permitted. The Rego policy checks the request URL (resource), the method, and the user's role, then returns either true or false based on the rules. If true, the request is allowed to pass through; if false, access is denied. So far, this is a simple setup. Let's dive into the custom plugin. For a clearer understanding of its implementation, please refer to the diagram below.
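The decision described above is a plain match on path, method, and role, with deny as the default. The Python sketch below mirrors that logic outside OPA so it can be read and tested in isolation. It is not the demo's Rego policy: the `Rule` structure is an assumption modeled on the sample `opa_policy` data shown later in this article, and `Viewer` is a hypothetical role.

```python
from dataclasses import dataclass
from typing import Iterable, List


@dataclass(frozen=True)
class Rule:
    path: str
    method: str
    allowed_roles: List[str]


def is_allowed(path: str, method: str, roles: Iterable[str], rules: List[Rule]) -> bool:
    """Allow only if a rule matches path and method and shares a role with the user."""
    user_roles = set(roles)
    for rule in rules:
        if rule.path == path and rule.method == method.upper():
            if user_roles.intersection(rule.allowed_roles):
                return True
    return False  # default deny: no rule matched


RULES = [Rule(path="/demo", method="GET", allowed_roles=["Moderator"])]

if __name__ == "__main__":
    print(is_allowed("/demo", "GET", ["Moderator"], RULES))   # True
    print(is_allowed("/demo", "GET", ["Viewer"], RULES))      # False
    print(is_allowed("/demo", "POST", ["Moderator"], RULES))  # False
```

Adding access for another endpoint means appending another `Rule`, which is the same idea as adding entries to the policy data rather than changing the evaluation logic.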

When a request comes through the Kong proxy, the custom Kong plugin is triggered. The plugin fetches the required data and passes it to OPA along with the input/query. This data fetch has two parts: first, the plugin looks up Redis for the required values and, if they are found, passes them along to OPA; otherwise, it queries Postgres, fetches the data, and caches it in Redis before passing it along to OPA. We will revisit this when we run the commands in the next section and observe the logs. OPA decides (based on the policy, input, and data), and if the request is allowed, Kong proceeds to send it to the API. With this approach, the number of queries to Postgres is significantly reduced, yet the data available to OPA stays fairly accurate while latency stays low.
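The lookup order above is the cache-aside pattern. Below is a minimal, self-contained Python sketch of the same control flow, assuming in-memory stand-ins: a dictionary plays Redis, a small class plays the PostgreSQL users table, and a plain function plays the OPA decision. The names (`FakeRedis`, `FakePostgres`, `handle_request`, `moderators_may_get_demo`) are hypothetical and only illustrate the order of operations. The actual plugin is written in Lua.

```python
import json
from typing import Callable, Dict, List, Optional


class FakePostgres:
    """Stand-in for the users table; counts how often it is queried."""

    def __init__(self, users: Dict[str, List[str]]) -> None:
        self._users = users
        self.queries = 0

    def fetch_roles(self, email: str) -> List[str]:
        self.queries += 1  # every call models one round trip to PostgreSQL
        return self._users.get(email, [])


class FakeRedis:
    """Dictionary-backed stand-in for Redis GET/SET on string values."""

    def __init__(self) -> None:
        self._store: Dict[str, str] = {}

    def get(self, key: str) -> Optional[str]:
        return self._store.get(key)

    def set(self, key: str, value: str) -> None:
        self._store[key] = value


def handle_request(email: str, path: str, method: str, cache: FakeRedis,
                   db: FakePostgres, decide: Callable[[dict], bool]) -> bool:
    """Cache-aside lookup of the user's roles, then an allow/deny decision."""
    cached = cache.get(email)
    if cached is not None:
        user = json.loads(cached)  # cache hit: PostgreSQL is not touched
    else:
        user = {"email": email, "roles": db.fetch_roles(email)}
        cache.set(email, json.dumps(user))  # populate the cache for the next request
    opa_input = {"path": path, "method": method, "roles": user["roles"]}
    return bool(decide(opa_input))  # True: proxy upstream; False: reject


def moderators_may_get_demo(opa_input: dict) -> bool:
    """Stand-in for the OPA decision used in this demo."""
    return (opa_input["path"] == "/demo" and opa_input["method"] == "GET"
            and "Moderator" in opa_input["roles"])


if __name__ == "__main__":
    db = FakePostgres({"alice@example.com": ["Moderator"]})
    cache = FakeRedis()
    for _ in range(2):  # two consecutive requests, as in the demo logs
        print(handle_request("alice@example.com", "/demo", "GET", cache, db,
                             moderators_may_get_demo))
    print("PostgreSQL queries:", db.queries)
```

Running it prints `True` twice and a PostgreSQL query count of 1: the second request is served from the cache. Note that an unknown user is cached with an empty role list as well, which spares the database repeated lookups for the same address; whether that is desirable is a design choice.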

To start building a custom plugin, we need a handler.lua, where the core logic of the plugin is implemented, and a schema.lua, which, as the name indicates, defines the schema for the plugin's configuration. If you are just starting to learn how to write custom plugins for Kong, please refer to this link for more info. The documentation also explains how to package and install the plugin. Let's proceed and understand the logic of this plugin.
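To illustrate the split between the two files, here is a Python analogue of what a configuration schema does: declare the fields, supply defaults, and reject invalid or unknown values once, before any request-handling code runs. The field names (`opa_url`, `redis_host`, `redis_port`, `cache_ttl_seconds`) and their defaults, including the Docker Compose service names `opa` and `redis`, are hypothetical. The real schema.lua is written in Lua against Kong's own schema library.

```python
from dataclasses import dataclass, fields


@dataclass(frozen=True)
class AuthOpaConfig:
    """Hypothetical plugin configuration; field names and defaults are illustrative."""
    opa_url: str = "http://opa:8181/v1/data/authopa/allow"
    redis_host: str = "redis"
    redis_port: int = 6379
    cache_ttl_seconds: int = 300

    def __post_init__(self) -> None:
        # Validate once at configuration time, not on every request.
        if not self.opa_url.startswith(("http://", "https://")):
            raise ValueError("opa_url must be an http(s) URL")
        if not 0 < self.redis_port < 65536:
            raise ValueError("redis_port must be between 1 and 65535")
        if self.cache_ttl_seconds <= 0:
            raise ValueError("cache_ttl_seconds must be positive")

    @classmethod
    def from_dict(cls, raw: dict) -> "AuthOpaConfig":
        """Reject fields the schema does not declare, then apply defaults."""
        known = {f.name for f in fields(cls)}
        unknown = set(raw) - known
        if unknown:
            raise ValueError(f"unknown field(s): {sorted(unknown)}")
        return cls(**raw)


if __name__ == "__main__":
    print(AuthOpaConfig.from_dict({"cache_ttl_seconds": 60}))
```

Keeping validation in the schema means the handler can trust its configuration and stay focused on the per-request cache and policy logic.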

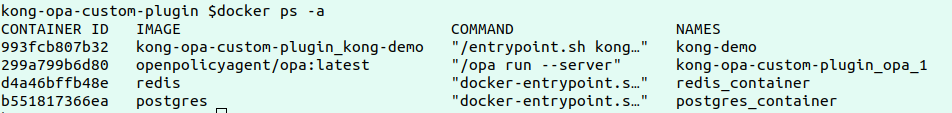

The first step of the demo is to install OPA, Kong, Postgres, and Redis on your local machine or in any cloud environment. Please clone this repository.

Review the docker-compose YAML, which defines the configuration for deploying all four services above. Note the Kong environment variables to see how the custom plugin is loaded.

Run the commands below to deploy the services:

```shell
docker-compose build
docker-compose up
```

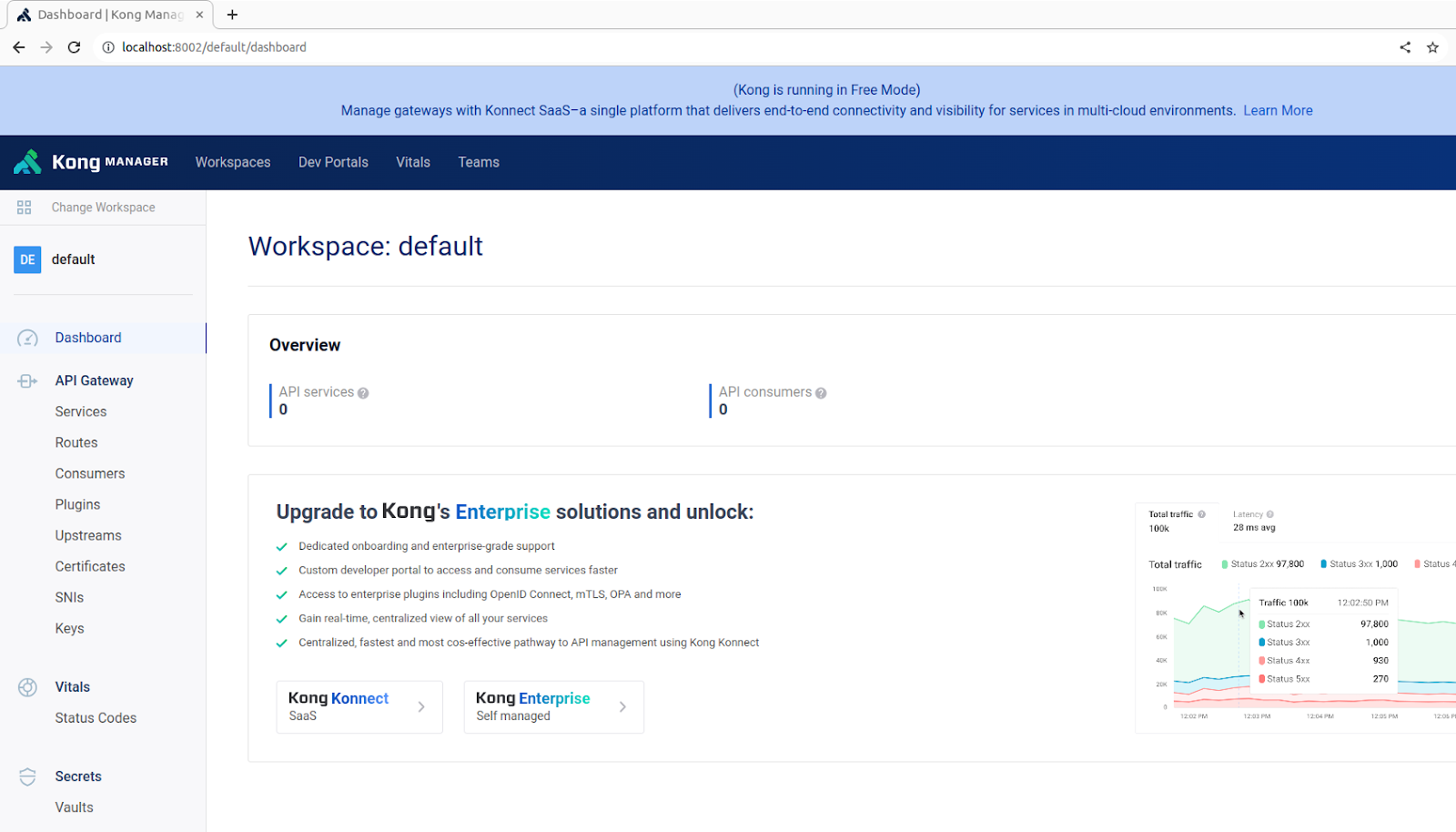

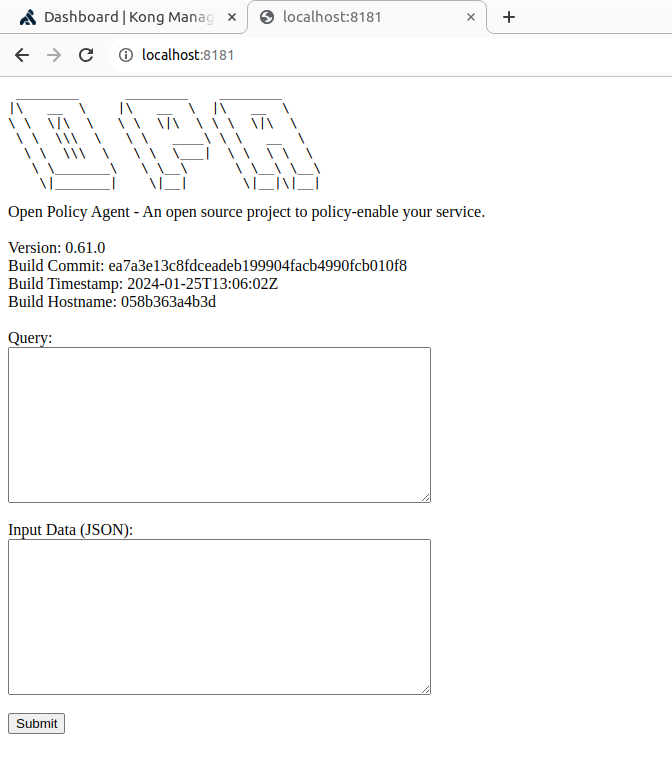

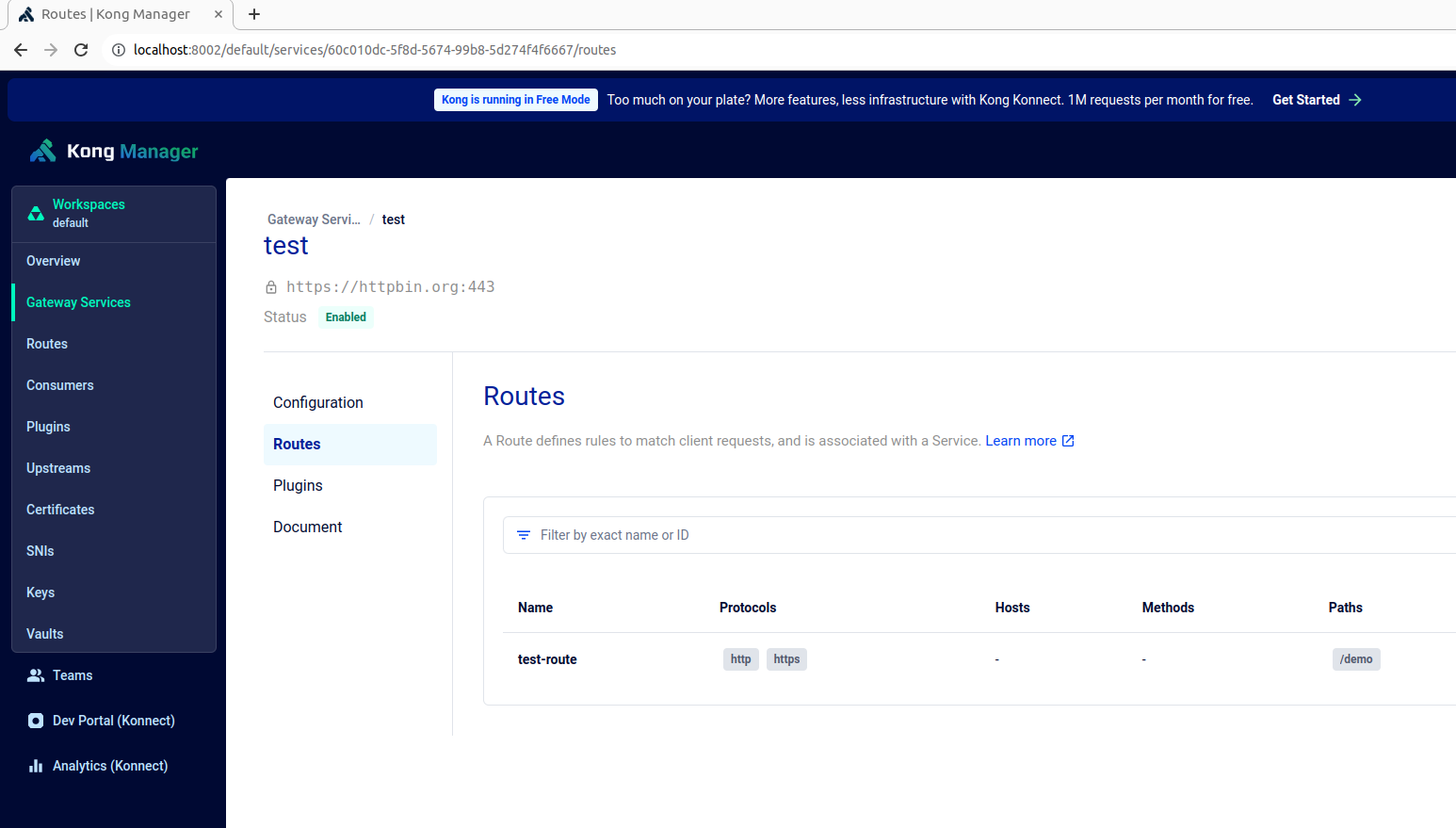

Once we verify that the containers are up and running, Kong Manager and OPA are available at their respective endpoints, http://localhost:8002 and http://localhost:8181, as shown below:

Create a test service and route, and add our custom Kong plugin to this route, using the command below:

```shell
curl -X POST http://localhost:8001/config -F config=@config.yaml
```
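For reference, the sketch below builds a declarative configuration of the kind this endpoint loads, assuming Kong 3.x (`_format_version` "3.0") running in DB-less mode, where `POST /config` replaces the gateway's configuration. It emits JSON, which Kong's declarative format accepts alongside YAML. The service name, route name, and upstream URL are hypothetical; only the plugin name `authopa` is taken from the log prefix shown later, and the repository's config.yaml remains the authoritative version. If the plugin's schema has required fields without defaults, the plugin entry would also need a `config` object.

```python
import json


def build_declarative_config(upstream_url: str, route_path: str, plugin_name: str) -> dict:
    """One service, one route, and a plugin scoped to that route."""
    return {
        "_format_version": "3.0",
        "services": [
            {
                "name": "demo-service",  # hypothetical name
                "url": upstream_url,
                "routes": [
                    {
                        "name": "demo-route",  # hypothetical name
                        "paths": [route_path],
                        # Nesting the plugin under the route applies it to this route only.
                        "plugins": [{"name": plugin_name}],
                    }
                ],
            }
        ],
    }


if __name__ == "__main__":
    config = build_declarative_config("http://upstream.example:8080", "/demo", "authopa")
    print(json.dumps(config, indent=2))
```

Saved to a file, the output could be loaded with the same curl command, pointing `-F config=@` at that file instead of config.yaml.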

The OPA policy, defined in the authopa.rego file, is published and updated on the OPA service using the command below:

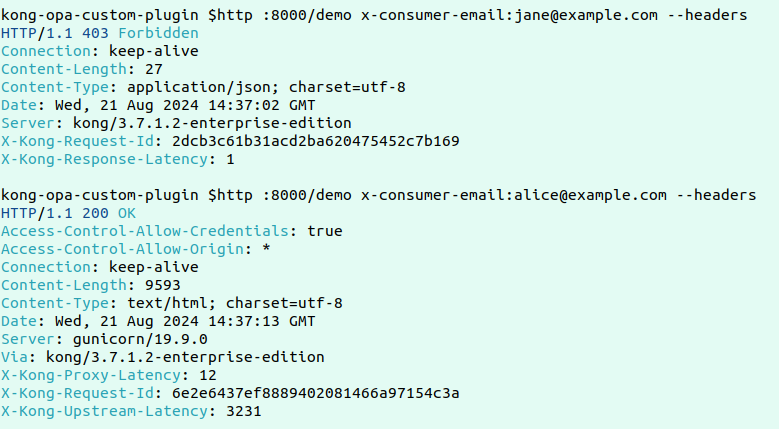

```shell
curl -X PUT http://localhost:8181/v1/policies/mypolicyId -H "Content-Type: application/json" --data-binary @authopa.rego
```

This sample policy grants access to a user request only if the user is accessing the /demo path with the GET method and has the role "Moderator". More rules can be added as needed to tailor access control to different criteria.

opa_policy = [

{

"path": "/demo",

"method": "GET",

"allowed_roles": ["Moderator"]

}

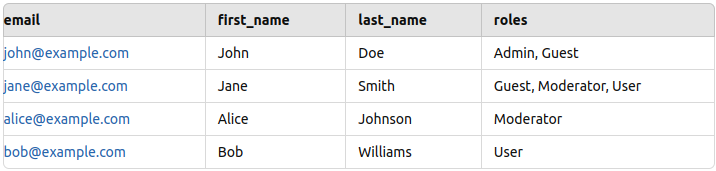

]Now the setup is prepared, however earlier than testing, we want some check knowledge so as to add in Postgres. I added some pattern knowledge (identify, electronic mail, and position) for just a few staff as proven beneath (please consult with the PostgresReadme).
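If you want to see what the role lookup amounts to without running PostgreSQL, here is a sketch using Python's built-in sqlite3 module as a stand-in. The table and column names (`employees`, `name`, `email`, `role`) and the sample rows are hypothetical; the real schema and data are in the repository's PostgresReadme. The parameterized query is the important part: the email comes from the request, so it should never be concatenated into the SQL string. PostgreSQL drivers use a different placeholder syntax than sqlite3's `?`, but the principle is the same.

```python
import sqlite3
from typing import List


def create_demo_db() -> sqlite3.Connection:
    """In-memory stand-in for the PostgreSQL users data."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE employees (name TEXT NOT NULL, email TEXT NOT NULL, role TEXT NOT NULL)"
    )
    conn.executemany(
        "INSERT INTO employees (name, email, role) VALUES (?, ?, ?)",
        [
            ("Alice", "alice@example.com", "Moderator"),  # hypothetical rows
            ("Bob", "bob@example.com", "Viewer"),
        ],
    )
    conn.commit()
    return conn


def fetch_roles(conn: sqlite3.Connection, email: str) -> List[str]:
    # Placeholder binding keeps request-supplied input out of the SQL text.
    rows = conn.execute("SELECT role FROM employees WHERE email = ? ORDER BY role", (email,))
    return [role for (role,) in rows]


if __name__ == "__main__":
    conn = create_demo_db()
    print(fetch_roles(conn, "alice@example.com"))   # ['Moderator']
    print(fetch_roles(conn, "nobody@example.com"))  # []
```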

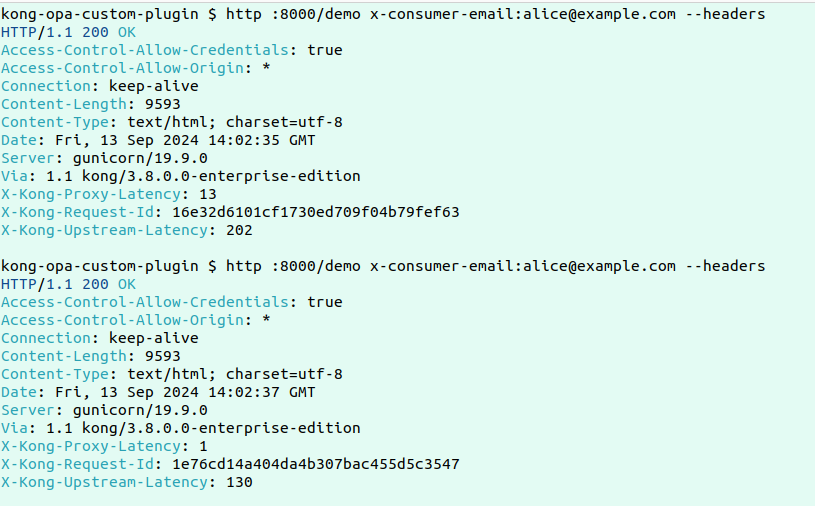

Here are a sample failed request and a sample successful request:

Now, to test the core functionality of this custom plugin, let's make two consecutive requests and check the logs to see how the data retrieval happens.

Here are the logs:


```text
2024/09/13 14:05:05 [error] 2535#0: *10309 [kong] redis.lua:19 [authopa] No data found in Redis for key: alice@example.com, client: 192.168.96.1, server: kong, request: "GET /demo HTTP/1.1", host: "localhost:8000", request_id: "ebbb8b5b57ff4601ff194907e35a3002"
2024/09/13 14:05:05 [info] 2535#0: *10309 [kong] handler.lua:25 [authopa] Fetching roles from PostgreSQL for email: alice@example.com, client: 192.168.96.1, server: kong, request: "GET /demo HTTP/1.1", host: "localhost:8000", request_id: "ebbb8b5b57ff4601ff194907e35a3002"
2024/09/13 14:05:05 [info] 2535#0: *10309 [kong] postgres.lua:43 [authopa] Fetched roles: Moderator, client: 192.168.96.1, server: kong, request: "GET /demo HTTP/1.1", host: "localhost:8000", request_id: "ebbb8b5b57ff4601ff194907e35a3002"
2024/09/13 14:05:05 [info] 2535#0: *10309 [kong] handler.lua:29 [authopa] Caching user roles in Redis, client: 192.168.96.1, server: kong, request: "GET /demo HTTP/1.1", host: "localhost:8000", request_id: "ebbb8b5b57ff4601ff194907e35a3002"
2024/09/13 14:05:05 [info] 2535#0: *10309 [kong] redis.lua:46 [authopa] Data successfully cached in Redis, client: 192.168.96.1, server: kong, request: "GET /demo HTTP/1.1", host: "localhost:8000", request_id: "ebbb8b5b57ff4601ff194907e35a3002"
2024/09/13 14:05:05 [info] 2535#0: *10309 [kong] opa.lua:37 [authopa] Is Allowed by OPA: true, client: 192.168.96.1, server: kong, request: "GET /demo HTTP/1.1", host: "localhost:8000", request_id: "ebbb8b5b57ff4601ff194907e35a3002"
2024/09/13 14:05:05 [info] 2535#0: *10309 client 192.168.96.1 closed keepalive connection
------------------------------------------------------------------------------------------------------------------------
2024/09/13 14:05:07 [info] 2535#0: *10320 [kong] redis.lua:23 [authopa] Redis result: {"roles":["Moderator"],"email":"alice@example.com"}, client: 192.168.96.1, server: kong, request: "GET /demo HTTP/1.1", host: "localhost:8000", request_id: "75bf7a4dbe686d0f95e14621b89aba12"
2024/09/13 14:05:07 [info] 2535#0: *10320 [kong] opa.lua:37 [authopa] Is Allowed by OPA: true, client: 192.168.96.1, server: kong, request: "GET /demo HTTP/1.1", host: "localhost:8000", request_id: "75bf7a4dbe686d0f95e14621b89aba12"
```

The logs show that for the first request, when there is no data in Redis, the data is fetched from Postgres and cached in Redis before being forwarded to OPA for evaluation. On the next request, the data is already in Redis, so the plugin skips the Postgres query and the request completes faster.
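To check the hit/miss behavior across many requests rather than by eye, a small script can group the plugin's log lines by `request_id` and classify each request. This sketch assumes the log format shown above and keys off two messages that appear in it, "Fetching roles from PostgreSQL" and "Is Allowed by OPA:". If the plugin's messages change, the patterns must change too. Treating every request without a Postgres fetch as a cache hit is a simplification.

```python
import re
from typing import Dict, Iterable

REQUEST_ID = re.compile(r'request_id: "([0-9a-f]+)"')
DECISION = re.compile(r"Is Allowed by OPA: (true|false)")


def summarize(lines: Iterable[str]) -> Dict[str, dict]:
    """Group [authopa] log lines by request_id and mark each request as a cache hit or miss."""
    summary: Dict[str, dict] = {}  # dicts keep insertion order, so requests stay in log order
    for line in lines:
        if "[authopa]" not in line:
            continue  # skip unrelated nginx/Kong lines
        match = REQUEST_ID.search(line)
        if not match:
            continue
        entry = summary.setdefault(match.group(1), {"cache": "hit", "allowed": None})
        if "Fetching roles from PostgreSQL" in line:
            entry["cache"] = "miss"  # the plugin fell back to the database
        decision = DECISION.search(line)
        if decision:
            entry["allowed"] = decision.group(1) == "true"
    return summary


if __name__ == "__main__":
    import sys

    labels = {True: "allowed", False: "denied", None: "no decision logged"}
    if len(sys.argv) > 1:  # path to a saved Kong log file
        with open(sys.argv[1], encoding="utf-8") as fh:
            for request_id, info in summarize(fh).items():
                print(request_id, info["cache"], labels[info["allowed"]])
```

Applied to the two requests above, it reports the first `request_id` as a miss and the second as a hit, both allowed.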

Conclusion

In conclusion, by combining Kong Gateway with OPA and implementing a custom plugin with Redis caching, we effectively balance accuracy and speed for access control in high-throughput environments. The plugin minimizes the number of costly Postgres queries by caching user roles in Redis after the initial query. On subsequent requests, the data is retrieved from Redis, significantly reducing latency while keeping the user information OPA evaluates against fairly accurate. This approach handles fine-grained access control efficiently at the gateway level without sacrificing performance or security, making it a strong fit for scaling microservices while enforcing precise access policies.