High-performance computing systems typically use all-flash architectures and kernel-mode parallel file systems to meet performance demands. However, the growing size of both data volumes and distributed system clusters raises significant cost challenges for all-flash storage and substantial operational challenges for kernel clients.

JuiceFS is a cloud-native distributed file system that runs entirely in user space. It significantly improves I/O throughput through distributed caching and uses cost-effective object storage for data storage. It is therefore well suited to serving large-scale AI workloads.

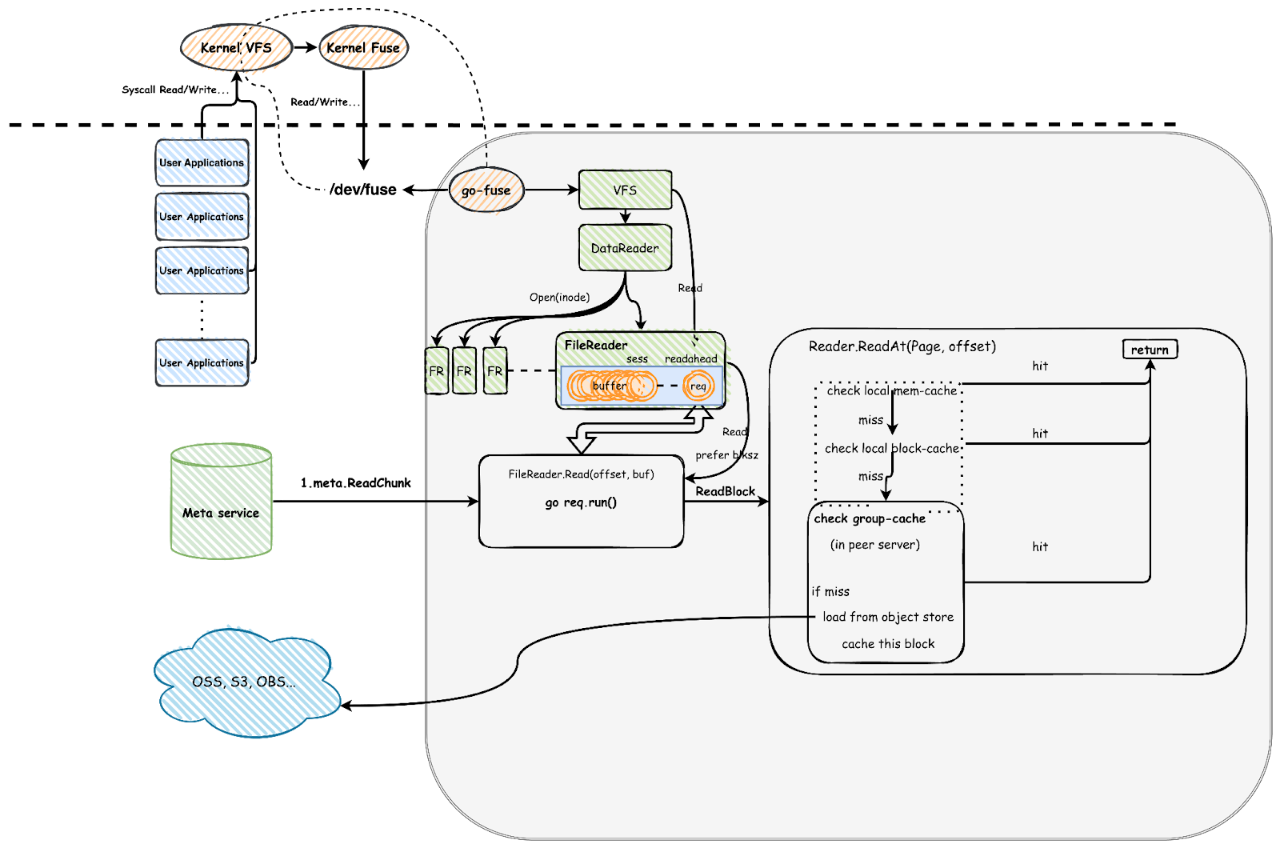

In JuiceFS, reading data begins with a client-side read request, which is sent to the JuiceFS client via FUSE. The request then passes through a readahead buffer layer, enters the cache layer, and ultimately reaches object storage. To improve read efficiency, the architecture uses several techniques, including data readahead, prefetch, and cache.

In this article, we'll analyze how these techniques work in detail and share our test results in specific scenarios. We hope it provides insights for improving your system performance.

JuiceFS Architecture Introduction

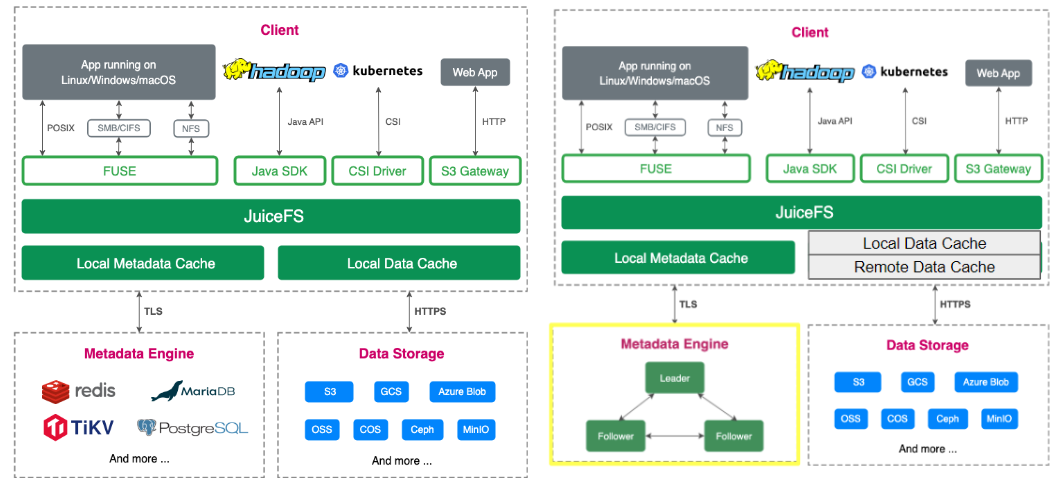

The architecture of JuiceFS Community Edition consists of three main components: the client, data storage, and metadata. Data access is supported through various interfaces, including POSIX, HDFS API, S3 API, and Kubernetes CSI, catering to different application scenarios. For data storage, JuiceFS supports dozens of object storage solutions, including public cloud services and self-hosted solutions such as Ceph and MinIO. The metadata engine works with major databases such as Redis, TiKV, and PostgreSQL.

Architecture: JuiceFS Community Edition (left) vs. Enterprise Edition (right)

The main differences between the Community Edition and the Enterprise Edition lie in how they handle the metadata engine and data caching, as shown in the figure above. Specifically, the Enterprise Edition includes a proprietary distributed metadata engine and supports distributed cache, whereas the Community Edition only supports local cache.

Principles of Reads in Linux

There are several ways to read data in Linux:

- Buffered I/O: This is the standard way to read data. Data passes through the kernel buffer (the page cache), and the kernel performs readahead to make reads more efficient.

- Direct I/O: This technique performs file I/O while bypassing the kernel buffer, which reduces memory usage and data copying. It is well suited to large data transfers.

- Asynchronous I/O: Often used together with direct I/O, this technique lets a program issue multiple I/O requests from a single thread without waiting for each request to complete, which improves I/O concurrency.

- Memory map: This technique maps a file into the process's address space so that file content can be accessed directly through pointers. Applications access the mapped file region as if it were ordinary memory, and the kernel automatically handles the underlying reads and writes.
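The sketch below contrasts two of these modes in Python: an ordinary buffered read and a memory-mapped read of the same byte range. It is a minimal illustration, not how JuiceFS itself reads data. Direct I/O and asynchronous I/O are left out on purpose: O_DIRECT requires aligned buffers and is not supported by every file system, and Python's standard library has no libaio binding.

```python
import mmap
import os
import tempfile


def read_buffered(path: str, offset: int, length: int) -> bytes:
    # Buffered I/O: read() goes through the kernel page cache, where the
    # kernel may also read ahead on our behalf.
    with open(path, "rb") as f:
        f.seek(offset)
        return f.read(length)


def read_mmap(path: str, offset: int, length: int) -> bytes:
    # Memory map: the file is mapped into the process address space and
    # sliced like a byte array; the kernel faults pages in on first access.
    with open(path, "rb") as f:
        with mmap.mmap(f.fileno(), 0, access=mmap.ACCESS_READ) as m:
            return m[offset:offset + length]


if __name__ == "__main__":
    with tempfile.NamedTemporaryFile(delete=False) as tmp:
        tmp.write(os.urandom(1 << 20))  # 1 MiB of sample data
    try:
        same = read_buffered(tmp.name, 4096, 8192) == read_mmap(tmp.name, 4096, 8192)
        print(same)  # True: both modes return the same bytes
    finally:
        os.remove(tmp.name)
```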

Different read patterns pose specific challenges to storage systems:

- Random reads: These include both large and small random I/O reads and mainly test the storage system's latency and IOPS.

- Sequential reads: These mainly test the storage system's bandwidth.

- Reading many small files: This tests the performance of the storage system's metadata engine and the overall system's IOPS.

JuiceFS Read Process Analysis

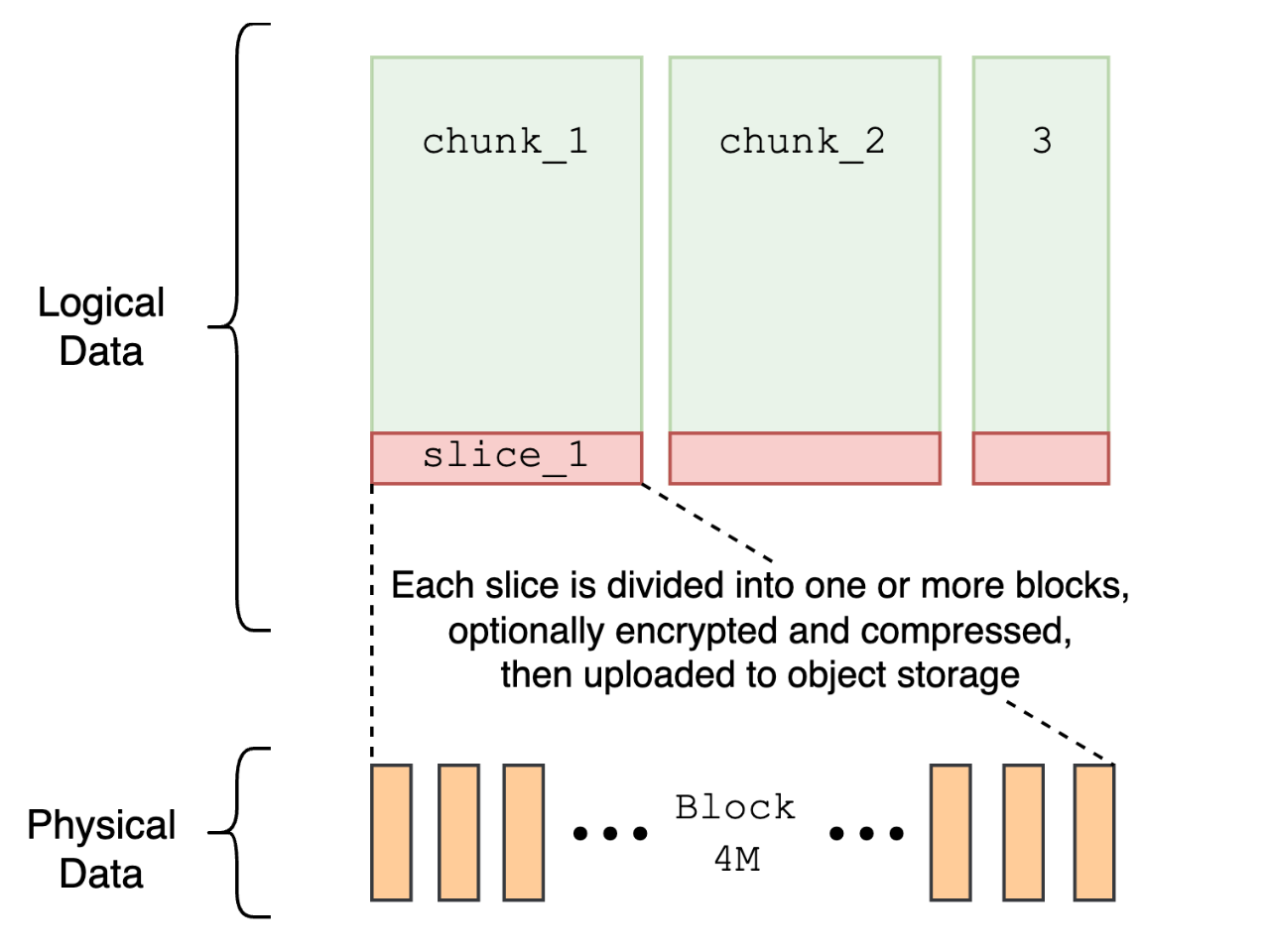

We use a file chunking strategy. A file is logically divided into multiple chunks, each with a fixed size of 64 MB. Each chunk is further subdivided into 4 MB blocks, which are the actual storage units in object storage. Many performance optimizations in JuiceFS are closely tied to this chunking strategy. Learn more about the JuiceFS storage workflow.
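To make the chunk and block layout concrete, the sketch below maps a file offset to a chunk index, a block index within that chunk, and an offset within the block, treating the 64 MB and 4 MB sizes above as 64 MiB and 4 MiB. It assumes blocks start at fixed 4 MiB boundaries inside each chunk, which is a simplification of how data is actually laid out; the helper names are hypothetical and not taken from the JuiceFS codebase.

```python
CHUNK_SIZE = 64 << 20  # 64 MiB logical chunk
BLOCK_SIZE = 4 << 20   # 4 MiB block, the unit stored in object storage
BLOCKS_PER_CHUNK = CHUNK_SIZE // BLOCK_SIZE  # 16


def locate(offset: int) -> tuple[int, int, int]:
    """Map a file offset to (chunk index, block index in chunk, offset in block)."""
    chunk_index, chunk_offset = divmod(offset, CHUNK_SIZE)
    block_index, block_offset = divmod(chunk_offset, BLOCK_SIZE)
    return chunk_index, block_index, block_offset


def blocks_touched(offset: int, length: int) -> list[tuple[int, int]]:
    """List the (chunk index, block index in chunk) pairs that a read touches."""
    if length <= 0:
        return []
    first = offset // BLOCK_SIZE
    last = (offset + length - 1) // BLOCK_SIZE
    return [divmod(b, BLOCKS_PER_CHUNK) for b in range(first, last + 1)]


# A 4 MiB read starting at 62 MiB crosses from chunk 0 into chunk 1.
print(blocks_touched(62 << 20, 4 << 20))  # [(0, 15), (1, 0)]
print(locate(70 << 20))                   # (1, 1, 2097152)
```

A read that is not aligned to block boundaries touches two blocks instead of one, which is one reason the block size matters for the readahead and prefetch behavior discussed below.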

To optimize read performance, we use several techniques, such as readahead, prefetch, and cache.

JuiceFS data storage

Readahead

Readahead is a technique that anticipates future read requests and preloads data from object storage into memory. It reduces access latency and improves actual I/O concurrency. The figure below shows the read process in simplified form. The area below the dashed line represents the application layer, while the area above it represents the kernel layer.

JuiceFS data read workflow

When a user process (the application layer marked in blue in the lower-left corner) issues a system call to read or write a file, the request first passes through the kernel's virtual file system (VFS) and then to the kernel's FUSE module, which communicates with the JuiceFS client process via the /dev/fuse device.

The process illustrated in the lower-right corner shows the subsequent readahead optimization inside JuiceFS. The system introduces sessions to track a series of sequential reads. Each session records the last read offset, the length of the sequential reads, and the current readahead window size. This information is used to determine whether a new read request hits the session and to automatically resize or move the readahead window. By maintaining multiple sessions, JuiceFS can efficiently support high-performance concurrent sequential reads.
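The session logic described above can be sketched as follows. This is a simplified illustration under stated assumptions, not JuiceFS's actual implementation: a read continues a session only if it starts exactly where the previous read ended, the window doubles on each sequential hit up to a cap, and the oldest session is dropped when too many are tracked. The names ReadaheadTracker, max_window, and max_sessions, and their default values, are hypothetical.

```python
from dataclasses import dataclass, field

BLOCK_SIZE = 4 << 20  # 4 MiB


@dataclass
class Session:
    next_offset: int  # offset where the next sequential read is expected
    seq_len: int      # bytes read sequentially in this session so far
    window: int       # current readahead window in bytes (0 = none yet)


@dataclass
class ReadaheadTracker:
    max_window: int = 32 << 20  # hypothetical cap on the readahead window
    max_sessions: int = 8       # hypothetical number of sessions tracked at once
    sessions: list[Session] = field(default_factory=list)

    def on_read(self, offset: int, size: int) -> tuple[int, int]:
        """Record a read request and return the [start, end) range to read ahead."""
        for s in self.sessions:
            if offset == s.next_offset:
                # The request continues this session: grow the window.
                s.seq_len += size
                s.window = min(max(2 * s.window, BLOCK_SIZE), self.max_window)
                s.next_offset = offset + size
                return s.next_offset, s.next_offset + s.window
        # No session matched: open a new one, without reading ahead yet.
        s = Session(next_offset=offset + size, seq_len=size, window=0)
        self.sessions.append(s)
        if len(self.sessions) > self.max_sessions:
            self.sessions.pop(0)  # forget the oldest session
        return s.next_offset, s.next_offset


tracker = ReadaheadTracker()
for i in range(4):
    # Four sequential 1 MiB reads: the window goes 0, 4, 8, 16 MiB.
    start, end = tracker.on_read(i << 20, 1 << 20)
    print(i, (end - start) >> 20, "MiB of readahead")
```

A real client would also skip data that is already buffered and tolerate small gaps or overlaps between requests; the sketch omits both.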

To improve sequential read performance, we designed the system to increase concurrency. Each block (4 MB) in the readahead window starts a goroutine to read its data. Note that concurrency is limited by the buffer-size parameter. With the default setting of 300 MB, the theoretical maximum concurrency for object storage is 75 (300 MB divided by 4 MB). This may not suffice for some high-performance scenarios, and users need to adjust the parameter according to their resource configuration and specific requirements. We test different parameter settings later in this article.
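The concurrency limit is simple arithmetic: the number of 4 MB block fetches that can be in flight is buffer-size divided by the block size. The sketch below computes that bound and, as an added assumption, applies Little's law (throughput is roughly requests in flight divided by latency) to estimate the bandwidth ceiling it implies for a given per-block latency. The 100 ms latency in the example is hypothetical.

```python
def max_block_fetches(buffer_size_mib: int, block_size_mib: int = 4) -> int:
    """Upper bound on concurrent block reads that fit in the readahead buffer."""
    return buffer_size_mib // block_size_mib


def bandwidth_bound_mib_s(in_flight: int, latency_ms: float, block_size_mib: int = 4) -> float:
    """Little's law: requests in flight / latency, times the size of each request."""
    return in_flight * block_size_mib * 1000 / latency_ms


print(max_block_fetches(300))   # 75, the default buffer-size
print(max_block_fetches(2048))  # 512, with buffer-size set to 2 GiB
print(bandwidth_bound_mib_s(75, latency_ms=100))  # 3000.0 MiB/s for a hypothetical 100 ms per block
```

The same formula shows why raising buffer-size helps only while object storage can keep up: if latency grows as more requests are in flight, the ceiling grows more slowly than the concurrency.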

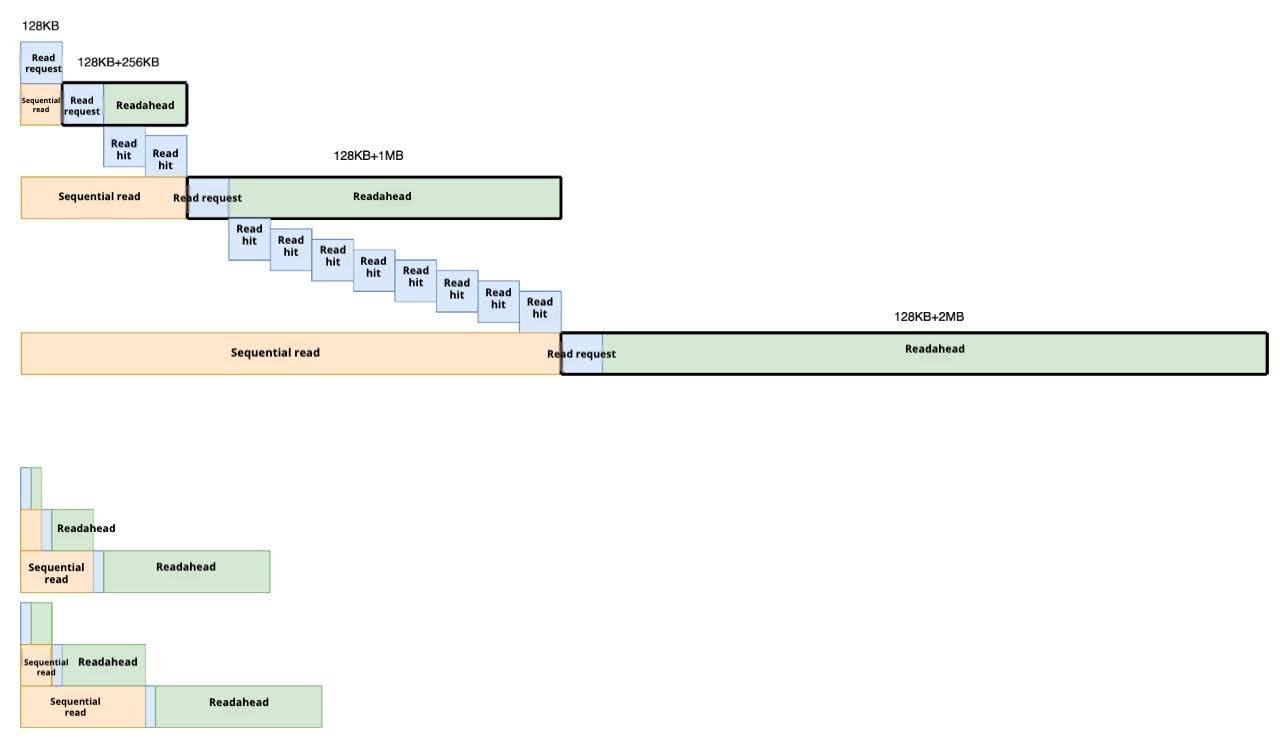

For example, as shown in the second row of the figure, when the system receives a second sequential read request, it actually issues a request that covers the readahead window and three consecutive data blocks. Thanks to readahead, the next two requests hit the readahead buffer directly and are returned immediately.

A simplified example of the JuiceFS readahead mechanism

The first and second requests do not benefit from readahead and access object storage directly, so their latency is high (usually more than 10 ms). A latency within 100 microseconds indicates that an I/O request successfully used readahead, as with the third and fourth requests, which hit data already preloaded into memory.

Prefetch

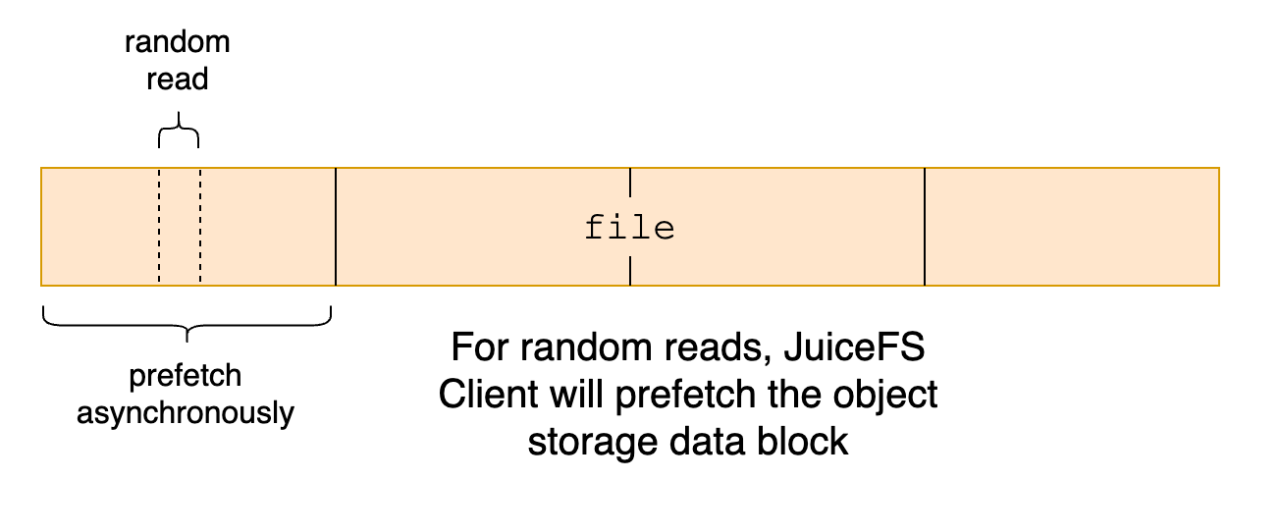

Prefetching occurs when a small segment of data is read randomly from a file. We assume that the nearby region will also be read soon. Therefore, the client asynchronously downloads the entire block containing that small data segment.

However, prefetching may be unsuitable in some scenarios. For example, if the application performs large, sparse, random reads on a large file, prefetching may fetch unnecessary data, causing read amplification. Therefore, if users already understand their application's read patterns and determine that prefetching is unnecessary, they can disable it with --prefetch=0.
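The prefetch behavior can be sketched as a reader that serves a small random read with a ranged request and, in the background, downloads the whole enclosing block so that nearby reads hit memory. This is a minimal sketch, not JuiceFS code: get_range is a hypothetical stand-in for an object-storage range read, and treating prefetch as a worker count where 0 disables it is an assumption modeled on --prefetch=0. For brevity, a read is assumed not to cross a block boundary.

```python
from concurrent.futures import Future, ThreadPoolExecutor
from typing import Callable, Dict

BLOCK_SIZE = 4 << 20  # 4 MiB


class PrefetchReader:
    def __init__(self, get_range: Callable[[int, int, int], bytes],
                 prefetch: int = 1, block_size: int = BLOCK_SIZE):
        # get_range(block_index, start, end) is a hypothetical stand-in for
        # an object-storage range GET within one block.
        self.get_range = get_range
        self.prefetch = prefetch  # 0 disables prefetch (cf. --prefetch=0)
        self.block_size = block_size
        self.blocks: Dict[int, Future] = {}  # block index -> background download
        self.pool = ThreadPoolExecutor(max_workers=max(prefetch, 1))

    def read(self, offset: int, length: int) -> bytes:
        index, start = divmod(offset, self.block_size)
        end = min(start + length, self.block_size)  # sketch: stay within one block
        pending = self.blocks.get(index)
        if pending is not None:
            # The whole block is downloaded (or in progress): serve from it.
            return pending.result()[start:end]
        if self.prefetch > 0:
            # Assume nearby data will be read soon: fetch the entire block
            # asynchronously so later reads in this block hit memory.
            self.blocks[index] = self.pool.submit(
                self.get_range, index, 0, self.block_size)
        # Serve the current request right away with a small ranged read.
        return self.get_range(index, start, end)

    def close(self) -> None:
        self.pool.shutdown(wait=True)
```

With prefetch disabled, every random read becomes its own small request; with it enabled, a second read in the same block costs no extra request, at the price of downloading data that sparse readers may never use.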

JuiceFS prefetch workflow

Cache

You can learn more about the JuiceFS cache in this document. This article focuses on the basic concepts of caching.

Page Cache

The page cache is a mechanism provided by the Linux kernel. One of its core features is readahead: it preloads data into the cache to ensure fast response times when the data is actually requested.

The page cache is particularly important in certain scenarios, such as random reads. If users strategically use the page cache to pre-fill file data, for example by reading an entire file into the cache while memory is free, subsequent random read performance can improve significantly, which in turn improves overall application performance.
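A minimal way to pre-fill the page cache, sketched below, is to read the file once from start to end, much as `cat file > /dev/null` would, optionally preceded by a posix_fadvise WILLNEED hint where the platform provides it. This is an illustrative sketch rather than a JuiceFS feature, and whether the pages stay cached depends on memory pressure: the kernel may evict them later.

```python
import os
import tempfile


def warm_page_cache(path: str, chunk_size: int = 1 << 20) -> int:
    """Read a file once, front to back, so its pages are loaded into the
    kernel page cache. Returns the number of bytes read."""
    total = 0
    with open(path, "rb", buffering=0) as f:
        if hasattr(os, "posix_fadvise"):
            try:
                # Advisory hint (Unix only) that the whole file is needed soon.
                os.posix_fadvise(f.fileno(), 0, 0, os.POSIX_FADV_WILLNEED)
            except OSError:
                pass  # some file systems reject the hint; reading still works
        buf = bytearray(chunk_size)
        while True:
            n = f.readinto(buf)  # reuse one buffer; the data itself is discarded
            if not n:
                break
            total += n
    return total
```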

Local Cache

The JuiceFS local cache can store blocks in local memory or on local disks. This lets applications hit the cache locally when they access the same data again, which avoids network latency and improves performance. High-performance SSDs are generally recommended for the local cache. The default unit of the data cache is a block, 4 MB in size. A block is written to the local cache asynchronously after it is first read from object storage.
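A minimal in-memory stand-in for such a block cache is sketched below: blocks are keyed by a block identifier, a miss loads the block through a hypothetical load_block callable (standing in for object storage), and the block is then kept for later hits. Two choices here are simplifying assumptions, not JuiceFS behavior: the block is cached synchronously instead of asynchronously, and eviction is plain LRU.

```python
from collections import OrderedDict
from typing import Callable


class LocalBlockCache:
    """In-memory LRU stand-in for a local block cache (a simplification)."""

    def __init__(self, capacity_bytes: int, load_block: Callable[[str], bytes]):
        self.capacity = capacity_bytes
        self.load_block = load_block  # hypothetical object-storage GET of one block
        self.blocks = OrderedDict()   # block key -> bytes, least recently used first
        self.used = 0
        self.hits = 0
        self.misses = 0

    def get(self, key: str) -> bytes:
        data = self.blocks.get(key)
        if data is not None:
            self.blocks.move_to_end(key)  # mark as most recently used
            self.hits += 1
            return data
        self.misses += 1
        data = self.load_block(key)  # miss: read the block from object storage
        self._put(key, data)         # JuiceFS writes this asynchronously; done inline here
        return data

    def _put(self, key: str, data: bytes) -> None:
        if len(data) > self.capacity:
            return  # larger than the whole cache: do not cache it
        self.blocks[key] = data
        self.used += len(data)
        while self.used > self.capacity:
            _, evicted = self.blocks.popitem(last=False)  # evict least recently used
            self.used -= len(evicted)
```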

For configuration details on the local cache, such as --cache-dir and --cache-size, Enterprise Edition users can refer to the Data cache document.

Distributed Cache

Unlike the local cache, the distributed cache aggregates the local caches of multiple nodes into a single cache pool, which increases the cache hit rate. However, the distributed cache adds an extra network request, so its latency is slightly higher than that of the local cache. The typical random read latency is 1-2 ms for the distributed cache and 0.2-0.5 ms for the local cache. For details of the distributed cache architecture, see Distributed cache.
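The trade-off described above, a higher hit rate in exchange for slightly higher latency per hit, can be checked with a weighted average. The sketch uses midpoints of the latency ranges quoted in this section; the 50 ms object storage latency and the hit rates in the example are hypothetical values chosen only for illustration.

```python
def expected_latency_ms(local_hit: float, distributed_hit: float,
                        local_ms: float = 0.35, distributed_ms: float = 1.5,
                        object_ms: float = 50.0) -> float:
    """Average read latency given the fraction of reads served by each tier.
    Defaults: midpoints of 0.2-0.5 ms (local) and 1-2 ms (distributed);
    the 50 ms object-storage latency is a hypothetical placeholder."""
    if local_hit < 0 or distributed_hit < 0 or local_hit + distributed_hit > 1:
        raise ValueError("hit fractions must be non-negative and sum to at most 1")
    miss = 1 - local_hit - distributed_hit
    return local_hit * local_ms + distributed_hit * distributed_ms + miss * object_ms


# Hypothetical: one node's local cache holds half of the working set, while a
# cache pool built from several nodes serves 95% of reads.
print(expected_latency_ms(local_hit=0.5, distributed_hit=0.0))
print(expected_latency_ms(local_hit=0.0, distributed_hit=0.95))
```

With these assumed numbers, the pooled cache averages about 4 ms per read versus about 25 ms for the local-only case, because each avoided object-storage access saves far more time than the extra network hop costs.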

FUSE and Object Storage Performance

All JuiceFS read requests go through FUSE, and the data must be read from object storage. Therefore, understanding the performance of FUSE and object storage is the basis for understanding the performance of JuiceFS.

FUSE Performance

We ran two sets of tests on FUSE performance. In the test scenario, once an I/O request reached the FUSE mount process, the data was filled directly into memory and returned immediately. The tests mainly measured FUSE's total bandwidth under different thread counts, the average bandwidth per thread, and CPU utilization. For hardware, test 1 used the Intel Xeon architecture and test 2 used the AMD EPYC architecture.

The table below shows the results of FUSE performance test 1, based on the Intel Xeon CPU architecture:

| Threads | Bandwidth (GiB/s) | Bandwidth per thread (GiB/s) | CPU utilization (cores) |
|---|---|---|---|
| 1 | 7.95 | 7.95 | 0.9 |
| 2 | 15.4 | 7.7 | 1.8 |
| 3 | 20.9 | 6.9 | 2.7 |
| 4 | 27.6 | 6.9 | 3.6 |
| 6 | 43 | 7.2 | 5.3 |
| 8 | 55 | 6.9 | 7.1 |
| 10 | 69.6 | 6.96 | 8.6 |
| 15 | 90 | 6 | 13.6 |
| 20 | 104 | 5.2 | 18 |
| 25 | 102 | 4.08 | 22.6 |
| 30 | 98.5 | 3.28 | 27.4 |

The table shows that:

- In the single-threaded test, bandwidth reached 7.95 GiB/s while using less than one CPU core.

- As the number of threads grew, bandwidth increased almost linearly. With 20 threads, total bandwidth reached 104 GiB/s.
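To quantify how close to linear the scaling in test 1 is, the sketch below divides each total bandwidth by n times the single-thread figure and also reports bandwidth per CPU core. It uses only the numbers from the table above; the scaling-efficiency metric is our addition for illustration.

```python
# (threads, total GiB/s, CPU cores) from FUSE test 1 (Intel Xeon) above.
TEST1 = [(1, 7.95, 0.9), (2, 15.4, 1.8), (3, 20.9, 2.7), (4, 27.6, 3.6),
         (6, 43, 5.3), (8, 55, 7.1), (10, 69.6, 8.6), (15, 90, 13.6),
         (20, 104, 18), (25, 102, 22.6), (30, 98.5, 27.4)]


def scaling_efficiency(rows):
    """Return (threads, efficiency, GiB/s per core) for each row. Efficiency is
    total bandwidth relative to perfect linear scaling of the 1-thread result."""
    base = rows[0][1]
    return [(n, bw / (n * base), bw / cores) for n, bw, cores in rows]


for n, eff, per_core in scaling_efficiency(TEST1):
    print(f"{n:>2} threads: {eff:5.0%} of linear, {per_core:.2f} GiB/s per core")
```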

Note that FUSE bandwidth can differ across hardware models and operating systems, even with the same CPU architecture. We tested several hardware types, and on one of them the maximum single-thread bandwidth was only 3.9 GiB/s.

The table below shows the results of FUSE performance test 2, based on the AMD EPYC CPU architecture:

| Threads | Bandwidth (GiB/s) | Bandwidth per thread (GiB/s) | CPU utilization (cores) |
|---|---|---|---|
| 1 | 3.5 | 3.5 | 1 |
| 2 | 6.3 | 3.15 | 1.9 |
| 3 | 9.5 | 3.16 | 2.8 |
| 4 | 9.7 | 2.43 | 3.8 |
| 6 | 14.0 | 2.33 | 5.7 |
| 8 | 17.0 | 2.13 | 7.6 |
| 10 | 18.6 | 1.9 | 9.4 |
| 15 | 21 | 1.4 | 13.7 |

In test 2, bandwidth did not scale linearly. In particular, at 10 threads the bandwidth per thread dropped below 2 GiB/s.

With multiple threads, the peak bandwidth of test 2 (EPYC architecture) was about 20 GiB/s, while test 1 (Intel Xeon architecture) performed better. The peak usually occurred once CPU resources were fully occupied; at that point, both the application process and the FUSE process had reached the CPU limit.

In real applications, because of the overhead in each stage, actual I/O performance is often lower than the 3.5 GiB/s single-thread peak measured above. For example, when loading model files in pickle format, single-thread bandwidth usually reaches only 1.5 to 1.8 GiB/s. This is mainly because reading a pickle file requires deserialization, which is bottlenecked by single-core CPU processing. Even when reading directly from memory without going through FUSE, bandwidth reaches at most 2.8 GiB/s.

Object Storage Performance

We used the juicefs objbench tool to test object storage performance under different loads: single concurrency, 10 concurrency, 200 concurrency, and 800 concurrency. Note that the performance gap between different object stores can be large.

| Load | Upload (MiB/s) | Download (MiB/s) | Average upload time (ms/object) | Average download time (ms/object) |
|---|---|---|---|---|
| Single concurrency | 32.89 | 40.46 | 121.63 | 98.85 |
| 10 concurrency | 332.75 | 364.82 | 10.02 | 10.96 |
| 200 concurrency | 5,590.26 | 3,551.65 | 0.67 | 1.13 |
| 800 concurrency | 8,270.28 | 4,038.41 | 0.48 | 0.99 |

When we increased the concurrency of GET operations on object storage to 200 and 800, we achieved very high bandwidth. This shows that bandwidth is very limited when data is read from object storage with a single concurrent request, and that increasing concurrency is crucial for overall bandwidth.
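A quick consistency check on the download columns: multiplying bandwidth by the average time per object gives about 4 MiB in every row. Our reading of this (an assumption, not something the benchmark output states) is that the time column is aggregate wall-clock time per completed object rather than per-request latency. Under that assumption, Little's law recovers the latency each request actually saw, which would mean higher concurrency raises total bandwidth even as individual requests take longer.

```python
# Download rows from the objbench table: (concurrency, MiB/s, ms/object).
DOWNLOAD = [(1, 40.46, 98.85), (10, 364.82, 10.96),
            (200, 3551.65, 1.13), (800, 4038.41, 0.99)]


def implied_object_mib(bw_mib_s: float, ms_per_object: float) -> float:
    """If ms/object is wall-clock time per completed object, then
    bandwidth x time per object recovers the object size."""
    return bw_mib_s * ms_per_object / 1000


def implied_request_latency_ms(concurrency: int, ms_per_object: float) -> float:
    """Under the same assumption, Little's law gives the time each of the
    `concurrency` in-flight requests takes: concurrency x ms/object."""
    return concurrency * ms_per_object


for c, bw, ms in DOWNLOAD:
    print(f"{c:>3} concurrent: ~{implied_object_mib(bw, ms):.2f} MiB/object, "
          f"~{implied_request_latency_ms(c, ms):.0f} ms per request")
```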

Sequential Read and Random Read Tests

To provide a clear benchmark reference, we used fio to test the performance of JuiceFS Enterprise Edition in sequential and random read scenarios.

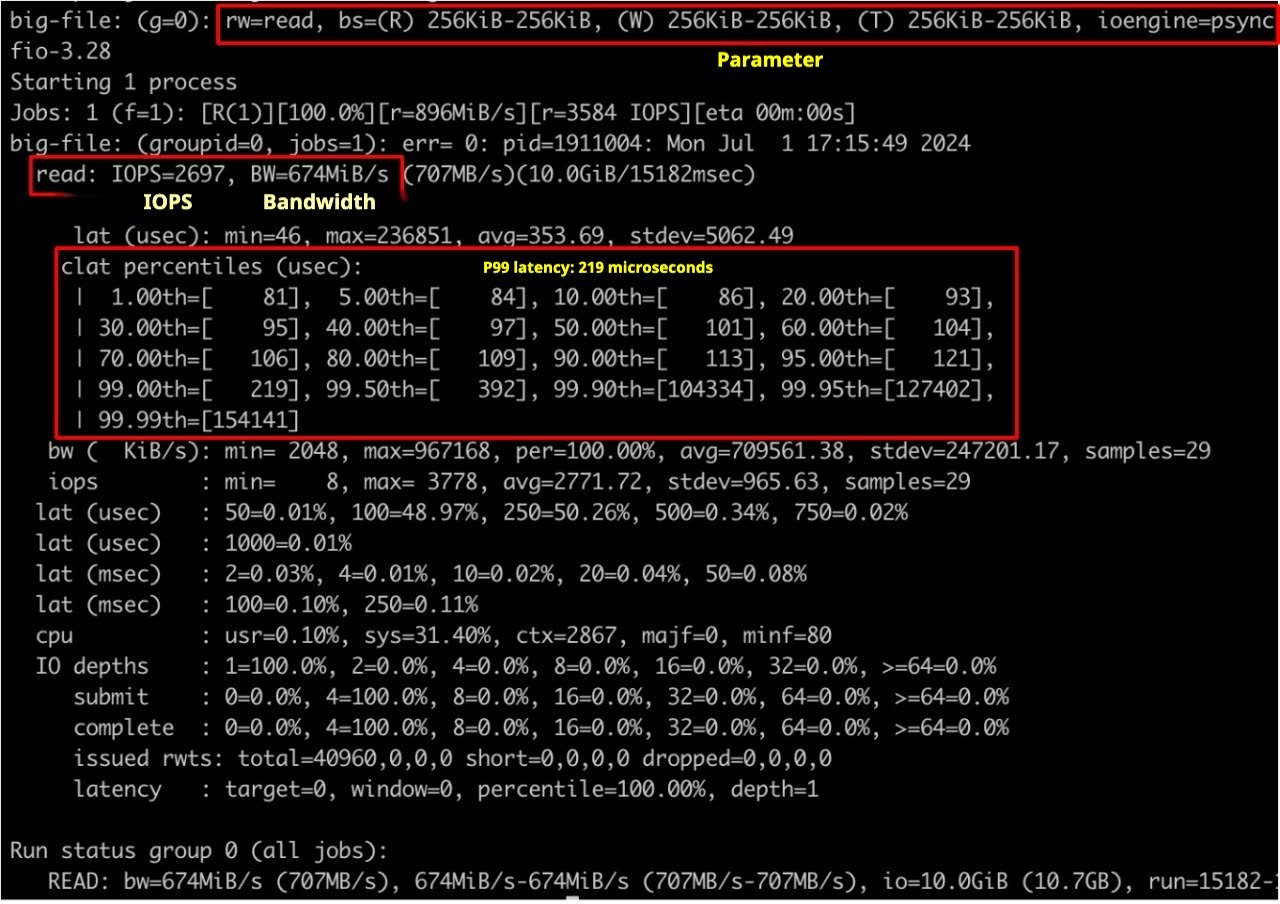

Sequential Read

As shown in the figure, 99% of requests had a latency of less than 200 microseconds. In sequential read scenarios, the readahead window performed very well, keeping latency low.

Sequential read


Default buffer-size=300 MiB; sequential read of 10 GB from object storage

By enlarging the readahead window, we increased I/O concurrency and thus bandwidth. When we raised buffer-size from the default 300 MiB to 2 GiB, read concurrency was no longer limited, and read bandwidth increased from 674 MiB/s to 1,418 MiB/s, reaching the performance peak of single-threaded FUSE. To increase bandwidth further, the application code itself must issue more concurrent I/O.

The table below shows the test results for different buffer sizes (single thread):

| buffer-size | Bandwidth |
|---|---|
| 300 MiB | 674 MiB/s |
| 2 GiB | 1,418 MiB/s |

With 4 application threads, bandwidth reached 3,456 MiB/s. With 16 threads, it reached 5,457 MiB/s, at which point the network bandwidth was already saturated.

The table below shows the bandwidth results for different thread counts (buffer-size: 2 GiB):

| Threads | Bandwidth |
|---|---|
| 1 | 1,418 MiB/s |
| 4 | 3,456 MiB/s |
| 16 | 5,457 MiB/s |
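One way to add application-level concurrency is to split the file into contiguous slices and let each thread read its slice sequentially, so that several sequential streams are in flight and the readahead sessions described earlier can, in principle, track each stream separately. The sketch below assumes a POSIX system (os.pread is Unix-only) and returns the file contents so it is easy to verify; a real benchmark would discard the data and time the reads. It is an illustration, not the fio configuration used for the tests above.

```python
import os
import tempfile
from concurrent.futures import ThreadPoolExecutor


def parallel_read(path: str, threads: int = 4, chunk: int = 4 << 20) -> bytes:
    """Read a file with `threads` workers, each streaming its own contiguous
    slice with os.pread(), so several sequential reads are in flight at once."""
    size = os.path.getsize(path)
    slice_len = -(-size // threads)  # ceiling division
    fd = os.open(path, os.O_RDONLY)

    def read_slice(i: int) -> bytes:
        pos, end = i * slice_len, min((i + 1) * slice_len, size)
        parts = []
        while pos < end:
            buf = os.pread(fd, min(chunk, end - pos), pos)  # no shared file offset
            if not buf:
                break
            parts.append(buf)
            pos += len(buf)
        return b"".join(parts)

    try:
        with ThreadPoolExecutor(max_workers=threads) as pool:
            return b"".join(pool.map(read_slice, range(threads)))  # keeps slice order
    finally:
        os.close(fd)
```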

Random Read

For small random I/O reads, performance is mainly determined by latency and IOPS. Since total IOPS can be scaled linearly by adding nodes, we first focus on latency on a single node.

- FUSE data bandwidth is the amount of data transmitted through the FUSE layer per unit of time. It is the data transfer rate that user applications can observe and use.

- Underlying data bandwidth is the bandwidth of the storage system that processes data at the physical layer or operating system level.

As shown in the table below, latency was lower when hitting the local cache or the distributed cache than when penetrating to object storage. When optimizing random read latency, improving the data cache hit rate is essential. In addition, using asynchronous I/O interfaces and increasing thread counts can significantly improve IOPS.
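The effect of queue depth can be sketched without libaio: below, a thread pool keeps up to `depth` 4 KiB random reads outstanding, standing in for fio's libaio engine with iodepth. This is a substitute technique, not what fio does internally, and os.pread limits it to Unix-like systems. With depth=1 it behaves like the synchronous tests, where IOPS is bounded by one request per round trip.

```python
import os
import random
import tempfile
import time
from concurrent.futures import ThreadPoolExecutor


def random_reads(path: str, count: int, block: int = 4096, depth: int = 64, seed: int = 0):
    """Issue `count` block-aligned random reads with up to `depth` in flight.
    Returns (list of (offset, data), achieved IOPS)."""
    nblocks = os.path.getsize(path) // block
    if nblocks == 0:
        raise ValueError("file is smaller than one block")
    rng = random.Random(seed)
    offsets = [rng.randrange(nblocks) * block for _ in range(count)]
    fd = os.open(path, os.O_RDONLY)
    try:
        start = time.perf_counter()
        # Threads stand in for libaio's iodepth: up to `depth` reads outstanding.
        with ThreadPoolExecutor(max_workers=depth) as pool:
            datas = list(pool.map(lambda off: os.pread(fd, block, off), offsets))
        elapsed = time.perf_counter() - start
    finally:
        os.close(fd)
    iops = count / elapsed if elapsed > 0 else float("inf")
    return list(zip(offsets, datas)), iops


if __name__ == "__main__":
    with tempfile.NamedTemporaryFile(delete=False) as tmp:
        tmp.write(os.urandom(16 << 20))
    try:
        for depth in (1, 64):
            _, iops = random_reads(tmp.name, 2000, depth=depth)
            print(f"depth={depth}: {iops:,.0f} IOPS")
    finally:
        os.remove(tmp.name)
```

On a local file that is already in the page cache, the difference between depths may be small. The benefit shows up when each read has real latency, as with the distributed cache or object storage.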

The table below shows the test results for JuiceFS small I/O random reads:

| Test case | Data source | Latency | IOPS | FUSE data bandwidth |
|---|---|---|---|---|
| Small I/O random read, 128 KB (synchronous read) | Hitting local cache | 0.1-0.2 ms | 5,245 | 656 MiB/s |
| | Hitting distributed cache | 0.3-0.6 ms | 1,795 | 224 MiB/s |
| | Penetrating object storage | 50-100 ms | 16 | 2.04 MiB/s |
| Small I/O random read, 4 KB (synchronous read) | Hitting local cache | 0.05-0.1 ms | 14.7k | 57.4 MiB/s |
| | Hitting distributed cache | 0.1-0.2 ms | 6,893 | 26.9 MiB/s |
| | Penetrating object storage | 30-55 ms | 25 | 102 KiB/s |
| Small I/O random read, 4 KB (libaio, iodepth=64) | Hitting local cache | – | 30.8k | 120 MiB/s |
| | Hitting distributed cache | – | 32.3k | 126 MiB/s |
| | Penetrating object storage | – | 1,530 | 6,122 KiB/s |
| Small I/O random read, 4 KB (libaio, iodepth=64), 4 concurrency | Hitting local cache | – | 116k | 450 MiB/s |
| | Hitting distributed cache | – | 90.5k | 340 MiB/s |
| | Penetrating object storage | – | 5.4k | 21.5 MiB/s |
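The columns of this table are related by simple arithmetic, which makes a useful sanity check when reading fio results: bandwidth equals IOPS times request size, and for the synchronous rows (one request outstanding at a time), average latency is roughly the reciprocal of IOPS. The helper names below are ours.

```python
def bandwidth_mib_s(iops: float, block_kib: float) -> float:
    """Throughput implied by an IOPS figure at a fixed request size."""
    return iops * block_kib / 1024


def sync_latency_ms(iops: float) -> float:
    """With one request outstanding at a time, latency is about 1 / IOPS."""
    return 1000 / iops


print(bandwidth_mib_s(5245, 128))  # 655.625, matching the 656 MiB/s row
print(sync_latency_ms(5245))       # ~0.19 ms, inside the 0.1-0.2 ms range
print(sync_latency_ms(16))         # 62.5 ms, inside the 50-100 ms range
```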

Unlike small I/O scenarios, large I/O random reads must also take read amplification into account. As shown in the table below, the underlying data bandwidth was higher than the FUSE data bandwidth because of readahead. The data actually requested from storage may be 1-3 times the amount the application requested. In this case, you can disable prefetch and adjust the maximum readahead window for tuning.

The table below shows the test results for JuiceFS large I/O random reads, with distributed cache enabled:

| Test case | FUSE data bandwidth | Underlying data bandwidth |
|---|---|---|
| 1 MB buffered I/O | 92 MiB/s | 290 MiB/s |
| 2 MB buffered I/O | 155 MiB/s | 435 MiB/s |
| 4 MB buffered I/O | 181 MiB/s | 575 MiB/s |
| 1 MB direct I/O | 306 MiB/s | 306 MiB/s |
| 2 MB direct I/O | 199 MiB/s | 340 MiB/s |
| 4 MB direct I/O | 245 MiB/s | 735 MiB/s |
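The amplification ratio is underlying bandwidth divided by FUSE bandwidth. Computed from the table above, it ranges from 1.0 (1 MB direct I/O) to about 3.2 (4 MB buffered I/O), broadly in line with the 1-3x estimate in the text; the sketch below simply repeats that arithmetic.

```python
# (test case, FUSE MiB/s, underlying MiB/s) from the large-I/O table above.
ROWS = [("1 MB buffered I/O", 92, 290), ("2 MB buffered I/O", 155, 435),
        ("4 MB buffered I/O", 181, 575), ("1 MB direct I/O", 306, 306),
        ("2 MB direct I/O", 199, 340), ("4 MB direct I/O", 245, 735)]


def amplification(fuse_mib_s: float, underlying_mib_s: float) -> float:
    """Bytes fetched from underlying storage per byte the application received."""
    return underlying_mib_s / fuse_mib_s


for name, fuse, under in ROWS:
    print(f"{name}: {amplification(fuse, under):.2f}x")
```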

Conclusion

This article presented our strategies for optimizing JuiceFS read performance, covering readahead, prefetch, and cache. By putting these techniques into practice, JuiceFS lowers latency and increases I/O throughput.

Through detailed benchmarks and analysis, we've shown how various configurations affect system performance. Whether your workload is sequential reads or random I/O, understanding and tuning these mechanisms can help you improve your system's read performance.