Multi-level caching is a technique used to improve application performance by storing frequently accessed data in multiple cache layers. The cache layers typically sit at different levels of the application stack.

It’s a common practice to use a distributed cache like NCache to implement multi-level caching in applications. NCache provides a scalable, high-performance caching solution that can store data in memory across multiple servers. In addition, NCache provides a feature to enable local caching in client nodes for even faster data access.

In this article, we will discuss how to implement multi-level caching with NCache and the benefits of using this approach.

Why Multi-Level Caching?

Before implementing multi-level caching, let’s understand how storing data in multiple cache layers can benefit an application.

Let’s talk about a typical application setup where data is stored in a database and retrieved by the application when needed:

- When an application needs to access data, it sends a request to the database, which retrieves the data and sends it back to the application.

- This process can be slow due to network latency and the time needed to retrieve data from the database.

To improve application performance, we need to reduce the number of times the application has to query the database for the same data. This is where caching comes into play.

Improving Performance With Caching

To solve the problem, we can store frequently accessed data in a cache, which is faster to access than a database.

When the application needs the data, it first checks the cache. If the data is found in the cache, it’s returned to the application without querying the database. This reduces the load on the database and improves the response time of the application.
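The read path described above can be sketched in plain Java. This is a minimal illustration rather than NCache code: `CacheAsideReader` is a hypothetical helper, the `database` function stands in for a real query, and the read counter exists only to make the effect visible.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.atomic.AtomicInteger;
import java.util.function.Function;

// Hypothetical helper that checks a cache before falling back to the database.
class CacheAsideReader<K, V> {
    private final Map<K, V> cache = new ConcurrentHashMap<>();
    private final Function<K, V> database; // assumption: stands in for a real database query
    private final AtomicInteger databaseReads = new AtomicInteger();

    CacheAsideReader(Function<K, V> database) {
        this.database = database;
    }

    V get(K key) {
        V cached = cache.get(key);
        if (cached != null) {
            return cached; // cache hit: the database is not queried
        }
        databaseReads.incrementAndGet();
        V loaded = database.apply(key); // cache miss: query the database
        if (loaded != null) {
            cache.put(key, loaded); // keep the value for subsequent reads
        }
        return loaded;
    }

    int databaseReads() {
        return databaseReads.get();
    }
}
```

Reading the same key twice through this helper queries the database only once; the second read is served from the cache.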

Caches can be implemented at different levels of the application stack, such as:

- Local cache: A cache local to the application instance. It stores data in memory on the same server where the application is running. This is the fastest to access but is limited to a single server.

- Distributed cache: A cache that’s shared across multiple servers in a cluster. It stores data in memory across multiple servers, providing scalability and high availability. NCache is an example of a distributed cache.

In addition to the above, there can be other cache layers such as database query caches and CDN caches. We’ll focus on local and distributed caching to demonstrate multi-level caching, as they’re the most commonly used.

Let’s look at a comparison of local and distributed caching in the table below:

| | Local Cache | Distributed Cache |
|---|---|---|
| Scope | Local to the application instance | Shared across multiple servers in a cluster |
| Scalability | Limited to a single server | Can be scaled to multiple servers |
| High Availability | Goes down if the server goes down | Provides high availability through replication and failover |
| Performance | Fastest access | Slower access than a local cache due to network latency |
| Storage | Limited by server memory | Can store larger amounts of data |
| Use Case | Suitable for small-scale applications | Suitable for large-scale applications with high traffic |

By combining local and distributed caching, we can create a multi-level caching strategy that leverages the benefits of both types of caches. This can help improve the performance of the application and reduce the load on the database.

L1 and L2 Caching

In multi-level caching, the cache layers are often referred to as L1 and L2 caches.

- L1 Cache: An L1 cache stores limited data but provides faster access times. Since it stores limited data, it may have a higher cache miss rate. Local caches are often used as L1 caches.

- L2 Cache: An L2 cache stores more data and is shared across multiple servers. The purpose of the L2 cache is to reduce the cache miss rate by storing more data and providing high availability. Distributed caches like NCache are often used as L2 caches.

By combining L1 and L2 caching, applications can balance fast access times and high availability, resulting in improved performance and scalability.

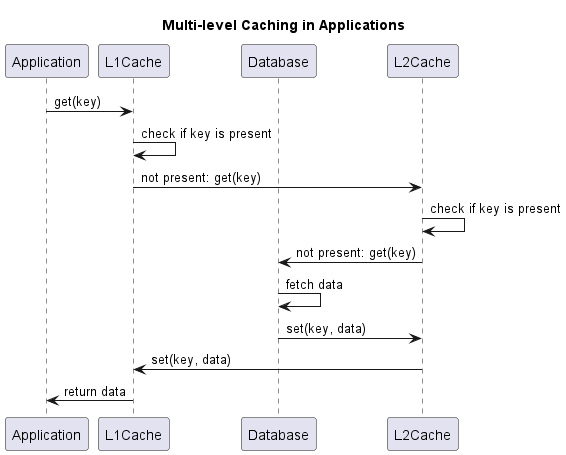

Let’s take a look at a diagram that illustrates the idea of multi-level caching with L1 and L2 caches:

Within the diagram:

- The application instances have their own local caches (L1 caches) that store frequently accessed data.

- The distributed cache (L2 cache) stores more data and is shared across multiple servers in a cluster.

- When an application needs data, it first checks the L1 cache. If the data is not found in the L1 cache, it checks the L2 cache. If the data is found in the L2 cache, it’s returned to the application.

- If the data is not found in either cache, the application queries the database and stores the data in both caches for future access.
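The lookup order in the list above can be sketched as follows. This is a simplified model under stated assumptions, not the NCache API: `TwoLevelCache` is a hypothetical class, a shared `Map` stands in for the distributed L2 cache, and a `Function` stands in for the database.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.function.Function;

// Hypothetical model of one application instance with its own L1 cache
// and a reference to an L2 cache shared with other instances.
class TwoLevelCache<K, V> {
    private final Map<K, V> l1 = new ConcurrentHashMap<>(); // local to this instance
    private final Map<K, V> l2;                             // assumption: stand-in for the distributed cache
    private final Function<K, V> database;                  // assumption: stand-in for a database query

    TwoLevelCache(Map<K, V> sharedL2, Function<K, V> database) {
        this.l2 = sharedL2;
        this.database = database;
    }

    V get(K key) {
        V value = l1.get(key);
        if (value != null) {
            return value; // L1 hit
        }
        value = l2.get(key);
        if (value == null) {
            value = database.apply(key); // miss in both caches
            if (value == null) {
                return null; // nothing to cache
            }
            l2.put(key, value);
        }
        l1.put(key, value); // populate L1 for the next read
        return value;
    }
}
```

With two instances sharing the same L2 map, a value loaded by the first instance is served to the second from L2, so the database is queried only once for that key.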

In the data update scenario, when the application updates data, it updates the database and invalidates or updates the corresponding data in both caches. This keeps the data consistent across all cache layers.

Using this multi-level caching strategy, applications can reduce the load on the database, improve response times, and scale to handle high-traffic loads.

Setting Up a Distributed Cache With NCache

Now that we understand the benefits of multi-level caching, let’s look at how we can implement it using NCache. We’ll build a simple Java application that uses NCache as the distributed cache and a local cache for storing data.

To begin with, let’s explore how we can set up a cache cluster with NCache and connect to it from our Java application.

Setting Up NCache Server

Before we start coding, we need to set up NCache on our servers. NCache provides a distributed caching solution that can be installed on Windows and Linux servers.

We’ll create a cache cluster using NCache and configure it. Once the cache cluster is set up, we can connect to it from our Java application.

Adding NCache Dependencies

To use NCache in our Java application, we need to add the NCache client libraries to our project. We can do this by adding the following Maven dependency to our pom.xml file:

    <dependency>
        <groupId>com.alachisoft.ncache</groupId>
        <artifactId>ncache-client</artifactId>
        <version>5.3.3</version>
    </dependency>

This client will allow us to connect to the NCache cluster and interact with the cache from our Java code.

Setting Up NCache Connection

To connect to the NCache cluster, we’ll configure the cache settings in our Java application.

The preferred way to do this is by using a configuration file. The configuration file contains the cache settings, server details, and other properties required to connect to the cache cluster.

Alternatively, we can declare the cache connection programmatically using CacheConnectionOptions. This requires code changes whenever the cache connection details need to be updated and is therefore not recommended.

Code Example: Building Blocks

Now that we have set up NCache and connected to the cache cluster, let’s build a simple Java application that demonstrates multi-level caching with NCache.

We’ll create a small service that provides a CRUD (Create, Read, Update, Delete) interface for storing and retrieving Product data. The service will use a distributed cache backed by NCache.

In addition to this, we’ll explore how to use NCache Client Cache to provide local caching.

Data Class

Let’s start by defining a simple Product class that represents a product in our application:

    public class Product {
        private int id;
        private String name;
        private double price;

        // Getters and setters
    }

Distributed Cache Operations With NCache

Next, we’ll create the ProductNCacheService class that provides methods to interact with the NCache cluster.

Here is an outline of the ProductNCacheService class:

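    import com.alachisoft.ncache.client.Cache;
    import com.alachisoft.ncache.client.CacheItem;
    import com.alachisoft.ncache.client.CacheManager;

    public class ProductNCacheService {
        private final Cache cache;

        public ProductNCacheService() throws Exception {
            // Connects to the NCache cluster and gets the cache instance
            cache = CacheManager.getCache("demoCache");
        }

        public Product get(int id) {
            // NCache keys are strings, so the numeric ID is converted
            return cache.get(String.valueOf(id), Product.class);
        }

        public void put(int id, Product product) {
            // insert adds the item or overwrites an existing one,
            // so the same method works for first-time caching and for updates
            cache.insert(String.valueOf(id), new CacheItem(product));
        }

        public void delete(int id) {
            // delete removes the item without returning it
            cache.delete(String.valueOf(id));
        }
    }

First, we connect to the NCache cluster and get the cache instance. Then, we provide methods to get, put, and delete products in the cache.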

Using NCache

Next, we’ll create a ProductService class that interacts with the cache and the database to store and retrieve product data.

Retrieving Data

Let’s implement the getProduct method in the ProductService class. This method retrieves a product by its ID from NCache, or from the database if it is not found in the cache:

    public class ProductService {
        private final ProductNCacheService nCacheService;
        private final ProductDatabaseService databaseService;

        public ProductService(ProductNCacheService nCacheService, ProductDatabaseService databaseService) {
            this.nCacheService = nCacheService;
            this.databaseService = databaseService;
        }

        public Product getProduct(int id) {
            Product product = nCacheService.get(id);
            if (product == null) {
                // Cache miss: fall back to the database
                product = databaseService.getProduct(id);
                if (product != null) {
                    // Cache only products that actually exist
                    nCacheService.put(id, product);
                }
            }
            return product;
        }
    }

Let’s look at the getProduct method in detail:

- The ProductService class has a reference to the ProductNCacheService and ProductDatabaseService classes.

- In the getProduct method, we first try to retrieve the product from NCache using the get method. If the product is not found in the cache, we fetch it from the database using the getProduct method of the ProductDatabaseService class.

- Once we have the product, we store it in NCache using the put method so that it can be retrieved from the cache in subsequent calls.

This is an implementation of one level of caching where we first check the distributed cache (NCache) and then the database if the data is not found in the cache. This helps reduce the load on the database and improves the response time of the application.

Updating Data

Now that we understand retrieving data, let’s implement the updateProduct method in the ProductService class. This method updates a product in NCache and the database:

    public void updateProduct(Product product) {
        databaseService.updateProduct(product);
        nCacheService.put(product.getId(), product); // Update the product in the cache immediately
    }

There are several ways to handle data updates in multi-level caching:

- Immediate updates may not be required in the cache. In this scenario, the client can update the database and invalidate the cached data. The next read operation will fetch the updated data from the database and store it in the cache.

- For scenarios where immediate updates are required, the client can update the database and then update the cached data. This keeps the cached data in sync with the database.

Since we’re using a dedicated distributed cache, it may be beneficial to update the cached data after updating the database to maintain consistency and avoid cache misses.
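The two update strategies can be compared side by side in a small sketch. Everything here is illustrative: `WriteStrategies` is a hypothetical class and both maps are in-memory stand-ins for the database and the cache.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

// Hypothetical comparison of "invalidate on write" and "update on write".
class WriteStrategies<K, V> {
    final Map<K, V> database = new ConcurrentHashMap<>(); // assumption: stand-in for the database
    final Map<K, V> cache = new ConcurrentHashMap<>();    // assumption: stand-in for the cache

    // Strategy 1: update the database and drop the cached copy.
    // The next read misses the cache and reloads the fresh value.
    void updateAndInvalidate(K key, V value) {
        database.put(key, value);
        cache.remove(key);
    }

    // Strategy 2: update the database, then overwrite the cached copy
    // so the next read is a cache hit with the new value.
    void updateAndRefresh(K key, V value) {
        database.put(key, value);
        cache.put(key, value);
    }

    // Cache-aside read used by both strategies.
    V read(K key) {
        return cache.computeIfAbsent(key, database::get);
    }
}
```

Invalidation is simpler and avoids caching data that is never read again, while refreshing trades an extra cache write for a guaranteed hit on the next read.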

Another important aspect of NCache is its support for Read-Through and Write-Through caching strategies. Read-through caching allows the cache to fetch data from the database if it isn’t found in the cache. Similarly, write-through/write-behind caching allows the cache to write data to the database when it’s updated in the cache. This helps keep the cache and the database consistent.
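Conceptually, read-through and write-through move the database access from the application into the cache itself. The sketch below models that idea only; `ReadWriteThroughCache`, `loader`, and `writer` are hypothetical names, and NCache's actual provider interfaces are configured on the cache server and look different.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.function.BiConsumer;
import java.util.function.Function;

// Hypothetical cache that talks to the backing store on behalf of the application.
class ReadWriteThroughCache<K, V> {
    private final Map<K, V> entries = new ConcurrentHashMap<>();
    private final Function<K, V> loader;   // assumption: called by the cache on a miss (read-through)
    private final BiConsumer<K, V> writer; // assumption: called by the cache on every put (write-through)

    ReadWriteThroughCache(Function<K, V> loader, BiConsumer<K, V> writer) {
        this.loader = loader;
        this.writer = writer;
    }

    // Read-through: the caller never queries the backing store directly.
    V get(K key) {
        return entries.computeIfAbsent(key, loader);
    }

    // Write-through: the backing store is written synchronously, then the cache.
    void put(K key, V value) {
        writer.accept(key, value);
        entries.put(key, value);
    }
}
```

A write-behind variant would queue the call to `writer` and apply it asynchronously, which reduces write latency at the cost of a short window in which the database lags behind the cache.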

Adding a Local Cache Using NCache Client Cache

The next step is to add a local cache as the L1 cache in our application. We could provide our own implementation of an in-memory cache or use a more sophisticated local cache like the NCache Client Cache.

NCache provides a Client Cache that can be used as a local cache in the application. Using NCache Client Cache instead of our own in-memory cache removes the overhead of managing cache eviction, cache size, and other cache-related concerns.

Let’s see how we can use the NCache Client Cache as the L1 cache in our application.

NCache Client Cache Setup

NCache can create a client cache that connects to the NCache server and stores data locally. The client cache can be configured to use different synchronization modes, eviction policies, and isolation levels to control how data is retrieved and updated.

To set up the NCache Client Cache, we’ll configure it in the NCache Server. We can create a client cache for an existing clustered cache and configure its properties.

Here are a few properties we need to set when creating a new client cache:

- Cache name: The name of the client cache.

- Sync mode: The synchronization mode for the client cache. We can choose between Optimistic and Pessimistic modes. Optimistic mode ensures data is always returned to the client if a key exists. However, due to cache synchronization delays, data may not always be consistent. Pessimistic mode ensures inconsistent data is not returned to the client but may have a performance impact, as it can result in a few more local cache misses.

- Isolation level: We can choose between InProc and OutProc isolation levels. InProc stores data in the application’s memory, while OutProc stores data in a separate process. The benefit of OutProc is that it can store more data and is more resilient to application crashes. InProc, on the other hand, is faster since it stores data in the application’s memory.

- Cache size: The total size to which the cache can grow.
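The trade-off between the two sync modes can be illustrated with a toy model. This is only a conceptual sketch and not how NCache implements synchronization: `SyncModeDemo` and its version counter are hypothetical, and the real client cache also receives background updates from the clustered cache, which this model leaves out.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

// Toy model: the clustered cache bumps a version on every write,
// and the local cache may hold an older version for a while.
class SyncModeDemo {
    static final class Versioned {
        final String value;
        final long version;
        Versioned(String value, long version) {
            this.value = value;
            this.version = version;
        }
    }

    final Map<String, Versioned> clustered = new ConcurrentHashMap<>();
    final Map<String, Versioned> local = new ConcurrentHashMap<>();

    void writeToCluster(String key, String value) {
        clustered.merge(key, new Versioned(value, 1),
                (old, fresh) -> new Versioned(fresh.value, old.version + 1));
    }

    // Optimistic: return the local copy whenever the key exists, even if stale.
    String getOptimistic(String key) {
        Versioned cached = local.get(key);
        if (cached == null) {
            cached = refresh(key);
        }
        return cached == null ? null : cached.value;
    }

    // Pessimistic: verify the local version first; a mismatch counts as a local miss.
    String getPessimistic(String key) {
        Versioned cached = local.get(key);
        Versioned current = clustered.get(key);
        if (cached == null || current == null || cached.version != current.version) {
            cached = refresh(key);
        }
        return cached == null ? null : cached.value;
    }

    private Versioned refresh(String key) {
        Versioned current = clustered.get(key);
        if (current == null) {
            local.remove(key);
            return null;
        }
        local.put(key, current);
        return current;
    }
}
```

In this model, an optimistic read after a clustered write can still return the previous value, while a pessimistic read pays for a version check and reloads the item.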

In addition to this, we can set up an eviction policy:

- Eviction policy: The eviction policy determines how the cache handles data when it reaches its maximum size. We can choose between LRU (Least Recently Used), LFU (Least Frequently Used), and Priority eviction policies.

- Default priority: The default priority of the items in the cache. If priority-based eviction is enabled, items with higher priority are evicted last. The client can override the default priority when adding items to the cache.

- Eviction percentage: The percentage of items to evict when the cache reaches its maximum size.

- Clean interval: The interval at which the cache is cleaned up. It looks for expired items and evicts them.
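To give a feel for what an LRU policy does, here is a minimal sketch built on the JDK's `LinkedHashMap`. It is not NCache's eviction implementation: `LruCache` is a hypothetical class, it bounds the cache by entry count rather than memory size, and it evicts one entry at a time instead of a percentage.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical LRU cache: the least recently accessed entry is evicted
// once the number of entries exceeds maxEntries. Not thread-safe.
class LruCache<K, V> extends LinkedHashMap<K, V> {
    private final int maxEntries;

    LruCache(int maxEntries) {
        super(16, 0.75f, true); // accessOrder = true keeps entries in access order
        this.maxEntries = maxEntries;
    }

    @Override
    protected boolean removeEldestEntry(Map.Entry<K, V> eldest) {
        return size() > maxEntries; // called by LinkedHashMap after each insertion
    }
}
```

With a capacity of two, inserting "a" and "b", reading "a", and then inserting "c" evicts "b", because "a" was used more recently.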

Using NCache Client Cache

Once the NCache Client Cache is set up, we can use it in our Java application as the L1 cache. One big advantage is that the code interacting with NCache needs no changes.

Method 1 (Preferred): Configuration File

If using a configuration file, we can add the cache name and other properties to the client.ncconf file.

In the configuration, in addition to the usual properties, we add client-cache-id="demoClientCache" and client-cache-syncmode="optimistic" to specify the client cache behavior. This is easy to manage and allows us to configure the client cache properties without changing the code.

Method 2: Programmatic Configuration

Similarly, if using programmatic configuration, we can add the client cache details to our connection. Let’s define our NCacheService class to include a client cache connection:

    import java.util.ArrayList;
    import java.util.List;

    public class NCacheService {
        private final Cache cache;

        public NCacheService() throws Exception {
            // Setter names below follow the Java client's conventions;
            // verify them against the NCache version in use.

            // Connection options for the clustered (L2) cache
            CacheConnectionOptions cacheConnectionOptions = new CacheConnectionOptions();
            cacheConnectionOptions.setUserCredentials(new Credentials("domain\\user-id", "password"));
            List<ServerInfo> servers = new ArrayList<>();
            servers.add(new ServerInfo("remoteServer", 9800));
            cacheConnectionOptions.setServerList(servers);

            // Connection options for the client (L1) cache
            CacheConnectionOptions clientCacheConnectionOptions = new CacheConnectionOptions();
            clientCacheConnectionOptions.setLoadBalance(true);
            clientCacheConnectionOptions.setConnectionRetries(5);
            clientCacheConnectionOptions.setIsolationLevel(IsolationLevel.OutProc);

            cache = CacheManager.getCache("demoCache", cacheConnectionOptions, "demoClientCache", clientCacheConnectionOptions);
        }
    }

Here, we have added a clientCacheConnectionOptions object to specify the client cache properties. We pass this object when creating the cache instance using CacheManager.getCache. This method is not recommended as it doesn’t allow for easy configuration changes.

Conclusion

In this article, we discussed the concept of multi-level caching and how it can be used to improve the performance of applications. By combining local and distributed caching, applications can balance fast access times and high availability, resulting in better performance and scalability.

We implemented a simple Java application to demonstrate multi-level caching. First, we created an application that uses NCache as a distributed cache. Then, we used NCache’s Client Cache feature to create a local cache for the application with minimal code changes.

Using NCache as both the distributed and the local cache, we demonstrated how multi-level caching can be implemented in Java applications. This approach can help reduce the load on the database, improve response times, and scale to handle high-traffic loads.