As deep learning models evolve, their growing complexity demands high-performance GPUs to ensure efficient inference serving. Many organizations rely on cloud services like AWS, Azure, or GCP for these GPU-powered workloads, but a growing number of businesses are opting to build their own in-house model serving infrastructure. This shift is driven by the need for greater control over costs, data privacy, and system customization. By designing an infrastructure capable of handling multiple GPU SKUs (such as NVIDIA, AMD, and potentially Intel), businesses can achieve a flexible and cost-efficient system that is resilient to supply chain delays and able to leverage diverse hardware capabilities. This article explores the essential components of such infrastructure, focusing on the technical considerations for GPU-agnostic design, container optimization, and workload scheduling.

Why Choose In-House Model Serving Infrastructure?

While cloud GPU instances offer scalability, many organizations prefer in-house infrastructure for several key reasons:

- Cost efficiency: For predictable workloads, owning GPUs can be cheaper than constantly renting cloud resources.

- Data privacy: In-house infrastructure ensures full control over sensitive data, avoiding potential risks in shared environments.

- Latency reduction: Local workloads eliminate network delays, improving inference speeds for real-time applications like autonomous driving and robotics.

- Customization: In-house setups allow fine-tuning of hardware and software for specific needs, maximizing performance and cost-efficiency.

Why Serve Multiple GPU SKUs?

Supporting multiple GPU SKUs is essential for flexibility and cost-efficiency. NVIDIA GPUs often face long lead times due to high demand, causing delays in scaling infrastructure. By integrating AMD or Intel GPUs, organizations can avoid these delays and keep project timelines on track.

Cost is another factor: NVIDIA GPUs are premium, while alternatives like AMD offer more budget-friendly options for certain workloads (see the comparison on which GPU to get). This flexibility also allows teams to experiment and optimize performance across different hardware platforms, leading to better ROI evaluations. Serving multiple SKUs ensures scalability, cost control, and resilience against supply chain challenges.

Designing GPU-Interchangeable Infrastructure

Building an in-house infrastructure capable of leveraging various GPU SKUs efficiently requires both hardware-agnostic design principles and GPU-aware optimizations. Below are the key considerations for achieving this.

1. GPU Abstraction and Device Compatibility

Different GPU manufacturers like NVIDIA and AMD have proprietary drivers, software libraries, and execution environments. One of the critical challenges is to abstract the specific differences between these GPUs while enabling the software stack to maximize hardware capabilities.

Driver Abstraction

While NVIDIA GPUs require CUDA, AMD GPUs typically use ROCm. A multi-GPU infrastructure must abstract these details so that applications can switch between GPU types without major code refactoring.

- Solution: Design a container orchestration layer that dynamically selects the appropriate drivers and runtime environment based on the detected GPU. For example, containers built for NVIDIA GPUs will include CUDA libraries, while those for AMD GPUs will include ROCm libraries. This abstraction can be managed using environment variables and orchestrated via Kubernetes device plugins, which handle device-specific initialization.
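As a minimal sketch of the selection step, the snippet below maps PCI vendor IDs to a set of environment variables for the container. The vendor IDs are the standard PCI values, and on Linux they can be read from files such as /sys/bus/pci/devices/*/vendor. The GPU_BACKEND variable and the exact settings per vendor are assumptions made for illustration, not something the article prescribes. A real detector would also filter by PCI device class, since a vendor ID alone does not prove the device is a GPU.

```python
# Sketch: map a detected GPU vendor to runtime settings for its container.
# The PCI vendor IDs are standard; everything else here is illustrative.
PCI_VENDORS = {"0x10de": "nvidia", "0x1002": "amd", "0x8086": "intel"}

# Hypothetical per-vendor settings an orchestration layer might inject.
# GPU_BACKEND is an invented variable name for application code to read.
RUNTIME_ENV = {
    "nvidia": {"GPU_BACKEND": "cuda", "NVIDIA_VISIBLE_DEVICES": "all"},
    "amd": {"GPU_BACKEND": "rocm", "HIP_VISIBLE_DEVICES": "0"},
}


def detect_vendor(pci_vendor_ids):
    """Return the first supported GPU vendor found among PCI vendor IDs."""
    for vendor_id in pci_vendor_ids:
        vendor = PCI_VENDORS.get(vendor_id.strip().lower())
        # Vendors without a runtime profile (Intel in this sketch) are skipped.
        if vendor in RUNTIME_ENV:
            return vendor
    raise RuntimeError("no supported GPU vendor detected")


def runtime_env(pci_vendor_ids):
    """Build the environment variables for the detected vendor."""
    return dict(RUNTIME_ENV[detect_vendor(pci_vendor_ids)])
```

Keeping the vendor table separate from the runtime profiles means a new vendor can be added by extending two dictionaries rather than touching the detection logic.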

Cross-SKU Scheduling

Infrastructure should be capable of automatically detecting and scheduling workloads across different GPUs. Kubernetes device plugins for both NVIDIA and AMD should be installed across the cluster. Implement resource tags or annotations that specify the required GPU SKU or type (such as Tensor Cores for H100 or Infinity Fabric for AMD).

- Solution: Use custom Kubernetes scheduler logic or node selectors that match GPUs with the model’s requirements (e.g., FP32, FP16 support). Kubernetes custom resource definitions (CRDs) can be used to create an abstraction for various GPU capabilities.
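The node selector approach can be sketched as a small manifest builder. The resource names nvidia.com/gpu and amd.com/gpu are the extended resources advertised by the respective device plugins. The node label key gpu.example.com/sku is hypothetical and would have to be applied to nodes by the cluster operator.

```python
# Extended resource names advertised by the vendors' Kubernetes device plugins.
GPU_RESOURCE = {"nvidia": "nvidia.com/gpu", "amd": "amd.com/gpu"}


def build_pod(name, image, vendor, sku, gpus=1):
    """Return a Pod manifest (as a dict) pinned to nodes with the given SKU."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            # Hypothetical node label; nodes must be labeled to match.
            "nodeSelector": {"gpu.example.com/sku": sku},
            "containers": [{
                "name": name,
                "image": image,
                # GPUs are requested through limits on the extended resource.
                "resources": {"limits": {GPU_RESOURCE[vendor]: gpus}},
            }],
        },
    }
```

For example, build_pod("llm", "app-name:amd", "amd", "mi210") yields a manifest that can be serialized with json.dumps and submitted to the cluster.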

2. Container Image Optimization for Different GPUs

GPU containers are not universally compatible, given the differences in underlying libraries, drivers, and dependencies required for various GPUs. Here’s how to approach container image design for different GPU SKUs:

Container Images for NVIDIA GPUs

NVIDIA GPUs require the CUDA runtime, cuDNN libraries, and NVIDIA drivers. Containers running on NVIDIA GPUs must bundle these libraries or ensure compatibility with host-provided versions.

- Image setup: Use NVIDIA’s CUDA containers as base images (e.g., nvidia/cuda:xx.xx-runtime-ubuntu) and install framework-specific libraries such as TensorFlow or PyTorch, compiled with CUDA support.

Container Images for AMD GPUs

AMD GPUs use ROCm (Radeon Open Compute) and require different runtime and compiler setups.

- Image setup: Use ROCm base images (e.g., rocm/tensorflow) or manually compile frameworks from source with ROCm support. Compiling from source also requires ROCm’s compiler toolchain to be installed; older releases used HCC (Heterogeneous Compute Compiler), which current releases have replaced with the Clang-based HIP compiler (hipcc).

Unified Container Registry

To reduce the maintenance overhead of managing different containers for each GPU type, a unified container registry with versioned images tagged by GPU type (e.g., app-name:nvidia, app-name:amd) can be maintained. At runtime, the container orchestration system selects the correct image based on the underlying hardware.
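A minimal sketch of the image lookup follows. The tag layout extends the app-name:nvidia and app-name:amd convention above with an optional version prefix, and both that prefix and the registry host used in the usage note are assumptions.

```python
SUPPORTED_VENDORS = {"nvidia", "amd"}


def resolve_image(app, vendor, version=None, registry=None):
    """Return the image reference for an app on the given GPU vendor."""
    if vendor not in SUPPORTED_VENDORS:
        raise ValueError(f"no image published for vendor {vendor!r}")
    # Assumed tag scheme: "<vendor>" or "<version>-<vendor>".
    tag = vendor if version is None else f"{version}-{vendor}"
    repo = app if registry is None else f"{registry}/{app}"
    return f"{repo}:{tag}"
```

Calling resolve_image("app-name", "amd") returns "app-name:amd", and passing a version and a registry such as "registry.example.com" produces a fully qualified reference.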

Driver-Independent Containers

Alternatively, consider building driver-agnostic containers where the runtime dynamically links the appropriate drivers from the host machine, thus eliminating the need to package GPU-specific drivers inside the container. This approach, however, requires the host to maintain a correct and up-to-date set of drivers for all potential GPU types.
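One way a driver-agnostic container could decide which backend to load at startup is to check which kernel device nodes the host has passed through. This is only a sketch under that assumption: /dev/nvidiactl is created by the NVIDIA kernel driver and /dev/kfd by the AMD compute driver, while the backend names and the CPU fallback are illustrative choices.

```python
import os

# Device nodes exposed by the host kernel drivers. The container runtime
# must pass them through for either backend to be usable.
DEVICE_NODES = {"cuda": "/dev/nvidiactl", "rocm": "/dev/kfd"}


def detect_backend(path_exists=os.path.exists):
    """Return 'cuda', 'rocm', or 'cpu' based on the visible device nodes."""
    for backend, node in DEVICE_NODES.items():
        if path_exists(node):
            return backend
    # No GPU device node is visible, so fall back to CPU execution.
    return "cpu"
```

The path_exists parameter exists so the check can be exercised in tests without real hardware.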

3. Multi-GPU Workload Scheduling

When managing infrastructure with heterogeneous GPUs, it’s essential to have an intelligent scheduling mechanism to allocate the right GPU SKU to the right model inference task.

GPU Affinity and Task Matching

Certain models benefit from specific GPU features such as NVIDIA’s Tensor Cores or AMD’s Matrix Cores. Defining model requirements and matching them to hardware capabilities is crucial for efficient resource utilization.

- Solution: Integrate workload schedulers like Kubernetes with GPU operators, such as NVIDIA GPU Operator and AMD ROCm operator, to automate workload placement and GPU selection. Fine-tuning the scheduler to understand model complexity, batch size, and compute precision (FP32 vs. FP16) will help assign the most efficient GPU for a given task.
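The matching step can be illustrated with a small selection function that filters SKUs by memory and precision support, then picks the cheapest. The catalog entries, their names, and the cost figures are invented placeholders rather than measurements or real prices, and choosing by cost is just one plausible policy.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GpuSku:
    name: str
    memory_gb: int
    precisions: frozenset
    cost_per_hour: float  # illustrative relative cost, not a real price


@dataclass(frozen=True)
class ModelRequirement:
    memory_gb: int
    precision: str


def pick_sku(requirement, skus):
    """Return the cheapest SKU that satisfies memory and precision needs."""
    eligible = [
        s for s in skus
        if s.memory_gb >= requirement.memory_gb
        and requirement.precision in s.precisions
    ]
    if not eligible:
        raise LookupError("no GPU SKU satisfies the model requirement")
    return min(eligible, key=lambda s: s.cost_per_hour)


# Placeholder catalog; the names and numbers are invented for illustration.
CATALOG = [
    GpuSku("sku-a", 40, frozenset({"fp32", "fp16"}), 3.0),
    GpuSku("sku-b", 64, frozenset({"fp32", "fp16"}), 2.0),
    GpuSku("sku-c", 24, frozenset({"fp32"}), 1.0),
]
```

A production scheduler would fill the catalog from node labels or a CRD and could weigh measured throughput alongside cost.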

Dynamic GPU Allocation

For workloads that vary in intensity, dynamic GPU resource allocation is essential. This can be achieved using Kubernetes’ Vertical Pod Autoscaler (VPA) in conjunction with device plugins that expose GPU metrics.
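Because VPA is primarily aimed at CPU and memory requests, one alternative way to react to GPU load is to scale replicas horizontally. The sketch below is not the VPA mechanism described above. It applies the ratio rule that Kubernetes documents for the Horizontal Pod Autoscaler to an average GPU utilization figure, and the replica bounds are arbitrary defaults chosen for illustration.

```python
import math


def desired_replicas(current_replicas, current_util, target_util,
                     min_replicas=1, max_replicas=8):
    """Apply the HPA-style ratio rule to average GPU utilization (0-100)."""
    if target_util <= 0:
        raise ValueError("target_util must be positive")
    # desired = ceil(current replicas * current metric / target metric)
    desired = math.ceil(current_replicas * current_util / target_util)
    # Clamp to the configured bounds.
    return max(min_replicas, min(max_replicas, desired))
```

With two replicas at 90% utilization and a 60% target, the rule asks for three replicas.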

4. Monitoring and Performance Tuning

GPU Monitoring

Use telemetry tools like NVIDIA’s DCGM (Data Center GPU Manager) or AMD’s ROCm SMI (System Management Interface) to monitor GPU utilization, memory bandwidth, power consumption, and other performance metrics. Aggregating these metrics into a centralized monitoring system like Prometheus can help identify bottlenecks and underutilized hardware.

Performance Tuning

Periodically benchmark different models on available GPU types and adjust the workload distribution to achieve optimal throughput and latency.
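As a rough sketch of spotting underutilized hardware, the function below scans metrics in the Prometheus text exposition format and reports GPUs below a utilization threshold. The metric name DCGM_FI_DEV_GPU_UTIL and the gpu and Hostname labels follow what NVIDIA's dcgm-exporter typically emits, but they are treated here as assumptions, and the sample data is made up. An AMD exporter would need a different metric name passed in.

```python
import re

# Matches one sample line of the Prometheus text format: name{labels} value
SAMPLE_RE = re.compile(r'^(\w+)\{([^}]*)\}\s+([0-9.eE+-]+)$')
LABEL_RE = re.compile(r'(\w+)="([^"]*)"')


def underutilized_gpus(metrics_text, metric="DCGM_FI_DEV_GPU_UTIL",
                       threshold=20.0):
    """Return (hostname, gpu) pairs whose utilization is below the threshold."""
    idle = []
    for line in metrics_text.splitlines():
        match = SAMPLE_RE.match(line.strip())
        # Skip comments, blank lines, and samples of other metrics.
        if not match or match.group(1) != metric:
            continue
        labels = dict(LABEL_RE.findall(match.group(2)))
        if float(match.group(3)) < threshold:
            idle.append((labels.get("Hostname", "?"), labels.get("gpu", "?")))
    return idle


# Made-up sample in the style of exporter output.
SAMPLE = """
# HELP DCGM_FI_DEV_GPU_UTIL GPU utilization (in %).
# TYPE DCGM_FI_DEV_GPU_UTIL gauge
DCGM_FI_DEV_GPU_UTIL{gpu="0",Hostname="node-a"} 87
DCGM_FI_DEV_GPU_UTIL{gpu="1",Hostname="node-a"} 4
DCGM_FI_DEV_GPU_UTIL{gpu="0",Hostname="node-b"} 12
"""
```

In practice the same question is usually asked of Prometheus directly with a query, but parsing the raw text is handy for quick checks against a single exporter endpoint.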
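A benchmark comparison of this kind usually comes down to tail latency per GPU type. The helper below is a minimal sketch that computes a nearest-rank percentile and picks the GPU type with the lowest value. The nearest-rank method and the function names are choices made for this example, and other percentile definitions will give slightly different numbers.

```python
import math


def percentile(samples, pct):
    """Nearest-rank percentile of a non-empty list of latencies."""
    if not samples:
        raise ValueError("samples must not be empty")
    ordered = sorted(samples)
    # Nearest rank: the smallest value with at least pct% of samples at or below it.
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def fastest_gpu(latencies_by_gpu, pct=99):
    """Return the GPU type with the lowest tail latency at the given percentile."""
    return min(latencies_by_gpu,
               key=lambda gpu: percentile(latencies_by_gpu[gpu], pct))
```

The input to fastest_gpu is a mapping from GPU type to a list of measured request latencies for one model, so the comparison can be rerun per model after each benchmark pass.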

Latency Comparisons

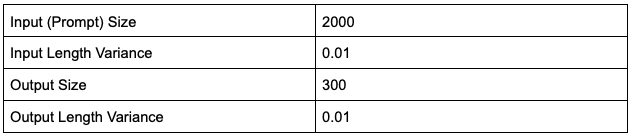

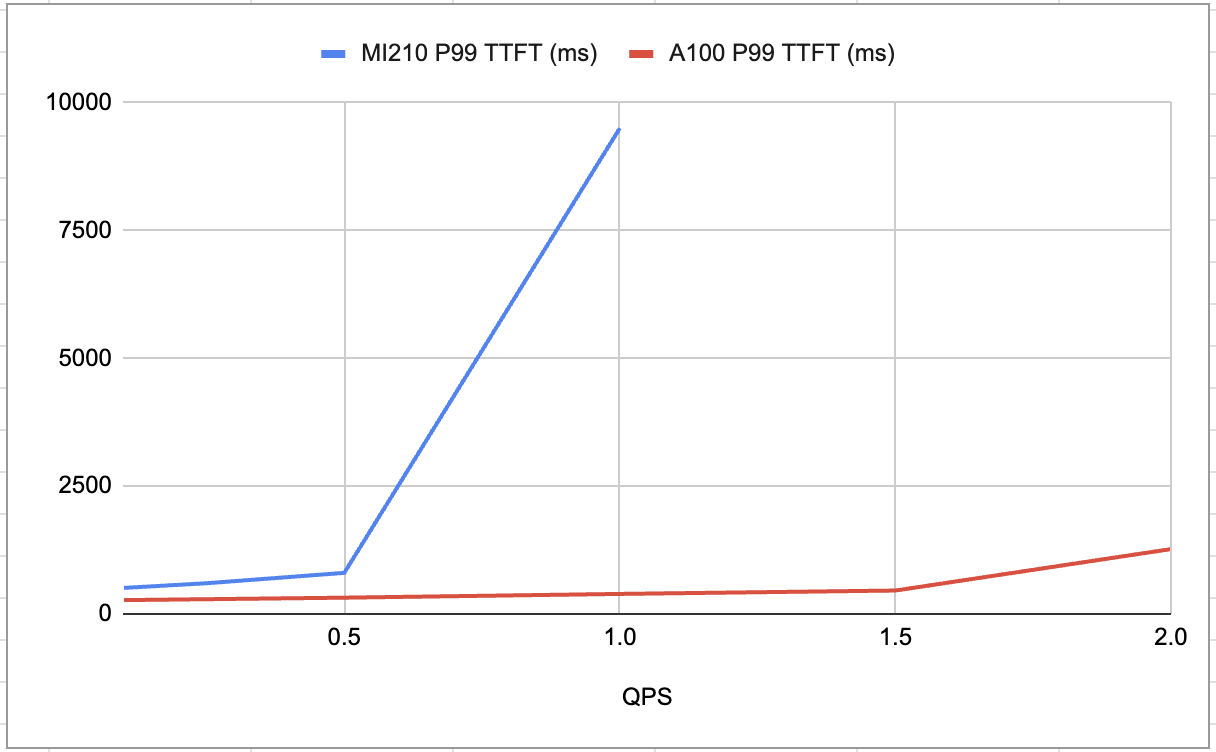

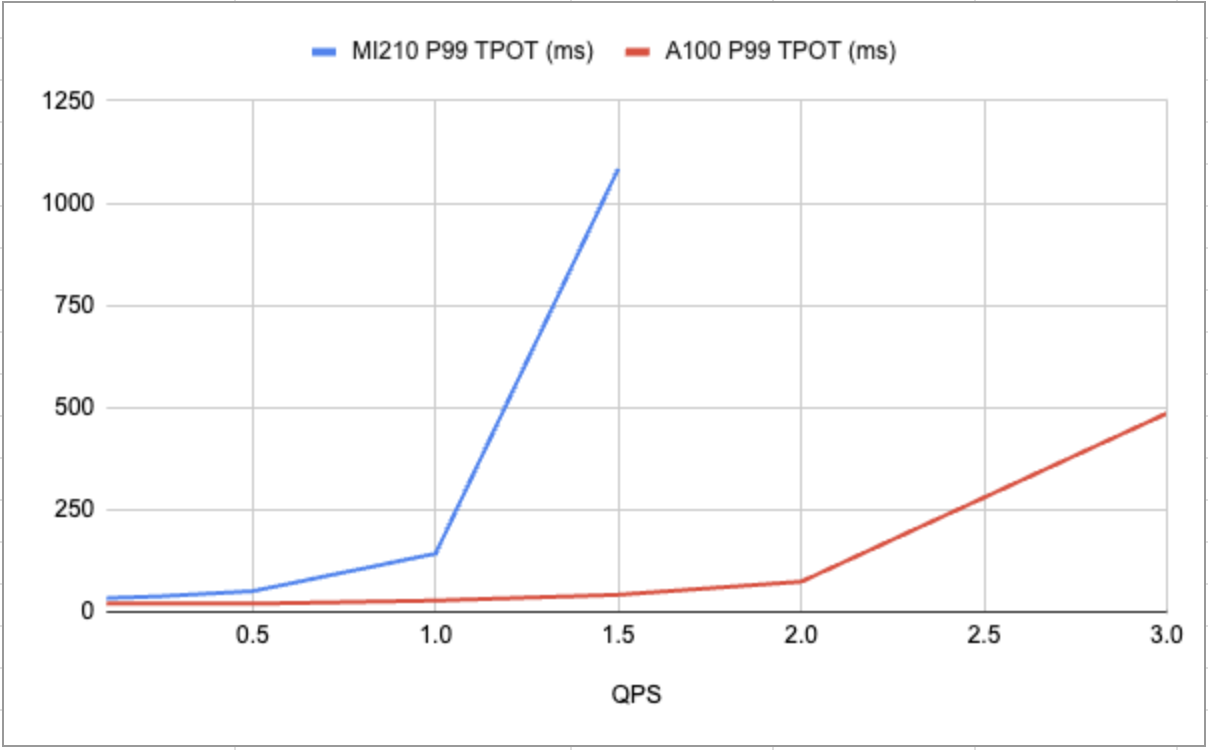

Below are the latency comparisons between NVIDIA and AMD GPUs using a small language model, llama2-7B, based on the following input and output settings (special thanks to Satyam Kumar for helping me run these benchmarks).

Figure 1: AMD’s MI210 vs NVIDIA’s A100 P99 latencies for TTFT (Time to First Token)

Figure 2: AMD’s MI210 vs NVIDIA’s A100 P99 latencies for TPOT (Time per Output Token)

Here is another blog post with a performance and cost comparison: AMD MI300X vs. NVIDIA H100 SXM: Performance Comparison on Mixtral 8x7B Inference, which can help with decisions about when to use which hardware.
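For readers reproducing these two metrics, the sketch below derives both from a request start time and the arrival timestamps of each output token. It assumes TPOT is averaged over the tokens after the first one, which is a common convention but not necessarily the one used for the figures above. The function name is illustrative.

```python
def ttft_and_tpot(request_start, token_times):
    """Return (TTFT, TPOT) in the same time unit as the inputs."""
    if not token_times:
        raise ValueError("at least one output token is required")
    # TTFT: delay between sending the request and receiving the first token.
    ttft = token_times[0] - request_start
    if len(token_times) == 1:
        return ttft, 0.0
    # TPOT: average gap between consecutive tokens after the first.
    tpot = (token_times[-1] - token_times[0]) / (len(token_times) - 1)
    return ttft, tpot
```

Collecting these pairs over many requests and taking the 99th percentile of each gives P99 TTFT and P99 TPOT.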

Conclusion

In-house model serving infrastructure gives businesses greater control, cost-efficiency, and flexibility. Supporting multiple GPU SKUs ensures resilience against hardware shortages, optimizes costs, and allows for better workload customization. By abstracting GPU-specific dependencies, optimizing containers, and intelligently scheduling tasks, organizations can unlock the full potential of their AI infrastructure and drive more efficient performance.