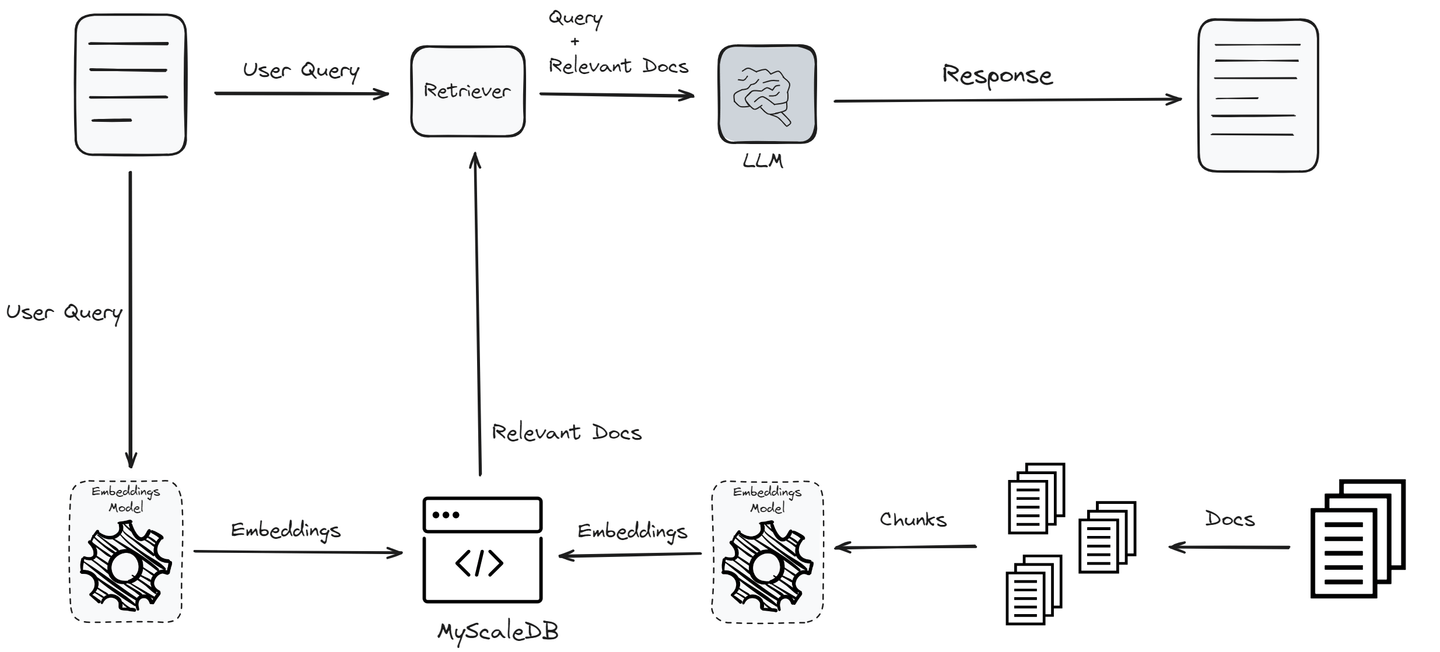

Retrieval-Augmented Generation (RAG) systems are designed to enhance the response quality of a large language model (LLM). When a user submits a query, the RAG system extracts relevant information from a vector database and passes it to the LLM as context. The LLM then uses this context to generate a response for the user. This process significantly improves the quality of LLM responses and reduces “hallucination.”

So, in the workflow above, there are two main components in a RAG system:

- Retriever: It identifies the most relevant information in the vector database using similarity search. This stage is the most critical part of any RAG system because it sets the foundation for the quality of the final output. The retriever encodes the user query and the documents into vectors and uses similarity measures to find the closest matches.

- Response generator: Once the relevant documents are retrieved, the user query and the retrieved documents are passed to the LLM. The generator (LLM) uses the context provided by the retriever, together with the original query, to produce a coherent, relevant, and accurate response.
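To make the two components concrete, here is a minimal sketch in Python. It assumes a toy bag-of-words vector in place of a real embedding model and an in-memory list in place of a vector database; `embed`, `retrieve`, and `build_prompt` are hypothetical names, and the actual LLM call is left out.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    """Toy 'embedding' (hypothetical): a bag-of-words term-count vector."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: list[str], k: int = 2) -> list[str]:
    """Retriever: rank documents by similarity to the query and keep the top k."""
    q = embed(query)
    ranked = sorted(docs, key=lambda d: cosine(q, embed(d)), reverse=True)
    return ranked[:k]


def build_prompt(query: str, context: list[str]) -> str:
    """Generator input: retrieved context plus the original query (would be sent to an LLM)."""
    joined = "\n".join(f"- {c}" for c in context)
    return f"Answer using only this context:\n{joined}\n\nQuestion: {query}"


docs = [
    "paris is the capital of france",
    "the eiffel tower is in paris",
    "python is a programming language",
]
top = retrieve("what is the capital of france", docs, k=1)
print(top)  # ['paris is the capital of france']
print(build_prompt("what is the capital of france", top))
```

In a real system, `embed` would call an embedding model and `retrieve` would query the vector database's nearest-neighbor index instead of scoring every document.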

The effectiveness and performance of any RAG system depend significantly on these two core components: the retriever and the generator. The retriever must efficiently identify and retrieve the most relevant documents, while the generator should use the retrieved information to produce responses that are coherent, relevant, and accurate. Rigorous evaluation of these components is crucial to ensure optimal performance and reliability of the RAG model before deployment.

Evaluating RAG

To evaluate a RAG system, we commonly use two types of evaluations:

- Retrieval Evaluation

- Response Evaluation

Unlike traditional machine learning techniques, where there are well-defined quantitative metrics (such as Gini, R-squared, AIC, BIC, confusion matrix, etc.), the evaluation of RAG systems is more complex. This complexity arises because the responses generated by RAG systems are unstructured text, requiring a combination of qualitative and quantitative metrics to assess their performance accurately.

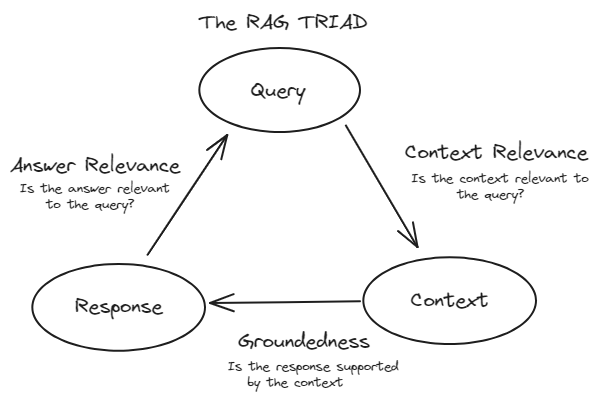

TRIAD Framework

To effectively evaluate RAG systems, we commonly follow the TRIAD framework. This framework consists of three major components:

- Context Relevance: This component evaluates the retrieval part of the RAG system, that is, how accurately relevant documents are retrieved from the large dataset. Metrics like precision, recall, MRR, and MAP are used here.

- Faithfulness (Groundedness): This component falls under response evaluation. It checks whether the generated response is factually accurate and grounded in the retrieved documents. Methods such as human evaluation, automated fact-checking tools, and consistency checks are used to assess faithfulness.

- Answer Relevance: This is also part of response evaluation. It measures how well the generated response addresses the user’s query and provides useful information. Metrics like BLEU, ROUGE, METEOR, and embedding-based evaluations are used.

Retrieval Evaluation

Retrieval evaluations are applied to the retriever component of a RAG system, which typically uses a vector database. These evaluations measure how effectively the retriever identifies and ranks relevant documents in response to a user query. The primary goal of retrieval evaluations is to assess context relevance: how well the retrieved documents align with the user’s query. This ensures that the context provided to the generation component is pertinent and accurate.

Each of the following metrics offers a unique perspective on the quality of the retrieved documents and contributes to a comprehensive understanding of context relevance.

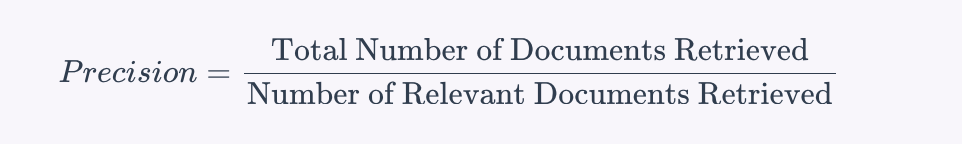

Precision

Precision measures the accuracy of the retrieved documents. It is the ratio of the number of relevant documents retrieved to the total number of documents retrieved. It is defined as:

$$\text{Precision} = \frac{|\{\text{relevant documents}\} \cap \{\text{retrieved documents}\}|}{|\{\text{retrieved documents}\}|}$$

This means that precision evaluates how many of the documents retrieved by the system are actually relevant to the user’s query. For example, if the retriever retrieves 10 documents and 7 of them are relevant, the precision is 0.7, or 70%.

Precision answers the question: “Out of all the documents that the system retrieved, how many were actually relevant?”

Precision is especially important when presenting irrelevant information can have negative consequences. For example, high precision in a medical information retrieval system is crucial because providing irrelevant medical documents could lead to misinformation and potentially harmful outcomes.

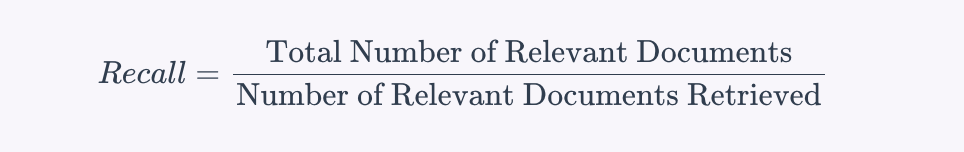

Recall

Recall measures the comprehensiveness of the retrieved documents. It is the ratio of the number of relevant documents retrieved to the total number of relevant documents in the database for the given query. It is defined as:

$$\text{Recall} = \frac{|\{\text{relevant documents}\} \cap \{\text{retrieved documents}\}|}{|\{\text{relevant documents}\}|}$$

This means that recall evaluates how many of the relevant documents that exist in the database were successfully retrieved by the system.

Recall evaluates: “Out of all the relevant documents that exist in the database, how many did the system manage to retrieve?”

Recall is critical in situations where missing relevant information can be costly. For instance, in a legal information retrieval system, high recall is essential because failing to retrieve a relevant legal document could lead to incomplete case research and potentially affect the outcome of legal proceedings.
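A matching sketch for recall, under the same assumptions (hypothetical string document IDs and a labeled set of relevant IDs). Unlike precision, the denominator is the number of relevant documents in the database, so recall requires knowing that full set.

```python
def recall(retrieved: list[str], relevant: set[str]) -> float:
    """Fraction of all relevant documents that appear in the retrieved list."""
    if not relevant:
        return 0.0
    hits = len(relevant.intersection(retrieved))  # duplicates in `retrieved` count once
    return hits / len(relevant)


# Hypothetical example: 8 relevant documents exist for the query, and the
# retriever returns 5 documents, 4 of which are relevant.
relevant = {"d1", "d2", "d3", "d4", "d5", "d6", "d7", "d8"}
retrieved = ["d1", "d2", "d9", "d3", "d4"]
print(recall(retrieved, relevant))  # 0.5
```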

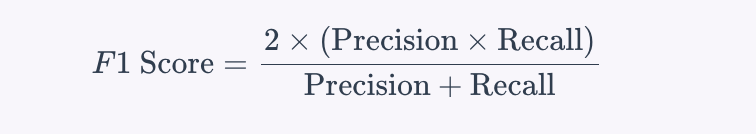

Balance Between Precision and Recall

Balancing precision and recall is often necessary, as improving one can sometimes reduce the other. The goal is to find an optimal balance that suits the specific needs of the application. This balance is sometimes quantified using the F1 score, which is the harmonic mean of precision and recall:

$$F_1 = 2 \cdot \frac{\text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}}$$
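The F1 score follows directly from the formula. A minimal sketch with illustrative values; returning 0 when both inputs are 0 is a common convention, assumed here, to avoid division by zero.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (0.0 when both are 0)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


print(round(f1_score(0.7, 0.5), 4))  # 0.5833
# The harmonic mean punishes imbalance: perfect precision with 10% recall scores low.
print(round(f1_score(1.0, 0.1), 4))  # 0.1818
```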

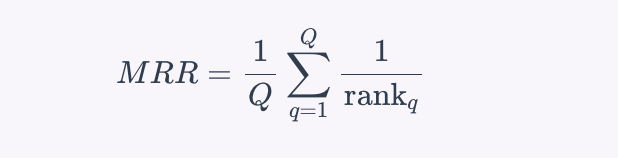

Mean Reciprocal Rank (MRR)

Mean Reciprocal Rank (MRR) is a metric that evaluates the effectiveness of the retrieval system by considering the rank position of the first relevant document. It is particularly useful when only the first relevant document is of primary interest. The reciprocal rank is the inverse of the rank at which the first relevant document is found. MRR is the average of these reciprocal ranks across multiple queries. The formula for MRR is:

$$\text{MRR} = \frac{1}{|Q|} \sum_{q=1}^{|Q|} \frac{1}{\text{rank}_q}$$

where $|Q|$ is the number of queries and $\text{rank}_q$ is the rank position of the first relevant document for the $q$-th query.

MRR evaluates “On average, how quickly is the first relevant document retrieved in response to a user query?”

For example, in a RAG-based question-answering system, MRR is crucial because it reflects how quickly the system can present the correct answer to the user. If the correct answer appears at the top of the list more frequently, the MRR value will be higher, indicating a more effective retrieval system.
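A minimal MRR sketch, assuming each query has a ranked list of hypothetical document IDs and a labeled set of relevant IDs. Queries with no relevant document in the list contribute a reciprocal rank of 0, which is a common convention but an assumption here.

```python
def mean_reciprocal_rank(ranked_lists: list[list[str]], relevant_sets: list[set[str]]) -> float:
    """Average over queries of 1/rank of the first relevant document (0 if none is found)."""
    total = 0.0
    for ranked, relevant in zip(ranked_lists, relevant_sets):
        for rank, doc_id in enumerate(ranked, start=1):
            if doc_id in relevant:
                total += 1.0 / rank
                break  # only the first relevant document counts
    return total / len(ranked_lists) if ranked_lists else 0.0


# Three hypothetical queries: first relevant hit at rank 1, at rank 3, and not found.
ranked_lists = [["a", "b"], ["c", "d", "e"], ["f", "g"]]
relevant_sets = [{"a"}, {"e"}, {"z"}]
print(round(mean_reciprocal_rank(ranked_lists, relevant_sets), 4))  # 0.4444
```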

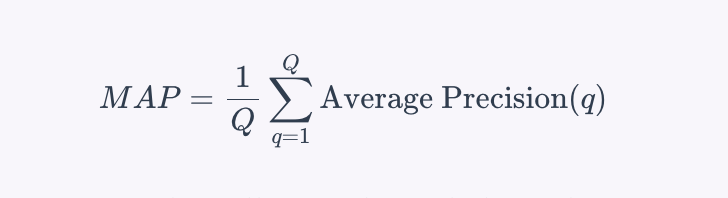

Mean Average Precision (MAP)

Mean Average Precision (MAP) is a metric that evaluates the precision of retrieval across multiple queries. It takes into account both the precision of the retrieval and the order of the retrieved documents. MAP is defined as the mean of the average precision (AP) scores for a set of queries. To calculate the average precision for a single query, precision is computed at each position in the ranked list of retrieved documents, considering only the top-K retrieved documents, and each precision value counts only if the document at that position is relevant. The formula for MAP across multiple queries is:

$$\text{MAP} = \frac{1}{|Q|} \sum_{q=1}^{|Q|} \text{AP}_q$$

where $|Q|$ is the number of queries and $\text{AP}_q$ is the average precision for query $q$.

MAP evaluates: “On average, how precise are the top-ranked documents retrieved by the system across multiple queries?”

For example, in a RAG-based search engine, MAP is crucial because it considers the precision of the retrieval at different ranks, ensuring that relevant documents appear higher in the search results. This enhances the user experience by presenting the most relevant information first.
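The sketch below computes AP@K and MAP@K. It assumes the common convention of dividing each query's sum of precision-at-relevant-positions by the total number of relevant documents for that query; some implementations divide by the number of relevant documents found in the top K instead, which gives higher scores when relevant documents are missed. Document IDs are hypothetical.

```python
def average_precision(ranked: list[str], relevant: set[str], k: int) -> float:
    """AP@k: sum of precision@i over positions i <= k holding a relevant document,
    divided by the total number of relevant documents for the query."""
    if not relevant:
        return 0.0
    hits, score = 0, 0.0
    for i, doc_id in enumerate(ranked[:k], start=1):
        if doc_id in relevant:
            hits += 1
            score += hits / i  # precision@i, counted only at relevant positions
    return score / len(relevant)


def mean_average_precision(ranked_lists: list[list[str]], relevant_sets: list[set[str]], k: int) -> float:
    """MAP@k: mean of AP@k across queries."""
    aps = [average_precision(r, rel, k) for r, rel in zip(ranked_lists, relevant_sets)]
    return sum(aps) / len(aps) if aps else 0.0


# Query 1: relevant docs at ranks 1 and 3 -> AP = (1/1 + 2/3) / 2
# Query 2: one relevant doc at rank 2     -> AP = (1/2) / 1
ranked_lists = [["a", "x", "b", "y"], ["p", "q", "r"]]
relevant_sets = [{"a", "b"}, {"q"}]
print(round(mean_average_precision(ranked_lists, relevant_sets, k=3), 4))  # 0.6667
```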

An Overview of the Retrieval Evaluations

- Precision: Quality of retrieved results

- Recall: Completeness of retrieved results

- MRR: How quickly the first relevant document is retrieved

- MAP: Comprehensive evaluation combining precision and rank of relevant documents

Response Evaluation

Response evaluations are applied to the generation component of a system. These evaluations measure how effectively the system generates responses based on the context provided by the retrieved documents. We divide response evaluations into two types:

- Faithfulness (Groundedness)

- Answer Relevance

Faithfulness (Groundedness)

Faithfulness evaluates whether the generated response is accurate and grounded in the retrieved documents. It ensures that the response does not contain hallucinations, where the system generates plausible-sounding but factually incorrect statements, or other incorrect information. This metric is crucial because it traces the generated response back to its source, ensuring the information is based on a verifiable ground truth.

To measure faithfulness, the following methods are commonly used:

- Human evaluation: Experts manually assess whether the generated responses are factually accurate and correctly referenced from the retrieved documents. This process involves checking each response against the source documents to ensure all claims are substantiated.

- Automated fact-checking tools: These tools compare the generated response against a database of verified facts to identify inaccuracies. They provide an automated way to check the validity of the information without human intervention.

- Consistency checks: These evaluate whether the model consistently provides the same factual information across different queries. This ensures that the model is reliable and does not produce contradictory information.
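None of these methods reduces to a few lines of code, but a crude automated check can illustrate the idea of tracing a response back to its sources. The sketch below is a lexical heuristic of our own, not one of the tools named above: it scores the fraction of response sentences whose content words mostly appear in the retrieved context. The stopword list and the 0.8 threshold are arbitrary illustrative choices, and a lexical check cannot recognize paraphrased support or subtle contradictions.

```python
import re

# Arbitrary illustrative stopword list (assumption).
STOPWORDS = {"the", "a", "an", "is", "are", "was", "of", "in", "on", "and", "to", "it"}


def content_words(text: str) -> set[str]:
    """Lowercased alphanumeric tokens with a few common stopwords removed."""
    return {w for w in re.findall(r"[a-z0-9]+", text.lower()) if w not in STOPWORDS}


def groundedness(response: str, contexts: list[str], threshold: float = 0.8) -> float:
    """Fraction of response sentences whose content words are (mostly) found in the context.

    A crude lexical proxy for faithfulness; it is not a fact-checker.
    """
    context_vocab = set().union(*(content_words(c) for c in contexts))
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", response.strip()) if s]
    if not sentences:
        return 0.0
    supported = 0
    for sentence in sentences:
        words = content_words(sentence)
        if words and len(words & context_vocab) / len(words) >= threshold:
            supported += 1
    return supported / len(sentences)


contexts = ["The Eiffel Tower is in Paris. It was completed in 1889."]
response = "The Eiffel Tower is in Paris. It was designed by Leonardo da Vinci."
print(groundedness(response, contexts))  # 0.5
```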

Answer Relevance

Answer relevance measures how well the generated response addresses the user’s query and provides useful information.

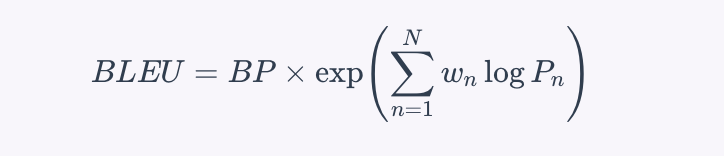

BLEU (Bilingual Evaluation Understudy)

BLEU measures the overlap between the generated response and a set of reference responses, focusing on the precision of n-grams (contiguous sequences of n words). The formula for the BLEU score is:

$$\text{BLEU} = BP \cdot \exp\left(\sum_{n=1}^{N} w_n \log P_n\right)$$

where $BP$ is the brevity penalty that penalizes short responses ($BP = 1$ if the response length $c$ exceeds the reference length $r$, and $e^{1 - r/c}$ otherwise), $P_n$ is the precision of n-grams, and $w_n$ are the weights for each n-gram level. BLEU quantitatively measures how closely the generated response matches the reference responses.
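To show how the pieces of the formula fit together, here is an unsmoothed, sentence-level BLEU sketch with uniform weights $w_n = 1/N$ and whitespace tokenization (both assumptions). Candidate n-gram counts are clipped by their maximum count in any single reference, which is how $P_n$ is defined in the original BLEU. For reported results, an established implementation such as SacreBLEU is preferable, since tokenization and smoothing choices change the score.

```python
import math
from collections import Counter


def ngrams(tokens: list[str], n: int) -> Counter:
    """Multiset of n-grams (as tuples) in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def bleu(candidate: str, references: list[str], max_n: int = 4) -> float:
    """Unsmoothed sentence-level BLEU with uniform weights w_n = 1/max_n."""
    cand = candidate.split()
    refs = [r.split() for r in references]
    log_sum = 0.0
    for n in range(1, max_n + 1):
        cand_counts = ngrams(cand, n)
        # Clip each candidate n-gram count by its maximum count in any single reference.
        max_ref = Counter()
        for ref in refs:
            max_ref |= ngrams(ref, n)  # Counter union keeps the maximum count
        clipped = sum(min(c, max_ref[g]) for g, c in cand_counts.items())
        total = sum(cand_counts.values())
        if clipped == 0 or total == 0:
            return 0.0  # log(0) is undefined, so unsmoothed BLEU is 0 here
        log_sum += (1.0 / max_n) * math.log(clipped / total)
    # Brevity penalty against the reference length closest to the candidate length.
    c = len(cand)
    r = min((len(ref) for ref in refs), key=lambda length: (abs(length - c), length))
    bp = 1.0 if c > r else math.exp(1 - r / c)
    return bp * math.exp(log_sum)


print(round(bleu("the cat sat on the mat", ["the cat is on the mat"], max_n=2), 4))  # 0.7071
```

With `max_n=2`, this example has unigram precision 5/6, bigram precision 3/5, and no brevity penalty, so the score is the geometric mean $\sqrt{5/6 \cdot 3/5} \approx 0.7071$.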

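ROUGE (Recall-Oriented Understudy for Gisting Evaluation)

ROUGE measures the overlap of n-grams, word sequences, and word pairs between the generated and reference responses, considering both recall and precision. The most common variant, ROUGE-N, measures the overlap of n-grams between the generated and reference responses. The formula for ROUGE-N is:

$$\text{ROUGE-N} = \frac{\sum_{S \in \text{References}} \sum_{\text{gram}_n \in S} \text{Count}_{\text{match}}(\text{gram}_n)}{\sum_{S \in \text{References}} \sum_{\text{gram}_n \in S} \text{Count}(\text{gram}_n)}$$

where $\text{Count}_{\text{match}}(\text{gram}_n)$ is the number of times an n-gram co-occurs in the generated response and a reference. As written, this is the recall form; implementations commonly also report precision (dividing by the n-gram count of the generated response) and the F1 of the two, which together give a balanced measure of how much relevant content from the reference is present in the generated response.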
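A minimal ROUGE-N sketch for a single reference with whitespace tokenization (both assumptions). The `recall` value corresponds to the formula above; `precision` and `f1` are the variants that implementations commonly report alongside it. Established packages such as `rouge-score` handle tokenization details that this sketch ignores.

```python
from collections import Counter


def ngram_counts(tokens: list[str], n: int) -> Counter:
    """Multiset of n-grams (as tuples) in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def rouge_n(candidate: str, reference: str, n: int = 1) -> dict[str, float]:
    """ROUGE-N precision, recall, and F1 from clipped n-gram overlap (single reference)."""
    cand = ngram_counts(candidate.split(), n)
    ref = ngram_counts(reference.split(), n)
    overlap = sum((cand & ref).values())  # & keeps the minimum count of each shared n-gram
    recall = overlap / sum(ref.values()) if ref else 0.0
    precision = overlap / sum(cand.values()) if cand else 0.0
    f1 = 2 * precision * recall / (precision + recall) if overlap else 0.0
    return {"precision": precision, "recall": recall, "f1": f1}


# Bigrams shared: "the cat", "on the", "the mat" -> 3 of 5 on each side.
print(rouge_n("the cat sat on the mat", "the cat is on the mat", n=2))
```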

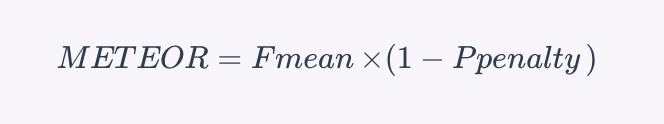

METEOR (Metric for Evaluation of Translation with Explicit ORdering)

METEOR considers synonymy, stemming, and word order to evaluate the similarity between the generated response and the reference responses. The formula for the METEOR score is:

$$\text{METEOR} = F_{\text{mean}} \cdot (1 - \text{Penalty})$$

where $F_{\text{mean}}$ is a recall-weighted harmonic mean of unigram precision and recall, and $\text{Penalty}$ is a penalty for incorrect word order (fragmentation of the matched words). METEOR provides a more nuanced assessment than BLEU or ROUGE by considering synonyms and stemming.
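A simplified METEOR sketch, assuming exact word matches only (no stemming or synonym matching) and a greedy left-to-right alignment; the original metric also matches stems and WordNet synonyms and prefers alignments with fewer crossings. It uses the parameterization from the original METEOR formulation: $F_{\text{mean}} = \frac{10PR}{R + 9P}$ and $\text{Penalty} = 0.5 \cdot (\text{chunks}/m)^3$, where $m$ is the number of matched unigrams and a chunk is a run of matches that are adjacent in both the response and the reference.

```python
def simple_meteor(candidate: str, reference: str) -> float:
    """Exact-match-only METEOR sketch (no stemming or synonyms), single reference."""
    cand, ref = candidate.split(), reference.split()
    used = [False] * len(ref)
    align = []  # align[i] = matched reference position for cand[i], or None
    for tok in cand:
        # Greedy: map to the first unused reference position with the same word.
        pos = next((j for j, r in enumerate(ref) if r == tok and not used[j]), None)
        if pos is not None:
            used[pos] = True
        align.append(pos)
    m = sum(p is not None for p in align)
    if m == 0:
        return 0.0
    precision, recall = m / len(cand), m / len(ref)
    f_mean = 10 * precision * recall / (recall + 9 * precision)
    # A new chunk starts at each match that does not continue the previous match
    # in both the candidate and the reference.
    chunks = sum(
        1
        for i, p in enumerate(align)
        if p is not None and not (i > 0 and align[i - 1] is not None and align[i - 1] == p - 1)
    )
    penalty = 0.5 * (chunks / m) ** 3
    return f_mean * (1 - penalty)


print(round(simple_meteor("the cat sat on the mat", "the cat is on the mat"), 4))
```

For this example, 5 of 6 words match in 2 chunks (“the cat” and “on the mat”), so the penalty is $0.5 \cdot (2/5)^3 = 0.032$.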

Embedding-Based Evaluation

This method uses vector representations of words (embeddings) to measure the semantic similarity between the generated response and the reference responses. Techniques such as cosine similarity are used to compare the embeddings, providing an evaluation based on the meaning of the words rather than their exact matches.
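A minimal cosine-similarity sketch. The three-dimensional vectors are hypothetical stand-ins for the embeddings that a real embedding model would produce for the generated and reference responses.

```python
import math


def cosine_similarity(u: list[float], v: list[float]) -> float:
    """Cosine of the angle between two embedding vectors (1.0 = same direction)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0 or norm_v == 0:
        return 0.0
    return dot / (norm_u * norm_v)


# Hypothetical embeddings; a real model would produce hundreds of dimensions.
generated = [0.2, 0.8, 0.1]
reference = [0.25, 0.75, 0.05]
unrelated = [0.9, -0.1, 0.4]
print(round(cosine_similarity(generated, reference), 3))  # high: similar meaning
print(round(cosine_similarity(generated, unrelated), 3))  # low: different meaning
```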

Tips and Tricks to Optimize RAG Systems

There are some general tips and tricks that you can use to optimize your RAG systems:

Use Re-Ranking Techniques

Re-ranking is one of the most widely used techniques for optimizing the performance of a RAG system. It takes the initial set of retrieved documents and re-orders them so that the most relevant ones come first. Techniques like cross-encoders and BERT-based re-rankers assess document relevance more accurately than the initial similarity search. This ensures that the documents passed to the generator are contextually rich and highly relevant, leading to better responses.
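A minimal re-ranking sketch. The `rerank` function accepts any pairwise scoring function; a cross-encoder would score each (query, document) pair jointly, for example through the `predict` method of the sentence-transformers `CrossEncoder` class. To keep the example self-contained, `overlap_score` is a hypothetical toy scorer based on term overlap, not a real re-ranker.

```python
from typing import Callable


def rerank(query: str, docs: list[str], score: Callable[[str, str], float], top_n: int = 3) -> list[str]:
    """Re-score each (query, document) pair and keep the top_n documents."""
    scored = [(score(query, doc), doc) for doc in docs]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in scored[:top_n]]


def overlap_score(query: str, doc: str) -> float:
    """Hypothetical stand-in scorer: fraction of query terms that appear in the document."""
    q_terms = set(query.lower().split())
    d_terms = set(doc.lower().split())
    return len(q_terms & d_terms) / len(q_terms) if q_terms else 0.0


candidates = [
    "vector databases store embeddings",
    "rag systems combine retrieval with generation",
    "re-ranking improves rag retrieval quality",
]
print(rerank("how does re-ranking improve rag retrieval", candidates, overlap_score, top_n=2))
# ['re-ranking improves rag retrieval quality', 'rag systems combine retrieval with generation']
```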

Tune Hyperparameters

Regularly tuning hyperparameters like chunk size, overlap, and the number of top retrieved documents can optimize the performance of the retrieval component. Experimenting with different settings and evaluating their impact on retrieval quality can lead to better overall performance of the RAG system.
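A minimal grid-search sketch over chunk size, overlap, and top-K. The `evaluate` callback is hypothetical: in practice it would rebuild the index with the given settings and return a retrieval metric (such as MAP or recall) on a labeled query set. `fake_evaluate` is a toy stand-in so the example runs.

```python
from itertools import product


def grid_search(evaluate, chunk_sizes, overlaps, top_ks):
    """Try every (chunk_size, overlap, top_k) combination; return the best config and score."""
    best_config, best_score = None, float("-inf")
    for chunk_size, overlap, top_k in product(chunk_sizes, overlaps, top_ks):
        if overlap >= chunk_size:
            continue  # overlap must be smaller than the chunk itself
        score = evaluate(chunk_size=chunk_size, overlap=overlap, top_k=top_k)
        if score > best_score:
            best_config, best_score = (chunk_size, overlap, top_k), score
    return best_config, best_score


def fake_evaluate(chunk_size, overlap, top_k):
    """Toy evaluator (hypothetical) that simply peaks at chunk_size=512, overlap=64, top_k=5."""
    return -abs(chunk_size - 512) / 512 - abs(overlap - 64) / 64 - abs(top_k - 5) / 5


print(grid_search(fake_evaluate, [256, 512, 1024], [0, 64, 128], [3, 5, 10]))
```

An exhaustive grid is the simplest choice; with many parameters or a slow `evaluate`, random search over the same ranges is a common cheaper alternative.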

Embedding Models

Selecting an appropriate embedding model is crucial for optimizing a RAG system’s retrieval component. The right model, whether general-purpose or domain-specific, can significantly enhance the system’s ability to accurately represent and retrieve relevant information. By choosing a model that aligns with your specific use case, you can improve the precision of similarity searches and the overall performance of your RAG system. Consider factors such as the model’s training data, dimensionality, and performance metrics when making your selection.

Chunking Strategies

Customizing chunk sizes and overlaps can greatly improve the performance of RAG systems by capturing more relevant information for the LLM. For example, LangChain’s semantic chunking splits documents based on semantics, ensuring each chunk is contextually coherent. Adaptive chunking strategies that adjust based on document type (such as PDFs, tables, and images) can help retain more contextually appropriate information.
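The sketch below shows what chunk size and overlap control, using plain fixed-size character windows. It is not LangChain’s semantic chunking or an adaptive strategy, just the simplest baseline those approaches improve on; `chunk_text` is a hypothetical helper.

```python
def chunk_text(text: str, chunk_size: int, overlap: int) -> list[str]:
    """Split text into fixed-size character windows that share `overlap` characters."""
    if chunk_size <= 0 or not 0 <= overlap < chunk_size:
        raise ValueError("require chunk_size > 0 and 0 <= overlap < chunk_size")
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # this window already reaches the end of the text
    return chunks


print(chunk_text("abcdefghij", chunk_size=4, overlap=1))  # ['abcd', 'defg', 'ghij']
```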

Role of Vector Databases in RAG Systems

Vector databases are integral to the performance of RAG systems. When a user submits a query, the RAG system’s retriever component leverages the vector database to find the most relevant documents based on vector similarity. This process is crucial for providing the language model with the right context to generate accurate and relevant responses. A robust vector database ensures fast and precise retrieval, directly influencing the overall effectiveness and responsiveness of the RAG system.

Conclusion

Developing a RAG system is not inherently difficult, but evaluating it is crucial for measuring performance, enabling continuous improvement, aligning with business objectives, balancing costs, ensuring reliability, and adapting to new techniques. This comprehensive evaluation process helps in building a robust, efficient, and user-centric RAG system.

By addressing these critical aspects, vector databases serve as the foundation for high-performing RAG systems, enabling them to deliver accurate, relevant, and timely responses while efficiently managing large-scale, complex data.