Are you ready to get started with cloud-native observability with telemetry pipelines?

This article is part of a series exploring a workshop guiding you through the open source project Fluent Bit, what it is, a basic installation, and setting up the first telemetry pipeline project. Learn how to manage your cloud-native data from source to destination using the telemetry pipeline phases covering collection, aggregation, transformation, and forwarding from any source to any destination.

In the previous article in this series, we looked at how to enable Fluent Bit features that help avoid the telemetry data loss we saw when encountering backpressure. In this next article, we look at part one of integrating Fluent Bit with OpenTelemetry for use cases where this connectivity in your telemetry data infrastructure is essential.

You can find more details in the accompanying workshop lab.

There are many reasons why an organization might want to pass their telemetry pipeline output onwards to OpenTelemetry (OTel), specifically through an OpenTelemetry Collector. To facilitate this integration, Fluent Bit added support for converting its telemetry data into the OTel format as of the release of version 3.1.

In this article, we’ll explore what Fluent Bit offers to convert pipeline data into the correct format for sending onwards to OTel.

Solution Architecture

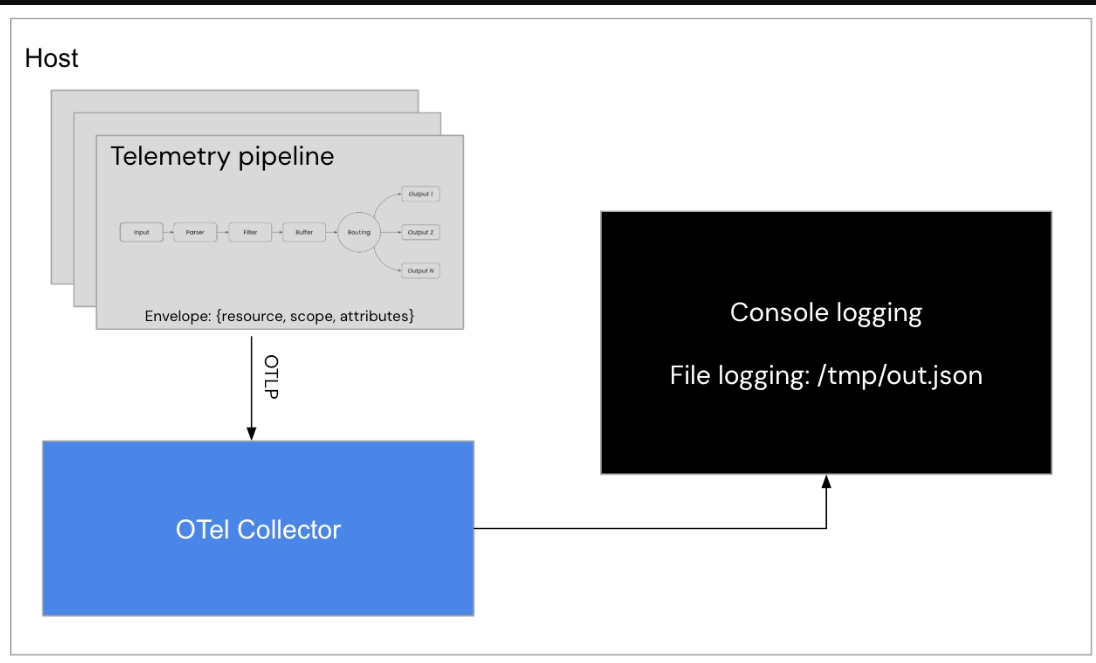

Earlier than we dive into the technical answer, let’s look at the structure of what we’re doing and the elements concerned. The next diagram is an architectural overview of what we’re constructing. We are able to see the pipeline amassing log occasions and processing them into an OTel Envelope to fulfill the OTel schema (useful resource, scope, attributes), pushing to an OTel Collector, and at last, to each the collectors’ console output and onwards to a separate log file:

The solution will be implemented in a Fluent Bit configuration file using the YAML format. Initially, we will set up each phase of this solution one step at a time, testing it to ensure it’s working as expected.

Initial Pipeline Configuration

We begin the configuration of our telemetry pipeline in the input phase with a simple dummy plugin generating sample log events, followed by the output section of our configuration file workshop-fb.yaml, as follows:

    # This file is our workshop Fluent Bit configuration.
    #
    service:
      flush: 1
      log_level: info

    pipeline:

      # This entry generates a success message for the workshop.
      inputs:
        - name: dummy
          dummy: '{"service" : "backend", "log_entry" : "Generating a 200 success code."}'

      # This entry directs all tags (it matches any we encounter)
      # to print to standard output, which is our console.
      #
      outputs:
        - name: stdout
          match: '*'
          format: json_lines

(Note: All examples below are shown running this solution using container images and feature Podman as the container tooling. Docker can also be used, but the specific differences are left for the reader to solve.)

Let’s now try testing our configuration by running it using a container image. The first thing needed is to open a new file called Buildfile-fb in our favorite editor. It will be used to build a new container image and insert our configuration. Note that this file needs to be in the same directory as our configuration file; otherwise, adjust the file path names:

    FROM cr.fluentbit.io/fluent/fluent-bit:3.1.4

    COPY ./workshop-fb.yaml /fluent-bit/etc/workshop-fb.yaml

    CMD [ "fluent-bit", "-c", "/fluent-bit/etc/workshop-fb.yaml"]

Now we’ll build a new container image, naming it with a version tag, as follows using the Buildfile-fb and assuming you are in the same directory:

    $ podman build -t workshop-fb:v12 -f Buildfile-fb

    STEP 1/3: FROM cr.fluentbit.io/fluent/fluent-bit:3.1.4
    STEP 2/3: COPY ./workshop-fb.yaml /fluent-bit/etc/workshop-fb.yaml
    --> b4ed3356a842
    STEP 3/3: CMD [ "fluent-bit", "-c", "/fluent-bit/etc/workshop-fb.yaml"]
    COMMIT workshop-fb:v12
    --> bcd69f8a85a0
    Successfully tagged localhost/workshop-fb:v12
    bcd69f8a85a024ac39604013bdf847131ddb06b1827aae91812b57479009e79a

Run the initial pipeline using this container command and note the following output. This runs until exiting using CTRL+C:

    $ podman run --rm workshop-fb:v12

    ...
    [2024/08/01 15:41:55] [ info] [input:dummy:dummy.0] initializing
    [2024/08/01 15:41:55] [ info] [input:dummy:dummy.0] storage_strategy='memory' (memory only)
    [2024/08/01 15:41:55] [ info] [output:stdout:stdout.0] worker #0 started
    [2024/08/01 15:41:55] [ info] [sp] stream processor started
    {"date":1722519715.919221,"service":"backend","log_entry":"Generating a 200 success code."}
    {"date":1722519716.252186,"service":"backend","log_entry":"Generating a 200 success code."}
    {"date":1722519716.583481,"service":"backend","log_entry":"Generating a 200 success code."}
    {"date":1722519716.917044,"service":"backend","log_entry":"Generating a 200 success code."}
    {"date":1722519717.250669,"service":"backend","log_entry":"Generating a 200 success code."}
    {"date":1722519717.584412,"service":"backend","log_entry":"Generating a 200 success code."}
    ...
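The json_lines records in this output are straightforward to consume downstream. As a minimal sketch, assuming the container output has been captured as text, the following Python reads each JSON line and converts the epoch `date` field into a UTC timestamp. The `parse_events` helper name is hypothetical and not part of Fluent Bit:

```python
import json
from datetime import datetime, timezone

# Two lines copied from the container output shown in this article.
SAMPLE_OUTPUT = """\
{"date":1722519715.919221,"service":"backend","log_entry":"Generating a 200 success code."}
{"date":1722519716.252186,"service":"backend","log_entry":"Generating a 200 success code."}
"""


def parse_events(text):
    """Parse json_lines text into dicts, adding a UTC datetime under 'timestamp'.

    Hypothetical helper for illustration only.
    """
    events = []
    for line in text.splitlines():
        line = line.strip()
        # Skip blank lines and Fluent Bit's own bracketed log lines.
        if not line.startswith("{"):
            continue
        record = json.loads(line)
        record["timestamp"] = datetime.fromtimestamp(record["date"], tz=timezone.utc)
        events.append(record)
    return events


if __name__ == "__main__":
    for event in parse_events(SAMPLE_OUTPUT):
        print(event["timestamp"].isoformat(), event["service"], event["log_entry"])
```

The Fluent Bit log lines are printed in the container's local time, so the UTC timestamps produced here may be offset from them by the time zone difference.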

So far, we’re ingesting logs, but just passing them through the pipeline to output in JSON format. Within the pipeline, after ingesting log events, we need to process them into an OTel-compatible schema before we can push them onward to an OTel Collector. Fluent Bit v3.1+ offers a processor to put our logs into an OTel envelope.
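To make the target shape more concrete, here is a minimal Python sketch that arranges one of our log events into the resource, scope, and log record hierarchy used by the OTLP JSON encoding for logs. It only illustrates the schema and is not how Fluent Bit implements its processor. The `to_otlp_logs` helper is hypothetical, and mapping `log_entry` to the body and `service` to a `service.name` resource attribute is an assumption made for this example:

```python
import json


def to_otlp_logs(event):
    """Wrap a flat log event in an OTLP-style resource/scope/logRecords hierarchy.

    Hypothetical helper; the field mapping is an assumption for illustration.
    """
    # OTLP JSON carries nanosecond timestamps as decimal strings.
    time_unix_nano = str(int(event["date"] * 1_000_000_000))
    return {
        "resourceLogs": [
            {
                # Resource: describes the entity producing the telemetry.
                "resource": {
                    "attributes": [
                        {"key": "service.name", "value": {"stringValue": event["service"]}}
                    ]
                },
                "scopeLogs": [
                    {
                        # Scope: identifies the instrumentation source (left empty here).
                        "scope": {},
                        "logRecords": [
                            {
                                "timeUnixNano": time_unix_nano,
                                "body": {"stringValue": event["log_entry"]},
                                "attributes": [],
                            }
                        ],
                    }
                ],
            }
        ]
    }


if __name__ == "__main__":
    sample = {
        "date": 1722519715.919221,
        "service": "backend",
        "log_entry": "Generating a 200 success code.",
    }
    print(json.dumps(to_otlp_logs(sample), indent=2))
```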

Processing Logs for OTel

The OTel Envelope is how we’re going to process log events into the correct format (resource, scope, attributes). Let’s try adding this to our workshop-fb.yaml file and explore the changes to our log events. The configuration of our processor section needs an entry for logs. Fluent Bit provides a simple processor called opentelemetry_envelope, which is added as follows within the inputs section:

    # This file is our workshop Fluent Bit configuration.
    #
    service:
      flush: 1
      log_level: info

    pipeline:

      # This entry generates a success message for the workshop.
      inputs:
        - name: dummy
          dummy: '{"service" : "backend", "log_entry" : "Generating a 200 success code."}'

          processors:
            logs:
              - name: opentelemetry_envelope

      # This entry directs all tags (it matches any we encounter)
      # to print to standard output, which is our console.
      #
      outputs:
        - name: stdout
          match: '*'
          format: json_lines

Let’s now try testing our configuration by running it using a container image. Build a new container image, naming it with a version tag as follows using the Buildfile-fb, and assuming you are in the same directory:

    $ podman build -t workshop-fb:v13 -f Buildfile-fb

    STEP 1/3: FROM cr.fluentbit.io/fluent/fluent-bit:3.1.4
    STEP 2/3: COPY ./workshop-fb.yaml /fluent-bit/etc/workshop-fb.yaml
    --> b4ed3356a842
    STEP 3/3: CMD [ "fluent-bit", "-c", "/fluent-bit/etc/workshop-fb.yaml"]
    COMMIT workshop-fb:v13
    --> bcd69f8a85a0
    Successfully tagged localhost/workshop-fb:v13
    bcd69f8a85a024ac39604013bdf847131ddb06b1827aae91812b57479009euyt

Run the pipeline using this container command and note the following output, in which each log event now appears between additional records carrying the resource and scope fields. This runs until exiting using CTRL+C:

    $ podman run --rm workshop-fb:v13

    ...
    [2024/08/01 16:26:52] [ info] [input:dummy:dummy.0] initializing
    [2024/08/01 16:26:52] [ info] [input:dummy:dummy.0] storage_strategy='memory' (memory only)
    [2024/08/01 16:26:52] [ info] [output:stdout:stdout.0] worker #0 started
    [2024/08/01 16:26:52] [ info] [sp] stream processor started
    {"date":4294967295.0,"resource":{},"scope":{}}
    {"date":1722522413.113665,"service":"backend","log_entry":"Generating a 200 success code."}
    {"date":4294967294.0}
    {"date":4294967295.0,"resource":{},"scope":{}}
    {"date":1722522414.113558,"service":"backend","log_entry":"Generating a 200 success code."}
    {"date":4294967294.0}
    {"date":4294967295.0,"resource":{},"scope":{}}
    {"date":1722522415.11368,"service":"backend","log_entry":"Generating a 200 success code."}
    ...
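In this output, each log event sits between a record carrying `resource` and `scope` and a record holding only a date. The following minimal Python sketch regroups the lines on that basis. It assumes the two large `date` values (4294967295 and 4294967294) act as start and end markers of an envelope group, which is an interpretation of the output shown here rather than documented behavior. The `group_envelopes` helper is hypothetical:

```python
import json

# Marker values as they appear in the output above; treating them as
# group start/end markers is an assumption based on that output.
GROUP_START = 4294967295.0
GROUP_END = 4294967294.0

SAMPLE_OUTPUT = """\
{"date":4294967295.0,"resource":{},"scope":{}}
{"date":1722522413.113665,"service":"backend","log_entry":"Generating a 200 success code."}
{"date":4294967294.0}
{"date":4294967295.0,"resource":{},"scope":{}}
{"date":1722522414.113558,"service":"backend","log_entry":"Generating a 200 success code."}
{"date":4294967294.0}
"""


def group_envelopes(text):
    """Collect log records into groups delimited by the assumed marker records.

    Hypothetical helper. Records outside a group, and a trailing group with
    no end marker, are ignored.
    """
    groups = []
    current = None
    for line in text.splitlines():
        line = line.strip()
        # Skip blank lines and Fluent Bit's own bracketed log lines.
        if not line.startswith("{"):
            continue
        record = json.loads(line)
        if record["date"] == GROUP_START:
            current = {
                "resource": record.get("resource", {}),
                "scope": record.get("scope", {}),
                "records": [],
            }
        elif record["date"] == GROUP_END:
            if current is not None:
                groups.append(current)
                current = None
        elif current is not None:
            current["records"].append(record)
    return groups


if __name__ == "__main__":
    for index, group in enumerate(group_envelopes(SAMPLE_OUTPUT)):
        print(index, group["resource"], group["scope"], len(group["records"]))
```

Both `resource` and `scope` are empty in this run because the configuration so far only adds the envelope and does not populate it.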

The work up to now has been focused on our telemetry pipeline with Fluent Bit. We configured log event ingestion and processing into an OTel Envelope, and it is ready to be pushed onward to the next component in the architecture.

The next step will be pushing our telemetry data to an OTel Collector. This completes the first part of the solution for our use case in this article. Be sure to explore this hands-on experience with the accompanying workshop lab linked earlier in the article.

What’s Next?

This article walked us through integrating Fluent Bit with OpenTelemetry up to the point where we have transformed our log data into the OTel Envelope format. In the next article, we’ll explore part two, where we push the telemetry data from Fluent Bit to OpenTelemetry, completing this integration solution.

Stay tuned for more hands-on material to help you with your cloud-native observability journey.