In the information age, dealing with large PDFs is an everyday task. More often than not, I've found myself drowning in a sea of text, struggling to find the information I needed and reading page after page. But what if I could ask questions about the PDF and get back not only the relevant information but also the page contents?

That's where the Retrieval-Augmented Generation (RAG) technique comes into play. By combining retrieval with a Large Language Model (LLM), I've created a locally hosted application that lets you chat with your PDFs, ask questions, and receive all the necessary context.

Let me walk you through the entire process of building this kind of application!

What Is Retrieval-Augmented Generation (RAG)?

Retrieval-Augmented Generation, or RAG, is a method designed to improve the performance of an LLM by incorporating additional information on a given topic. This information reduces uncertainty and provides more context, helping the model answer questions better.

When building a basic RAG system, there are two main components to focus on: Data Indexing, and Data Retrieval and Generation. Data Indexing enables the system to store documents and search them whenever needed. Data Retrieval and Generation is where the indexed documents are queried, the required data is pulled out, and answers are generated from that data.

Data Indexing

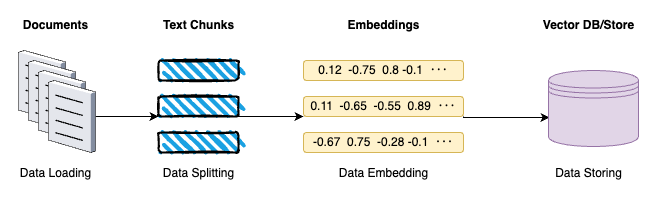

Data Indexing comprises four key stages:

- Data loading: This initial stage involves ingesting PDFs, audio files, videos, and so on into a unified format for the subsequent stages.

- Data splitting: The next step is to divide the content into manageable segments, splitting the text into coherent sections or chunks that retain context and meaning.

- Data embedding: In this stage, the text chunks are transformed into numerical vectors. This transformation is done with embedding techniques that capture the semantic essence of the content.

- Data storing: The last step is storing the generated embeddings, usually in a vector store.
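To make the four stages concrete, here is a minimal, dependency-free sketch of an indexing pipeline. It is only an illustration, not what the application uses: the function names, the 64-dimension hashed bag-of-words "embedding", and the two sample pages are all assumptions made up for this example. A real system would use a trained embedding model and a proper vector store.

```python
import hashlib
import math

DIM = 64  # toy embedding size (an arbitrary choice for this sketch)


def load(raw_pages):
    """Loading: normalise raw page strings into (page_number, text) records."""
    return [(i, text.strip()) for i, text in enumerate(raw_pages)]


def split(records, chunk_size=40):
    """Splitting: cut each page into fixed-size character chunks."""
    chunks = []
    for page, text in records:
        for start in range(0, len(text), chunk_size):
            chunks.append({"page": page, "text": text[start:start + chunk_size]})
    return chunks


def embed(text):
    """Embedding: hash each word into one of DIM buckets, then L2-normalise."""
    vec = [0.0] * DIM
    for word in text.lower().split():
        bucket = int(hashlib.md5(word.encode()).hexdigest(), 16) % DIM
        vec[bucket] += 1.0
    norm = math.sqrt(sum(v * v for v in vec)) or 1.0
    return [v / norm for v in vec]


def build_index(raw_pages):
    """Storing: keep each chunk next to its vector in a plain list."""
    return [(embed(c["text"]), c) for c in split(load(raw_pages))]


# Made-up sample pages, just to exercise the pipeline.
index = build_index(["Data lakes store raw data.", "Warehouses store curated tables."])
print(len(index), "chunks indexed")
```

Keeping the page number next to each chunk is what later makes it possible to tell the user where an answer came from.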

Data Retrieval and Generation

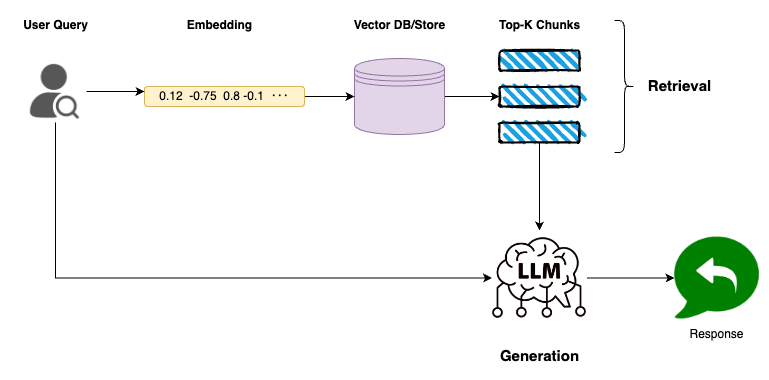

Retrieval

- Embedding the query: Transforming the user's query into an embedding so it can be compared for similarity with the document embeddings.

- Searching the vector store: The vector store contains the vectors of the document chunks. By comparing the query embedding with the stored ones, the system determines which chunks are most relevant to the query. This comparison is often done by computing cosine similarity or another similarity metric.

- Selecting the top-k chunks: The system takes the k chunks closest to the query embedding, based on the similarity scores obtained.
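The retrieval steps above can be sketched in a few lines of plain Python. This is a brute-force illustration under simple assumptions: the helper names `cosine_similarity` and `top_k` and the three-dimensional toy vectors are invented for the example, and a library such as FAISS does the same job far more efficiently.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k(query_vec, store, k=3):
    """Score every stored vector against the query and keep the k best."""
    scored = [(cosine_similarity(query_vec, vec), chunk) for vec, chunk in store]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:k]


# Toy vector store: (embedding, chunk text) pairs with made-up values.
store = [
    ([1.0, 0.0, 0.0], "chunk about pricing"),
    ([0.0, 1.0, 0.0], "chunk about security"),
    ([0.7, 0.7, 0.0], "chunk about pricing and security"),
]

results = top_k([1.0, 0.1, 0.0], store, k=2)
for score, chunk in results:
    print(f"{score:.3f}  {chunk}")
```

Because the query vector points mostly along the first axis, the two chunks that mention pricing rank ahead of the one that is only about security.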

Generation

- Combining context and query: The top-k chunks provide the necessary context related to the query. When combined with the user's original question, the LLM receives a comprehensive input that is used to generate the output.
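As a rough sketch of this step, the retrieved chunks can simply be concatenated and placed in a prompt next to the question. The function name `build_prompt`, the prompt wording, and the sample chunks are assumptions for illustration only.

```python
def build_prompt(question, chunks):
    """Join the retrieved chunks into one context block and add the question."""
    context = "\n\n".join(chunks)
    return (
        "Use the following pieces of information to answer the user's question.\n"
        f"Context: {context}\n"
        f"Question: {question}\n"
        "Helpful answer:"
    )


# Made-up chunks standing in for the top-k retrieval results.
prompt_text = build_prompt(
    "What is a data lake?",
    ["A data lake stores raw data.", "It keeps data in its native format."],
)
print(prompt_text)
```

The resulting string is what gets sent to the LLM, so the model answers from the supplied context rather than from memory alone.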

Now that we have more context, let's jump into the action!

RAG for PDF Documents

Prerequisites

Everything is described in this GitHub repository. There's also a Dockerfile to test the full application. I've used the following libraries:

- LangChain: A framework for developing applications using Large Language Models (LLMs). It provides the tools and abstractions needed to manage and coordinate LLMs in an application.

- PyPDF: Used for loading and processing PDF documents. While PyMuPDF is known for its speed, I faced several compatibility issues with it when setting up the Docker environment.

- FAISS: Short for Facebook AI Similarity Search, a library for fast similarity search and clustering of dense vectors. FAISS is well suited to fast nearest-neighbor search, which makes it a good fit for vector embeddings such as those of document chunks. I decided to use it instead of a vector database for simplicity.

- Streamlit: Used for building the user interface of the application. Streamlit allows rapid development of interactive web applications, making it an excellent choice for creating a seamless user experience.

Data Indexing

- Load the PDF document.

from langchain_community.document_loaders import PyPDFLoader

loader = PyPDFLoader(pdf_docs)
pdf_data = loader.load()

- Split it into chunks. I've used a chunk size of 1000 characters with an overlap of 150 characters.

from langchain.text_splitter import CharacterTextSplitter

text_splitter = CharacterTextSplitter(
    separator="\n",
    chunk_size=1000,
    chunk_overlap=150,
    length_function=len
)

docs = text_splitter.split_documents(pdf_data)
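To show what the chunk size and overlap mean in practice, here is a simplified sliding-window splitter. It is only a sketch: `sliding_chunks` is a hypothetical helper, and it cuts at fixed character offsets, whereas CharacterTextSplitter first splits on the separator and then merges the pieces up to the chunk size.

```python
def sliding_chunks(text, chunk_size=1000, chunk_overlap=150):
    """Cut text into windows of chunk_size that share chunk_overlap characters."""
    if chunk_overlap >= chunk_size:
        raise ValueError("chunk_overlap must be smaller than chunk_size")
    step = chunk_size - chunk_overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # the last window already reached the end of the text
    return chunks


# 2500 characters of dummy text, just to see how the windows fall.
text = "".join(str(i % 10) for i in range(2500))
chunks = sliding_chunks(text)
print([len(c) for c in chunks])
```

The overlap means the end of one chunk is repeated at the start of the next, so a sentence that straddles a boundary still appears whole in at least one chunk.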

- Embed the chunks. I've used the OpenAI embedding model and loaded the embeddings into the FAISS vector store.

from langchain_openai import OpenAIEmbeddings
from langchain_community.vectorstores import FAISS

embeddings = OpenAIEmbeddings(api_key=open_ai_key)
db = FAISS.from_documents(docs, embeddings)

- I've configured the retriever to return only the top 3 relevant chunks.

retriever = db.as_retriever(search_kwargs={'k': 3})

Data Retrieval and Generation

- Using the RetrievalQA chain from LangChain, I've created the full retrieval and generation system, linked to the FAISS retriever configured earlier.

from langchain.chains import RetrievalQA
from langchain.prompts import PromptTemplate
from langchain_openai import ChatOpenAI

model = ChatOpenAI(api_key=open_ai_key)

custom_prompt_template = """Use the following pieces of information to answer the user's question.
If you don't know the answer, just say that you don't know, don't try to make up an answer.

Context: {context}
Question: {question}

Only return the helpful answer below and nothing else.
Helpful answer:
"""

prompt = PromptTemplate(
    template=custom_prompt_template,
    input_variables=['context', 'question']
)

qa = RetrievalQA.from_chain_type(
    llm=model,
    chain_type="stuff",
    retriever=retriever,
    return_source_documents=True,
    chain_type_kwargs={"prompt": prompt}
)

Streamlit

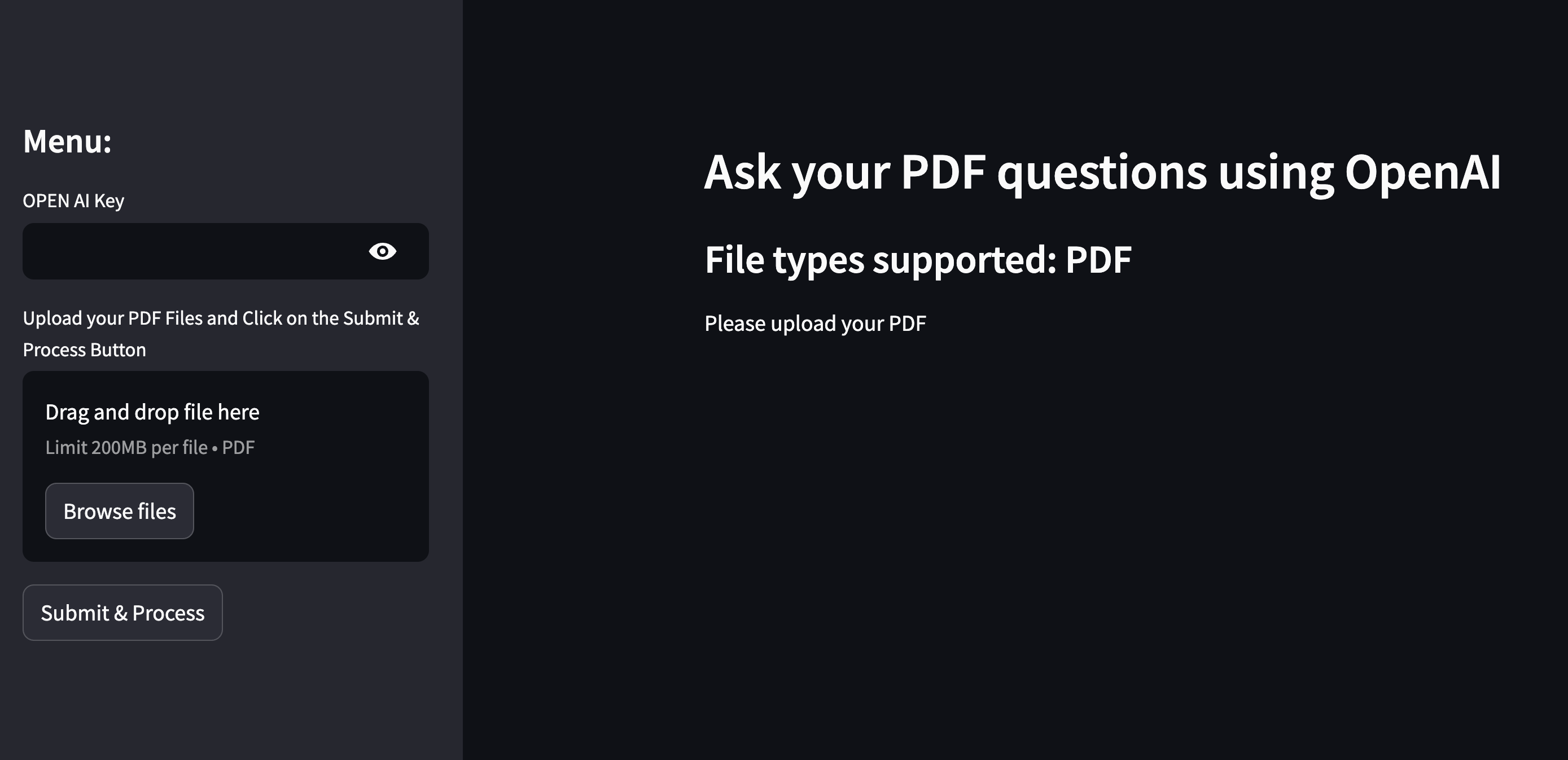

I've used Streamlit to create an application where you can upload your own documents and start the RAG process with them. The only parameter required is your OpenAI API key.

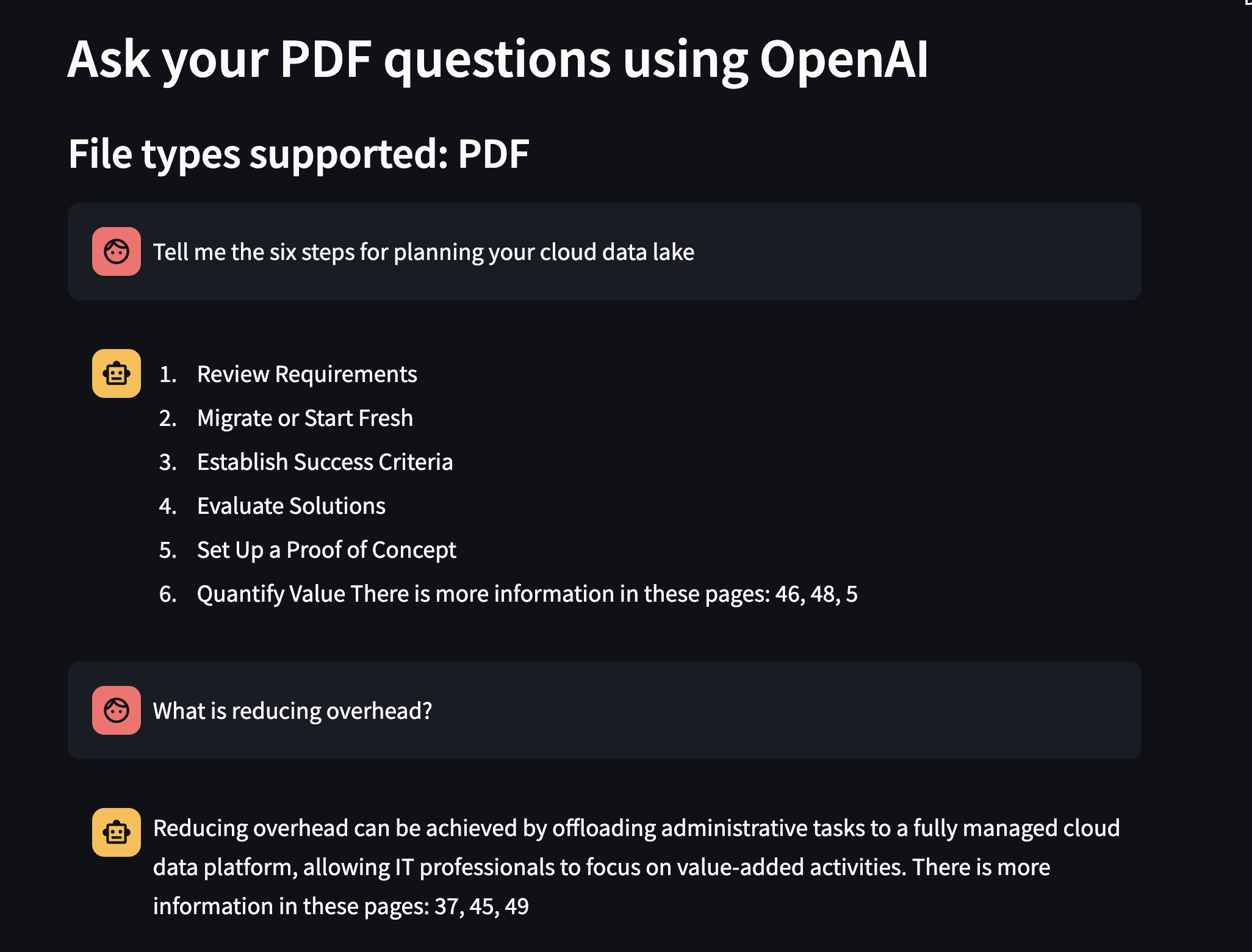

I used the book “Cloud Data Lakes for Dummies” as the example for the conversation shown in the image.
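Because the chain is created with return_source_documents=True, its output carries the retrieved chunks alongside the answer. Below is a minimal sketch of how an answer and its page references might be formatted for display. The helper `format_answer` and the stand-in class `FakeDoc` are hypothetical, the sample answer is made up, and the code assumes the result is a dictionary with "result" and "source_documents" keys and that each document stores a zero-based page index under metadata["page"], which is how RetrievalQA and PyPDFLoader behave as far as I know.

```python
from dataclasses import dataclass, field


@dataclass
class FakeDoc:
    """Stand-in for a LangChain Document (hypothetical, for illustration)."""
    page_content: str
    metadata: dict = field(default_factory=dict)


def format_answer(result, preview_chars=80):
    """Render the answer followed by one line per source chunk."""
    lines = [result["result"].strip(), "", "Sources:"]
    for doc in result.get("source_documents", []):
        page = doc.metadata.get("page")
        # Assumed zero-based page index, shown to the reader as one-based.
        label = f"page {page + 1}" if isinstance(page, int) else "page unknown"
        preview = " ".join(doc.page_content.split())[:preview_chars]
        lines.append(f"- {label}: {preview}")
    return "\n".join(lines)


# Made-up result with the same shape as the chain output described above.
fake_result = {
    "result": "A data lake stores raw data in its native format.",
    "source_documents": [
        FakeDoc("A data lake is a central repository...", {"page": 4}),
    ],
}
print(format_answer(fake_result))
```

In the real application, the dictionary returned by the qa chain would take the place of fake_result.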

Conclusion

At a time when information is available in large volumes and at users' disposal, the ability to hold meaningful conversations with documents can go a long way toward saving time when mining valuable information from large PDF documents. With the help of Retrieval-Augmented Generation, we can filter out unwanted information and focus on the information that matters.

This implementation is a naive RAG solution; however, the possibilities for optimizing it are vast. By using different RAG techniques, it may be possible to further refine aspects such as the embedding model, the document chunking method, and the retrieval algorithm.

I hope this article was as fun for you to read as it was for me to write!