The Need for the Creation of the STS Plugin

From a Web Application in Tomcat

The idea of having a server manage the dataset was born during the performance tests of the income tax declaration application of the French Ministry of Public Finance in 2012. The dataset consisted of millions of lines to simulate tens of thousands of people filling out their income tax return form per hour, and there were a dozen injectors to distribute the injection load of a performance shot. The dataset was consumed; that is to say, once the line with a person’s information had been read, that person’s information could not be taken again.

The management of the dataset in a centralized manner had been implemented with a Java web application (WAR) running in Tomcat. Injectors requested a row of the dataset from the web application.

To a Plugin for Apache JMeter

The need for centralized management of the dataset, especially with an architecture of several JMeter injectors, was at the origin of the creation in 2014 of the plugin for Apache JMeter named HTTP Simple Table Server, or STS. This plugin takes over the features of the dedicated application for Tomcat mentioned above, but with a lighter and simpler technical solution based on the NanoHTTPD library.

Manage the Dataset With HTTP Simple Table Server (STS)

Adding Centralized Management of the Dataset

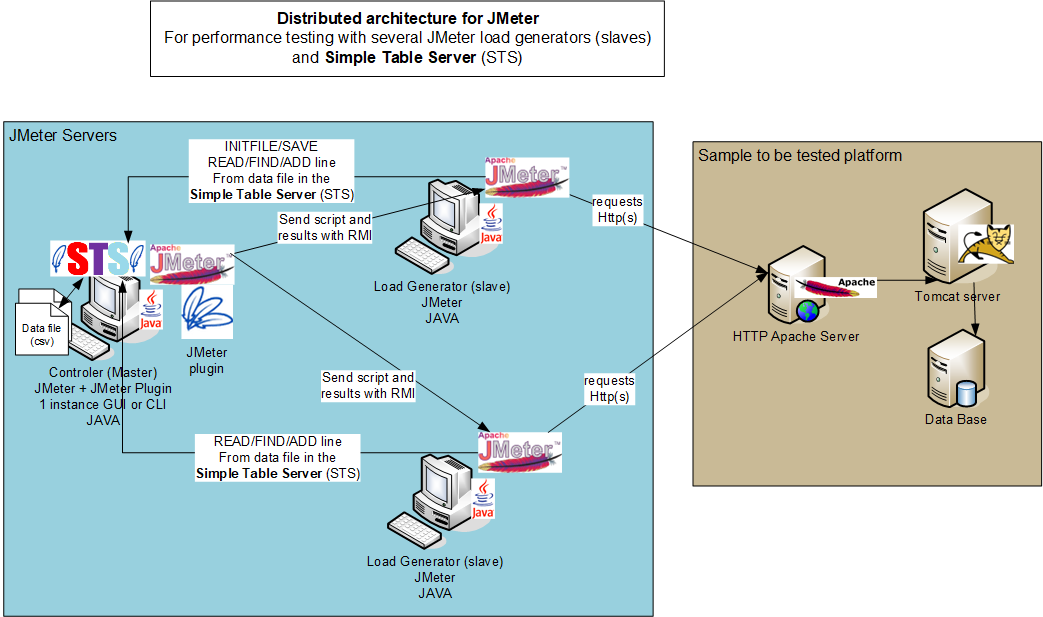

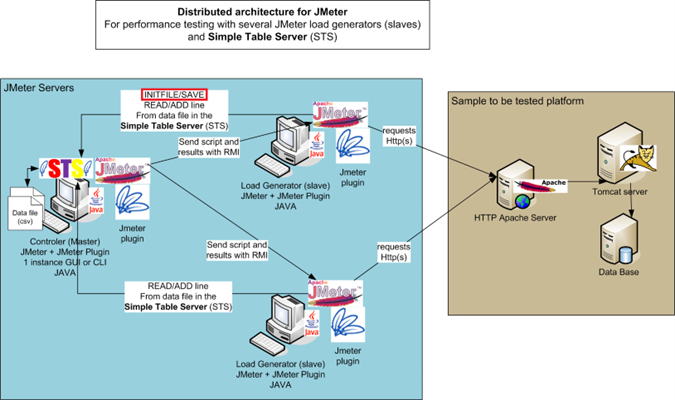

Performance tests with JMeter can be carried out with several JMeter injectors or load generators (without interface, on a remote machine) and a JMeter controller (with user interface or command line interface on the local machine). The JMeter script and properties are sent by the RMI protocol to the injectors. The results of the calls are returned periodically to the JMeter controller.

However, the dataset and CSV files are not transferred from the controller to the injectors.

Natively, JMeter cannot read the dataset randomly or in reverse order. There are several external plugins that let you read a data file randomly, but not in a centralized way.

Natively, it is also not possible to save data created during tests, such as file numbers or new documents, in a file. Saving values can be done with a Groovy script in JMeter, but not in a centralized way if you use several injectors during the performance test.

The main idea is to use a small HTTP server to manage the dataset files, with simple commands to retrieve or add data lines to the data files. This HTTP server can be launched alone as an external program or within the JMeter tool.

The HTTP server is called the “Simple Table Server” or STS. The name STS is also a reference to the Virtual Table Server (VTS) program of LoadRunner, which has different functionalities and a different technical implementation but is close in its use cases.

STS is very useful in a test with multiple JMeter injectors, but it also brings interesting features with a single JMeter for performance testing.

Some Possibilities of Using the HTTP Simple Table Server

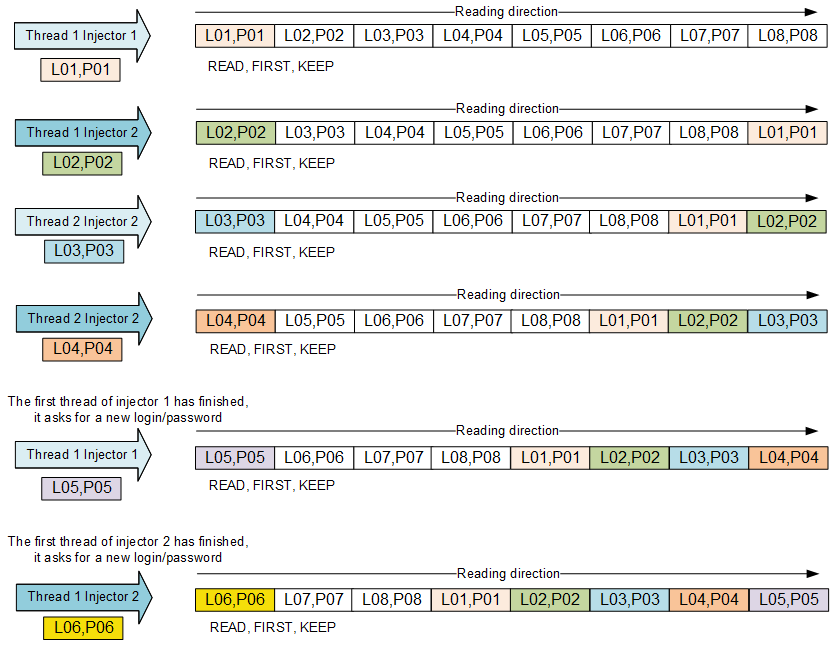

Reading Data in a Distributed and Collision-Free Way

Some applications do not tolerate two users connecting with the same login at the same time. It is often recommended not to use the same data for two users connected at the same time, to avoid conflicts on the data used.

The STS can easily manage the dataset in a distributed way to ensure that logins are different for each virtual user at a given time T of the test. This requires a dataset of logins/passwords with a number of lines greater than the number of active threads at a given time.

We load the logins/passwords file at STS startup, manually or by script, and the injectors ask the STS for a line with login/password using the READ command with KEEP=TRUE and READ_MODE=FIRST.

We can consider that the reading of the dataset is done in a circular way.
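The circular behaviour can be illustrated with a small in-memory model. This is only a sketch of the semantics described above, not the plugin's code: the function name `read_first_keep` and the use of a `deque` are assumptions made for the example.

```python
from collections import deque


def read_first_keep(lines: deque) -> str:
    """Model READ with READ_MODE=FIRST and KEEP=TRUE.

    The head of the list is returned and re-appended at the tail,
    so two consecutive readers never receive the same line.
    """
    line = lines.popleft()
    lines.append(line)
    return line


# Hypothetical dataset with three logins, read four times in a row.
logins = deque(["login1;password1", "login2;password2", "login3;password3"])
served = [read_first_keep(logins) for _ in range(4)]
print(served)
```

The fourth read wraps around to the first login, which is why the dataset needs more lines than there are active threads at any given time.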

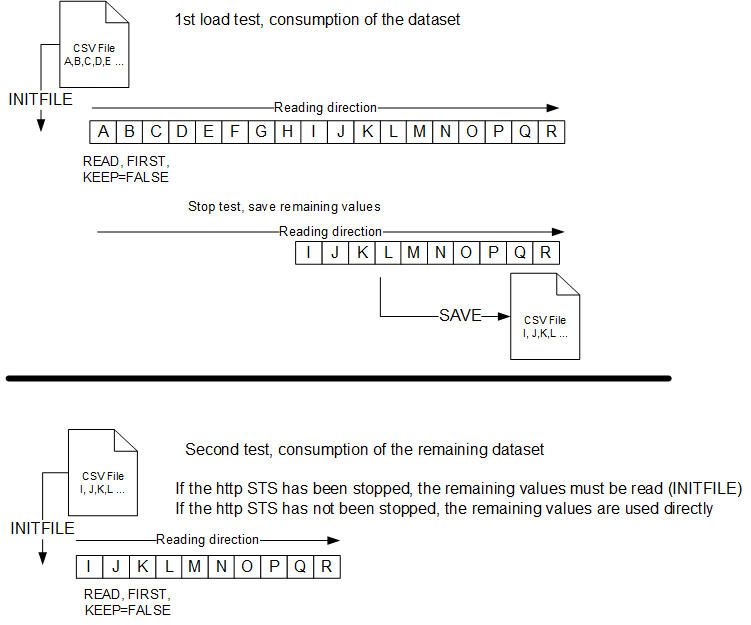

Reading Single-Use Data

The dataset can be single-use, meaning that each line is used only once during the test. For example, people who register on a site can no longer register with the same information because the system detects a duplicate. Likewise, documents are awaiting validation by an administrator; once the documents are validated, they are no longer in the same state and cannot be validated again.

To do this, we read a data file into the memory of the HTTP STS, and the virtual users read by removing the value at the top of the list. When the test is stopped, we can save the values that remain in memory to a file (with or without a timestamp prefix) or let the HTTP STS run for another test while keeping the values still in memory.

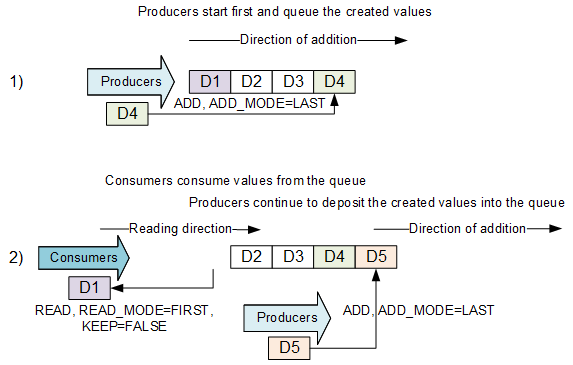

Producers and Consumers of a Queue

With the STS, we can manage a queue with producers who deposit in the queue and consumers who consume the data of the queue. In practice, we start a script with producers who create data such as new documents or newly registered people. The identifiers of created documents or the information of newly registered people are stored in the HTTP STS by the ADD command with ADD_MODE=LAST. The consumers start a little later so that there is data in the queue.

The consumers must also not consume too quickly compared to the producers; otherwise, the script has to handle the case where the list no longer contains a value, by detecting that no value is available and waiting a few seconds before retrying in a loop.

The consumers consume the values by the READ command with READ_MODE=FIRST and KEEP=FALSE. Here is a schema to explain how the producers ADD and consumers READ in the same queue.
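The "wait and retry when the queue is empty" logic can be sketched as follows. The function `consume_with_retry`, its parameters, and the convention that an empty queue is reported as `None` are all assumptions for illustration; in a real script, `read_line` would wrap an HTTP call to the STS READ command and map a KO answer to `None`.

```python
import time
from typing import Callable, Optional


def consume_with_retry(
    read_line: Callable[[], Optional[str]],
    wait_seconds: float = 3.0,
    max_attempts: int = 10,
    sleep: Callable[[float], None] = time.sleep,
) -> Optional[str]:
    """Try to consume one line, pausing between attempts while the queue is empty.

    Returns the line, or None if the queue stayed empty for max_attempts tries.
    """
    for attempt in range(max_attempts):
        line = read_line()
        if line is not None:
            return line
        # Do not sleep after the final failed attempt.
        if attempt < max_attempts - 1:
            sleep(wait_seconds)
    return None
```

Passing `sleep` as a parameter keeps the sketch easy to exercise without real delays; a bounded number of attempts avoids a consumer that waits forever if the producers have stopped.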

Producer and Consumer With Search by FIND

This is a variation of the previous solution.

Producer

A user with rights limited to a geographical sector creates documents and adds a line with their login + the document number (e.g., login23;D12223).

login1;D120000

login2;D120210

login23;D12223

login24;D12233

login2;D120214

login23;D12255

Consumer

A user will modify the characteristics of the document, but to do so, they must choose from the list in memory only the lines with the same login as the one they currently use, for reasons of rights and geographic sector.

Search in the list with the FIND command and FIND_MODE=SUBSTRING. The string searched for is the login of the connected person (e.g., login23), so LINE=login23; (with the separator ;). In this example, the returned line will be the first line containing login23; and the document number will be used to search for documents in the tested application.

Here, the result of the FIND by substring (SUBSTRING) is: login23;D12223. The line can be consumed with KEEP=FALSE or kept and placed at the end of the list with KEEP=TRUE.

Enrich the Dataset as the Shot Progresses

The idea is to start with a reduced dataset that is read at the start of the shot and to add new lines to the initial dataset as the shot progresses, in order to increase or enrich the dataset. The dataset is therefore larger at the end of the shot than at the beginning. At the end of the shot, the enriched dataset can be saved with the SAVE command, and the new file can be the future input file for a new shot.

For example, we add people through a scenario. We add these people to the search dataset, so the search is done on the people from the file read at the start but also on the people added as the performance test progresses.

Verification of the Dataset

The initial dataset can contain values that will generate errors in the script because the navigation falls into a particular case or the entered value is refused because it is incorrect or already exists.

We load the dataset by INITFILE or at STS startup, then read (READ) and use a value. If the script reaches the end of the iteration (the Thread Group is configured with “Start Next Thread Loop on Sampler Error”), we save the value by ADD in a file (valuesok.csv). At the end of the test, the values of the valuesok.csv file are saved (for example, with a “tearDown Thread Group”). The verified data of the valuesok.csv file will then be used.

Storing the Added Values in a File

In the script, the values added to the application database are stored in a file by the ADD command. At the end of the test, the values in memory are saved to a file (for example, with a “tearDown Thread Group”). The file containing the created values can be used to verify the additions to the database a posteriori or to delete the created values in order to return to the initial state before the test.

A script dedicated to creating a dataset can create values and store them in a data file. This file will then be used in the JMeter script during performance tests. Conversely, a dedicated erase script can take the file of created values and erase them in a loop.

Communication Between Software

The HTTP STS can be used as a means to communicate values between JMeter and other software. The software can be a heavy client, a web application, a shell script, a Selenium test, another JMeter, LoadRunner, etc. We can also launch two command-line tests with JMeter in a row, using an “external standalone” STS as a means to store values created by the first test and used in the second JMeter test. The software can add or read values by calling the URL of the HTTP STS, and JMeter can read these added values or add them itself. This possibility facilitates performance tests but also non-regression tests.


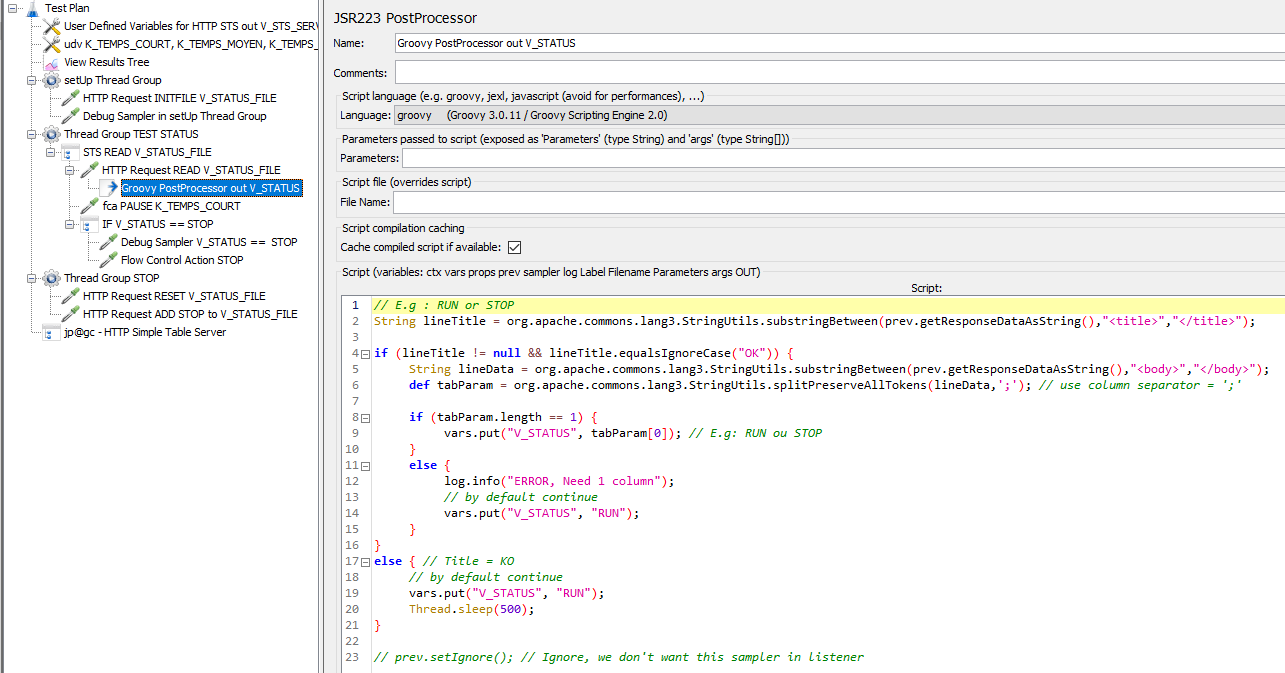

Gradual Exiting the Test on All JMeter Injectors

In JMeter, there is no notion, as in LoadRunner, of “GradualExiting,” that is to say, indicating to the vuser at the end of the iteration whether it should continue or stop. We can simulate this “GradualExiting” with the STS and a little code in the JMeter script. With the STS, we can load a file “status.csv,” which contains a line with a particular value such as “RUN.” At the beginning of each iteration, the vuser asks for the value of the line in “status.csv.” If the value is equal to “STOP,” then the vuser stops. If the value is zero or different from STOP, like “RUN,” then the vuser continues.
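The decision taken at the start of each iteration can be sketched as below. The names `should_continue` and `run_iterations` are hypothetical, and the status values are passed in directly; in a real JMeter script the value would come from a READ on status.csv with KEEP=TRUE, typically evaluated in a JSR223 element.

```python
from typing import Iterable, Optional


def should_continue(status: Optional[str]) -> bool:
    """Only an explicit STOP ends the vuser; any other value (or none) continues."""
    return status != "STOP"


def run_iterations(statuses: Iterable[Optional[str]]) -> int:
    """Simulate a vuser that checks the status before every iteration.

    Returns the number of iterations completed before STOP was seen.
    """
    completed = 0
    for status in statuses:
        if not should_continue(status):
            break
        completed += 1  # the business scenario would run here
    return completed
```

Because the check happens only at iteration boundaries, an iteration that has already started always runs to its end, which is what makes the exit gradual.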

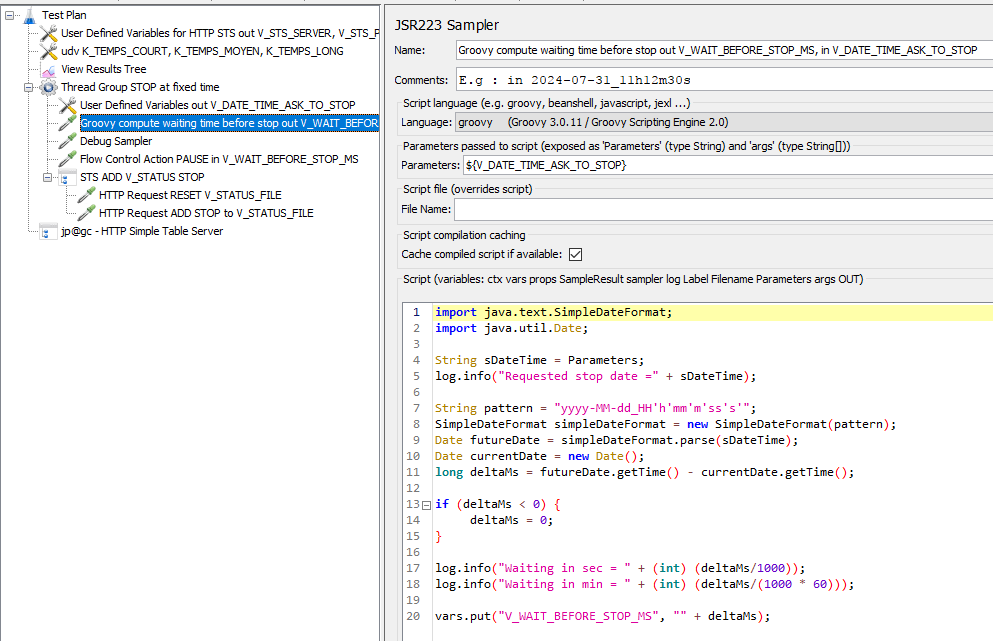

Gradual Exiting After Fixed Date Time

We can also program the stop request after a fixed time with this system. A JMeter script that runs in addition to the current load test receives as a parameter the date and time at which the status value must change to STOP (e.g., 2024-07-31_14h30m45s). The script is launched, and we calculate the number of milliseconds before the indicated date of the requested stop.

The vuser is put on hold for the calculated duration.

Then the “status.csv” file in the STS is cleared and the STOP value is put in its place. The second JMeter script, which is already running, reads the status value, tests whether status == "STOP", and stops properly on all the JMeter injectors or on the single JMeter.
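The delay computation can be sketched as follows, assuming the stop date uses the pattern shown in the example value (2024-07-31_14h30m45s). The function name `millis_until` and the choice to return 0 for a date already in the past are assumptions for this illustration.

```python
from datetime import datetime

# Pattern matching the example value 2024-07-31_14h30m45s.
STOP_FORMAT = "%Y-%m-%d_%Hh%Mm%Ss"


def millis_until(stop_at: str, now: datetime) -> int:
    """Return the number of milliseconds between now and the requested stop date.

    A stop date in the past yields 0 so that the caller does not wait at all.
    """
    target = datetime.strptime(stop_at, STOP_FORMAT)
    delta_ms = int((target - now).total_seconds() * 1000)
    return max(delta_ms, 0)
```

The returned value is the pause to apply before writing STOP into status.csv; passing `now` explicitly keeps the function deterministic.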

Start the HTTP Simple Table Server

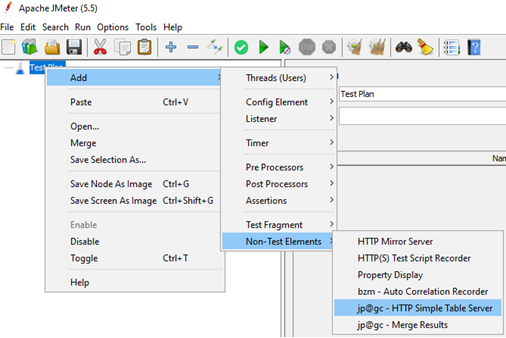

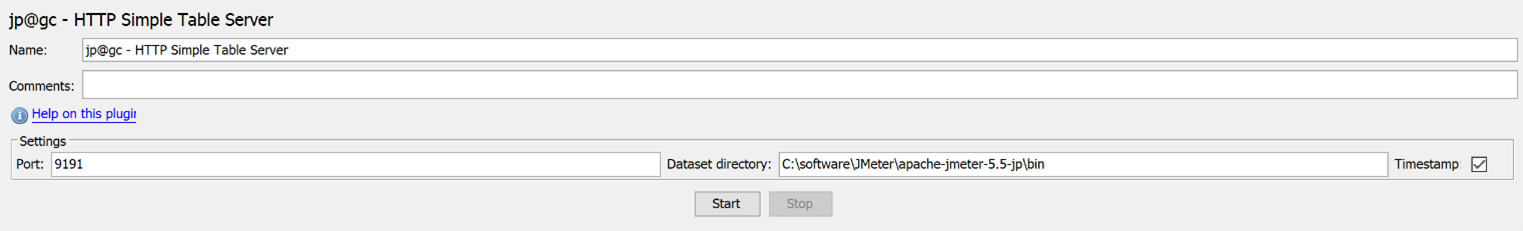

Declaration and Start of the STS by the Graphical Interface

The Simple Table Server is located in the “Non-Test Elements” menu:

Click on the “Start” button to start the HTTP STS.

By default, the directory containing the files is

Start From the Command Line

It is possible to start the STS server from the command line.

\bin\simple-table-server.cmd (Windows OS)

/bin/simple-table-server.sh (Linux OS)

Or automatically when starting JMeter from the command line (CLI) by declaring these 2 lines in jmeter.properties:

jsr223.init.file=simple-table-server.groovy

jmeterPlugin.sts.loadAndRunOnStartup=true

If the value is false, then the STS is not started when launching JMeter from the command line without GUI.

The default port is 9191. It can be changed by the property:

# jmeterPlugin.sts.port=9191

If jmeterPlugin.sts.port=0, then the STS does not start when launching JMeter in CLI mode.

The property jmeterPlugin.sts.addTimestamp=true indicates that the backup of the file (e.g., data.csv) will be prefixed by the date and time (e.g., 20240112T13h00m50s.data.csv); otherwise, jmeterPlugin.sts.addTimestamp=false writes/overwrites the file data.csv.

# jmeterPlugin.sts.addTimestamp=true
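The naming rule for saved files can be illustrated with a short sketch. The function `backup_name` is hypothetical, and the date pattern is inferred from the example 20240112T13h00m50s.data.csv rather than taken from the plugin's source.

```python
from datetime import datetime


def backup_name(filename: str, add_timestamp: bool, now: datetime) -> str:
    """Return the file name used for a save.

    With add_timestamp=True the name is prefixed with the date and time;
    otherwise the original name is reused and the file is overwritten.
    """
    if not add_timestamp:
        return filename
    return f"{now.strftime('%Y%m%dT%Hh%Mm%Ss')}.{filename}"


print(backup_name("data.csv", True, datetime(2024, 1, 12, 13, 0, 50)))
```

The timestamp prefix keeps one file per save, which is convenient when the same STS is reused across several tests.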

The property jmeterPlugin.sts.daemon=true is used when the STS is launched as an external application with the Linux nohup command (example: nohup ./simple-table-server.sh &).

In this case, the STS does not listen to the keyboard. Use the

The STS enters an infinite loop, so you must call the /sts/STOP command or kill the process to stop the STS.

When jmeterPlugin.sts.daemon=false, the STS waits for the entry of

Loading Files at Simple Table Server Startup

The STS has the ability to load files into memory at STS startup. Loading files is done when the STS is launched as an external application (

Loading files is not done with JMeter in GUI mode.

The files are read in the directory indicated by the property jmeterPlugin.sts.datasetDirectory, and if this property is null, then in the directory

The declaration of the files to be loaded is done by the following properties:

jmeterPlugin.sts.initFileAtStartup=article.csv,filename.csv

jmeterPlugin.sts.initFileAtStartupRegex=false

or

jmeterPlugin.sts.initFileAtStartup=.+?.csv

jmeterPlugin.sts.initFileAtStartupRegex=true

When jmeterPlugin.sts.initFileAtStartupRegex=false, the property jmeterPlugin.sts.initFileAtStartup contains the list of files to be loaded, with the comma character “,” as the file name separator (e.g., jmeterPlugin.sts.initFileAtStartup=article.csv,filename.csv). At startup, the STS will try to load (INITFILE) the files article.csv then filename.csv.

When jmeterPlugin.sts.initFileAtStartupRegex=true, the property jmeterPlugin.sts.initFileAtStartup contains a regular expression that will be used to match the files in the directory of the property jmeterPlugin.sts.datasetDirectory (e.g., jmeterPlugin.sts.initFileAtStartup=.+?.csv loads into memory (INITFILE) all files with the extension “.csv”).

The file name must not contain special characters that would allow changing the reading directory, such as ..\..\fichier.csv, /etc/passwd, or ../../../tomcat/conf/server.xml.

The maximum size of a file name is 128 characters (without taking into account the directory path).
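A client can pre-check a file name before sending it to the STS. The sketch below approximates the restrictions stated above; the function `is_safe_dataset_name` and its exact list of forbidden tokens are assumptions, and the real server-side checks may be stricter or different.

```python
def is_safe_dataset_name(name: str, max_length: int = 128) -> bool:
    """Approximate the STS file-name rules on the client side.

    Rejects empty names, names longer than max_length characters, and
    names containing tokens that could change the reading directory.
    """
    if not name or len(name) > max_length:
        return False
    # Assumed forbidden tokens: parent directory, path separators, drive colon.
    forbidden = ("..", "/", "\\", ":")
    return not any(token in name for token in forbidden)
```

A check like this only gives an earlier, clearer error in the test script; the server remains the authority on what it accepts.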

Management of the Encoding of Files to Read/Write and the HTML Response

It is possible to define the encoding when reading files or writing data files. The properties are as follows:

- Read (INITFILE) or write (SAVE) files containing accented characters in a charset such as UTF-8 or ISO8859_15

- All CSV files must be in the same charset encoding:

jmeterPlugin.sts.charsetEncodingReadFile=UTF-8

jmeterPlugin.sts.charsetEncodingWriteFile=UTF-8

- Files will be read (INITFILE) with the charset declared by the value of jmeterPlugin.sts.charsetEncodingReadFile.

- Files will be written (SAVE) with the charset declared by the value of jmeterPlugin.sts.charsetEncodingWriteFile.

- The default value of jmeterPlugin.sts.charsetEncodingReadFile corresponds to the System property file.encoding.

- The default value of jmeterPlugin.sts.charsetEncodingWriteFile corresponds to the System property file.encoding.

- All data files must be in the same charset if they contain non-ASCII characters.

- To respond in HTML to different commands, especially READ, the charset found in the response header is indicated by jmeterPlugin.sts.charsetEncodingHttpResponse.

- jmeterPlugin.sts.charsetEncodingHttpResponse= (use UTF-8): in the HTTP header, add “Content-Type:text/html; charset=”

- The default value is the JMeter property sampleresult.default.encoding.

- The list of charsets is declared in the HTML page (take the java.io API column).

- For the names of the charsets, see the Oracle docs for Supported Encodings.

- Column Canonical Name for java.io API and java.lang API

Assist With Use

The URL of an STS command is of the shape:

The commands and the names of the parameters are in uppercase (case sensitive).

If no command is indicated, then the help message is returned: http://localhost:9191/sts/.
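Building command URLs by hand is error-prone because values such as login23;D12223 contain characters that should be percent-encoded. The helper below is a minimal sketch: `sts_url` is a hypothetical name, and the host and port defaults simply reuse the localhost:9191 example.

```python
from urllib.parse import urlencode


def sts_url(command: str, host: str = "localhost", port: int = 9191, **params: str) -> str:
    """Build an STS command URL with percent-encoded parameters.

    The command and parameter names are passed through unchanged,
    so they must already be in uppercase.
    """
    base = f"http://{host}:{port}/sts/{command}"
    return f"{base}?{urlencode(params)}" if params else base


print(sts_url("READ", FILENAME="logins.csv", READ_MODE="FIRST", KEEP="TRUE"))
```

Calling `sts_url("")` returns the bare /sts/ address, which corresponds to the help page.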

Commands and Configuration

The following is a list of commands and configuration of the HTTP STS with extracts from the documentation of the JMeter-plugins.org site.

The calls are atomic (with synchronized): a read or an add runs to completion before the next request is processed.

The commands to the Simple Table Server are performed by HTTP GET and/or POST calls depending on the command.

Documentation is available on the JMeter plugin website.

Distributed Architecture for JMeter

The Simple Table Server runs on the JMeter controller (master), and the load generators (slaves) or injectors make calls to the STS to get, find, or add some data.

At the beginning of the test, the first load generator will load data in memory (initial call), and at the end of the test, it asks the STS to save the values in a file.

All the load generators ask for data from the same STS, which is started on the JMeter controller.

The INITFILE can also be done at STS startup time (without the first load generator's initial call).

Example of a dataset file logins.csv:

login1;password1

login2;password2

login3;password3

login4;password4

login5;password5

INITFILE: Load File in Memory

- http://hostname:port/sts/INITFILE?FILENAME=logins.csv

OK

5 => number of lines read

- Linked list after this command:

login1;password1

login2;password2

login3;password3

login4;password4

login5;password5

The files are read in the directory indicated by the property jmeterPlugin.sts.datasetDirectory; if this property is null, then in the directory.

READ: Get One Line From List

http://hostname:port/sts/

Available options:

- READ_MODE=FIRST => login1;password1

- READ_MODE=LAST => login5;password5

- READ_MODE=RANDOM => login?;password?

- KEEP=TRUE => The data is kept and put to the end of the list

- KEEP=FALSE => The data is removed

READMULTI: Get Multiple Lines From List in One Request

- GET Protocol

http://hostname:port/sts/READMULTI?FILENAME=logins.csv&NB_LINES={Nb lines to read}&READ_MODE={FIRST, LAST, RANDOM}&KEEP={TRUE, FALSE}

- Available options:

- NB_LINES=Number of lines to read : 1

- READ_MODE=FIRST => Start to read at the first line

- READ_MODE=LAST => Start to read at the last line (reverse)

- READ_MODE=RANDOM => Read n lines randomly

- KEEP=TRUE => The data is kept and put to the end of the list

- KEEP=FALSE => The data is removed

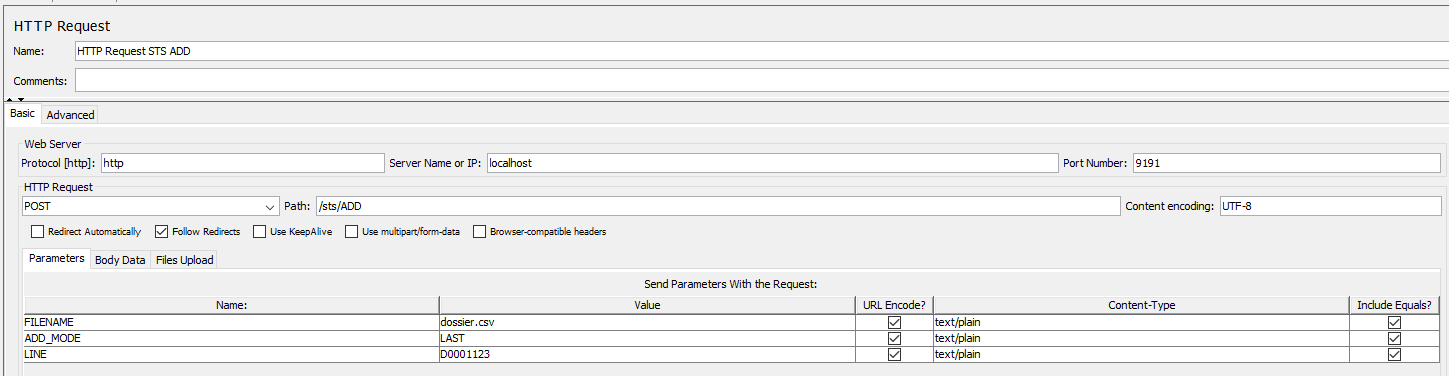

ADD: Add a Line Into a File (GET or POST HTTP Protocol)

- FILENAME=file.csv, LINE=D0001123, ADD_MODE={FIRST, LAST}

- HTML format:

- Available options:

- ADD_MODE=FIRST => Add to the beginning of the list

- ADD_MODE=LAST => Add to the end of the list

- FILENAME=file.csv => If it does not already exist, it creates a linked list in memory

- LINE=1234;98763 => The line to add

- UNIQUE => Do not add a line if the list already contains such a line (return KO)

- HTTP POST request:

- Method GET:

- GET Protocol:

http://hostname:port/sts/ADD?FILENAME=file.csv&LINE=D0001123&ADD_MODE={FIRST, LAST}


FIND: Find a Line in the File (GET or POST HTTP Protocol)

- Command FIND: find a line (LINE) in a file (FILENAME) (GET or POST HTTP protocol)

- The LINE to find is interpreted according to FIND_MODE:

- A string: SUBSTRING (default, ALineInTheFile contains the stringToFind) or EQUALS (stringToFind == ALineInTheFile)

- A regular expression with REGEX_FIND (contains) and REGEX_MATCH (entire region matches the pattern)

- KEEP=TRUE => The data is kept and put to the end of the list

- KEEP=FALSE => The data is removed

- GET Protocol:

http://hostname:port/sts/FIND?FILENAME=colours.txt&LINE=(BLUE|RED)&[FIND_MODE=[SUBSTRING,EQUALS,REGEX_FIND,REGEX_MATCH]]&KEEP={TRUE, FALSE}

If found, return the first line found; reading starts at the first line in the file (linked list):

If NOT found, return title KO and message Error: Not found! in the body.
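The four FIND modes and the effect of KEEP can be modelled in memory as below. This is a sketch of the described semantics, not the plugin's implementation: `find_line` is a hypothetical function, Python's `re` module stands in for the server's regular expression engine, and a missing match is represented by `None` instead of a KO page.

```python
import re
from typing import List, Optional


def find_line(lines: List[str], needle: str,
              find_mode: str = "SUBSTRING", keep: bool = True) -> Optional[str]:
    """Return the first line matching needle, scanning from the head of the list.

    keep=True moves the matched line to the end of the list;
    keep=False removes it. Returns None when nothing matches.
    """
    matchers = {
        "SUBSTRING": lambda line: needle in line,
        "EQUALS": lambda line: needle == line,
        "REGEX_FIND": lambda line: re.search(needle, line) is not None,
        "REGEX_MATCH": lambda line: re.fullmatch(needle, line) is not None,
    }
    matches = matchers[find_mode]
    for index, line in enumerate(lines):
        if matches(line):
            del lines[index]
            if keep:
                lines.append(line)
            return line
    return None
```

With the producer data shown earlier, searching for login2; (separator included) does not match login23;D12223, which is why the separator belongs in the search string.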

LENGTH: Return the Number of Remaining Lines of a Linked List

http://hostname:port/sts/LENGTH?FILENAME=logins.csv

- HTML format:

STATUS: Display the List of Loaded Files and the Number of Remaining Lines

http://hostname:port/sts/STATUS

- HTML format:

OK

logins.csv = 5

file.csv = 1

SAVE: Save the Specified Linked List in a File to the datasetDirectory Location

http://hostname:port/sts/SAVE?FILENAME=logins.csv

- If jmeterPlugin.sts.addTimestamp is set to true, then a timestamp will be added to the filename. The file is saved in jmeterPlugin.sts.datasetDirectory or, if null, in the /bin directory: 20240520T16h33m27s.logins.csv.

- You can force the addTimestamp value with the parameter ADD_TIMESTAMP in the URL, like: http://hostname:port/sts/SAVE?FILENAME=logins.csv&ADD_TIMESTAMP={true,false}

- HTML format:

OK

5 => number of lines saved

RESET: Remove All Elements From the Specified List

- http://hostname:port/sts/RESET?FILENAME=logins.csv

- HTML format:

The RESET command is often used in the “setUp Thread Group” to clear the values in the in-memory linked list from a previous test.

It always returns OK, even if the file does not exist.

STOP: Shutdown the Simple Table Server

- http://hostname:port/sts/STOP

- The STOP command is usually used when the HTTP STS server is launched by a shell script and we want to stop the STS at the end of the test.

- When jmeterPlugin.sts.daemon=true, you need to call http://hostname:port/sts/STOP or kill the process to stop the STS.

CONFIG: Show STS Configuration

- http://hostname:port/sts/CONFIG

- Show the STS configuration, e.g.:

jmeterPlugin.sts.loadAndRunOnStartup=false

startFromCli=true

jmeterPlugin.sts.port=9191

jmeterPlugin.sts.datasetDirectory=null

jmeterPlugin.sts.addTimestamp=true

jmeterPlugin.sts.demon=false

jmeterPlugin.sts.charsetEncodingHttpResponse=UTF-8

jmeterPlugin.sts.charsetEncodingReadFile=UTF-8

jmeterPlugin.sts.charsetEncodingWriteFile=UTF-8

jmeterPlugin.sts.initFileAtStartup=

jmeterPlugin.sts.initFileAtStartupRegex=false

databaseIsEmpty=false

Error Response KO

When the command and/or a parameter are wrong, the result is an HTML page with status 200, but the title contains the label KO.

Examples:

- Send an unknown command. Be careful, as the command is case sensitive (READ != read).

KO

Error : unknown command !

- Try to read the value from a file not yet loaded with INITFILE.

KO

Error : logins.csv not loaded yet !

- Try to read the value from a file but no more lines in the linked list.

KO

Error : No more line !

- Try to save lines in a file whose name contains illegal characters like “..”, “:”

KO

Error : Illegal character found !

- Command FIND and FIND_MODE=REGEX_FIND or REGEX_MATCH:

KO

Error : Regex compile error !
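Because an error still comes back with HTTP status 200, a client has to inspect the page itself. The sketch below assumes the response is a simple HTML page with a title element holding OK or KO and a body element holding the value or the error message; that layout and the function name `parse_sts_response` are assumptions, so adapt the patterns to the pages your STS actually returns.

```python
import re
from typing import Tuple


def parse_sts_response(html: str) -> Tuple[bool, str]:
    """Split an STS HTML answer into (is_ok, body_text).

    is_ok is True only when the title is exactly OK; a missing title
    or a KO title is treated as a failure.
    """
    flags = re.IGNORECASE | re.DOTALL
    title = re.search(r"<title>(.*?)</title>", html, flags)
    body = re.search(r"<body>(.*?)</body>", html, flags)
    is_ok = title is not None and title.group(1).strip() == "OK"
    text = body.group(1).strip() if body else ""
    return is_ok, text
```

In a JMeter script the same check is often done with a Response Assertion or a regular expression extractor on the title rather than on the HTTP status code.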

Modifying STS Parameters From the Command Line

You can override STS settings using command-line options:

-DjmeterPlugin.sts.port=

-DjmeterPlugin.sts.loadAndRunOnStartup=

-DjmeterPlugin.sts.datasetDirectory=

-DjmeterPlugin.sts.addTimestamp=

-DjmeterPlugin.sts.daemon=

-DjmeterPlugin.sts.charsetEncodingHttpResponse=

-DjmeterPlugin.sts.charsetEncodingReadFile=

-DjmeterPlugin.sts.charsetEncodingWriteFile=

-DjmeterPlugin.sts.initFileAtStartup=

-DjmeterPlugin.sts.initFileAtStartupRegex=false

jmeter.bat -DjmeterPlugin.sts.loadAndRunOnStartup=true -DjmeterPlugin.sts.port=9191 -DjmeterPlugin.sts.datasetDirectory=c:/data -DjmeterPlugin.sts.charsetEncodingReadFile=UTF-8 -n -t testdemo.jmx

STS in the POM of a Test With the jmeter-maven-plugin

It is possible to use STS in a performance test launched with the jmeter-maven-plugin. To do this:

- Put your CSV files in the /src/test/jmeter directory (e.g., logins.csv).

- Put the simple-table-server.groovy (Groovy script) in the /src/test/jmeter directory.

- Put your JMeter script in the /src/test/jmeter directory (e.g., test_login.jmx).

- Declare in the Maven build section, in the configuration, the artifact kg.apc:jmeter-plugins-table-server:.

- Declare user properties for STS configuration and automatic start.

- If you use localhost and a proxy configuration, you could add a proxy configuration with localhost.

- Extract of a pom.xml dedicated to HTTP Simple Table Server:

com.lazerycode.jmeter

jmeter-maven-plugin

3.8.0

...

kg.apc:jmeter-plugins-table-server:5.0

9191

true

${project.build.directory}/jmeter/testFiles

true

${project.build.directory}/jmeter/testFiles/simple-table-server.groovy