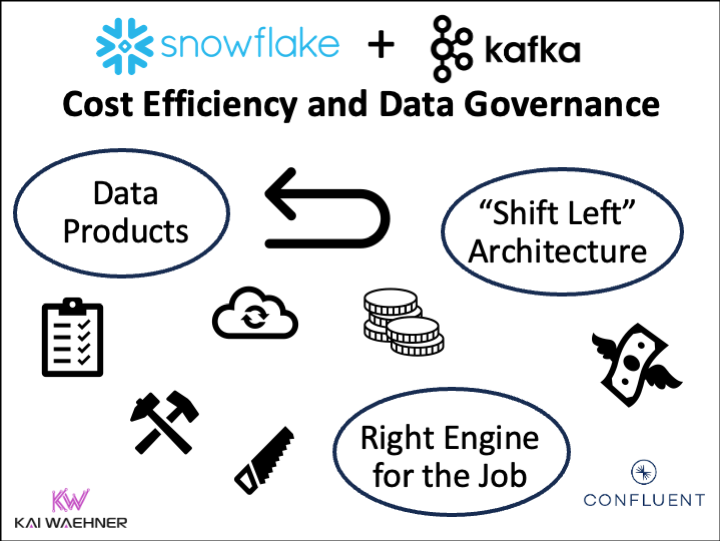

Snowflake is a leading cloud data warehouse and is transitioning into a data cloud that enables various use cases. The major drawback of this evolution is the significantly growing cost of data processing. This blog post explores how data streaming with Apache Kafka and Apache Flink enables a “shift left architecture” where business teams can reduce cost, provide better data quality, and process data more efficiently. The real-time capabilities and the unification of transactional and analytical workloads using Apache Iceberg’s open table format enable new use cases and a best-of-breed approach with no vendor lock-in and the choice of various analytical query engines like Dremio, Starburst, Databricks, Amazon Athena, Google BigQuery, or Apache Flink.

Snowflake and Apache Kafka

Snowflake is a leading cloud-native data warehouse. Its usability and scalability made it a prevalent data platform in thousands of companies. This blog series explores different data integration and ingestion options, including traditional ETL/iPaaS and data streaming with Apache Kafka. The discussion covers why point-to-point Zero-ETL is only a short-term win, why Reverse ETL is an anti-pattern for real-time use cases, and when a Kappa Architecture and shifting data processing “to the left” into the streaming layer helps to build transactional and analytical real-time and batch use cases in a reliable and cost-efficient way.

This is part of a blog series:

- Snowflake Integration Patterns: Zero ETL and Reverse ETL vs. Apache Kafka

- Snowflake Data Integration Options for Apache Kafka (including Iceberg)

- THIS POST: Apache Kafka + Flink + Snowflake: Cost-Efficient Analytics and Data Governance

Stream Processing With Kafka and Flink BEFORE the Data Hits Snowflake

The first two blog posts explored the different design patterns and ingestion options for Snowflake via Kafka or other ETL tools. But let’s make it very clear: ingesting all raw data into a single data lake at rest is an anti-pattern in almost all cases. It is not cost-efficient and blocks real-time use cases. Why?

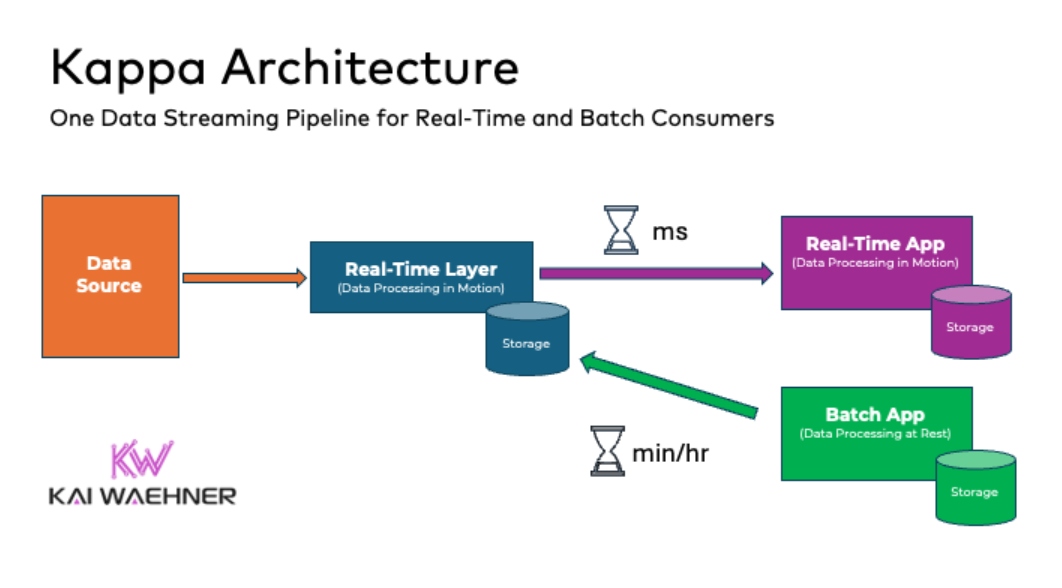

Real-time data beats slow data. That’s true for almost every use case. Enterprise architects build infrastructures with the Lambda architecture that often includes a data lake for all the analytics. The Kappa architecture is a software architecture that is event-based and able to handle all data at all scales in real time for transactional AND analytical workloads.

Reverse ETL Is NOT a Good Strategy for Real-Time Apps

The central premise behind the Kappa architecture is that you can perform both real-time and batch processing with a single technology stack. The heart of the infrastructure is a streaming architecture. First, the log of the data streaming platform stores incoming data. From there, a stream processing engine processes the data continuously in real time or ingests the data into any other analytics database or business application via any communication paradigm and speed, including real-time, near real-time, batch, and request-response.

The business domains have the freedom of choice. In the above diagram, a consumer could be:

- Batch app: Snowflake (using Snowpipe for ingestion)

- Batch app: Elasticsearch for search queries

- Near real-time app: Snowflake (using Snowpipe Streaming for ingestion)

- Real-time app: Stream processing with Kafka Streams or Apache Flink

- Near real-time app: HTTP/REST API for request-response communication or third-party integration
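The consumer list above can be illustrated with a toy model of the Kappa idea: one append-only log, with each consumer tracking its own read position and polling at its own pace. This is only a minimal in-memory sketch. `EventLog`, `Consumer`, and the consumer names are hypothetical stand-ins, not the Kafka client API.

```python
from dataclasses import dataclass, field


@dataclass
class EventLog:
    """Toy append-only log; a stand-in for a single Kafka topic partition."""
    events: list = field(default_factory=list)

    def append(self, event: dict) -> int:
        self.events.append(event)
        return len(self.events) - 1  # offset of the event just written

    def read(self, offset: int, max_events: int) -> list:
        return self.events[offset:offset + max_events]


@dataclass
class Consumer:
    """Keeps its own offset, so slow and fast consumers do not affect each other."""
    name: str
    batch_size: int
    offset: int = 0

    def poll(self, log: EventLog) -> list:
        batch = log.read(self.offset, self.batch_size)
        self.offset += len(batch)
        return batch


log = EventLog()
for i in range(5):
    log.append({"order_id": i})

# Hypothetical consumers: one reads event by event, one loads in bulk.
realtime = Consumer("stream-processor", batch_size=1)
bulk = Consumer("warehouse-loader", batch_size=100)

first_event = realtime.poll(log)   # one event at a time
bulk_load = bulk.poll(log)         # everything available so far
```

Because the log is never consumed destructively, the bulk loader and the event-by-event reader see the same data, just at different speeds.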

I explored in a thorough analysis why Reverse ETL with a Data Warehouse or Data Lake like Snowflake is an ANTI-PATTERN for real-time use cases.

Reverse ETL is unavoidable in some scenarios, especially in larger organizations. Tools like Fivetran or Hightouch do a good job if you need Reverse ETL. But never design your enterprise architecture to store data in a data lake just to reverse it afterward.

Cheap Object Storage -> Cloud Data Warehouse = Data Swamp

Storage has become extremely cheap with object storage in the public cloud.

With the explosion of more data and more data types/formats, object storage has risen as the de facto cheap, ubiquitous storage serving the traditional “data lake.”

However, even with that in place, the data lake was still the “data swamp,” with lots of different file sizes, types, etc. The cloud data warehouse was probably easier to use, which is probably why it still made more sense to point most raw data for analysis at the data warehouse.

Snowflake is a very good analytics platform. It provides strict SLAs and serves concurrent queries that need that speed. However, many of the workloads done in Snowflake don’t have that requirement. Instead, some use cases require ad hoc queries and are exploratory in nature with no speed SLAs. Data warehousing should often be a destination of processed data, not an entry point for all things raw data. And on the other side, some applications require low latency for query results. Purpose-built databases do analytics much faster than Snowflake, even at scale and for transactional workloads.

The Right Engine for the Job

The combination of an object store with a table format like Apache Iceberg enables openness for different teams. You add a query engine on top, and it’s like a composable data warehouse. Now your team can run Apache Spark on your tables. Maybe data science can run Ray on it for machine learning, and maybe some aggregations run with Apache Flink.

It was never easier to choose the best-of-breed approach in each business unit when you leverage data streaming in combination with SaaS analytics offerings.

Therefore, teams should choose the best price/performance for their unique needs and requirements. Apache Iceberg makes that shift between different lakehouses and data platforms a lot easier, as it helps with issues such as the compaction of small files.

Still, running a SQL engine directly on the data lake used to be slow and did not support expected key characteristics such as ACID transactions.

Dremio, Starburst Data, Spark SQL, Databricks Photon, Ray/Anyscale, Amazon Athena, Google BigQuery, Flink, etc. are all part of the query engine ecosystem that the data product in a governed Kafka Topic unlocks.

Why did I add a stream processor here with Flink? Flink is an option to query streams in real time or to ask ad hoc exploratory questions on both real-time and historical data upstream.

Not Every Query Needs to Be (Near) Real-Time!

Keep in mind that not every application requires the performance of Snowflake, a real-time analytics engine like Druid or Pinot, or modern data virtualization with Starburst. If your random ad hoc query to see how many customers are buying red shoes in the store today does not need to return results in 20 seconds and can wait 3-5 minutes, and if that query can run cheaper and still efficiently, shifting left gives you the option to decide which lane to put your queries in:

- The stream processor as part of the data streaming platform for continuous or interactive queries (e.g., Apache Flink)

- The batch analytics platform for long-running big data calculations (e.g., Spark or even Flink’s batch API)

- The analytics platform for fast, complex queries (e.g., Snowflake)

- The low-latency user-facing analytics (e.g., Druid or Pinot)

- Other use cases with the best engine for the job (like search with Elasticsearch)
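To make the lane decision above more concrete, here is a small sketch of how a team might encode it as a lookup over a query's requirements. The `QueryProfile` fields, the `choose_lane` function, and the latency thresholds are illustrative assumptions, not recommendations from any vendor. Only the 20-second figure echoes the example in the text.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class QueryProfile:
    """Hypothetical description of what a query needs."""
    max_latency_seconds: float
    continuous: bool = False        # runs permanently on new events
    user_facing: bool = False       # end users wait for the result
    full_text_search: bool = False


def choose_lane(q: QueryProfile) -> str:
    """Pick a lane; the thresholds are assumptions for illustration only."""
    if q.full_text_search:
        return "search engine (e.g., Elasticsearch)"
    if q.continuous:
        return "stream processor (e.g., Apache Flink)"
    if q.user_facing and q.max_latency_seconds < 1:
        return "low-latency analytics (e.g., Druid or Pinot)"
    if q.max_latency_seconds <= 20:
        return "analytics platform (e.g., Snowflake)"
    return "batch analytics (e.g., Spark or Flink batch)"


# The red-shoes ad hoc query that can wait a few minutes:
red_shoes = QueryProfile(max_latency_seconds=300)
lane = choose_lane(red_shoes)
```

In practice the decision also depends on cost per query and team skills, which this sketch deliberately leaves out.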

Shift Processing “To the Left” Into the Data Streaming Layer With Apache Flink

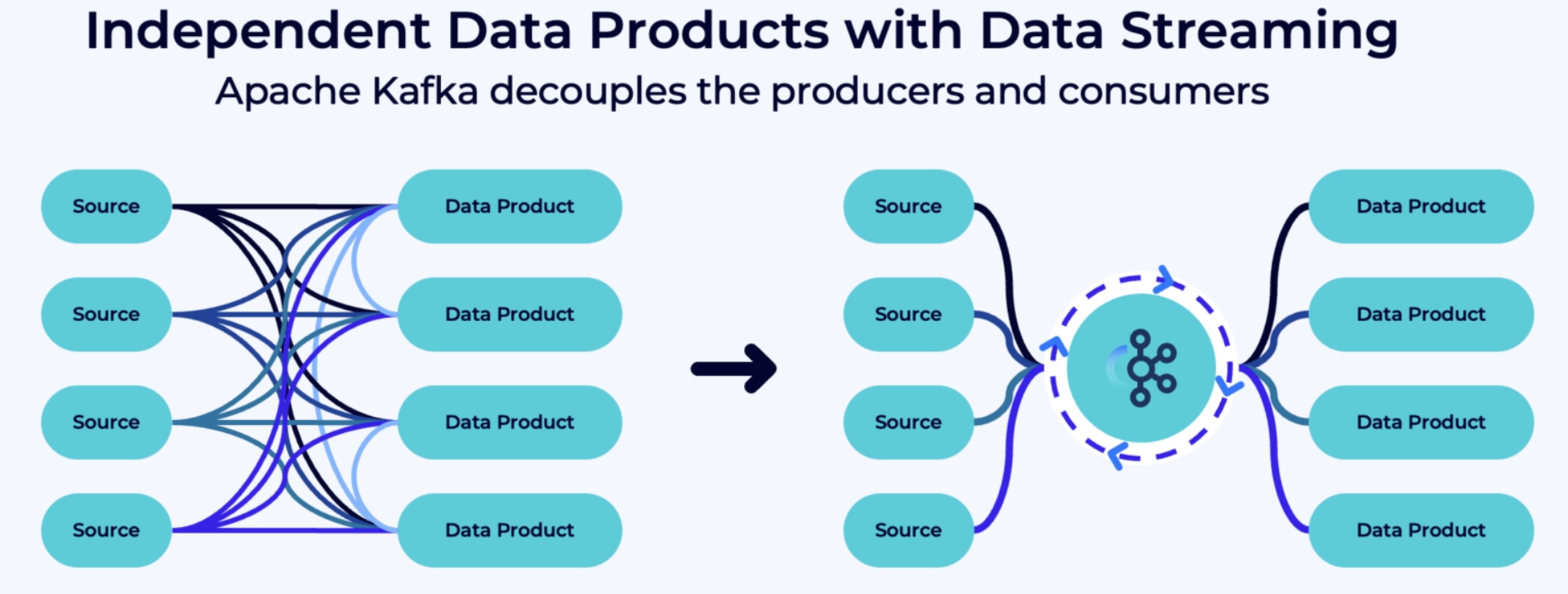

If storing all data in a single data lake/data warehouse/lakehouse AND Reverse ETL are anti-patterns, how can we solve it? The answer is simpler than you might think: move the processing “to the left” in the enterprise architecture!

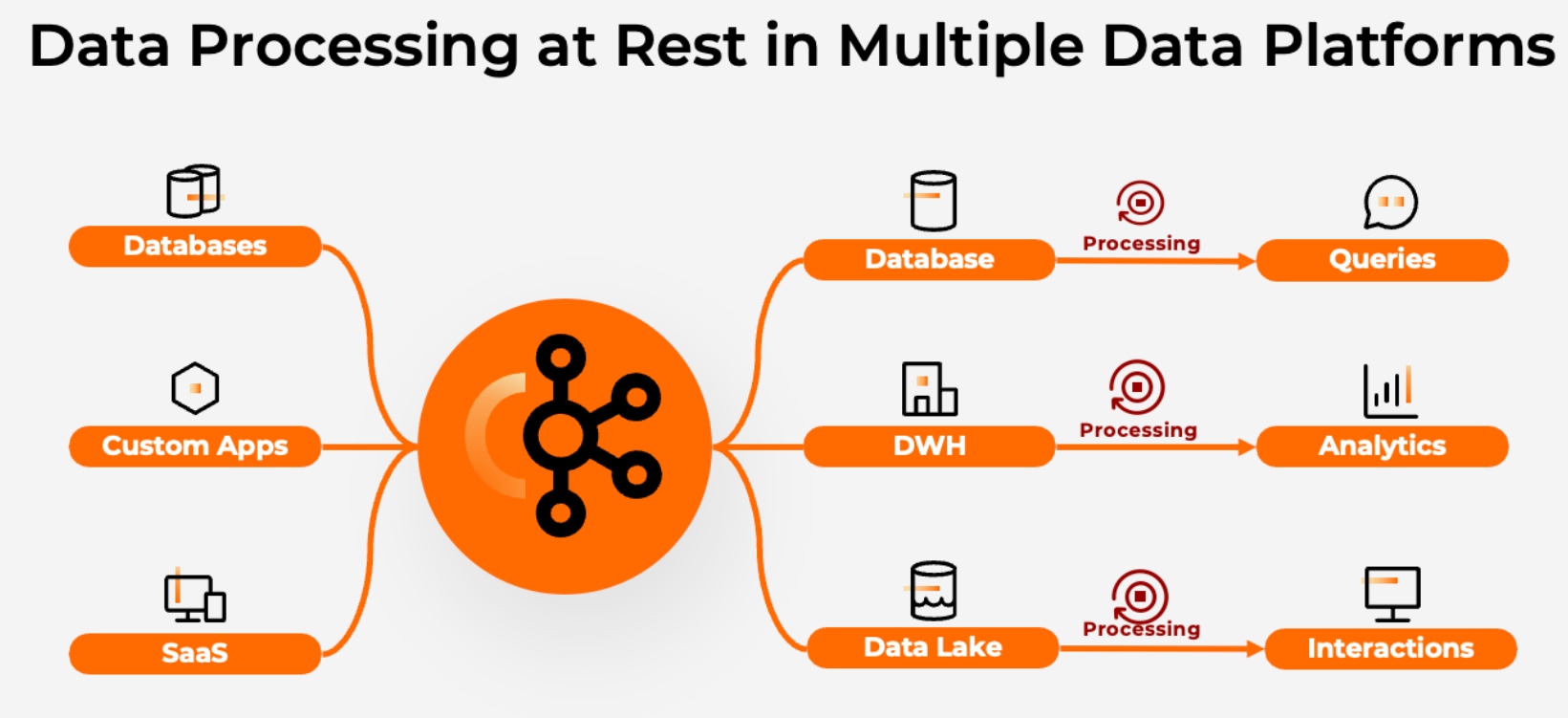

Most architectures ingest raw data into multiple data sinks, i.e., databases, warehouses, lakes, and other applications:

Source: Confluent

This has a number of drawbacks:

- Expensive and inefficient: The same data is cleaned, transformed, and enriched in multiple locations (often using legacy technologies).

- Varying degree of staleness: Every downstream system receives and processes the same data at different times or time intervals and provides slightly different semantics. This leads to inconsistencies and a degraded customer experience downstream.

- Complex and error-prone: The same business logic needs to be maintained in multiple places. Multiple processing engines need to be maintained and operated.

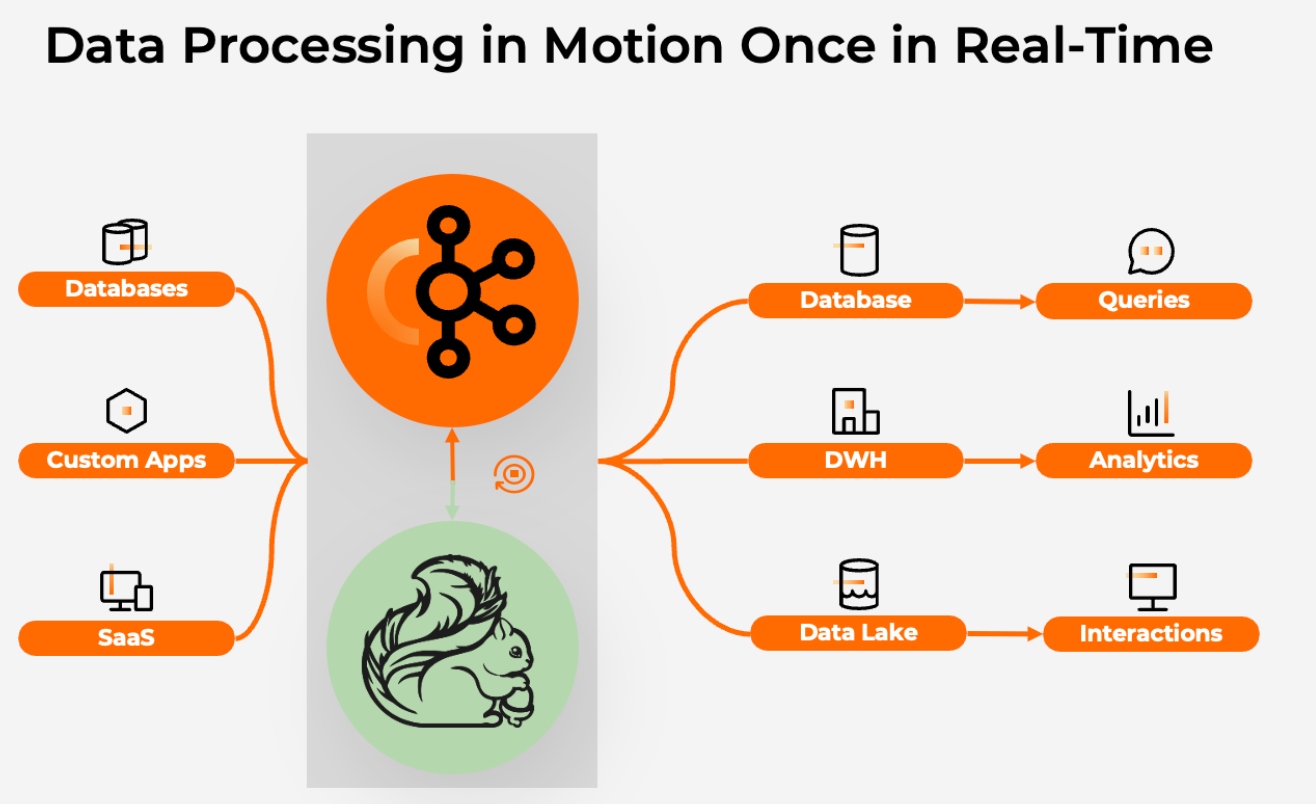

Hence, it’s much more consistent and cost-efficient to process data once in real time when it’s created. A data streaming platform with Apache Kafka and Flink is perfect for this:

Source: Confluent

With the shift-left approach providing a data product with good quality in the streaming layer, each business team can look at the price/performance of its most popular, frequent, or expensive queries and determine if they truly should live in the warehouse or if another solution is a better fit.

Processing the data with a data streaming platform “on the left side of the architecture” has various advantages:

- Cost-efficient: Data is only processed once in a single place, and it’s processed continuously, spreading the work over time.

- Fresh data everywhere: All applications are supplied with equally fresh data and represent the current state of your business.

- Reusable and consistent: Data is processed continuously and meets the latency requirements of the most demanding consumers, which further increases reusability.
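The “process once, share everywhere” idea can be sketched in a few lines: the cleaning logic runs a single time per event, and every sink receives the same curated record. This is a minimal in-memory illustration. The `clean` and `shift_left` functions, the field names, and the sink names are hypothetical and stand in for a Flink job writing to a governed Kafka topic.

```python
def clean(event: dict):
    """Shared transformation: drop incomplete records, normalize fields."""
    if event.get("amount") is None:
        return None
    return {
        "customer": event["customer"].strip().lower(),
        "amount": round(float(event["amount"]), 2),
    }


def shift_left(raw_events: list, sinks: dict) -> int:
    """Transform each event once, then fan the curated result out to all sinks.

    Returns the number of transformation calls for comparison.
    """
    calls = 0
    for event in raw_events:
        calls += 1
        curated = clean(event)
        if curated is not None:
            for sink in sinks.values():
                sink.append(curated)
    return calls


raw = [
    {"customer": " Alice ", "amount": "19.999"},
    {"customer": "BOB", "amount": None},       # incomplete, filtered out
    {"customer": "carol", "amount": "5"},
]
sinks = {"warehouse": [], "search": [], "realtime_app": []}

transform_calls = shift_left(raw, sinks)
# If every sink cleaned the raw data itself, the same logic would run
# len(raw) * len(sinks) times and could drift apart between systems.
per_sink_calls = len(raw) * len(sinks)
```

With three events and three sinks, the shared pipeline runs the transformation three times instead of nine, and all sinks hold identical records.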

Universal Data Products and Cost-Efficient Data Sharing in Real-Time AND Batch

This is just a primer for a discussion of its own. Kafka and Snowflake are complementary. However, the right integration and ingestion architecture can make a difference for your overall data architecture (not just the analytics with Snowflake). Hence, check out my in-depth article “Kappa Architecture is Mainstream Replacing Lambda” to understand why data streaming is much more than just ingestion into your cloud data warehouse.

Source: Confluent

Building (real-time or batch) data products in modern microservice and data mesh enterprise architectures is the future. Data streaming enforces good data quality and cost-efficient data processing (instead of “DBT’ing” everything again and again in Snowflake).

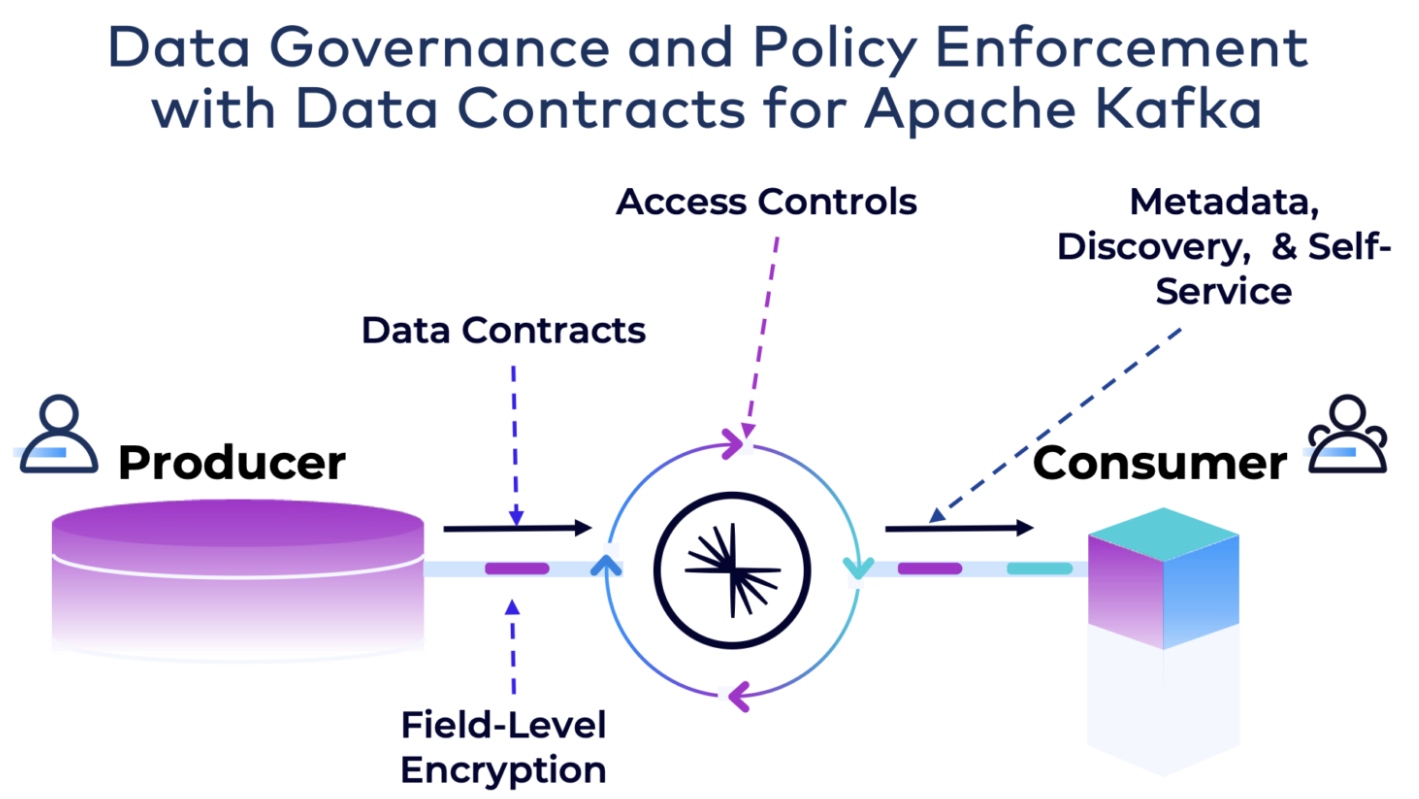

This gives companies options to disaggregate the compute layer, and perhaps shift left queries that really don’t need a purpose-built analytics engine, i.e., where a stream processor like Apache Flink can do the job. That reduces data replication and copies. Different teams can query that data with the query engine of choice, based on the key requirements for the workload. And good data quality is already built into the data product (i.e., the Kafka Topic) through data quality rules and policy enforcement (Topic Schema + rules engine).
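As a rough illustration of “Topic Schema + rules engine,” the following sketch validates each event against a schema and a set of quality rules before it is accepted into the data product. Everything here is a simplified assumption: `SCHEMA`, `RULES`, `validate`, and `route` are hypothetical names, and the dead-letter list is an added convention for rejected events, not something the article prescribes or a schema registry API.

```python
# Hypothetical schema of the data product: field name -> expected type.
SCHEMA = {"order_id": int, "customer_id": str, "amount": float}

# Hypothetical data quality rules, evaluated only on schema-valid events.
RULES = {
    "amount_is_positive": lambda e: e["amount"] > 0,
    "customer_id_not_empty": lambda e: len(e["customer_id"]) > 0,
}


def validate(event: dict) -> list:
    """Return a list of violations; an empty list means the event is accepted."""
    violations = []
    for field_name, expected_type in SCHEMA.items():
        if field_name not in event:
            violations.append(f"missing field: {field_name}")
        elif not isinstance(event[field_name], expected_type):
            violations.append(f"wrong type: {field_name}")
    if violations:
        return violations  # rules assume a schema-valid event
    return [name for name, rule in RULES.items() if not rule(event)]


def route(events: list):
    """Split events into the governed topic and a dead-letter list (assumption)."""
    topic, dead_letter = [], []
    for event in events:
        (dead_letter if validate(event) else topic).append(event)
    return topic, dead_letter


good = {"order_id": 1, "customer_id": "c-42", "amount": 9.5}
bad_rule = {"order_id": 2, "customer_id": "", "amount": -1.0}
bad_schema = {"order_id": "x", "customer_id": "c-7"}

topic, dead_letter = route([good, bad_rule, bad_schema])
```

Downstream consumers then read only from the validated topic and can focus on business logic instead of re-checking the data.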

The Need for Enterprise-Wide Data Governance Across Data Streaming With Apache Kafka and Lakehouses Like Snowflake

Enterprise-wide data governance plays a critical role in ensuring that organizations derive value from their data assets, maintain compliance with regulations, and mitigate risks associated with data management across diverse IT environments.

Most data platforms provide data governance capabilities like a data catalog, data lineage, self-service data portals, etc. This includes data warehouses, data lakes, lakehouses, and data streaming platforms.

In contrast, enterprise-wide data governance solutions look at the entire enterprise architecture. They collect information from all the different warehouses, lakes, and streaming platforms. If you apply the shift left strategy for consistent and cost-efficient data processing across real-time and batch platforms, then the data streaming layer MUST integrate with the enterprise-wide data governance solution. But frankly, you SHOULD integrate all platforms into the enterprise-wide governance view anyway, no matter if you shift some workloads to the left or not.

Today, most companies have built their own enterprise-wide governance suite (if they have one at all). In the future, more and more companies will adopt dedicated solutions like Collibra or Microsoft Purview. No matter what you choose, the data streaming governance layer needs to integrate with this enterprise-wide platform, ideally via direct integrations, but at least via APIs.

While the “shift left” approach disrupts the data teams that processed all data in analytics platforms like Snowflake or Databricks in the past, enterprise-wide governance ensures that the data teams are comfortable across different data platforms. The benefit is the unification of real-time and batch data and of operational and analytical workloads.

The Past, Present, and Future of Kafka and Snowflake Integration

Apache Kafka and Snowflake are complementary technologies with various integration options to build an end-to-end data pipeline with reporting and analytics in the cloud data warehouse or data cloud.

Real-time data beats slow data in almost all use cases. Some scenarios benefit from Snowpipe Streaming with Kafka Connect to build near-real-time use cases in Snowflake. Other use cases leverage stream processing for real-time analytics and operational use cases.

Independent Data Products with a Shift-Left Architecture and Data Governance

To ensure good data quality and cost-efficient data pipelines with data governance and policy enforcement in an enterprise architecture, a “shift left architecture” with data streaming is the best choice to build data products.

Source: Confluent

Instead of using DBT or similar tools to process data again and again at rest in Snowflake, consider pre-processing the data once in the streaming platform with technologies like Kafka Streams or Apache Flink and reduce the cost in Snowflake significantly. The consequence is that each data product consumes events of good data quality and can focus on building business logic instead of ETL. And you can choose your favorite analytical query engine per use case or business unit.

Apache Iceberg as Standard Table Format to Unify Operational and Analytical Workloads

Apache Iceberg and competing technologies like Apache Hudi or Databricks’ Delta Lake make the future integration between data streaming and data warehouses/data lakes even more interesting. I have already explored the adoption of Apache Iceberg as an open table format in between the streaming and analytics layer. Most vendors (including Confluent, Snowflake, and Amazon) already support Iceberg today.

How do you build data pipelines for Snowflake today? Already with Apache Kafka and a “shift left architecture”, or still with traditional ETL tools? What do you think about Snowflake’s Snowpipe Streaming? Or do you already go all in with Apache Iceberg? Let’s connect on LinkedIn and discuss it!