What Is Data Governance?

Data governance is a framework developed through the collaboration of people with various roles and responsibilities. This framework aims to establish processes, policies, procedures, standards, and metrics that help organizations achieve their goals. These goals include providing reliable data for business operations, establishing accountability and authoritativeness, developing accurate analytics to assess performance, complying with regulatory requirements, safeguarding data, ensuring data privacy, and supporting the data management life cycle.

Creating a Data Governance Board or Steering Committee is a good first step when implementing a Data Governance program and framework. An organization's governance framework should be circulated to all staff and management, so that everyone understands the changes taking place.

The basic principles needed to successfully govern data and analytics applications are:

- A focus on business values and the organization's goals

- An agreement on who is accountable for data and who makes decisions

- A model for Data Governance that emphasizes data curation and data lineage

- Decision-making that is transparent and includes ethical principles

- Data security and risk management as core governance components

- Ongoing training, with monitoring and feedback on its effectiveness

- Transforming the workplace into a collaborative culture, using Data Governance to encourage broad participation

What Is Data Integration?

Data integration is the process of combining and harmonizing data from multiple sources into a unified, coherent format that various users can consume for operational, analytical, and decision-making purposes.

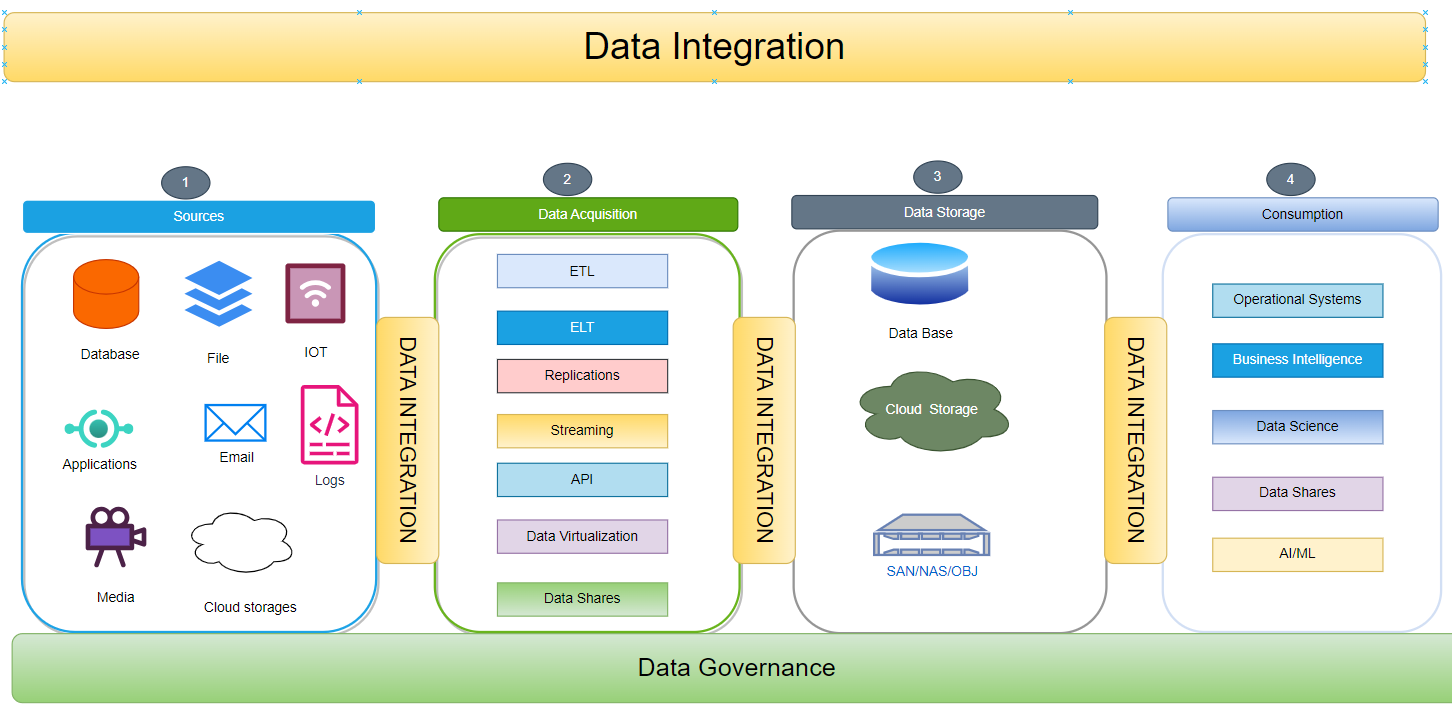

The data integration process consists of four main components:

1. Source Systems

Source systems, such as databases, file systems, Internet of Things (IoT) devices, media repositories, and cloud data storage, provide the raw information that must be integrated. Because these source systems are heterogeneous, the data they supply can be structured, semi-structured, or unstructured.

- Databases: Centralized or distributed repositories designed to store, organize, and manage structured data. Examples include relational database management systems (RDBMS) such as MySQL, PostgreSQL, and Oracle. Data is typically stored in tables with predefined schemas, ensuring consistency and ease of querying.

- File systems: Hierarchical structures that organize and store files and directories on disk drives or other storage media. Common file systems include NTFS (Windows), APFS (macOS), and EXT4 (Linux). Data can be of any type, including structured, semi-structured, or unstructured.

- Internet of Things (IoT) devices: Physical devices (sensors, actuators, etc.) that are embedded with electronics, software, and network connectivity. IoT devices collect, process, and transmit data, enabling real-time monitoring and control. Data generated by IoT devices can be structured (e.g., sensor readings), semi-structured (e.g., device configuration), or unstructured (e.g., video footage).

- Media repositories: Platforms or systems designed to manage and store various types of media files. Examples include content management systems (CMS) and digital asset management (DAM) systems. Data in media repositories can include images, videos, audio files, and documents.

- Cloud data storage: Services that provide on-demand storage and management of data online. Popular cloud data storage platforms include Amazon S3, Microsoft Azure Blob Storage, and Google Cloud Storage. Data in cloud storage can be accessed and processed from anywhere with an internet connection.

2. Data Acquisition

Data acquisition involves extracting and gathering information from source systems. Different methods can be employed depending on the nature of the source system and the specific requirements. These methods include batch processing with ETL (Extract, Transform, Load) or ELT (Extract, Load, Transform), APIs (Application Programming Interfaces), streaming, virtualization, data replication, and data sharing.

- Batch processes: Batch processes are commonly used for structured data. In this method, data is gathered over a period of time and processed in bulk. This approach is well suited to large datasets and helps maintain data consistency and integrity.

- Application Programming Interface (API): APIs serve as a communication channel between applications and data sources. They allow controlled and secure access to data. APIs are commonly used to integrate with third-party systems and enable data exchange.

- Streaming: Streaming involves continuous data ingestion and processing. It is commonly used for real-time data sources such as sensor networks, social media feeds, and financial markets. Streaming technologies enable immediate analysis and decision-making based on the latest data.

- Virtualization: Data virtualization provides a logical view of data without physically moving or copying it. It enables seamless access to data from multiple sources, regardless of their location or format. Virtualization is often used for data integration and for reducing data silos.

- Data replication: Data replication involves copying data from one system to another. It enhances data availability and redundancy. Replication can be synchronous, where each change is written to the replica before the write is acknowledged, or asynchronous, where changes are copied afterwards with some lag (for example, at regular intervals).

- Data sharing: Data sharing involves granting authorized users or systems access to data. It facilitates collaboration, enables insights from multiple perspectives, and supports informed decision-making. Data sharing can be implemented through various mechanisms such as data portals, data lakes, and federated databases.
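To make the contrast between batch and streaming acquisition concrete, here is a minimal Python sketch that computes the same per-sensor averages both ways. The sensor readings and function names are hypothetical, and a plain list stands in for a real source system; it illustrates the two processing styles, not a production ingestion pipeline.

```python
from typing import Iterable, Iterator

# Hypothetical sensor readings (sensor_id, value); a list stands in for a source system.
READINGS = [("s1", 10.0), ("s2", 4.0), ("s1", 14.0), ("s2", 6.0)]


def batch_average(readings: Iterable[tuple[str, float]]) -> dict[str, float]:
    """Batch style: gather the whole dataset first, then process it in bulk."""
    collected = list(readings)  # the full batch is materialized before any processing
    groups: dict[str, list[float]] = {}
    for sensor, value in collected:
        groups.setdefault(sensor, []).append(value)
    return {sensor: sum(vals) / len(vals) for sensor, vals in groups.items()}


def streaming_average(readings: Iterable[tuple[str, float]]) -> Iterator[dict[str, float]]:
    """Streaming style: update running state per record and emit a fresh result each time."""
    sums: dict[str, float] = {}
    counts: dict[str, int] = {}
    for sensor, value in readings:
        sums[sensor] = sums.get(sensor, 0.0) + value
        counts[sensor] = counts.get(sensor, 0) + 1
        yield {s: sums[s] / counts[s] for s in sums}
```

The batch version produces one answer after all data has arrived, while the streaming version emits an updated answer after every record, which is what enables decisions based on the latest data.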

3. Data Storage

After data acquisition, storing the data in a repository is crucial for efficient access and management. Various data storage options are available, each tailored to specific needs. These options include:

- Database Management Systems (DBMS): Relational Database Management Systems (RDBMS) are software systems designed to organize, store, and retrieve data in a structured format. These systems offer advanced features such as data security, data integrity, and transaction management. Examples of popular RDBMS include MySQL, Oracle, and PostgreSQL. NoSQL databases, such as MongoDB and Cassandra, are designed to store and manage semi-structured data. They offer flexibility and scalability, making them suitable for handling large amounts of data that may not fit well into a relational model.

- Cloud storage services: Cloud storage services offer scalable and cost-effective storage solutions in the cloud. They provide on-demand access to data from anywhere with an internet connection. Popular cloud storage services include Amazon S3, Microsoft Azure Storage, and Google Cloud Storage.

- Data lakes: Data lakes are large repositories that hold raw data, including unstructured data, in its native format. They are often used for big data analytics and machine learning. Data lakes can be implemented using the Hadoop Distributed File System (HDFS) or cloud-based storage services.

- Delta lakes: Delta Lake is an open-source storage layer that runs on top of a data lake and adds support for ACID transactions and schema evolution. It provides a reliable and scalable data storage solution for data engineering and analytics workloads.

- Cloud data warehouses: Cloud data warehouses are cloud-based data storage solutions designed for business intelligence and analytics. They provide fast query performance and scalability for large volumes of structured data. Examples include Amazon Redshift, Google BigQuery, and Snowflake.

- Big data file formats: These are file formats designed to store large datasets efficiently, often spread across many files. They are commonly used for data analysis and processing tasks. Common big data file formats include Apache Parquet, Apache Avro, and Apache ORC.

- On-premises Storage Area Networks (SAN): SANs are dedicated high-speed networks designed for data storage. They offer fast data transfer speeds and provide centralized storage for multiple servers. SANs are often used in enterprise environments with large storage requirements.

- Network Attached Storage (NAS): NAS devices are file-level storage systems that connect to a network and provide shared storage space for multiple clients. They are often used in small and medium-sized businesses and provide easy access to data from various devices.

Choosing the right data storage option depends on factors such as data size, data type, performance requirements, security needs, and cost considerations. Organizations may use a combination of these storage options to meet their specific data management needs.
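The transaction management offered by relational databases can be sketched with Python's built-in `sqlite3` module, used here as a small stand-in for an RDBMS. The `accounts` table and the `transfer` function are hypothetical; the point is that the debit and the credit either both commit or both roll back.

```python
import sqlite3


def transfer(conn: sqlite3.Connection, src: str, dst: str, amount: int) -> None:
    """Move `amount` between two accounts atomically; roll back on any error."""
    with conn:  # commits if the block succeeds, rolls back if an exception escapes
        conn.execute("UPDATE accounts SET balance = balance - ? WHERE id = ?", (amount, src))
        (balance,) = conn.execute("SELECT balance FROM accounts WHERE id = ?", (src,)).fetchone()
        if balance < 0:
            # Raising here undoes the debit above as part of the rollback.
            raise ValueError("insufficient funds")
        conn.execute("UPDATE accounts SET balance = balance + ? WHERE id = ?", (amount, dst))


# Hypothetical demo database held in memory.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE accounts (id TEXT PRIMARY KEY, balance INTEGER NOT NULL)")
conn.executemany("INSERT INTO accounts VALUES (?, ?)", [("a", 100), ("b", 0)])
conn.commit()
```

Using the connection as a context manager commits when the block succeeds and rolls back when an exception escapes, so a failed transfer leaves both balances untouched.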

4. Consumption

This is the final stage of the data integration lifecycle, where the integrated data is consumed by various applications, data analysts, business analysts, data scientists, AI/ML models, and business processes. The data can be consumed in various forms and through various channels, including:

- Operational systems: The integrated data can be consumed by operational systems through APIs (Application Programming Interfaces) to support day-to-day operations and decision-making. For example, a customer relationship management (CRM) system might consume data about customer interactions, purchases, and preferences to provide personalized experiences and targeted marketing campaigns.

- Analytics: The integrated data can be consumed by analytics applications and tools for data exploration, analysis, and reporting. Data analysts and business analysts use these tools to identify trends, patterns, and insights in the data, which can help inform business decisions and strategies.

- Data sharing: The integrated data can be shared with external stakeholders, such as partners, suppliers, and regulators, through data-sharing platforms and mechanisms. Data sharing enables organizations to collaborate and exchange information, which can lead to improved decision-making and innovation.

- Kafka: Apache Kafka is a distributed streaming platform that can be used to consume and process real-time data. Integrated data can be streamed into Kafka, where it can be consumed by applications and services that require real-time data processing capabilities.

- AI/ML: The integrated data can be consumed by AI (Artificial Intelligence) and ML (Machine Learning) models for training and inference. AI/ML models use the data to learn patterns and make predictions, which can be applied to tasks such as image recognition, natural language processing, and fraud detection.

Consuming integrated data empowers businesses to make informed decisions, optimize operations, improve customer experiences, and drive innovation. By providing a unified and consistent view of data, organizations can unlock the full potential of their data assets and gain a competitive advantage.

What Are Data Integration Architecture Patterns?

In this section, we'll explore a range of integration patterns, each designed to provide seamless integration. These patterns act as structured frameworks that facilitate connections and data exchange between diverse systems. Broadly, they fall into three categories:

- Real-Time Data Integration

- Near Real-Time Data Integration

- Batch Data Integration

1. Real-Time Data Integration

Real-time data ingestion plays a pivotal role in many industries. Here are some practical, real-life examples of its applications:

- Social media feeds display the latest posts, trends, and activities.

- Smart homes use real-time data to automate tasks.

- Banks use real-time data to monitor transactions and investments.

- Transportation companies use real-time data to optimize delivery routes.

- Online retailers use real-time data to personalize shopping experiences.

Understanding real-time data ingestion mechanisms and architectures is essential for choosing the best approach for your organization.

There is a range of real-time data integration architectures to choose from. The most commonly used are:

- Streaming-Based Architecture

- Event-Driven Integration Architecture

- Lambda Architecture

- Kappa Architecture

Each of these architectures offers its own advantages and use cases, catering to specific requirements and operational needs.

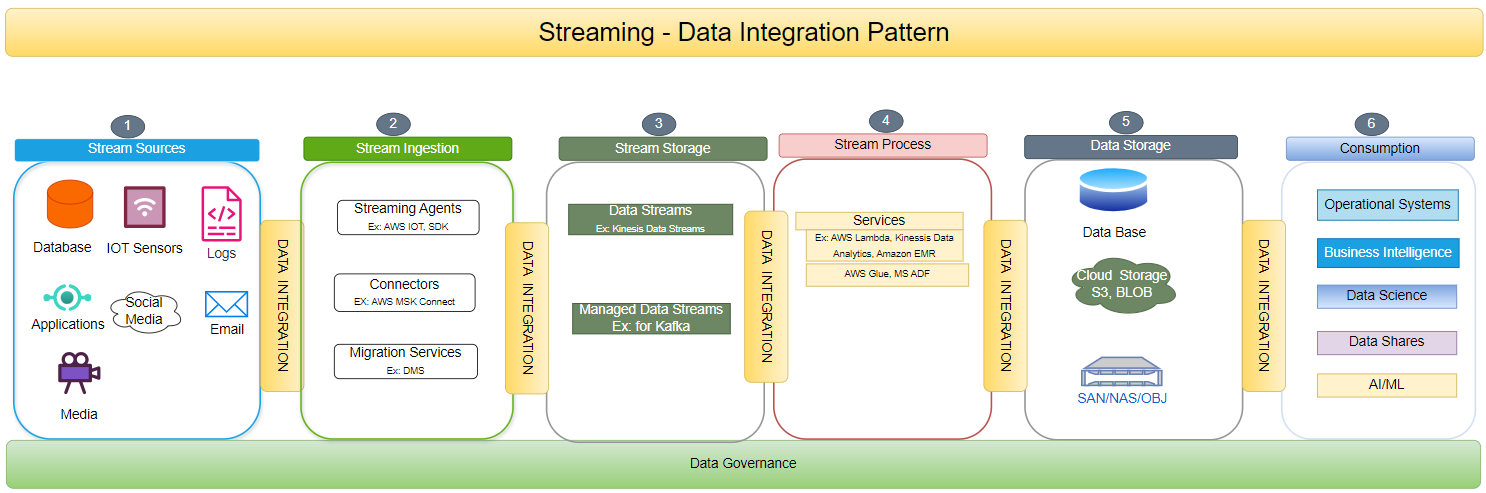

a. Streaming-Based Data Integration Architecture

In a streaming-based architecture, data is ingested continuously as it arrives rather than being collected into periodic batches. Tools like Apache Kafka are used for real-time data collection, processing, and distribution.

This architecture is well suited to high-velocity, high-volume data, delivering low-latency insights while data-quality checks are applied as records flow through the pipeline.
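A minimal Python sketch of stream processing, assuming time-ordered click events: a tumbling-window counter emits each window's result as soon as the window closes instead of waiting for a scheduled batch. A plain iterable stands in for a Kafka consumer, the event shape is hypothetical, and late or out-of-order events are not handled.

```python
from collections import Counter
from typing import Iterable, Iterator

# Hypothetical event shape: (timestamp_in_seconds, page).
Event = tuple[int, str]


def tumbling_window_counts(
    events: Iterable[Event], window_s: int
) -> Iterator[tuple[int, dict[str, int]]]:
    """Count events per page in fixed, non-overlapping windows.

    Assumes events arrive in timestamp order (no late-data handling).
    """
    current_window = None
    counts: Counter = Counter()
    for ts, page in events:
        if not page:  # simple in-flight data-quality check: drop malformed records
            continue
        window_start = ts - ts % window_s
        if current_window is not None and window_start != current_window:
            yield current_window, dict(counts)  # window closed: emit its result immediately
            counts = Counter()
        current_window = window_start
        counts[page] += 1
    if current_window is not None:
        yield current_window, dict(counts)  # flush the last window when the input ends
```

Results become available window by window while the stream is still flowing, which is the low-latency property the streaming architecture is chosen for.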

The diagram below illustrates the various components involved in a streaming data integration architecture.

b. Event-Driven Integration Architecture

An event-driven architecture is a highly scalable and efficient approach for modern applications and microservices. This architecture responds to specific events or triggers within a system by ingesting data as the events occur, enabling the system to react quickly to changes. This allows large volumes of data from various sources to be handled efficiently.
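The publish/subscribe mechanics behind an event-driven architecture can be sketched in a few lines of Python. `EventBus`, the `order_placed` event, and both handlers are hypothetical; in a real system a message broker would sit between producers and consumers, and handlers would typically run asynchronously.

```python
from collections import defaultdict
from typing import Any, Callable

Handler = Callable[[dict[str, Any]], None]


class EventBus:
    """Minimal in-process publish/subscribe bus (a stand-in for a message broker)."""

    def __init__(self) -> None:
        self._handlers: defaultdict = defaultdict(list)

    def subscribe(self, event_type: str, handler: Handler) -> None:
        self._handlers[event_type].append(handler)

    def publish(self, event_type: str, payload: dict[str, Any]) -> None:
        # Every subscriber reacts as soon as the event occurs; there is no polling schedule.
        for handler in self._handlers[event_type]:
            handler(payload)


# Hypothetical consumers: an inventory service and an audit log react to the same event.
inventory = {"sku-1": 5}
audit_log: list[str] = []


def reserve_stock(event: dict[str, Any]) -> None:
    inventory[event["sku"]] -= event["qty"]


def record_audit(event: dict[str, Any]) -> None:
    audit_log.append(f"order {event['order_id']} placed")


bus = EventBus()
bus.subscribe("order_placed", reserve_stock)
bus.subscribe("order_placed", record_audit)
```

Producers only publish events and never call consumers directly, so new consumers can be added by subscribing without changing the producer.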

c. Lambda Integration Architecture

The Lambda architecture takes a hybrid approach that combines the strengths of batch and real-time data ingestion. It runs two parallel data pipelines, each with a distinct purpose: the batch layer processes historical data, while the speed layer handles real-time data, and queries are answered by merging the results of both. This design delivers low-latency insights while preserving data accuracy and consistency, even in large distributed systems.

d. Kappa Data Integration Architecture

Kappa architecture is a simplified variation of the Lambda architecture designed specifically for real-time data processing. It uses a single stream processing engine, such as Apache Flink or Apache Kafka Streams, to handle both historical and real-time data; historical data is reprocessed by replaying the retained event log through the same engine. This streamlines the data ingestion pipeline and reduces complexity and maintenance costs while still delivering fast, accurate insights.
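To illustrate the Kappa idea under simplifying assumptions, the sketch below uses one processing function for both live consumption and full reprocessing. `EventLog` is a hypothetical in-memory stand-in for a retained, replayable log such as a Kafka topic, and the revenue-per-customer job is invented for the example.

```python
from typing import Iterable, Iterator, Optional


class EventLog:
    """Append-only, replayable log (stand-in for a retained stream such as a Kafka topic)."""

    def __init__(self) -> None:
        self._events: list[dict] = []

    def append(self, event: dict) -> None:
        self._events.append(event)

    def replay(self, from_offset: int = 0) -> Iterator[dict]:
        return iter(self._events[from_offset:])

    def __len__(self) -> int:
        return len(self._events)


def process(events: Iterable[dict], state: Optional[dict[str, float]] = None) -> dict[str, float]:
    """The single stream-processing job: running revenue per customer."""
    state = {} if state is None else state
    for event in events:
        state[event["customer"]] = state.get(event["customer"], 0.0) + event["amount"]
    return state


log = EventLog()
log.append({"customer": "c1", "amount": 10.0})
log.append({"customer": "c2", "amount": 5.0})
live = process(log.replay())  # normal streaming path

offset = len(log)  # remember where live consumption stopped
log.append({"customer": "c1", "amount": 2.5})
live = process(log.replay(offset), live)  # keep consuming new events from that offset

rebuilt = process(log.replay())  # reprocessing: replay the same log from offset 0
```

Because there is only one code path, changing the logic means deploying a new version of the same job and replaying the log, rather than maintaining separate batch and streaming implementations.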

2. Near Real-Time Data Integration

In near real-time data integration, data is processed and made available shortly after it is generated, which is important for applications that require timely data updates. Several patterns are used for near real-time data integration; some of them are highlighted below:

a. Change Data Capture Integration Architecture

Change Data Capture (CDC) is a method of capturing the changes (inserts, updates, and deletes) made to a source system's data and propagating only those changes to a target system, often by reading the source database's transaction log.
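One simple way to capture changes is to diff two snapshots of a source table by primary key, as in the Python sketch below. This snapshot-comparison variant is chosen for brevity; many production CDC tools instead read the database transaction log. The table contents and function names are hypothetical.

```python
from typing import Any, Optional

Row = dict[str, Any]
Change = tuple[str, int, Optional[Row]]  # (operation, primary key, new row or None)


def capture_changes(before: dict[int, Row], after: dict[int, Row]) -> list[Change]:
    """Diff two snapshots of a source table keyed by primary key."""
    changes: list[Change] = []
    for key, row in after.items():
        if key not in before:
            changes.append(("insert", key, row))
        elif before[key] != row:
            changes.append(("update", key, row))
    for key in sorted(before.keys() - after.keys()):
        changes.append(("delete", key, None))
    return changes


def apply_changes(target: dict[int, Row], changes: list[Change]) -> None:
    """Propagate only the captured changes to the target, not the full table."""
    for op, key, row in changes:
        if op == "delete":
            target.pop(key, None)
        else:  # inserts and updates both upsert the new row
            target[key] = row


# Hypothetical source snapshots taken at two points in time.
source_v1 = {1: {"name": "Ann"}, 2: {"name": "Bob"}}
source_v2 = {2: {"name": "Robert"}, 3: {"name": "Cy"}}
```

Only three change records travel to the target here, regardless of how large the unchanged part of the table is.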

b. Data Replication Integration Architecture

In a Data Replication Integration Architecture, data is replicated between two databases according to specific requirements. This architecture keeps the target database in sync with the source database, so both systems hold up-to-date, consistent data, and it allows data to be transferred and synchronized efficiently between the two databases.
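The difference between synchronous and asynchronous replication can be sketched with a toy in-memory primary and replica. `Primary` and `ship_pending` are hypothetical names; real databases implement this with write-ahead logs and network acknowledgements, not Python dictionaries.

```python
from collections import deque
from typing import Any


class Primary:
    """Toy key-value primary that replicates every write to a replica."""

    def __init__(self, replica: dict, synchronous: bool) -> None:
        self.data: dict = {}
        self.replica = replica
        self.synchronous = synchronous
        self._pending: deque = deque()  # replication backlog, used only in async mode

    def write(self, key: Any, value: Any) -> None:
        self.data[key] = value
        if self.synchronous:
            self.replica[key] = value  # replica is updated before the write returns
        else:
            self._pending.append((key, value))  # write returns now; shipped later

    def ship_pending(self) -> None:
        """Async mode: drain the backlog (e.g. on a timer or background thread)."""
        while self._pending:
            key, value = self._pending.popleft()
            self.replica[key] = value
```

Synchronous mode keeps the replica current at the cost of slower writes; asynchronous mode returns immediately but leaves a window of replication lag until the backlog is shipped.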

c. Data Virtualization Integration Architecture

In data virtualization, a virtual layer integrates disparate data sources into a unified view. It avoids physically replicating the data, dynamically routes queries to the source systems based on factors such as data locality and performance, and provides a unified metadata layer. The virtual layer simplifies data management, can improve query performance, and facilitates data governance and advanced integration scenarios, helping organizations use their data assets effectively.
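A minimal sketch of a virtual layer in Python, assuming two hypothetical sources: an in-memory SQLite database standing in for a CRM and a list standing in for an orders API. The layer only delegates reads to each source at query time; it does not implement the cost-based routing, query pushdown, or metadata catalog a real virtualization product would provide.

```python
import sqlite3
from typing import Callable, Iterable

# Hypothetical source systems.
crm = sqlite3.connect(":memory:")
crm.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT)")
crm.executemany("INSERT INTO customers VALUES (?, ?)", [(1, "Ann"), (2, "Bob")])
orders_api = [
    {"customer_id": 1, "total": 40},
    {"customer_id": 1, "total": 10},
    {"customer_id": 2, "total": 5},
]


class VirtualLayer:
    """Exposes named virtual tables; each read is delegated to the owning source."""

    def __init__(self) -> None:
        self._sources: dict[str, Callable[[], Iterable[dict]]] = {}

    def register(self, name: str, fetch: Callable[[], Iterable[dict]]) -> None:
        self._sources[name] = fetch

    def scan(self, name: str) -> Iterable[dict]:
        return self._sources[name]()  # fetched live; nothing is copied or stored by the layer


layer = VirtualLayer()
layer.register(
    "customers",
    lambda: ({"id": i, "name": n} for i, n in crm.execute("SELECT id, name FROM customers")),
)
layer.register("orders", lambda: iter(orders_api))


def revenue_by_customer(layer: VirtualLayer) -> dict[str, int]:
    """A federated query that joins two sources through the unified view."""
    names = {c["id"]: c["name"] for c in layer.scan("customers")}
    totals: dict[str, int] = {}
    for order in layer.scan("orders"):
        name = names[order["customer_id"]]
        totals[name] = totals.get(name, 0) + order["total"]
    return totals
```

Because every query reads the sources directly, a change in a source system is visible on the next query without any replication step.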

3. Batch Data Integration

Batch data integration involves consolidating a group of messages or records and transferring them together as a batch, which minimizes network traffic and overhead. Batch processing gathers data over a period of time and then processes it in batches. This approach is especially beneficial when dealing with large data volumes or when processing demands substantial resources. This pattern also enables master data to be replicated to replica storage for analytical purposes. Another advantage is that only the refined, processed results need to be transmitted. The traditional batch data integration patterns are:

a. Traditional ETL Integration Architecture

This architecture follows the classic Extract, Transform, and Load (ETL) process. It consists of three stages:

- Extract: Data is obtained from a variety of source systems.

- Transform: Data is transformed into the desired format, for example by cleansing, standardizing, and aggregating it.

- Load: The transformed data is loaded into the designated target system, such as a data warehouse.
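The three ETL stages can be sketched in Python with only the standard library. The CSV content, the `fact_orders` table, and the quality rule are hypothetical, and an in-memory SQLite database stands in for the data warehouse.

```python
import csv
import io
import sqlite3

# Hypothetical extract: a CSV export from an operational system.
SOURCE_CSV = """order_id,amount,currency,country
1,19.99,usd,us
2,5.00,eur,de
3,,usd,us
"""


def extract(raw: str) -> list[dict[str, str]]:
    """Extract: read raw records from the source."""
    return list(csv.DictReader(io.StringIO(raw)))


def transform(rows: list[dict[str, str]]) -> list[tuple[int, int, str, str]]:
    """Transform: validate, convert types, and standardize codes."""
    out = []
    for r in rows:
        if not r["amount"]:  # drop records that fail a basic quality rule
            continue
        cents = round(float(r["amount"]) * 100)  # store money as integer cents
        out.append((int(r["order_id"]), cents, r["currency"].upper(), r["country"].upper()))
    return out


def load(conn: sqlite3.Connection, rows: list[tuple[int, int, str, str]]) -> None:
    """Load: write the transformed rows into the target table in one transaction."""
    with conn:
        conn.execute(
            "CREATE TABLE IF NOT EXISTS fact_orders "
            "(order_id INTEGER PRIMARY KEY, amount_cents INTEGER, currency TEXT, country TEXT)"
        )
        conn.executemany("INSERT INTO fact_orders VALUES (?, ?, ?, ?)", rows)


warehouse = sqlite3.connect(":memory:")
load(warehouse, transform(extract(SOURCE_CSV)))
```

The transformation runs before anything reaches the target, which is what distinguishes ETL from ELT, where raw data is loaded first and transformed inside the target system.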

b. Incremental Batch Processing Integration Architecture

This architecture optimizes processing by handling only the data that is new or changed since the previous batch cycle. This approach is more efficient than full batch processing and reduces the load on the system's resources.
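A common way to implement incremental batches is a high-water mark: each cycle extracts only the rows whose `updated_at` value is greater than the last value seen. The `orders` table and the integer timestamps below are assumptions for illustration.

```python
import sqlite3


def incremental_extract(conn: sqlite3.Connection, watermark: int) -> tuple[list[tuple], int]:
    """Return rows changed since `watermark` and the watermark for the next cycle."""
    rows = conn.execute(
        "SELECT id, status, updated_at FROM orders WHERE updated_at > ? ORDER BY updated_at",
        (watermark,),
    ).fetchall()
    new_watermark = rows[-1][2] if rows else watermark  # keep the old mark if nothing changed
    return rows, new_watermark


# Hypothetical source table with integer timestamps.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, status TEXT, updated_at INTEGER)")
conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", [(1, "new", 100), (2, "new", 105)])

first, watermark = incremental_extract(conn, 0)  # initial cycle picks up both rows
conn.execute("UPDATE orders SET status = 'shipped', updated_at = 110 WHERE id = 1")
conn.execute("INSERT INTO orders VALUES (3, 'new', 112)")
second, watermark = incremental_extract(conn, watermark)  # only the changed and new rows
```

A simple watermark can miss rows whose timestamps are committed out of order, which is one reason log-based change data capture is often preferred for high-volume sources.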

c. Micro-Batch Processing Integration Architecture

In micro-batch processing, small batches of data are processed at regular, frequent intervals. This strikes a balance between traditional batch processing and real-time processing, significantly reducing latency compared with conventional batch processing.
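A micro-batch loop can be sketched as: wake up at a fixed interval, drain whatever has arrived (up to a size cap), and process it as one small batch. The function name and parameters are hypothetical, and the loop is bounded by `ticks` only so the example terminates.

```python
import queue
import time
from typing import Any, Callable


def run_micro_batches(
    inbox: queue.Queue,
    process: Callable[[list[Any]], None],
    interval_s: float,
    max_batch: int,
    ticks: int,
) -> None:
    """Every `interval_s` seconds, drain up to `max_batch` queued records and process them together."""
    for _ in range(ticks):  # bounded for illustration; a real job loops until stopped
        time.sleep(interval_s)
        batch: list[Any] = []
        while len(batch) < max_batch:
            try:
                batch.append(inbox.get_nowait())
            except queue.Empty:
                break
        if batch:  # skip empty ticks
            process(batch)
```

Latency is bounded by roughly one interval plus processing time, while each call to `process` still gets the efficiency of handling several records at once.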

d. Partitioned Batch Processing Integration Architecture

In partitioned batch processing, large datasets are divided into smaller, manageable partitions. These partitions can then be processed independently, often in parallel. This can reduce processing time significantly, making it an attractive choice for handling large-scale data.
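The partition-then-process idea can be sketched in Python: records are hash-partitioned by key so each key lands in exactly one partition, partitions are aggregated independently on a thread pool, and the partial results are merged. Names and data are hypothetical.

```python
import zlib
from collections import Counter
from concurrent.futures import ThreadPoolExecutor

Record = tuple[str, int]


def partition(records: list[Record], n: int) -> list[list[Record]]:
    """Split records into n partitions by a stable hash of the key."""
    parts: list[list[Record]] = [[] for _ in range(n)]
    for key, value in records:
        parts[zlib.crc32(key.encode()) % n].append((key, value))
    return parts


def process_partition(part: list[Record]) -> Counter:
    """Aggregate one partition independently of all the others."""
    totals: Counter = Counter()
    for key, value in part:
        totals[key] += value
    return totals


def partitioned_totals(records: list[Record], n_partitions: int = 4) -> dict[str, int]:
    with ThreadPoolExecutor(max_workers=n_partitions) as pool:
        partials = list(pool.map(process_partition, partition(records, n_partitions)))
    merged: Counter = Counter()
    for partial in partials:
        merged.update(partial)  # keys are disjoint across partitions, so this is a simple union
    return dict(merged)
```

Threads keep the sketch short; in CPython, CPU-bound partition work would usually go to `ProcessPoolExecutor` or a distributed engine to get true parallelism.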

Conclusion

Here are the key points to take away from this article:

- It is important to have a strong data governance framework in place when integrating data from different source systems.

- Data integration patterns should be chosen based on the use case and its characteristics, such as volume, velocity, and veracity.

- There are three styles of data integration (real-time, near real-time, and batch), and the appropriate one should be chosen based on these parameters.