In today’s world, data is a key success factor for many information systems. To be useful, data must be collected from and moved between many different locations, using many different technologies and tools.

It is important to understand the difference between a data pipeline and an ETL pipeline. While both are designed to move data from one place to another, they serve different purposes and are optimized for different tasks. The comparison table below highlights the key differences:

Comparison Table

| Feature | Data Pipeline | ETL Pipeline |
| --- | --- | --- |
| Processing Mode | Real-time or near-real-time processing | Batch processing at scheduled intervals |
| Flexibility | Highly flexible with various data formats | Less flexible, designed for specific data sources |
| Complexity | Complex to set up and maintain due to real-time processing and continuous monitoring | Complex during transformation but easier in batch mode |
| Scalability | Easily scalable for streaming data | Scalable but resource-intensive for large batch tasks |
| Use Cases | Real-time analytics, event-driven applications | Data warehousing, historical data analysis |

What Is a Data Pipeline?

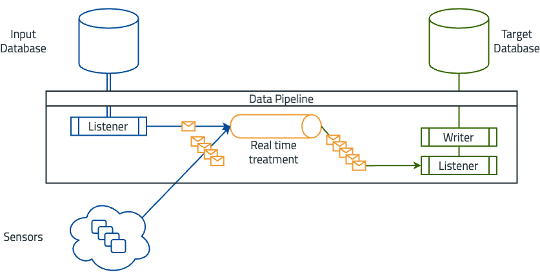

A data pipeline is a systematic process for transferring data from one system to another, often in real time or near real time. It enables the continuous flow and processing of data between systems. The process involves collecting data from multiple sources, processing it as it moves through the pipeline, and delivering it to target systems.

Data pipelines are designed to handle the seamless integration and flow of data across different platforms and applications. They play a crucial role in modern data architectures by enabling real-time analytics, data synchronization, and event-driven processing. By automating data movement and transformation, data pipelines help organizations maintain data consistency and reliability, reduce latency, and ensure that data is always available for critical business operations and decision-making.

Data pipelines manage data from a variety of sources, including:

- Databases

- APIs

- Files

- IoT devices

Processing

Data pipelines can process data in real time or near real time. This involves cleaning, enriching, and structuring the data as it flows through the pipeline. For example, streaming data from IoT devices may require real-time aggregation and filtering before it’s ready for analysis or storage.
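The filtering and aggregation step described above can be sketched with plain Python generators. This is a minimal illustration, not a production stream processor: the field names (`sensor`, `temp_c`), the plausibility range, and the count-based window are all assumptions made for the example, and the window logic assumes readings from a single sensor.

```python
from statistics import mean
from typing import Dict, Iterable, Iterator, List


def filter_valid(readings: Iterable[Dict]) -> Iterator[Dict]:
    """Drop readings with a missing or implausible temperature (assumed range)."""
    for r in readings:
        value = r.get("temp_c")
        if value is not None and -50.0 <= value <= 150.0:
            yield r


def window_average(readings: Iterable[Dict], size: int) -> Iterator[Dict]:
    """Emit one averaged record per `size` consecutive readings (tumbling window)."""
    window: List[Dict] = []
    for r in readings:
        window.append(r)
        if len(window) == size:
            yield {
                "sensor": window[0]["sensor"],
                "avg_temp_c": mean(x["temp_c"] for x in window),
                "count": size,
            }
            window = []  # start the next window from scratch


# Hypothetical stream from one sensor, including two bad readings.
stream = [
    {"sensor": "s1", "temp_c": 20.0},
    {"sensor": "s1", "temp_c": None},
    {"sensor": "s1", "temp_c": 22.0},
    {"sensor": "s1", "temp_c": 999.0},
    {"sensor": "s1", "temp_c": 24.0},
    {"sensor": "s1", "temp_c": 26.0},
]
aggregated = list(window_average(filter_valid(stream), size=2))
```

Because both stages are generators, each reading is handled as it arrives rather than after the whole input has been collected, which is the property that separates this style from a batch job.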

Delivery

The final stage of a data pipeline is to deliver the processed data to its target systems, such as databases, data lakes, or real-time analytics platforms. This step ensures that the data is immediately accessible to multiple applications and provides instant insights that enable rapid decision making.
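One way to picture delivery to several targets is a simple fan-out, sketched below. The sinks here are in-memory stand-ins and `broken_sink` is a hypothetical failing target; a real pipeline would write to actual databases or platforms and would likely add retries.

```python
from typing import Callable, Dict, List

Sink = Callable[[Dict], None]


def deliver(record: Dict, sinks: List[Sink]) -> int:
    """Send one processed record to every sink; return how many accepted it."""
    delivered = 0
    for sink in sinks:
        try:
            sink(record)
            delivered += 1
        except Exception as exc:  # a failing target should not block the others
            print(f"delivery failed: {exc}")
    return delivered


database_rows: List[Dict] = []   # stand-in for a database table
dashboard_feed: List[Dict] = []  # stand-in for a real-time analytics platform


def broken_sink(record: Dict) -> None:
    """Hypothetical target that is currently unavailable."""
    raise ConnectionError("data lake unreachable")


count = deliver(
    {"sensor": "s1", "avg_temp_c": 21.0},
    [database_rows.append, dashboard_feed.append, broken_sink],
)
```

Isolating each sink in its own `try` block is a design choice for the sketch: one unreachable target delays only itself, while the others still receive the record immediately.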

Use Cases

Data pipelines are essential for scenarios requiring real-time or continuous data processing.

Common use cases include:

- Real-time analytics: Data pipelines enable real-time data analysis for immediate insights and decision making.

- Data synchronization: Data pipelines keep data consistent across different systems in real time.

- Event-driven applications: Data pipelines facilitate the processing of events in real time, such as user interactions or system logs.

- Stream processing: Data pipelines handle continuous data streams from sources like IoT devices, social media feeds, or transaction logs.

Data pipelines are often used with architectural patterns such as CDC (Change Data Capture) (1), the Outbox pattern (2), or CQRS (Command Query Responsibility Segregation) (3).
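As an illustration of one of these patterns, the sketch below shows the core idea of the Outbox pattern using Python's built-in `sqlite3` module: the business row and the event describing it are written in the same transaction, and a separate relay step publishes pending events. The table names, the event shape, and the `publish` callback are assumptions for the example, not part of any specific framework.

```python
import json
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, total REAL)")
conn.execute(
    "CREATE TABLE outbox (id INTEGER PRIMARY KEY AUTOINCREMENT, "
    "payload TEXT, published INTEGER DEFAULT 0)"
)


def place_order(order_id: int, total: float) -> None:
    """Write the business row and its event in one transaction."""
    with conn:  # commits on success, rolls back if either insert fails
        conn.execute("INSERT INTO orders (id, total) VALUES (?, ?)", (order_id, total))
        event = json.dumps({"type": "order_placed", "id": order_id, "total": total})
        conn.execute("INSERT INTO outbox (payload) VALUES (?)", (event,))


def relay_once(publish) -> int:
    """Send unpublished events downstream, then mark each one as published."""
    rows = conn.execute(
        "SELECT id, payload FROM outbox WHERE published = 0 ORDER BY id"
    ).fetchall()
    for row_id, payload in rows:
        publish(json.loads(payload))
        with conn:
            conn.execute("UPDATE outbox SET published = 1 WHERE id = ?", (row_id,))
    return len(rows)


delivered = []  # stand-in for a message broker
place_order(1, 19.99)
place_order(2, 5.00)
sent = relay_once(delivered.append)
```

Because an event is marked as published only after `publish` returns, a crash between the two steps would resend that event on the next run, so consumers of such a relay generally need to tolerate duplicates.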

Pros and Cons

Data pipelines offer several benefits that make them suitable for various real-time data processing scenarios, but they also come with their own set of challenges.

Pros

The most prominent data pipeline advantages include:

- Real-time processing: Provides immediate data availability and insights.

- Scalability: Easily scales to handle large volumes of streaming data.

- Flexibility: Adapts to various data sources and formats in real time.

- Low latency: Minimizes delays in data processing and availability.

Cons

The most common challenges related to data pipelines include:

- Complex setup: Requires intricate setup and maintenance.

- Resource intensive: Continuous processing can demand significant computational resources.

- Potential for data inconsistency: Real-time processing can introduce challenges in ensuring data consistency.

- Monitoring needs: Requires robust monitoring and error handling to maintain reliability.

What Is an ETL Pipeline?

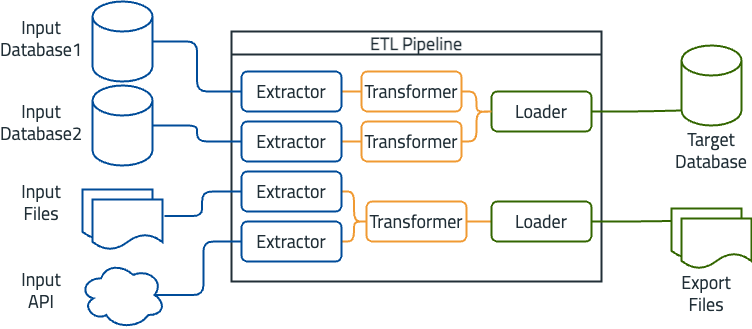

ETL, which stands for “Extract, Transform, and Load”, is a process used to extract data from different sources, transform it into a suitable format, and load it into a target system (4).

Extract

An ETL program can collect data from a variety of sources, including databases, APIs, files, and more. The extraction phase is separated from the other phases to make the transformation and loading phases agnostic to changes in the data sources, so only the extraction phase needs to be adapted.
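The separation described above can be sketched as a set of small extractor functions that all emit the same record shape. The function names, the `Record` alias, and the sample data are hypothetical; the point is only that adding or changing a source touches an extractor and nothing downstream.

```python
import csv
import io
import json
from typing import Callable, Dict, Iterator, List

Record = Dict[str, str]


def extract_csv(text: str) -> Iterator[Record]:
    """Yield one record per CSV row, keyed by the header line."""
    yield from csv.DictReader(io.StringIO(text))


def extract_json(text: str) -> Iterator[Record]:
    """Yield one record per object in a JSON array, with values as strings."""
    for obj in json.loads(text):
        yield {key: str(value) for key, value in obj.items()}


def extract_all(sources: List[Callable[[], Iterator[Record]]]) -> List[Record]:
    """Run every extractor; later phases only ever see plain records."""
    records: List[Record] = []
    for source in sources:
        records.extend(source())
    return records


# Hypothetical inputs standing in for a flat file and an API response.
csv_text = "order_id,amount\n1,10.50\n2,7.25\n"
json_text = '[{"order_id": 3, "amount": "4.00"}]'
records = extract_all([lambda: extract_csv(csv_text), lambda: extract_json(json_text)])
```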

Transform

Once the data extraction phase is complete, the transformation phase begins. In this step, the data is transformed to ensure that it is structured appropriately for its intended use. Because data can come from many different sources and formats, it often needs to be cleaned, enriched, or normalized in order to be useful. For example, data intended for visualization may require a different structure from data collected from web forms (5). The transformation process ensures that the data is suitable for its next stage, whether that is analysis, reporting, or other purposes.
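A minimal sketch of cleaning, normalizing, and enriching records might look like the following. The field names, the rejection rules, and the `is_large_order` threshold are assumptions chosen for illustration; real transformation rules depend entirely on the data and its intended use.

```python
from typing import Dict, Iterable, List, Optional


def transform_record(raw: Dict[str, str]) -> Optional[Dict]:
    """Clean and normalize one raw record; return None if it is unusable."""
    email = raw.get("email", "").strip().lower()
    if "@" not in email:
        return None  # cleaning: reject rows without a plausible email
    try:
        amount = round(float(raw.get("amount", "")), 2)
    except ValueError:
        return None  # cleaning: reject rows with a non-numeric amount
    country = raw.get("country", "").strip().upper() or "UNKNOWN"
    return {
        "email": email,
        "amount": amount,
        "country": country,  # normalization: upper-case country code
        "is_large_order": amount >= 100.0,  # enrichment: derived field
    }


def transform(rows: Iterable[Dict[str, str]]) -> List[Dict]:
    """Apply transform_record to every row and drop rejected rows."""
    cleaned = (transform_record(row) for row in rows)
    return [row for row in cleaned if row is not None]


# Hypothetical web-form submissions with typical quality problems.
raw_rows = [
    {"email": "  Ana@Example.com ", "amount": "120.456", "country": "de"},
    {"email": "not-an-email", "amount": "10", "country": "us"},
    {"email": "bo@example.com", "amount": "abc", "country": "us"},
    {"email": "cy@example.com", "amount": "15", "country": ""},
]
clean_rows = transform(raw_rows)
```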

Load

The final phase of the ETL process is loading the transformed data into the target system, such as a database or data warehouse. During this phase, the data is written to the target in a form optimized for query performance and retrieval. This ensures that the data is accessible and ready for applications such as business intelligence, analytics, and reporting. The efficiency of the loading process is important because it affects the availability of data to end users. Techniques such as indexing and partitioning can be used to improve performance and manageability in the target system.
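The load phase, including an index on a commonly filtered column, can be sketched with `sqlite3` as a stand-in for a real warehouse. The `sales` table, its columns, and the index name are hypothetical, and partitioning is not shown because SQLite has no native support for it.

```python
import sqlite3
from typing import Iterable, Tuple


def load_sales(conn: sqlite3.Connection, rows: Iterable[Tuple[str, str, float]]) -> int:
    """Bulk-insert (sale_date, region, amount) rows and index the filter column."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS sales (sale_date TEXT, region TEXT, amount REAL)"
    )
    with conn:  # one transaction for the whole batch
        conn.executemany("INSERT INTO sales VALUES (?, ?, ?)", rows)
    # Index the column that reports are assumed to filter on most often.
    conn.execute("CREATE INDEX IF NOT EXISTS idx_sales_region ON sales (region)")
    return conn.execute("SELECT COUNT(*) FROM sales").fetchone()[0]


conn = sqlite3.connect(":memory:")
loaded = load_sales(
    conn,
    [("2024-01-01", "EU", 10.0), ("2024-01-01", "US", 20.0), ("2024-01-02", "EU", 5.0)],
)
eu_total = conn.execute(
    "SELECT SUM(amount) FROM sales WHERE region = ?", ("EU",)
).fetchone()[0]
```

Wrapping the whole batch in a single transaction is a common way to keep bulk loads fast and to avoid leaving a half-loaded table behind if the job fails partway through.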

Use Cases

ETL processes are essential in various scenarios where data needs to be consolidated and transformed for meaningful analysis.

Common use cases include:

- Data warehousing: ETL aggregates data from multiple sources into a central repository, enabling comprehensive reporting and analysis.

- Business intelligence: ETL processes extract and transform transactional data to provide actionable insights and support informed decision making.

- Data migration projects: ETL facilitates the seamless transition of data from legacy systems to modern platforms, ensuring consistency and maintaining data quality.

- Reporting and compliance: ETL processes transform and load data into secure, auditable storage systems, simplifying the generation of accurate reports and maintaining data integrity for compliance and auditing purposes.

Pros and Cons

Evaluating the strengths and limitations of ETL pipelines helps in determining their effectiveness for various data integration and transformation tasks.

Pros

The most prominent ETL pipeline advantages include:

- Efficient data integration: Streamlines data from diverse sources.

- Robust transformations: Handles complex data cleaning and structuring.

- Batch processing: Ideal for large data volumes during off-peak hours.

- Improved data quality: Enhances data usability through thorough transformations.

Cons

The most common challenges related to ETL pipelines include:

- High latency: Delays in data availability due to batch processing.

- Resource intensive: Requires significant computational resources and storage.

- Complex development: Difficult to maintain with diverse, changing data sources.

- No real-time processing: Limited suitability for immediate data insights.

Data Pipeline vs. ETL Pipeline: Key Differences

Understanding the key differences between data pipelines and ETL pipelines is essential for choosing the right solution for your data processing needs. Here are the main distinctions:

Processing Mode

Data pipelines operate in real time or near real time, continuously processing data as it arrives, which is ideal for applications that require immediate data insights. In contrast, ETL pipelines process data in batches at scheduled intervals, resulting in delays between data extraction and availability.

Flexibility

Data pipelines are highly flexible, handling multiple data formats and sources while adapting to changing data streams in real time. ETL pipelines, on the other hand, are less flexible: they are designed for specific data sources and formats and require significant adjustments when those change.

Complexity

Data pipelines are complex to set up and maintain because of the need for real-time processing and continuous monitoring. ETL pipelines are also complex, especially during data transformation, but their batch nature makes them somewhat easier to manage.

Scalability

Data pipelines scale easily to handle large volumes of streaming data and adapt to changing loads in real time. ETL pipelines can scale for large batch tasks, but they often require significant resources and infrastructure, making them more resource intensive.

Common Examples of ETL Pipelines and Data Pipelines

To better understand the practical applications of ETL pipelines and data pipelines, let’s explore some common examples that highlight their use in real-world scenarios.

Example of ETL Pipeline

An example of an ETL pipeline is a data warehouse for sales data. In this scenario, the input sources include multiple databases that store sales transactions, CRM systems, and flat files containing historical sales data. The ETL process involves extracting data from all sources, transforming it to ensure consistency and accuracy, and loading it into a centralized data warehouse. The target system, in this case, is a data warehouse optimized for business intelligence and reporting.

Figure 1: Building a data warehouse around sales data
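The sales scenario can be reduced to a small end-to-end sketch. Everything named here is hypothetical: an in-memory SQLite database stands in for the transactional source, a CSV string stands in for the historical flat file, a second SQLite database stands in for the warehouse, and the CRM source is left out for brevity.

```python
import csv
import io
import sqlite3

# Hypothetical sources: a transactional database and a flat file of past sales.
source_db = sqlite3.connect(":memory:")
source_db.execute("CREATE TABLE transactions (customer TEXT, amount_cents INTEGER)")
source_db.executemany(
    "INSERT INTO transactions VALUES (?, ?)", [("acme", 1250), ("globex", 990)]
)
historical_csv = "customer,amount\nACME ,30.00\ninitech,12.50\n"


def extract():
    """Pull raw rows from every source, tagged with their origin."""
    query = "SELECT customer, amount_cents FROM transactions"
    for customer, cents in source_db.execute(query):
        yield {"origin": "db", "customer": customer, "amount": cents}
    for row in csv.DictReader(io.StringIO(historical_csv)):
        yield {"origin": "csv", "customer": row["customer"], "amount": row["amount"]}


def transform(rows):
    """Normalize customer names and convert every amount to a float."""
    for row in rows:
        if row["origin"] == "db":
            amount = row["amount"] / 100  # the database stores cents
        else:
            amount = float(row["amount"])  # the flat file stores decimal strings
        yield (row["customer"].strip().lower(), amount)


def load(warehouse, rows):
    """Write the unified rows into the warehouse fact table."""
    warehouse.execute("CREATE TABLE fact_sales (customer TEXT, amount REAL)")
    with warehouse:
        warehouse.executemany("INSERT INTO fact_sales VALUES (?, ?)", rows)


warehouse = sqlite3.connect(":memory:")
load(warehouse, transform(extract()))
totals = dict(
    warehouse.execute(
        "SELECT customer, SUM(amount) FROM fact_sales GROUP BY customer ORDER BY customer"
    )
)
```

After the run, `totals` holds one combined figure per customer, which is the kind of consolidated view a reporting tool would query from the warehouse.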

Example of Data Pipeline

A common example of a data pipeline is real-time sensor data processing: sensors collect data that often needs to be aggregated with standard database data. Here, the input sources include sensors that produce continuous data streams and an input database. The data pipeline consists of a listener that collects data from the sensors and the database, processes it in real time, and forwards it to the target database. The target system is a real-time analytics platform that monitors sensor data and triggers alerts.

Figure 2: Real-time sensor data processing
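A rough sketch of such a listener is shown below. A `queue.Queue` stands in for the sensor stream, an in-memory SQLite table stands in for the input database, and plain lists stand in for the target database and the alerting channel. The table layout, field names, and thresholds are all assumptions for the example.

```python
import queue
import sqlite3

# Hypothetical reference data: sensor locations and alert thresholds.
reference_db = sqlite3.connect(":memory:")
reference_db.execute(
    "CREATE TABLE sensors (id TEXT PRIMARY KEY, location TEXT, max_temp REAL)"
)
reference_db.executemany(
    "INSERT INTO sensors VALUES (?, ?, ?)",
    [("s1", "boiler room", 80.0), ("s2", "office", 30.0)],
)


def run_listener(incoming: queue.Queue, target: list, alerts: list) -> None:
    """Consume readings until a None sentinel arrives, enriching each one."""
    while True:
        reading = incoming.get()
        if reading is None:
            break
        row = reference_db.execute(
            "SELECT location, max_temp FROM sensors WHERE id = ?", (reading["sensor"],)
        ).fetchone()
        if row is None:
            continue  # unknown sensor: skip rather than guess its metadata
        location, max_temp = row
        target.append({**reading, "location": location})  # stand-in for a DB write
        if reading["temp_c"] > max_temp:
            alerts.append(f"{location}: {reading['temp_c']} C exceeds {max_temp} C")


incoming = queue.Queue()
for item in [
    {"sensor": "s1", "temp_c": 75.0},
    {"sensor": "s2", "temp_c": 35.5},
    {"sensor": "s9", "temp_c": 10.0},
    None,  # sentinel that ends the sketch; a real listener would keep running
]:
    incoming.put(item)

target_rows, alerts = [], []
run_listener(incoming, target_rows, alerts)
```

Each reading is enriched and checked against its threshold the moment it is taken off the queue, so an alert can fire without waiting for a scheduled batch.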

How to Determine Which Is Best for Your Organization

Whether an ETL pipeline or a data pipeline is best for your organization depends on several factors. The characteristics of the data are crucial to this decision. Data pipelines are ideal for real-time, continuous data streams that require immediate processing and insight. ETL pipelines, on the other hand, are suitable for structured data that can be processed in batches where latency is acceptable.

Business requirements also play an important role. Data pipelines are ideal for use cases that require real-time data analysis, such as monitoring, fraud detection, or dynamic reporting. In contrast, ETL pipelines are best suited to scenarios that require extensive data consolidation and historical analysis, like data warehousing and business intelligence.

Scalability requirements must also be considered. Data pipelines offer high scalability for real-time data processing and can efficiently handle fluctuating data volumes. ETL pipelines are scalable for large batch processing tasks but may ultimately require more infrastructure and resources.

Bottom Line: Data Pipeline vs. ETL Pipeline

The choice between a data pipeline and an ETL pipeline depends on your specific data needs and business objectives. Data pipelines excel in scenarios that require real-time data processing and immediate insights, making them ideal for dynamic, fast-paced environments. ETL pipelines, by contrast, are designed for batch processing, making them ideal for structured data integration, historical analysis, and comprehensive reporting. Understanding these differences will help you choose the right approach to optimize your data strategy and meet your business goals.

To learn more about ETL and data pipelines, check out these additional courses: