As tech companies ramp up construction of large data centers to meet the business boom in artificial intelligence, one resource is becoming an increasingly scarce commodity: electricity.

Commercial demand for electricity has been rising sharply in recent years and is projected to increase by 3% in 2024 alone, according to the U.S. Energy Information Administration. But that growth has been driven by just a few states, the ones rapidly becoming hubs for large-scale computing facilities, such as Virginia and Texas.

The inventory of North American data centers grew 24.4% year over year in the first quarter of 2024, as the real-estate services firm CBRE reports in its “Global Data Center Trends 2024” study. These new centers are being built with capacities of 100 to 1,000 megawatts, roughly the loads needed to power 80,000 to 800,000 homes, notes the Electric Power Research Institute in a 2024 white paper.

In this paper, EPRI analyzes AI and data-center energy consumption and projects that, under a high-growth scenario of 10% per year, data centers could consume up to 6.8% of total U.S. electricity generation annually by 2030, versus an estimated 4% today.
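The homes comparison from EPRI's white paper implies an average household load of about 1.25 kW (100 MW divided among 80,000 homes). The Python sketch below reuses that implied figure, which is derived from the article's own numbers rather than from EPRI's methodology, to convert between capacity and homes; the function names are illustrative.

```python
MW_TO_KW = 1_000

def implied_kw_per_home(capacity_mw: float, homes: int) -> float:
    """Average continuous load per home implied by a capacity-to-homes pairing."""
    return capacity_mw * MW_TO_KW / homes

def homes_supplied(capacity_mw: float, kw_per_home: float) -> int:
    """Homes a given capacity could supply at a constant average load per home."""
    return round(capacity_mw * MW_TO_KW / kw_per_home)

# The article pairs 100 MW with about 80,000 homes.
kw_per_home = implied_kw_per_home(100, 80_000)
print(kw_per_home)                          # 1.25
print(homes_supplied(1_000, kw_per_home))   # 800000, matching the upper figure
```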

To meet that soaring demand, Goldman Sachs Research estimates that U.S. utilities will need to invest around $50 billion in new electric generation capacity. Meanwhile, community opposition to data center construction is also growing in some areas, as grassroots groups protest the potential local impacts of more and more data centers and their growing demands for electricity for AI and water for cooling.

Whether the nation’s private enterprises can pull off the daunting challenge of powering an AI “revolution” may depend less on money and more on ingenuity. That CBRE study concludes with a helpful, or perhaps hopeful, suggestion: “High-performance computing [or HPC] will require rapid innovation in data center design and technology to manage rising power density needs.”

At the Oak Ridge Leadership Computing Facility, or OLCF, a Department of Energy Office of Science user facility at Oak Ridge National Laboratory, or ORNL, investigating new approaches to energy-efficient supercomputing has always been part of the mission.

Since its formation in 2004, the OLCF has fielded five generations of world-class supercomputing systems that together have produced a nearly 2,000-fold improvement in energy efficiency, measured in floating point operations per second, or flops, per watt. Frontier, the OLCF’s latest supercomputer, currently ranks first on the TOP500 list of the world’s most powerful computers, and in 2022 it debuted at the top of the Green500 list of the world’s most energy-efficient computers.

Keeping the electricity bill affordable goes hand in hand with being a government-funded facility. But building and maintaining leadership supercomputers is no longer just the domain of government. Major tech companies have entered HPC in a big way but are only now starting to worry about how much power these mega systems consume.

“Our machines were always the biggest ones on the planet, but that is no longer true. Private companies are now deploying machines that are several times larger than Frontier. Today, they essentially have unlimited deep pockets, so it’s easy for them to stand up a data center without concern for efficiency,” said Scott Atchley, chief technology officer of the National Center for Computational Sciences, or NCCS, at ORNL. “That will change once they become more power constrained, and they will want to get the most bang for their buck.”

With decades of experience in making HPC more energy efficient, the OLCF may serve as a resource for best “bang for the buck” practices in a suddenly burgeoning industry.

“We are uniquely positioned to influence the full energy-efficiency ecosystem of HPC, from the applications to the hardware to the facilities. And you need efficiency gains in all three of those areas to attack the problem,” said Ashley Barker, OLCF program director.

“Striving for improvements in energy efficiency comes into play in every aspect of our facility. What is the most energy-efficient hardware we can buy? What is the most energy-efficient way we can run that hardware? And what are the most energy-efficient ways that we can tweak the applications that run on the hardware?”

As the OLCF plans its successor to Frontier, called Discovery, these questions come up daily as different teams work together to deliver a new supercomputer by 2028 that will also demonstrate next-generation energy efficiencies in HPC.

System hardware

One of the most significant computational efficiency advances of the past 30 years originated from an unlikely source: video games.

More specifically, the innovation came from chip makers competing to meet the video game industry’s need for increasingly sophisticated in-game graphics. To achieve the realistic visuals that drew in gamers, personal computers and game consoles required dedicated chips, known as graphics processing units, or GPUs, to render detailed moving images.

Today, GPUs are an indispensable part of most supercomputers, especially those used for training artificial intelligence models. In 2012, when the OLCF pioneered the use of GPUs in leadership-scale HPC with its Titan supercomputer, the design was considered a bold departure from traditional systems that rely solely on central processing units, or CPUs.

It required computational scientists to adapt their codes to fully exploit the GPU’s ability to churn through simple calculations and shorten the time to solution. The less time a computer takes to solve a particular problem, the more problems it can solve in a given timeframe.

“A GPU is, by design, more energy efficient than a CPU. Why is it more efficient? If you’re going to run electricity into a computer and you want it to do calculations very efficiently, then you want almost all the electricity powering floating point operations. You want as much silicon area as possible to be just floating point units, not all the other stuff that’s on every CPU chip.

“A GPU is almost pure floating point units. When you run electricity into a machine with GPUs, it takes roughly about a tenth the amount of energy as a machine that just has CPUs,” said ORNL’s Al Geist, director of the Frontier project.

The OLCF’s gamble on GPUs in 2012 paid off over the next decade with progressively more energy-efficient systems, as each generation of OLCF supercomputer added more, and faster, GPUs. This evolution culminated in the architecture of Frontier, launched in 2022 as the world’s first exascale supercomputer, capable of more than 1 quintillion calculations per second and consisting of 9,408 compute nodes.

However, when exascale discussions began in 2008, the Exascale Research Group issued a report outlining the four biggest challenges, foremost of which was power consumption. It foresaw an electric bill of potentially $500 million a year. Even accounting for the technological advances projected for 2015, the report predicted that a stripped-down 1-exaflop system would use 150 megawatts of electricity.

“DOE said, ‘That’s a non-starter.’ Well, we asked, what would be acceptable? And the answer that came back was, ‘We don’t want you to spend more money on electricity than the cost of the machine,’” Geist said. “In the 2009 timeframe, supercomputers cost about $100 million. They have a lifetime of about five years.

“What you end up with is about $20 million per year that we could spend on electricity. How many megawatts can I get out of $20 million? It turns out that 1 megawatt here in East Tennessee is $1 million a year, roughly. So that was the number we set as our target: a 20-megawatt per exaflop system.”
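Geist’s reasoning reduces to two divisions. A minimal Python sketch using only the figures quoted above (the constant and function names are illustrative):

```python
# Back-of-the-envelope derivation of the 20 MW exascale power target,
# using the figures Geist cites.
MACHINE_COST_USD = 100_000_000     # ~2009 cost of a leadership supercomputer
LIFETIME_YEARS = 5                 # typical service life
COST_PER_MW_YEAR_USD = 1_000_000   # ~1 MW for one year in East Tennessee

def power_budget_mw(machine_cost: float, lifetime_years: float,
                    cost_per_mw_year: float) -> float:
    """Power draw whose lifetime electricity bill equals the machine's cost."""
    annual_budget = machine_cost / lifetime_years  # dollars per year for electricity
    return annual_budget / cost_per_mw_year        # megawatts that budget sustains

print(power_budget_mw(MACHINE_COST_USD, LIFETIME_YEARS, COST_PER_MW_YEAR_USD))  # 20.0
```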

There was no clear path to achieving that energy consumption goal. So, in 2012, the DOE Office of Science launched the FastForward and DesignForward programs to work with vendors to advance new technologies.

FastForward initially focused on processor, memory and storage vendors to address performance, power-consumption and resiliency issues. It later shifted its focus to node design (i.e., the individual compute server). DesignForward initially focused on scaling networks to the expected system sizes and later focused on whole-system packaging, integration and engineering.

As a result of the FastForward funding, semiconductor chip vendor AMD developed a faster, more powerful compute node for Frontier (a 64-core third-generation EPYC CPU paired with four Instinct MI250X GPUs) and found a way to make the GPUs more efficient by turning off sections of the chips that aren’t being used and then turning them back on within just a few milliseconds when needed.

“In the old days, the entire system would light up and sit there idle, still burning electricity. Now we can turn off everything that’s not being used, and not just a whole GPU. On Frontier, about 50 different areas on each GPU can be turned off individually if they’re not being used. Now, not only is the silicon area mostly devoted to floating point operations, but in fact I’m not going to waste any energy on anything I’m not using,” Geist said.
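Power gating of this kind can be illustrated with a toy model. In the Python sketch below, only the figure of about 50 switchable areas per GPU comes from the article; the per-region wattages, the 20-of-50 busy pattern and the names `Region` and `gpu_power_w` are hypothetical, chosen just to show the mechanism, and the sketch ignores the wake-up latency and residual leakage of real gated blocks.

```python
from dataclasses import dataclass

@dataclass
class Region:
    active_w: float  # hypothetical power while the block is doing work
    idle_w: float    # hypothetical power while powered on but unused

def gpu_power_w(regions: list[Region], in_use: set[int], gating: bool) -> float:
    """Instantaneous power of one GPU, given which regions are busy.

    With gating on, unused regions are switched off and modeled as drawing
    nothing. Real gated blocks still leak a little and take a few
    milliseconds to wake, which this toy model ignores.
    """
    total = 0.0
    for i, region in enumerate(regions):
        if i in in_use:
            total += region.active_w
        elif not gating:
            total += region.idle_w
    return total

# Hypothetical GPU with 50 independently switchable regions, of which
# the current workload keeps only 20 busy.
regions = [Region(active_w=10.0, idle_w=3.0) for _ in range(50)]
busy = set(range(20))

print(gpu_power_w(regions, busy, gating=False))  # 290.0 (20*10 W + 30*3 W)
print(gpu_power_w(regions, busy, gating=True))   # 200.0 (idle regions off)
```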

However, with the next generation of supercomputers, simply continuing to add more GPUs to achieve more calculations per watt may have reached the point of diminishing returns, even with newer and more advanced architectures.

“The processor vendors will really have to reach into their bag of tricks to come up with techniques that will give them just small, incremental improvements. And that’s not only true for energy efficiency, but it’s also true for performance. They’re getting about as much performance out of the silicon as they can,” Atchley said.

“We’ve been benefiting from Moore’s Law: transistors got smaller, they got cheaper and they got faster. Our applications ran faster, and the price point was the same or less. That world is over. There are some possible technologies out there that might give us some jumps, but the biggest thing that will help us is a more integrated, holistic approach to energy efficiency.”

System operations

Feiyi Wang, leader of the OLCF’s Analytics and AI Methods at Scale, or AAIMS, group, has spent much of his time pondering an elusive goal: how to operate a supercomputer so that it uses less energy. Tackling this problem first required assembling a large amount of HPC operational data.

Long before Frontier was built, he and the AAIMS group collected more than a year’s worth of power profiling data from Summit, the OLCF’s 200-petaflop supercomputer launched in 2018. Summit’s 4,608 nodes each have more than 100 sensors that report metrics at 1 hertz, meaning that every second the system reports more than 460,000 metrics.
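The metric rate follows directly from the node and sensor counts. A quick Python check, treating “more than 100 sensors” as exactly 100, so both totals are lower bounds:

```python
NODES = 4_608
SENSORS_PER_NODE = 100  # "more than 100" per node, so these totals are lower bounds
SAMPLE_RATE_HZ = 1
SECONDS_PER_DAY = 86_400

metrics_per_second = NODES * SENSORS_PER_NODE * SAMPLE_RATE_HZ
metrics_per_day = metrics_per_second * SECONDS_PER_DAY

print(f"{metrics_per_second:,}")  # 460,800
print(f"{metrics_per_day:,}")     # 39,813,120,000
```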

Using this 10-terabyte dataset, Wang’s group analyzed Summit’s entire system from end to end, including its central energy plant, which contains all its cooling machinery. They overlaid the system’s job allocation history on the telemetry data to construct fine-grained, per-job power-consumption profiles for more than 840,000 jobs. This work earned them the Best Paper Award at the 2021 International Conference for High Performance Computing, Networking, Storage, and Analysis, or SC21.
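Overlaying job allocations on node telemetry is essentially a time-interval join. The Python sketch below shows the idea on toy data; the tuple layouts, the half-open allocation windows, the exclusive-node assumption and the function names are all assumptions for illustration, not the AAIMS group’s actual pipeline.

```python
from collections import defaultdict

SAMPLE_PERIOD_S = 1.0  # telemetry is sampled at 1 Hz

def per_job_profiles(telemetry, allocations):
    """Attribute node power samples to the jobs running on those nodes.

    telemetry:   iterable of (t, node, watts) samples, t in whole seconds
    allocations: iterable of (job_id, node, start, end), half-open [start, end)
    Assumes nodes are allocated exclusively, so each sample belongs to at most one job.
    Returns {job_id: {t: total_watts}}, a per-second power profile for each job.
    """
    # Index allocations by node so each sample is checked only against that node's jobs.
    by_node = defaultdict(list)
    for job_id, node, start, end in allocations:
        by_node[node].append((job_id, start, end))

    profiles = defaultdict(lambda: defaultdict(float))
    for t, node, watts in telemetry:
        for job_id, start, end in by_node.get(node, ()):
            if start <= t < end:
                profiles[job_id][t] += watts
    return profiles

def energy_joules(profile):
    """Integrate a 1 Hz power profile (watts) into energy (joules)."""
    return sum(profile.values()) * SAMPLE_PERIOD_S

# Toy telemetry for two nodes over three seconds.
telemetry = [
    (0, "n1", 300.0), (1, "n1", 320.0), (2, "n1", 310.0),
    (0, "n2", 250.0), (1, "n2", 400.0), (2, "n2", 410.0),
]
allocations = [
    ("jobA", "n1", 0, 3),  # jobA holds n1 for t = 0, 1, 2
    ("jobB", "n2", 1, 3),  # jobB starts on n2 at t = 1; the t = 0 sample is unattributed
]
profiles = per_job_profiles(telemetry, allocations)
print(energy_joules(profiles["jobA"]))  # 930.0
print(energy_joules(profiles["jobB"]))  # 810.0
```

At Summit’s scale the same join would more plausibly run in a columnar or distributed data engine than in a Python loop; the per-node index simply keeps each sample from being compared against every job.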

The effort also led Wang to a few ideas about how such data can be used to make informed operational decisions for better energy efficiency.

Using the energy-profile datasets from Summit, Wang and his team kicked off the Smart Facility for Science project to provide ongoing production insight into HPC systems and give system operators “data-driven operational intelligence,” as Wang puts it.

“I want to take this continuous monitoring one step further to ‘continuous integration,’ meaning that we want to take the computer’s ongoing metrics and integrate them into a system so that the user can observe how their energy usage is going to be for their particular job application. Taking this further, we also want to implement ‘continuous optimization,’ going from simply monitoring and integration to really optimizing the work on the fly,” Wang said.

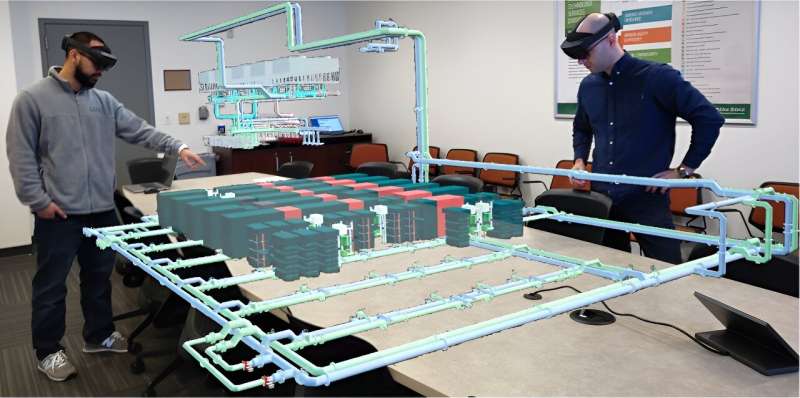

Another of Wang’s ideas may help with that goal. At SC23, Wang and lead author Wes Brewer, a senior research scientist in the AAIMS group, delivered a presentation, “Toward the Development of a Comprehensive Digital Twin of an Exascale Supercomputer.” They proposed a framework called ExaDIGIT that uses augmented reality, or AR, and virtual reality, or VR, to provide holistic insights into how a facility operates in order to improve its overall energy efficiency.

ExaDIGIT has since evolved into a collaborative project of 10 international and industry partners, and Brewer will present the group’s latest paper at SC24 in Atlanta, Georgia.

At ORNL, the AAIMS group launched the Digital Twin for Frontier project to construct a simulation of the Frontier supercomputer. This virtual Frontier will let operators experiment with “What if we tried this?” energy-saving scenarios before attempting them on the real machine. What if you raised the incoming water temperature of Frontier’s cooling system: would that increase its efficiency? Or would it put the system at risk of insufficient cooling, thereby driving up its failure rate?

“Frontier is a system so valuable that you can’t just say, ‘Let’s try it out. Let’s experiment on the system,’ because the consequences may be destructive if you get it wrong,” Wang said. “But with this digital twin idea, we can take all that telemetry data into a system where, if we have enough fidelity modeled for the power and cooling aspects of the system, we can experiment. What if I change this setting: does it have a positive effect on the system or not?”

Frontier’s digital twin can run on a desktop computer, and VR and AR let operators examine the system telemetry in a more interactive and intuitive way as they adjust parameters. The AAIMS group also created a virtual scheduling system to examine the digital twin’s power consumption and how it evolves over time as it runs jobs.

Although the virtual Frontier is still being developed, it is already yielding insights into how workloads can affect its cooling system and what happens with the power losses that occur during rectification, the process of converting alternating current to direct current. The system is also being used to predict the future power and cooling needs of Discovery.

“We can and will tailor our development as well as the system to address any current and future pressing challenges faced by the OLCF,” Wang said.

Facility infrastructure

Powering a supercomputer doesn’t just mean turning it on; it also means powering the entire facility that supports it. Most critical is the cooling system, which must remove the heat generated by all the computer’s cabinets in its data center.

“From a 10,000-foot viewpoint, a supercomputer is really just a giant heater: I take electricity from the grid, I run it into this big box, and it gets hot because it’s using electricity. Now I have to run more electricity into an air conditioner to cool it back off again so that I can keep it running and it doesn’t melt,” Geist said.

“Inside the data center there is a lot of work that goes into cooling these big machines more efficiently. From 2009 to 2022, we have reduced the energy needed for cooling by 10 times, and our team will continue to make cooling optimizations going forward.”

Much of the planning for these cooling optimizations is led by David Grant, the lead HPC mechanical engineer in ORNL’s Laboratory Modernization Division. Grant oversees the design and construction of new mechanical facilities and is primarily responsible for ensuring that every new supercomputer installed at the OLCF has the cooling it requires to operate reliably 24/7.

He started at ORNL in 2009 and worked on operations for the Jaguar supercomputer. He then became involved in its transition into Titan in 2012, led Summit’s infrastructure design for its launch in 2018, and most recently oversaw all the engineering to support Frontier.

Over that span, the OLCF’s cooling systems have evolved significantly alongside the chip technology, going from loud fans and chiller-based air conditioning in Jaguar to fan-free liquid cooling in Frontier.

Furthermore, the water temperatures required to cool the compute nodes have risen from 42°F for Titan to 90°F for Frontier, a target set by the FastForward program. That extra warmth yields large energy savings because the circulating water no longer needs to be refrigerated and can instead be sufficiently cooled by evaporative towers.

“We are trying to get the warmest water possible back from the cabinets while serving them the warmest water-supply temperatures: the higher the supply temperatures, the better,” Grant said.

“Warmer water coming back to us allows us to minimize the flow that we have to circulate on the facility side of the system, which saves pumping energy. And then the warmer temperatures allow us to be more efficient with our cooling towers to be able to reject that heat to our environment.”
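Grant’s point about flow follows from the steady-state heat balance Q = m_dot × c_p × ΔT: for a fixed heat load, doubling the water’s temperature rise halves the mass flow needed to carry the heat away. The Python sketch below assumes a hypothetical 20 MW heat load and the idealized pump affinity law (pump power roughly proportional to the cube of flow); real systems with static head and minimum-flow limits save less than the cube law suggests.

```python
CP_WATER = 4186.0  # J/(kg*K), approximate specific heat of liquid water

def mass_flow_kg_s(heat_w: float, delta_t_k: float) -> float:
    """Water mass flow needed to carry heat_w watts with a delta_t_k rise (Q = m_dot * c_p * dT)."""
    return heat_w / (CP_WATER * delta_t_k)

def relative_pump_power(flow: float, reference_flow: float) -> float:
    """Idealized pump affinity law: pump power scales with the cube of flow."""
    return (flow / reference_flow) ** 3

heat_w = 20e6                              # hypothetical 20 MW heat load
narrow = mass_flow_kg_s(heat_w, 10.0)      # water warms by 10 K across the cabinets
wide = mass_flow_kg_s(heat_w, 20.0)        # warmer return water: 20 K rise
print(round(narrow), round(wide))          # 478 239 (kg/s): flow halves
print(relative_pump_power(wide, narrow))   # 0.125 under the cube law
```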

Frontier’s power usage effectiveness, or PUE (the ratio of the total power used by a data-center facility to the power delivered to its computing equipment), is 1.03 at peak usage. This essentially means that for every 1,000 watts of heat, it takes just 30 watts of additional electricity to keep the system within its appropriate thermal envelope.

The global, industry-wide average PUE for data centers is around 1.47, according to the Uptime Institute.
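PUE is a simple ratio, so the “30 watts per 1,000 watts” reading follows from subtracting the IT load. A minimal Python sketch of both calculations (the function names are illustrative):

```python
def pue(total_facility_kw: float, it_equipment_kw: float) -> float:
    """Power usage effectiveness: total facility power divided by IT equipment power."""
    return total_facility_kw / it_equipment_kw

def overhead_w_per_kw(pue_value: float) -> float:
    """Non-IT power (cooling, power conversion, etc.) per 1,000 W delivered to IT equipment."""
    return (pue_value - 1.0) * 1_000

print(pue(1_030.0, 1_000.0))           # 1.03
print(round(overhead_w_per_kw(1.03)))  # 30  (Frontier at peak)
print(round(overhead_w_per_kw(1.47)))  # 470 (industry average cited above)
```

For a hypothetical 20 MW IT load, that difference amounts to roughly 0.6 MW of overhead at a PUE of 1.03 versus about 9.4 MW at 1.47.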

Reducing power usage further for a faster system such as Discovery will require even more innovative approaches, which Grant is investigating.

First, recovering (that is, reusing) some of Discovery’s excess heat may hold promise. The facility is well positioned to reuse waste heat if it can be moved from the cooling system to the heating system. But this is difficult because of the elevated temperatures of the heating system, the low-grade heat from the cooling system and the highly dynamic nature of the heat generated by the HPC systems.

Second, the incoming Discovery system will share Frontier’s cooling system. Additional operational efficiencies are expected from this combined-use configuration.

“Right now, Frontier gets to sit on its own cooling system, and we’ve optimized it for that type of operation. But if you have Frontier demanding up to 30 megawatts and then another system demanding perhaps that much again, what does that do to our cooling system?

“It is designed to be able to do that, but we’re going to be operating at a different place in its operational envelope that we haven’t seen before. So, there’ll be new opportunities that present themselves once we get there,” Grant said.

Third, Grant is examining how construction and equipment choices might improve the facility’s overall energy efficiency. For example, Frontier’s cooling system has 20 individual cooling towers that require a process called passivation to help protect their internal metal surfaces, and this process involves a lot of pumping over time. That step could be eliminated with newer towers that no longer require passivation.

Fourth, idle time on a supercomputer can consume a lot of electricity: Frontier’s idle loads are 7 to 8 megawatts. What if that idle load could be greatly reduced or eliminated?

“When we interact with the customers who have influence on the software side, we try to communicate to them how their decisions will translate through the cooling system and to the facility energy use,” Grant said.

“I think there’s a lot of potential on the software side to try to reduce the idle load requirement and make their models run as efficiently as possible and increase the utilization of the system. In return, they will get higher production on their side for the data that they’re trying to produce.”
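To put the 7 to 8 MW idle load in context, the Python sketch below reuses Geist’s rule of thumb of about $1 million per megawatt-year in East Tennessee. The 7.5 MW midpoint and the fractions of the year spent idle are hypothetical inputs, not reported figures.

```python
HOURS_PER_YEAR = 8_760
COST_PER_MW_YEAR_USD = 1_000_000  # Geist's rule of thumb for East Tennessee

def idle_cost(idle_mw: float, idle_fraction: float) -> tuple[float, float]:
    """Annual energy (MWh) and cost (USD) of an idle load present for idle_fraction of the year."""
    energy_mwh = idle_mw * HOURS_PER_YEAR * idle_fraction
    cost_usd = idle_mw * COST_PER_MW_YEAR_USD * idle_fraction
    return energy_mwh, cost_usd

# Hypothetical inputs: the midpoint of Frontier's 7 to 8 MW idle load, and
# illustrative shares of the year the machine might spend idle.
for idle_fraction in (0.05, 0.10):
    mwh, usd = idle_cost(7.5, idle_fraction)
    print(f"{idle_fraction:.0%} idle: {mwh:,.0f} MWh, about ${usd:,.0f} per year")
```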

Applications

Optimizing science applications to run more efficiently on the OLCF’s supercomputers is the domain of Tom Beck, head of the NCCS’s Science Engagement section, and Trey White, a distinguished research scientist in the NCCS’s Algorithms and Performance Analysis group. Getting codes to return their results faster isn’t exactly a new concept, but the goal is now shifting away from pure speed alone.

“For a long time, people have wanted to make their codes run faster, and that’s what we’ve concentrated on: that singular goal of running faster applications, which also happened to reduce energy use,” White said.

“Hardware is still increasing in speed, just not as fast as it used to, and so now we must look at applications in terms of both time and energy efficiency. For the most part, running faster means less energy, but it’s not a perfect correlation. So, we are now starting to look at trade-offs between the two.”

One area the group is investigating is how the operating frequency of the GPUs affects their energy consumption. The maximum frequency, which gives a GPU its fastest throughput, is not necessarily the most energy-efficient frequency.

“But if you start at the maximum frequency and pull back by 5% to 10%, there are some indications you might get 20% or 25% energy savings. So, then it’s an arbitrage of, are you willing to give up a little bit of your performance to get big energy savings?” Beck said.

“Previously, what maximum clock frequency the computer uses was set for all projects to a single number, in general. But now we’re looking at adapting that per application and maybe even within a single run,” White said. “That ‘frequency knob’ is one example of something where there’s a trade-off between time and energy efficiency, and we’re investigating how to give users that choice.”
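The trade-off Beck and White describe can be explored with a common textbook model: dynamic power scales roughly with frequency times voltage squared, and because voltage tends to drop with frequency, dynamic power scales roughly with the cube of frequency, while only the compute-bound part of the runtime stretches as the clock drops. The Python sketch below is a toy under those assumptions; the wattages, the 70% compute-bound fraction and the names `GpuModel` and `pick_frequency` are hypothetical, and whether real savings reach the 20% to 25% Beck mentions depends on the hardware and the application.

```python
from dataclasses import dataclass

@dataclass
class GpuModel:
    static_w: float       # frequency-independent power (leakage, memory, etc.)
    dynamic_max_w: float  # switching power at the maximum clock
    compute_bound: float  # fraction of runtime that stretches as 1/frequency

    def runtime(self, f_rel: float) -> float:
        """Runtime relative to running at the maximum clock (f_rel = 1.0)."""
        return self.compute_bound / f_rel + (1.0 - self.compute_bound)

    def power(self, f_rel: float) -> float:
        """Dynamic power ~ f * V^2 with V roughly proportional to f, hence ~ f^3."""
        return self.static_w + self.dynamic_max_w * f_rel ** 3

    def energy(self, f_rel: float) -> float:
        """Energy as power times relative runtime."""
        return self.power(f_rel) * self.runtime(f_rel)

def pick_frequency(model: GpuModel, candidates: list[float], max_slowdown: float) -> float:
    """Lowest-energy clock whose runtime stays within max_slowdown (1.10 = at most 10% slower).

    Raises ValueError if no candidate meets the limit.
    """
    allowed = [f for f in candidates if model.runtime(f) <= max_slowdown]
    return min(allowed, key=model.energy)

# Hypothetical GPU and application: 100 W static, 400 W dynamic at full clock,
# with 70% of the runtime compute-bound.
gpu = GpuModel(static_w=100.0, dynamic_max_w=400.0, compute_bound=0.7)
clocks = [1.0, 0.95, 0.9, 0.85, 0.8]

for f in clocks:
    saving = 1 - gpu.energy(f) / gpu.energy(1.0)
    print(f"clock {f:.0%}: {gpu.runtime(f) - 1:+.1%} time, {saving:.1%} energy saved")

print(pick_frequency(gpu, clocks, max_slowdown=1.10))  # 0.9
```

Because the slowdown limit is a parameter, the same search could be rerun per application, or per phase of a single run, which is the kind of per-application “frequency knob” White describes.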

Another area the group is exploring is mixed-precision arithmetic. Historically, 64-bit full-precision floating point arithmetic was considered the standard for computational accuracy in science applications. Increasingly powerful supercomputers since the early 2000s made full precision nearly as fast to use as 32-bit single-precision arithmetic.

Now, with the rise of the AI market, low-precision arithmetic (16 bits or fewer) has proven accurate enough for training neural networks and other data-science applications. On GPUs, low-precision calculations can offer substantial speedups and energy savings.

“Using lower precision is a scary landscape to users because everybody’s used to assuming full precision’s 64 bits and partly just because it’s already there and accessible,” Beck said.

“And if you start deviating from 64 bits, it could impact things in nonlinear ways throughout your code, where it’s really hard to track down what’s going on. So that’s part of our research strategy—to do a broad study of the impacts of going to mixed-precision arithmetic in some applications.”
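The accuracy risk Beck describes shows up even in a simple sum. One common mixed-precision pattern stores inputs as 16-bit floats but accumulates in 32-bit floats. The Python sketch below emulates both formats with the standard `struct` module (format code `e` for half precision, `f` for single precision) rather than running on a GPU, and compares keeping every partial sum in half precision against accumulating in single precision; the function names are illustrative.

```python
import struct

def to_fp16(x: float) -> float:
    """Round a Python float to IEEE half precision (struct format 'e')."""
    return struct.unpack("e", struct.pack("e", x))[0]

def to_fp32(x: float) -> float:
    """Round a Python float to IEEE single precision (struct format 'f')."""
    return struct.unpack("f", struct.pack("f", x))[0]

def sum_fp16(values):
    """Low precision throughout: every partial sum is rounded to half precision."""
    total = 0.0
    for v in values:
        total = to_fp16(total + to_fp16(v))
    return total

def sum_mixed(values):
    """Mixed precision: inputs stored in half precision, running sum kept in single precision."""
    total = 0.0
    for v in values:
        total = to_fp32(total + to_fp16(v))
    return total

data = [0.1] * 10_000   # true sum: 1000
print(sum(data))        # float64 reference
print(sum_mixed(data))  # close to 1000; the error comes only from storing 0.1 in fp16
print(sum_fp16(data))   # stalls far short of 1000
```

The failure is nonlinear in the sense Beck means: the error depends on the magnitude of the running sum rather than growing smoothly, and once each increment is smaller than half the spacing between neighboring fp16 values, the half-precision sum stops growing entirely.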

Another area that may yield energy-efficiency gains is data transfer: the less data movement, the less electricity required. This could be achieved by designing software algorithms that reduce data movement. Beck would like to offer users pie charts showing the percentage of power used by each operation of an algorithm, allowing them to target potential reductions.
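The pie chart Beck has in mind amounts to multiplying operation counts by per-operation energy costs and normalizing. In the Python sketch below, both the counts and the joules-per-operation values are hypothetical placeholders; in practice they would have to be measured on the target hardware.

```python
def energy_breakdown(op_counts: dict[str, float], joules_per_op: dict[str, float]) -> dict[str, float]:
    """Share of total energy spent in each operation class (the data behind a pie chart),
    ordered from largest to smallest slice."""
    energy = {op: count * joules_per_op[op] for op, count in op_counts.items()}
    total = sum(energy.values())
    return {op: e / total for op, e in sorted(energy.items(), key=lambda kv: -kv[1])}

# Hypothetical counts for one run of some algorithm, and hypothetical
# per-operation energy costs; real values depend on the hardware.
op_counts = {"flop": 1e15, "dram_byte": 3e14, "network_byte": 1e12}
joules_per_op = {"flop": 1e-11, "dram_byte": 5e-11, "network_byte": 1e-9}

for op, share in energy_breakdown(op_counts, joules_per_op).items():
    print(f"{op:>12}: {share:5.1%}")
```

An algorithm change that cuts data movement would show up directly as a smaller `dram_byte` or `network_byte` slice.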

“Without a radical hardware change or revolution in the architecture, applications are really the place that people are looking now for increasing energy efficiency,” Beck said. “Most likely, this isn’t a game of getting a 300% improvement through coding.

“There are definitely places where we can make improvements, but it’s probably going to be a more incremental process of 3% here, 5% there. But if you can accumulate that over a bunch of changes and get to 20%, that’s a big accomplishment.”
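Beck’s arithmetic compounds rather than adds, assuming each change cuts the remaining energy independently of the others. A minimal Python sketch under that assumption (the list of savings and the function names are hypothetical):

```python
import math

def combined_saving(savings: list[float]) -> float:
    """Total fractional saving from independent improvements that each cut
    the remaining energy by a fraction (they compound multiplicatively)."""
    return 1 - math.prod(1 - s for s in savings)

def changes_needed(step: float, target: float) -> int:
    """How many improvements of size `step` it takes to reach `target` total saving."""
    if not 0 < step < 1 or not 0 <= target < 1:
        raise ValueError("step must be in (0, 1) and target in [0, 1)")
    n, remaining = 0, 1.0
    while 1 - remaining < target:
        remaining *= 1 - step
        n += 1
    return n

savings = [0.03, 0.05, 0.05, 0.04, 0.03, 0.02]  # hypothetical "3% here, 5% there"
print(f"{combined_saving(savings):.1%}")         # 20.1% (not the 22% a simple sum suggests)
print(changes_needed(0.04, 0.20))                # 6
```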

Oak Ridge National Laboratory

Citation:

Computer engineers pioneer approaches to energy-efficient supercomputing (2024, September 11)

retrieved 12 September 2024

from https://techxplore.com/information/2024-09-approaches-energy-efficient-supercomputing.html

This document is subject to copyright. Apart from any fair dealing for the purpose of private study or research, no part may be reproduced without the written permission. The content is provided for information purposes only.