Apple cancelled its major CSAM proposals but introduced features such as automatic blocking of nudity sent to children

A child safety group says it has found more instances of abuse images on Apple platforms in the UK than Apple has reported globally.

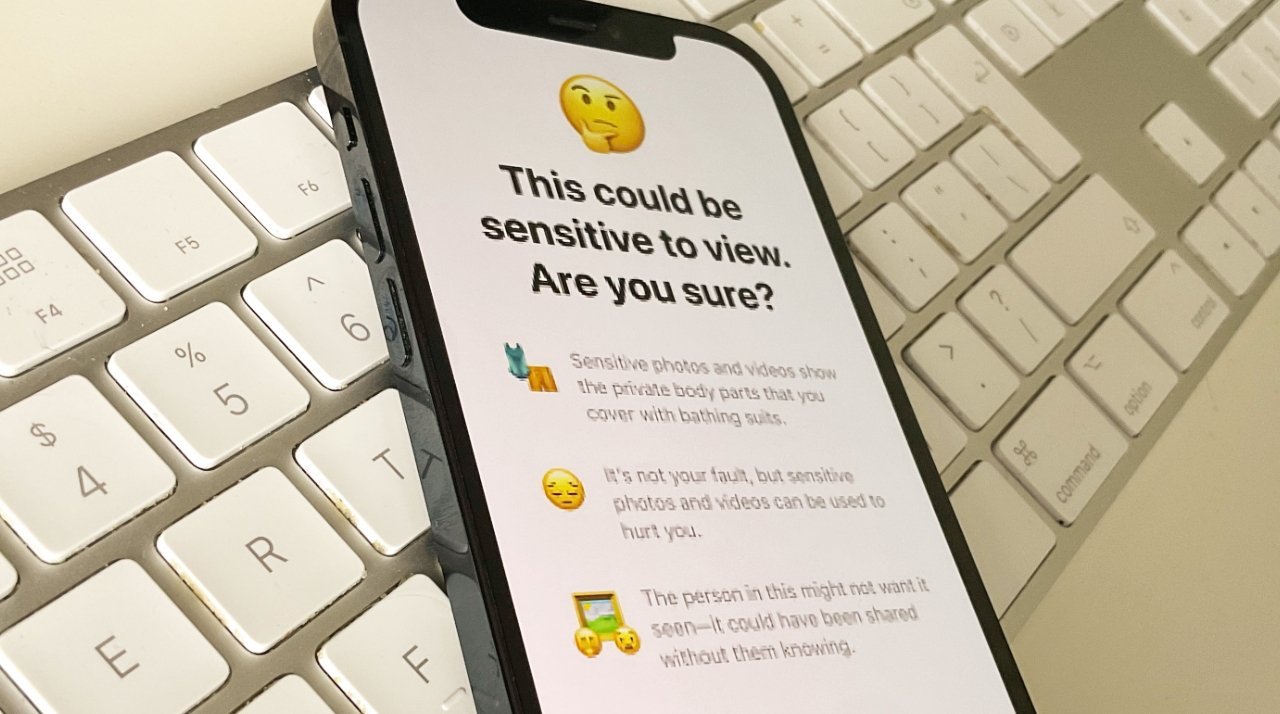

In 2022, Apple abandoned its plans for Child Sexual Abuse Material (CSAM) detection, following allegations that it would ultimately be used for surveillance of all users. The company switched to a set of features it calls Communication Safety, which is what blurs nude photos sent to children.

According to The Guardian newspaper, the UK's National Society for the Prevention of Cruelty to Children (NSPCC) says Apple is vastly undercounting incidents of CSAM in services such as iCloud, FaceTime and iMessage. All US technology companies are required to report detected cases of CSAM to the National Center for Missing & Exploited Children (NCMEC), and in 2023, Apple made 267 reports.

These reports purported to cover CSAM detection globally. But the UK's NSPCC has independently found that Apple was implicated in 337 offenses between April 2022 and March 2023, in England and Wales alone.

"There is a concerning discrepancy between the number of UK child abuse image crimes taking place on Apple's services and the almost negligible number of global reports of abuse content they make to authorities," said Richard Collard, head of child safety online policy at the NSPCC. "Apple is clearly behind many of their peers in tackling child sexual abuse when all tech firms should be investing in safety and preparing for the roll out of the Online Safety Act in the UK."

By comparison, Google reported 1,470,958 cases in 2023. For the same period, Meta reported 17,838,422 cases on Facebook and 11,430,007 on Instagram.

Apple is unable to see the contents of users' iMessages, as it is an encrypted service. However, the NCMEC notes that Meta's WhatsApp is also encrypted, yet Meta reported around 1,389,618 suspected CSAM cases in 2023.

In response to the allegations, Apple reportedly referred The Guardian only to its previous statements about overall user privacy.

Reportedly, some child abuse experts are concerned about AI-generated CSAM images. The forthcoming Apple Intelligence will not create photorealistic images.