Mainframe systems have been the backbone of enterprise computing for decades, renowned for their reliability, performance, and security. However, the evolving business landscape demands agility, scalability, and cost-effectiveness, prompting organizations to explore cloud-based solutions. Major technology companies, including cloud providers and system integrators, have invested heavily in mainframe migration practices, recognizing the significance of this transformation.

Mainframes and cloud computing each have their strengths and specific use cases. It isn’t fair to generalize about either, and in practice, a hybrid approach is common. This post explores a practical solution for mainframe workload migration using a hybrid pattern, where certain workloads move to the cloud while still interacting with on-premises applications and data sources.

I aim to provide an end-to-end workflow with the detailed, hands-on information necessary for implementing production-ready solutions. The focus is on migrating a mainframe workload to IBM Cloud, but the solution remains cloud-agnostic. To emphasize this, I’ll conclude with a reference architecture for AWS.

This post takes a solutions architect’s perspective, outlining a mainframe workload migration. While some standard assumptions are made for simplicity, the scenario remains realistic. The post also covers a multi-zone deployment strategy for high availability and disaster recovery.

Do note that we will not be diving into code transformations and database migrations in this blog. The goal here is to give you the necessary tools and architecture to leverage when you embark upon a mainframe modernization journey.

Methodology

The proposed mainframe modernization strategy adopts a hybrid approach, enabling the co-existence of mainframe and cloud environments. By leveraging refactoring techniques, mainframe workloads are transformed into cloud-native applications while maintaining integration with on-premises systems and data sources. The solution encompasses various aspects, including:

- Application refactoring: Transforming mainframe applications, such as CICS and BMS maps, into modern, cloud-native Java applications

- Data migration: Migrating mainframe data stores, including DB2 and VSAM datasets, to cloud-based managed database services, such as IBM Db2 on Cloud or Amazon Aurora PostgreSQL

- Secure connectivity: Establishing secure connectivity between the cloud environment and on-premises systems, leveraging technologies like Direct Connect or Virtual Private Network (VPN)

- Integration and interoperability: Facilitating seamless integration and interoperability between the migrated applications and existing on-premises systems through secure file transfers and API-based interactions

- Security and compliance: Implementing robust security measures, including encryption, access controls, network segmentation, and threat detection, to ensure data protection and regulatory compliance

- High availability and disaster recovery: Deploying multi-zone and multi-region strategies to achieve high availability and disaster recovery, aligning with the original mainframe environment’s uptime requirements

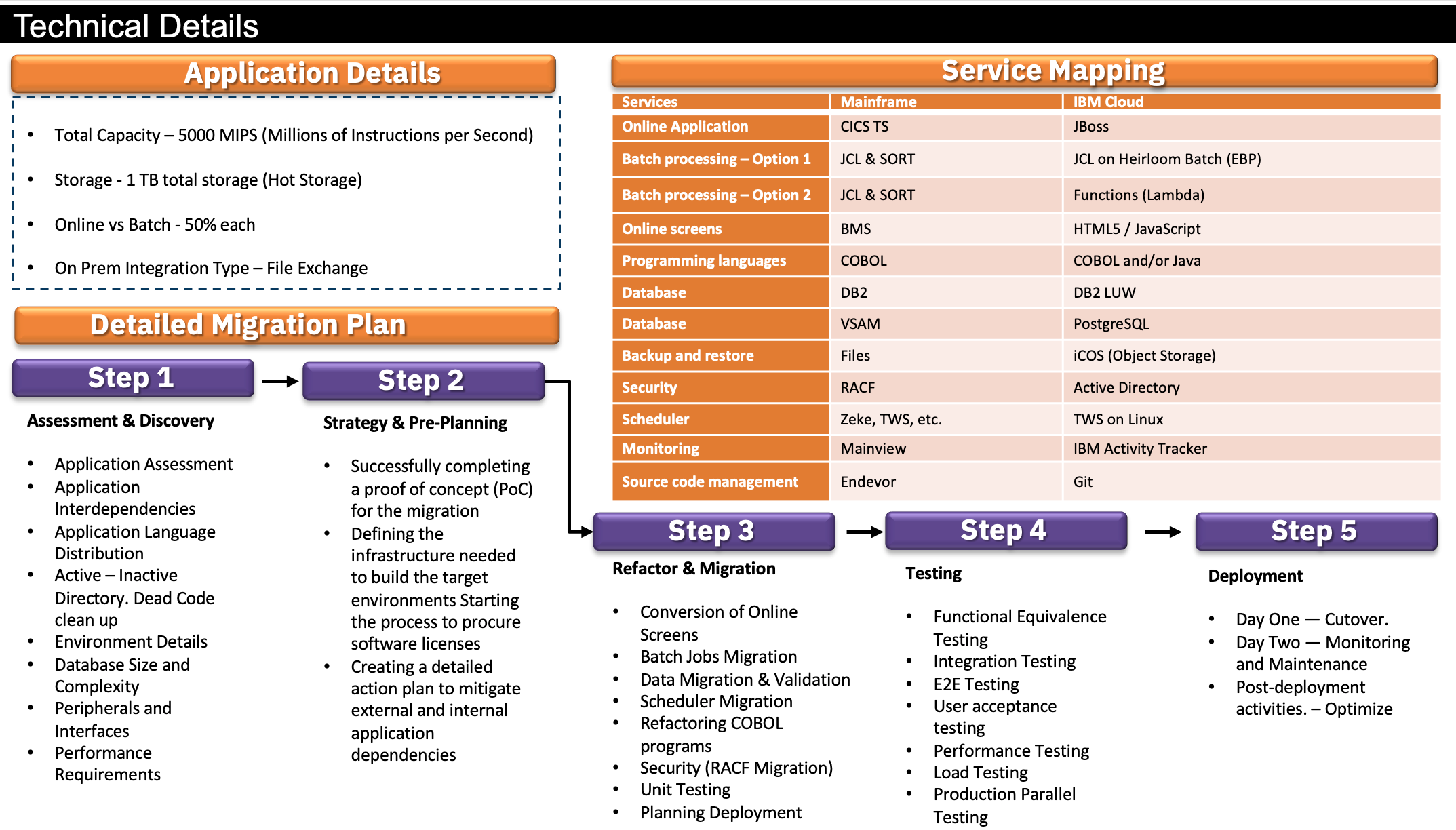

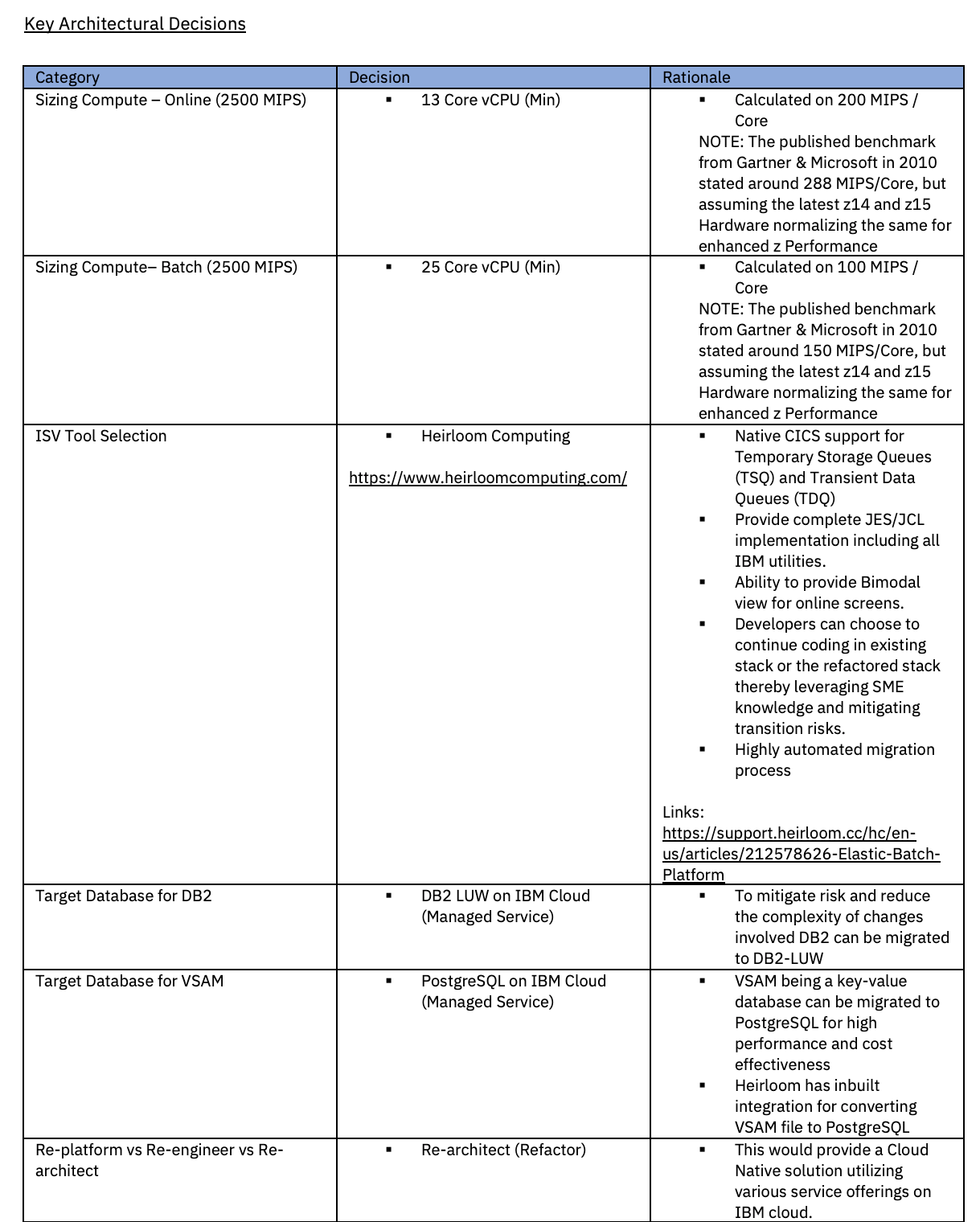

For the purpose of this blog, we will consider the following technical details.

Assumptions

- The 5000 MIPS figure is based on a single LPAR and is not spread across LPARs.

- The 1 TB of total storage listed is all hot storage, and the design provisions for DASD-like access. Any cold storage discovered in the assessment phase will be factored in later.

- 50% of the applying consists of batch processing.

- The migrated application would still need to communicate with existing on-premises applications.

- The owners of the current application have access to all of its underlying source code, which will be used for refactoring.
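The sizing assumptions above can be written down as a small workload profile so that the derived figures are explicit. This is a minimal sketch: the `WorkloadProfile` class and its field names are hypothetical, and only the 5000 MIPS, 1 TB, and 50% batch values come from the assumptions listed here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class WorkloadProfile:
    """Hypothetical container for the sizing assumptions in this post."""

    total_mips: int        # capacity of the single LPAR
    hot_storage_tb: float  # all storage is treated as hot (DASD-like access)
    batch_share: float     # fraction of the application that is batch

    @property
    def batch_mips(self) -> float:
        # Portion of the capacity consumed by batch processing.
        return self.total_mips * self.batch_share

    @property
    def oltp_mips(self) -> float:
        # Whatever is not batch is treated as online (OLTP) work.
        return self.total_mips - self.batch_mips


profile = WorkloadProfile(total_mips=5000, hot_storage_tb=1.0, batch_share=0.5)
print(f"Batch: {profile.batch_mips:.0f} MIPS, OLTP: {profile.oltp_mips:.0f} MIPS")
```

Keeping the batch and online shares separate is useful because the two halves typically have different redundancy needs on the target platform.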

The end-to-end project plan will follow this path:

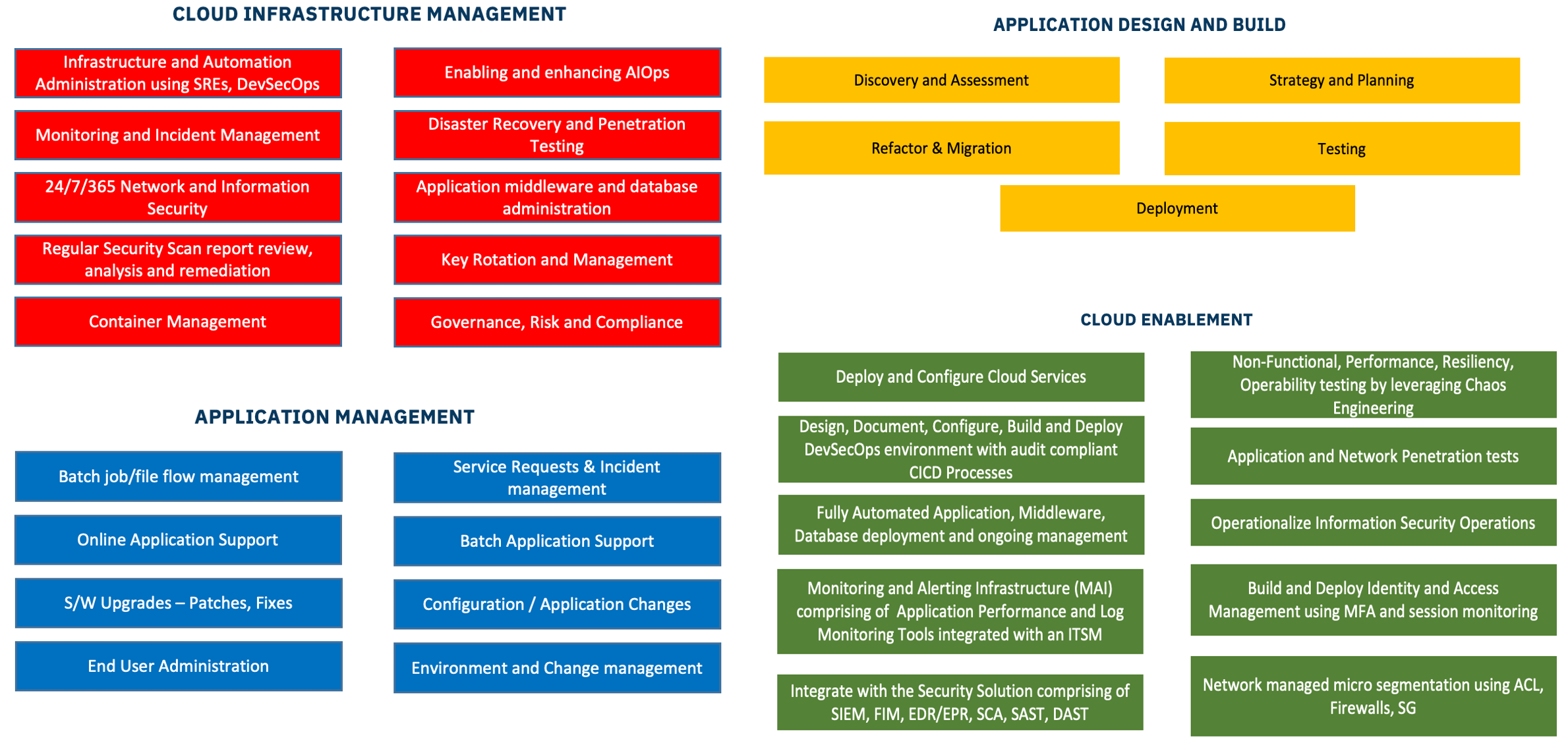

The following cloud migration model covers the different aspects of the target state (cloud). Moving to the cloud also comes with different responsibilities and best practices.

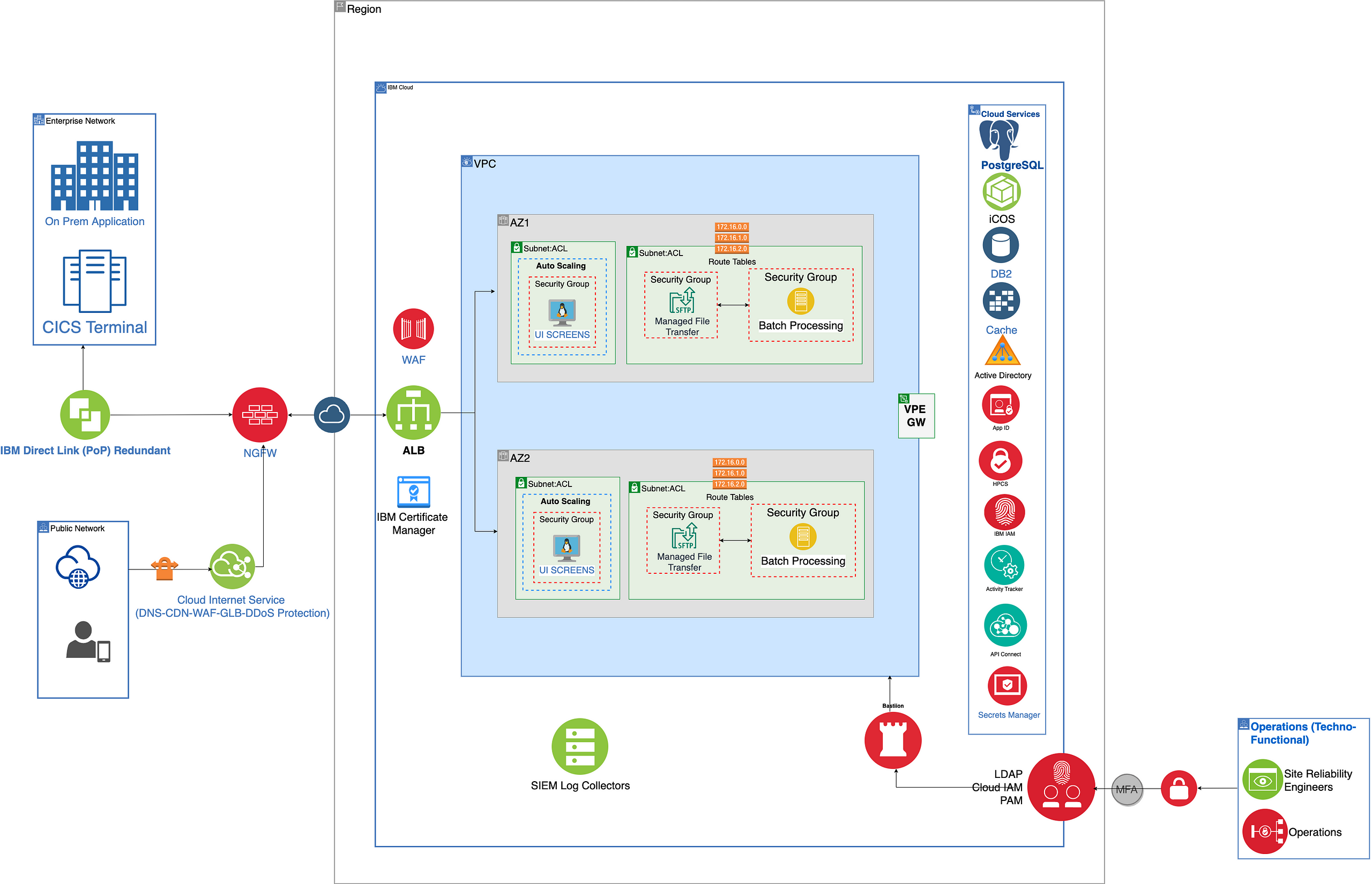

Reference Architecture (IBM Cloud)

This section provides detailed reference architectures tailored for both IBM Cloud and Amazon Web Services (AWS), demonstrating the cloud-agnostic nature of the proposed solution. These architectures illustrate the integration of various cloud services and components, such as load balancers, virtual private endpoints, bastion hosts, and role-based access controls (RBAC), to address the specific requirements of the migrated mainframe workload.

Reference architecture on IBM Cloud

- Web users with different personas interact with the UI screens (refactored from CICS/BMS maps) over the public internet. The traffic here is shown to be routed through IBM Cloud Internet Services, which includes Domain Name Service (DNS), Global Load Balancer (GLB), Distributed Denial of Service (DDoS) protection, Web Application Firewall (WAF), Transport Layer Security (TLS), Rate Limiting, Smart Routing, and Caching.

- On-premises applications communicate through Direct Connect for a secure, fast, and reliable connection to the platform.

- The on-premises applications exchange data with the migrated application running on IBM Cloud using a managed file transfer service.

- Secure Sockets Layer (SSL) or Transport Layer Security (TLS) is used to authenticate data transfers originating from outside the network.

- The incoming traffic hits a Load Balancer; SSL offloading happens at the Web Application Firewalls. All in-transit data is aggregated and analyzed for threats and compliance adherence.

- East-West traffic is controlled by Access Control Lists (ACL) and Security Groups (SG). ACLs and SGs help isolate workloads from one another and secure them individually.

- The Application Load Balancer (ALB) will route the traffic based on standard health checks and other routing rules, providing the necessary resiliency.

- Incoming and outgoing data would leverage a predefined Landing Zone, which can be set up with any File Gateway (e.g., IBM Sterling File Gateway) using standard file transfer protocols.

- Both Online and Batch applications are refactored into Java applications running on Virtual Machines on the cloud.

- Leveraging the Virtual Private Endpoint, the application layers connect to the native services (Managed and Hosted) on IBM Cloud without traversing the public internet.

- All data at rest (datastore, database, backups, snapshots) will be encrypted for enhanced security.

- Outbound data is transferred to on-premises downstream applications using the same secured network.

- Admin and Management traffic would be regulated with privileged access for operations and maintenance.

- The Bastion Host provides admin access to the virtual machines (VMs), maximizing security by minimizing open ports.

- Service-based authentication is used to establish trusted identities to control access to services and resources.

- Role-based access controls (RBAC) will be used to provide the required authorization and to restrict access rights and permissions.

- All the logs, raw security data, and event data generated by the applications, databases, security devices, and host systems will be sent to a standard SIEM interface for aggregation, analysis, and detection.

- All Infrastructure, Management, and Security services and components are deployed to support the Production Application and Data Availability requirements aligned to the current on-premises Mainframe environment.
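To make the health-check-based routing in the list above more concrete, here is a minimal sketch of a round-robin router that skips unhealthy backends. It is an illustration only, not the API of any IBM Cloud or AWS load balancer: the `Backend` and `RoundRobinRouter` names, the VM names, and the zone labels are all hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Backend:
    """Hypothetical application VM registered behind the load balancer."""

    name: str
    zone: str
    healthy: bool = True  # would be updated by periodic health checks


class RoundRobinRouter:
    """Round-robin routing that only returns backends marked healthy."""

    def __init__(self, backends: List[Backend]) -> None:
        if not backends:
            raise ValueError("at least one backend is required")
        self._backends = list(backends)
        self._next = 0  # index of the backend to try first on the next call

    def route(self) -> Backend:
        count = len(self._backends)
        # Try each backend at most once, starting from the rotation pointer.
        for offset in range(count):
            index = (self._next + offset) % count
            candidate = self._backends[index]
            if candidate.healthy:
                self._next = (index + 1) % count
                return candidate
        raise RuntimeError("no healthy backend available")


vm_a = Backend(name="app-vm-a", zone="zone-1")
vm_b = Backend(name="app-vm-b", zone="zone-2")
router = RoundRobinRouter([vm_a, vm_b])

before_failure = [router.route().name for _ in range(2)]
vm_a.healthy = False  # simulate a failed health check in zone-1
after_failure = [router.route().name for _ in range(2)]
print(before_failure, after_failure)
```

When one zone fails its health check, traffic keeps flowing to the remaining zone, which is the resiliency property the load balancer bullet describes.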

Deployment Strategy for High Availability and Disaster Recovery

In general, mainframe applications are highly available. Some of them deliver up to five nines (99.999%) of availability, which can be further increased by clustering technology like Parallel Sysplex. The deployment model must meet the availability and resiliency requirements of the on-premises mainframe environment.
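To put these availability figures in perspective, the sketch below converts an availability target into allowed downtime per year and estimates the combined availability of redundant zones. It assumes zone failures are independent, which real deployments only approximate, and the 99.9% per-zone figure is a hypothetical input rather than a number from any provider’s SLA.

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600 minutes in a non-leap year


def annual_downtime_minutes(availability: float) -> float:
    """Allowed downtime per year for a given availability (e.g. 0.99999)."""
    return (1.0 - availability) * MINUTES_PER_YEAR


def parallel_availability(zone_availability: float, zones: int) -> float:
    """Availability when the service is up if at least one zone is up.

    Assumes zones fail independently of each other.
    """
    return 1.0 - (1.0 - zone_availability) ** zones


five_nines = 0.99999
print(f"Five nines allows {annual_downtime_minutes(five_nines):.2f} minutes of downtime per year")

# Hypothetical per-zone availability of 99.9%, deployed across two zones.
two_zone = parallel_availability(0.999, zones=2)
print(f"Two zones at 99.9% each: {two_zone:.6f}")
```

Under these assumptions, five nines leaves a little over five minutes of downtime per year, which is why zone-level redundancy matters for the online portion of the workload.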

For this application, we will target a two-zone deployment in the Primary region, with redundancy built in through another single-zone deployment on the DR side. This gives an optimal cost case for an application with 5000 MIPS where only 50% (2500 MIPS) is OLTP.

Batch processing, in general, doesn’t warrant that much built-in redundancy. Based on the business case, we can always move to a two-zone deployment on the DR side as well, but that will increase the cost. Also, while migrating mainframe applications, it needs to be assessed whether they can leverage multi-zone deployments at both the Application and Database layers to gain the maximum benefit of distributed processing.

At this point, we are not considering any RTO and RPO requirements. The deployment strategy may change based on the actual RTO and RPO requirements.

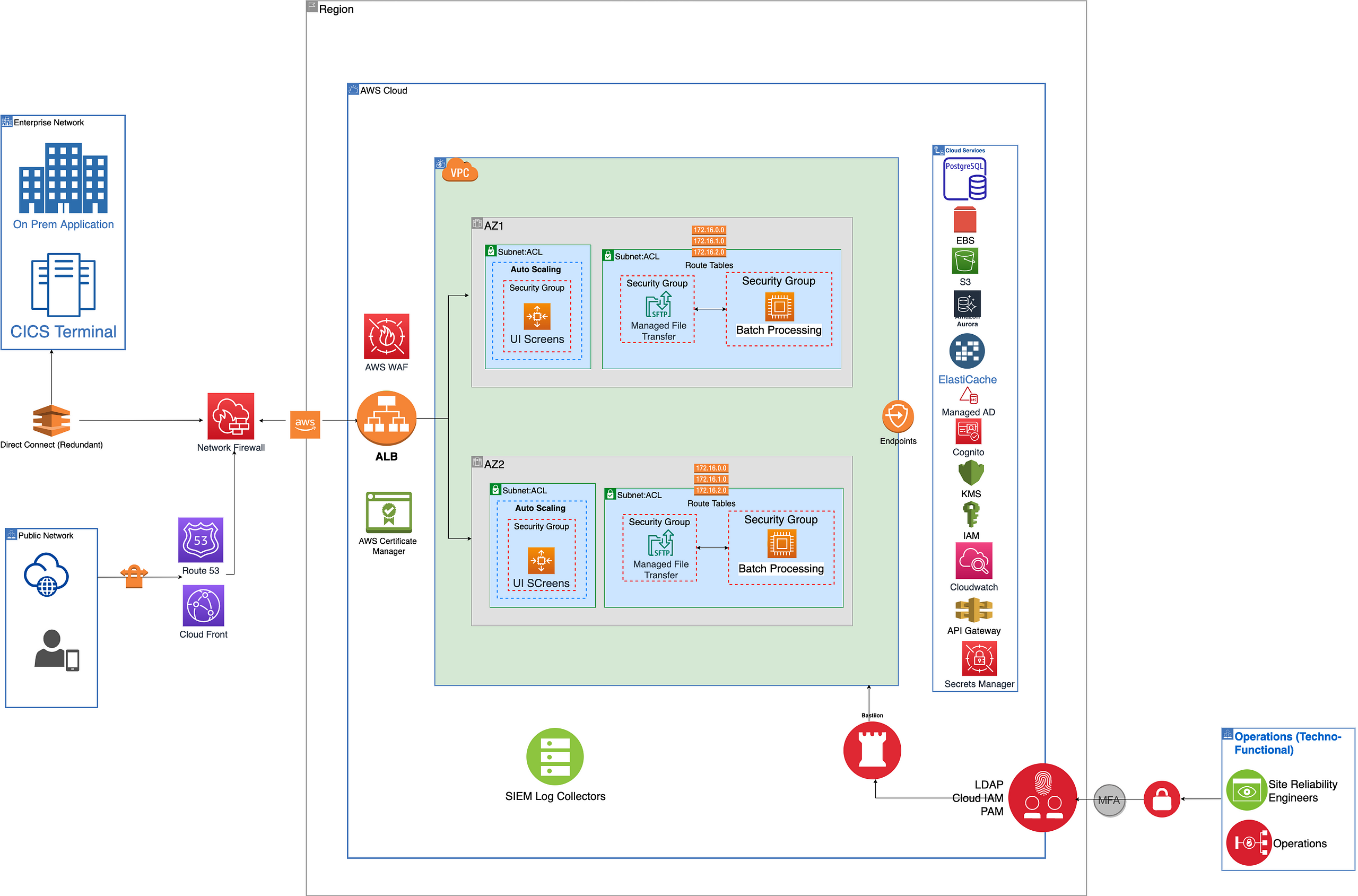

Reference Architecture (AWS Cloud)

Reference architecture on AWS Cloud

As previously noted, I’ll conclude this post by presenting an equivalent AWS reference architecture. This illustration demonstrates that, despite some differences in the underlying services, the overall architectural structure remains largely consistent.

For simplicity and ease of reading, I have kept the basic architecture while replacing the services and components with their AWS equivalents.

A key point to highlight is the approach to database hosting. Though it is possible to run Db2 as a self-managed or AWS-managed application on Amazon Web Services (AWS), for the purpose of showcasing heterogeneous migration, we have leveraged Aurora PostgreSQL for both the DB2 and VSAM datasets.

Conclusion

I have tried to capture the integral components of this solution, but every use case will be different and may need further refinements. Nevertheless, this can serve as a starting point for your migration solutions.