Quite a few AI projects launched with promise fail to set sail. This isn’t usually due to the quality of the machine learning (ML) models. Poor implementation and system integration sink 90% of projects. Organizations can save their AI endeavors by adopting adequate MLOps practices and choosing the right set of tools. This article discusses MLOps practices and tools that can save sinking AI projects and boost robust ones, potentially doubling project launch speed.

MLOps in a Nutshell

MLOps is a combination of machine learning application development (Dev) and operational activities (Ops). It’s a set of practices that helps automate and streamline the deployment of ML models. As a result, the entire ML lifecycle becomes standardized.

MLOps is complex. It requires harmony between data management, model development, and operations. It may also need shifts in technology and culture within an organization. If adopted smoothly, MLOps allows professionals to automate tedious tasks, such as data labeling, and make deployment processes transparent. It helps ensure that project data is secure and compliant with data privacy laws.

Organizations enhance and scale their ML systems through MLOps practices. This makes collaboration between data scientists and engineers more effective and fosters innovation.

Weaving AI Projects From Challenges

MLOps professionals transform raw business challenges into streamlined, measurable machine learning goals. They design and manage ML pipelines, ensuring thorough testing and accountability throughout an AI project’s lifecycle.

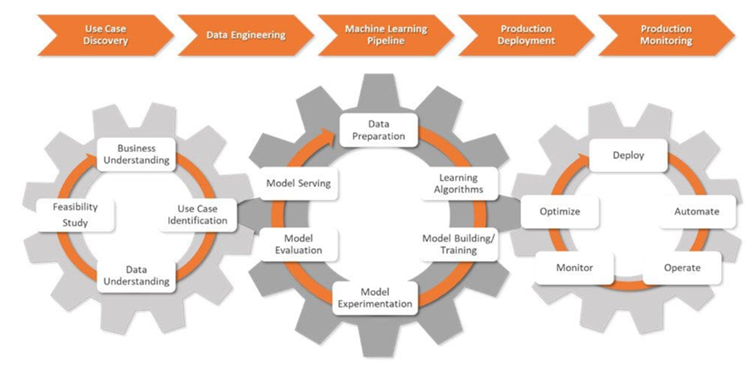

Within the preliminary part of an AI undertaking known as use case discovery, information scientists work with companies to outline the issue. They translate it into an ML drawback assertion and set clear aims and KPIs.

MLOps framework

Next, data scientists team up with data engineers. They gather data from various sources, and then clean, process, and validate this data.

When data is ready for modeling, data scientists design and deploy robust ML pipelines, integrated with CI/CD processes. These pipelines support testing and experimentation and help track data, model lineage, and associated KPIs across all experiments.

In the production deployment stage, ML models are deployed in the chosen environment: cloud, on-premises, or hybrid.

Data scientists monitor the models and infrastructure, using key metrics to spot changes in data or model performance. When they detect changes, they update the algorithms, data, and hyperparameters, creating new versions of the ML pipelines. They also manage memory and computing resources to keep models scalable and running smoothly.
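
One way to spot a change in the data is to compare the distribution of a feature in production against the training sample. The sketch below uses the Population Stability Index for that purpose. It is an illustration rather than a method named in this article: the function name, the sample data, and the 0.2 threshold are all assumptions.

```python
import math
from typing import Sequence


def population_stability_index(
    reference: Sequence[float], current: Sequence[float], bins: int = 10
) -> float:
    """Compare two samples of one numeric feature; larger values mean more drift."""
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 for a constant feature

    def bucket_shares(values: Sequence[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            # Clamp out-of-range values into the first or last bucket.
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # A small floor keeps the logarithm defined for empty buckets.
        return [max(c / len(values), 1e-6) for c in counts]

    ref, cur = bucket_shares(reference), bucket_shares(current)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref, cur))


DRIFT_THRESHOLD = 0.2  # hypothetical cut-off; tune it per feature

training_sample = [i / 100 for i in range(100)]
live_same = [i / 100 for i in range(100)]           # same distribution
live_shifted = [0.5 + i / 200 for i in range(100)]  # squeezed into the upper half

psi_same = population_stability_index(training_sample, live_same)
psi_shifted = population_stability_index(training_sample, live_shifted)
needs_retraining = psi_shifted > DRIFT_THRESHOLD
```

In a real pipeline, a check like this would typically run on a schedule, and a value above the threshold would trigger the retraining and re-versioning step described above.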

MLOps Tools Meet AI Projects

Picture a data scientist developing an AI application to enhance a client’s product design process. This solution will accelerate the prototyping phase by providing AI-generated design alternatives based on specified parameters.

Data scientists navigate through many tasks, from designing the framework to monitoring the AI model in real time. They need the right tools and a grasp of how to use them at every step.

Better LLM Performance, Smarter AI Apps

At the core of an accurate and adaptable AI solution are vector databases and these key tools to boost LLM performance:

- Guardrails is an open-source Python package that helps data scientists add structure, type, and quality checks to LLM outputs. It automatically handles errors and takes actions, like re-querying the LLM, if validation fails. It also enforces guarantees on output structure and types, such as JSON.

- Data scientists need a tool for efficient indexing, searching, and analyzing large datasets. This is where LlamaIndex steps in. The framework provides powerful capabilities to manage and extract insights from extensive information repositories.

- The DUST framework allows LLM-powered applications to be created and deployed without execution code. It helps with the introspection of model outputs, supports iterative design improvements, and tracks different solution versions.
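
The validate-then-re-query pattern mentioned for LLM outputs can be sketched in plain Python. This is not the Guardrails API. The schema, the function names, and the stubbed model below are hypothetical and only illustrate the control flow.

```python
import json
from typing import Callable

# Hypothetical schema: field name -> accepted Python type(s).
REQUIRED_FIELDS = {"material": str, "width_mm": (int, float)}


def validate(raw: str) -> dict:
    """Parse the output as JSON and check field presence and types."""
    data = json.loads(raw)  # raises json.JSONDecodeError (a ValueError) on bad JSON
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    for field, expected in REQUIRED_FIELDS.items():
        if not isinstance(data.get(field), expected):
            raise ValueError(f"field {field!r} is missing or has the wrong type")
    return data


def ask_with_validation(
    llm: Callable[[str], str], prompt: str, max_attempts: int = 3
) -> dict:
    """Call the model, validate the answer, and re-query with the error on failure."""
    current_prompt = prompt
    for _ in range(max_attempts):
        raw = llm(current_prompt)
        try:
            return validate(raw)
        except ValueError as err:
            current_prompt = (
                f"{prompt}\nThe previous answer was invalid ({err}). "
                "Return valid JSON only."
            )
    raise RuntimeError(f"no valid output after {max_attempts} attempts")


# Stub standing in for a real model: it fails once, then answers correctly.
_replies = iter(["width is 40", '{"material": "aluminium", "width_mm": 40}'])


def fake_llm(prompt: str) -> str:
    return next(_replies)


design = ask_with_validation(fake_llm, "Propose a bracket design as JSON.")
```

Passing the model in as a callable keeps the loop independent of any particular LLM client, so the same check can wrap whichever provider the project uses.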

Track Experiments and Manage Model Metadata

Data scientists experiment to better understand and improve ML models over time. They need tools to set up a system that enhances model accuracy and efficiency based on real-world results.

- MLflow is an open-source powerhouse, useful for overseeing the entire ML lifecycle. It provides features like experiment tracking, model versioning, and deployment capabilities. This suite lets data scientists log and compare experiments, track metrics, and keep ML models and artifacts organized.

- Comet ML is a platform for tracking, comparing, explaining, and optimizing ML models and experiments. Data scientists can use Comet ML with Scikit-learn, PyTorch, TensorFlow, or Hugging Face, and it will provide insights to improve ML models.

- Amazon SageMaker covers the entire machine learning lifecycle. It helps label and prepare data, as well as build, train, and deploy complex ML models. Using this tool, data scientists quickly deploy and scale models across various environments.

- Microsoft Azure ML is a cloud-based platform that helps streamline machine learning workflows. It supports frameworks like TensorFlow and PyTorch, and it can also integrate with other Azure services. This tool helps data scientists with experiment tracking, model management, and deployment.

- DVC (Data Version Control) is an open-source tool meant to handle large datasets and machine learning experiments. This tool makes data science workflows more agile, reproducible, and collaborative. DVC works with existing version control systems like Git, simplifying how data scientists track changes and share progress on complex AI projects.
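
To make "log and compare experiments" concrete, here is a minimal file-based sketch of what an experiment tracker records. It does not use the API of MLflow, Comet ML, or any other tool listed above. The `RunLog` class, the file name, and the parameter and metric values are invented for illustration.

```python
import json
import tempfile
import time
from pathlib import Path


class RunLog:
    """Append-only log of experiment runs, one JSON object per line."""

    def __init__(self, path: Path) -> None:
        self.path = path

    def log_run(self, params: dict, metrics: dict) -> None:
        record = {"timestamp": time.time(), "params": params, "metrics": metrics}
        with self.path.open("a", encoding="utf-8") as fh:
            fh.write(json.dumps(record) + "\n")

    def runs(self) -> list[dict]:
        if not self.path.exists():
            return []
        with self.path.open(encoding="utf-8") as fh:
            return [json.loads(line) for line in fh if line.strip()]

    def best_run(self, metric: str) -> dict:
        """Return the run with the highest value of the given metric."""
        return max(self.runs(), key=lambda run: run["metrics"][metric])


# Two hypothetical training runs with made-up hyperparameters and scores.
log = RunLog(Path(tempfile.mkdtemp()) / "runs.jsonl")
log.log_run({"learning_rate": 0.1, "max_depth": 3}, {"auc": 0.81})
log.log_run({"learning_rate": 0.05, "max_depth": 5}, {"auc": 0.86})
best = log.best_run("auc")
```

Dedicated trackers add a UI, artifact storage, and model versioning on top, but the core record is the same: the parameters that went in and the metrics that came out.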

Optimize and Manage ML Workflows

Data scientists need optimized workflows to achieve smoother and more effective processes on AI projects. The following tools can help:

- Prefect is a modern open-source tool that data scientists use to monitor and orchestrate workflows. Lightweight and flexible, it has options to manage ML pipelines (Prefect Orion UI and Prefect Cloud).

- Metaflow is a powerful tool for managing workflows. It’s meant for data science and machine learning. It lets data scientists focus on model development without the hassle of MLOps complexities.

- Kedro is a Python-based tool that helps data scientists keep a project reproducible, modular, and easy to maintain. It applies key software engineering principles to machine learning (modularity, separation of concerns, and versioning). This helps data scientists build efficient, scalable projects.
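
Workflow tools of this kind generally model a pipeline as small steps with declared dependencies and run them in dependency order. The sketch below shows that idea with the standard library only. It is not the API of Prefect, Metaflow, or Kedro, and the step names and toy "model" are hypothetical.

```python
from graphlib import TopologicalSorter
from typing import Callable


def load_data() -> list[float]:
    return [3.0, 1.0, 2.0]


def clean(raw: list[float]) -> list[float]:
    return sorted(raw)


def train(rows: list[float]) -> float:
    # Toy "model": the mean of the cleaned data.
    return sum(rows) / len(rows)


# Each step maps to (function, names of the steps whose outputs it consumes).
PIPELINE: dict[str, tuple[Callable, list[str]]] = {
    "train": (train, ["clean"]),
    "clean": (clean, ["load_data"]),
    "load_data": (load_data, []),
}


def run_pipeline(pipeline: dict[str, tuple[Callable, list[str]]]) -> dict[str, object]:
    """Run every step after its dependencies and collect the outputs by name."""
    graph = {name: set(deps) for name, (_, deps) in pipeline.items()}
    results: dict[str, object] = {}
    for name in TopologicalSorter(graph).static_order():
        func, deps = pipeline[name]
        results[name] = func(*(results[dep] for dep in deps))
    return results


outputs = run_pipeline(PIPELINE)
```

Because each step is a plain function with explicit inputs, steps can be tested in isolation and reordered or replaced without touching the runner, which is the modularity benefit the tools above aim for.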

Manage Data and Control Pipeline Versions

ML workflows need precise data management and pipeline integrity. With the right tools, data scientists stay on top of those tasks and handle even the most complex data challenges with confidence.

- Pachyderm helps data scientists automate data transformation and offers robust features for data versioning, lineage, and end-to-end pipelines. These features can run seamlessly on Kubernetes. Pachyderm supports integration with various data types (images, logs, videos, and CSVs) and multiple languages (Python, R, SQL, and C/C++). It scales to handle petabytes of data and thousands of jobs.

- LakeFS is an open-source tool designed for scalability. It adds Git-like version control to object storage and supports data version control on an exabyte scale. This tool is ideal for handling extensive data lakes. Data scientists use this tool to manage data lakes with the same ease as they handle code.
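
The idea of Git-like versioning for data can be illustrated with a small content-addressed store: files are stored under the hash of their content, and a commit is a snapshot mapping paths to hashes. This is a conceptual sketch only, not a description of how Pachyderm or LakeFS is implemented. The `DataRepo` class and the file contents are made up.

```python
import hashlib


class DataRepo:
    """In-memory, content-addressed store with snapshot commits."""

    def __init__(self) -> None:
        self.blobs: dict[str, bytes] = {}              # content hash -> bytes
        self.commits: dict[str, dict[str, str]] = {}   # commit id -> {path: hash}

    def commit(self, files: dict[str, bytes]) -> str:
        snapshot: dict[str, str] = {}
        for path, content in files.items():
            digest = hashlib.sha256(content).hexdigest()
            self.blobs.setdefault(digest, content)  # identical content is stored once
            snapshot[path] = digest
        # The commit id is derived from the snapshot, so equal snapshots share an id.
        manifest = "\n".join(f"{p} {h}" for p, h in sorted(snapshot.items()))
        commit_id = hashlib.sha256(manifest.encode("utf-8")).hexdigest()[:12]
        self.commits[commit_id] = snapshot
        return commit_id

    def checkout(self, commit_id: str) -> dict[str, bytes]:
        """Rebuild the exact files that belonged to a commit."""
        return {p: self.blobs[h] for p, h in self.commits[commit_id].items()}


repo = DataRepo()
v1 = repo.commit({"train.csv": b"a,b\n1,2\n", "labels.csv": b"y\n0\n"})
v2 = repo.commit({"train.csv": b"a,b\n1,2\n3,4\n", "labels.csv": b"y\n0\n"})
```

Here `labels.csv` is unchanged between the two commits, so its content is stored once, while either version of `train.csv` can still be restored by commit id.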

Test ML Models for Quality and Fairness

Data scientists focus on developing more reliable and fair ML solutions. They test models to minimize biases. The right tools help them assess key metrics, like accuracy and AUC, support error analysis and version comparison, document processes, and integrate seamlessly into ML pipelines.

- Deepchecks is a Python package that assists with validating ML models and data. It also eases checks on model performance, data integrity, and distribution mismatches.

- Truera is a modern model intelligence platform that helps data scientists increase trust and transparency in ML models. Using this tool, they can understand model behavior, identify issues, and reduce biases. Truera provides features for model debugging, explainability, and fairness assessment.

- Kolena is a platform that enhances team alignment and trust through rigorous testing and debugging. It provides an online environment for logging results and insights. Its focus is on ML unit testing and validation at scale, which is key to consistent model performance across different scenarios.
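
The metrics named above can be computed by hand on a toy example to show what such tests measure. The sketch below calculates accuracy, AUC, and a simple per-group accuracy gap as a rough fairness signal. The data, the group labels, and the 0.5 decision threshold are invented, and the code does not use any of the listed tools.

```python
from typing import Sequence


def accuracy(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def roc_auc(y_true: Sequence[int], scores: Sequence[float]) -> float:
    """Probability that a random positive outranks a random negative (ties count half)."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def accuracy_gap(
    y_true: Sequence[int], y_pred: Sequence[int], groups: Sequence[str]
) -> float:
    """Difference between the best and worst per-group accuracy."""
    per_group = []
    for g in set(groups):
        idx = [i for i, name in enumerate(groups) if name == g]
        per_group.append(accuracy([y_true[i] for i in idx], [y_pred[i] for i in idx]))
    return max(per_group) - min(per_group)


# Toy evaluation set with hypothetical group labels.
y_true = [0, 0, 1, 1]
scores = [0.1, 0.4, 0.35, 0.8]
groups = ["a", "a", "b", "b"]
y_pred = [int(s >= 0.5) for s in scores]

model_accuracy = accuracy(y_true, y_pred)
model_auc = roc_auc(y_true, scores)
group_gap = accuracy_gap(y_true, y_pred, groups)
```

In a pipeline, values like these would be compared against agreed thresholds so that a model version with a drop in AUC or a widening group gap fails the build instead of reaching production.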

Bring Models to Life

Data scientists need dependable tools to efficiently deploy ML models and serve predictions reliably. The following tools help them achieve smooth and scalable ML operations:

- BentoML is an open platform that helps data scientists handle ML operations in production. It helps streamline model packaging and optimize serving workloads for efficiency. It also assists with faster setup, deployment, and monitoring of prediction services.

- Kubeflow simplifies deploying ML models on Kubernetes (locally, on-premises, or in the cloud). With this tool, the entire process becomes simple, portable, and scalable. It supports everything from data preparation to prediction serving.

Simplify the ML Lifecycle With End-To-End MLOps Platforms

End-to-end MLOps platforms are essential for optimizing the machine learning lifecycle, offering a streamlined approach to developing, deploying, and managing ML models effectively. Here are some leading platforms in this space:

- Amazon SageMaker offers a comprehensive interface that helps data scientists handle the entire ML lifecycle. It streamlines data preprocessing, model training, and experimentation, enhancing collaboration among data scientists. With features like built-in algorithms, automated model tuning, and tight integration with AWS services, SageMaker is a top pick for developing and deploying scalable machine learning solutions.

- Microsoft Azure ML Platform creates a collaborative environment that supports various programming languages and frameworks. It allows data scientists to use pre-built models, automate ML tasks, and seamlessly integrate with other Azure services, making it an efficient and scalable choice for cloud-based ML projects.

- Google Cloud Vertex AI provides a seamless environment for both automated model development with AutoML and custom model training using popular frameworks. Integrated tools and easy access to Google Cloud services make Vertex AI ideal for simplifying the ML process, helping data science teams build and deploy models effortlessly and at scale.

Signing Off

MLOps is not just another hype. It’s a vital field that helps professionals train and analyze large volumes of data more quickly, accurately, and easily. We can only imagine how this will evolve over the next ten years, but it’s clear that AI, big data, and automation are just beginning to gain momentum.