One of the greatest needs of enterprises is end-to-end visibility into their operational and analytical workflows. Where does data come from? Where does it go? To whom am I giving access? How can I track data quality issues? The capability to follow the data flow to answer these questions is called data lineage. This blog post explores market trends, efforts to provide an open standard with OpenLineage, and how data governance solutions from vendors such as IBM, Google, Confluent, and Collibra help fulfill the enterprise-wide data governance needs of most companies, including data streaming technologies such as Apache Kafka and Flink.

What Is Data Governance?

Data governance refers to the overall management of the availability, usability, integrity, and security of data used in an organization. It involves establishing processes, roles, policies, standards, and metrics to ensure that data is properly managed throughout its lifecycle. Data governance aims to ensure that data is accurate, consistent, secure, and compliant with regulatory requirements and organizational policies. It encompasses activities such as data quality management, data security, metadata management, and compliance with data-related regulations and standards.

What Is the Business Value of Data Governance?

The business value of data governance is significant and multifaceted:

- Improved data quality: Data governance ensures that data is accurate, consistent, and reliable, leading to better decision-making, reduced errors, and improved operational efficiency.

- Enhanced regulatory compliance: By establishing policies and procedures for data management and ensuring compliance with regulations such as GDPR, HIPAA, and CCPA, data governance helps mitigate risks associated with non-compliance, including penalties and reputational damage.

- Increased trust and confidence: Effective data governance instills trust and confidence in data among stakeholders. It leads to greater adoption of data-driven decision-making and improved collaboration across departments.

- Cost reduction: By reducing data redundancy, eliminating data inconsistencies, and optimizing data storage and maintenance processes, data governance helps organizations lower costs associated with data management and compliance.

- Better risk management: Data governance enables organizations to identify, assess, and mitigate risks associated with data management, security, privacy, and compliance, reducing the likelihood and impact of data-related incidents.

- Support for business initiatives: Data governance provides a foundation for strategic initiatives such as digital transformation, data analytics, and AI/ML projects by ensuring that data is available, accessible, and reliable for analysis and decision-making.

- Competitive advantage: Organizations with robust data governance practices can leverage data more effectively to gain insights, innovate, and respond to market changes quickly, giving them a competitive edge in their industry.

Overall, data governance contributes to improved data quality, compliance, trust, cost efficiency, risk management, and competitiveness, ultimately driving better business outcomes and value creation.

What Is Data Lineage?

Data lineage refers to the ability to trace the complete lifecycle of data, from its origin through every transformation and movement across different systems and processes. It provides a detailed understanding of how data is created, modified, and consumed within an organization’s data ecosystem, including information about its source, transformations applied, and destinations.

Data lineage is an essential component of data governance: Understanding data lineage helps organizations ensure data quality, compliance with regulations, and adherence to internal policies by providing visibility into data flows and transformations.

Data Lineage Is NOT Event Tracing!

Event tracing and data lineage are different concepts that serve distinct purposes in the realm of data management:

Data Lineage

- Data lineage refers to the ability to track and visualize the complete lifecycle of data, from its origin through every transformation and movement across different systems and processes.

- It provides a detailed understanding of how data is created, modified, and consumed within an organization’s data ecosystem, including information about its source, transformations applied, and destinations.

- Data lineage focuses on the flow of data and metadata, helping organizations ensure data quality, compliance, and trustworthiness by providing visibility into data flows and transformations.

Event Tracing

- Event tracing, also known as distributed tracing, is a technique used in distributed systems to monitor and debug the flow of individual requests or events as they traverse various components and services.

- It involves instrumenting applications to generate trace data, which contains information about the path and timing of events as they propagate across different nodes and services.

- Event tracing is primarily used for performance monitoring, troubleshooting, and root cause analysis in complex distributed systems, helping organizations identify bottlenecks, latency issues, and errors in request processing.

In summary, data lineage focuses on the lifecycle of data within an organization’s data ecosystem, while event tracing is more concerned with monitoring the flow of individual events or requests through distributed systems for troubleshooting and performance analysis.

Here is an example in payment processing: Data lineage would track the path of payment data from initiation to settlement, detailing each step and transformation it undergoes. Meanwhile, event tracing would monitor individual events within the payment system in real time, capturing the sequence and outcome of actions, such as authentication checks and transaction approvals.
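To make the lineage side of the payment example more concrete, here is a minimal Python sketch. It models lineage as a graph of datasets connected by jobs and walks it upstream to answer "where does this data come from?". The topic names, job names, and the `upstream_sources` helper are all hypothetical and only illustrate the idea; they are not taken from any product.

```python
from collections import deque

# Hypothetical lineage edges for a payment flow. Each edge reads
# "upstream dataset -> downstream dataset" and names the job in between.
LINEAGE_EDGES = [
    ("payments.initiated", "payments.authorized", "fraud-check"),
    ("customers.profile", "payments.authorized", "fraud-check"),
    ("payments.authorized", "payments.settled", "settlement"),
]


def upstream_sources(dataset, edges=LINEAGE_EDGES):
    """Return every dataset that directly or indirectly feeds `dataset`."""
    # Index the edges by target so we can look up the parents of a dataset.
    parents = {}
    for source, target, _job in edges:
        parents.setdefault(target, set()).add(source)

    # Breadth-first walk from the dataset back to its origins.
    seen = set()
    queue = deque([dataset])
    while queue:
        current = queue.popleft()
        for parent in parents.get(current, ()):
            if parent not in seen:
                seen.add(parent)
                queue.append(parent)
    return seen


print(sorted(upstream_sources("payments.settled")))
```

A trace, by contrast, would follow one specific payment request through the services that handled it, with timestamps per hop. The graph above says nothing about individual requests; it only describes how datasets depend on each other.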

What Is the Standard “OpenLineage”?

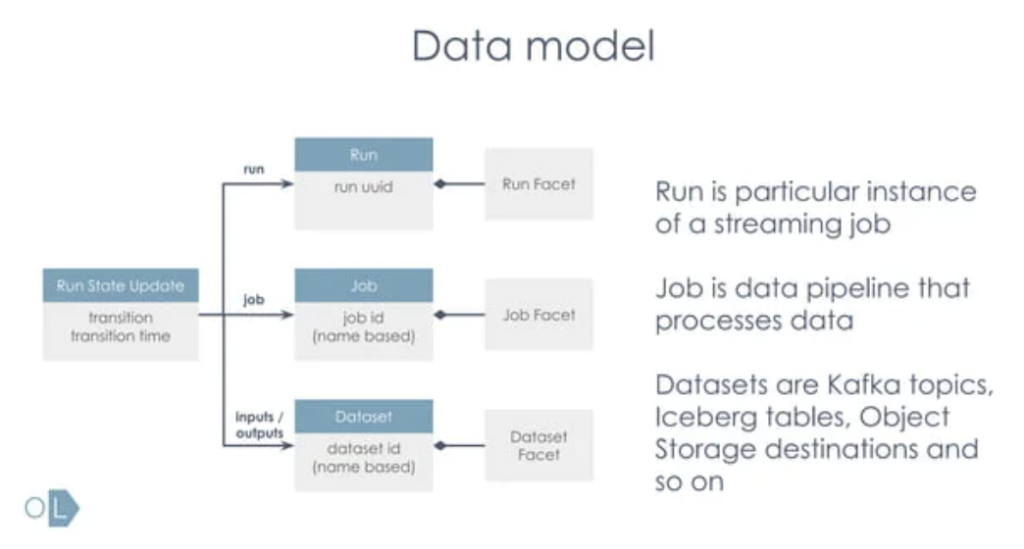

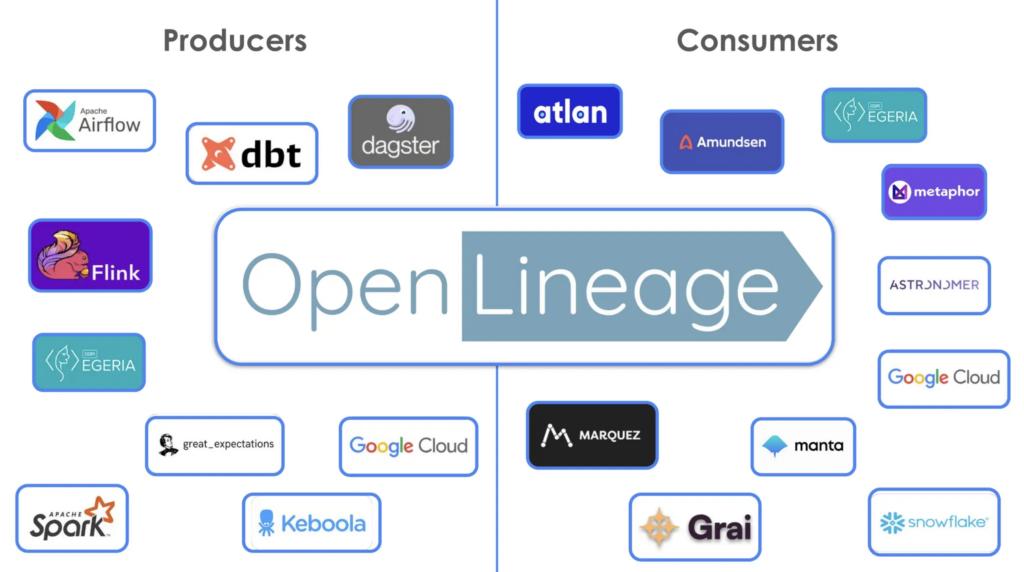

OpenLineage is an open-source project that aims to standardize metadata management for data lineage. It provides a framework for capturing, storing, and sharing metadata related to the lineage of data as it moves through various stages of processing within an organization’s data infrastructure. By providing a common format and APIs for expressing and accessing lineage information, OpenLineage enables interoperability between different data processing systems and tools, facilitating data governance, compliance, and data quality efforts.
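As a rough illustration of what such a common format can look like, the following Python sketch assembles a lineage run event as a plain dictionary. The field names (`eventType`, `eventTime`, `run`, `job`, `inputs`, `outputs`, `producer`, `schemaURL`) follow my understanding of the OpenLineage run event model and should be checked against the spec version you target. All values, including the namespaces, the producer URI, and the placeholder schema URL, are hypothetical, and no OpenLineage client library is used here.

```python
import json
import uuid
from datetime import datetime, timezone


def build_run_event(event_type, job_namespace, job_name, inputs, outputs,
                    producer, schema_url, run_id=None):
    """Assemble a lineage run event as a plain dict (no client library)."""
    return {
        "eventType": event_type,  # e.g. START, RUNNING, COMPLETE, FAIL
        "eventTime": datetime.now(timezone.utc).isoformat(),
        "run": {"runId": run_id or str(uuid.uuid4())},
        "job": {"namespace": job_namespace, "name": job_name},
        # Datasets are identified by a (namespace, name) pair.
        "inputs": [{"namespace": ns, "name": name} for ns, name in inputs],
        "outputs": [{"namespace": ns, "name": name} for ns, name in outputs],
        "producer": producer,
        "schemaURL": schema_url,
    }


# Hypothetical values for a job that reads one Kafka topic and writes another.
event = build_run_event(
    event_type="COMPLETE",
    job_namespace="payments",
    job_name="fraud-check",
    inputs=[("kafka://broker.example:9092", "payments.initiated")],
    outputs=[("kafka://broker.example:9092", "payments.authorized")],
    producer="https://example.com/payments-pipeline",
    # Placeholder: replace with the run event schema URL of your spec version.
    schema_url="https://example.com/replace-with-openlineage-spec-url",
)
print(json.dumps(event, indent=2))
```

In practice, an integration library would emit events like this to a lineage backend for you; the sketch only shows why a shared event shape lets different tools describe jobs and datasets in the same way.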

Source: OpenLineage (presented at Kafka Summit London 2024)

OpenLineage is an open platform for the collection and analysis of data lineage. It includes an open standard for lineage data collection, integration libraries for the most common tools, and a metadata repository/reference implementation (Marquez). Many frameworks and tools already support producers/consumers:

Source: OpenLineage (presented at Kafka Summit London 2024)

Data Governance for Data Streaming (Like Apache Kafka and Flink)

Data streaming involves the real-time processing and movement of data through a distributed messaging platform. This enables organizations to efficiently ingest, process, and analyze large volumes of data from various sources. By decoupling data producers and consumers, a data streaming platform provides a scalable and fault-tolerant solution for building real-time data pipelines to support use cases such as real-time analytics, event-driven architectures, and data integration.

The de facto standard for data streaming is Apache Kafka, used by over 100,000 organizations. Kafka is not just used for big data; it also provides support for transactional workloads.

Data Governance Differences With Data Streaming Compared to Data Lake and Data Warehouse

Implementing data governance and lineage with data streaming presents several differences and challenges compared to data lakes and data warehouses:

1. Real-Time Nature

Data streaming involves the processing of data in real time as it is generated, whereas data lakes and data warehouses typically deal with batch processing of historical data. This real-time nature of data streaming requires governance processes and controls that can operate at the speed of streaming data ingestion, processing, and analysis.

2. Dynamic Data Flow

Data streaming environments are characterized by dynamic and continuous data flows, with data being ingested, processed, and analyzed in near real time. This dynamic nature requires data governance mechanisms that can adapt to changing data sources, schemas, and processing pipelines in real time, ensuring that governance policies are applied consistently across the entire streaming data ecosystem.

3. Granular Data Lineage

In data streaming, data lineage needs to be tracked at a more granular level compared to data lakes and data warehouses. This is because streaming data often undergoes multiple transformations and enrichments as it moves through streaming pipelines. In some cases, the lineage of each individual data record must be traced to ensure data quality, compliance, and accountability.

4. Immediate Actionability

Data streaming environments often require immediate actionability of data governance policies and controls to address problems such as data quality issues, security breaches, or compliance violations in real time. This necessitates the automation of governance processes and the integration of governance controls directly into streaming data processing pipelines, enabling timely detection, notification, and remediation of governance issues.

5. Scalability and Resilience

Data streaming platforms like Apache Kafka and Apache Flink are designed for scalability and resilience to handle both high volumes of data and transactional workloads with critical SLAs. The platform must ensure continuous stream processing even in the face of failures or disruptions. Data governance mechanisms in streaming environments need to be equally scalable and resilient to keep pace with the scale and speed of streaming data processing, ensuring consistent governance enforcement across distributed and resilient streaming infrastructure.

6. Metadata Management Challenges

Data streaming introduces unique challenges for metadata management, as metadata needs to be captured and managed in real time to provide visibility into streaming data pipelines, schema evolution, and data lineage. This requires specialized tools and techniques for capturing, storing, and querying metadata in streaming environments, enabling stakeholders to understand and analyze the streaming data ecosystem effectively.

In summary, implementing data governance with data streaming requires addressing the unique challenges posed by the real-time nature, dynamic data flow, granular data lineage, immediate actionability, scalability, resilience, and metadata management requirements of streaming data environments. This involves adopting specialized governance processes, controls, tools, and techniques tailored to the characteristics and requirements of data streaming platforms like Apache Kafka and Apache Flink.
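The point about immediate actionability, with governance controls embedded directly in the processing pipeline, can be sketched in a few lines of Python. This is a simplified, in-memory illustration under stated assumptions: the record contract (`REQUIRED_FIELDS`), the positive-amount rule, and the `check_record` and `route` helpers are hypothetical, and a real pipeline would run equivalent checks inside a Kafka client or Flink job and write rejected records to a dedicated dead-letter topic.

```python
# Hypothetical contract for a payment record: field name -> expected type.
REQUIRED_FIELDS = {"payment_id": str, "amount": float, "currency": str}


def check_record(record):
    """Return a list of violations; an empty list means the record passes."""
    violations = []
    for field, expected_type in REQUIRED_FIELDS.items():
        if field not in record:
            violations.append(f"missing field: {field}")
        elif not isinstance(record[field], expected_type):
            violations.append(f"wrong type for {field}")
    # Example of a business rule enforced at processing time.
    if isinstance(record.get("amount"), float) and record["amount"] <= 0:
        violations.append("amount must be positive")
    return violations


def route(records):
    """Split a stream of records into accepted ones and a dead-letter list."""
    accepted, dead_letter = [], []
    for record in records:
        violations = check_record(record)
        if violations:
            # Keep the reasons with the record so the issue can be remediated.
            dead_letter.append({"record": record, "violations": violations})
        else:
            accepted.append(record)
    return accepted, dead_letter


accepted, dead_letter = route([
    {"payment_id": "p1", "amount": 10.0, "currency": "EUR"},
    {"payment_id": "p2", "amount": -5.0, "currency": "EUR"},
    {"payment_id": "p3", "currency": "USD"},
])
print(len(accepted), "accepted,", len(dead_letter), "sent to dead letter")
```

Routing bad records aside instead of failing the whole job keeps the stream flowing while still recording why each record was rejected, which supports timely detection and remediation.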

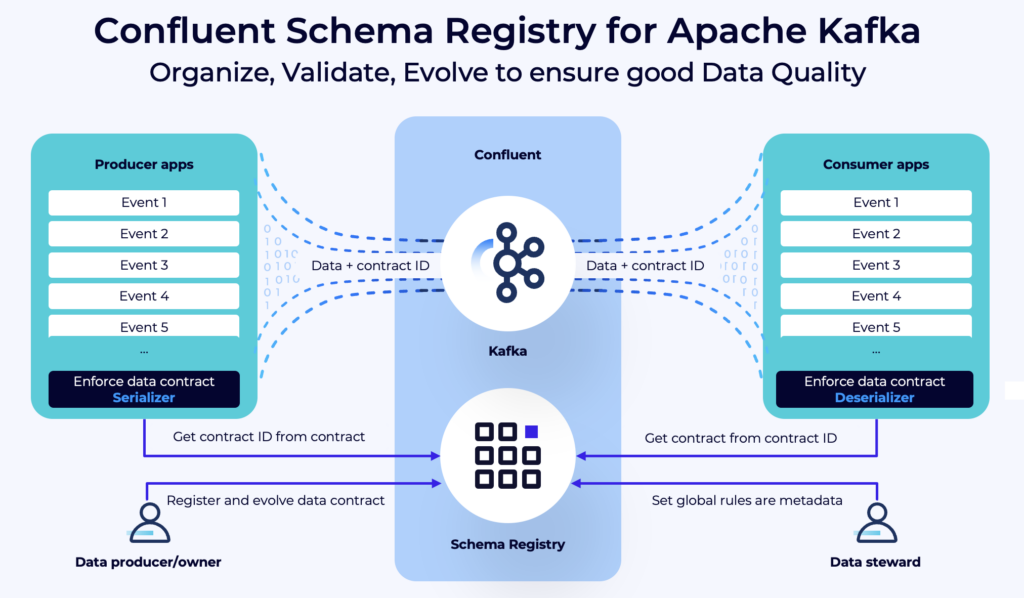

Schemas and Data Contracts for Streaming Data

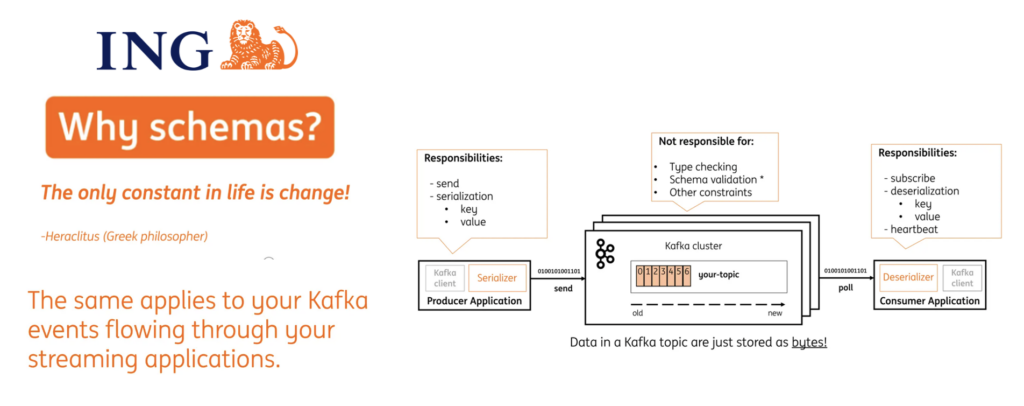

The foundation of data governance for streaming data is schemas and data contracts. Confluent Schema Registry is available on GitHub. Schema Registry is available under the Confluent Community License, which allows deployment in production scenarios with no licensing costs.
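To illustrate why schemas act as contracts, here is a minimal Python sketch of a backward-compatibility check between two schema versions. It uses a deliberately simplified rule set in the spirit of Avro-style schema evolution: a reader on the new schema can still decode old data if every newly added field has a default and no shared field changes type. The dictionary-based schema representation and the `is_backward_compatible` helper are assumptions made for this example and are not how Schema Registry is implemented.

```python
def is_backward_compatible(old_schema, new_schema):
    """True if a reader using new_schema can decode data written with old_schema.

    Schemas are simplified to {field_name: {"type": ..., "default": ...}}.
    """
    for name, spec in new_schema.items():
        if name not in old_schema:
            # A new field is only safe if old records can fall back to a default.
            if "default" not in spec:
                return False
        elif old_schema[name]["type"] != spec["type"]:
            # Changing the type of an existing field breaks old data.
            return False
    return True


# Hypothetical schema versions for a payment event.
v1 = {"payment_id": {"type": "string"}, "amount": {"type": "double"}}
v2 = {**v1, "currency": {"type": "string", "default": "EUR"}}
v3 = {**v1, "currency": {"type": "string"}}

print(is_backward_compatible(v1, v2))
print(is_backward_compatible(v1, v3))
```

Here `v2` adds a field with a default and passes the check, while `v3` adds the same field without a default and fails it. A schema registry applies this kind of check when a producer tries to register a new schema version, so incompatible changes are rejected before they reach consumers.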

For more details, check out my article, “Policy Enforcement and Data Quality for Apache Kafka with Schema Registry.” Here are two great case studies of financial services companies leveraging schemas and group-wide API contracts across the organization for data governance:

Source: ING Bank

Data Lineage for Streaming Data

As a core foundation of data governance, good data lineage is required in data streaming projects for visibility and governance. Today’s market mainly offers two options: custom projects or buying a commercial product/cloud service. However, the market is developing, and open standards are emerging for data lineage and for integrating data streaming into their implementations.

Let’s explore an example of a commercial solution and an open standard for streaming data lineage:

- Cloud service: Data lineage as part of Confluent Cloud

- Open standard: OpenLineage’s integration with Apache Flink and Marquez

Data Lineage in Confluent Cloud for Kafka and Flink

To move forward with updates to critical applications or answer questions on important subjects like data regulation and compliance, teams need an easy means of comprehending the big-picture journey of data in motion.

Stream lineage provides a graphical UI of event streams and data relationships with both a bird’s-eye view and drill-down magnification for answering questions like:

- Where did data come from?

- Where is it going?

- Where, when, and how was it transformed?

Answers to questions like these allow developers to trust the data they’ve found and gain the visibility needed to make sure their changes won’t cause any negative or unexpected downstream impact. Developers can learn and decide quickly with live metrics and metadata inspection embedded directly within lineage graphs.

The Confluent documentation goes into much more detail, including examples, tutorials, free cloud credits, etc. Much of the above description is also copied from there.

OpenLineage for Stream Processing With Apache Flink

In recent months, stream processing has gained the particular focus of the OpenLineage community, as described in a dedicated talk at Kafka Summit 2024 in London.

Many useful features for stream processing have been completed or begun in OpenLineage’s implementation, including:

- A seamless OpenLineage and Apache Flink integration

- Support for streaming jobs in data catalogs like Marquez, Manta, and Atlan

- Progress on a built-in lineage API within the Flink codebase

The developers did a nice live demo in the Kafka Summit talk that shows data lineage across Kafka topics, Flink applications, and other databases with the reference implementation of OpenLineage (Marquez).

The OpenLineage Flink integration is in an early stage with limitations, like no support for Flink SQL or Table API yet. But this is an important initiative. Cross-platform lineage enables a holistic overview of data flow and its dependencies within organizations. This must include stream processing (which often runs the most critical workloads in an enterprise).

The Need for Enterprise-Wide Data Governance and Data Lineage

Data governance, including data lineage, is an enterprise-wide challenge. OpenLineage is an excellent approach for an open standard to integrate with various data platforms like data streaming platforms, data lakes, data warehouses, lakehouses, and any other business application.

However, we are still early in this journey. Most companies (have to) build custom solutions today for enterprise-wide governance and lineage of data across various platforms. Short term, most companies leverage purpose-built data governance and lineage features from cloud products like Confluent, Databricks, and Snowflake. This makes sense as it creates visibility into the data flows and improves data quality.

Enterprise-wide data governance needs to integrate with all the different data platforms. Today, most companies have built their own solutions, if they have anything at all (most don't yet). Dedicated enterprise governance suites like Collibra or Microsoft Purview are being adopted more and more to solve these challenges. Software/cloud vendors like Confluent integrate their purpose-built data lineage and governance into these platforms, either via open APIs or via direct and certified integrations.

Balancing Standardization and Innovation With Open Standards and Cloud Services

OpenLineage is a great community initiative to standardize the integration between data platforms and data governance. Hopefully, vendors will adopt such open standards in the future. Today, it is at an early stage, and you will probably integrate via open APIs or certified (proprietary) connectors.

Balancing standardization and innovation is always a trade-off: Finding the right balance involves simplicity, flexibility, and diligent review processes, with a focus on addressing real-world pain points and fostering community-driven extensions.

How do you implement data governance and lineage? Do you already leverage OpenLineage or other standards? Or are you investing in commercial products? Let’s connect on LinkedIn and discuss it!