Every day, developers are pushed to evaluate and use different tools and cloud provider services, and to follow complex inner development loops. In this article, we look at how the open-source Dapr project can help Spring Boot developers build more resilient and environment-agnostic applications while keeping their inner development loop intact.

Meeting Developers Where They Are

A couple of weeks ago at Spring I/O, we had the chance to meet the Spring community face-to-face in the beautiful city of Barcelona, Spain. At this conference, the Spring Framework maintainers, core contributors, and end users meet every year to discuss the framework's latest additions, news, upgrades, and future initiatives.

While I have seen many presentations covering topics such as Kubernetes, containers, and deploying Spring Boot applications to different cloud providers, these topics are always covered in a way that makes sense for Spring developers. Most tools presented in the cloud-native space require developers to adopt new tools and change the tasks they perform, sometimes including complex configurations and remote environments.

Tools like the Dapr project, which can be installed on a Kubernetes cluster, push developers to add Kubernetes to their inner development loop tasks. While some developers might be comfortable extending their tasks to include Kubernetes for local development, some teams prefer to keep things simple and use tools like Testcontainers to create ephemeral environments where they can test their code changes locally.

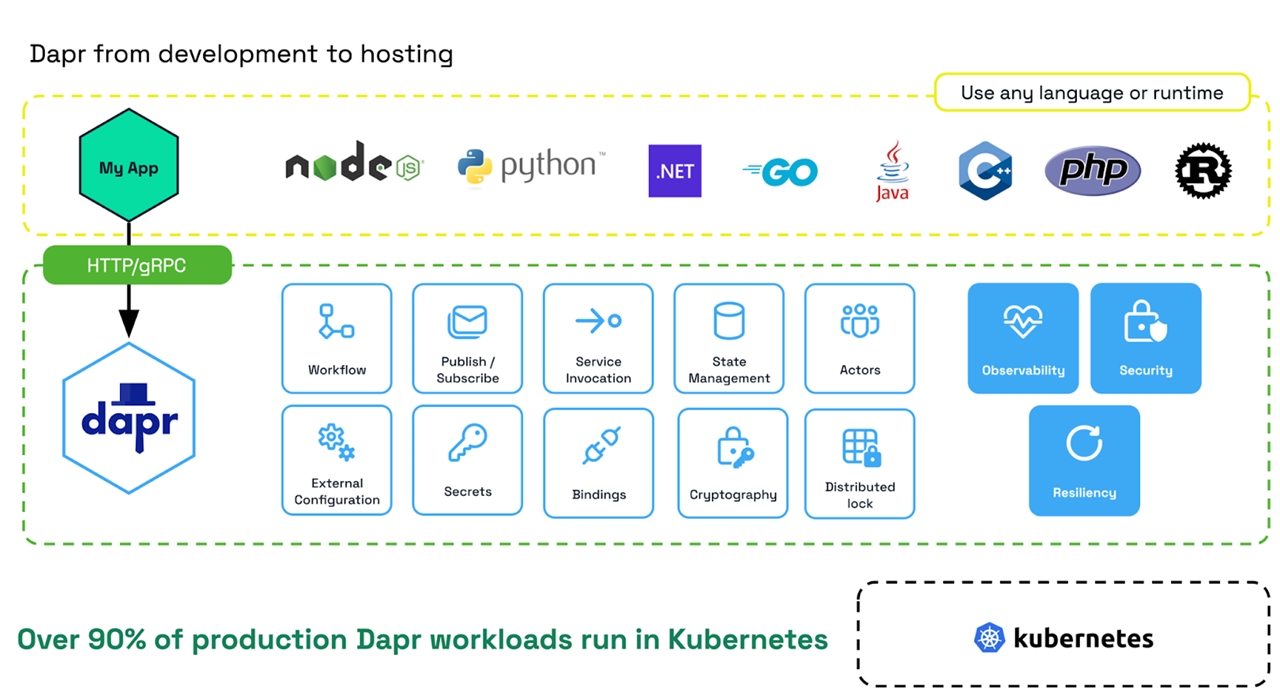

With Dapr, developers can rely on consistent APIs across programming languages. Dapr provides a set of building blocks (state management, publish/subscribe, service invocation, actors, and workflows, among others) that developers can use to implement their application features.

Instead of spending too much time describing what Dapr is, in this article we cover how the Dapr project and its integration with the Spring Boot framework can simplify the development experience for Dapr-enabled applications, which can be run, tested, and debugged locally without the need to run inside a Kubernetes cluster.

Today, Kubernetes, and Cloud-Native Runtimes

Today, if you want to work with the Dapr project, no matter which programming language you are using, the easiest way is to install Dapr into a Kubernetes cluster. Kubernetes and container runtimes are the most common runtimes for our Java applications today. Asking Java developers to work with and run their applications on a Kubernetes cluster for their day-to-day tasks might push them far out of their comfort zone. Training a large team of developers to use Kubernetes can take a while, and they will also need to learn how to install tools like Dapr on their clusters.

If you are a Spring Boot developer, you probably want to code, run, debug, and test your Spring Boot applications locally. For this reason, we created a local development experience for Dapr, teaming up with the Testcontainers folks, now part of Docker.

As a Spring Boot developer, you can use the Dapr APIs without a Kubernetes cluster and without needing to learn how Dapr works in the context of Kubernetes.

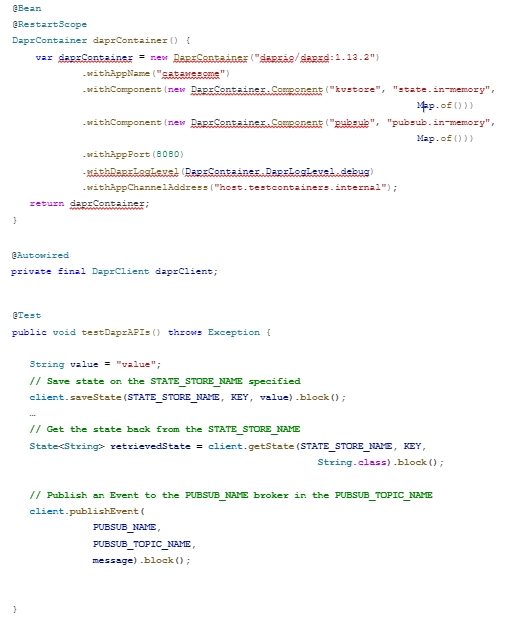

This test shows how Testcontainers provisions the Dapr runtime using the @ClassRule annotation, which is responsible for bootstrapping the Dapr runtime so your application code can use the Dapr APIs to save and retrieve state, exchange asynchronous messages, retrieve configurations, create workflows, and use the Dapr actor model.

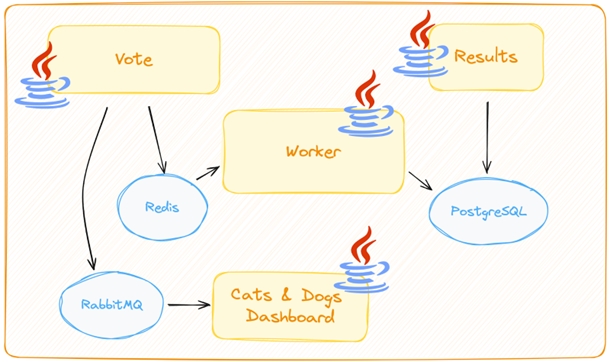

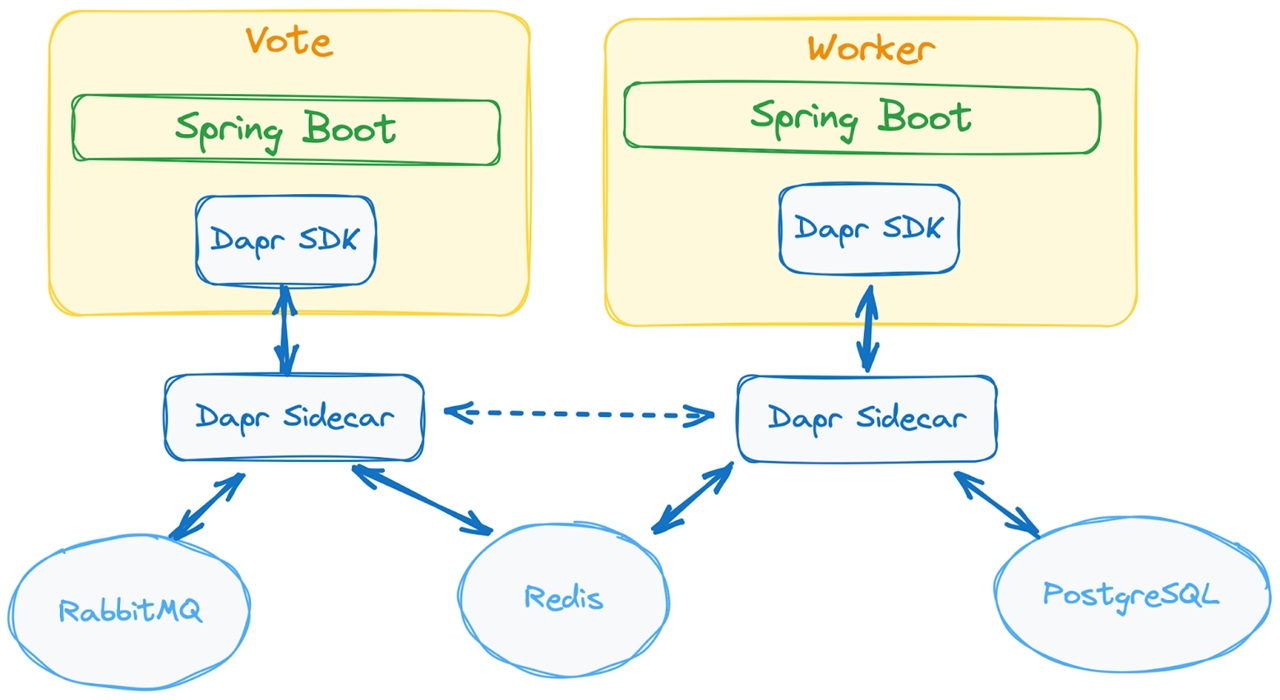
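When Testcontainers runs the Dapr sidecar in a container, its HTTP and gRPC ports are published on random host ports, so the test has to tell the Dapr Java SDK where to find them. Testcontainers-based Dapr examples typically do this by setting the `dapr.grpc.port` (and `dapr.http.port`) system properties before the client is built. The sketch below mimics that lookup with JDK classes only. The class `DaprPortResolver` is hypothetical, and the lookup order it uses (system property, then the `DAPR_HTTP_PORT`/`DAPR_GRPC_PORT` environment variable, then the Dapr defaults 3500/50001) is our assumption about how the SDK resolves these settings, not a copy of its code.

```java
import java.util.Map;
import java.util.Optional;

// Hypothetical helper: resolves the sidecar ports the way we assume the Dapr
// Java SDK does (system property, then environment variable, then default).
final class DaprPortResolver {
    static final int DEFAULT_HTTP_PORT = 3500;
    static final int DEFAULT_GRPC_PORT = 50001;

    private final Map<String, String> env;

    // The environment is injected so the lookup can be tested deterministically.
    DaprPortResolver(Map<String, String> env) {
        this.env = env;
    }

    int httpPort() {
        return resolve("dapr.http.port", "DAPR_HTTP_PORT", DEFAULT_HTTP_PORT);
    }

    int grpcPort() {
        return resolve("dapr.grpc.port", "DAPR_GRPC_PORT", DEFAULT_GRPC_PORT);
    }

    private int resolve(String property, String envVar, int fallback) {
        return Optional.ofNullable(System.getProperty(property))
                .or(() -> Optional.ofNullable(env.get(envVar)))
                .map(Integer::parseInt)
                .orElse(fallback);
    }
}
```

In a test, setting the system property to the container's mapped port before creating the client is what makes the SDK talk to the ephemeral sidecar instead of a default local one.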

How does this compare to a typical Spring Boot application? Let's say you have a distributed application that uses Redis, PostgreSQL, and RabbitMQ to persist and read state, and Kafka to exchange asynchronous messages. You can find the code for this application here (all the Java implementations are under the java/ directory).

Your Spring Boot applications will need not only the Redis client but also the PostgreSQL JDBC driver and the RabbitMQ client as dependencies. On top of that, it is pretty standard to use Spring Boot abstractions, such as Spring Data KeyValue for Redis, Spring Data JDBC for PostgreSQL, and Spring Boot's RabbitMQ messaging support. These abstractions and libraries elevate the basic Redis, relational database, and RabbitMQ client experiences to the Spring Boot programming model. Spring Boot does more than just call the underlying clients: it manages the client lifecycle and helps developers implement common use cases while promoting best practices under the covers.

If we look back at the test that showed how Spring Boot developers can use the Dapr APIs, the interactions look like this:

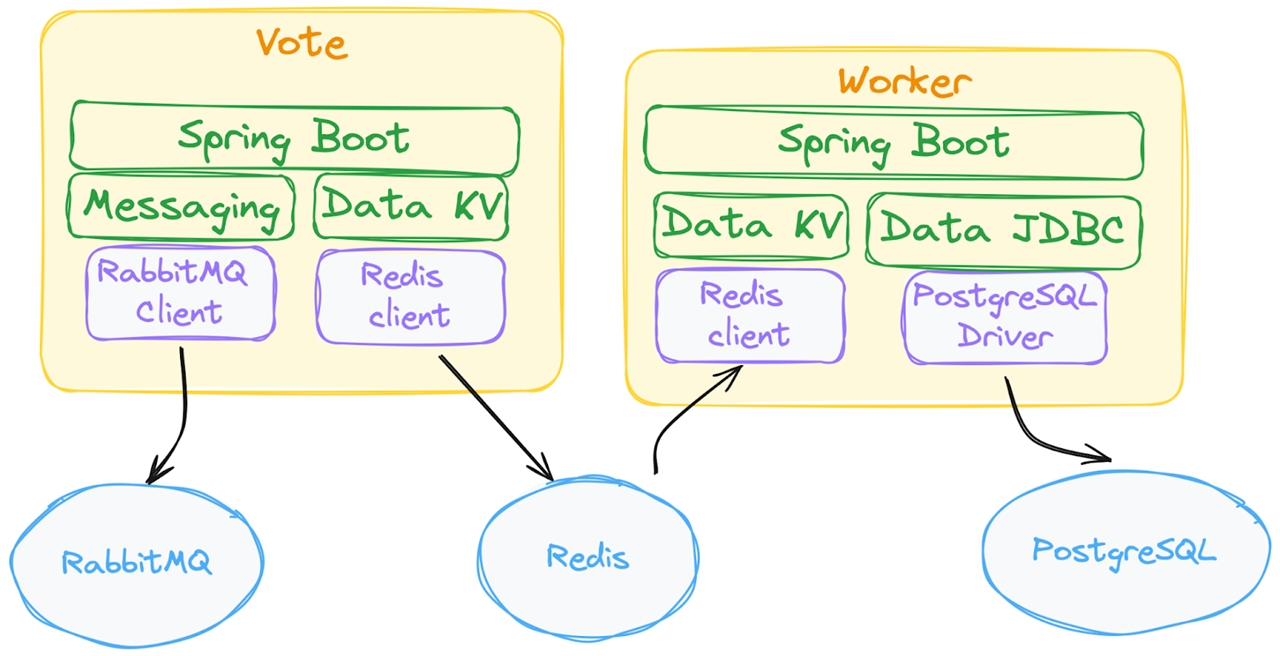

In the second diagram, the Spring Boot application depends only on the Dapr APIs. In both the test using the Dapr APIs shown above and the previous diagram, instead of calling the Dapr APIs directly with HTTP or gRPC requests, we have chosen to use the Dapr Java SDK. No RabbitMQ or Redis clients or JDBC drivers were included in the application classpath.
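For comparison, here is roughly what calling the sidecar directly over HTTP involves, which is the work the SDK hides. This sketch uses only `java.net.http` and builds (without sending) requests against the Dapr v1.0 state and publish endpoints on the default sidecar HTTP port 3500. The class `DaprHttpRequests` and the names `kvstore`, `pubsub`, and `votes` used in the test are hypothetical; the JSON is assembled by hand and assumes keys that need no escaping.

```java
import java.net.URI;
import java.net.http.HttpRequest;

// Hypothetical helper that builds raw Dapr HTTP API requests; nothing is sent.
// Assumes keys need no JSON escaping and that values are already valid JSON.
final class DaprHttpRequests {
    private final String baseUrl;

    DaprHttpRequests(int httpPort) {
        this.baseUrl = "http://localhost:" + httpPort + "/v1.0";
    }

    // Save state: POST /v1.0/state/{store} with a JSON array of key/value entries.
    HttpRequest saveState(String store, String key, String jsonValue) {
        String body = "[{\"key\":\"" + key + "\",\"value\":" + jsonValue + "}]";
        return HttpRequest.newBuilder(URI.create(baseUrl + "/state/" + store))
                .header("Content-Type", "application/json")
                .POST(HttpRequest.BodyPublishers.ofString(body))
                .build();
    }

    // Get state: GET /v1.0/state/{store}/{key}.
    HttpRequest getState(String store, String key) {
        return HttpRequest.newBuilder(URI.create(baseUrl + "/state/" + store + "/" + key))
                .GET()
                .build();
    }

    // Publish: POST /v1.0/publish/{pubsub}/{topic}; Dapr wraps the payload in a CloudEvent.
    HttpRequest publish(String pubsub, String topic, String jsonPayload) {
        return HttpRequest.newBuilder(URI.create(baseUrl + "/publish/" + pubsub + "/" + topic))
                .header("Content-Type", "application/json")
                .POST(HttpRequest.BodyPublishers.ofString(jsonPayload))
                .build();
    }
}
```

Every building block is reachable this way from any language, which is why the APIs stay consistent across runtimes; the SDK simply adds typed methods, serialization, and error handling on top.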

This way of using Dapr has several advantages:

- The application has fewer dependencies, so it doesn't need to include the Redis or RabbitMQ client. The application is not only smaller but also less dependent on concrete infrastructure components that are specific to the environment where it is deployed. Remember that these clients' versions must match the component instance running in a given environment. With more and more Spring Boot applications deployed to cloud providers, it is common not to have control over which versions of components like databases and message brokers will be available across environments. Developers will likely run local versions of these components using containers, causing version mismatches with the environments where the applications serve our customers.

- The application doesn't create connections to Redis, RabbitMQ, or PostgreSQL. Because connection pool configuration and similar details are closely tied to the infrastructure, pushing them out of the application code simplifies the application. All these concerns are moved out of the application and consolidated behind the Dapr APIs.

- A new developer on the application doesn't need to learn how RabbitMQ, PostgreSQL, or Redis works. The Dapr APIs are self-explanatory: if you want to save the application's state, use the `saveState()` method. If you want to publish an event, use the `publishEvent()` method. Developers using an IDE can easily check which APIs are available to them.

- The teams configuring the cloud-native runtime can use their favorite tools to configure the available infrastructure. If they move from a self-managed Redis instance to Google Cloud Memorystore, they can swap their Redis instance without changing the application code. If they want to swap a self-managed Kafka instance for Google Cloud Pub/Sub or Amazon SQS/SNS, they only need to change the Dapr configuration.
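To make the last point concrete: the application refers to components only by logical name, and which technology stands behind that name is decided by the Dapr component configuration of each environment, usually owned by the platform team. The sketch below models that separation in plain Java. `ComponentCatalog`, the environment names, and the logical names `kvstore` and `pubsub` are hypothetical; strings such as `state.redis`, `state.aws.dynamodb`, `pubsub.kafka`, `pubsub.gcp.pubsub`, and `pubsub.aws.snssqs` are component types used in Dapr component definitions.

```java
import java.util.Map;

// Hypothetical model of per-environment Dapr component wiring. In Dapr this
// lives in component definition files, not in application code.
final class ComponentCatalog {
    private final Map<String, Map<String, String>> typesByEnvironment = Map.of(
            "local", Map.of("kvstore", "state.redis", "pubsub", "pubsub.kafka"),
            // Google Cloud Memorystore is Redis-compatible, so the type stays state.redis.
            "gcp", Map.of("kvstore", "state.redis", "pubsub", "pubsub.gcp.pubsub"),
            "aws", Map.of("kvstore", "state.aws.dynamodb", "pubsub", "pubsub.aws.snssqs"));

    // Returns the backing component type for a logical name in one environment.
    String componentType(String environment, String logicalName) {
        Map<String, String> components = typesByEnvironment.get(environment);
        if (components == null || !components.containsKey(logicalName)) {
            throw new IllegalArgumentException(
                    "No component '" + logicalName + "' in environment '" + environment + "'");
        }
        return components.get(logicalName);
    }
}
```

The application code only ever mentions `kvstore` and `pubsub`; moving from Kafka to Google Cloud Pub/Sub changes one entry here (in Dapr, one component file) and no Java code.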

But, you may ask, what about these APIs, saveState/getState and publishEvent? What about subscriptions? How do you consume an event? Can we elevate these API calls to work better with Spring Boot so developers don't need to learn new APIs?

Tomorrow, a Unified Cross-Runtime Experience

In contrast with most technical articles, the answer here is not "It depends." The answer is YES. We can follow the Spring Data and Spring messaging approach to provide a richer Dapr experience that integrates seamlessly with Spring Boot. This, combined with a local development experience (using Testcontainers), can help teams design and code applications that run quickly and without changes across environments (local, Kubernetes, cloud provider).

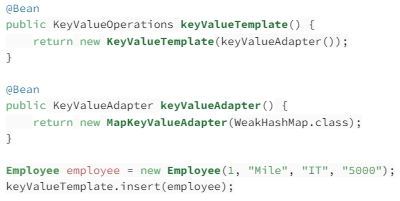

If you are already working with Redis, PostgreSQL, and/or RabbitMQ, you are most likely using the Spring Boot abstractions: Spring Data for persistence and Spring RabbitMQ/Kafka/Pulsar for asynchronous messaging.

For Spring Data KeyValue, check the post A Guide to Spring Data Key Value for more details.

To find an Employee by ID:

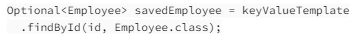

For asynchronous messaging, we can look at Spring Kafka, Spring Pulsar, and Spring AMQP (RabbitMQ) (see also Messaging with RabbitMQ), which all provide a way to produce and consume messages.

Producing messages with Kafka is this simple:

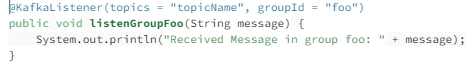

Consuming Kafka messages is just as straightforward:

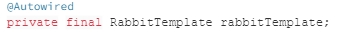

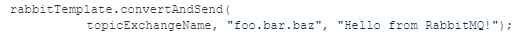

For RabbitMQ, we can do pretty much the same:

And then, to send a message:

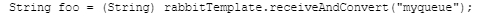

To consume a message from RabbitMQ, you can do:

Elevating Dapr to the Spring Boot Developer Experience

Now let's look at how this would work with the new Dapr Spring Boot starters:

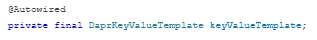

Let's take a look at the DaprKeyValueTemplate:

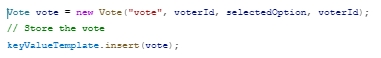

Now let's store our Vote object using the KeyValueTemplate:

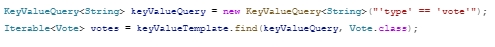

Let's find all the stored votes by creating a query against the KeyValue store:

Now, why does this matter? The DaprKeyValueTemplate implements the KeyValueOperations interface provided by Spring Data KeyValue, which is implemented for stores such as Redis, MongoDB, Memcached, PostgreSQL, and MySQL, among others. The big difference is that this implementation connects to the Dapr APIs and does not require any store-specific client. The same code can store data in Redis, PostgreSQL, MongoDB, and cloud provider-managed services such as AWS DynamoDB and Google Cloud Firestore. Dapr supports over 30 data stores, and switching between them requires no changes to the application or its dependencies.
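The benefit of programming against an operations interface is that calling code stays the same when the implementation changes. The following in-memory stand-in is hypothetical (`SimpleKeyValueStore` is neither a Spring Data nor a Dapr class, and its methods only loosely follow the shape of KeyValueOperations' insert, findById, and find): it keeps one keyspace per entity type and uses a plain predicate where Spring Data would use a query object. It can also double as a test fake when no Dapr sidecar is available.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.Optional;
import java.util.concurrent.ConcurrentHashMap;
import java.util.function.Predicate;

// Hypothetical in-memory store, loosely modeled on KeyValueOperations.
// Entities are grouped by their class, one keyspace per type.
final class SimpleKeyValueStore {
    private final Map<Class<?>, Map<Object, Object>> keyspaces = new ConcurrentHashMap<>();

    <T> T insert(Object id, T entity) {
        keyspaces.computeIfAbsent(entity.getClass(), k -> new ConcurrentHashMap<>()).put(id, entity);
        return entity;
    }

    <T> Optional<T> findById(Object id, Class<T> type) {
        return Optional.ofNullable(keyspaces.getOrDefault(type, Map.of()).get(id)).map(type::cast);
    }

    // Stand-in for a query: every stored entity of the given type that matches.
    <T> List<T> find(Predicate<T> criteria, Class<T> type) {
        List<T> result = new ArrayList<>();
        for (Object value : keyspaces.getOrDefault(type, Map.of()).values()) {
            T entity = type.cast(value);
            if (criteria.test(entity)) {
                result.add(entity);
            }
        }
        return result;
    }
}

// Hypothetical entity; the article's Vote class is only shown as an image.
record Vote(String id, String option) {}
```

Code written against this shape (insert, look up by ID, query by type) does not care where the data lives, which is exactly the property the DaprKeyValueTemplate brings to Dapr state stores.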

Similarly, let's take a look at the DaprMessagingTemplate:

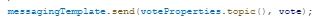

Now let's publish a message/event:

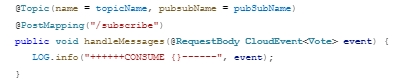

To consume messages/events, we can use an annotation-based approach similar to the Kafka example:

An important thing to notice is that, out of the box, Dapr uses CloudEvents to exchange events (other formats are also supported), regardless of the underlying implementation. Using the @Topic annotation, our application subscribes to all events published to a specified topic on a specific Dapr PubSub component.
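To see what a subscriber actually receives, here is a sketch of the CloudEvents 1.0 structured-mode JSON envelope that wraps a published payload. The attributes `specversion`, `id`, `source`, and `type` are required by the CloudEvents specification, `datacontenttype` and `data` carry the payload, and Dapr adds extension attributes such as `pubsubname` and `topic`. The class `CloudEventEnvelope` and the sample values are hypothetical, and the hand-written JSON assumes values that need no escaping; real code would rely on the Dapr SDK or a JSON library.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.StringJoiner;
import java.util.UUID;

// Hypothetical builder for a CloudEvents 1.0 structured-mode JSON envelope,
// similar in shape to what Dapr delivers to subscribers.
final class CloudEventEnvelope {
    static String wrap(String source, String type, String pubsubName, String topic, String jsonData) {
        Map<String, String> attributes = new LinkedHashMap<>();
        attributes.put("specversion", "1.0");               // required by CloudEvents 1.0
        attributes.put("id", UUID.randomUUID().toString()); // required, unique per event
        attributes.put("source", source);                   // required
        attributes.put("type", type);                       // required
        attributes.put("datacontenttype", "application/json");
        attributes.put("pubsubname", pubsubName);           // Dapr extension attribute
        attributes.put("topic", topic);                     // Dapr extension attribute

        StringJoiner json = new StringJoiner(",", "{", "}");
        // Assumes attribute values contain no characters that need JSON escaping.
        attributes.forEach((name, value) -> json.add("\"" + name + "\":\"" + value + "\""));
        json.add("\"data\":" + jsonData); // payload embedded as JSON, not as a string
        return json.toString();
    }
}
```

Because the envelope is the same whichever broker carries it, subscriber code can read `data` and the metadata attributes without knowing whether Kafka, RabbitMQ, or a managed service delivered the event.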

Once again, this code works with all supported Dapr PubSub component implementations, such as Kafka, RabbitMQ, Apache Pulsar, and cloud provider-managed services such as Azure Event Hubs, Google Cloud Pub/Sub, and AWS SNS/SQS (see the Dapr Pub/sub brokers documentation).
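Part of why subscriber code is portable is that a Dapr subscription names only the pub/sub component and the topic, never the broker. With programmatic subscriptions, the application answers Dapr's `GET /dapr/subscribe` call with a list of entries containing `pubsubname`, `topic`, and `route`; as we understand it, annotation-based approaches such as @Topic generate this list for you. The `SubscriptionList` class below is a hypothetical, dependency-free sketch that produces that JSON.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.StringJoiner;

// Hypothetical builder for the JSON an app returns from GET /dapr/subscribe.
// No broker appears anywhere: only the Dapr component name, the topic,
// and the application route that should receive the events.
final class SubscriptionList {
    private record Entry(String pubsubName, String topic, String route) {}

    private final List<Entry> entries = new ArrayList<>();

    SubscriptionList subscribe(String pubsubName, String topic, String route) {
        entries.add(new Entry(pubsubName, topic, route));
        return this;
    }

    String toJson() {
        StringJoiner array = new StringJoiner(",", "[", "]");
        for (Entry e : entries) {
            array.add("{\"pubsubname\":\"" + e.pubsubName() + "\",\"topic\":\""
                    + e.topic() + "\",\"route\":\"" + e.route() + "\"}");
        }
        return array.toString();
    }
}
```

Swapping Kafka for Google Cloud Pub/Sub changes the component definition behind `pubsub`, while this subscription list, and the handler behind `/votes`, stay exactly the same.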

Combining the DaprKeyValueTemplate and the DaprMessagingTemplate gives developers data manipulation and asynchronous messaging under a unified API that adds no infrastructure-specific dependencies to the application and is portable across environments, as you can run the same code against different cloud provider services.
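On the application side, that combination can be sketched with two narrow interfaces, one for key/value state and one for publishing. `StateStore`, `EventPublisher`, `VotingService`, and the in-memory fakes are hypothetical names, not the API of the Dapr Spring Boot starters; in the setup described above, the DaprKeyValueTemplate and DaprMessagingTemplate would play these roles. Keeping business logic behind such interfaces is what lets the same code run against Dapr in any environment, or against fakes in a plain unit test.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.Optional;

// Hypothetical narrow interfaces that the business logic depends on.
interface StateStore {
    void save(String key, String value);
    Optional<String> get(String key);
}

interface EventPublisher {
    void publish(String topic, String payload);
}

// Business logic: store the vote, then announce it. No broker or database client here.
final class VotingService {
    private final StateStore store;
    private final EventPublisher publisher;

    VotingService(StateStore store, EventPublisher publisher) {
        this.store = store;
        this.publisher = publisher;
    }

    void vote(String voteId, String option) {
        store.save(voteId, option);
        publisher.publish("votes", voteId + ":" + option);
    }
}

// Hypothetical in-memory fakes for unit tests.
final class InMemoryStateStore implements StateStore {
    final Map<String, String> data = new HashMap<>();
    public void save(String key, String value) { data.put(key, value); }
    public Optional<String> get(String key) { return Optional.ofNullable(data.get(key)); }
}

final class RecordingPublisher implements EventPublisher {
    final List<String> published = new ArrayList<>();
    public void publish(String topic, String payload) { published.add(topic + "|" + payload); }
}
```

In production, adapters backed by the Dapr templates would implement the same two interfaces, so `VotingService` itself never changes.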

While this looks much more like Spring Boot, more work is required. On top of Spring Data KeyValue, the Spring Repository interface can be implemented to provide a CrudRepository experience. There are also some rough edges in testing, and documentation is needed so developers can get started with these APIs quickly.

Advantages and Trade-Offs

As with any new framework, project, or tool you add to your technology mix, understanding the trade-offs is crucial to assessing how the new tool will work for you specifically.

One thing that helped me understand the value of Dapr is the 80% vs. 20% rule, which goes as follows:

- 80% of the time, applications perform simple operations against infrastructure components such as message brokers, key/value stores, and configuration servers. The application needs to store and retrieve state and emit and consume asynchronous messages just to implement its logic. These are the scenarios where you get the most value out of Dapr.

- 20% of the time, you need to build more advanced features that require deeper expertise in the specific message broker you are using, or you need to write a highly performant query to compose a complex data structure. In these scenarios, it is fine not to use the Dapr APIs, as you probably need access to specific features of the underlying infrastructure from your application code.

When we look at a new tool, it is common to try to stretch it to fit as many use cases as we can. With Dapr, we should focus on helping developers when the Dapr APIs fit their use cases. When the Dapr APIs don't fit, or a feature requires provider-specific APIs, using provider-specific SDKs/clients is fine. With a clear understanding of when the Dapr APIs are enough to build a feature, a team can plan in advance which skills are needed to implement it. For example, do you need a RabbitMQ/Kafka expert, or an SQL and domain expert to build some advanced queries?

Another mistake we should avoid is ignoring the impact of tools on our delivery practices. If we have the right tools to reduce friction between environments, we can enable developers to create applications that run locally using the same APIs and dependencies required when running on a cloud provider.

With these points in mind, let's look at the advantages and trade-offs:

Advantages

- Concise APIs to tackle cross-cutting concerns and access common behavior required by distributed applications. This allows developers to delegate concerns such as resiliency (retry and circuit breaker mechanisms), observability (using OpenTelemetry, logs, traces, and metrics), and security (certificates and mTLS) to Dapr.

- With the new Spring Boot integration, developers can use the programming model they already know to access Dapr functionality.

- With the Dapr and Testcontainers integration, developers don't need to worry about running or configuring Dapr, or about learning tools outside their existing inner development loop. The Dapr APIs are available for developers to build, test, and debug their features locally.

- The Dapr APIs can help developers save time when interacting with infrastructure. For example, instead of requiring every developer to learn how Kafka/Pulsar/RabbitMQ works, they only need to learn how to publish and consume events using the Dapr APIs.

- Dapr enables portability across cloud-native environments, allowing your application to run against local or cloud-managed infrastructure without any code changes. Dapr provides a clear separation of concerns that lets operations/platform teams wire up infrastructure across a wide range of supported components.
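To make the resiliency point concrete, below is the kind of code each service otherwise tends to carry: a minimal, hand-rolled retry with a fixed delay. With Dapr, retries, timeouts, and circuit breakers are instead declared as resiliency policies that the sidecar applies, so this logic moves out of the application. `Retry.withFixedDelay` is a hypothetical helper written only for illustration.

```java
import java.time.Duration;
import java.util.function.Supplier;

// Hypothetical helper: calls the operation up to maxAttempts times, sleeping a
// fixed delay between failed attempts, and rethrows the last failure.
final class Retry {
    static <T> T withFixedDelay(Supplier<T> operation, int maxAttempts, Duration delay) {
        if (maxAttempts < 1) {
            throw new IllegalArgumentException("maxAttempts must be >= 1");
        }
        RuntimeException lastFailure = null;
        for (int attempt = 1; attempt <= maxAttempts; attempt++) {
            try {
                return operation.get();
            } catch (RuntimeException e) {
                lastFailure = e;
                if (attempt < maxAttempts) {
                    sleep(delay);
                }
            }
        }
        throw lastFailure; // non-null: the loop ran at least once and every attempt failed
    }

    private static void sleep(Duration delay) {
        try {
            Thread.sleep(delay.toMillis());
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt(); // preserve the interrupt flag
            throw new IllegalStateException("Interrupted while waiting to retry", e);
        }
    }
}
```

Multiply this by every client (database, broker, HTTP), add backoff, jitter, and circuit breaking, and the appeal of configuring it once in the runtime becomes clear.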

Trade-Offs

Introducing abstraction layers, such as the Dapr APIs, always comes with trade-offs.

- Dapr might not be the best fit for every scenario. In those cases, nothing stops developers from moving more complex functionality that requires specific clients/drivers into separate modules or services.

- Dapr must be present in the target environment where the application runs. Your applications will depend on Dapr being available and on the infrastructure they need being wired up correctly. If your operations/platform team already uses Kubernetes, Dapr should be easy to adopt, as it is a mature CNCF project with over 3,000 contributors.

- Troubleshooting can become more challenging with an extra abstraction between our application and the infrastructure components. The quality of the Spring Boot integration can be measured by how well errors are propagated to developers when things go wrong.

I know that advantages and trade-offs depend on your specific context and background, so feel free to reach out if you see something missing from this list.

Summary and Next Steps

Covering the Dapr Statestore (KeyValue) and PubSub (Messaging) is just the first step; bringing more advanced Dapr features into the Spring Boot programming model can give developers more of the functionality required to build robust distributed applications. Next on our TODO list is Dapr Workflows for durable executions, as a seamless experience for developing complex, long-running orchestrations across services is a common requirement.

One of the reasons I was so eager to work on the Spring Boot and Dapr integration is that the Java community has worked hard to polish its developer experience, focusing on productivity and consistent interfaces. I strongly believe that all this accumulated knowledge in the Java community can be used to take the Dapr APIs to the next level. By validating which use cases the existing APIs cover and finding the gaps, we can build better integrations and improve developers' experiences across languages.

You can find all the source code for the example we presented at Spring I/O linked in the "Today, Kubernetes, and Cloud-Native Runtimes" section of this article.

We expect to merge the Spring Boot and Dapr integration code into the Dapr Java SDK to make this the default Dapr experience when working with Spring Boot. Documentation will come next.

If you want to contribute to or collaborate on these projects and help us integrate Dapr even more closely with Spring Boot, please contact us.