PostgreSQL is one of the most popular SQL databases. It's a go-to database for many projects dealing with Online Transaction Processing systems. However, PostgreSQL is much more versatile and can successfully handle less common SQL scenarios and workflows that don't use SQL at all. In this blog post, we'll look at other scenarios where PostgreSQL shines and explain how to use it in these cases.

How It All Began

Historically, we focused on two distinct database workflows: Online Transaction Processing (OLTP) and Online Analytical Processing (OLAP).

OLTP represents the day-to-day applications that we use to create, read, update, and delete (CRUD) data. Transactions are short and touch only a subset of the entities at once, and we want to perform all these transactions concurrently and in parallel. We aim for the lowest latency and the highest throughput as we need to execute thousands of transactions each second.

OLAP focuses on analytical processing. Typically after the end of the day, we want to recalculate many aggregates representing our business data. We summarize the cash flow, recalculate users' preferences, or prepare reports. Similarly, after each month, quarter, or year, we need to build business performance summaries and visualize them with dashboards. These workflows are completely different from the OLTP ones, as OLAP touches many more (possibly all) entities, calculates complex aggregates, and runs one-off transactions. We aim for reliability instead of performance, as the queries can take longer to complete (hours or even days) but mustn't fail, because we would need to start from scratch. We even devised complex workflows called Extract-Transform-Load (ETL) that support the whole preparation for data processing: they capture data from many sources, transform it into common schemas, and load it into data warehouses. OLAP queries typically don't interfere with OLTP queries as they run in a different database.

A Plethora of Workflows Today

The world has changed dramatically over the last decade. On one hand, we improved our OLAP solutions a lot by involving big data, data lakes, parallel processing (like with Spark), or low-code solutions to build business intelligence. On the other hand, OLTP transactions have become more complex, the data is less and less relational, and the hosting infrastructures have changed significantly.

It's not uncommon these days to use one database for both OLTP and OLAP workflows. Such an approach is called Hybrid Transactional/Analytical Processing (HTAP). We may want to avoid copying data between databases to save time, or we may need to run much more complex queries often (like every 15 minutes). In these cases, we want to execute all the workflows in one database instead of extracting data somewhere else to run the analysis. This may easily overload the database, as OLAP transactions may lock the tables for much longer, which would significantly slow down the OLTP transactions.

Yet another development is in the area of what data we process. We often handle text, non-relational data like JSON or XML, machine learning data like embeddings, spatial data, or time series data. The world is often non-SQL today.

Finally, we also changed what we do. We don't just calculate aggregates anymore. We often need to find similar documents, train large language models, or process millions of metrics from Internet of Things (IoT) devices.

Fortunately, PostgreSQL is very extensible and can easily accommodate these workflows. No matter if we deal with relational tables or complex structures, PostgreSQL provides multiple extensions that can improve the performance of the processing. Let's go through these workflows and understand how PostgreSQL can help.

Non-Relational Data

PostgreSQL can store various types of data. Apart from regular numbers or text, we may want to store nested structures, spatial data, or mathematical formulas. Querying such data may be significantly slower without specialized data structures that understand the content of the columns. Fortunately, PostgreSQL supports multiple extensions and technologies to deal with non-relational data.

XML

PostgreSQL supports XML data thanks to its built-in xml type. The type can store both well-formed documents (as defined by the XML standard) and content fragments, which represent only a part of a document. We can then extract parts of the documents, create new documents, and efficiently search for the data.

To create the document, we can use the XMLPARSE function or PostgreSQL's proprietary syntax:

    CREATE TABLE test
    (
      id integer NOT NULL,
      xml_data xml NOT NULL,
      CONSTRAINT test_pkey PRIMARY KEY (id)
    );

    INSERT INTO test VALUES (1, XMLPARSE(DOCUMENT '<book><title>Some title</title><length>123</length></book>'));
    INSERT INTO test VALUES (2, XMLPARSE(CONTENT '<book><title>Some title 2</title><length>456</length></book>'));
    INSERT INTO test VALUES (3, xml '<book><title>Some title 3</title><length>789</length></book>'::xml);

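We can also serialize XML values to text with XMLSERIALIZE:

    SELECT XMLSERIALIZE(DOCUMENT '<book><title>Some title</title></book>' AS text);

Many functions produce XML. xmlagg creates a document from values extracted from the table:

    SELECT xmlagg(xml_data) FROM test;

    xmlagg
    <book><title>Some title</title><length>123</length></book><book><title>Some title 2</title><length>456</length></book><book><title>Some title 3</title><length>789</length></book>

We can use xpath to extract any property from given nodes:

    SELECT xpath('/book/length/text()', xml_data) FROM test;

    xpath
    {123}
    {456}
    {789}

We can use table_to_xml to dump the entire table to XML:

    SELECT table_to_xml('test', true, false, '');

    table_to_xml
    <test xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance">
    <row>
      <id>1</id>
      <_x0078_ml_data><book><title>Some title</title><length>123</length></book></_x0078_ml_data>
    </row>
    <row>
      <id>2</id>
      <_x0078_ml_data><book><title>Some title 2</title><length>456</length></book></_x0078_ml_data>
    </row>
    <row>
      <id>3</id>
      <_x0078_ml_data><book><title>Some title 3</title><length>789</length></book></_x0078_ml_data>
    </row>
    </test>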

The xml data type doesn't provide any comparison operators. To create indexes, we need to cast the values to text or something equivalent, and we can use this approach with many index types. For instance, this is how we can create a B-tree index:

    CREATE INDEX test_idx
    ON test USING BTREE
    (cast(xpath('/book/title', xml_data) as text[]));

We can then use the index like this:

    EXPLAIN ANALYZE
    SELECT * FROM test WHERE
    cast(xpath('/book/title', xml_data) as text[]) = '{<title>Some title</title>}';

    QUERY PLAN
    Index Scan using test_idx on test (cost=0.13..8.15 rows=1 width=36) (actual time=0.065..0.067 rows=1 loops=1)
      Index Cond: ((xpath('/book/title'::text, xml_data, '{}'::text[]))::text[] = '{"<title>Some title</title>"}'::text[])
    Planning Time: 0.114 ms
    Execution Time: 0.217 ms

Similarly, we can create a hash index (the examples reuse the name test_idx, so drop the previous index first):

    CREATE INDEX test_idx
    ON test USING HASH
    (cast(xpath('/book/title', xml_data) as text[]));

PostgreSQL supports other index types. Generalized Inverted Index (GIN) is commonly used for compound types where values are not atomic but consist of elements. These indexes capture all the values and store a list of memory pages where these values occur. We can use it like this:

    CREATE INDEX test_idx
    ON test USING gin
    (cast(xpath('/book/title', xml_data) as text[]));

    EXPLAIN ANALYZE
    SELECT * FROM test WHERE
    cast(xpath('/book/title', xml_data) as text[]) = '{<title>Some title</title>}';

    QUERY PLAN
    Bitmap Heap Scan on test (cost=8.01..12.02 rows=1 width=36) (actual time=0.152..0.154 rows=1 loops=1)
      Recheck Cond: ((xpath('/book/title'::text, xml_data, '{}'::text[]))::text[] = '{"<title>Some title</title>"}'::text[])
      Heap Blocks: exact=1
      -> Bitmap Index Scan on test_idx (cost=0.00..8.01 rows=1 width=0) (actual time=0.012..0.013 rows=1 loops=1)
           Index Cond: ((xpath('/book/title'::text, xml_data, '{}'::text[]))::text[] = '{"<title>Some title</title>"}'::text[])
    Planning Time: 0.275 ms
    Execution Time: 0.371 ms

JSON

PostgreSQL provides two types to store JavaScript Object Notation (JSON): json and jsonb. It also provides a built-in type jsonpath to represent the queries for extracting the data. We can then store the contents of the documents and effectively search them based on multiple criteria.

Let's start by creating a table and inserting some sample entities:

    CREATE TABLE test
    (
      id integer NOT NULL,
      json_data jsonb NOT NULL,
      CONSTRAINT test_pkey PRIMARY KEY (id)
    );

    INSERT INTO test VALUES (1, '{"title": "Some title", "length": 123}');
    INSERT INTO test VALUES (2, '{"title": "Some title 2", "length": 456}');
    INSERT INTO test VALUES (3, '{"title": "Some title 3", "length": 789}');

We can use json_agg to aggregate data from a column:

    SELECT json_agg(u) FROM (SELECT * FROM test) AS u;

    json_agg
    [{"id":1,"json_data":{"title": "Some title", "length": 123}},
     {"id":2,"json_data":{"title": "Some title 2", "length": 456}},
     {"id":3,"json_data":{"title": "Some title 3", "length": 789}}]

We can also extract particular fields with a plethora of functions:

    SELECT json_data->'length' FROM test;

    ?column?
    123
    456
    789

We can also create indexes:

    CREATE INDEX test_idx
    ON test USING BTREE
    (((json_data -> 'length')::int));

And we can use it like this:

    EXPLAIN ANALYZE
    SELECT * FROM test WHERE (json_data -> 'length')::int = 456;

    QUERY PLAN
    Index Scan using test_idx on test (cost=0.13..8.15 rows=1 width=36) (actual time=0.022..0.023 rows=1 loops=1)
      Index Cond: (((json_data -> 'length'::text))::integer = 456)
    Planning Time: 0.356 ms
    Execution Time: 0.119 ms

Similarly, we can use the hash index (again, drop the previous test_idx first, as the examples reuse the name):

    CREATE INDEX test_idx
    ON test USING HASH
    (((json_data -> 'length')::int));

We can also play with other index types. For instance, the GIN index:

    CREATE INDEX test_idx ON test USING gin(json_data);

We can use it like this:

    EXPLAIN ANALYZE
    SELECT * FROM test WHERE
    json_data @> '{"length": 123}';

    QUERY PLAN
    Bitmap Heap Scan on test (cost=12.00..16.01 rows=1 width=36) (actual time=0.024..0.025 rows=1 loops=1)
      Recheck Cond: (json_data @> '{"length": 123}'::jsonb)
      Heap Blocks: exact=1
      -> Bitmap Index Scan on test_idx (cost=0.00..12.00 rows=1 width=0) (actual time=0.013..0.013 rows=1 loops=1)
           Index Cond: (json_data @> '{"length": 123}'::jsonb)
    Planning Time: 0.905 ms
    Execution Time: 0.513 ms

There are other options. We could create a GIN index with trigrams or a GIN index with the jsonb_path_ops operator class, just to name a few.
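As a minimal sketch of the last option, the statements below build a GIN index with the built-in jsonb_path_ops operator class and run a few queries that use jsonb containment and the jsonpath type mentioned earlier. They assume the sample table from this section (called test here, with the jsonb column json_data); the index name test_pathops_idx is made up for the illustration.

```sql
-- jsonb_path_ops is a built-in GIN operator class for jsonb.
-- It supports the @>, @? and @@ operators.
CREATE INDEX test_pathops_idx ON test USING gin (json_data jsonb_path_ops);

-- Containment query: the kind of predicate this index is designed to serve.
SELECT id FROM test WHERE json_data @> '{"length": 456}';

-- jsonpath predicate: rows whose length is greater than 200.
-- The planner decides whether an index is worth using for it.
SELECT id FROM test WHERE json_data @? '$.length ? (@ > 200)';

-- jsonb_path_query extracts the values matched by a jsonpath expression.
SELECT id, jsonb_path_query(json_data, '$.title') AS title FROM test;
```

Compared to the default GIN operator class, jsonb_path_ops supports fewer operators, so whether it fits depends on which predicates the application actually uses.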

Spatial

Spatial data represents any coordinates or points in space. They can be two-dimensional (on a plane) or have more dimensions. PostgreSQL supports a built-in point type that we can use to represent such data. We can then query for the distance between points and their bounding boxes, or order them by the distance from some specified point.

Let's see how to use them:

    CREATE TABLE test
    (
      id integer NOT NULL,
      p point,
      CONSTRAINT test_pkey PRIMARY KEY (id)
    );

    INSERT INTO test VALUES (1, point('1, 1'));
    INSERT INTO test VALUES (2, point('3, 2'));
    INSERT INTO test VALUES (3, point('8, 6'));
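The operations mentioned above (distance, bounding boxes, ordering by distance) can be sketched with PostgreSQL's built-in geometric operators. The queries assume the sample table from this section (called test here); the reference points and the box corners are arbitrary values chosen for the illustration.

```sql
-- <-> is the built-in distance operator for geometric types.
SELECT id, p <-> point '(0, 0)' AS distance_from_origin FROM test;

-- Order the points by their distance from an arbitrarily chosen point
-- and keep the two nearest ones.
SELECT id, p FROM test ORDER BY p <-> point '(4, 3)' LIMIT 2;

-- <@ checks whether a point lies inside a box.
SELECT id, p FROM test WHERE p <@ box '((0, 0), (5, 5))';
```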

To improve queries on points, we can use a Generalized Search Tree index (GiST). This type of index supports any data type as long as we can provide some reasonable ordering of the elements.

    CREATE INDEX ON test USING gist(p);

Running EXPLAIN ANALYZE on a query that filters on p then shows the index in use:

    QUERY PLAN
    Index Scan using test_p_idx on test (cost=0.13..8.15 rows=1 width=20) (actual time=0.072..0.073 rows=1 loops=1)

We can also use Space Partitioning GiST (SP-GiST), which uses some more complex data structures to support spatial data:

    CREATE INDEX test_idx ON test USING spgist(p);

Intervals

Yet another data type we can consider is intervals (like time intervals). They are supported by the tsrange data type in PostgreSQL. We can use them to store reservations or event times and then process them by finding events that collide, or order them by their duration.

Let's see an example:

    CREATE TABLE test
    (
      id integer NOT NULL,
      during tsrange,
      CONSTRAINT test_pkey PRIMARY KEY (id)
    );

    INSERT INTO test VALUES (1, '[2024-07-30, 2024-08-02]');
    INSERT INTO test VALUES (2, '[2024-08-01, 2024-08-03]');
    INSERT INTO test VALUES (3, '[2024-08-04, 2024-08-05]');

We can now use the GiST index:

    CREATE INDEX test_idx ON test USING gist(during);

    EXPLAIN ANALYZE
    SELECT * FROM test WHERE during && '[2024-08-01, 2024-08-02]';

    QUERY PLAN
    Index Scan using test_idx on test (cost=0.13..8.15 rows=1 width=36) (actual time=0.023..0.024 rows=2 loops=1)
      Index Cond: (during && '["2024-08-01 00:00:00","2024-08-02 00:00:00"]'::tsrange)
    Planning Time: 0.226 ms
    Execution Time: 0.162 ms

We can use SP-GiST for that as well:

    CREATE INDEX test_idx ON test USING spgist(during);

Vectors

We would like to store any data in the SQL database. However, there is no straightforward way to store movies, songs, actors, PDF documents, images, or videos. Finding similarities between such objects is much harder, as we don't have a simple method for finding neighbors or clustering objects in these cases. To be able to perform such a comparison, we need to transform the objects into their numerical representation, which is a list of numbers (a vector or an embedding) representing various traits of the object. For instance, traits of a movie could include its star rating, duration in minutes, number of actors, or number of songs used in the movie.

PostgreSQL supports these types of embeddings thanks to the pgvector extension. The extension provides a new column type and new operators that we can use to store and process the embeddings. We can perform element-wise addition and other arithmetic operations. We can calculate the Euclidean or cosine distance of two vectors. We can also calculate the inner product or the Euclidean norm. Many other operations are supported.
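The operations listed above can be tried directly on vector literals. The sketch below assumes pgvector is installed on the server (the extension registers itself under the name vector); the two sample vectors are arbitrary.

```sql
CREATE EXTENSION IF NOT EXISTS vector;

SELECT
  '[1, 2, 3]'::vector + '[3, 1, 2]'::vector   AS elementwise_sum,
  '[1, 2, 3]'::vector <-> '[3, 1, 2]'::vector AS euclidean_distance,
  '[1, 2, 3]'::vector <=> '[3, 1, 2]'::vector AS cosine_distance,
  -- <#> returns the negative inner product, so we flip the sign.
  ('[1, 2, 3]'::vector <#> '[3, 1, 2]'::vector) * -1 AS inner_product,
  vector_norm('[1, 2, 3]'::vector)            AS euclidean_norm;
```

The distance operators matter beyond ad hoc calculations: an index is built for one specific operator class, so the operator used in ORDER BY has to match the one the index was created for.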

Let's create some sample data:

    CREATE TABLE test
    (
      id integer NOT NULL,
      embedding vector(3),
      CONSTRAINT test_pkey PRIMARY KEY (id)
    );

    INSERT INTO test VALUES (1, '[1, 2, 3]');
    INSERT INTO test VALUES (2, '[5, 10, 15]');
    INSERT INTO test VALUES (3, '[6, 2, 4]');

We can now query the embeddings and order them by their similarity to the filter:

    SELECT embedding FROM test ORDER BY embedding <-> '[3,1,2]';

    embedding
    [1,2,3]
    [6,2,4]
    [5,10,15]

Pgvector supports two types of indexes: Inverted File (IVFFlat) and Hierarchical Navigable Small Worlds (HNSW).

The IVFFlat index divides vectors into lists. The engine takes a sample of vectors in the database, clusters all the other vectors based on the distance to the selected neighbors, and then stores the result. When performing a search, pgvector chooses the lists that are closest to the query vector and then searches only those lists. Since IVFFlat uses a training step, it requires some data to be present in the database already when building the index. We need to specify the number of lists when building the index, so it's best to create the index after we fill the table with data. Let's see the example:

    CREATE INDEX test_idx ON test USING ivfflat (embedding) WITH (lists = 100);

    EXPLAIN ANALYZE
    SELECT * FROM test ORDER BY embedding <-> '[3,1,2]';

    QUERY PLAN
    Index Scan using test_idx on test (cost=1.01..5.02 rows=3 width=44) (actual time=0.018..0.018 rows=0 loops=1)
      Order By: (embedding <-> '[3,1,2]'::vector)
    Planning Time: 0.072 ms
    Execution Time: 0.052 ms

Another index is HNSW. An HNSW index is based on a multilayer graph. It doesn't require any training step (unlike IVFFlat), so the index can be built even with an empty database. HNSW builds more slowly than IVFFlat and uses more memory, but it provides better query performance afterward. It works by creating a graph of vectors based on an idea very similar to a skip list. Each node of the graph is connected to some distant vectors and some close vectors. We enter the graph from a known entry point and then follow it greedily until we can't move any closer to the vector we're looking for. It's like starting in a big city, taking a flight to some remote capital to get as close as possible, and then taking a local train to finally reach the destination. Let's see that:

    CREATE INDEX test_idx ON test USING hnsw (embedding vector_l2_ops) WITH (m = 4, ef_construction = 10);

    EXPLAIN ANALYZE
    SELECT * FROM test ORDER BY embedding <-> '[3,1,2]';

    QUERY PLAN
    Index Scan using test_idx on test (cost=8.02..12.06 rows=3 width=44) (actual time=0.024..0.026 rows=3 loops=1)
      Order By: (embedding <-> '[3,1,2]'::vector)
    Planning Time: 0.254 ms
    Execution Time: 0.050 ms

Full-Text Search

Full-text search (FTS) is a search technique that examines all of the words in every document to match them with the query. It's not just searching for the documents that contain the specified phrase, but also looking for similar phrases, typos, patterns, wildcards, synonyms, and much more. It's much harder to execute, as every query is much more complex and can lead to more false positives. Also, we can't simply scan each document; we need to somehow transform the data set to precalculate aggregates and then use them during the search.

We typically transform the data set by splitting it into words (or characters, or other tokens), removing the so-called stop words (like the, an, in, a, there, was, and others) that do not add any domain knowledge, and then compressing the document into a representation that allows for fast search. This is very similar to calculating embeddings in machine learning.
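PostgreSQL exposes the individual steps of this transformation through built-in functions, so the pipeline can be inspected piece by piece. The sketch below assumes the default english text search configuration and its english_stem dictionary, which ship with a standard installation; the sample sentence is arbitrary.

```sql
-- Step 1: split the text into raw tokens with the built-in "default" parser.
SELECT tokid, token FROM ts_parse('default', 'There was a crooked man');

-- Step 2: normalize a single token with a dictionary.
-- english_stem reduces a word to its stem...
SELECT ts_lexize('english_stem', 'walked');
-- ...and returns an empty array for a stop word.
SELECT ts_lexize('english_stem', 'was');

-- ts_debug shows the whole pipeline at once: token type, token,
-- the dictionaries consulted, and the resulting lexemes.
SELECT alias, token, dictionaries, lexemes
FROM ts_debug('english', 'There was a crooked man');
```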

tsvector

PostgreSQL supports FTS in many ways. We start with the tsvector type that contains the lexemes (sort of words) and their positions in the document. We can start with this query:

    select to_tsvector('There was a crooked man, and he walked a crooked mile');

    to_tsvector
    'crook':4,10 'man':5 'mile':11 'walk':8

The other type that we need is tsquery, which represents the lexemes and operators. We can use it to query the documents.

    select to_tsquery('man & (walking | running)');

    to_tsquery
    'man' & ( 'walk' | 'run' )

We can see how it transformed the verbs into other forms.

Let's now use some sample data for testing the mechanism:

    CREATE TABLE test
    (
      id integer NOT NULL,
      tsv tsvector,
      CONSTRAINT test_pkey PRIMARY KEY (id)
    );

    INSERT INTO test VALUES (1, to_tsvector('John was running'));
    INSERT INTO test VALUES (2, to_tsvector('Mary was running'));
    INSERT INTO test VALUES (3, to_tsvector('John was singing'));

We can now query the data easily:

    SELECT tsv FROM test WHERE tsv @@ to_tsquery('mary | sing');

    tsv
    'mari':1 'run':3
    'john':1 'sing':3

We can now create a GIN index to make this query run faster:

    CREATE INDEX test_idx ON test USING gin(tsv);

    EXPLAIN ANALYZE
    SELECT * FROM test WHERE tsv @@ to_tsquery('mary | sing');

    QUERY PLAN
    Bitmap Heap Scan on test (cost=12.25..16.51 rows=1 width=36) (actual time=0.019..0.019 rows=2 loops=1)
      Recheck Cond: (tsv @@ to_tsquery('mary | sing'::text))
      Heap Blocks: exact=1
      -> Bitmap Index Scan on test_idx (cost=0.00..12.25 rows=1 width=0) (actual time=0.016..0.016 rows=2 loops=1)
           Index Cond: (tsv @@ to_tsquery('mary | sing'::text))
    Planning Time: 0.250 ms
    Execution Time: 0.039 ms

We can also use the GiST index with RD-tree.

    CREATE INDEX ts_idx ON test USING gist(tsv);

    EXPLAIN ANALYZE
    SELECT * FROM test WHERE tsv @@ to_tsquery('mary | sing');

    QUERY PLAN
    Index Scan using ts_idx on test (cost=0.38..8.40 rows=1 width=36) (actual time=0.028..0.032 rows=2 loops=1)
      Index Cond: (tsv @@ to_tsquery('mary | sing'::text))
    Planning Time: 0.094 ms
    Execution Time: 0.044 ms

Text and Trigrams

Postgres supports FTS with regular text as well. We can use the pg_trgm extension that provides trigram matching and operators for fuzzy search.

Let's create some sample data:

    CREATE TABLE test
    (
      id integer NOT NULL,
      sentence text,
      CONSTRAINT test_pkey PRIMARY KEY (id)
    );

    INSERT INTO test VALUES (1, 'John was running');
    INSERT INTO test VALUES (2, 'Mary was running');
    INSERT INTO test VALUES (3, 'John was singing');

We can now create the GIN index with trigrams:

    CREATE INDEX test_idx ON test USING GIN (sentence gin_trgm_ops);

We can use the index to search by regular expressions:

    EXPLAIN ANALYZE
    SELECT * FROM test WHERE sentence ~ 'John | Mary';

    QUERY PLAN
    Bitmap Heap Scan on test (cost=30.54..34.55 rows=1 width=36) (actual time=0.096..0.104 rows=2 loops=1)
      Recheck Cond: (sentence ~ 'John | Mary'::text)
      Rows Removed by Index Recheck: 1
      Heap Blocks: exact=1
      -> Bitmap Index Scan on test_idx (cost=0.00..30.54 rows=1 width=0) (actual time=0.077..0.077 rows=3 loops=1)
           Index Cond: (sentence ~ 'John | Mary'::text)
    Planning Time: 0.207 ms
    Execution Time: 0.136 ms

Other Solutions

There are other extensions for FTS as well. For instance, pg_search (which is part of ParadeDB) claims it is 20 times faster than the tsvector solution. The extension is based on the Okapi BM25 algorithm, which is used by many search engines to estimate the relevance of documents. It relies on the Inverse Document Frequency (IDF) formula, which uses probability to find matches.

Analytics

Let's now discuss analytical scenarios. They differ from OLTP workflows significantly for these main reasons: cadence, amount of extracted data, and type of modifications.

When it comes to cadence, OLTP transactions happen many times each second. We strive for the maximum possible throughput, and we deal with thousands of transactions each second. On the other hand, analytical workflows run periodically (like yearly or daily), so we don't need to handle thousands of them. We simply run one OLAP workflow instance a day and that's it.

OLTP transactions touch only a subset of the records. They typically read and modify a few instances. They very rarely need to scan the entire table, and we strive to use indexes with high selectivity to avoid reading unneeded rows (as they decrease the performance of reads). OLAP transactions often read everything. They need to recalculate data for long periods (like a month or a year), and so they often read millions of records. Therefore, indexes rarely help, and we need to make the sequential scans as fast as possible. Indexes are often harmful to OLAP databases as they need to be kept in sync but aren't used at all.

Last but not least, OLTP transactions often modify the data. They update the records and the indexes and need to handle concurrent updates and transaction isolation levels. OLAP transactions don't do that. They wait until the ETL part is done and then only read the data. Therefore, we don't need to maintain locks or snapshots, as OLAP workflows only read the data. On the other hand, OLTP transactions deal with primitive values and rarely use complex aggregates. OLAP workflows need to aggregate the data, calculate the averages and estimators, and use window functions to summarize the figures for business purposes.

There are many more aspects of OLTP vs OLAP differences. For instance, OLTP may benefit from caches but OLAP will not, since we scan each row only once. Similarly, OLTP workflows aim to present the latest possible data, whereas it's okay for OLAP to be a little outdated (as long as we can control the delay).

To summarize, the main differences:

- OLTP:
  - Short transactions
  - Many transactions each second
  - Transactions modify records
  - Transactions deal with primitive values
  - Transactions touch only a subset of rows
  - Can benefit from caches
  - We need to have highly selective indexes
  - They rarely touch external data sources
- OLAP:
  - Long transactions
  - Infrequent transactions (daily, quarterly, yearly)
  - Transactions don't modify the records
  - Transactions calculate complex aggregates
  - Transactions often read all the available data
  - Rarely benefits from caches
  - We want to have fast sequential scans
  - They often rely on ETL processes bringing data from many sources
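To make the contrast more tangible, here is a small sketch of the two query styles side by side. The orders table and its columns are hypothetical and exist only for this illustration.

```sql
-- Hypothetical table used only for this illustration.
CREATE TABLE orders
(
  id bigint PRIMARY KEY,
  customer_id bigint NOT NULL,
  amount numeric(12, 2) NOT NULL,
  created_at timestamp NOT NULL
);

-- OLTP style: short statements that touch single rows
-- through a highly selective index (the primary key).
INSERT INTO orders VALUES (1, 42, 19.99, now());
UPDATE orders SET amount = 24.99 WHERE id = 1;
SELECT * FROM orders WHERE id = 1;

-- OLAP style: read everything, aggregate per month, and use a
-- window function to compute a running total of the revenue.
SELECT
  date_trunc('month', created_at) AS month,
  sum(amount) AS revenue,
  sum(sum(amount)) OVER (ORDER BY date_trunc('month', created_at)) AS running_revenue
FROM orders
GROUP BY date_trunc('month', created_at)
ORDER BY month;
```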

With the growth of the data, we want to run both OLTP and OLAP transactions using our PostgreSQL. This approach is called HTAP (Hybrid Transactional/Analytical Processing), and PostgreSQL supports it to a considerable degree. Let's see how.

Data Lakes

For analytical purposes, we often bring data from multiple sources. These can include SQL or non-SQL databases, e-commerce systems, data warehouses, blob storages, log sources, or clickstreams, just to name a few. We bring the data as part of the ETL process that loads things from multiple places.

We can bring the data using multiple technologies; however, PostgreSQL supports that natively with Foreign Data Wrappers thanks to the postgres_fdw module. We can easily bring data from various sources without using any external applications.

The process generally looks as follows:

- We install the extension.
- We create a foreign server object to represent the remote database (or data source in general).
- We create a user mapping for each database we want to use.
- We create a foreign table.

We can then easily read data from external sources with the regular SELECT statement.
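The steps above can be sketched with postgres_fdw as follows. The server name, host, credentials, and the remote table public.orders are all placeholders invented for this example; only the statements themselves are standard PostgreSQL.

```sql
-- 1. Install the extension (it ships with PostgreSQL as a contrib module).
CREATE EXTENSION IF NOT EXISTS postgres_fdw;

-- 2. Describe the remote database. Host, port, and dbname are placeholders.
CREATE SERVER remote_sales
  FOREIGN DATA WRAPPER postgres_fdw
  OPTIONS (host 'remote.example.com', port '5432', dbname 'sales');

-- 3. Map the local user to a remote account. Credentials are placeholders.
CREATE USER MAPPING FOR CURRENT_USER
  SERVER remote_sales
  OPTIONS (user 'report_reader', password 'change-me');

-- 4. Declare a foreign table mirroring a hypothetical remote table public.orders.
CREATE FOREIGN TABLE remote_orders
(
  id bigint,
  amount numeric(12, 2),
  created_at timestamp
)
SERVER remote_sales
OPTIONS (schema_name 'public', table_name 'orders');

-- The foreign table can now be queried like a local one.
SELECT count(*), sum(amount) FROM remote_orders;
```

When the remote schema has many tables, IMPORT FOREIGN SCHEMA can generate the foreign table definitions instead of writing them by hand.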

There are many more sources we can use through other foreign data wrappers.

Another technology that we can use is dblink, which focuses on executing queries in remote databases. It's not as flexible as FDW interfaces, though.
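For comparison, a minimal dblink call might look like the sketch below. The connection string and the remote query are placeholders; the point is that the query text is passed as a string and the result columns have to be declared by hand.

```sql
CREATE EXTENSION IF NOT EXISTS dblink;

-- dblink returns SETOF record, so the column list must be
-- spelled out in the AS clause.
SELECT *
FROM dblink('host=remote.example.com dbname=sales user=report_reader password=change-me',
            'SELECT id, amount FROM public.orders')
  AS remote_orders(id bigint, amount numeric);
```

This is part of why dblink is less flexible: there is no persistent table definition that other queries can reuse, so every call repeats the connection details and the column list.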

Efficient Sequential Scans

We mentioned that OLAP workflows typically need to scan much more data. Therefore, we want to optimize the way we store and process the entities. Let's see how to do that.

By default, PostgreSQL stores data row by row, one tuple after another. Let's take the following table:

    CREATE TABLE test
    (
      id integer NOT NULL,
      field1 integer,
      field2 integer,
      CONSTRAINT test_pkey PRIMARY KEY (id)
    );

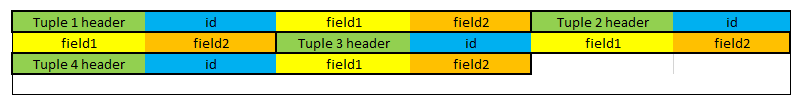

The content material of the desk can be saved within the following manner:

We are able to see that every tuple has a header adopted by the fields (id, field1, and field2). This method works properly for generic knowledge and typical circumstances. Discover that so as to add a brand new tuple, we merely must put it on the very finish of this desk (after the final tuple). We are able to additionally simply modify the info in place (so long as the info is just not getting greater).

Nonetheless, this storage kind has one massive disadvantage. Computer systems don’t learn the info byte by byte. As an alternative, they carry complete packs of bytes directly and retailer them in caches. When our software desires to learn one byte, the working system reads 64 bytes or much more like the entire web page which is 8kB lengthy. Think about now that we wish to run the next question:

SELECT SUM(field1) FROM checkTo execute this question, we have to scan all of the tuples and extract one discipline from every. Nonetheless, the database engine can not merely skip different knowledge. It nonetheless must load almost complete tuples as a result of it reads a bunch of bytes directly. Let’s see make it quicker.

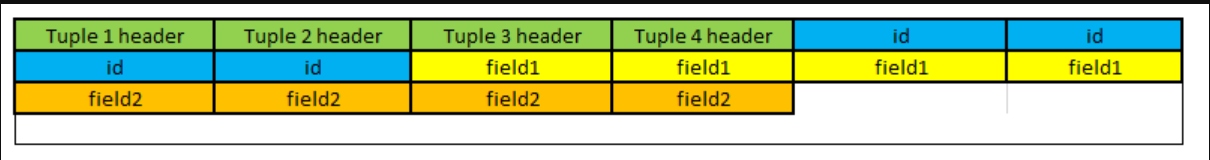

What if we saved the info column by column as a substitute? Similar to this:

If we now wish to extract the field1 column solely, we will merely discover the place it begins after which scan all of the values directly. This closely improves the learn efficiency.

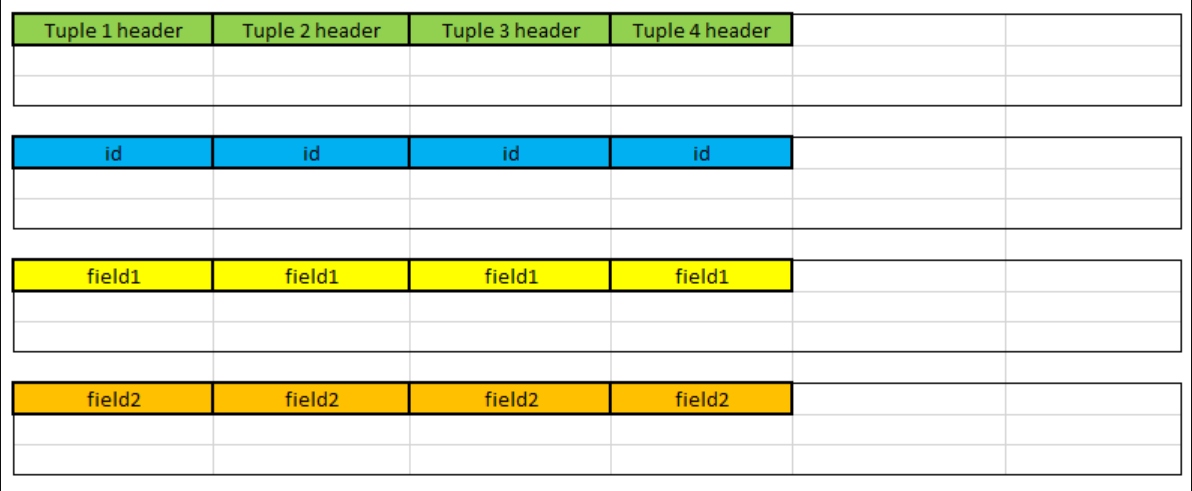

One of these storage known as columnar. We are able to see that we retailer every column one after one other. In actual fact, databases retailer the info independently, so it seems to be far more like this:

Successfully, the database shops every column individually. Since values inside a single column are sometimes related, we will additionally compress them or construct indexes that may exhibit the similarities, so the scans are even quicker.

Nonetheless, the columnar storage brings some drawbacks as properly. As an illustration, to take away a tuple, we have to delete it from a number of unbiased storages. Updates are additionally slower as they could require eradicating the tuple and including it again.

One of the solutions that works this way is ParadeDB. It's an extension to PostgreSQL that uses the pg_lakehouse extension to run the queries using DuckDB, which is an in-process OLAP database. It's highly optimized thanks to its columnar storage and can outperform native PostgreSQL by orders of magnitude. They claim they are 94 times faster, but it can be even more depending on your use case.

DuckDB is an example of a vectorized query processing engine. It tries to chunk the data into pieces that can fit caches. This way, we can avoid the penalty of expensive I/O operations. Furthermore, we can also use SIMD instructions to perform the same operation on multiple values (thanks to the columnar storage), which improves performance even more.

DuckDB supports two types of indexes:

- Min-max (block range) indexes that describe minimum and maximum values in each memory block to make the scans faster. This type of index is also available in native PostgreSQL as BRIN.
- Adaptive Radix Tree (ART) indexes to ensure the constraints and speed up the highly selective queries.
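Since the min-max idea is also available in native PostgreSQL, here is a minimal BRIN sketch. The measurements table is hypothetical; it stands for an append-only table whose rows arrive roughly in recorded_at order, which is the situation where block-range summaries work well.

```sql
-- Hypothetical append-only table.
CREATE TABLE measurements
(
  id bigint GENERATED ALWAYS AS IDENTITY,
  recorded_at timestamp NOT NULL,
  reading double precision NOT NULL
);

-- BRIN stores the minimum and maximum recorded_at per range of pages.
-- pages_per_range is an optional storage parameter (128 is the default).
CREATE INDEX measurements_recorded_at_brin
  ON measurements USING brin (recorded_at) WITH (pages_per_range = 128);

-- Page ranges whose min/max cannot overlap the requested day can be skipped.
SELECT avg(reading)
FROM measurements
WHERE recorded_at >= '2024-08-01' AND recorded_at < '2024-08-02';
```

A BRIN index stays tiny compared to a B-tree because it keeps one summary per page range instead of one entry per row, at the cost of being useful only when the physical row order correlates with the indexed column.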

While these indexes bring performance benefits, DuckDB cannot update the data in place. Instead, it deletes the tuples and reinserts them.

ParadeDB evolved from the pg_search and pg_analytics extensions. The latter supports yet another way of storing data, namely the Parquet format. This format is also based on columnar storage and allows for significant compression.

Automatically Updated Views

Apart from columnar storage, we can optimize how we calculate the data. OLAP workflows often focus on calculating aggregates (like averages) on the data. Whenever we update the source, we need to update the aggregated value. We can either recalculate it from scratch, or we can find a better way to do that.

Hydra boosts the performance of aggregate queries with the help of pg_ivm. Hydra provides materialized views on the analytical data. The idea is to store the results of the calculated view so we don't have to recalculate them the next time we query the view. However, if the tables backing the view change, the view becomes out-of-date and needs to be refreshed. pg_ivm introduces the concept of Incremental View Maintenance (IVM), which refreshes the view by recomputing the data using only the subset of the rows that changed.

IVM uses triggers to update the view. Imagine that we want to calculate the average of all the values in a given column. When a new row is added, we don't need to read all the rows to update the average. Instead, we can use the newly added value and see how it would affect the aggregate. IVM does that by running a trigger when the base table is modified.
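The idea can be illustrated with a hand-written trigger in plain PostgreSQL. This is only a sketch of the principle, not how pg_ivm is implemented: the tables, the function, and the trigger are hypothetical names, and only inserts are handled.

```sql
-- Hypothetical base table.
CREATE TABLE readings
(
  id bigint GENERATED ALWAYS AS IDENTITY PRIMARY KEY,
  value numeric NOT NULL
);

-- One-row summary holding the running sum and count;
-- the average is derived from these two numbers.
CREATE TABLE readings_summary
(
  total numeric NOT NULL,
  cnt bigint NOT NULL
);
INSERT INTO readings_summary VALUES (0, 0);

-- Trigger function: fold only the new value into the stored aggregate.
CREATE FUNCTION readings_summary_add() RETURNS trigger AS $$
BEGIN
  UPDATE readings_summary SET total = total + NEW.value, cnt = cnt + 1;
  RETURN NULL;  -- the return value is ignored for AFTER row triggers
END;
$$ LANGUAGE plpgsql;

CREATE TRIGGER readings_summary_after_insert
  AFTER INSERT ON readings
  FOR EACH ROW EXECUTE FUNCTION readings_summary_add();

INSERT INTO readings (value) VALUES (10), (20), (60);

-- The average comes from the summary row, without scanning readings.
SELECT total / NULLIF(cnt, 0) AS average FROM readings_summary;
```

Keeping the sum and the count instead of the average itself is what makes the update incremental: both can be adjusted from a single new value, while an average alone cannot. A complete solution would also need triggers for updates and deletes, which is the bookkeeping an IVM extension takes over.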

Materialized views supporting IVM are called Incrementally Maintainable Materialized Views (IMMV). Hydra provides columnar tables (tables that use columnar storage) and supports IMMV for them. This way, we can get significant performance improvements as data doesn’t need to be recalculated from scratch. Hydra also uses vectorized execution and heavily parallelizes the queries to improve performance. Ultimately, Hydra’s authors claim it can be up to 1500 times faster than native PostgreSQL.

Time Series

We already covered many of the workflows that we need to deal with in our day-to-day applications. With the growth of Internet of Things (IoT) devices, we face yet another challenge: how to efficiently process billions of signals received from sensors every day.

The data we receive from sensors is called a time series. It’s a sequence of data points indexed in time order. For example, we may be reading the temperature at home every minute or checking the size of the database every second. Once we have the data, we can analyze it and use it for forecasts, to detect anomalies, or to optimize our lives. Time series can be applied to any type of data that’s real-valued, continuous, or discrete.

When dealing with time series, we face two problems: we need to aggregate the data efficiently (similarly to OLAP workflows) and we need to do it fast (as the data changes every second). However, the data is typically append-only and is inherently ordered, so we can exploit this time ordering to calculate things faster.

Timescale is an extension for PostgreSQL that supports exactly that. It turns PostgreSQL into a time series database that’s efficient in processing any time series data we have. Timescale achieves that with clever chunking, IMMV with aggregates, and hypertables. Let’s see how it works.

The main part of Timescale is hypertables. These are tables that automatically partition the data by time. From the user’s perspective, the table is just a regular table with data. However, Timescale partitions the data based on the time part of the entities. The default setting is to partition the data into chunks covering 7 days. This can be changed to suit our needs, as we should strive for one chunk consuming around 25% of the memory of the server.

Once the data is partitioned, Timescale can greatly improve the performance of the queries. We don’t need to scan the whole table to find the data because we know which partitions to skip. Timescale also introduces indexes that are created automatically for the hypertables. Thanks to that, we can easily compress the tables and move older data to tiered storage to save money.
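The following Python sketch imitates time-based chunking and chunk skipping. It is a toy model under stated assumptions, not Timescale's implementation: `ToyHypertable`, `chunk_id`, and the fixed `EPOCH` are hypothetical names, and only the 7-day interval comes from the text above.

```python
from collections import defaultdict
from datetime import datetime, timedelta

CHUNK_INTERVAL = timedelta(days=7)  # chunk width mentioned in the text
EPOCH = datetime(2024, 1, 1)        # arbitrary origin, assumed for this sketch


def chunk_id(ts: datetime) -> int:
    """Map a timestamp to the number of the 7-day chunk that contains it."""
    return (ts - EPOCH) // CHUNK_INTERVAL


class ToyHypertable:
    """Looks like one table to the caller, but stores rows in per-chunk lists."""

    def __init__(self) -> None:
        self.chunks = defaultdict(list)

    def insert(self, ts: datetime, value: float) -> None:
        self.chunks[chunk_id(ts)].append((ts, value))

    def query(self, start: datetime, end: datetime):
        """Return rows with start <= ts < end and the number of chunks scanned."""
        rows = []
        scanned = 0
        # Only chunks overlapping the requested time range are visited.
        for cid in range(chunk_id(start), chunk_id(end) + 1):
            if cid not in self.chunks:
                continue
            scanned += 1
            rows.extend(r for r in self.chunks[cid] if start <= r[0] < end)
        return rows, scanned


table = ToyHypertable()
for day in range(28):  # one reading per day for four weeks
    table.insert(EPOCH + timedelta(days=day), float(day))

rows, scanned = table.query(EPOCH + timedelta(days=8), EPOCH + timedelta(days=10))
print(len(table.chunks), len(rows), scanned)
```

In this toy run the four weeks of data land in four chunks, and the two-day query touches only one of them.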

The biggest advantage of Timescale is continuous aggregates. Instead of calculating aggregates every time new data is added, we can update the aggregates in real-time. Timescale provides three types of aggregates: materialized views (just like regular PostgreSQL’s materialized views), continuous aggregates (just like the IMMV we saw before), and real-time aggregates. The last type adds the most recent raw data to the previously aggregated data to provide accurate and up-to-date results.
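A real-time aggregate can be pictured as a merge of two sources: buckets that were already materialized, and raw rows that arrived after the last refresh. The Python sketch below illustrates that merge for an hourly average. It is an assumption-laden toy, not Timescale's code: `materialize`, `real_time_avg`, and the `watermark` variable are hypothetical names, and timestamps are plain integer seconds.

```python
from collections import defaultdict

BUCKET = 3600  # bucket width in seconds (one hour), assumed for this sketch


def bucket_of(ts: int) -> int:
    """Round a timestamp down to the start of its bucket."""
    return ts - ts % BUCKET


def materialize(rows, watermark: int) -> dict:
    """Pre-aggregate [sum, count] per bucket for rows older than the watermark."""
    agg = defaultdict(lambda: [0.0, 0])
    for ts, value in rows:
        if ts < watermark:
            entry = agg[bucket_of(ts)]
            entry[0] += value
            entry[1] += 1
    return dict(agg)


def real_time_avg(materialized: dict, rows, watermark: int) -> dict:
    """Merge stored buckets with raw rows at or after the watermark."""
    combined = {b: list(sc) for b, sc in materialized.items()}
    for ts, value in rows:
        if ts >= watermark:
            entry = combined.setdefault(bucket_of(ts), [0.0, 0])
            entry[0] += value
            entry[1] += 1
    return {b: s / c for b, (s, c) in sorted(combined.items())}


# Hypothetical readings: (timestamp in seconds, value).
rows = [(0, 10.0), (1800, 20.0), (3600, 30.0), (5400, 50.0)]
watermark = 3600  # everything before this was materialized by the last refresh

stored = materialize(rows, watermark)
result = real_time_avg(stored, rows, watermark)
print(stored, result)
```

The sketch assumes the watermark is aligned to a bucket boundary, so no bucket is split between the stored part and the raw part.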

Unlike other extensions, Timescale supports aggregates on JOINs and can stack one aggregate on top of another. Continuous aggregates also support functions, ordering, filtering, or duplicate removal.

Last but not least, Timescale provides hyperfunctions, which are analytical functions dedicated to time series. We can easily bucket the data, calculate various types of aggregates, group them with windows, or even build pipelines for easier processing.

Summary

PostgreSQL is one of the most popular SQL databases. However, it’s not only an SQL engine. Thanks to many extensions, it can now deal with non-relational data, full-text search, analytical workflows, time series, and much more. We don’t need to differentiate between OLAP and OLTP anymore. Instead, we can use PostgreSQL to run HTAP workflows within one database. This makes PostgreSQL the most versatile database that should easily handle all your needs.