All successfully applied machine learning models are backed by at least two strong components: data and model. In my discussions with ML engineers, I have heard many times that, instead of spending a significant amount of time on data preparation, including labeling for supervised learning, they would rather spend their time on model development. For most problems, labeling huge amounts of data is much more difficult than obtaining it in the first place.

Unlabeled data alone rarely delivers the desired accuracy during training, and labeling huge datasets for supervised learning can be time-consuming and expensive. What if the data labeling budget is limited? What data should be labeled first? These are just some of the daunting questions facing ML engineers who would rather be doing productive work instead.

In reality, there are many fields where a lack of labels is natural, such as medical imaging, where labeled data is scarce and expensive to obtain.

Researchers and practitioners have developed several strategies to address these labeling challenges:

- Transfer learning and domain adaptation

- Synthetic data generation

- Semi-supervised learning

- Active learning

Among these approaches, semi-supervised learning stands out as a particularly promising solution. This technique allows leveraging both small amounts of labeled data and much larger amounts of unlabeled data simultaneously. By combining the strengths of supervised and unsupervised learning, semi-supervised learning offers a potential solution to the labeling challenge while maintaining model performance.

In this article, we’ll dive into the concept of semi-supervised learning and explore its principles, applications, and potential to revolutionize how we approach data-hungry ML tasks.

Understanding Semi-Supervised Learning

Semi-supervised learning is a machine learning approach that combines supervised and unsupervised learning by training models with a small amount of labeled data and a larger pool of unlabeled data. This approach can be represented mathematically as follows:

- Let $D_S$: $(x, y) \sim p(x, y)$ be a small labeled dataset, and $D_U$: $x \sim p(x)$ be a large unlabeled dataset. As usual, we use labeled data for supervised learning and unlabeled data for unsupervised learning.

- In semi-supervised learning, we use both datasets to minimize a loss function that combines supervised and unsupervised components: $L = \mu_s L_s + \mu_u L_u$, where $\mu_s$ and $\mu_u$ weight the supervised loss $L_s$ and the unsupervised loss $L_u$.

- This loss function allows the model to learn from both labeled and unlabeled data simultaneously. It’s worth mentioning that this method is more successful with a larger amount of labeled data.
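To make the weighted sum above concrete, here is a minimal sketch in plain Python. It assumes cross-entropy as the supervised term and treats the unsupervised term as values computed elsewhere; the function names and the default weights `mu_s` and `mu_u` are illustrative choices, not part of any particular library.

```python
import math

def cross_entropy(probs, label):
    """Supervised loss for one labeled sample: -log p(true class)."""
    return -math.log(probs[label])

def combined_loss(supervised_losses, unsupervised_losses, mu_s=1.0, mu_u=0.5):
    """L = mu_s * L_s + mu_u * L_u, with each term averaged over its batch."""
    l_s = sum(supervised_losses) / len(supervised_losses)
    l_u = sum(unsupervised_losses) / len(unsupervised_losses)
    return mu_s * l_s + mu_u * l_u

# Two labeled samples: (predicted class probabilities, true label).
labeled = [([0.7, 0.3], 0), ([0.2, 0.8], 1)]
sup = [cross_entropy(p, y) for p, y in labeled]

# Placeholder unsupervised losses for three unlabeled samples
# (assumed to be entropy or consistency terms computed elsewhere).
unsup = [0.4, 0.1, 0.3]

print(combined_loss(sup, unsup))
```

In practice, the weight on the unsupervised term is often small at the start of training and increased later, so that early, unreliable predictions on unlabeled data do not dominate the objective.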

Semi-supervised learning is especially useful when acquiring a comprehensive set of labeled data is too costly or impractical. However, its effectiveness depends on the assumption that unlabeled data can provide meaningful information for model training, which isn’t always the case.

The challenge lies in balancing the use of labeled and unlabeled data, as well as ensuring that the model doesn’t reinforce incorrect pseudo-labels that it generates for the unlabeled data.

Core Concepts in Semi-Supervised Learning

The research community has introduced several semi-supervised learning concepts. Let’s dive into the most impactful ones below.

Confidence and Entropy

The main idea of entropy minimization is to ensure that a classifier trained on labeled data makes confident predictions on unlabeled data as well (that is, produces predictions with minimal entropy). Entropy, in this context, refers to the uncertainty of the model’s predictions. Lower entropy indicates higher confidence. This approach has been shown to have a regularizing effect on the classifier.
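As a small illustration of what "minimal entropy" means here, the sketch below computes the Shannon entropy of two predicted class distributions. The helper names are hypothetical, and the probabilities are made up for the example.

```python
import math

def entropy(probs):
    """Shannon entropy (in nats) of a predicted class distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0)

def entropy_loss(batch_probs):
    """Average entropy over an unlabeled batch; minimizing it rewards confidence."""
    return sum(entropy(p) for p in batch_probs) / len(batch_probs)

confident = [0.98, 0.01, 0.01]
uncertain = [0.34, 0.33, 0.33]

print(entropy(confident))  # small: the model is nearly certain
print(entropy(uncertain))  # close to log(3), the maximum for 3 classes
print(entropy_loss([confident, uncertain]))
```

Adding `entropy_loss` on unlabeled batches to the supervised loss pushes the model toward confident predictions on unlabeled data.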

A similar concept is pseudo-labeling, also known as self-training in some literature, which involves:

- Asking the classifier to predict labels for unlabeled data.

- Using the most confidently predicted samples as additional ground truth for the next iteration of training.

This is a basic type of semi-supervised learning and should be applied carefully. The reinforcing effect on the model can potentially amplify initial biases or errors if not properly managed.

Other examples of similar methods include:

- Co-training

- Multi-view training

- Noisy Student

The general process for these methods typically follows these stages:

- A model is first trained on a small set of labeled data.

- The model generates pseudo-labels by predicting labels for a larger set of unlabeled data.

- The most confident labels (with minimal entropy) are selected to enrich the training dataset.

- The model is retrained using the enriched dataset from the previous step.

This iterative process aims to leverage the model’s growing confidence to improve its performance on both labeled and unlabeled data.
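The stages above can be sketched as a short self-training loop. This is a toy example under stated assumptions: the data is one-dimensional, the "model" is a hypothetical nearest-centroid classifier, and the confidence threshold of 0.9 is an arbitrary choice for illustration.

```python
import math

def fit_centroids(xs, ys):
    """Train a toy 1-D nearest-centroid classifier: one mean per class."""
    sums, counts = {}, {}
    for x, y in zip(xs, ys):
        sums[y] = sums.get(y, 0.0) + x
        counts[y] = counts.get(y, 0) + 1
    return {c: sums[c] / counts[c] for c in sums}

def predict_proba(centroids, x):
    """Softmax over negative squared distances to each class centroid."""
    scores = {c: math.exp(-(x - m) ** 2) for c, m in centroids.items()}
    total = sum(scores.values())
    return {c: s / total for c, s in scores.items()}

def self_train(xs, ys, unlabeled, threshold=0.9, rounds=3):
    xs, ys, pool = list(xs), list(ys), list(unlabeled)
    for _ in range(rounds):
        centroids = fit_centroids(xs, ys)        # train on the current labeled set
        remaining = []
        for x in pool:
            probs = predict_proba(centroids, x)  # predict a pseudo-label
            label = max(probs, key=probs.get)
            if probs[label] >= threshold:        # keep only confident predictions
                xs.append(x)
                ys.append(label)
            else:
                remaining.append(x)
        if len(remaining) == len(pool):          # nothing new was added; stop
            break
        pool = remaining                         # retrain on the enriched set
    return fit_centroids(xs, ys), pool

model, leftover = self_train([0.0, 4.0], [0, 1], [0.3, 0.5, 1.9, 2.1, 3.6, 3.8])
print(model, leftover)
```

Samples near the decision boundary (here 1.9 and 2.1) never reach the threshold and stay unlabeled, which is the behavior that limits the amplification of early mistakes.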

Label Consistency and Regularization

This approach is based on the idea that the predicted class should not change if we apply a simple augmentation to the sample. Simple augmentation refers to minor modifications of the input data, such as slight rotations, crops, or color changes for images.

The model is then trained on unlabeled data to ensure that predictions for a sample and its augmented version are consistent. This concept is similar to ideas from self-supervised learning approaches based on consistency constraints.

Examples of techniques using this approach include:

- Pi-Model

- Temporal Ensembling

- Mean Teacher

- FixMatch algorithm

- Virtual Adversarial Training (VAT)

The main steps in this approach are:

- Take an unlabeled sample.

- Create several different views (augmentations) of the chosen sample.

- Apply a classifier and ensure that the predictions for these views are approximately similar.

This method leverages the assumption that small changes to the input should not dramatically alter the model’s prediction, thus encouraging the model to learn more robust and generalizable features from the unlabeled data.
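A minimal sketch of such a consistency term follows. It assumes a hypothetical linear classifier and uses small Gaussian jitter as a stand-in for image augmentations; mean squared error between the two predictions is one common choice of distance, not the only one.

```python
import math
import random

def softmax(logits):
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def toy_model(x, weights):
    """Hypothetical linear classifier: one weight vector per class."""
    return softmax([sum(w_i * x_i for w_i, x_i in zip(w, x)) for w in weights])

def augment(x, rng, scale=0.05):
    """Stand-in for a simple augmentation: small Gaussian jitter."""
    return [x_i + rng.gauss(0.0, scale) for x_i in x]

def consistency_loss(x, weights, rng):
    """Mean squared difference between predictions on two augmented views."""
    p1 = toy_model(augment(x, rng), weights)
    p2 = toy_model(augment(x, rng), weights)
    return sum((a - b) ** 2 for a, b in zip(p1, p2)) / len(p1)

rng = random.Random(0)
weights = [[1.0, -1.0], [-1.0, 1.0]]
print(consistency_loss([0.5, 0.2], weights, rng))
```

No label is needed to compute this loss, which is why it can be applied to the entire unlabeled pool.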

Unlike the Confidence and Entropy approach, which focuses on maximizing prediction confidence, Label Consistency and Regularization emphasizes the stability of predictions across similar inputs. This can help prevent overfitting to specific data points and encourage the model to learn more meaningful representations.

Generative Models

Generative models in semi-supervised learning use a methodology similar to transfer learning in supervised learning, where features learned on one task can be transferred to other downstream tasks.

However, there is a key difference: generative models are able to learn the data distribution p(x), generate samples from this distribution, and ultimately enhance supervised learning by improving the modeling of p(y|x) for a given sample x with a given target label y. This approach is particularly useful in semi-supervised learning because it can leverage large amounts of unlabeled data to learn the underlying data distribution, which can then inform the supervised learning task.

The most popular types of generative models used to enhance semi-supervised learning are generative adversarial networks (GANs) and variational autoencoders (VAEs).

The procedure typically follows these steps:

- Construct both the generative and supervised parts of the loss function.

- Train the generative and supervised models simultaneously using the combined loss function.

- Use the trained supervised model for the target task.

In this process, the generative model learns from both labeled and unlabeled data, helping to capture the underlying structure of the data space. This learned structure can then inform the supervised model, potentially improving its performance, especially when labeled data is scarce.

Graph-Based Semi-Supervised Learning

Graph-based semi-supervised learning methods use a graph data structure to represent both labeled and unlabeled data as nodes. This approach is particularly effective in capturing complex relationships between data points, making it useful when the data has inherent structural or relational properties.

In this method, labels are propagated through the graph. The number of paths from an unlabeled node to labeled nodes helps determine its label. This approach leverages the assumption that similar data points (connected by edges in the graph) are likely to have similar labels.

The procedure typically follows these steps:

- Construct a graph with nodes representing data points (both labeled and unlabeled).

- Connect nodes by edges, often based on similarity measures between data points (e.g., k-nearest neighbors or a Gaussian kernel).

- Use graph algorithms (such as Label Propagation or Graph Neural Networks) to spread labels from labeled nodes to unlabeled nodes.

- Assign labels to unlabeled nodes based on the propagated information.

- Optionally repeat the process to refine labels on unlabeled nodes.
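The steps above can be illustrated with a simplified propagation routine. This sketch assumes an unweighted graph given as an edge list and simply averages neighbor label distributions while keeping labeled nodes fixed; it is a toy variant for illustration rather than a faithful implementation of any specific published algorithm.

```python
def propagate_labels(edges, seeds, num_classes, iterations=50):
    """Iteratively average neighbor label distributions, clamping labeled nodes."""
    nodes = sorted({n for e in edges for n in e})
    neighbors = {n: [] for n in nodes}
    for a, b in edges:
        neighbors[a].append(b)
        neighbors[b].append(a)

    # Unlabeled nodes start uniform; labeled (seed) nodes start one-hot.
    uniform = [1.0 / num_classes] * num_classes
    dist = {n: uniform[:] for n in nodes}
    for n, label in seeds.items():
        dist[n] = [1.0 if c == label else 0.0 for c in range(num_classes)]

    for _ in range(iterations):
        new_dist = {}
        for n in nodes:
            if n in seeds:                      # labeled nodes stay fixed
                new_dist[n] = dist[n]
                continue
            total = [0.0] * num_classes
            for m in neighbors[n]:
                for c in range(num_classes):
                    total[c] += dist[m][c]
            new_dist[n] = [t / len(neighbors[n]) for t in total]
        dist = new_dist

    # Assign each node the class with the highest propagated score.
    return {n: max(range(num_classes), key=lambda c: dist[n][c]) for n in nodes}

# A chain 0-1-2-3-4-5 with node 0 labeled class 0 and node 5 labeled class 1.
edges = [(0, 1), (1, 2), (2, 3), (3, 4), (4, 5)]
print(propagate_labels(edges, seeds={0: 0, 5: 1}, num_classes=2))
```

On this chain, each unlabeled node ends up with the label of the closer labeled node, which matches the intuition that connected points share labels.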

This method is particularly advantageous when dealing with data that has a natural graph structure (e.g., social networks, citation networks) or when the relationship between data points is crucial for classification. However, performance can be sensitive to the choice of graph construction method and similarity measure. Common algorithms in this approach include Label Propagation, Label Spreading, and, more recently, Graph Neural Networks.

Examples in Research

Semi-supervised learning has led to significant advances across various domains, including speech recognition, web content classification, and text document analysis. These advancements have not only improved performance in tasks with limited labeled data but have also introduced novel approaches to leveraging unlabeled data effectively.

Below, I present a selection of papers that, in my view, represent some of the most impactful and interesting contributions to the field of semi-supervised learning. These works have shaped our understanding of the subject and continue to influence current research and applications.

Temporal Ensembling for Semi-Supervised Learning (2017): Laine and Aila

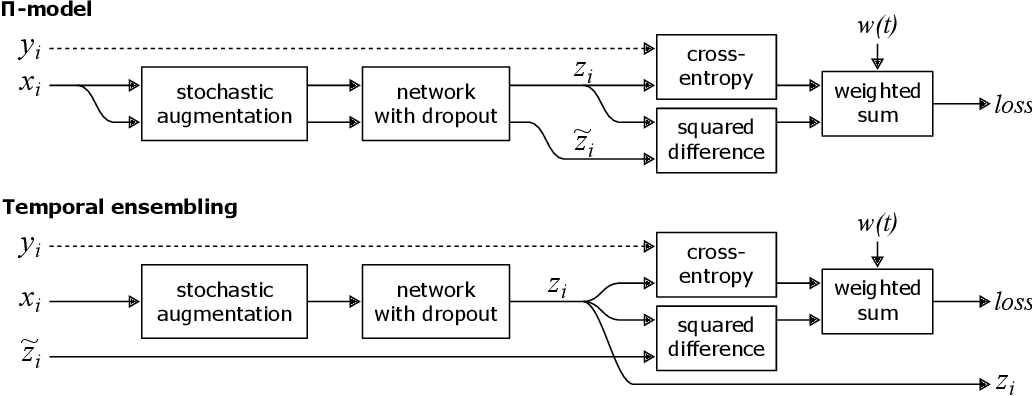

This paper introduced the concept of consistency regularization, a cornerstone of many subsequent semi-supervised learning methods. The authors first proposed the Pi-Model, which applies stochastic augmentations to each unlabeled input twice and encourages consistent predictions for both versions. This approach leverages the idea that a model should produce similar outputs for perturbed versions of the same input.

Building upon the Pi-Model, the authors then introduced Temporal Ensembling. This method addresses a key limitation of the Pi-Model by reducing the noise in the consistency targets. Instead of comparing predictions from two concurrent passes, Temporal Ensembling maintains an exponential moving average (EMA) of past predictions for each unlabeled example. This EMA serves as a more stable target for the consistency loss, effectively ensembling the model’s predictions over time.
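The EMA of predictions can be sketched as follows. This is a minimal illustration, assuming predictions are stored as plain lists of class probabilities and that the accumulator starts at zero with a startup bias correction; the function name and the value `alpha=0.6` are choices made for this example.

```python
def update_ensemble(Z, new_preds, alpha, epoch):
    """Update per-sample EMA predictions and return bias-corrected targets.

    Z:         accumulated EMA predictions, one list per unlabeled sample
    new_preds: this epoch's predictions for the same samples
    epoch:     1-based epoch counter, used for startup bias correction
    """
    Z = [[alpha * z + (1 - alpha) * p for z, p in zip(z_row, p_row)]
         for z_row, p_row in zip(Z, new_preds)]
    correction = 1 - alpha ** epoch
    targets = [[z / correction for z in z_row] for z_row in Z]
    return Z, targets

# One unlabeled sample, two classes; the accumulator starts at zero.
Z = [[0.0, 0.0]]
epoch_preds = [[[0.6, 0.4]], [[0.8, 0.2]], [[0.7, 0.3]]]
for epoch, preds in enumerate(epoch_preds, start=1):
    Z, targets = update_ensemble(Z, preds, alpha=0.6, epoch=epoch)
    print(epoch, targets)
```

Because each sample’s target is updated only when that sample is visited, the targets change once per epoch, which is the slow-update limitation that later methods address.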

The Temporal Ensembling approach significantly improved upon the Pi-Model, demonstrating better performance and faster convergence. This work had a substantial impact on the field, laying the foundation for numerous consistency-based methods in SSL and showing how leveraging a model’s own predictions could lead to improved learning from unlabeled data.

Virtual Adversarial Training (2018): Miyato et al.

Virtual Adversarial Training (VAT) cleverly adapted the concept of adversarial attacks to semi-supervised learning. The idea originated from the well-known phenomenon of adversarial examples in image classification, where small, imperceptible perturbations to an input image could dramatically change a model’s prediction. Researchers found these perturbations by using backpropagation to maximize the change in the model’s output, but with respect to the input rather than the model weights.

VAT’s key innovation was to apply this adversarial perturbation concept to unlabeled data in a semi-supervised setting. Instead of using backpropagation to find perturbations that change the model’s prediction, VAT uses it to find perturbations that would most significantly alter the model’s predicted distribution. The model is then trained to resist these perturbations, encouraging consistent predictions even under small, adversarial changes to the input.

This method tackled the problem of improving model robustness and generalization in SSL. VAT’s impact was significant, showing how adversarial techniques could be effectively used in SSL and opening up new avenues for research at the intersection of adversarial robustness and semi-supervised learning. It demonstrated that concepts from adversarial machine learning could be repurposed to extract more information from unlabeled data, leading to improved semi-supervised learning performance.

Mean Teacher (2017): Tarvainen and Valpola

The Mean Teacher method introduced a simple yet effective approach to creating high-quality consistency targets in SSL. Its key innovation was the use of an exponential moving average of model weights to create a “teacher” model, which provides targets for the “student” model. This addressed the problem of stabilizing training and improving performance in SSL.

While both Mean Teacher and Temporal Ensembling use EMA, they apply it differently:

- Temporal Ensembling applies EMA to the predictions for each data point over different epochs. This creates stable targets but updates slowly, especially for large datasets where each example is seen infrequently.

- Mean Teacher, on the other hand, applies EMA to the model weights themselves. This creates a teacher model that is an ensemble of recent student models. The teacher can then generate consistency targets for any input, including unseen augmentations, allowing for more frequent updates.

This subtle difference allows Mean Teacher to adapt more quickly to new data and provide more consistent targets, especially early in training and for larger datasets. It also enables the use of different augmentations for the student and teacher models, potentially capturing a broader range of invariances.
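The weight-averaging step can be sketched in a few lines. This is a minimal illustration, assuming model weights are stored as a flat list of floats; `ema_update`, the decay value, and the stand-in student update are hypothetical choices for the example, not code from the paper.

```python
def ema_update(teacher, student, decay=0.99):
    """Move each teacher weight toward the student: t <- decay*t + (1-decay)*s."""
    return [decay * t + (1 - decay) * s for t, s in zip(teacher, student)]

student = [0.0, 0.0]
teacher = list(student)          # the teacher starts as a copy of the student

for step in range(1, 4):
    # Stand-in for a gradient step on the student (hypothetical update).
    student = [w + 1.0 for w in student]
    # The teacher is updated after every training step, not once per epoch.
    teacher = ema_update(teacher, student, decay=0.9)
    print(step, student, teacher)
```

The teacher lags behind the student and smooths out its step-to-step fluctuations, which is what makes its predictions more stable consistency targets.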

Mean Teacher demonstrated that simple averaging techniques could lead to significant improvements in SSL performance. It inspired further research into teacher-student models in SSL and showed how the ideas from Temporal Ensembling could be extended and improved upon.

Unsupervised Data Augmentation (2020): Xie et al.

Unsupervised Data Augmentation (UDA) leveraged advanced data augmentation techniques for consistency regularization in SSL. The key innovation was the use of state-of-the-art data augmentation methods, particularly in NLP tasks where such techniques were less explored.

By “advanced data augmentation,” the authors refer to more sophisticated transformations that go beyond simple perturbations:

- For image tasks: UDA uses RandAugment, which samples transformations uniformly at random from a fixed set of operations instead of searching for an optimal augmentation policy. The operations include color adjustments, geometric transformations, and various filters.

- For text tasks: UDA uses methods like back-translation and word replacement using TF-IDF. Back-translation involves translating a sentence into another language and then back to the original, creating a paraphrased version. TF-IDF-based word replacement swaps out uninformative words (those with low TF-IDF scores) while keeping the keywords that carry the sentence’s overall meaning.

These advanced augmentations create more diverse and semantically meaningful versions of the input data, helping the model learn more robust representations. UDA addressed the problem of improving SSL performance across various domains, with a particular focus on text classification tasks. Its impact was significant, demonstrating the power of task-specific data augmentation in SSL and achieving state-of-the-art results on several benchmarks with limited labeled data.

The success of UDA highlighted the importance of carefully designed data augmentation strategies in semi-supervised learning, especially for domains where traditional augmentation techniques were limited.

FixMatch (2020): Sohn et al.

FixMatch represents a significant simplification of semi-supervised learning techniques while achieving state-of-the-art performance. The key innovation lies in its elegant combination of two main ideas:

- Consistency regularization: FixMatch uses strong and weak augmentations on unlabeled data. The model’s prediction on strongly augmented data must match its prediction on weakly augmented data.

- Pseudo-labeling: It only retains pseudo-labels from weakly augmented unlabeled data when the model’s prediction is highly confident (above a set threshold).

What sets FixMatch apart is its use of extremely strong augmentations (like RandAugment) for the consistency regularization component, coupled with a simple threshold-based pseudo-labeling mechanism. This approach allows the model to generate reliable pseudo-labels from weakly augmented images and learn robust representations from strongly augmented ones.
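The interplay of the two ideas can be sketched as a single unlabeled-loss function. This is a simplified illustration that takes already-computed class probabilities as input; the function name and the threshold of 0.95 are assumptions for the example, and in a real training loop the pseudo-label would be treated as a constant with no gradient flowing through the weak branch.

```python
import math

def fixmatch_unlabeled_loss(weak_probs, strong_probs, threshold=0.95):
    """Cross-entropy on strong views against confident weak-view pseudo-labels.

    weak_probs / strong_probs: predicted class distributions for the weakly and
    strongly augmented versions of the same unlabeled samples.
    """
    total = 0.0
    for weak, strong in zip(weak_probs, strong_probs):
        confidence = max(weak)
        if confidence < threshold:
            continue                      # low-confidence samples are masked out
        pseudo_label = weak.index(confidence)
        total += -math.log(strong[pseudo_label])
    return total / len(weak_probs)        # average over the whole batch

weak = [[0.97, 0.03], [0.60, 0.40]]
strong = [[0.70, 0.30], [0.20, 0.80]]
print(fixmatch_unlabeled_loss(weak, strong))  # only the first sample contributes
```

Early in training few samples pass the threshold, so the unlabeled loss is small; as the model becomes more confident, more unlabeled samples contribute automatically.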

FixMatch demonstrated remarkable performance with extremely limited labeled data, often using as few as 10 labeled examples per class. Its success showed that a well-designed, simple SSL algorithm could outperform more complex methods, setting a new benchmark in the field and influencing subsequent research in low-label regimes.

Noisy Student (2020): Xie et al.

Noisy Student introduced an iterative self-training approach with noise injection for SSL, marking a significant milestone in the field. The key innovation was the use of a large EfficientNet model as a student, trained with noise on pseudo-labels produced by a teacher model, with the process repeated iteratively.

What sets Noisy Student apart is its groundbreaking performance:

- Surpassing supervised learning: Notably, it was the first SSL method to outperform purely supervised learning even when a large amount of labeled data was available. This breakthrough challenged the conventional wisdom that SSL was only beneficial in low-label regimes.

- Scale and effectiveness: The method demonstrated that by leveraging a large amount of unlabeled data (300M unlabeled images), it could improve upon state-of-the-art supervised models trained on all 1.28M labeled ImageNet images.

- Noise injection: The “noisy” aspect involves applying data augmentation, dropout, and stochastic depth to the student during training, which helps it learn more robust features.

Noisy Student pushed the boundaries of performance on challenging, large-scale datasets like ImageNet. It showed that SSL techniques could be beneficial even in scenarios with abundant labeled data, expanding the potential applications of SSL. The method also inspired further research into scalable SSL techniques and their application to improving state-of-the-art models in various domains.

Noisy Student’s success in outperforming supervised learning with substantial labeled data available marked a paradigm shift in how researchers and practitioners view the potential of semi-supervised learning techniques.

Semi-Supervised Learning With Deep Generative Models (2014): Kingma et al.

This seminal paper introduced a novel approach to semi-supervised learning using variational autoencoders (VAEs). The key innovation lies in how it combines generative and discriminative learning within a single framework.

Central to this method is the combined loss function, which has two main components:

- Generative component: This part of the loss ensures that the model learns to reconstruct input data effectively, capturing the underlying data distribution p(x).

- Discriminative component: This part focuses on the classification task, optimizing for accurate predictions on labeled data.

The combined loss function allows the model to learn from both labeled and unlabeled data simultaneously. For labeled data, both components are used. For unlabeled data, only the generative component is active, but it indirectly improves discriminative performance by learning better representations.

This approach addressed the problem of leveraging unlabeled data to improve classification performance, especially when labeled data is scarce. It opened up new directions for using deep generative models in SSL. The method also demonstrated how generative models could improve discriminative tasks, bridging the gap between unsupervised and supervised learning, and inspired a wealth of subsequent research at the intersection of generative modeling and semi-supervised learning.

This work laid the foundation for many future developments in SSL, showing how deep generative models could be effectively used to extract useful information from unlabeled data for classification tasks.

Examples of Application

Semi-supervised learning has led to significant advances across various domains, demonstrating its versatility and effectiveness in handling large amounts of unlabeled data. Here are some notable applications:

1. Speech Recognition

In 2021, Meta (formerly Facebook) used self-training with SSL on a base model trained with 100 hours of labeled audio and 500 hours of unlabeled data. This approach reduced the word error rate by 33.9%, showcasing SSL’s potential in improving speech recognition systems.

2. Web Content Classification

Search engines like Google employ SSL to classify web content and improve search relevance. This application is crucial for handling the vast and constantly growing number of web pages, enabling more accurate and efficient content categorization.

3. Text Document Classification

SSL has proven effective in building text classifiers. For instance, the SALnet text classifier developed by Yonsei University uses deep learning neural networks like LSTM for tasks such as sentiment analysis. This demonstrates SSL’s capability in managing large, unlabeled datasets in natural language processing tasks.

4. Medical Image Analysis

In 2023, researchers at Stanford University applied SSL techniques to enhance the accuracy of brain tumor segmentation in MRI scans. By leveraging a small set of labeled images alongside a larger pool of unlabeled data, they achieved a 15% improvement in tumor detection accuracy compared to fully supervised methods. This application highlights SSL’s potential in medical imaging, where labeled data is often scarce and expensive to obtain, but unlabeled data is abundant.

Conclusion

Semi-supervised learning (SSL) has established itself as a crucial machine learning technique, effectively bridging the gap between the abundance of unlabeled data and the scarcity of labeled data. By ingeniously combining supervised and unsupervised learning approaches, SSL offers a practical and efficient solution to the perennial challenge of data labeling. This article has delved into various SSL methodologies, from foundational consistency regularization techniques like Temporal Ensembling to cutting-edge approaches such as FixMatch and Noisy Student.

The versatility of SSL is prominently displayed in its successful implementation across a wide spectrum of domains, including speech recognition, web content classification, and text document analysis. In an era where data generation far outpaces our capacity to label it, SSL emerges as a pivotal development in machine learning, empowering researchers and practitioners to harness the potential of vast unlabeled datasets.

As we look to the future, SSL is poised to assume an even more significant role in the AI and machine learning landscape. While challenges persist, such as improving performance with extremely limited labeled data and adapting SSL techniques to more intricate real-world scenarios, the field’s rapid advancements suggest a trajectory of continued innovation. These developments may lead to groundbreaking approaches in model training and data interpretation.

The core principles of SSL are likely to influence and intersect with other burgeoning areas of machine learning, including few-shot learning and self-supervised learning. This cross-pollination of ideas promises to further broaden SSL’s impact and potentially reshape our understanding of learning from limited labeled data.

SSL represents not just a set of techniques, but a paradigm shift in how we approach the fundamental problem of learning from data. As it continues to evolve, SSL may be the key to unlocking the full potential of the vast, largely unlabeled data resources that characterize our digital age.