Problem Statement

By default, the IBM Manage JMS setup creates a single JMS store file for all queues, which effectively places all queues in a single repository. This approach poses a risk: if one queue becomes corrupt and a message is affected, you would need to delete the store file for all queues to resolve the issue.

How To Overcome the Problem

We developed a solution that configures the MAS 8 application with a distinct JMS store file for each queue.

Design and Solution Implementation

In this example, I will demonstrate the setup for four queues: cqin, cqout, sqin, and sqout. You can have more queues if they're enabled in your Maximo web.xml configuration. I'll modify the IBM configuration to show how to assign a separate JMS store file to each queue, ensuring that a problem in one queue doesn't affect the others.

Make sure you create a Persistent Volume Claim (PVC) for your JMS store files within your MAS Core Suite application. In my case, the PVC is named jmsstore and is mounted at /jmsstore, with a storage size of 20 GB for this demo. However, you can always allocate more storage as needed.

If you have access to OpenShift, switch to your Manage namespace and check whether the PVC has been created:

$ oc project mas-masivt810x-manage

$ oc get pvc

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

masivt810x-wkspivt810x-custom-logs Bound pvc-cba1d87d-ab9f-4213-96fa-f52a135b2863 20Gi RWX nfs-storage 18d

masivt810x-wkspivt810x-doclink Bound pvc-981fa13b-0be6-4b4c-b595-e587473109ae 10Gi RWX nfs-storage 18d

masivt810x-wkspivt810x-globaldir Bound pvc-b8c9ffd6-9c4c-403e-9706-d750c8fa4394 20Gi RWX nfs-storage 18d

masivt810x-wkspivt810x-jmsstore Bound pvc-c6a2bbdc-85dc-4e57-a2ac-4a284c1e1976 20Gi RWX nfs-storage 18d

masivt810x-wkspivt810x-migration Bound pvc-2e06b37b-b7ff-4914-b532-e482ea5bb233 10Gi RWX nfs-storage 18d

You can also check from the maxinst pod whether the /jmsstore directory was created.

$ oc exec -n mas-masivt810x-manage $(oc get -n mas-masivt810x-manage -l mas.ibm.com/appType=maxinstudb --no-headers=true pods -o name | awk -F "/" '{print $2}') -- ls -ltrh / | grep jms

drwxrwxrwx. 13 root 1000330000 0 Jul 3 11:32 jmsstore

After your MAS Core instance reconciliation, you should see the names of your PVCs, including a new PVC for the jmsstore.

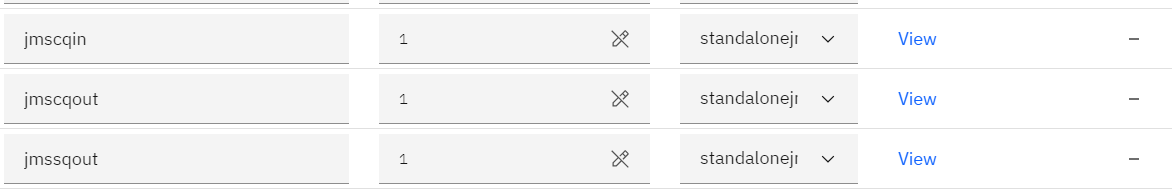
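The PVC check above can also be scripted. The following is a minimal sketch, not part of the original setup: `pvc_is_bound` is a hypothetical helper that reads `oc get pvc` output on standard input and succeeds only when a PVC whose name ends in the given suffix is reported as Bound.

```shell
#!/bin/sh
# Hypothetical helper: reads `oc get pvc` output on stdin and returns 0 only
# if a PVC whose name ends in "-<suffix>" is listed with STATUS "Bound".
pvc_is_bound() {
  suffix="$1"
  awk -v suffix="-$suffix" '
    # Column 1 is NAME, column 2 is STATUS in the default `oc get pvc` layout.
    substr($1, length($1) - length(suffix) + 1) == suffix && $2 == "Bound" { found = 1 }
    END { exit (found ? 0 : 1) }
  '
}

# Usage against a live cluster (requires the oc CLI and a logged-in session):
#   oc get pvc -n mas-masivt810x-manage | pvc_is_bound jmsstore \
#     && echo "jmsstore PVC is bound"
```

Matching on the name suffix keeps the check independent of the instance and workspace IDs that prefix the PVC name.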

In the Server bundles section, if the System managed checkbox is selected, clear it. Click Add bundle. We're going to add four JMS server bundles:

- jmscqin

- jmscqout

- jmssqout

- jmssqin

In the Type column, select standalonejms.

To configure the queues, complete the following steps:

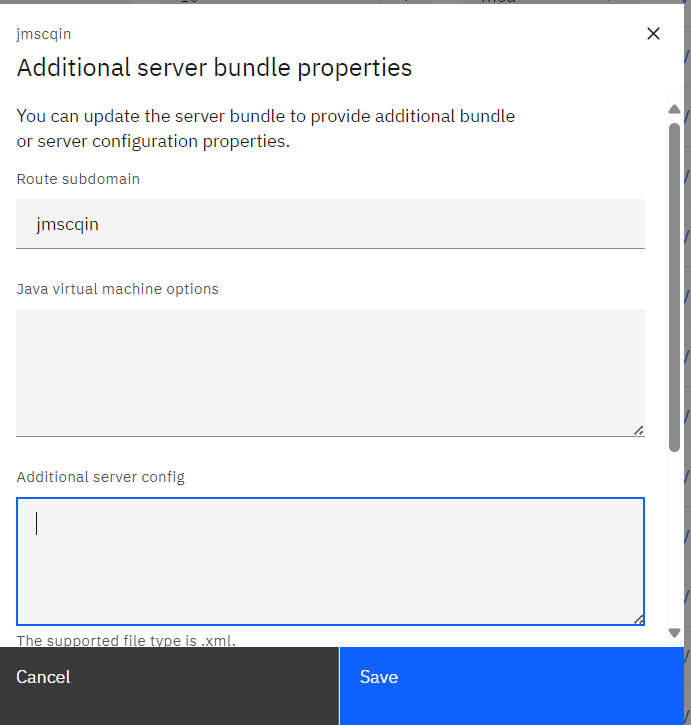

- In the Additional properties column for your JMS server bundle, click View.

- Set a route subdomain that matches the name of your bundle. We'll use the pod's service as the remoteServerAddress.

- In the Additional server config section, use XML to specify default and custom queues.

- You can specify a queue as:

  - Outbound sequential

  - Outbound continuous

  - Inbound sequential

  - Inbound continuous

Use this XML configuration for the jmscqin bundle. I’ve added the cqinerror engine to run alongside the cqin engine.

    <featureManager>
      <feature>wasJmsSecurity-1.0</feature>
      <feature>wasJmsServer-1.0</feature>
    </featureManager>

Use this XML configuration for the jmscqout bundle. I've added the cqouterror engine to run alongside the cqout engine.

    <featureManager>
      <feature>wasJmsSecurity-1.0</feature>
      <feature>wasJmsServer-1.0</feature>
    </featureManager>

The jmssqout bundle runs the sqout engine alone.

    <featureManager>
      <feature>wasJmsSecurity-1.0</feature>
      <feature>wasJmsServer-1.0</feature>
    </featureManager>

The jmssqin bundle runs the sqin engine alone.

    <featureManager>
      <feature>wasJmsSecurity-1.0</feature>
      <feature>wasJmsServer-1.0</feature>
    </featureManager>
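To make the per-queue store idea concrete, here is a hedged sketch of what such a bundle configuration might look like. It is not the author's original XML. The helper `render_jms_server_xml` is hypothetical, the queue IDs (`cqinbd`, `cqinerrbd`) are assumed names, and any security settings the real configuration needs are omitted. The store path follows the /jmsstore/cqin layout used in this article, and the ports match those exposed by the bundle services.

```shell
#!/bin/sh
# Hypothetical helper: prints a Liberty server XML sketch for one standalone
# JMS bundle. $1 = queue name (for example cqin); remaining arguments are the
# queue IDs to define (assumed names, adjust to your environment).
render_jms_server_xml() {
  queue_name="$1"
  shift
  cat <<EOF
<?xml version="1.0" encoding="UTF-8"?>
<server description="standalone JMS server for ${queue_name}">
  <featureManager>
    <feature>wasJmsSecurity-1.0</feature>
    <feature>wasJmsServer-1.0</feature>
  </featureManager>
  <wasJmsEndpoint host="*" wasJmsPort="7276" wasJmsSSLPort="7286"/>
  <messagingEngine>
    <!-- Dedicated file store directory used by this bundle only -->
    <fileStore path="/jmsstore/${queue_name}"/>
EOF
  # One <queue> element per queue ID passed on the command line.
  for queue_id in "$@"; do
    printf '    <queue id="%s"/>\n' "$queue_id"
  done
  printf '  </messagingEngine>\n</server>\n'
}

# Example: the jmscqin bundle with its main queue and error queue.
render_jms_server_xml cqin cqinbd cqinerrbd
```

Because each bundle points its file store at its own subdirectory, the four bundles never share store files even though they share one PVC.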

Now you will need the services that have been created for these bundles. Essentially, each bundle creates a pod, a service, and a route. Since the traffic stays within the cluster, we can use the service for our remoteServerAddress. Note: Your remoteServerAddress must be in the following format:

remoteServerAddress="{instanceId}-{workspaceId}-{bundleName}.mas-{instanceId}-manage.svc:7276:BootstrapBasicMessaging"

remoteServerAddress="masivt810x-wkspivt810x-jmscqin.mas-masivt810x-manage.svc:7276:BootstrapBasicMessaging"

Alternatively, you can go to your OpenShift GUI and get the hostname of the route created on the service. Use this as the remoteServerAddress. You can get the names of the pods and services by running these commands:

$ oc get svc |grep jms

masivt810x-wkspivt810x-jmscqin ClusterIP None 9080/TCP,443/TCP,7276/TCP,7286/TCP 23h

masivt810x-wkspivt810x-jmscqout ClusterIP None 9080/TCP,443/TCP,7276/TCP,7286/TCP 23h

masivt810x-wkspivt810x-jmssqin ClusterIP None 9080/TCP,443/TCP,7276/TCP,7286/TCP 23h

masivt810x-wkspivt810x-jmssqout ClusterIP None 9080/TCP,443/TCP,7276/TCP,7286/TCP 23h
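With the service names listed above, the remoteServerAddress values can be assembled mechanically. This is a small sketch: `build_remote_server_address` is a hypothetical helper, and it assumes that the middle part of the service name (wkspivt810x here) is the workspace ID.

```shell
#!/bin/sh
# Hypothetical helper: builds the in-cluster remoteServerAddress for one JMS
# bundle service. $1 = MAS instance ID, $2 = workspace ID (assumed meaning of
# the middle name segment), $3 = bundle name.
build_remote_server_address() {
  instance_id="$1"
  workspace_id="$2"
  bundle_name="$3"
  printf '%s-%s-%s.mas-%s-manage.svc:7276:BootstrapBasicMessaging\n' \
    "$instance_id" "$workspace_id" "$bundle_name" "$instance_id"
}

# Print one address per JMS bundle used in this article.
for bundle in jmscqin jmscqout jmssqin jmssqout; do
  build_remote_server_address masivt810x wkspivt810x "$bundle"
done
```

Port 7276 is the non-SSL JMS port that each bundle service exposes in the listing above.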

$ oc get pods |grep jms

masivt810x-wkspivt810x-jmscqin-0 1/1 Running 0 23h

masivt810x-wkspivt810x-jmscqout-0 1/1 Running 0 23h

masivt810x-wkspivt810x-jmssqin-0 1/1 Running 0 23h

masivt810x-wkspivt810x-jmssqout-0 1/1 Running 0 23h

You can also check from your maxinst pod whether the per-queue directories were created under /jmsstore by running this command:

$ oc exec -n mas-masivt810x-manage $(oc get -n mas-masivt810x-manage -l mas.ibm.com/appType=maxinstudb --no-headers=true pods -o name | awk -F "/" '{print $2}') -- ls -ltrh /jmsstore/

total 0

drwxr-x---. 2 1001040000 root 0 Jul 3 11:20 cqin

drwxr-x---. 2 1001040000 root 0 Jul 3 11:27 cqout

drwxr-x---. 2 1001040000 root 0 Jul 3 11:28 sqout

drwxr-x---. 2 1001040000 root 0 Jul 3 11:29 sqin

If you have the Maximo MEA bundle, you can use the XML below. If you have a single server (all) bundle, change maximomea to maximo-all in the XML.

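    <featureManager>
      <feature>jndi-1.0</feature>
      <feature>wasJmsClient-2.0</feature>
      <feature>jmsMdb-3.2</feature>
      <feature>mdb-3.2</feature>
    </featureManager>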

If you have UI, report, and cron bundles, use this XML:

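    <featureManager>
      <feature>jndi-1.0</feature>
      <feature>wasJmsClient-2.0</feature>
      <feature>jmsMdb-3.2</feature>
      <feature>mdb-3.2</feature>
    </featureManager>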

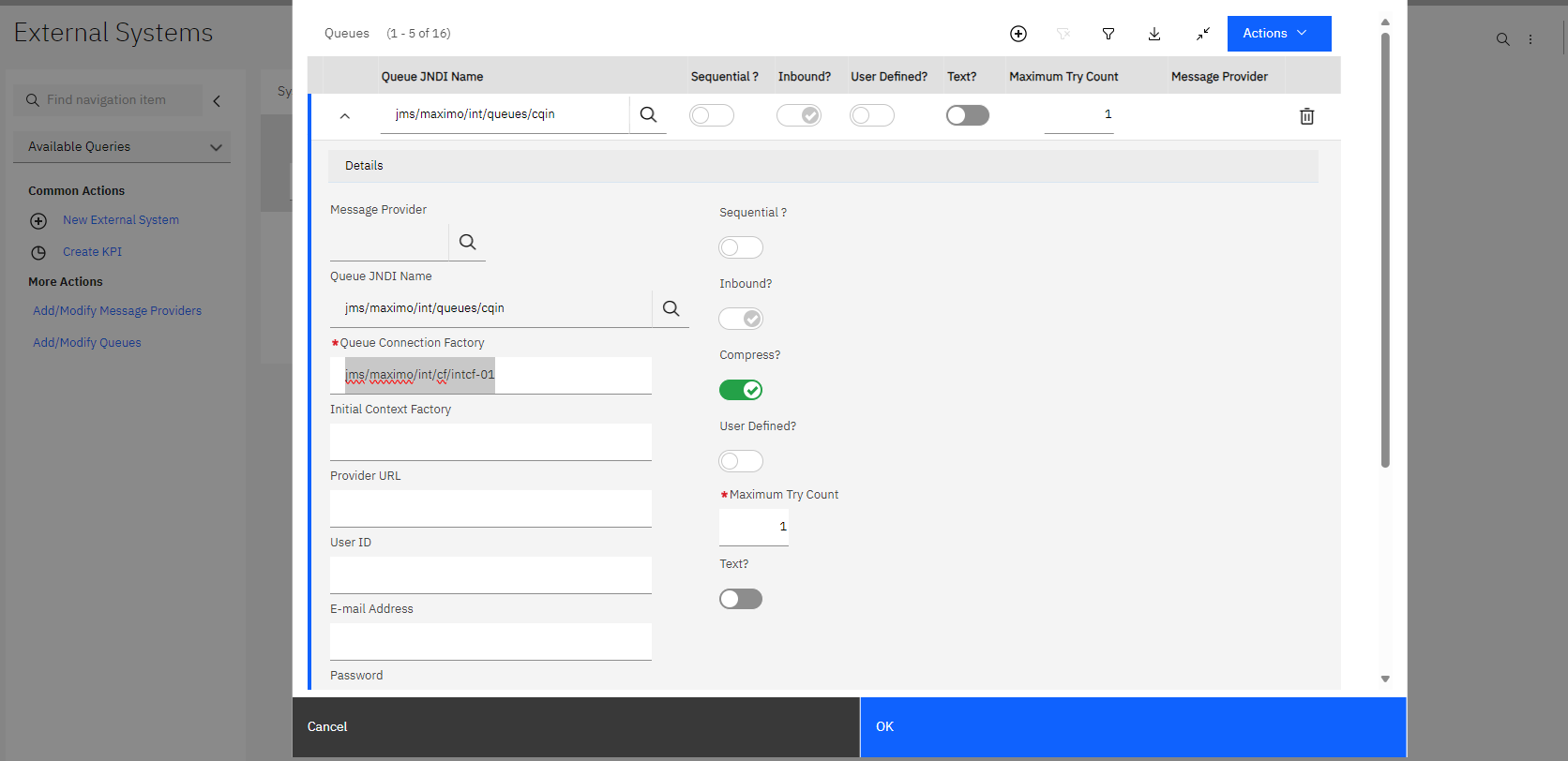

The provided XML defines the following jmsQueueConnectionFactory JNDI names:

- jms/maximo/int/cf/intcf-01

- jms/maximo/int/cf/intcf-02

- jms/maximo/int/cf/intcf-03

- jms/maximo/int/cf/intcf-04
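As an illustration of how each of these factories could point at its own JMS bundle, the sketch below prints one `jmsQueueConnectionFactory` element per JNDI name. It is not the original XML: `render_connection_factory` is a hypothetical helper, and the pairing of each factory with a particular bundle is an assumption, since the mapping is not stated here.

```shell
#!/bin/sh
# Hypothetical helper: prints one Liberty jmsQueueConnectionFactory element.
# $1 = JNDI name, $2 = remoteServerAddress of the JMS bundle it connects to.
render_connection_factory() {
  printf '<jmsQueueConnectionFactory jndiName="%s">\n' "$1"
  printf '  <properties.wasJms remoteServerAddress="%s"/>\n' "$2"
  printf '</jmsQueueConnectionFactory>\n'
}

prefix="masivt810x-wkspivt810x"
suffix="mas-masivt810x-manage.svc:7276:BootstrapBasicMessaging"

# Assumed pairing of factories to bundles; adjust to match your own XML.
render_connection_factory jms/maximo/int/cf/intcf-01 "${prefix}-jmscqin.${suffix}"
render_connection_factory jms/maximo/int/cf/intcf-02 "${prefix}-jmscqout.${suffix}"
render_connection_factory jms/maximo/int/cf/intcf-03 "${prefix}-jmssqin.${suffix}"
render_connection_factory jms/maximo/int/cf/intcf-04 "${prefix}-jmssqout.${suffix}"
```

Using one connection factory per bundle is what lets each queue in Maximo reach the server that owns its dedicated store.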

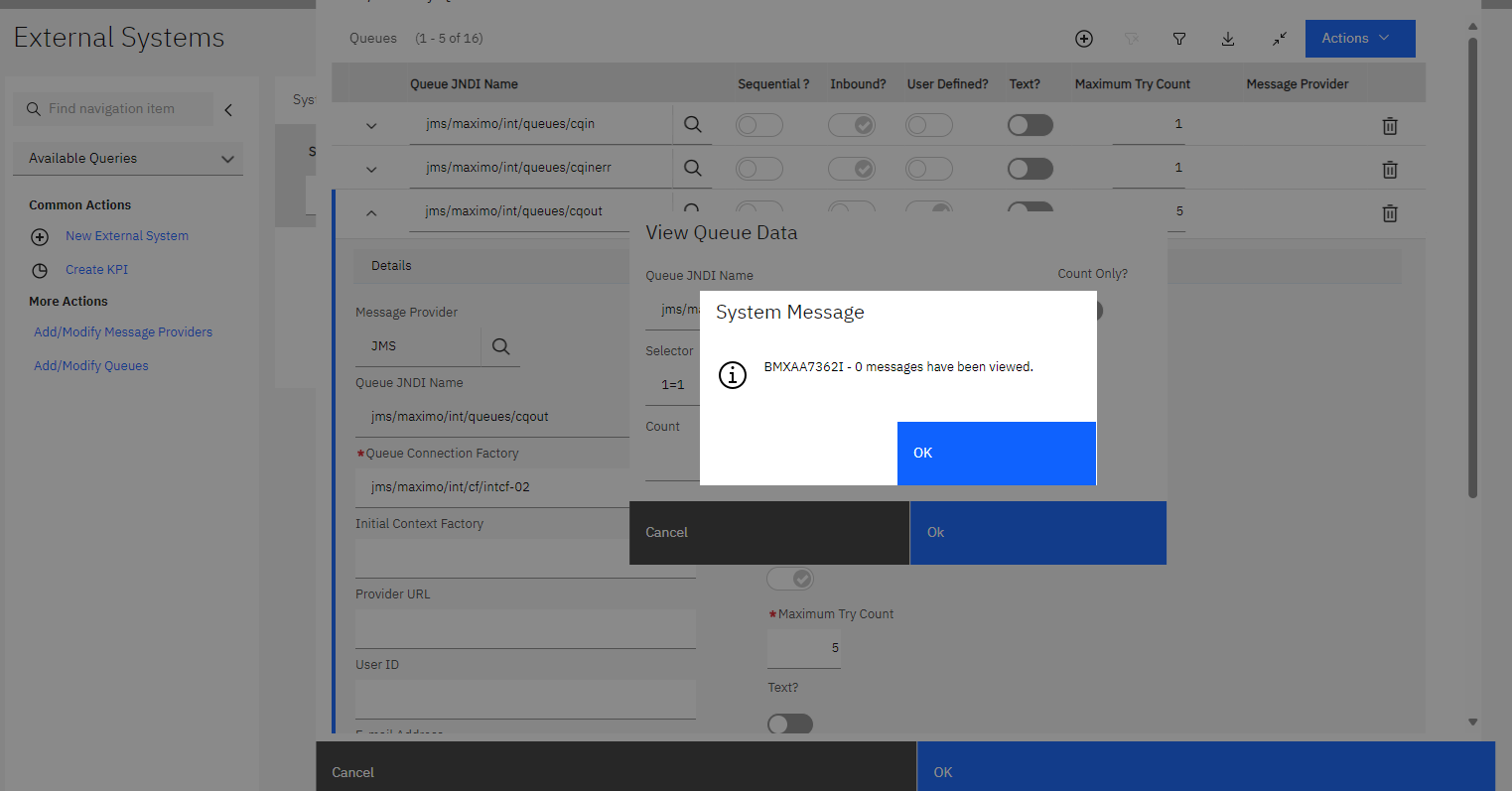

We'll use these jmsQueueConnectionFactory JNDI names for the external systems in Maximo. Add or modify the queues for sqin, sqout, cqin, cqinerr, cqouterr, and notf.

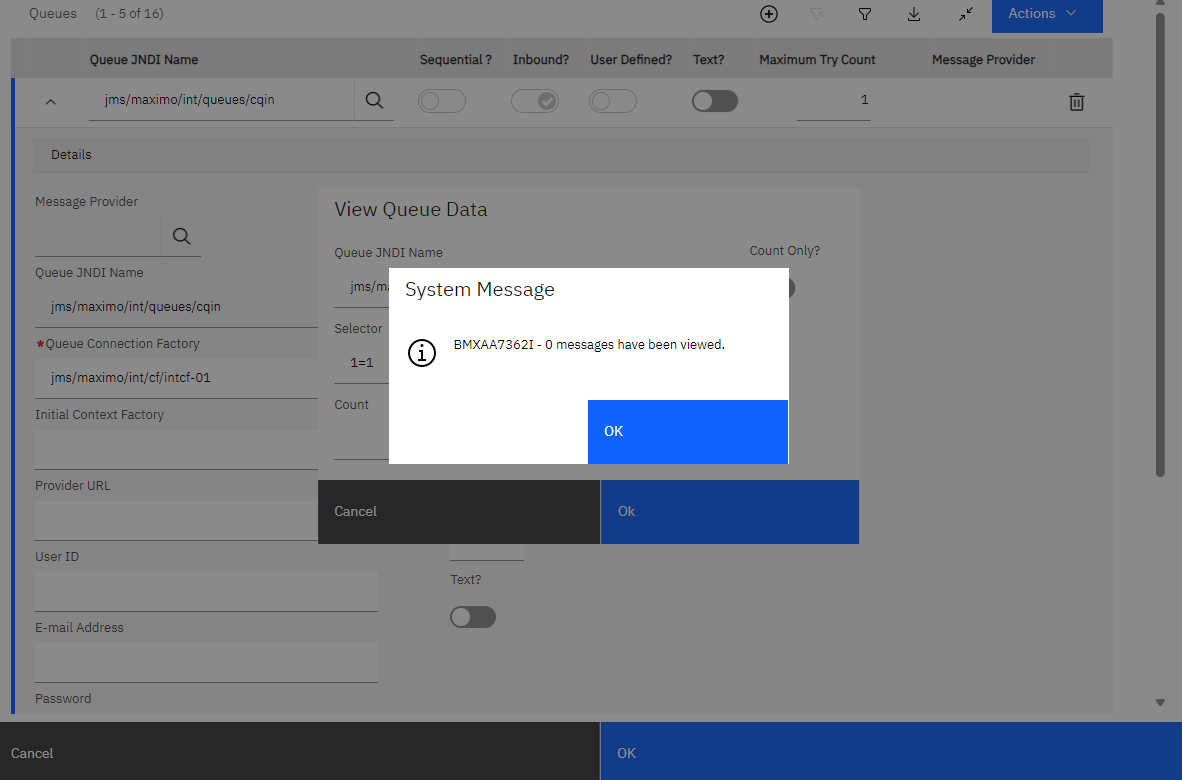

You can go to Action in the Add/Modify dialog and view the queues. You shouldn't encounter any errors.

Conclusion

All messages for the JMS queues (cqin, cqout, sqin, and sqout) should now be written to a dedicated folder per queue, created inside the parent jmsstore folder. Hence, we should be able to delete the store files (permanent and log) of a single queue at any point in time without impacting the other queues.
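The cleanup this layout makes possible can be sketched as a small script. `purge_queue_store` is a hypothetical helper that removes only the files directly inside one queue's directory. It assumes you run it somewhere the PVC is mounted, and that the corresponding JMS bundle has been stopped first, which is an assumption rather than something verified here.

```shell
#!/bin/sh
# Hypothetical helper: deletes the store files of one queue and leaves the
# sibling queue directories untouched.
# $1 = parent store directory (for example /jmsstore), $2 = queue name.
purge_queue_store() {
  store_root="$1"
  queue_name="$2"
  # Reject empty names and anything that could escape the store root.
  case "$queue_name" in
    ''|*/*|.|..) echo "invalid queue name: ${queue_name}" >&2; return 1 ;;
  esac
  queue_dir="${store_root}/${queue_name}"
  [ -d "$queue_dir" ] || { echo "no such directory: ${queue_dir}" >&2; return 1; }
  # Remove only regular files directly inside this queue's directory.
  find "$queue_dir" -maxdepth 1 -type f -exec rm -f {} +
}

# Usage from a shell where the PVC is mounted at /jmsstore:
#   purge_queue_store /jmsstore cqin
```

Only the cqin directory is touched in that usage example, so the stores for cqout, sqin, and sqout remain intact.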