Data integration is a hard challenge in every enterprise. Batch processing and Reverse ETL are common practices in a data warehouse, data lake, or lakehouse. Data inconsistency, high compute cost, and stale information are the consequences. This blog post introduces a new design pattern to solve these problems: the Shift Left Architecture enables a data mesh with real-time data products to unify transactional and analytical workloads with Apache Kafka, Flink, and Iceberg. Consistent information is processed with stream processing or ingested into Snowflake, Databricks, Google BigQuery, or any other analytics/AI platform to increase flexibility, reduce cost, and enable a data-driven company culture with faster time-to-market for building innovative software applications.

Data Products: The Foundation of a Data Mesh

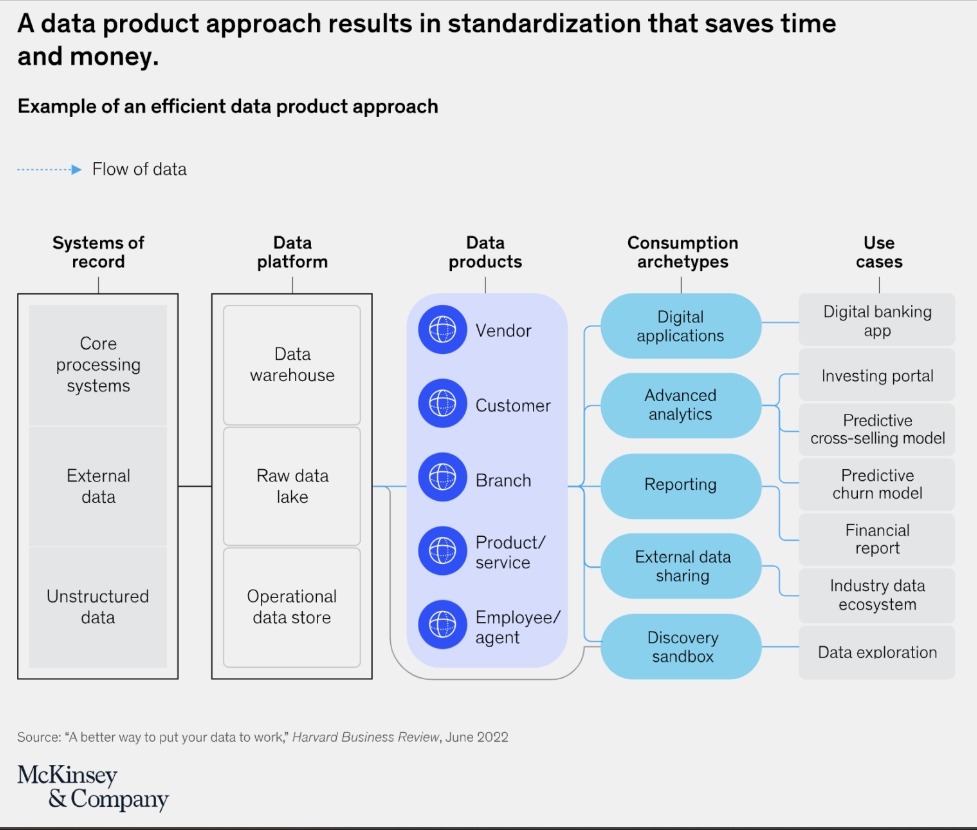

A data product is a crucial concept in the context of a data mesh. It represents a shift from traditional centralized data management to a decentralized approach.

McKinsey finds that “when companies instead manage data like a consumer product — be it digital or physical — they can realize near-term value from their data investments and pave the way for quickly getting more value tomorrow. Creating reusable data products and patterns for piecing together data technologies enables companies to derive value from data today and tomorrow”:

According to McKinsey, the benefits of the data product approach can be significant:

- New business use cases can be delivered as much as 90 percent faster.

- The total cost of ownership, including technology, development, and maintenance, can decline by 30 percent.

- The risk and data-governance burden can be reduced.

Data Product From a Technical Perspective

Here’s what a data product entails in a data mesh from a technical perspective:

- Decentralized ownership: Each data product is owned by a specific domain team. Applications are truly decoupled.

- Sourced from operational and analytical systems: Data products include information from any data source, including the most critical systems and analytics/reporting platforms.

- Self-contained and discoverable: A data product includes not only the raw data but also the associated metadata, documentation, and APIs.

- Standardized interfaces: Data products adhere to standardized interfaces and protocols, ensuring that they can be easily accessed and used by other data products and consumers within the data mesh.

- Data quality: Most use cases benefit from real-time data. A data product ensures data consistency across real-time and batch applications.

- Value-driven: The creation and maintenance of data products are driven by business value.

In essence, a data product in a data mesh framework transforms data into a managed, high-quality asset that is easily accessible and usable across an organization, fostering a more agile and scalable data ecosystem.
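To make these characteristics more tangible, here is a minimal Python sketch of how a data product could be described and registered. The `DataProduct` and `Catalog` classes, the field names, and the example values are hypothetical and only illustrate ownership, metadata, interfaces, and discoverability. They are not the API of any specific data mesh product.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class DataProduct:
    """Hypothetical descriptor for one data product in a data mesh."""
    name: str              # discoverable identifier
    owner_domain: str      # decentralized ownership: the responsible domain team
    schema: dict           # field name -> type name (part of the metadata)
    documentation: str     # human-readable description
    interfaces: tuple = ("stream", "batch", "request-response")
    sources: tuple = ()    # operational and analytical source systems


@dataclass
class Catalog:
    """Minimal in-memory registry so that data products are discoverable."""
    products: dict = field(default_factory=dict)

    def register(self, product: DataProduct) -> None:
        if product.name in self.products:
            raise ValueError(f"{product.name} is already registered")
        self.products[product.name] = product

    def find_by_domain(self, domain: str) -> list:
        return [p for p in self.products.values() if p.owner_domain == domain]


catalog = Catalog()
catalog.register(DataProduct(
    name="orders.v1",
    owner_domain="sales",
    schema={"order_id": "string", "amount": "double"},
    documentation="Curated order events, one record per confirmed order.",
    sources=("order-service-db", "crm"),
))
print([p.name for p in catalog.find_by_domain("sales")])
```

In a real deployment, the catalog role would typically be played by a schema registry or data catalog rather than an in-memory dictionary.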

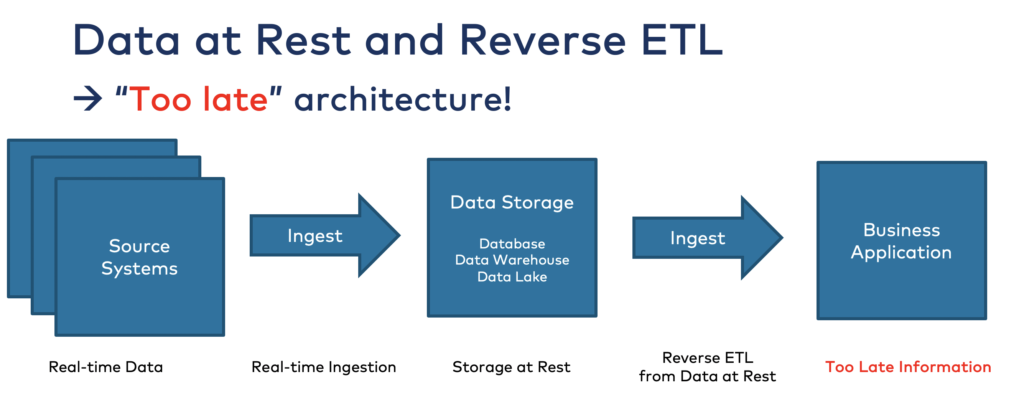

Anti-Pattern: Batch Processing and Reverse ETL

The “modern” data stack leverages traditional ETL tools or data streaming for ingestion into a data lake, data warehouse, or lakehouse. The consequence is a spaghetti architecture with various integration tools for batch and real-time workloads, mixing analytical and operational technologies:

Reverse ETL is required to get information out of the data lake into operational applications and other analytical tools. As I have written about previously, the combination of data lakes and Reverse ETL is an anti-pattern for the enterprise architecture, largely due to the economic and organizational inefficiencies Reverse ETL creates. Event-driven data products enable a much simpler and more cost-efficient architecture.

One key reason for the need for batch processing and Reverse ETL patterns is the common use of the Lambda architecture: a data processing architecture that handles real-time and batch processing separately using different layers. This still widely exists in enterprise architectures, not just for big data use cases like Hadoop/Spark and Kafka, but also for the integration with transactional systems like file-based legacy monoliths or Oracle databases.

In contrast, the Kappa architecture handles both real-time and batch processing using a single technology stack. Learn more about “Kappa replacing Lambda Architecture” in its own article. TL;DR: The Kappa architecture is possible by bringing even legacy technologies into an event-driven architecture using a data streaming platform. Change Data Capture (CDC) is one of the most common helpers for this.
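The core idea of Kappa can be sketched in a few lines of plain Python: one ordered event log and one piece of processing logic, used both for a full replay (the "batch" case) and for continuing from an offset (the "real-time" case). The event format below is a simplified, hypothetical stand-in for CDC records. A real setup would read from a Kafka topic instead of a list.

```python
# Hypothetical CDC events captured from a legacy database, in commit order.
event_log = [
    {"op": "insert", "key": "c1", "balance": 100},
    {"op": "update", "key": "c1", "balance": 80},
    {"op": "insert", "key": "c2", "balance": 50},
    {"op": "delete", "key": "c2"},
]


def apply_event(state: dict, event: dict) -> None:
    """Single processing logic shared by replay and live consumption."""
    if event["op"] == "delete":
        state.pop(event["key"], None)
    else:
        state[event["key"]] = event["balance"]


def consume(log: list, from_offset: int = 0, state=None) -> dict:
    """Apply all events starting at from_offset to the given (or a new) state."""
    state = {} if state is None else state
    for event in log[from_offset:]:
        apply_event(state, event)
    return state


# "Batch": a new consumer replays the full history.
batch_view = consume(event_log)

# "Real-time": a consumer that already processed two events continues at offset 2.
live_view = consume(event_log[:2])
live_view = consume(event_log, from_offset=2, state=live_view)

print(batch_view == live_view)
```

Because both consumers run the same logic against the same log, they end up with the same view. A Lambda architecture cannot guarantee this, since its two separate layers implement the logic twice.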

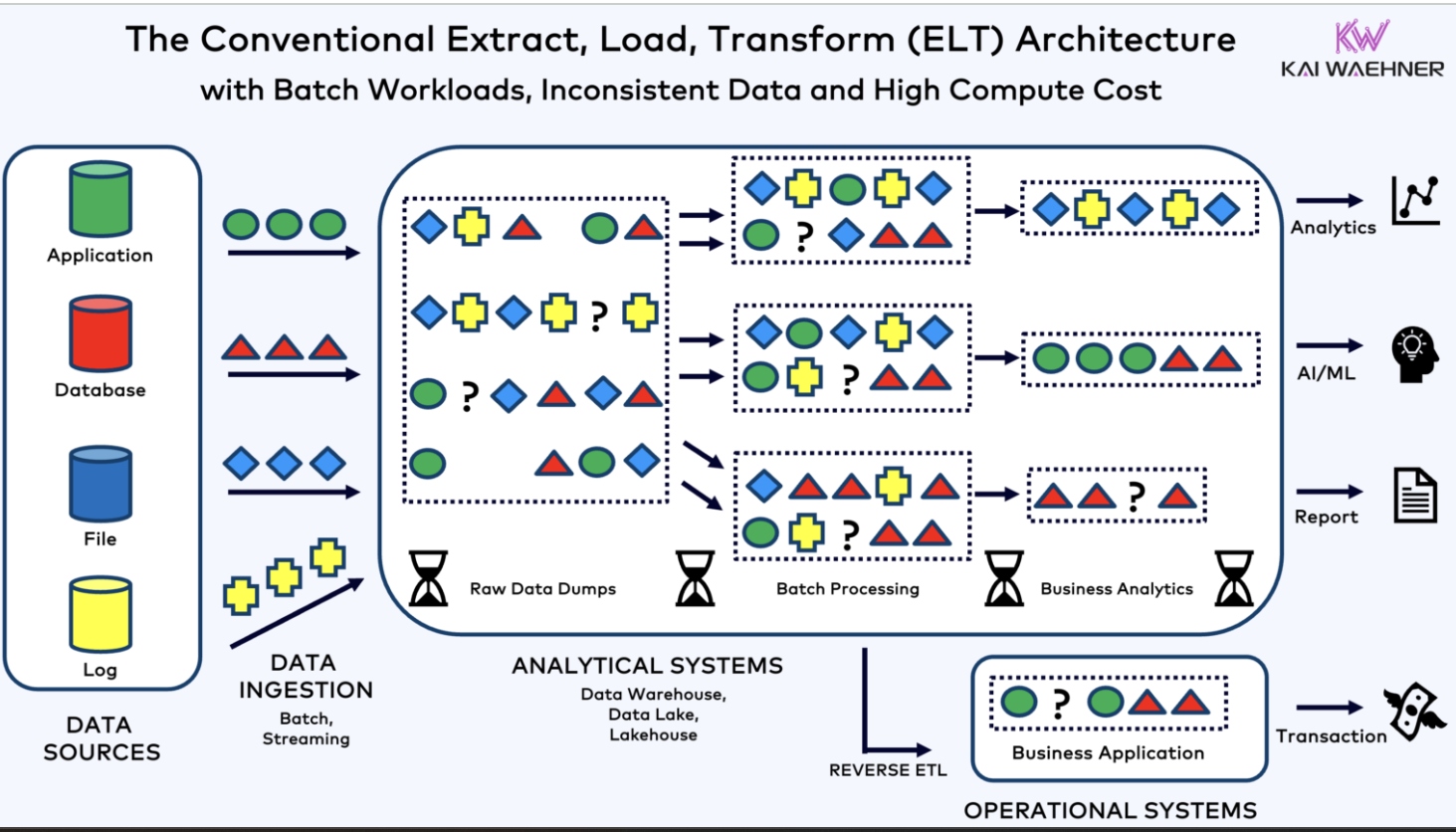

Traditional ELT in the Data Lake, Data Warehouse, Lakehouse

It seems like nobody does data warehousing anymore today. Everyone talks about a lakehouse merging a data warehouse and a data lake. Whatever term you use or prefer, the integration process these days looks like the following:

Just ingesting all the raw data into a data warehouse/data lake/lakehouse has several challenges:

- Slower updates: The longer the data pipeline and the more tools are used, the slower the update of the data product.

- Longer time-to-market: Development efforts are repeated because each business unit needs to do the same or similar processing steps again instead of consuming from a curated data product.

- Increased cost: The cash cow of analytics platforms is charging for compute, not storage. The more your business units use dbt, the better for the analytics SaaS provider.

- Repeating efforts: Most enterprises have multiple analytics platforms, including different data warehouses, data lakes, and AI platforms. ELT means doing the same processing again and again.

- Data inconsistency: Reverse ETL, Zero ETL, and other integration patterns ensure that your analytical and especially operational applications see inconsistent information. You cannot connect a real-time consumer or mobile app API to a batch layer and expect consistent results.
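The "increased cost" and "repeating efforts" points come down to simple arithmetic. The following back-of-the-envelope sketch compares repeating the same transformation in every platform with running it once upstream. All numbers and the abstract "compute units" are made up for illustration. The model also ignores ingestion and query cost, so treat it as a thinking aid, not a pricing calculator.

```python
def elt_compute_units(platforms: int, units_per_run: float, runs_per_day: int) -> float:
    """Every platform repeats the same transformation on the raw data."""
    return platforms * units_per_run * runs_per_day


def shift_left_compute_units(units_per_run: float, runs_per_day: int) -> float:
    """The transformation runs once upstream; platforms consume the curated result."""
    return units_per_run * runs_per_day


# Hypothetical scenario: 3 analytics platforms, 10 compute units per run, hourly runs.
repeated = elt_compute_units(platforms=3, units_per_run=10, runs_per_day=24)
once = shift_left_compute_units(units_per_run=10, runs_per_day=24)

print(f"ELT in every platform: {repeated} units/day")
print(f"Processed once upstream: {once} units/day")
print(f"Factor: {repeated / once:.1f}x")
```

In this simplified model, the transformation cost grows linearly with the number of platforms that repeat the same work.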

Data Integration, Zero ETL, and Reverse ETL With Kafka, Snowflake, Databricks, BigQuery, etc.

These disadvantages are real! I have not met a single customer in the past months who disagreed and told me these challenges do not exist. To learn more, check out my blog series about data streaming with Apache Kafka and analytics with Snowflake:

- Snowflake Integration Patterns: Zero ETL and Reverse ETL vs. Apache Kafka

- Snowflake Data Integration Options for Apache Kafka (including Iceberg)

- Apache Kafka + Flink + Snowflake: Cost Efficient Analytics and Data Governance

The blog series can be applied to any other analytics engine. It is a worthwhile read, no matter if you use Snowflake, Databricks, Google BigQuery, or a combination of several analytics and AI platforms.

The solution for this data mess creating data inconsistency, outdated information, and ever-growing cost is the Shift Left Architecture...

Shift Left to Data Streaming for Operational AND Analytical Data Products

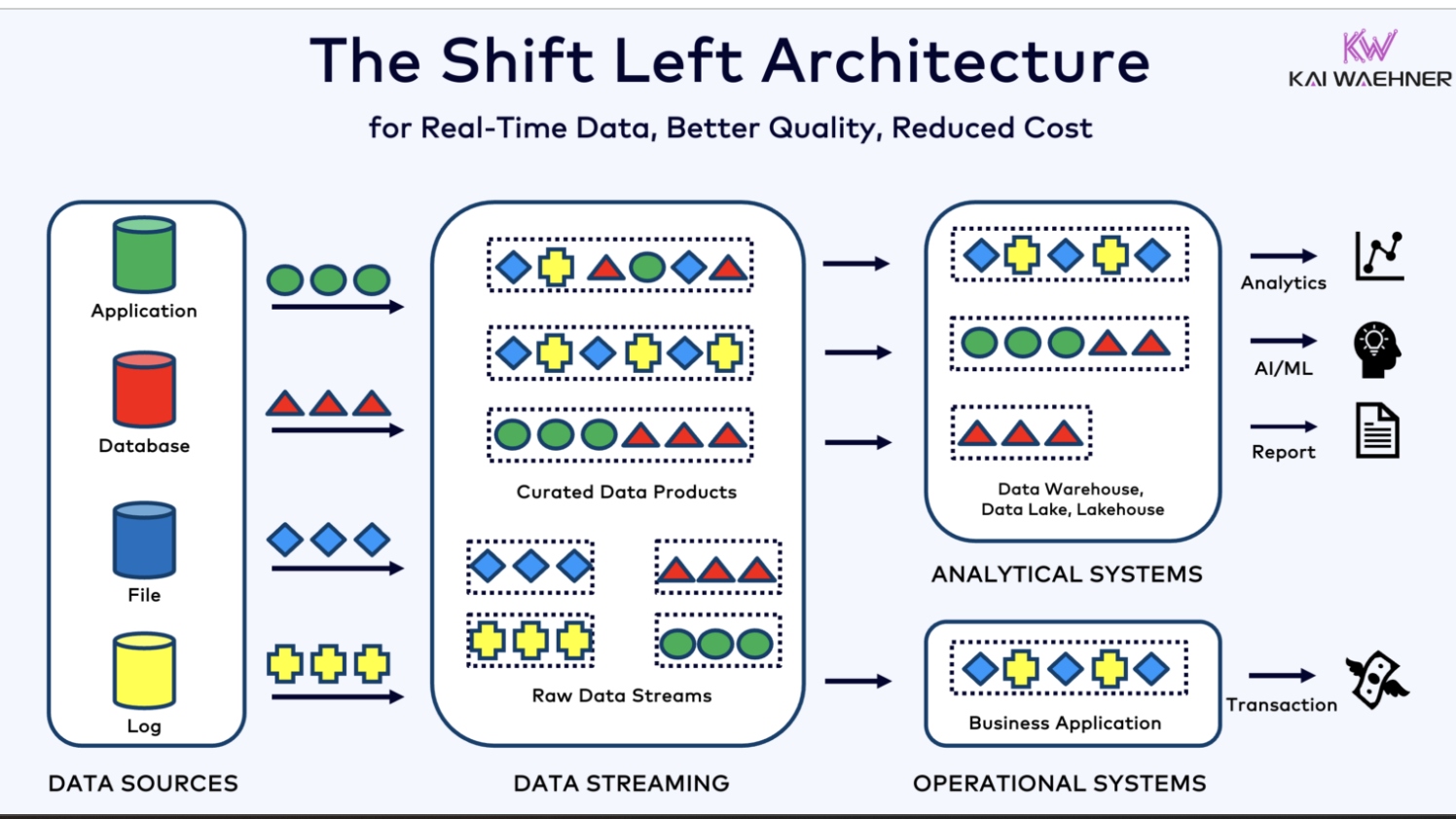

The Shift Left Architecture enables consistent information from reliable, scalable data products, reduces the compute cost, and allows much faster time-to-market for operational and analytical applications with any kind of technology (Java, Python, iPaaS, Lakehouse, SaaS, “you-name-it”) and communication paradigm (real-time, batch, request-response API):

Shifting the data processing to the data streaming platform enables you to:

- Capture and stream data continuously when the event is created.

- Create data contracts for downstream compatibility and promotion of trust with any application or analytics/AI platform.

- Continuously cleanse, curate, and quality check data upstream with data contracts and policy enforcement.

- Shape data into multiple contexts on the fly to maximize reusability (and still allow downstream consumers to choose between raw and curated data products).

- Build trustworthy data products that are instantly valuable, reusable, and consistent for any transactional and analytical consumer (no matter if consumed in real-time or later via batch or request-response API).
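Here is a minimal sketch of what "data contracts and policy enforcement" upstream could look like, written in plain Python so it runs without a Kafka cluster. The `DataContract` class, the rule names, and the sample events are hypothetical. In practice, a schema registry and a stream processor such as Flink would take over these roles.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DataContract:
    """Hypothetical contract: required fields with types plus named policy rules."""
    fields: dict   # field name -> expected Python type
    rules: dict    # rule name -> predicate over the event

    def violations(self, event: dict) -> list:
        problems = []
        for name, expected in self.fields.items():
            if name not in event:
                problems.append(f"missing field: {name}")
            elif not isinstance(event[name], expected):
                problems.append(f"wrong type: {name}")
        if not problems:  # evaluate policies only on structurally valid events
            for rule_name, predicate in self.rules.items():
                if not predicate(event):
                    problems.append(f"policy failed: {rule_name}")
        return problems


order_contract = DataContract(
    fields={"order_id": str, "amount": float, "currency": str},
    rules={
        "amount_positive": lambda e: e["amount"] > 0,
        "currency_iso": lambda e: len(e["currency"]) == 3,
    },
)


def curate(events, contract):
    """Split a stream into curated events and rejected (event, reasons) pairs."""
    curated, rejected = [], []
    for event in events:
        problems = contract.violations(event)
        if problems:
            rejected.append((event, problems))
        else:
            curated.append(event)
    return curated, rejected


raw = [
    {"order_id": "o-1", "amount": 19.99, "currency": "EUR"},
    {"order_id": "o-2", "amount": -5.0, "currency": "EUR"},
    {"order_id": "o-3", "currency": "USD"},
]
curated, rejected = curate(raw, order_contract)
print([e["order_id"] for e in curated])
for event, reasons in rejected:
    print(event["order_id"], reasons)
```

Downstream consumers can still choose between the raw stream and the curated one. The rejected events would typically go to a dead letter queue for inspection instead of silently disappearing.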

While shifting to the left with some workloads, it is crucial to understand that developers, data engineers, and data scientists can usually still use their favorite interfaces like SQL or a programming language such as Java or Python.

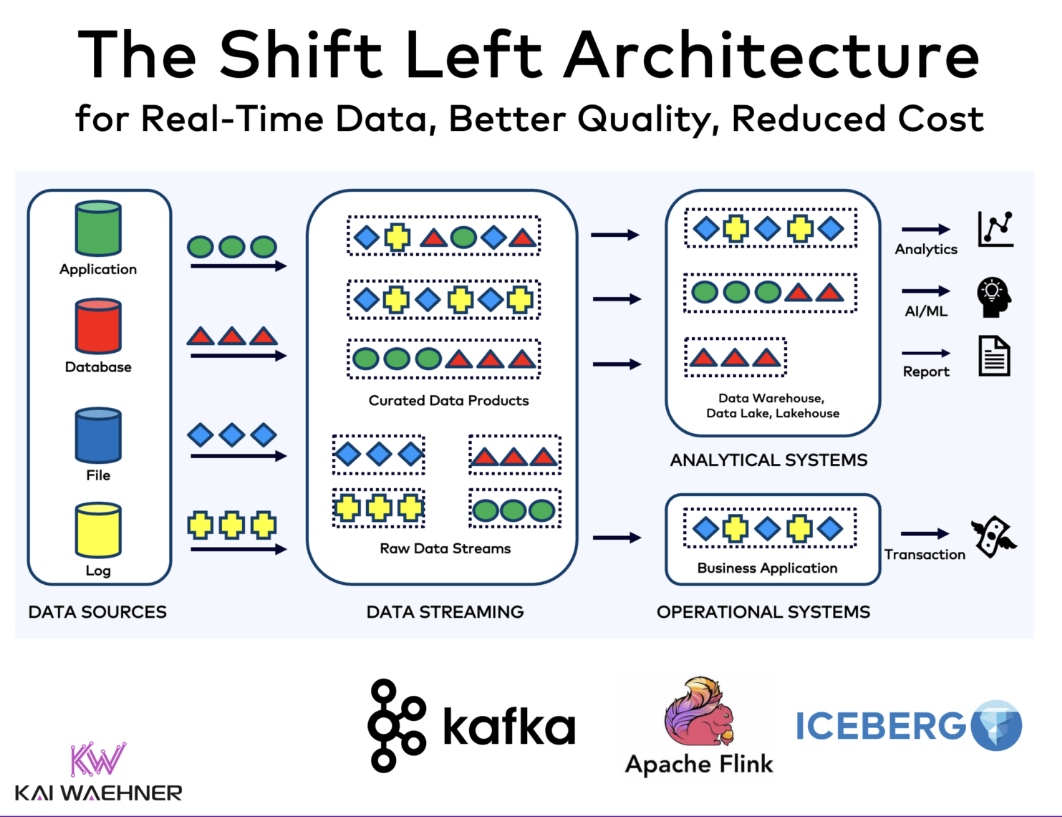

Shift Left Architecture With Apache Kafka, Flink, and Iceberg

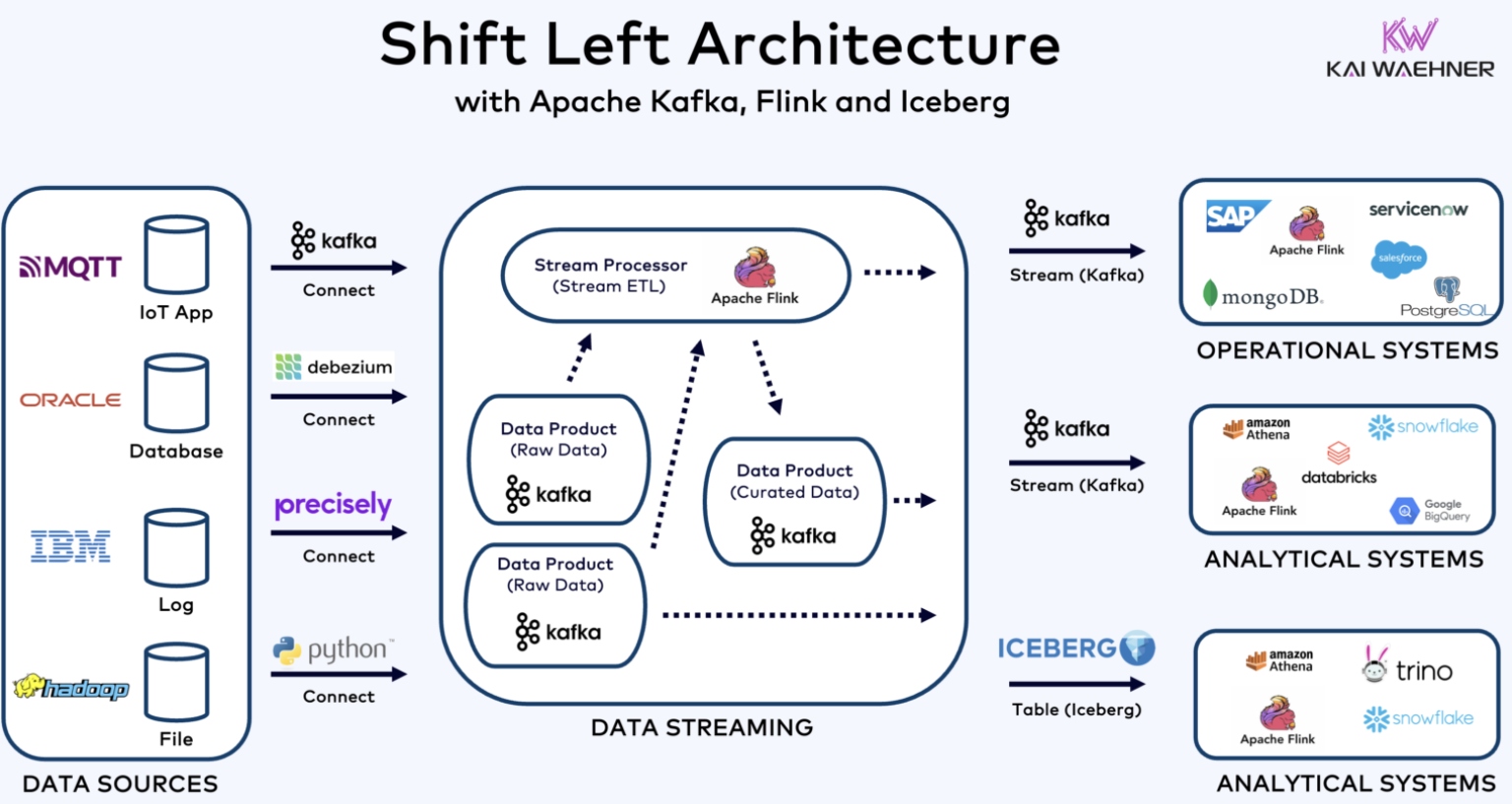

Data streaming is the core foundation of the Shift Left Architecture to enable reliable, scalable real-time data products with good data quality. The following architecture shows how Apache Kafka and Flink connect any data source, curate data sets (aka stream processing/streaming ETL), and share the processed events with any operational or analytical data sink:

The architecture shows an Apache Iceberg table as an alternative consumer. Apache Iceberg is an open table format designed for managing large-scale datasets in a highly performant and reliable way, providing ACID transactions, schema evolution, and partitioning capabilities. It optimizes data storage and query performance, making it ideal for data lakes and complex analytical workflows. Iceberg is evolving into the de facto standard with support from most leading vendors in the cloud and data management space, including AWS, Azure, GCP, Snowflake, Confluent, and many more coming (like Databricks after its acquisition of Tabular).

From the data streaming perspective, the Iceberg table is just a button click away from the Kafka Topic and its Schema (using Confluent’s Tableflow; I am sure other vendors will follow soon with their own solutions). The big advantage of Iceberg is that data needs to be stored only once (typically in a cost-efficient and scalable object store like Amazon S3). Each downstream application can consume the data with its own technology without any need for additional coding or connectors. This includes data lakehouses like Snowflake or Databricks AND data streaming engines like Apache Flink.

Video: Shift Left Architecture

I summarized the above architectures and examples for the Shift Left Architecture in a short ten-minute video if you prefer listening to content:

Apache Iceberg: The New De Facto Standard for Lakehouse Table Format?

Apache Iceberg is such a huge topic and a real game changer for enterprise architectures, end users, and cloud vendors. I will write another dedicated blog post, including interesting topics such as:

- Confluent’s product strategy to embed Iceberg tables into its data streaming platform

- Snowflake’s open-source Iceberg project Polaris

- Databricks’ acquisition of Tabular (the company founded by the original creators of Apache Iceberg) and the relation to Delta Lake and open-sourcing its Unity Catalog

- The (expected) future of table format standardization, catalog wars, and other additional solutions like Apache Hudi or Apache XTable for omnidirectional interoperability across lakehouse table formats

Business Value of the Shift Left Architecture

Apache Kafka is the de facto standard for data streaming when building a Kappa architecture. The Data Streaming Landscape shows various open-source technologies and cloud vendors. Data streaming is a new software category. Forrester published “The Forrester Wave™: Streaming Data Platforms, Q4 2023”. The leaders are Microsoft, Google, and Confluent, followed by Oracle, Amazon, Cloudera, and a few others.

Building data products further left in the enterprise architecture with a data streaming platform and technologies such as Kafka and Flink creates huge business value:

- Cost reduction: Reducing the compute cost in one or even multiple data platforms (data lake, data warehouse, lakehouse, AI platform, etc.).

- Less development effort: Streaming ETL, data curation, and data quality control are already executed instantly (and only once) after the event creation.

- Faster time to market: Focus on new business logic instead of doing repeated ETL jobs.

- Flexibility: Best-of-breed approach for choosing the best and/or most cost-efficient technology per use case.

- Innovation: Business units can choose any programming language, tool, or SaaS to do real-time or batch consumption from data products to try and fail or scale fast.

The unification of transactional and analytical workloads is finally possible. It enables good data quality, faster time to market for innovation, and reduced cost of the entire data pipeline. Data consistency matters across all applications and databases: A Kafka Topic with a data contract (= Schema with policies) brings data consistency out of the box!

What does your data architecture look like today? Does the Shift Left Architecture make sense to you? What is your strategy to get there? Let’s connect on LinkedIn and discuss it!