A specter is haunting modern development: as our architecture has grown more mature, developer velocity has slowed. A primary cause of lost developer velocity is a decline in testing: in testing speed, accuracy, and reliability. Duplicating environments for microservices has become a common practice in the quest for consistent testing and production setups. However, this approach often incurs significant infrastructure costs that can affect both budget and efficiency.

Testing services in isolation isn’t usually effective; we want to test these components together.

High Costs of Environment Duplication

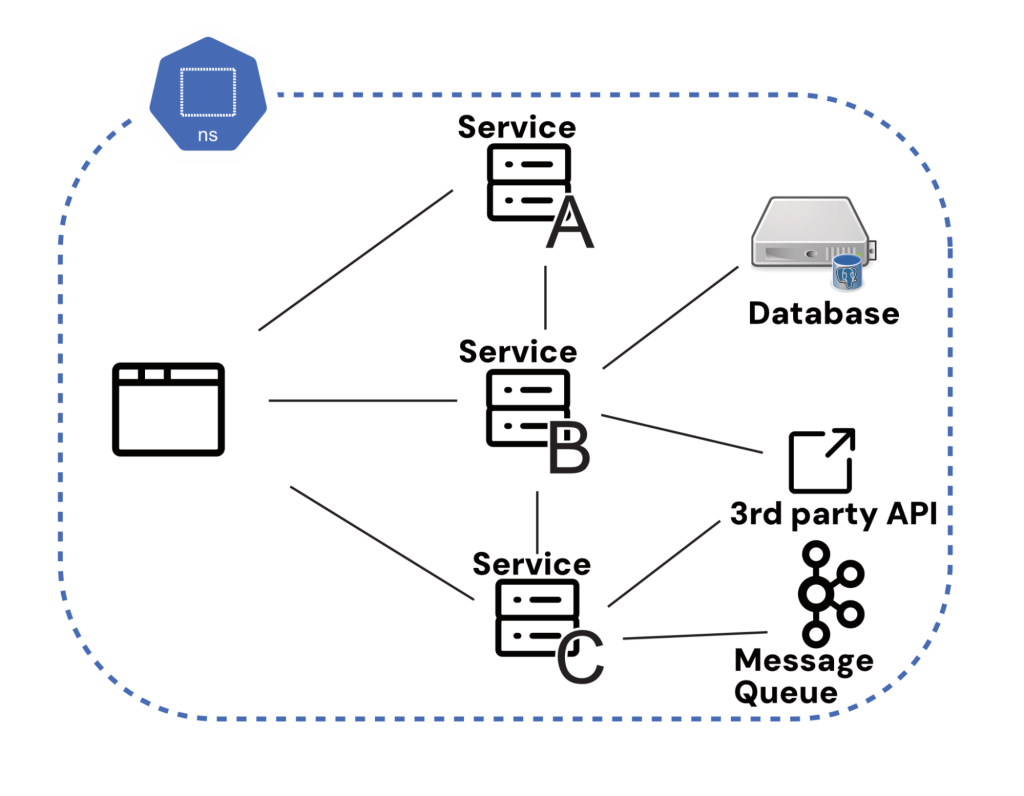

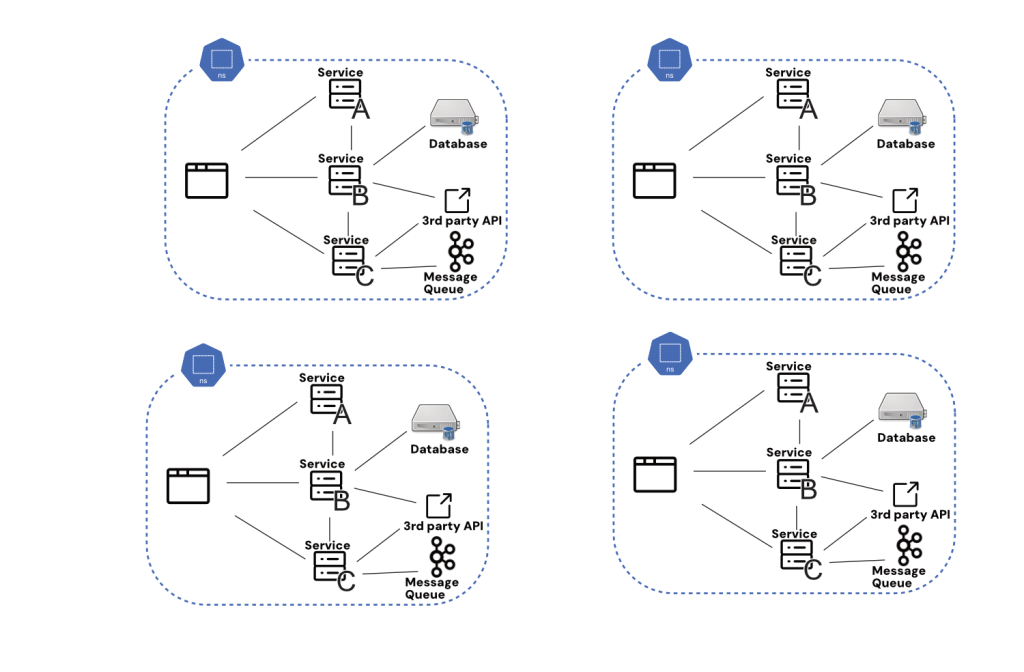

Duplicating environments involves replicating entire setups, including all microservices, databases, and external dependencies. This approach has the advantage of being technically quite straightforward, at least at first blush. Starting with something like a namespace, we can use modern container orchestration to replicate services and configuration wholesale.

The problem, however, comes in the actual implementation.

For example, a major FinTech company was reported to have spent over $2 million annually just on cloud costs. The company spun up many environments for previewing changes and for developers to test them, each mirroring the production setup. The costs included server provisioning, storage, and network configuration, all of which added up considerably. Each team needed its own replica and expected it to be available most of the time. Further, they didn’t want to wait through long startup times, so in the end, all these environments were running 24/7 and racking up hosting costs the whole time.
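To see why always-on replicas add up, here is a back-of-the-envelope cost model. It is only a sketch: the function name, its parameters, and every figure in the usage example are hypothetical and are not taken from the company described above.

```python
def annual_environment_cost(
    environments: int,
    services_per_environment: int,
    hourly_cost_per_service: float,
    hours_per_day: float = 24.0,
) -> float:
    """Rough yearly hosting cost for a fleet of replicated environments.

    Assumes every service in every environment costs the same flat hourly
    rate, which is a simplification made for illustration.
    """
    service_instances = environments * services_per_environment
    return service_instances * hourly_cost_per_service * hours_per_day * 365


if __name__ == "__main__":
    # Hypothetical inputs: 20 team environments, 50 services each, $0.10/hour.
    always_on = annual_environment_cost(20, 50, 0.10)
    working_hours_only = annual_environment_cost(20, 50, 0.10, hours_per_day=8)
    print(f"always on:          ${always_on:,.0f} per year")
    print(f"working hours only: ${working_hours_only:,.0f} per year")
```

The model makes the two cost levers explicit: the bill grows with the product of environments and services, and keeping everything warm around the clock multiplies it by three compared with an eight-hour day.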

While namespacing seems like a clever solution to environment replication, it just borrows the same complexity and cost issues from replicating environments wholesale.

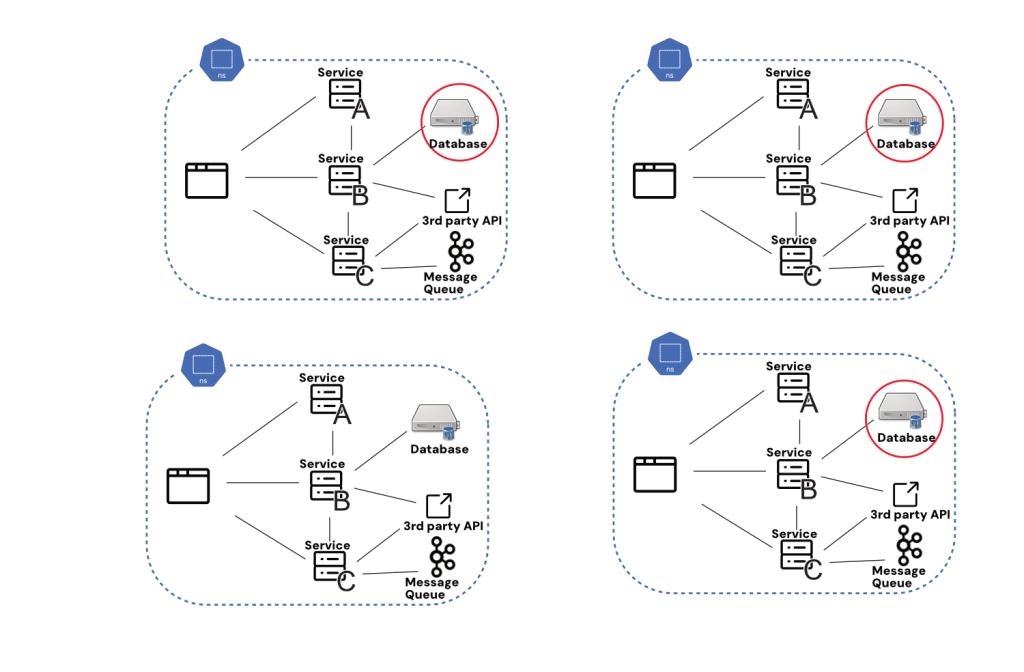

Synchronization Problems

Synchronization is one challenge that rears its head when trying to implement replicated testing environments at scale. Essentially, for all internal services, how sure are we that each replicated environment is running the most up-to-date version of every service? This sounds like an edge case or a small concern until we remember that the whole point of this setup was to make testing highly accurate. Finding out only when pushing to production that recent updates to Service C have broken my changes to Service B is more than frustrating; it calls the whole process into question.
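The question of whether a replica is up to date can be made concrete with a small drift check. This is a minimal sketch: the `find_version_drift` helper and the idea of comparing two name-to-version mappings are assumptions for illustration, and how those mappings get collected (from a registry, a deploy tool, and so on) is left out.

```python
from typing import Dict, Optional, Tuple


def find_version_drift(
    latest: Dict[str, str],
    deployed: Dict[str, str],
) -> Dict[str, Tuple[Optional[str], str]]:
    """Return services whose deployed version differs from the latest one.

    Each entry maps a service name to (deployed_version, latest_version).
    A deployed_version of None means the service is missing from the replica.
    """
    drift: Dict[str, Tuple[Optional[str], str]] = {}
    for service, latest_version in latest.items():
        running = deployed.get(service)
        if running != latest_version:
            drift[service] = (running, latest_version)
    return drift


if __name__ == "__main__":
    # Hypothetical versions for three services in one replicated environment.
    latest = {"service-a": "1.4.0", "service-b": "2.1.0", "service-c": "3.0.2"}
    deployed = {"service-a": "1.4.0", "service-b": "2.0.9"}
    for name, (running, wanted) in find_version_drift(latest, deployed).items():
        print(f"{name}: running {running}, latest is {wanted}")
```

A check like this only reports drift. Someone, or something, still has to run it for every replica and act on the result, which is where the overhead comes from.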

Again, there seem to be technical solutions to this problem: Why don’t we just grab the latest version of each service at startup? The issue here is the impact on velocity: If we have to wait for a whole clone to be pulled, configured, and then started every time we want to test, we’re quickly talking about many minutes or even hours of waiting before our supposedly isolated replica testing environment is ready to use.

Who’s making sure individual resources are synced?

This problem, like the others mentioned here, is specific to scale: If you have a small cluster that can be cloned and started in two minutes, very little of this article applies to you. But if that’s the case, it’s likely you can sync all your services’ states by sending a quick Slack message to your single two-pizza team.

Third-party dependencies are another wrinkle with multiple testing environments. Secrets-handling policies often mean that third-party dependencies can’t have all their authentication details on multiple replicated testing environments; as a result, these third-party dependencies can’t be tested at an early stage. This puts pressure back on staging, as it is the only point where a real end-to-end test can happen.

Maintenance Overhead

Managing multiple environments also brings a considerable maintenance burden. Each environment needs to be updated, patched, and monitored independently, leading to increased operational complexity. This can strain IT resources, as teams must ensure that each environment stays in sync with the others, further escalating costs.

A notable case involved a large enterprise that found its duplicated environments increasingly challenging to maintain. Testing environments became so divergent from production that it led to significant issues when deploying updates. The company experienced frequent failures because changes tested in one environment didn’t accurately reflect the state of the production system, leading to costly delays and rework. The result was small teams “going rogue,” pushing their changes straight to staging, and only checking whether they worked there. Not only were the replicated environments abandoned, which hurt staging’s reliability, but the platform team was also still paying to run environments that no one was using.

Scalability Challenges

As applications grow, the number of environments may need to increase to accommodate various stages of development, testing, and production. Scaling these environments can become prohibitively expensive, especially when dealing with high volumes of microservices. The infrastructure required to support numerous replicated environments can quickly outpace budget constraints, making it challenging to maintain cost-effectiveness.

For instance, a tech company that initially managed its environments by duplicating production setups found that as its service portfolio expanded, the costs associated with scaling these environments became unsustainable. The company struggled to keep up with the infrastructure demands, leading to a reassessment of its strategy.

Alternative Strategies

Given the high costs associated with environment duplication, it’s worth considering alternative strategies. One approach is dynamic environment provisioning, where environments are created on demand and torn down when no longer needed. This method can help optimize resource utilization and reduce costs by avoiding the need for permanently duplicated setups. It keeps costs down but still comes with the trade-off of sending some testing to staging anyway. That’s because there are shortcuts we must take to spin up these dynamic environments, like using mocks for third-party services. This can put us back at square one in terms of testing reliability, that is, how well our tests reflect what will happen in production.
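As a rough illustration of the create-on-demand, tear-down-after pattern, the sketch below wraps a Kubernetes namespace in a Python context manager. Kubernetes and `kubectl` are assumptions here (the article only speaks of namespaces and container orchestration), and `ephemeral_namespace`, `run_command`, and the namespace name are hypothetical. Deploying services into the namespace is deliberately left out.

```python
import subprocess
from contextlib import contextmanager
from typing import Callable, Iterator, List

Runner = Callable[[List[str]], None]


def run_command(args: List[str]) -> None:
    """Default runner: execute the command and raise if it fails."""
    subprocess.run(args, check=True)


@contextmanager
def ephemeral_namespace(name: str, run: Runner = run_command) -> Iterator[str]:
    """Create a namespace for one test run and always delete it afterwards.

    The runner is injectable so the lifecycle can be exercised without a
    real cluster.
    """
    run(["kubectl", "create", "namespace", name])
    try:
        yield name
    finally:
        # Deletion runs even when the tests inside the block raise, so a
        # failed run does not leave a paid-for environment behind.
        run(["kubectl", "delete", "namespace", name, "--wait=false"])


if __name__ == "__main__":
    # Dry run with a printing runner instead of a real cluster.
    def echo(args: List[str]) -> None:
        print(" ".join(args))

    with ephemeral_namespace("preview-example", run=echo) as namespace:
        print(f"running tests in {namespace}")
```

The `try`/`finally` is the part that matters for cost: an environment that exists only for the duration of the `with` block cannot quietly run 24/7.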

At this point, it’s reasonable to consider other methods that use technical fixes to make staging and other near-to-production environments easier to test on. One such method is request isolation, a model for letting multiple tests occur simultaneously in the same shared environment.
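One way to picture request isolation is routing by request header: a test request carries an identifier, and only the services under test are swapped for that request while everything else uses the shared baseline. The sketch below assumes that design. The `x-sandbox-id` header name, the `resolve_target` function, and the addresses are all hypothetical, and real systems would do this routing in a proxy or service mesh rather than in application code.

```python
from typing import Mapping

ROUTING_HEADER = "x-sandbox-id"  # hypothetical header name


def resolve_target(
    service: str,
    headers: Mapping[str, str],
    baseline: Mapping[str, str],
    sandboxes: Mapping[str, Mapping[str, str]],
) -> str:
    """Pick the address that should serve this request for `service`.

    Requests tagged with a known sandbox ID go to that sandbox's version of
    the service if it has one; everything else goes to the shared baseline.
    """
    sandbox_id = headers.get(ROUTING_HEADER)
    overrides = sandboxes.get(sandbox_id, {}) if sandbox_id else {}
    return overrides.get(service, baseline[service])


if __name__ == "__main__":
    # Hypothetical shared environment with one developer testing Service B.
    baseline = {"service-a": "service-a.staging:80", "service-b": "service-b.staging:80"}
    sandboxes = {"dev-42": {"service-b": "service-b-dev-42.staging:80"}}

    tagged = {ROUTING_HEADER: "dev-42"}
    print(resolve_target("service-b", tagged, baseline, sandboxes))
    print(resolve_target("service-a", tagged, baseline, sandboxes))
    print(resolve_target("service-b", {}, baseline, sandboxes))
```

Under this design, each test only adds the services it changes, so many tests can share one environment without each needing a full copy of it.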

Conclusion: A Cost That Doesn’t Scale

While duplicating environments might seem like a practical solution for ensuring consistency in microservices, the infrastructure costs involved can be significant. By exploring alternative strategies such as dynamic provisioning and request isolation, organizations can better manage their resources and mitigate the financial impact of maintaining multiple environments. Real-world examples illustrate the challenges and costs associated with traditional duplication methods, underscoring the need for more efficient approaches in modern software development.