Snowflake is a powerful cloud-based data warehousing platform known for its scalability and flexibility. To fully leverage its capabilities and ensure efficient data processing, it is essential to optimize query performance.

Understanding Snowflake Architecture

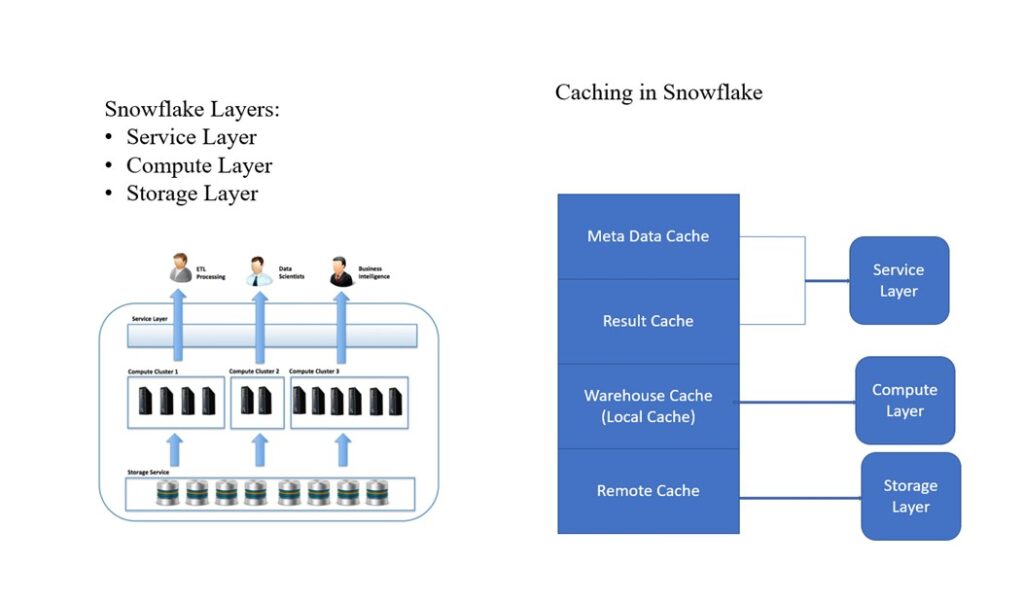

Let’s briefly cover Snowflake’s architecture before we get into data modeling and optimization techniques. Snowflake’s architecture consists of three main layers:

- Storage layer: Where data is stored in a compressed, columnar format

- Compute layer: Provides the computational resources (virtual warehouses) for querying and processing data

- Cloud services layer: Manages metadata, security, and query optimization

Effective data modeling is a critical design activity for optimizing data storage, query performance, and overall data management in Snowflake. Snowflake’s cloud architecture lets us design and implement various data modeling methodologies, each offering distinct benefits depending on the business requirements.

This article explores advanced data modeling techniques, focusing on the star schema, the snowflake schema, and hybrid approaches.

What Is Data Modeling?

Data modeling structures data in a way that supports efficient storage and retrieval. Snowflake is a cloud-based data warehouse that provides a scalable platform for implementing complex data models that serve diverse analytical needs. Effective data modeling ensures optimized performance, easy maintenance, and streamlined data processing.

What Is a Star Schema?

The star schema is one of the most widely used data modeling techniques in data warehousing. It consists of a central fact table linked to multiple dimension tables. The star schema is known for its simplicity and ease of use, which makes it a preferred choice for many analytical applications.

Structure of a Star Schema

- Fact table: Contains quantitative data and metrics. It records transactions or events and typically includes foreign keys to the dimension tables.

- Dimension tables: Contain descriptive attributes related to the fact data. They provide the context for the facts you analyze and help with filtering, grouping, and aggregating data.

Example

Let’s analyze sales data, starting with the fact table:

```sql
CREATE OR REPLACE TABLE sales (
    sale_id INT,
    product_id INT,
    customer_id INT,
    date_id DATE,
    amount DECIMAL(12, 2)  -- without a scale, DECIMAL defaults to NUMBER(38, 0)
);
```

- Dimension tables: products, customers, dates

```sql
CREATE OR REPLACE TABLE products (
    product_id INT,
    product_name STRING,
    category STRING
);

CREATE OR REPLACE TABLE customers (
    customer_id INT,
    customer_name STRING,
    city STRING
);

CREATE OR REPLACE TABLE dates (
    date_id DATE,
    year INT,
    month INT,
    day INT
);
```

- To find the total sales amount by product category:

```sql
SELECT p.category, SUM(s.amount) AS total_sales
FROM sales s
JOIN products p ON s.product_id = p.product_id
GROUP BY p.category;
```

What Is a Snowflake Schema?

The snowflake schema is a normalized form of the star schema. It further splits dimension tables into multiple related, hierarchical tables, which reduces data redundancy and improves data integrity.

Structure of a Snowflake Schema

- Fact table: Same as in the star schema.

- Normalized dimension tables: Dimension tables are split into sub-dimension tables, which gives the schema its snowflake shape.

Example

The star schema example above is expanded into a snowflake schema as follows:

- Fact table: sales (same as in the star schema)

- Dimension tables:

```sql
CREATE OR REPLACE TABLE products (
    product_id INT,
    product_name STRING,
    category_id INT
);

CREATE OR REPLACE TABLE product_categories (
    category_id INT,
    category_name STRING
);

CREATE OR REPLACE TABLE customers (
    customer_id INT,
    customer_name STRING,
    city_id INT
);

CREATE OR REPLACE TABLE cities (
    city_id INT,
    city_name STRING
);

CREATE OR REPLACE TABLE dates (
    date_id DATE,
    year INT,
    month INT,
    day INT
);
```

- To find the total sales amount by city:

```sql
SELECT c.city_name, SUM(s.amount) AS total_sales
FROM sales s
JOIN customers cu ON s.customer_id = cu.customer_id
JOIN cities c ON cu.city_id = c.city_id
GROUP BY c.city_name;
```

Hybrid Approaches

Hybrid data modeling combines elements of both the star and snowflake schemas to balance performance and normalization. This approach helps address the limitations of each schema type and is useful in complex data environments.

Structure of a Hybrid Schema

- Fact table: Same as in the star and snowflake schemas.

- Dimension tables: Some dimensions are normalized (snowflake style) while others are denormalized (star style) to balance normalization and performance.

Example

Combining aspects of both schemas:

- Fact table: sales

- Dimension tables:

```sql
CREATE OR REPLACE TABLE products (
    product_id INT,
    product_name STRING,
    category STRING  -- denormalized: category name stored inline
);

CREATE OR REPLACE TABLE product_categories (
    category_id INT,
    category_name STRING
);

CREATE OR REPLACE TABLE customers (
    customer_id INT,
    customer_name STRING,
    city_id INT  -- normalized: city details live in cities
);

CREATE OR REPLACE TABLE cities (
    city_id INT,
    city_name STRING
);

CREATE OR REPLACE TABLE dates (
    date_id DATE,
    year INT,
    month INT,
    day INT
);
```

In this hybrid approach, the products dimension stores the category name inline (denormalized, star style) for faster category-level analysis, while product_categories and the customers-to-cities relationship remain normalized (snowflake style).

- To analyze total sales by product category:

```sql
SELECT p.category, SUM(s.amount) AS total_sales
FROM sales s
JOIN products p ON s.product_id = p.product_id
GROUP BY p.category;
```

Best Practices for Data Modeling in Snowflake

- Understand the data and query patterns: Choose the schema that best fits your organization’s data and typical query patterns.

- Optimize for performance: Denormalize where query speed matters most, and normalize where reducing redundancy and improving data integrity matter more.

- Leverage Snowflake features: Capabilities such as clustering keys and materialized views can improve performance and help manage large datasets efficiently.

Advanced data modeling in Snowflake involves selecting the appropriate schema methodology (star, snowflake, or hybrid) to optimize data storage and improve query performance.

Each schema has its own distinct advantages:

- The star schema for simplicity and speed

- The snowflake schema for data integrity and reduced redundancy

- Hybrid schemas for balancing both aspects
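To see this trade-off on a laptop, here is a minimal sketch that uses Python’s built-in sqlite3 module as a local stand-in for Snowflake. The table names star_products and sf_products and all sample rows are hypothetical. Both layouts return the same category totals, the snowflake layout needs one extra join to reach the category name, and renaming a category touches every matching product row in the star layout but a single row in the snowflake layout.

```python
import sqlite3

# Hypothetical schema: star_products keeps the category inline (star style);
# sf_products points to a separate product_categories table (snowflake style).
SCHEMA = """
CREATE TABLE sales (sale_id INTEGER, product_id INTEGER, amount INTEGER);
CREATE TABLE star_products (product_id INTEGER, product_name TEXT, category TEXT);
CREATE TABLE sf_products (product_id INTEGER, product_name TEXT, category_id INTEGER);
CREATE TABLE product_categories (category_id INTEGER, category_name TEXT);
"""

def build() -> sqlite3.Connection:
    """Create an in-memory database with tiny made-up data."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(SCHEMA)
    conn.executemany("INSERT INTO sales VALUES (?, ?, ?)",
                     [(1, 10, 25), (2, 11, 40), (3, 12, 15), (4, 10, 30)])
    conn.executemany("INSERT INTO star_products VALUES (?, ?, ?)",
                     [(10, "Pen", "Office"), (11, "Desk", "Furniture"), (12, "Stapler", "Office")])
    conn.executemany("INSERT INTO sf_products VALUES (?, ?, ?)",
                     [(10, "Pen", 1), (11, "Desk", 2), (12, "Stapler", 1)])
    conn.executemany("INSERT INTO product_categories VALUES (?, ?)",
                     [(1, "Office"), (2, "Furniture")])
    return conn

STAR_QUERY = """
SELECT p.category, SUM(s.amount) AS total_sales
FROM sales s
JOIN star_products p ON s.product_id = p.product_id
GROUP BY p.category ORDER BY p.category
"""

# The snowflake layout needs one more join to reach the category name.
SNOWFLAKE_QUERY = """
SELECT c.category_name, SUM(s.amount) AS total_sales
FROM sales s
JOIN sf_products p ON s.product_id = p.product_id
JOIN product_categories c ON p.category_id = c.category_id
GROUP BY c.category_name ORDER BY c.category_name
"""

def rename_office(conn: sqlite3.Connection) -> tuple[int, int]:
    """Rename the 'Office' category in both layouts; return rows updated in each."""
    star = conn.execute("UPDATE star_products SET category = 'Stationery' "
                        "WHERE category = 'Office'").rowcount
    snow = conn.execute("UPDATE product_categories SET category_name = 'Stationery' "
                        "WHERE category_name = 'Office'").rowcount
    return star, snow

if __name__ == "__main__":
    conn = build()
    print(conn.execute(STAR_QUERY).fetchall())       # [('Furniture', 40), ('Office', 70)]
    print(conn.execute(SNOWFLAKE_QUERY).fetchall())  # [('Furniture', 40), ('Office', 70)]
    print(rename_office(conn))                       # (2, 1)
```

The redundant copies of "Office" in the star layout are what make its category queries cheaper to write and its updates more expensive to keep consistent.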

By understanding and applying these methodologies effectively, organizations can achieve efficient data management and derive valuable insights from their data.

Performance Optimization in Snowflake

To make full use of Snowflake’s capabilities and ensure efficient data processing, it is essential to optimize query performance. This section covers key techniques and best practices for doing so, focusing on clustering keys, caching strategies, and query tuning.

What Are Clustering Keys?

Clustering keys determine how rows are physically co-located in a table’s micro-partitions, which can significantly impact query performance on large datasets. A good clustering key lets Snowflake skip (prune) micro-partitions that cannot contain matching rows, reducing the amount of data scanned. This is most helpful for queries that filter on specific columns or ranges.
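The pruning idea can be illustrated with a toy model. Snowflake keeps min/max metadata for the columns of every micro-partition and skips partitions whose range cannot satisfy a filter. The sketch below is a simplified model under stated assumptions (fixed 100-row partitions, a single integer day column standing in for order_date, made-up data), not Snowflake’s implementation. It compares how many partitions a date-range filter must read when rows are stored in order_date order versus in random order.

```python
import random
from dataclasses import dataclass

@dataclass
class MicroPartition:
    """Toy micro-partition that keeps only min/max metadata for one column."""
    min_value: int
    max_value: int

def build_partitions(values: list[int], rows_per_partition: int) -> list[MicroPartition]:
    """Split rows into fixed-size partitions in their stored order, recording min/max."""
    parts = []
    for i in range(0, len(values), rows_per_partition):
        chunk = values[i:i + rows_per_partition]
        parts.append(MicroPartition(min(chunk), max(chunk)))
    return parts

def partitions_scanned(parts: list[MicroPartition], lo: int, hi: int) -> int:
    """Partitions whose [min, max] overlaps the filter [lo, hi] must be read; the rest are pruned."""
    return sum(1 for p in parts if p.max_value >= lo and p.min_value <= hi)

# Hypothetical order_date column: 365 days (as day numbers), 10 rows per day.
order_days = [day for day in range(365) for _ in range(10)]

clustered = build_partitions(sorted(order_days), rows_per_partition=100)
shuffled_days = order_days[:]
random.Random(42).shuffle(shuffled_days)
unclustered = build_partitions(shuffled_days, rows_per_partition=100)

# Equivalent of: WHERE order_date BETWEEN day 0 AND day 29
print(len(clustered), partitions_scanned(clustered, 0, 29))  # 37 3
print(len(unclustered), partitions_scanned(unclustered, 0, 29))  # most or all partitions survive
```

Real micro-partitions are far larger (on the order of 50 to 500 MB of uncompressed data) and carry metadata for every column, but the effect is the same: when rows are ordered by the filter column, most partitions can be skipped.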

How to Implement Clustering Keys

1. Identify suitable columns: Choose columns that are frequently used in filter conditions or join operations. For example, if your queries often filter by order_date, it is a good candidate for clustering.

2. Create a table with a clustering key:

```sql
CREATE OR REPLACE TABLE sales (
    order_id INT,
    customer_id INT,
    order_date DATE,
    amount DECIMAL(12, 2)
)
CLUSTER BY (order_date);
```

The sales table is clustered by the order_date column.

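3. Reclustering: As rows are inserted and updated, the data distribution drifts and clustering degrades. For tables with a clustering key, Snowflake’s Automatic Clustering service reclusters in the background; the older manual ALTER TABLE ... RECLUSTER command is deprecated. Instead, you can check clustering health and control automatic reclustering:

```sql
SELECT SYSTEM$CLUSTERING_INFORMATION('sales', '(order_date)');  -- reports clustering depth and overlap
ALTER TABLE sales RESUME RECLUSTER;  -- resumes Automatic Clustering; SUSPEND RECLUSTER pauses it
```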
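Why reclustering matters can be sketched with a simplified overlap metric in the spirit of the clustering depth that Snowflake reports (for example through SYSTEM$CLUSTERING_INFORMATION). The metric below is an assumption for illustration, not Snowflake’s exact formula: the average number of partitions whose value range overlaps each partition’s range. The late-arriving rows are hypothetical.

```python
import random

def partition_ranges(values: list[int], rows_per_partition: int) -> list[tuple[int, int]]:
    """Split rows in stored order into fixed-size partitions; return each (min, max)."""
    ranges = []
    for i in range(0, len(values), rows_per_partition):
        chunk = values[i:i + rows_per_partition]
        ranges.append((min(chunk), max(chunk)))
    return ranges

def average_overlap(ranges: list[tuple[int, int]]) -> float:
    """Simplified clustering metric: for each partition, count the partitions
    (itself included) whose range overlaps it, then average. 1.0 means no overlap."""
    total = 0
    for lo, hi in ranges:
        total += sum(1 for other_lo, other_hi in ranges if other_hi >= lo and other_lo <= hi)
    return total / len(ranges)

# Well-clustered start: 365 days x 10 rows, written in order_date order.
rows = [day for day in range(365) for _ in range(10)]
initial = average_overlap(partition_ranges(rows, 100))

# Hypothetical late-arriving rows with random dates, appended in arrival order.
rng = random.Random(7)
stored = rows + [rng.randrange(365) for _ in range(1000)]
degraded = average_overlap(partition_ranges(stored, 100))

# Reclustering: rewrite all rows in key order and rebuild the partitions.
reclustered = average_overlap(partition_ranges(sorted(stored), 100))

print(initial)  # 1.0
print(degraded, reclustered)  # degraded is far higher than reclustered
```

After reclustering, a partition can still overlap its immediate neighbors when one day’s rows straddle a partition boundary, so the metric stays small but not necessarily at 1.0.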

Caching Strategies

Snowflake has several caching mechanisms that improve query performance. Two of them are covered here: result caching and data caching.

Result Caching

Snowflake keeps the results of executed queries for 24 hours to avoid redundant computation. If the same query is submitted again and the underlying data has not changed, Snowflake can return the cached result immediately, without using warehouse compute.

Best Practices for Result Caching

- Write repeated queries consistently: reuse requires the new query text to match the earlier query.

- Avoid re-running complex computations unnecessarily when their results are already cached.
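The reuse rule can be modeled as a lookup keyed on the exact query text plus the state of the underlying data. The ResultCache class below is hypothetical and for illustration only; Snowflake’s actual reuse checks include further conditions (such as the retention window and restrictions on functions evaluated at execution time) that are not modeled here.

```python
from typing import Any, Callable

class ResultCache:
    """Hypothetical model of result reuse: a hit needs identical query text
    and an unchanged version of the underlying data."""

    def __init__(self) -> None:
        self._entries: dict[str, tuple[int, Any]] = {}
        self.hits = 0
        self.misses = 0

    def run(self, query_text: str, data_version: int, execute: Callable[[], Any]) -> Any:
        cached = self._entries.get(query_text)  # exact text is the key: no normalization
        if cached is not None and cached[0] == data_version:
            self.hits += 1
            return cached[1]
        self.misses += 1
        result = execute()                      # stands in for running on a warehouse
        self._entries[query_text] = (data_version, result)
        return result

executions = []

def total_sales() -> int:
    executions.append(1)  # count real executions
    return 42             # placeholder result

cache = ResultCache()
query = "SELECT SUM(amount) FROM sales"
cache.run(query, 1, total_sales)          # miss: first execution
cache.run(query, 1, total_sales)          # hit: same text, same data
cache.run(query.lower(), 1, total_sales)  # miss: different text
cache.run(query, 2, total_sales)          # miss: data changed
print(cache.hits, cache.misses, len(executions))  # 1 3 3
```

In this model, lowercasing the same query is enough to miss the cache, which is why consistent query text (for example, generated from one shared template) matters.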

Data Caching

Data caching happens in the compute layer. When a query reads table data, the warehouse caches it on the local storage of its compute nodes, so later queries that touch the same data can retrieve it faster.

Best Practices for Data Caching

- Use warehouses appropriately: Run high-demand workloads on dedicated warehouses so they have sufficient caching resources.

- Scale warehouses: Increase the warehouse size if you experience performance problems caused by insufficient memory or cache; a larger warehouse also helps avoid disk spillage.
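Why warehouse size and suspension matter for the data cache can be sketched with a least-recently-used (LRU) cache. The WarehouseCache class and its capacity, measured in partitions, are hypothetical; Snowflake does not expose its cache this way. The model shows a warm cache avoiding remote reads, a cache too small for the scan getting no benefit at all, and a suspend (which in Snowflake drops the warehouse’s local cache) forcing a cold start.

```python
from collections import OrderedDict

class WarehouseCache:
    """Hypothetical LRU model of a warehouse's local data cache, sized in partitions."""

    def __init__(self, capacity: int) -> None:
        self.capacity = capacity
        self._parts: OrderedDict[str, None] = OrderedDict()
        self.remote_reads = 0

    def read(self, partition_id: str) -> None:
        if partition_id in self._parts:
            self._parts.move_to_end(partition_id)  # hit: mark as most recently used
            return
        self.remote_reads += 1                     # miss: fetch from the storage layer
        self._parts[partition_id] = None
        if len(self._parts) > self.capacity:
            self._parts.popitem(last=False)        # evict the least recently used partition

    def suspend(self) -> None:
        self._parts.clear()                        # suspending drops the local cache

def remote_reads_for(cache: WarehouseCache, partitions: list[str]) -> int:
    """Run one scan and return how many partitions had to be fetched remotely."""
    before = cache.remote_reads
    for p in partitions:
        cache.read(p)
    return cache.remote_reads - before

scan = [f"p{i}" for i in range(8)]  # a query that reads 8 partitions

larger = WarehouseCache(capacity=16)
print(remote_reads_for(larger, scan), remote_reads_for(larger, scan))  # 8 0

smaller = WarehouseCache(capacity=4)
print(remote_reads_for(smaller, scan), remote_reads_for(smaller, scan))  # 8 8

larger.suspend()
print(remote_reads_for(larger, scan))  # 8
```

The small cache gets no hits because a sequential scan larger than an LRU cache evicts each partition just before it is needed again.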

What Is Disk Spillage?

Disk spillage, also known as disk spilling, occurs when the data an operation needs to hold in memory (RAM) exceeds the available memory, forcing the system to temporarily write the excess to disk. It is common in many computing environments, including databases and large-scale data processing systems.

Key Aspects of Spillage

- Memory overload: When an application or database performs operations that require more memory than is available, it triggers disk spillage.

- Temporary storage: To handle the excess data, the system writes the overflow to disk, usually by creating temporary files or using swap space.

- Performance impact: Disk storage is significantly slower than RAM, so spilling degrades performance.

- Common triggers: Disk spillage often occurs when sorting large datasets, executing complex queries, or running large-scale analytics operations.

- Management: Proper tuning and optimization can mitigate spillage. This may involve increasing available memory (in Snowflake, using a larger warehouse), optimizing queries, or using more efficient algorithms that need less temporary storage.
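Spilling is easiest to see in an external merge sort: when the data exceeds the memory budget, sorted runs are written to temporary files and merged afterward. This is a generic sketch of the technique, not Snowflake’s internal operator; memory_limit is a hypothetical row count standing in for a warehouse’s memory. In Snowflake itself, Query Profile reports spilling as bytes spilled to local storage and, in worse cases, to remote storage.

```python
import heapq
import os
import tempfile
from typing import Iterable

def _spill(sorted_run: list[int]) -> str:
    """Write one sorted run to a temporary file (the 'spill') and return its path."""
    fd, path = tempfile.mkstemp(suffix=".run")
    with os.fdopen(fd, "w") as f:
        f.writelines(f"{v}\n" for v in sorted_run)
    return path

def external_sort(values: Iterable[int], memory_limit: int) -> tuple[list[int], int]:
    """Sort integers while buffering at most `memory_limit` of them in memory.
    Returns the sorted list and the number of runs spilled to disk."""
    if memory_limit < 1:
        raise ValueError("memory_limit must be at least 1")
    runs: list[str] = []
    buffer: list[int] = []
    for v in values:
        if len(buffer) == memory_limit:      # memory full: spill a sorted run
            runs.append(_spill(sorted(buffer)))
            buffer = []
        buffer.append(v)
    if not runs:
        return sorted(buffer), 0             # everything fit: no spill
    runs.append(_spill(sorted(buffer)))      # spill the final partial run
    files = [open(path) for path in runs]
    try:
        # Stream-merge the sorted runs back from disk. The output is collected
        # into a list here for simplicity; a real engine would stream it onward.
        merged = list(heapq.merge(*[(int(line) for line in f) for f in files]))
    finally:
        for f in files:
            f.close()
        for path in runs:
            os.remove(path)
    return merged, len(runs)

data = [7, 3, 9, 1, 8, 2, 6, 4, 10, 5]
print(external_sort(data, memory_limit=4))   # ([1, 2, 3, 4, 5, 6, 7, 8, 9, 10], 3)
print(external_sort(data, memory_limit=20))  # ([1, 2, 3, 4, 5, 6, 7, 8, 9, 10], 0)
```

The result is identical either way; only the amount of disk I/O changes, which is exactly why spilling shows up as slower queries rather than wrong answers.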

What Is Query Tuning?

Query tuning is the practice of optimizing SQL queries to reduce execution time and resource consumption.

Optimize SQL Statements

1. Use appropriate joins: Prefer INNER JOIN over OUTER JOIN when the query’s semantics allow it.

```sql
SELECT a.order_id, b.customer_name
FROM orders a
INNER JOIN customers b ON a.customer_id = b.customer_id;
```

2. Avoid SELECT *: Select only the columns you need. Because Snowflake stores data by column, unreferenced columns are not read, which reduces data processing.

```sql
SELECT order_id, amount
FROM sales
WHERE order_date >= '2023-01-01';
```

3. Leverage window functions: Use window functions for calculations across related rows, such as per-customer totals, without collapsing those rows the way GROUP BY does.

```sql
SELECT order_id, amount,
    SUM(amount) OVER (PARTITION BY customer_id) AS total_amount
FROM sales;
```
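To check what the window function returns without a Snowflake account, the sketch below runs the same pattern against an in-memory SQLite database through Python’s sqlite3 module. It assumes the bundled SQLite is version 3.25 or newer (the release that added window functions), and the rows are made-up sample data. Each order keeps its own row while also carrying its customer’s total, which a GROUP BY would collapse into one row per customer.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (order_id INTEGER, customer_id INTEGER, amount INTEGER)")
conn.executemany(
    "INSERT INTO sales VALUES (?, ?, ?)",
    [(1, 100, 20), (2, 100, 30), (3, 101, 50), (4, 101, 10), (5, 102, 5)],
)

# Every row keeps its detail and also gets the total for its customer.
window_rows = conn.execute("""
    SELECT order_id, amount,
           SUM(amount) OVER (PARTITION BY customer_id) AS total_amount
    FROM sales
    ORDER BY order_id
""").fetchall()

# For contrast, GROUP BY collapses the detail to one row per customer.
grouped_rows = conn.execute(
    "SELECT customer_id, SUM(amount) FROM sales GROUP BY customer_id ORDER BY customer_id"
).fetchall()

print(window_rows)   # [(1, 20, 50), (2, 30, 50), (3, 50, 60), (4, 10, 60), (5, 5, 5)]
print(grouped_rows)  # [(100, 50), (101, 60), (102, 5)]
```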

Analyze Query Execution Plans

Use the QUERY_HISTORY table function to review recent queries and identify bottlenecks; for an individual query, Query Profile shows its execution plan and per-operator statistics.

```sql
SELECT query_id, query_text, total_elapsed_time, start_time
FROM TABLE(INFORMATION_SCHEMA.QUERY_HISTORY())
WHERE query_text ILIKE '%sales%'
ORDER BY start_time DESC;
```

Use Materialized Views

Materialized views store the precomputed results of a query, and Snowflake keeps them up to date automatically as the base table changes. They improve performance for frequently run, expensive queries.
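Conceptually, a materialized view is a precomputed result that the database keeps in sync with its base table. SQLite has no materialized views, so the sketch below approximates one with a summary table maintained by an insert trigger. This is an analogy only: Snowflake maintains materialized views with a background service rather than triggers, and this sketch handles inserts only. The table and trigger names are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE sales (order_id INTEGER, order_date TEXT, amount INTEGER);

-- Hypothetical stand-in for a daily sales summary view.
CREATE TABLE sales_summary (
    order_date TEXT PRIMARY KEY,
    total_amount INTEGER NOT NULL
);

-- Keep the summary current on every insert (inserts only, for brevity).
CREATE TRIGGER sales_summary_after_insert AFTER INSERT ON sales
BEGIN
    INSERT OR IGNORE INTO sales_summary (order_date, total_amount)
    VALUES (NEW.order_date, 0);
    UPDATE sales_summary
    SET total_amount = total_amount + NEW.amount
    WHERE order_date = NEW.order_date;
END;
""")

conn.executemany(
    "INSERT INTO sales VALUES (?, ?, ?)",
    [(1, "2023-01-01", 20), (2, "2023-01-01", 30), (3, "2023-01-02", 15)],
)

# Reading the summary avoids re-aggregating the base table...
summary = conn.execute(
    "SELECT order_date, total_amount FROM sales_summary ORDER BY order_date"
).fetchall()
# ...yet it matches what a fresh GROUP BY returns.
fresh = conn.execute(
    "SELECT order_date, SUM(amount) FROM sales GROUP BY order_date ORDER BY order_date"
).fetchall()

print(summary)           # [('2023-01-01', 50), ('2023-01-02', 15)]
print(summary == fresh)  # True
```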

```sql
CREATE OR REPLACE MATERIALIZED VIEW mv_sales_summary AS
SELECT order_date, SUM(amount) AS total_amount
FROM sales
GROUP BY order_date;
```

Monitoring and Maintenance

Continuous monitoring and maintenance are vital for sustained performance. Review and adjust clustering keys, analyze query performance, and resize warehouses based on query load.

Key Tools for Monitoring

- Snowflake’s Query Profile: Provides insights into query execution

- Resource Monitors: Help track compute (credit) usage and manage costs

Optimizing performance in Snowflake involves the effective use of clustering keys, strategic caching, and careful query tuning. By implementing the techniques and best practices above, organizations can improve query performance, reduce resource consumption, and achieve efficient data processing.

Continuous monitoring and proactive maintenance help ensure sustained performance and scalability in the Snowflake environment.

Final Thoughts

By understanding and applying the data modeling and query optimization methods discussed above, organizations can achieve efficient data management and derive valuable insights throughout their data journey.