Today's world is highly dynamic. Data moves fast. Businesses generate data constantly. Real-time analysis is now essential. Stream processing in the serverless cloud addresses this. Gartner predicts that by 2025, over 75% of enterprise data will be processed outside traditional data centers. Confluent states that stream processing lets companies act on data as it's created. This gives them an edge.

Real-time processing reduces delays. It scales easily and adapts to changing needs. With a serverless cloud, businesses can focus on data insights without worrying about managing infrastructure.

In today's post, we'll explain what stream processing is and how it's done, and describe how to design a highly available, horizontally scalable, and reliable stream processing system on AWS. We'll also briefly discuss the market for this technology and its future.

What Is Stream Processing?

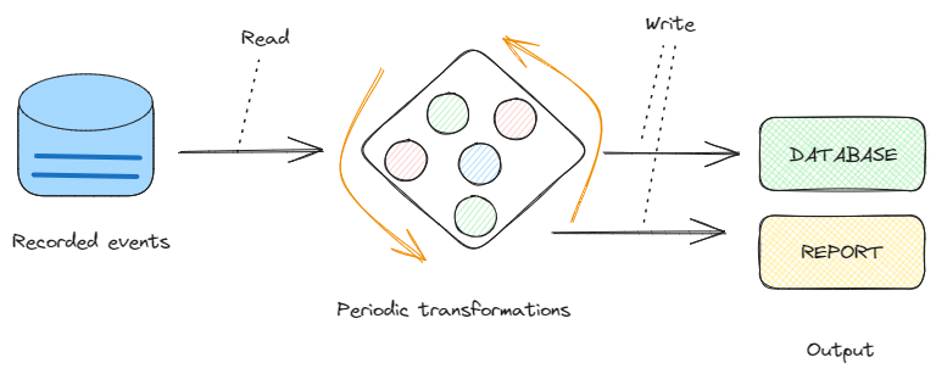

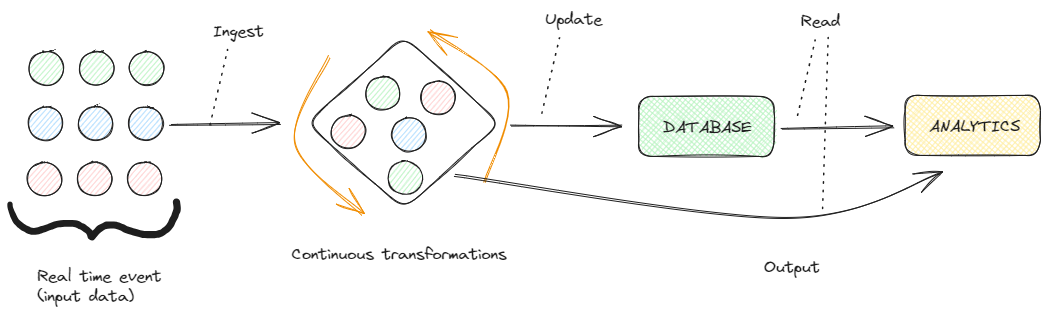

Stream processing analyzes data in real time as it flows through a system. Unlike batch processing, which processes data after it's stored, stream processing handles continuous data from sources like IoT devices, transactions, or social media.

Today, industry tools like Apache Kafka and Apache Flink handle data ingestion and processing. These systems must meet low-latency, scalability, and fault-tolerance requirements, ensuring fast, reliable data handling. They often aim for exactly-once processing to avoid errors such as duplicated or lost records. Stream processing is vital in industries requiring immediate data-driven decisions, such as finance, telecommunications, and online services.

Key Concepts in Stream Processing

Event-driven architecture relies on events to trigger and communicate between services, promoting responsiveness and scalability. Stream processing enables real-time data handling by processing events as they occur, ensuring timely insights and actions. This approach fits well where systems must react quickly to changing conditions, such as in financial trading or IoT applications.

Data Streams

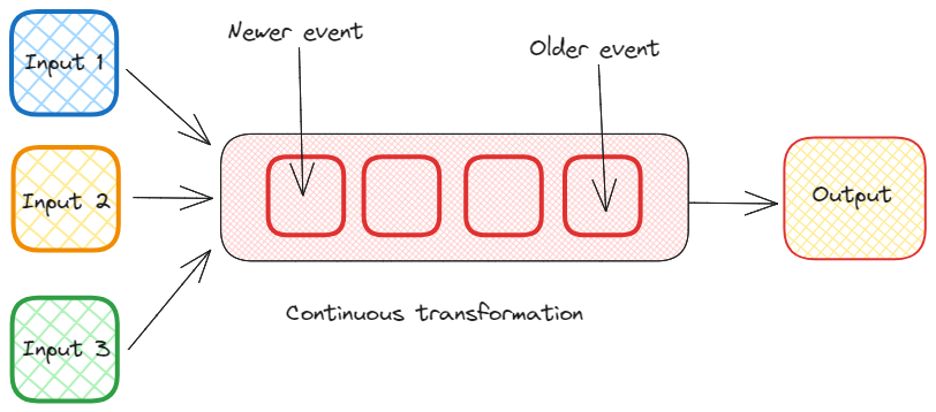

A data stream is a continuous flow of data records. These records are often time-stamped and can come from various sources like IoT devices, social media feeds, or transactional databases.

Stream Processing Engine

Consider a stock trading platform where prices fluctuate rapidly. An event-driven architecture captures each price change as an event.

The stream processing engine filters relevant price changes, aggregates trends, and transforms the data to provide real-time analytics and automated trading decisions. This ensures that the platform can react instantly to market conditions, executing trades at the optimal moments.
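The filter, aggregate, and transform steps described above can be sketched in plain Python. This is only an illustration, not how Kafka or Flink implement them: the event fields (`symbol`, `change_pct`), the thresholds, and the BUY/SELL/HOLD signals are all hypothetical.

```python
from collections import defaultdict


def filter_events(events, min_change_pct):
    """Filter step: keep only price changes at or above a percentage threshold."""
    return [e for e in events if abs(e["change_pct"]) >= min_change_pct]


def aggregate_by_symbol(events):
    """Aggregate step: sum the percentage change per symbol."""
    totals = defaultdict(float)
    for e in events:
        totals[e["symbol"]] += e["change_pct"]
    return dict(totals)


def to_signals(totals, threshold):
    """Transform step: map each aggregated trend to a hypothetical signal."""
    signals = {}
    for symbol, total in totals.items():
        if total >= threshold:
            signals[symbol] = "BUY"
        elif total <= -threshold:
            signals[symbol] = "SELL"
        else:
            signals[symbol] = "HOLD"
    return signals


# Hypothetical price-change events, one per tick.
events = [
    {"symbol": "ABC", "change_pct": 1.5},
    {"symbol": "ABC", "change_pct": 0.1},   # too small, dropped by the filter
    {"symbol": "XYZ", "change_pct": -2.0},
    {"symbol": "ABC", "change_pct": 1.0},
]

relevant = filter_events(events, min_change_pct=0.5)
signals = to_signals(aggregate_by_symbol(relevant), threshold=2.0)
print(signals)  # {'ABC': 'BUY', 'XYZ': 'SELL'}
```

A real engine would run these steps continuously over an unbounded stream rather than over a finished list, but the shape of the pipeline is the same.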

Event Time

This is the time at which an event (a data record) actually occurred, as opposed to the time at which the system processes it. It's essential in stream processing to ensure accurate analysis, especially when dealing with out-of-order events.

Windowing

In stream processing, windowing is a technique for grouping data records within a certain timeframe. For example, you might calculate the average temperature reported by sensors every minute.
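As a minimal sketch of the per-minute average mentioned above, the following groups readings into fixed (tumbling) one-minute windows by event time. The `(event_time, value)` tuple format and the function name are assumptions made for illustration; production engines add watermarks and late-data handling that this sketch omits.

```python
from collections import defaultdict


def tumbling_window_average(readings, window_seconds=60):
    """Group (event_time, value) pairs into fixed windows and average each one.

    event_time is assumed to be seconds since some epoch. Each reading is
    assigned to a window by its event time, not by its arrival order.
    """
    buckets = defaultdict(list)
    for event_time, value in readings:
        window_start = (event_time // window_seconds) * window_seconds
        buckets[window_start].append(value)
    return {start: sum(vals) / len(vals) for start, vals in sorted(buckets.items())}


# The reading at t=59 arrives after t=75 (out of order) but still lands
# in the first window because assignment uses event time.
readings = [(0, 20.0), (30, 22.0), (75, 25.0), (59, 21.0), (119, 27.0)]
averages = tumbling_window_average(readings)
print(averages)  # {0: 21.0, 60: 26.0}
```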

Stateful vs. Stateless Processing

Stateful processing keeps track of past data records to provide context for the current data, whereas stateless processing handles each data record independently.
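The difference can be illustrated with a small sketch. The record fields (`sensor`, `temp_c`) and both operations are hypothetical examples: a unit conversion needs nothing but the current record, while a running average must remember what it has seen before.

```python
def to_fahrenheit(record):
    """Stateless: the output depends only on the current record."""
    return {"sensor": record["sensor"], "temp_f": record["temp_c"] * 9 / 5 + 32}


class RunningAverage:
    """Stateful: keeps a per-sensor count and total across records."""

    def __init__(self):
        self._count = {}
        self._total = {}

    def update(self, record):
        sensor = record["sensor"]
        self._count[sensor] = self._count.get(sensor, 0) + 1
        self._total[sensor] = self._total.get(sensor, 0.0) + record["temp_c"]
        return self._total[sensor] / self._count[sensor]


records = [
    {"sensor": "s1", "temp_c": 20.0},
    {"sensor": "s1", "temp_c": 30.0},
    {"sensor": "s2", "temp_c": 10.0},
]

converted = [to_fahrenheit(r) for r in records]
average = RunningAverage()
running = [average.update(r) for r in records]
print(running)  # [20.0, 25.0, 10.0]
```

In a distributed system, the state held by something like `RunningAverage` has to be checkpointed or stored externally so it survives restarts, which is one reason stateful processing is harder to operate.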

Visualizing Stream Processing

To help you better understand stream processing, let's visualize the concepts:

- Stream processing engine:

Why Stream Processing Matters in the Modern World

As organizations rely more and more on near-real-time information to make optimal decisions, stream processing has emerged as a key solution. For instance, in the financial sector, detecting fraudulent transactions as they occur can save a lot of money. In e-commerce, real-time recommendations can improve overall customer satisfaction and loyalty as well as increase sales.

Market Segment and Growth

The market for stream processing has been growing rapidly in recent years. According to industry reports, the global stream processing market was estimated at around $7 billion. The number of IoT devices deployed, the demand for fast analytical results, and the growth of cloud services all contribute to this.

In the global market, the leading contenders are Amazon Web Services, Microsoft Azure, Google Cloud Platform, and IBM. AWS's Kinesis and Lambda services are the most commonly used for building serverless stream processing applications.

Building a Stream Processing Application With Lambda and Kinesis

Let's follow the steps to set up a basic stream processing application using AWS Lambda and Kinesis.

Step 1: Setting Up a Kinesis Data Stream

- Create a stream: Go to the AWS Management Console, navigate to Kinesis, and create a new Data Stream. Name your stream and specify the number of shards (each shard can ingest up to 1 MB of data or 1,000 records per second).

- Configure producers: Set up data producers to send data to your Kinesis stream. A producer could be an application logging user activity or an IoT device sending sensor data.

- Monitor stream: Use the Kinesis dashboard to monitor the data flowing into your stream. Ensure your stream is healthy and capable of handling the incoming data.

Step 2: Creating a Lambda Function to Process the Stream

- Create a Lambda function: In the AWS Management Console, navigate to Lambda and create a new function. Choose a runtime (e.g., Python, Node.js), and configure the function's execution role to allow access to the Kinesis stream.

- Add Kinesis as a trigger: Add your Kinesis stream as a trigger in the function's configuration. This setup will invoke the Lambda function whenever new data arrives in the stream.

- Write the processing code: Implement the logic to process each record. For example, if you analyze user activity, your code might filter out irrelevant data and push meaningful insights to a database.
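As a rough sketch of the producer step, the snippet below sends one JSON event with the boto3 Kinesis client's `put_record` call. The stream name `user-activity-stream`, the event fields, and the choice of `user_id` as partition key are assumptions for illustration. `boto3` is imported only inside `send_event`, so the argument-building part can be run without AWS credentials.

```python
import json


def build_put_record_args(stream_name, event, partition_key_field="user_id"):
    """Build the keyword arguments for the Kinesis put_record call.

    The partition key decides which shard receives the record; using the
    user ID (an assumption here) keeps one user's events on one shard.
    """
    return {
        "StreamName": stream_name,
        "Data": json.dumps(event).encode("utf-8"),
        "PartitionKey": str(event[partition_key_field]),
    }


def send_event(stream_name, event):
    """Send one event to Kinesis. Requires boto3 and valid AWS credentials."""
    import boto3  # imported lazily so the rest of the sketch runs offline

    client = boto3.client("kinesis")
    return client.put_record(**build_put_record_args(stream_name, event))


# Hypothetical stream name and event, shown without calling AWS.
args = build_put_record_args("user-activity-stream", {"user_id": 42, "action": "click"})
print(args["PartitionKey"], args["Data"])
```

For higher volumes, batching several events into one `put_records` call is usually preferable to sending them one at a time.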

```python
import base64
import json


def lambda_handler(event, context):
    for record in event['Records']:
        # Kinesis data is base64 encoded, so decode it before parsing the JSON
        payload = json.loads(base64.b64decode(record['kinesis']['data']))

        # Process the payload
        print(f"Processed record: {payload}")
```

- Test and deploy: Test the function with sample data to ensure it works as expected. Once satisfied, deploy the function so that it automatically processes incoming stream data.
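For the testing step, one option is to build a sample event locally that mimics the shape Lambda receives from Kinesis, with each payload base64 encoded under `Records[].kinesis.data`. The helper names `make_kinesis_event` and `decode_records` and the sample payloads are hypothetical, and a real event carries more fields (sequence number, partition key, and so on) than this sketch includes.

```python
import base64
import json


def make_kinesis_event(payloads):
    """Build a minimal Kinesis-style Lambda event from a list of dicts."""
    return {
        "Records": [
            {
                "kinesis": {
                    "data": base64.b64encode(json.dumps(p).encode("utf-8")).decode("ascii")
                }
            }
            for p in payloads
        ]
    }


def decode_records(event):
    """Decode every record the same way a handler would: base64, then JSON."""
    return [json.loads(base64.b64decode(r["kinesis"]["data"])) for r in event["Records"]]


sample = make_kinesis_event([
    {"user_id": 1, "action": "login"},
    {"user_id": 2, "action": "purchase"},
])
decoded = decode_records(sample)
print(decoded)
```

The same `sample` dictionary can be passed to a handler function directly, or pasted as JSON into the Lambda console's test event editor.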

Step 3: Scaling and Optimization

Event source mapping in AWS Lambda provides important features for scaling and optimizing event processing. The Parallelization Factor controls the number of concurrent batches processed from each shard, boosting throughput.

Lambda concurrency settings include Reserved Concurrency, which sets aside capacity for the function, and Provisioned Concurrency, which reduces cold start latency. For error handling, Retries automatically reattempt failed executions, while Bisect Batch on Function Error splits failed batches for more granular retries.

Lambda scales automatically with data volume. Reserved Concurrency helps keep performance consistent by setting aside a portion of the account's concurrency for the function, so that other functions cannot use it up and cause throttling. To keep instances initialized and ready before events arrive, use Provisioned Concurrency.
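These settings can also be applied from code. The sketch below uses the boto3 Lambda client's `create_event_source_mapping` and `put_function_concurrency` calls. The ARN, function name, and every numeric value are placeholder assumptions, not recommendations, and `boto3` is imported only inside `apply_scaling_settings` so the argument builder runs without AWS access.

```python
def build_event_source_mapping_args(stream_arn, function_name):
    """Build arguments for create_event_source_mapping (values are examples)."""
    return {
        "EventSourceArn": stream_arn,
        "FunctionName": function_name,
        "StartingPosition": "LATEST",        # only read records added from now on
        "BatchSize": 100,                    # max records per invocation
        "ParallelizationFactor": 2,          # concurrent batches per shard (1 to 10)
        "MaximumRetryAttempts": 3,           # retries before a batch is given up
        "BisectBatchOnFunctionError": True,  # split a failed batch and retry halves
    }


def apply_scaling_settings(stream_arn, function_name, reserved_concurrency=10):
    """Apply the settings. Requires boto3 and valid AWS credentials."""
    import boto3  # imported lazily so the rest of the sketch runs offline

    client = boto3.client("lambda")
    client.put_function_concurrency(
        FunctionName=function_name,
        ReservedConcurrentExecutions=reserved_concurrency,
    )
    return client.create_event_source_mapping(
        **build_event_source_mapping_args(stream_arn, function_name)
    )


# Placeholder ARN and function name, shown without calling AWS.
mapping_args = build_event_source_mapping_args(
    "arn:aws:kinesis:us-east-1:123456789012:stream/example-stream",
    "example-stream-processor",
)
print(mapping_args)
```

With a parallelization factor of 2, a stream with four shards can have up to eight batches in flight at once, so the reserved concurrency should be at least that high to avoid throttling.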

Conclusion

Stream processing in the serverless cloud is a powerful way to handle real-time data. With AWS Lambda and Kinesis, you can build scalable applications without managing servers. This approach is ideal for scenarios requiring immediate insights.