Introduction to Data Joins

In the world of data, a “join” merges information from different sources into a unified result. To do this, it needs a condition, typically a shared column, to link the sources together. Think of it as finding common ground between different datasets.

In SQL, these sources are called “tables,” and the result of using a JOIN clause is a new table. Fundamentally, traditional (batch) SQL joins operate on static datasets, where you have prior knowledge of the number of rows and the content within the source tables before executing the join. These join operations are typically simple to implement and computationally efficient. However, the dynamic and unbounded nature of streaming data presents unique challenges for performing joins in near-real-time scenarios.
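To make the batch case concrete, here is a minimal sketch of an inner join over two static, in-memory "tables". The table names, columns, and rows are hypothetical sample data, and the hash-join strategy is just one common way to implement it, not a description of any particular SQL engine.

```python
# Two static "tables" as lists of rows (hypothetical sample data).
orders = [
    {"order_id": 1, "customer_id": "c1", "amount": 30},
    {"order_id": 2, "customer_id": "c2", "amount": 45},
    {"order_id": 3, "customer_id": "c9", "amount": 12},  # no matching customer
]
customers = [
    {"customer_id": "c1", "name": "Ada"},
    {"customer_id": "c2", "name": "Grace"},
]


def inner_join(left, right, key):
    """Hash join: index the right table by key, then probe it with each left row."""
    index = {}
    for row in right:
        index.setdefault(row[key], []).append(row)
    return [{**l, **r} for l in left for r in index.get(l[key], [])]


joined = inner_join(orders, customers, "customer_id")
```

Because both inputs are fully known up front, the whole index can be built before probing starts. That is exactly the assumption that breaks down for unbounded streams.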

Streaming Data Joins

In streaming data applications, one or more of these sources are continuous, unbounded streams of information. The join needs to happen in (near) real-time. In this scenario, you don't know the number of rows or the exact content beforehand.

To design an effective streaming data join solution, we need to dive deeper into the nature of our data and its sources. Questions to consider include:

- Identifying sources and keys: Which are the primary and secondary data sources? What is the common key that will be used to connect records across these sources?

- Join type: What kind of join (Inner Join, Left Join, Right Join, Full Outer Join) is required?

- Join window: How long should we wait for a matching event from the secondary source to arrive for a given primary event (or vice versa)? This directly impacts latency and Service Level Agreements (SLAs).

- Success criteria: What percentage of primary events do we expect to be successfully joined with their corresponding secondary events?

By carefully analyzing these aspects, we can tailor a streaming data join solution that meets the specific requirements of our application.

The streaming data join landscape is rich with options. Established frameworks like Apache Flink and Apache Spark (also available on cloud platforms like AWS, GCP, and Databricks) provide robust capabilities for handling streaming joins. Additionally, innovative solutions that optimize specific aspects of the infrastructure, such as Meta's streaming join focusing on memory consumption, are continuously emerging.

Scope

The goal of this article isn't to provide a tutorial on using existing solutions. Instead, we'll delve into the intricacies of a specific streaming data join solution, exploring the tradeoffs and assumptions involved in its design. This approach will illuminate the underlying principles and considerations that drive many of the out-of-the-box streaming join capabilities available in the market.

By understanding the mechanics of this particular solution, you'll gain valuable insights into the broader landscape of streaming data joins and be better equipped to choose the right tool for your specific use case.

Join Key

The key is a shared column or field that exists in both datasets. The specific join key you choose depends on the type of data you're working with and the problem you're trying to solve. We use this key to index incoming events so that when new events arrive, we can quickly look up any related events that are already stored.

Join Window

The join window is like a time frame in which events from different sources are allowed to “meet and match.” It's an interval during which we consider events eligible to be joined together. To set the right join window, we need to understand how quickly events arrive from each data source. This ensures that even if an event is a bit late, we still have its related events available and ready to be joined.
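As a rough illustration, eligibility within a join window can be expressed as a simple time comparison. This sketch assumes the window starts when the first event for a key arrives. The 5-minute value and the function name `within_join_window` are illustrative choices, not something prescribed by the design.

```python
from datetime import datetime, timedelta

JOIN_WINDOW = timedelta(minutes=5)  # assumed value; tune per use case


def within_join_window(first_arrival, later_arrival, window=JOIN_WINDOW):
    """True if the later event arrived no more than `window` after the first one."""
    return timedelta(0) <= later_arrival - first_arrival <= window


first = datetime(2024, 1, 1, 12, 0, 0)  # arbitrary example timestamp
on_time = within_join_window(first, first + timedelta(minutes=4))
too_late = within_join_window(first, first + timedelta(minutes=6))
```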

Architecting Streaming Data Joins

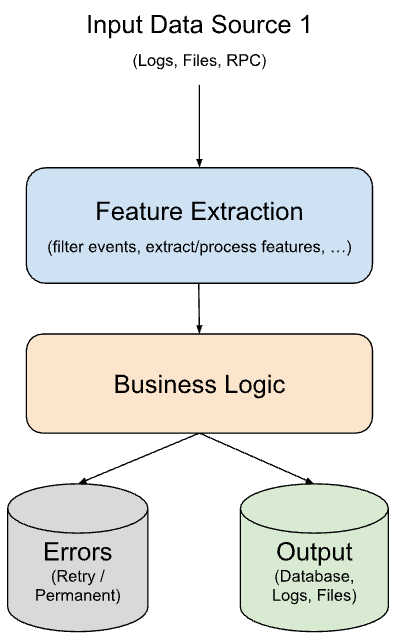

Here is a simplified illustration of a typical streaming data pipeline. The individual components are shown for clarity, but they wouldn't necessarily be separate systems or jobs in a production environment.

Description

A typical streaming data pipeline processes incoming events from a data source (Source 1), often passing them through a Feature Extraction component. This component can be thought of as a way to refine the data: filtering out irrelevant events, selecting specific features, or transforming raw data into more usable formats. The refined events are then sent to the Business Logic component, where the core processing or analysis happens. This Feature Extraction step is optional; some pipelines may send raw events directly to the Business Logic component.

Problem

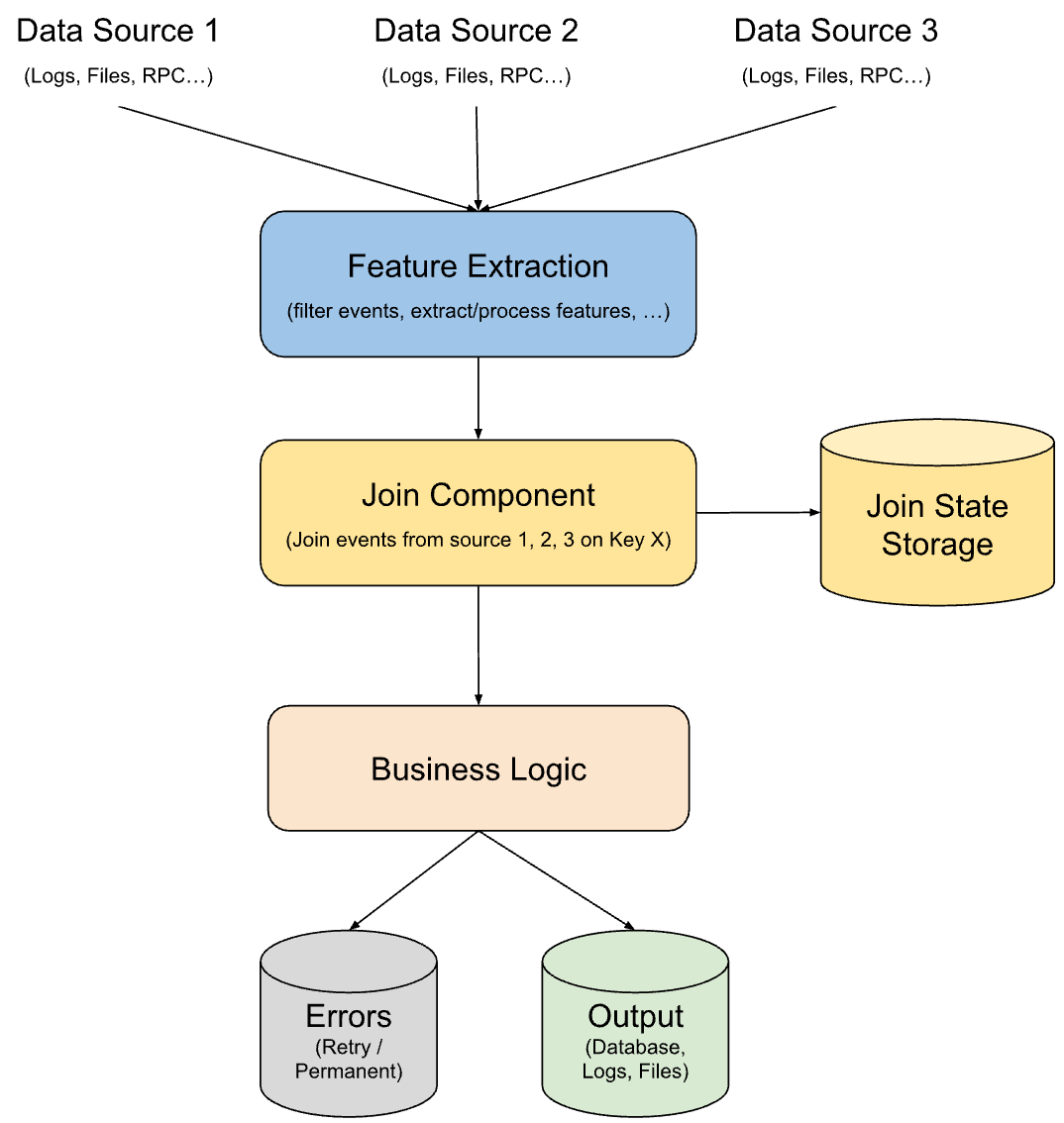

Now, imagine our pipeline needs to combine information from additional sources (Source 2 and Source 3) to enrich the main data stream. However, we need to do this without significantly slowing down the processing pipeline or affecting its performance targets.

Solution

To address this, we introduce a Join Component just before the Business Logic step. This component will merge events from all the input sources based on a shared unique identifier; let's call it Key X. Events from each source will flow into this Join Component (possibly after undergoing Feature Extraction).

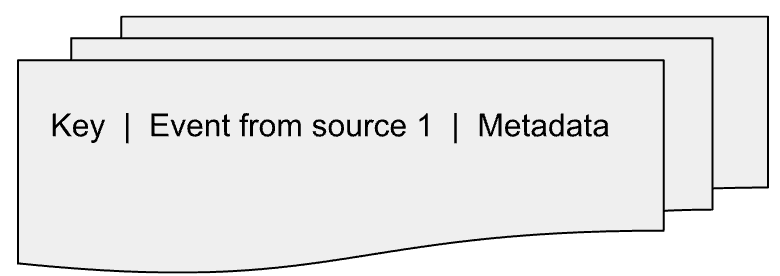

The Join Component will use a state store (like a database) to keep track of incoming events based on Key X. Think of it as creating a separate table in the database for each input source, with each table indexing events by Key X. As new events arrive, they're added to their corresponding table (an event from Source 1 goes to table 1, an event from Source 2 to table 2, and so on) along with some additional metadata. This Join State can be imagined as follows:
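One way to picture that state in code is the minimal in-memory sketch below. It stands in for a real database, and the class names (`StoredEvent`, `JoinState`), the `received_at` metadata field, and the sample payloads are all assumptions made for illustration.

```python
import time
from dataclasses import dataclass, field

SOURCES = ("source_1", "source_2", "source_3")


@dataclass
class StoredEvent:
    payload: dict
    received_at: float  # metadata: arrival time in epoch seconds


@dataclass
class JoinState:
    # One "table" per input source, each indexed by Key X.
    tables: dict = field(default_factory=lambda: {s: {} for s in SOURCES})

    def put(self, source, key_x, payload, now=None):
        """Add an event to its source's table along with arrival metadata."""
        received_at = time.time() if now is None else now
        self.tables[source][key_x] = StoredEvent(payload, received_at)

    def get(self, source, key_x):
        """Look up the stored event for a key, or None if nothing has arrived."""
        return self.tables[source].get(key_x)


state = JoinState()
state.put("source_1", "x-42", {"clicks": 3}, now=100.0)
state.put("source_2", "x-42", {"country": "DE"}, now=101.5)
```

In this snapshot, Key X `x-42` has rows in the Source 1 and Source 2 tables, while the Source 3 table has no entry yet.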

Join Trigger Conditions

All Expected Events Arrive

This means we've received events from all our data sources (Source 1, Source 2, and Source 3) for a specific Key X.

We can check for this every time we're about to add a new event to our state storage. For example, if the Join Component is currently processing an event with Key X from Source 2, it will quickly check whether there are already matching rows in the tables for Source 1 and Source 3 with the same Key X. If so, it's time to join!
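The completeness check can be sketched as follows. This is an in-memory illustration with hypothetical names (`tables`, `on_event`). It stores the incoming event first and then checks all tables, which is equivalent to checking the other tables just before the insert.

```python
SOURCES = ("source_1", "source_2", "source_3")
tables = {source: {} for source in SOURCES}  # one table per source, keyed by Key X


def on_event(source, key_x, payload):
    """Store the event, then return the joined record if every source has reported."""
    tables[source][key_x] = payload
    if all(key_x in tables[s] for s in SOURCES):
        # Remove the rows so the same key is not joined a second time.
        return {s: tables[s].pop(key_x) for s in SOURCES}
    return None  # still waiting on at least one source


first = on_event("source_1", "x-42", {"a": 1})
second = on_event("source_3", "x-42", {"c": 3})
third = on_event("source_2", "x-42", {"b": 2})  # completes the set
```

Only the third call returns a joined record; the first two return `None` because the set is still incomplete.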

Join Interval Expires

This happens when at least one event with a specific Key X has been waiting too long to be joined. We set a time limit (the join window) for how long an event can wait.

To implement this, we can set an expiration time (TTL) on each row in our tables. When the TTL expires, it triggers a notification to the Join Component, letting it know that this event needs to be joined now, even if it's missing some matches. For instance, if our join window is 15 minutes and an event from Source 2 never shows up, the Join Component will get a notification about the events from Source 1 and Source 3 that are waiting to be joined with that missing Source 2 event. Another way to handle this is to have a periodic job that checks the tables for any expired keys and sends notifications to the Join Component.
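The periodic-job variant might look like the sketch below. It assumes each row stores a `(payload, received_at)` tuple with times in seconds. The function names and the `notify` callback are hypothetical, and a real deployment would more likely rely on the TTL or expiry hooks of its state store.

```python
JOIN_WINDOW_SECONDS = 15 * 60  # the 15-minute join window from the example


def find_expired_keys(tables, now, window=JOIN_WINDOW_SECONDS):
    """Return keys with at least one event that has waited the full join window."""
    expired = set()
    for rows in tables.values():
        for key_x, (_payload, received_at) in rows.items():
            if now - received_at >= window:
                expired.add(key_x)
    return expired


def sweep(tables, now, notify):
    """Periodic job: hand every expired key, with whatever arrived, to the join."""
    for key_x in sorted(find_expired_keys(tables, now)):
        partial = {s: rows.pop(key_x)[0] for s, rows in tables.items() if key_x in rows}
        notify(key_x, partial)


# tables[source][key_x] = (payload, received_at)
tables = {
    "source_1": {"x-1": ({"a": 1}, 0.0), "x-2": ({"a": 2}, 800.0)},
    "source_2": {},  # the Source 2 event for x-1 never showed up
    "source_3": {"x-1": ({"c": 1}, 10.0)},
}
notifications = []
sweep(tables, now=900.0, notify=lambda key, partial: notifications.append((key, partial)))
```

Here `x-1` is flushed with its Source 1 and Source 3 events because its oldest event has waited 15 minutes, while `x-2` stays in the state because it is still inside its window.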

Note: This second scenario is only relevant for use cases where we want to include events even if they don't have a complete match. If we only care about complete sets of events (like INNER JOIN), we can ignore this time-out trigger.

How the Join Happens

When either of our trigger conditions is met (we have a complete set of events, or an event has timed out), the Join Component springs into action. It fetches all the relevant events from the storage tables and performs the join operation. If some required events are missing (and we're doing a type of join that requires complete matches), the incomplete event can be discarded. The final joined event, containing information from all the sources, is then passed on to the Business Logic component for further processing.
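The join step itself can be sketched as a small function that either emits a combined record or discards an incomplete one. The function name `perform_join`, the `join_type` values, and the use of `None` to mark a missing side are assumptions for illustration.

```python
SOURCES = ("source_1", "source_2", "source_3")


def perform_join(key_x, events_by_source, required_sources=SOURCES, join_type="inner"):
    """Combine the fetched events for one key.

    events_by_source maps source name -> payload for the sources that arrived.
    Returns None when the join type needs a complete match and one is missing.
    """
    missing = [s for s in required_sources if s not in events_by_source]
    if missing and join_type == "inner":
        return None  # incomplete set: discard
    joined = {"key_x": key_x}
    for source in required_sources:
        joined[source] = events_by_source.get(source)  # None marks a missing side
    return joined


partial = {"source_1": {"a": 1}, "source_3": {"c": 3}}
inner_result = perform_join("x-1", partial, join_type="inner")
outer_result = perform_join("x-1", partial, join_type="outer")
```

With the same partial input, the inner join discards the record while the outer join emits it with an empty Source 2 slot for the Business Logic component to handle.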

Visualization

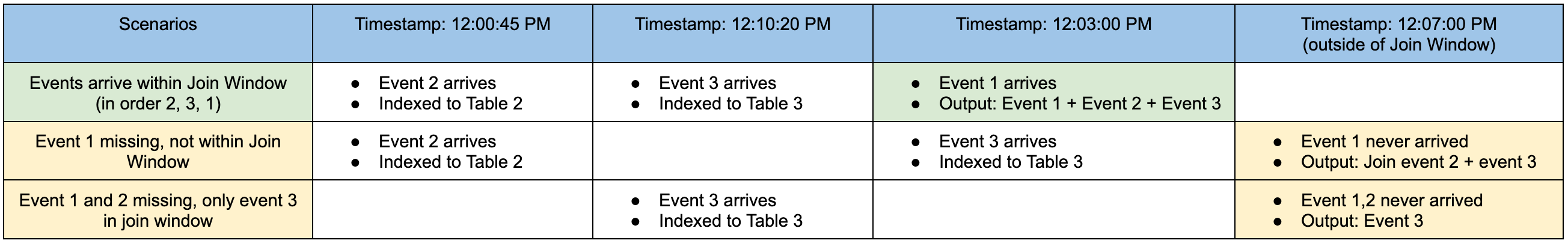

Let's make this a bit easier to picture. Imagine that events from all three sources (Source 1, Source 2, and Source 3) happen simultaneously at 12:00:00 PM. Consider the join window to be 5 minutes.
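The scenario can also be traced in code. The sketch below uses hypothetical arrival delays, since events that happen at the same instant rarely arrive at the same instant, and assumes the window starts when the first event for the key arrives. The function name `join_fire_time` is illustrative.

```python
from datetime import datetime, timedelta

SOURCES = ("source_1", "source_2", "source_3")
JOIN_WINDOW = timedelta(minutes=5)
event_time = datetime(2024, 1, 1, 12, 0, 0)  # 12:00:00 PM; the date is arbitrary


def join_fire_time(arrivals, expected_sources=SOURCES, window=JOIN_WINDOW):
    """arrivals maps source -> arrival datetime. Returns (fire time, reason)."""
    deadline = min(arrivals.values()) + window
    on_time = {s: t for s, t in arrivals.items() if t <= deadline}
    if set(expected_sources) <= set(on_time):
        return max(on_time.values()), "complete"  # fires when the last event lands
    return deadline, "expired"  # fires when the window closes


# Hypothetical delays: all three sources arrive within the window.
complete_case = join_fire_time({
    "source_1": event_time + timedelta(seconds=1),
    "source_2": event_time + timedelta(seconds=40),
    "source_3": event_time + timedelta(seconds=130),
})

# Hypothetical delays: Source 3 arrives 7 minutes late, after the window closes.
expired_case = join_fire_time({
    "source_1": event_time + timedelta(seconds=1),
    "source_2": event_time + timedelta(seconds=40),
    "source_3": event_time + timedelta(minutes=7),
})
```

In the first case the join fires as soon as the Source 3 event lands; in the second it fires when the window opened by the Source 1 arrival runs out.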

Optimizations

Set Expiration Times (TTLs)

By setting a TTL for each row in our join state storage, we enable the database to automatically clean up old events that have passed their join window.

Compact Storage

Instead of storing entire events as-is, store them in a compressed format (like bytes) to further reduce the amount of storage space needed in our database.
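A minimal sketch of this idea, assuming events are JSON-serializable dictionaries and using the standard library's `zlib`. The helper names and the sample event are hypothetical, and a production system might prefer a schema-based binary format instead.

```python
import json
import zlib


def encode_event(event):
    """Serialize an event to compact JSON and compress it into bytes."""
    raw = json.dumps(event, separators=(",", ":"), sort_keys=True).encode("utf-8")
    return zlib.compress(raw)


def decode_event(blob):
    """Reverse of encode_event: decompress and parse back into a dict."""
    return json.loads(zlib.decompress(blob).decode("utf-8"))


event = {"key_x": "x-42", "tags": ["promo"] * 50, "country": "DE"}
blob = encode_event(event)
restored = decode_event(blob)
```

Compression pays off mainly for larger or repetitive payloads. Very small events can end up slightly bigger after compression, so it is worth measuring on real data.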

Outer Join Optimization

If the use case is to perform an OUTER JOIN and one of the event streams (let's say Source 1) is too big to be fully indexed in our storage, we can adjust our approach. Instead of indexing everything from Source 1, we can focus on indexing the events from Source 2 and Source 3. Then, when an event from Source 1 arrives, we can perform targeted lookups into the indexed events from the other sources to complete the join.
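A sketch of this lookup-driven variant is shown below. It assumes the Source 2 and Source 3 events for a key have usually arrived by the time the Source 1 event is processed; if they can arrive later, the Source 1 event would need to be delayed or retried. All names are illustrative.

```python
# Only the smaller streams are indexed; Source 1 events are never stored.
indexed = {"source_2": {}, "source_3": {}}


def index_event(source, key_x, payload):
    """Store an event from one of the smaller streams, keyed by Key X."""
    indexed[source][key_x] = payload


def on_source_1_event(key_x, payload):
    """Outer join driven by Source 1: look up the other sides, None if absent."""
    return {
        "key_x": key_x,
        "source_1": payload,
        "source_2": indexed["source_2"].get(key_x),
        "source_3": indexed["source_3"].get(key_x),
    }


index_event("source_2", "x-42", {"b": 2})
index_event("source_3", "x-42", {"c": 3})
matched = on_source_1_event("x-42", {"a": 1})
unmatched = on_source_1_event("x-99", {"a": 9})
```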

Limit Failed Joins

Joining events can be computationally expensive. By minimizing the number of failed join attempts (where we try to join events that don't have matches), we can reduce memory usage and keep our streaming pipeline running smoothly. We can use the Feature Extraction component before the Join Component to filter out events that are unlikely to have matching events from other sources.
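Such a pre-filter might look like the sketch below. The filtering rule (a non-empty key plus an allow-list of event types) and every name in it are hypothetical; the right rule depends entirely on what you know about your own sources.

```python
# Hypothetical rule: only these event types ever have counterparts in other sources.
JOINABLE_EVENT_TYPES = {"purchase", "add_to_cart"}


def should_forward_to_join(event):
    """Keep only events that carry a join key and are of a type that can match."""
    return bool(event.get("key_x")) and event.get("type") in JOINABLE_EVENT_TYPES


incoming = [
    {"key_x": "x-1", "type": "purchase"},
    {"key_x": "x-2", "type": "heartbeat"},  # never has a match: drop early
    {"key_x": "", "type": "purchase"},      # no join key: cannot be joined
]
forwarded = [event for event in incoming if should_forward_to_join(event)]
```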

Tuning Join Window

While understanding the arrival patterns of events from your input sources is crucial, it's not the only factor to consider when fine-tuning your join window. Factors such as data source reliability, latency requirements (SLAs), and scalability also play significant roles.

- Larger join window: Increases the likelihood of successfully joining events when event arrival is delayed; may lead to increased latency as the system waits longer for potential matches

- Smaller join window: Reduces latency and memory footprint as events are processed and potentially discarded more quickly; the join success rate might be low, especially if there are delays in event arrival

Finding the optimal join window value often requires experimentation and careful consideration of your specific use case and performance requirements.
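One simple way to run that experiment offline is to replay observed arrival delays against candidate windows. In the sketch below, the delay values are made-up sample data and `join_success_rate` is a hypothetical helper; `None` stands for a secondary event that never arrived.

```python
def join_success_rate(delays_seconds, window_seconds):
    """Fraction of primary events whose secondary event arrived within the window."""
    if not delays_seconds:
        return 0.0
    joined = sum(1 for d in delays_seconds if d is not None and d <= window_seconds)
    return joined / len(delays_seconds)


# Made-up delays (in seconds) between a primary event and its secondary event.
observed_delays = [2, 5, 30, 45, 120, 400, None, 8]

# Success rate for three candidate join windows: 10 s, 60 s, and 300 s.
rates = {window: join_success_rate(observed_delays, window) for window in (10, 60, 300)}
```

Plotting the resulting rates against the latency each window adds makes the tradeoff between the two bullets above explicit.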

Monitoring Is Key

It's always good practice to set up alerts and monitoring on your join component. This allows you to proactively identify anomalies, such as events from one source consistently arriving much later than others, or a drop in the overall join success rate. By staying on top of these issues, you can take corrective action and ensure your streaming join solution operates smoothly and efficiently.
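A minimal sketch of the two signals mentioned above, tracked in memory. The class name, thresholds, and alert messages are all assumptions; in practice these counters would be exported to whatever metrics and alerting system you already run.

```python
from collections import Counter


class JoinMetrics:
    """Tracks join outcomes and per-source arrival lag (all names are illustrative)."""

    def __init__(self):
        self.outcomes = Counter()
        self.lag_totals = Counter()
        self.lag_counts = Counter()

    def record_arrival(self, source, lag_seconds):
        self.lag_totals[source] += lag_seconds
        self.lag_counts[source] += 1

    def record_join(self, complete):
        self.outcomes["complete" if complete else "incomplete"] += 1

    def success_rate(self):
        total = sum(self.outcomes.values())
        return self.outcomes["complete"] / total if total else 1.0

    def mean_lag(self, source):
        count = self.lag_counts[source]
        return self.lag_totals[source] / count if count else 0.0

    def alerts(self, min_success_rate=0.9, max_mean_lag_seconds=60.0):
        """Return human-readable alerts for any threshold that is breached."""
        found = []
        if self.success_rate() < min_success_rate:
            found.append("join success rate below threshold")
        for source in sorted(self.lag_counts):
            if self.mean_lag(source) > max_mean_lag_seconds:
                found.append(f"{source} is arriving late")
        return found


metrics = JoinMetrics()
metrics.record_arrival("source_1", 2.0)
metrics.record_arrival("source_2", 95.0)
metrics.record_arrival("source_2", 105.0)
for complete in (True, True, False, True):
    metrics.record_join(complete)
```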

Conclusion

Streaming data joins are a critical tool for unlocking the full potential of real-time data processing. While they present unique challenges compared to traditional (batch) SQL joins, hopefully this article has given you the ideas you need to design effective solutions.

Remember, there is no one-size-fits-all approach. The ideal solution will depend on the specific characteristics of your data, your performance requirements, and your available infrastructure. By carefully considering factors such as join keys, join windows, and optimization techniques, you can build robust and efficient streaming pipelines that deliver timely, actionable insights.

As the streaming data landscape continues to evolve, so too will the solutions for handling joins. Keep learning about new technologies and best practices to make sure your pipelines stay ahead of the curve as the world of data keeps changing.