Topic Tagging

Topic tagging is an important and broadly relevant problem in Natural Language Processing. It involves tagging a piece of content, such as a webpage, book, blog post, or video, with its topic. Despite the availability of ML models such as topic models (e.g., Latent Dirichlet Allocation [1]), topic tagging has historically been a labor-intensive task, especially when there are many fine-grained topics. There are numerous applications of topic tagging, including:

- Content organization, to help users of websites, libraries, and other sources of large amounts of content navigate through that content

- Recommender systems, where suggestions for products to buy, articles to read, or videos to watch are generated wholly or partly from their topics or topic tags

- Data analysis and social media management, to understand the popularity of topics and decide which subjects to prioritize

Large Language Models (LLMs) have greatly simplified topic tagging by leveraging their multimodal and long-context capabilities to process large documents effectively. However, LLMs are computationally expensive, and using them well requires understanding the trade-off between the quality of the LLM and the computational or dollar cost of running it.

LLMs for Topic Tagging

There are several ways of casting the topic tagging problem for use with an LLM:

- Zero-shot/few-shot prompting

- Prompting with choices

- Dual encoder

We illustrate these techniques using the example of tagging Wikipedia articles.

1. Zero-Shot/Few-Shot Prompting

Prompting is the simplest technique for using an LLM, but the quality of the results depends on the size of the LLM.

Zero-shot prompting [2] involves directly instructing the LLM to perform the task. For instance:

What are the three topics the above text is talking about?

Zero-shot prompting

Zero-shot prompting is completely unconstrained: the LLM is free to output text in any format. To alleviate this issue, we need to add constraints on the LLM's output.
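One simple constraint is to ask for a fixed output format and then parse the answer. The Python sketch below is illustrative only: `build_zero_shot_prompt`, `parse_topics`, and the extra formatting instruction are hypothetical, the call to an actual LLM is omitted, and the response passed to the parser is made up.

```python
def build_zero_shot_prompt(text: str, num_topics: int = 3) -> str:
    """Build a zero-shot topic-tagging prompt (hypothetical helper).

    The last instruction line is an assumed output constraint: it asks for a
    single comma-separated line so the response is easy to parse.
    """
    return (
        f"{text}\n\n"
        f"What are the {num_topics} topics the above text is talking about?\n"
        "Answer with the topics only, as a single comma-separated line."
    )


def parse_topics(response: str) -> list[str]:
    """Split a comma-separated LLM response into trimmed, non-empty topics."""
    return [t.strip() for t in response.split(",") if t.strip()]


if __name__ == "__main__":
    print(build_zero_shot_prompt("Albert Einstein developed the theory of relativity."))
    # Made-up response, used only to exercise the parser.
    print(parse_topics("Physics, Science , Modern Physics"))
    # → ['Physics', 'Science', 'Modern Physics']
```

Even with a format instruction, the LLM can still ignore it, which is one reason the techniques below constrain the output further.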

Few-shot prompting provides the LLM with examples to guide its output. Specifically, we can give the LLM a few examples of content along with their topics, and then ask the LLM for the topics of new content.

Topics: Physics, Science, Modern Physics

Topics: Baseball, Sport

Topics:

Few-shot prompting
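A prompt like this can be assembled programmatically from (content, topics) pairs. The sketch below uses a hypothetical `build_few_shot_prompt` helper and short made-up snippets in place of real Wikipedia articles; it only builds the prompt string and does not call an LLM.

```python
def build_few_shot_prompt(examples: list[tuple[str, list[str]]], new_text: str) -> str:
    """Build a few-shot topic-tagging prompt (hypothetical helper).

    Each example is a (content, topics) pair. The prompt ends with an empty
    "Topics:" line so the LLM continues the pattern for the new content.
    """
    parts = []
    for content, topics in examples:
        parts.append(f"{content}\nTopics: {', '.join(topics)}\n")
    parts.append(f"{new_text}\nTopics:")
    return "\n".join(parts)


# Made-up snippets standing in for full articles.
examples = [
    ("Albert Einstein developed the theory of relativity.",
     ["Physics", "Science", "Modern Physics"]),
    ("The pitcher threw a curveball in the ninth inning.",
     ["Baseball", "Sport"]),
]

print(build_few_shot_prompt(examples, "Photosynthesis converts light into chemical energy."))
```

Every example is sent with every request, so each additional example directly increases the input length.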

Benefits

- Simplicity: The approach is simple and easy to understand.

- Ease of comparison: It is easy to compare the results of multiple LLMs.

Disadvantages

- Less control: There is limited control over the LLM's output, which can lead to issues such as duplicate topics (e.g., "Science" and "Sciences").

- Potentially high cost: Few-shot prompting can be expensive, especially with large content such as entire Wikipedia pages. More examples increase the LLM's input length, which raises costs.

2. Prompting With Choices

This approach is useful when you have a small, predefined set of topics (or a way of narrowing a larger set down to a manageable size) and want the LLM to select from that small set of options.

Since this is still prompting, both zero-shot and few-shot prompting can work. In practice, because selecting from a small set of topics is much simpler than coming up with the topics, zero-shot prompting may be preferred for its simplicity and lower computational cost.

An example prompt is:

Possible topics: Physics, Biology, Science, Computing, Baseball …

Which of the above possible topics are relevant to the above text? Select up to 3 topics.

Prompting with choices
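A minimal sketch of building this prompt in Python. `build_options_prompt` is a hypothetical helper whose wording mirrors the example prompt; the option list would come from your own taxonomy, and sending the prompt to an LLM is not shown.

```python
def build_options_prompt(text: str, options: list[str], max_topics: int = 3) -> str:
    """Build a zero-shot "prompting with choices" prompt (hypothetical helper)."""
    return (
        f"{text}\n\n"
        f"Possible topics: {', '.join(options)}\n\n"
        "Which of the above possible topics are relevant to the above text? "
        f"Select up to {max_topics} topics."
    )


options = ["Physics", "Biology", "Science", "Computing", "Baseball"]
print(build_options_prompt("Albert Einstein developed the theory of relativity.", options))
```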

Benefits of Prompting With Choices

- Greater control: The LLM selects from the provided options, which yields more consistent outputs.

- Lower computational cost: The simpler task allows the use of a smaller LLM, reducing costs.

- Alignment with existing structures: Useful when adhering to a pre-existing content organization, such as library classification systems or structured webpages.

Disadvantages of Prompting With Choices

- Need to narrow down topics: Requires a mechanism to accurately reduce the topic options to a small set.

- Validation requirement: Additional validation is needed to ensure the LLM does not output topics outside the provided set, particularly when using smaller models.
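A simple form of this validation, sketched in Python under the assumption that the LLM's answer has already been parsed into a list of strings: keep only topics from the provided set (matched case-insensitively), drop duplicates, and cap the count. `validate_topics` is a hypothetical helper, and the LLM response in the example is made up.

```python
def validate_topics(predicted: list[str], allowed: list[str], max_topics: int = 3) -> list[str]:
    """Keep only predicted topics that appear in the allowed set.

    Matching is case-insensitive, results use the canonical spelling from
    `allowed`, duplicates are dropped, and at most `max_topics` are returned.
    """
    canonical = {topic.lower(): topic for topic in allowed}
    result: list[str] = []
    for topic in predicted:
        match = canonical.get(topic.strip().lower())
        if match is not None and match not in result:
            result.append(match)
    return result[:max_topics]


allowed = ["Physics", "Biology", "Science", "Computing", "Baseball"]
# Made-up LLM output with an out-of-set topic ("Astronomy") and a duplicate.
print(validate_topics(["physics", "Astronomy", "Science", "Physics"], allowed))
# → ['Physics', 'Science']
```

If validation removes everything, one option is to re-prompt or fall back to a larger model; how to handle that case is a design choice left open here.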

3. Dual Encoder

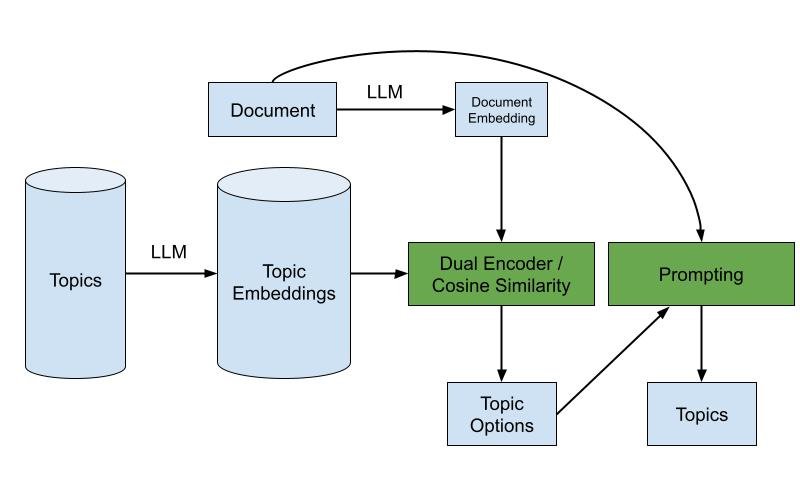

A dual encoder uses the encoder of an encoder-decoder LLM to convert text into embeddings, which enables topic tagging through similarity measurements. This is in contrast to prompting, which works with both encoder-decoder and decoder-only LLMs.

Process

- Convert topics to embeddings: Generate an embedding for each topic, optionally from a detailed description of the topic rather than just its name. This step can be done offline.

- Convert content to embeddings: Use the same encoder to convert the content into an embedding, so that content and topics share one embedding space.

- Similarity measurement: Compute the cosine similarity between the content embedding and each topic embedding to find the closest matching topics.
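The similarity step can be sketched in plain Python. The 3-dimensional vectors below are toy stand-ins for real embeddings; no particular embedding model is assumed, and in practice the topic embeddings would be precomputed offline with the same encoder used for the content. `cosine_similarity` and `top_k_topics` are illustrative names.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two equal-length, non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def top_k_topics(
    content_emb: list[float], topic_embs: dict[str, list[float]], k: int = 3
) -> list[tuple[str, float]]:
    """Rank topics by cosine similarity to the content and return the top k."""
    scored = [(topic, cosine_similarity(content_emb, emb)) for topic, emb in topic_embs.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Toy vectors standing in for real encoder outputs (made up for illustration).
topic_embeddings = {
    "Physics": [0.9, 0.1, 0.0],
    "Biology": [0.1, 0.9, 0.1],
    "Baseball": [0.0, 0.1, 0.9],
}
content_embedding = [0.8, 0.2, 0.1]

print([topic for topic, _ in top_k_topics(content_embedding, topic_embeddings, k=2)])
# → ['Physics', 'Biology']
```

With many topics, a vectorized library or a nearest-neighbor index would replace the Python loop, but the ranking logic is the same.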

Benefits of Dual Encoder

- Cost-effective: If you already compute embeddings for your documents, this method avoids reprocessing them through the LLM.

- Pipeline integration: It can be combined with prompting techniques for a more robust tagging system.

Disadvantage of Dual Encoder

- Model constraint: Requires an encoder-decoder LLM, which can be a limiting factor since many newer LLMs are decoder-only.

Hybrid Approach

A hybrid approach can combine the strengths of prompting with choices and the dual encoder technique:

- Narrow down topics using the dual encoder: Convert the content and topics to embeddings and keep only the topics most similar to the content.

- Final topic selection using prompting with choices: Use a smaller LLM to select the final topics from the narrowed set.

Hybrid approach
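An end-to-end sketch of this two-stage pipeline in Python. `embed` and `llm` are hypothetical callables standing in for your embedding model and smaller LLM (replaced here with fakes so the code runs), and the toy topic vectors are made up. The point is the structure: rank topics by cosine similarity, prompt with the short list, then validate the answer against that list.

```python
import math
from typing import Callable

Vector = list[float]


def cosine_similarity(a: Vector, b: Vector) -> float:
    """Cosine similarity between two equal-length, non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def hybrid_tag(
    text: str,
    topic_embeddings: dict[str, Vector],
    embed: Callable[[str], Vector],
    llm: Callable[[str], str],
    num_candidates: int = 5,
    max_topics: int = 3,
) -> list[str]:
    """Two-stage tagging: narrow with embeddings, then select with an LLM."""
    # Stage 1 (dual encoder): keep the topics closest to the content.
    content_emb = embed(text)
    ranked = sorted(
        topic_embeddings,
        key=lambda t: cosine_similarity(content_emb, topic_embeddings[t]),
        reverse=True,
    )
    candidates = ranked[:num_candidates]

    # Stage 2 (prompting with choices): let the LLM pick from the short list.
    prompt = (
        f"{text}\n\n"
        f"Possible topics: {', '.join(candidates)}\n\n"
        "Which of the above possible topics are relevant to the above text? "
        f"Select up to {max_topics} topics, as a comma-separated list."
    )
    response = llm(prompt)

    # Validation: drop topics outside the candidate set, and duplicates.
    picked: list[str] = []
    for topic in (t.strip() for t in response.split(",")):
        if topic in candidates and topic not in picked:
            picked.append(topic)
    return picked[:max_topics]


# Fakes so the sketch runs without any model (assumptions, not real APIs).
topic_embeddings = {
    "Physics": [0.9, 0.1, 0.0],
    "Biology": [0.1, 0.9, 0.1],
    "Baseball": [0.0, 0.1, 0.9],
}


def fake_embed(text: str) -> Vector:
    return [0.8, 0.2, 0.1]


def fake_llm(prompt: str) -> str:
    return "Physics, Baseball, Biology"


print(hybrid_tag("Some article text", topic_embeddings, fake_embed, fake_llm, num_candidates=2))
# → ['Physics', 'Biology']
```

In this run the fake LLM suggests "Baseball", which was not among the two candidates kept in stage 1, so the validation step drops it.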

Conclusion

Topic tagging with LLMs offers significant advantages over traditional methods, providing greater efficiency and accuracy. By understanding and leveraging the different techniques (zero-shot/few-shot prompting, prompting with choices, and the dual encoder), one can tailor the approach to specific needs and constraints. Each method has its own strengths and trade-offs, and combining them appropriately can yield the most effective results for organizing and analyzing large volumes of content by topic.

References

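[1] Blei, D. M., Ng, A. Y., and Jordan, M. I. (2003). Latent Dirichlet Allocation. Journal of Machine Learning Research, 3, 993-1022.

[2] Wei, J., Bosma, M., Zhao, V. Y., Guu, K., Yu, A. W., Lester, B., Du, N., Dai, A. M., and Le, Q. V. (2022). Finetuned Language Models Are Zero-Shot Learners. ICLR 2022.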