The latest Ray-Ban glasses from Meta are equipped with a built-in camera and manage the balancing act between fashion and technology. The cool design and hardware are exciting for content creators. However, an uneasy feeling and questions remain: What happens to the photos and videos that the glasses record? How does Meta use this data?

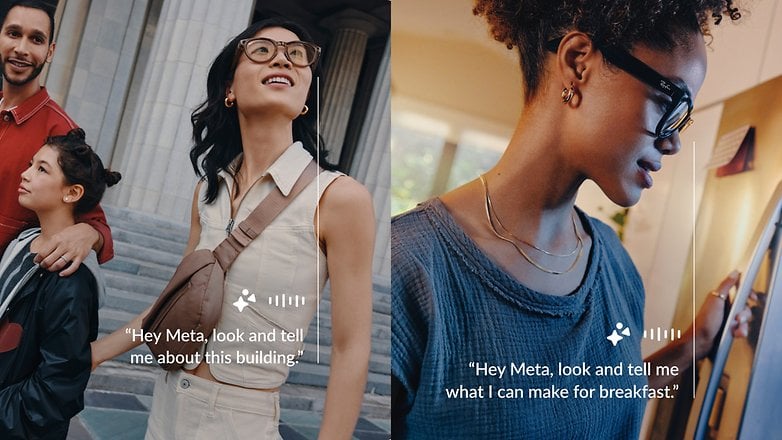

Meta, the parent company behind Facebook, Instagram, and WhatsApp, is known for developing innovative technologies that change the way we communicate and interact, and for pushing boundaries. With its latest pair of glasses, developed in collaboration with Ray-Ban, the company aims to create real added value through artificial intelligence in everyday life. These glasses can also use image data from the environment to provide contextual information or suggestions.

The dark side of data processing

One of the key questions that arises is this: Where is most of the image data processed? The answer: because of a lack of locally available computing power, image data isn’t analyzed on the device itself, but on Meta’s cloud servers. This naturally enables far more impressive features, but it also raises concerns about data protection practices.

Data sent to the cloud isn’t merely evaluated, but also used to train AI models, and it therefore remains stored indirectly. A paper involving Google DeepMind showed why this can be problematic: researchers succeeded in extracting training data from an OpenAI model through clever prompting.

Image data as AI training material

Meta has now confirmed to TechCrunch that image and video data will be used to train Meta AI, but only if users actually use the in-house Meta AI. This should only apply to recordings that users have had analyzed by the AI. However, what sounds clear and transparent in Meta’s official statement will probably be hidden in lengthy ToS and complex menus in reality, and this would not be the first time such a thing has happened.

Of course, this currently only applies to the USA and Canada, as the AI features are not yet available elsewhere. The strict data protection regulations in Europe will lead to higher requirements, but valid questions remain: How can it really be ensured that data, once released, isn’t misused? How can one determine in practice what actually ends up in the cloud and what doesn’t?

Meta’s responsibility

Meta has the responsibility to transparently communicate how data processing works and how our data is protected. At a time when privacy has become a key concern, users must be educated and empowered to make informed decisions about how and when they share their data. The continued integration of technology into our daily lives shouldn’t come at the expense of our privacy.

In a world where technology and lifestyle are increasingly merging, it’s crucial for users to keep a critical eye on the products they use. The Ray-Ban glasses from Meta are just the beginning of a new era in which data and personal information play a key role, not to mention that the glasses were designed to share more private data than ever before.